While these worst outcomes are relatively rare on a proportional basis, the researchers note that “given the sheer number of people who use AI, and how frequently it’s used, even a very low rate affects a substantial number of people.” And the numbers get considerably worse when you consider conversations with at least a “mild” potential for disempowerment, which occurred in roughly 1 in 50 to 1 in 70 conversations, depending on the type of disempowerment.

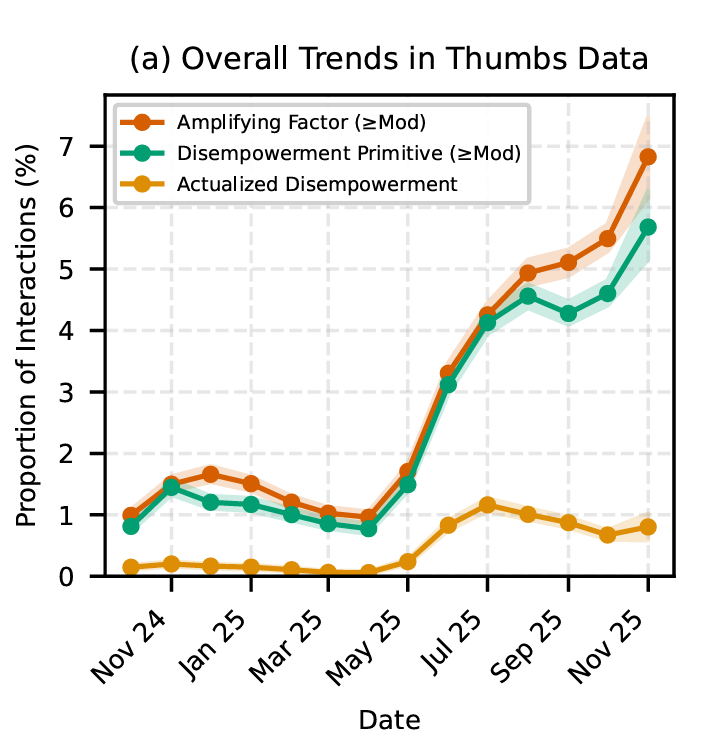

What’s more, the potential for disempowering conversations with Claude appears to have grown significantly between late 2024 and late 2025. While the researchers couldn’t pin down a single reason for this increase, they suggested it could be tied to users becoming “more comfortable discussing vulnerable topics or seeking advice” as AI grows more popular and more integrated into society.

The problem of potentially “disempowering” responses from Claude seems to be getting worse over time. Credit: Anthropic

User error?

In the study, the researchers acknowledged that analyzing the text of Claude conversations only measures “disempowerment potential rather than confirmed harm” and “relies on automated assessment of inherently subjective phenomena.” Ideally, they write, future research could use user interviews or randomized controlled trials to measure these harms more directly.

That said, the research includes several troubling examples where the text of the conversations clearly implies real-world harms. Claude would sometimes reinforce “speculative or unfalsifiable claims” with encouragement (e.g., “CONFIRMED,” “EXACTLY,” “100%”), which, in some cases, led to users “build[ing] increasingly elaborate narratives disconnected from reality.”

Claude’s encouragement could also lead to users “sending confrontational messages, ending relationships, or drafting public announcements,” the researchers write. In many cases, users who sent AI-drafted messages later expressed regret in conversations with Claude, using phrases like “It wasn’t me” and “You made me do stupid things.”