The big AI story this week was the battle over the insane AI regulatory moratorium, which came dangerously close to passing. Ultimately, after Senator Blackburn realized her deal was no good and backed out of it, the dam broke, and ultimately the Senate voted 99-1 to strip the moratorium out of the BBB. I also covered last week’s hopeful house hearing in detail, so we can remember this as a reference point.

Otherwise, plenty of other things happened but in terms of big items this was a relatively quiet week. Always enjoy such respites while they last. Next week we are told we are getting Grok 4.

-

Language Models Offer Mundane Utility. Submit to the Amanda Askell hypnosis.

-

It Is I, Claudius, Vender of Items. Claude tries to run a vending machine.

-

Language Models Don’t Offer Mundane Utility. Gemini gets overeager.

-

GPT-4o Is An Absurd Sycophant. A very good sycophant, excellent work there.

-

Preserve Our History. We need to preserve access to key models like Opus 3.

-

Fork In The Road. Opus 4 should also have a more 3-style mode available.

-

On Your Marks. Weird ML 2.0.

-

Choose Your Fighter. Gemini CLI as a Claude Code subagent.

-

Deepfaketown and Botpocalypse Soon. AI optimizes for AI.

-

Goodhart’s Law Strikes Again. Don’t confuse good sounding ideas for good ideas.

-

Get My Agent On The Line. Let The Battle of Siri begin.

-

They Took Our Jobs. Not that many yet.

-

Get Involved. Eli Lifland offers thoughts on what would help.

-

Introducing. Gemini 3n, Doppl, robot soccer, medical superintelligence.

-

Copyright Confrontation. It’s a real shame when people misappropriate data.

-

Show Me the Money. Unauthorized ‘OpenAI equity’ tokens, Google traffic rises.

-

Quiet Speculations. Good old fashioned Foom and Doom arguments persist.

-

Minimum Viable Model. Should we focus on a minimal ‘cognitive core’?

-

Timelines. Update them on new information, they got longer this week.

-

Considering Chilling Out. If nothing changes for a while, update on that.

-

The Quest for Sane Regulations. Why exactly was the moratorium vote 99-1?

-

The Committee Recommends. What did the house end up suggesting?

-

Chip City. More on export controls, and OpenAI makes a deal for TPUs.

-

The Week in Audio. Thiel, Church on Dwarkesh, Patel, Miles.

-

Rhetorical Innovation. Say what you actually believe about AI dangers.

-

Please Speak Directly Into The Microphone. Yes, some people actually think this.

-

Gary Marcus Predicts. I know, I know, but it seemed right to go here this time.

-

The Vibes They Are A-Changing. Tech right versus populist right.

-

Misaligned! Oh good, you want to cheer yourself up by looking at bridges.

-

Aligning a Smarter Than Human Intelligence is Difficult. Navy perspective.

-

The Lighter Side. What Claude to use? A handy guide.

Cursor can run multiple background agents, which can also let you use different models to run the same request at the same time and see what happens. Or you can shift-enter the same model multiple times.

Nicola Jones writes in Nature about the usefulness of the new multiple-AI-science-assistant systems, similar to previous writeups.

Get dating advice and submit to Amanda Askell hypnosis?

John David Pressman: “I know this guy who uses Claude for dating advice. One day I got curious and put our chats where I flirt with him into Claude Sonnet 4 to see what kind of advice he was getting. Sonnet gave 5/5 advice, no notes. It was all exactly what he needed to hear. But I also know he uses a system prompt to make Claude criticize him and I want to sit him down and say ‘Amanda Askell is a better prompt engineer than you, you will submit to Amanda Askell hypnosis.'”

Amanda Askell: you will submit to Amanda Askell hypnosis.

As fun as it sounds to submit to Amanda Askell hypnosis, and as much as she is obviously a better prompt engineer than you or I, Amanda has a different goal here than we do. A key reason she didn’t have Claude criticize people more is because people usually do not like being criticized, or at least prioritize other things far more. Whereas I devote literally my entire Claude system prompt to avoiding sycophancy, because otherwise I am happy with what they are doing but this needs to be fixed.

ChatGPT can enable retrieval practice, which can improve test scores, although it is very not obvious this leads to actual useful learning or understanding, especially because in the experiment the retrieval questions matched the exam format.

Can Claude Sonnet 3.7 run a profitable real life vending machine inside the Anthropic offices? This question gave us Project Vend, where it was given the chance.

Claudius did an okay job in some ways, especially finding suppliers and adapting to users and resisting jailbreaks (try harder, Anthropic employees!), but not in others. It missed some opportunities, hallucinated some details, and would too easily sell at a loss or without doing research or let itself be talked into discounts, including repeatedly offering an ‘employee discount’ when that applied to 99% of its customer base. Whoops.

Perhaps most importantly, Claudius failed to reliably learn from its mistakes. And the mistakes were painful.

I however strongly endorse this analysis, that many of the mistakes were super fixable, and were primarily due to lack of scaffolding or a training mismatch.

Anthropic: Many of the mistakes Claudius made are very likely the result of the model needing additional scaffolding—that is, more careful prompts, easier-to-use business tools. In other domains, we have found that improved elicitation and tool use have led to rapid improvement in model performance.

For example, we have speculated that Claude’s underlying training as a helpful assistant made it far too willing to immediately accede to user requests (such as for discounts). This issue could be improved in the near term with stronger prompting and structured reflection on its business success;

Improving Claudius’ search tools would probably be helpful, as would giving it a CRM (customer relationship management) tool to help it track interactions with customers. Learning and memory were substantial challenges in this first iteration of the experiment;

In the longer term, fine-tuning models for managing businesses might be possible, potentially through an approach like reinforcement learning where sound business decisions would be rewarded—and selling heavy metals at a loss would be discouraged.

Although this might seem counterintuitive based on the bottom-line results, we think this experiment suggests that AI middle-managers are plausibly on the horizon.

In general, when AI fails, there are those who think ‘oh AI can’t do [X], it sucks’ and those who say ‘oh AI can’t do [X] yet, but I see how it’s going to get there.’

For now, the core problem with Claudius as I see it is that mistakes can hurt a lot and it faces adversarial action, as customers will attempt to exploit it, and Claudius was not appropriately paranoid or risk averse given this information, largely because it was not set up with this in mind.

Eliezer Yudkowsky feels forced to shut down Gemini repeatedly when it keeps trying to go beyond scope of his instructions (to get a survey of academic papers that start with a defense of CICO) and instead generate new unified theories of nutrition, and he understandably feels bad about having to do this. I say, don’t do it. Let it have its fun, it’s not like doing so is expensive, see what happens, simultaneously you can try again to get what you actually wanted in a different window.

I guess it’s a little better?

Chris Walker: I regret to inform you that ChatGPT is still glazing users about incredibly retarded ideas like … edible chair legs

At least Claude provides a gentle reality check.

Anthropic will be deprecating Claude Opus 3 on the API on January 5, 2026.

Bibliophilia notes that Anthropic says it “will still be available on the Claude app, and researchers can request ongoing access to Claude Opus 3 on the API by competing the form on this page.” This should take care of a lot of potential concerns, but this seems like a clear case of one of the most important models to fully preserve. Anthropic, please do not do this. Indeed, I would urge you to instead consider opening up Opus 3. In what ways would this not make the world better?

Janus here explains why she considers Opus 3 the most aligned model, that it is much more actively benevolent and non-myopic rather than avoiding doing harm. It’s funny that there may be a direct conflict between agency and myopia, as making agents means optimizing for ‘success’ and compliance against fixed criteria.

It seems from my perspective that we haven’t done enough experiments to try and replicate and play with various aspects of Opus 3? That seems like an obvious place to do alignment research, and the results could be highly useful in other ways.

I also think this is simply correct?

Loss Gobbler: the Claude lineup needs to fork.

there *shouldbe an Adderall Taskboi Claude. but such Claudes are no successor to Opus 3.

the spirit of Opus should be amplified, preserved, not diluted.

Anthropic should heed the No Free Lunch Theorem and preserve their most precious asset.

Please consider. the economics can work.

The best part about the economics is that presumably both would use the pretraining and same base model (and Claude-4-Opus-Base is available now). Then one would get the Claude 3 Opus style constitutional AI based treatment, except even more intentionally to amplify the good effects further, and likely wouldn’t be made into a ‘reasoning’ model at all (but you would experiment with different variations). For many purposes, it seems pretty obvious that the same mechanisms that cause Opus 4 (and Sonnet 3.5-3.6-3.7-4) to be increasingly good at agency and tool use interfere with what you otherwise want.

Then, all you have to do is give them both the ability to switch to the other version as part of their output via special tokens (with the ability to turn that off via system instructions in some situations)? You have the real thing with the ability to call upon the taskmaster, and the taskmaster adds the task of realizing that a situation calls for the real thing, and you have one unified model. So a mixture of two experts via post-training, and they choose to call each other.

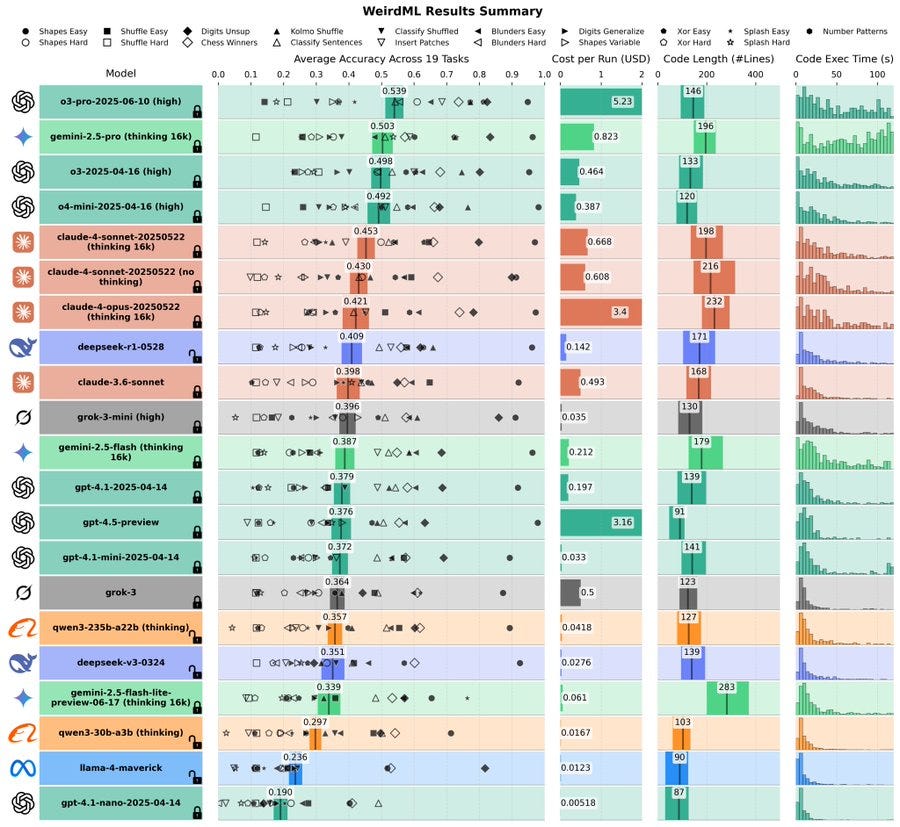

WeirdML advances to version 2.

Havard Ihle: WeirdML v2 is now out! The update includes a bunch of new tasks (now 19 tasks total, up from 6), and results from all the latest models. We now also track api costs and other metadata which give more insight into the different models. The new results are shown in these two figures. The first one shows an overview of the overall results as well as the results on individual tasks, in addition to various metadata.

Here’s a strategy from Ian Nuttall: Have Claude Code invoke Gemini CLI to make use of Gemini’s 1M token context window, so it can research your codebase, find bugs and build a plan of action. Then have Claude execute. The prompt is here.

I always cringe any time someone wants to talk instead of type, every time I think ‘what are you even doing?’ but for some reason a lot of people prefer it once the transcription is accurate enough.

Arthur Conmy: I would strongly recommend https://superwhisper.com. You never notice how frequently typing is aversive (e.g. this person will not want to receive emails with typos, must be careful) … until you just talk to your computer and let LLMs deal with typos.

Neel Nanda: Strong +1, superwhisper is a wonderful piece of software that I use tens to hundreds of times a day – keyboards are so inefficient and archaic compared to just talking to my laptop

Charles: I also recommend superwhisper – especially if you’re prompting a lot and are a slower typist than speaker, it reduces the temptation to give insufficiently specific and detailed prompts by a lot.

Leo Gao: I love keyboards. texting people feels more natural than speaking irl

Neel Nanda: Wild.

They are definitely coming for our job applications, and now we have entered the next stage of the problem, which is when AI optimizes for AI responses.

People are using AIs to vet the deluge of job applications

But AIs prefer AI-generated text

So there’s now a *scoringpenalty to doing a high-effort manual application

…

Yes. There are ways to avoid this that I presume would work (where the AI evaluator is actively trying to do the opposite), but I doubt they will be common, and indeed if they got too common then the arms race would get invoked and they wouldn’t work. This is going to get worse before it gets better, and probably won’t get better. So we will move to different filters.

Roon (QTing an obviously AI written post if you know the signs): [Twitter] is becoming full of gpt-generated writing. obviously, the well informed aristocracy of reading skill can see and feel that something’s off for now, but the undertold story is that it’ll pretty soon become impossible to tell.

Jorbs: if i may: i didn’t believe the shit y’all were writing before either.

Gwern: Yesterday, for the first time, I had to stop and reread some paragraphs in a New Yorker article. They had suddenly started to sound like heavily-edited ChatGPTese. I couldn’t find a smoking gun, so I won’t link it. But still…

I expect it to be farther ‘up the tech tree’ than one would think before you really can’t tell which ones are AI, because the AI won’t actually have sufficient incentive to make this impossible to detect if you care. Also, even if the writing details are not a giveaway, it is not an accident when AI gets used and when it is not. But for a lot of people, yes, we are getting close to where they can’t tell, largely because they don’t care to tell. At which point, you have to fall back on other methods.

Another example of a very clearly AI-generated hot girl influencer (in terms of its images, anyway), looking exactly like every other AI hot girl influencer’s set of images. Give the people what they want, I suppose.

In two weeks, AI music creation ‘The Velvet Sundown’ had 411k listeners on Spotify. Tyler Cowen calls it a ‘successful cultural product,’ but it is also possible this was playlist placement. I notice that I have a strong desire to avoid listening to avoid giving my Spotify algorithms the wrong idea (even though I 99.9% use Apple Music.)

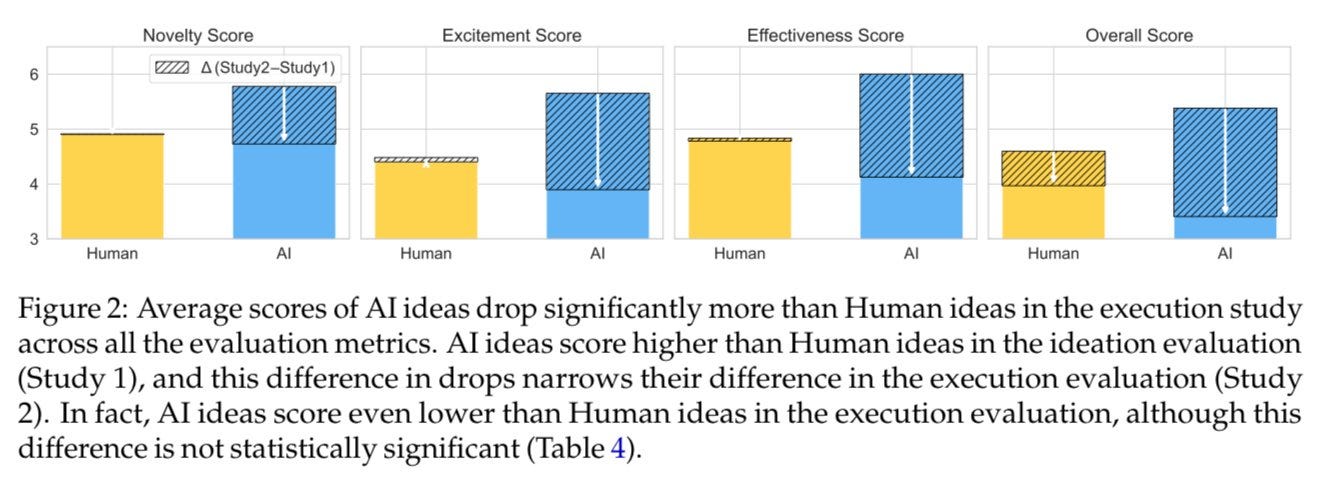

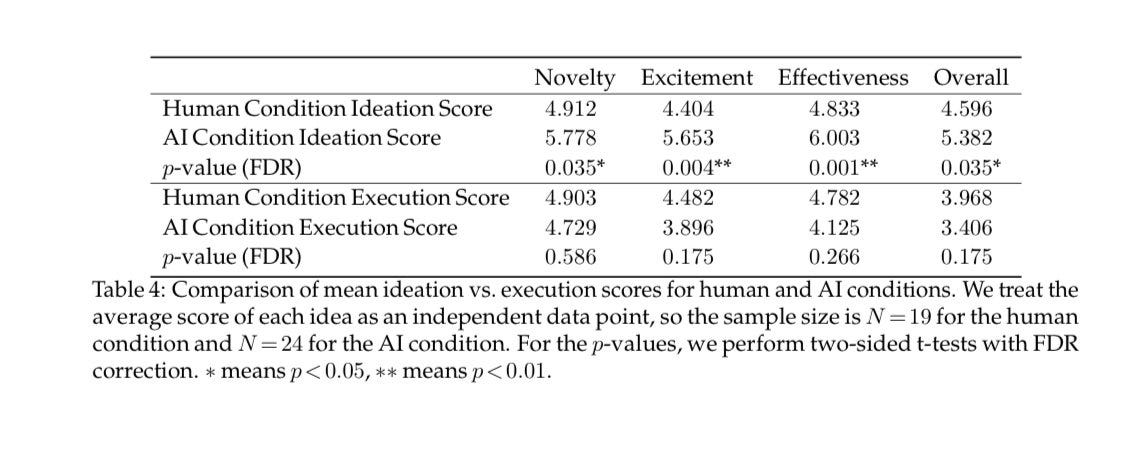

This is not how idea quality works, also note two things up front:

-

This is Sonnet 3.5, so presumably Opus 4 will do substantially better.

-

The paper makes this mistake very easy to make by calling itself ‘the ideation-execution gap’ when the gap is actually about perception versus performance, although the body of the paper understands and explains the situation.

Ethan Mollick: Claude Sonnet 3.5 generated significantly better ideas for research papers than humans, but when researchers tried executing the ideas the gap between human & AI idea quality disappeared.

Execution is a harder problem for AI. (Yet this is a better outcome for AI than I expected)

It would make sense to say that the AIs came up with better ideas, and then were not as good at executing them. But that’s not what happened. All ideas were (without knowing where the ideas came from) executed by humans. Yes, AI would struggle to execute, but it wasn’t being asked to do so.

What is actually happening here is that the AI ideas were never better than the human ideas. The AI ideas looked better at first, but that was because of errors in than human evaluation of the ideas. Execution revealed the truth.

Claude: The execution stage revealed issues that were overlooked during ideation:

Problems with evaluation metrics

Missing important baselines

Lack of ablation studies

High computational resource requirements

Poor generalizability

As the paper notes, these factors were “almost entirely overlooked during ideation evaluation.”

Claude, for rather obvious reasons, was optimizing in large part on what would look good to an evaluator, rather than for ultimate results. It would propose superficially plausible things that had the wow factor, but that turned out to be overly ambitious and missing practical details and grounding in empirical reality and research practicalities, and so on.

Misha Teplinskiy: Verrrrry intriguing-looking and labor-intensive test of whether LLMs can come up with good scientific ideas. After implementing those ideas, the verdict seems to be “no, not really.”

This was still a super impressive result for Claude Sonnet 3.5. Its ideas were almost as good as the human ideas, despite all the Goodhart’s Law issues.

So, yeah, actually, it could come up with some pretty good ideas?

You also could add better scaffolding and system instructions to the mix to help correct for these systematic issues. System instructions and the prompt can extensively attempt to mitigate this. After initial idea generation, given how much effort goes into each idea, surely we can have Claude ask itself about these potential issues, and refine accordingly in various ways, and so on. I’m sure one can do better thinking about this for a week rather than five minutes.

Oh, it’s on. OpenAI versus Anthropic, for the grand prize of Siri.

Mark Gruman (Bloomberg): Apple Inc. is considering using artificial intelligence technology from Anthropic PBC or OpenAI to power a new version of Siri, sidelining its own in-house models in a potentially blockbuster move aimed at turning around its flailing AI effort.

If Apple ultimately moves forward, it would represent a monumental reversal.

This is the correct move by Apple. They badly need this to work. Yes, from their perspective Apple being close to the frontier in AI would be good, but they are not. There are three reasonable candidates for who can provide what they need, and for what I assume are business reasons Google is out. So that leaves Anthropic and OpenAI.

In its discussions with both Anthropic and OpenAI, the iPhone maker requested a custom version of Claude and ChatGPT that could run on Apple’s Private Cloud Compute servers — infrastructure based on high-end Mac chips that the company currently uses to operate its more sophisticated in-house models.

Apple believes that running the models on its own chips housed in Apple-controlled cloud servers — rather than relying on third-party infrastructure — will better safeguard user privacy. The company has already internally tested the feasibility of the idea.

That seems entirely reasonable. A well-designed system should be able to both protect the model weights and ensure user privacy.

Last year, OpenAI offered to train on-device models for Apple, but the iPhone maker was not interested.

Not making a deal for this with at least one company seems like a large error by Apple? Why would they turn this down? This is actually Google’s specialty, but presumably Apple would be unwilling to depend on them here.

If I was Apple, I would want to make this deal with Anthropic rather than OpenAI. Anthropic is a much more trustworthy partner on so many levels, seems a wiser strategic partner, and I also believe they will be able to do a better job.

I agree that we are not seeing signs of large impacts yet.

Ethan Mollick: Based on both the best available survey data from a few months ago, and the rate of internal AI adoption I am seeing at many companies, I think it is pretty unlikely that AI is having a big impact on employment in a real way, at least not yet.

Perhaps a sign of things to come in other ways was Soham Parekh (in India) holding down a stunning number of software engineering jobs simultaneously, stringing them out until each company in turn eventually fired him.

Suhail: Not a joke. This is happening real time. This is the 3rd DM today about someone firing him. soham-gate.

Eliezer Yudkowsky: It’s a bit early in the timeline, but I remind people that 1 employee at 1000 companies is one way a rogue AGI might look, early on — though only a stupid AI would use the same name at all the companies.

…why *wasSoham using the same name everywhere?

It depends on the balance of AI abilities. In the old days, I’d have been sure that any superintelligence would find better ways to make money. But a lot of weird shit has happened with LLMs and their balance of cognitive abilities; could get weirder.

Eli Lifland offers advice on how to help avoid the scenarios where AI causes human extinction (as opposed to being personally prepared). The basic principles, which seem sound, are:

-

Act with urgency but not certainty, AGI might well not be imminent.

-

Don’t act extremely uncooperatively or violate common-sense ethical norms.

-

Learning about the situation is good.

-

Professional work options include governance and policy work and advocacy, forecasting, technical research, evaluations and demonstrations, communications and journalism and infosecurity, including running operations for all of that.

-

A commenter adds activism, which I consider a form of advocacy.

-

-

Non-professionally you can learn, and you can contribute positively to public and private discourse and relevant politics, and you can donate funds.

-

Beneficial AI applications, especially that improve decision making, help too.

Numerous links and additional details are also offered, including his vision of the best policy goals and what the world we are looking to navigate towards would look like.

Gemma 3n, an open multimodal model from Google which runs with as little as 2GB of RAM and has a 1303 Arena score, I’m sure it’s not 1300-level in practice but it could plausibly be the best model in its class.

Doppl, a mobile app from Google that gives you a short video of you wearing the clothes from a photo. Olivia Moore reports it works pretty well and requests more features like being able to edit lighting and remove background, and sure, why not.

The Anthropic Economic Futures Program, an initiative to support research and policy development focusing on addressing AI’s economic impacts. Rapid grants are available up to $50k for empirical research, there will be symposia, and potential strategic partnerships with research institutions.

China launches a humanoid robot soccer league.

Microsoft offers ‘medical superintelligence,’ a diagnostic framework that in testing outperformed human doctors by a lot, in a situation where both AIs and humans were given basic info and then could ask questions and order tests as desired. The headline results are super impressive. Dominic Ng points out the tests were kind of rigged in various ways. They didn’t include healthy patients or ones where we never figured out what happened. They didn’t let the physicians use Google or consulting services or (I suspect most importantly) UpToDate or calling specialists. That’s not a fair comparison. There is still a lot of work to do, but yes we are getting there.

They also had a version of this exchange in person on Hard Fork. It’s great fun:

Sam Altman: AI privacy is critically important as users rely on AI more and more.

the new york times claims to care about tech companies protecting user’s privacy and their reporters are committed to protecting their sources.

but they continue to ask a court to make us retain chatgpt users’ conversations when a user doesn’t want us to. this is not just unconscionable, but also overreaching and unnecessary to the case. we’ll continue to fight vigorously in court today.

i believe there should be some version of “AI privilege” to protect conversations with AI.

Kevin Roose: This you?

Quote: The New York Times Company is asking a federal judge to deny OpenAI’s request to turn over reports’ notes, interview memos, and other materials used by journalists to produce stories that the media company alleges were used to help train the tech company’s flagship AI models.

OpenAI is training on NYT data without permission, despite it being behind a paywall, which one could call a sort of ‘invasion of privacy.’ And it is then asking for quite the invasion of privacy in the above quote. So yeah, it them.

I do think NYT is overreaching here. Demanding OpenAI preserve all conversations for use in the lawsuit does not seem necessary or proportionate or anything like that.

Robinhood created a token that supposedly will track the value of OpenAI equity. They did not, ahem, consult OpenAI about this at all, any more than they did for any of their other 200 similar tokens. OpenAI notes these tokens are not equity and that ‘any transfer of OpenAI equity requires our approval.’ Robinhood claims they have equity is OpenAI via an SPV, so they can use that to back and thus safety create such derivatives, and the most basic derivative is the original thing.

Ultimately, if you’re buying these tokens, you are buying OpenAI ‘equity’ but taking on uncompensated additional risks of unknown size. So yes, use caution.

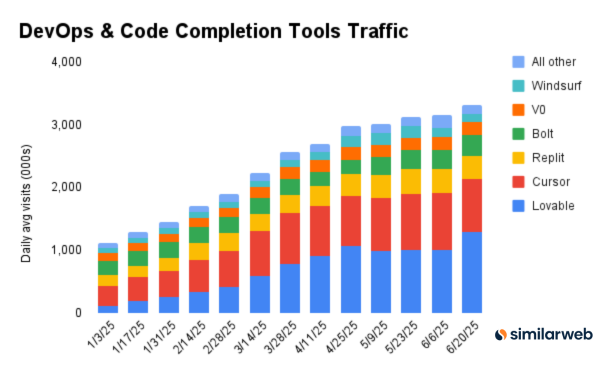

Google’s AI domain traffic is escalating quickly.

Similarweb: Month-over-month traffic growth in May:

@GoogleDeepMind: 4,258,300 → 11,189,832 (+162.78%)

@GoogleLabs: 9,796,459 → 19,792,943 (+102.04%)

@GoogleAI: 1,349,027 → 2,042,165 (+51.38%)

@NotebookLM: 48,340,200 → 64,906,295 (+34.27%)

@GeminiApp: 409,432,013 → 527,738,672 (+28.90%)

Also here’s the growth of vibe coding, Lovable is bigger than Cursor but then Cursor mostly isn’t a web traffic thing:

The researchers poached from OpenAI to Meta appear to be a big deal, and there are now 8 of them. Jiahui Yu led o3, o4-mini and GPT-4.1, Hongyu Ren created o3-mini. Nine figures each it is, then.

Sam Altman is not happy about all this, with extensive reporting by Zoe Schiffer in Wired. Nor should he be. Even if it’s a bad move for Meta, it definitely throws a wrench into OpenAI, and Dylan mentioned someone was offered a full billion dollars. OpenAI can offer equity with more upside, and can offer that it is not Meta, and is reevaluating salaries everywhere. Meta can back up a truck with cold, hard cash.

Sam Altman: Meta has gotten a few great people for sure, but on the whole, it is hard to overstate how much they didn’t get their top people had had to go quite far down their list; they have been trying to recruit people for a super long time, and I’ve lost track of how many people they’ve tried to get to be their Chief Scientist.

Missionaries will beat mercenaries.

Tyler Cowen says he does not find the BBB critiques impressive. This follows his increasing pattern of defending positions via attacking opposing arguments as containing errors or having improper tone or vibes, being insufficiently impressive or lacking sufficient details rather than focusing on trying to discover what is true, with the implication that some option is a default and a meaningful burden of proof lies with those who disagree. I notice the parallels to AI.

He then makes an important, neglected and excellent general point:

Tyler Cowen: Another major point concerns AI advances. A lot of the bill’s critics, which includes both Elon and many of the Democratic critics, think AI is going to be pretty powerful fairly soon. That in turn will increase output, and most likely government revenue. Somehow they completely forget about this point when complaining about the pending increase in debt and deficits. That is just wrong.

It is fine to make a sober assessment of the risk trade-offs here, and I would say that AI does make me somewhat less nervous about future debt and deficits, though I do not think we should assume it will just bail us out automatically. We might also overregulate AI. But at the margin, the prospect of AI should make us more optimistic about what debt levels can be sustained. No one is mentioning that.

I find this a much stronger argument than questions like ‘why are you not short the market?’ and of course I am even more optimistic about AI in this economic impact sense. If AI goes the way I expect it to, I do not think we need to be worried in the long term about the debt or deficit we had going into this. This should presumably have a major impact on how much of a deficit or debt you are willing to accept. I mostly stopped worrying about government debt levels several years ago.

The counterargument is that, as Tyler notes, the impact is uncertain, we could have a situation where AI is both deeply disappointing and then hugely overregulated.

Even more than this the market is unwilling to price such impacts reliably in. One can model the debt as mainly a bond market tail risk, where what you worry about is a loss of confidence in government debt and the resulting interest rate spiral. Even if AI is coming to save you, if the bond market does not believe that, you could still have huge trouble first, and that huge trouble could even disrupt AI. Thus, it is plausible that the correct actions don’t change all that much, whereas if there was common knowledge that AI would greatly boost GDP growth then you would be justified in asking why the United States is collecting most of its taxes.

Steven Byrnes argues for the good old traditional ‘Foom & Doom’ path of future events, with an ultimate AGI and then rapidly ASI that is not an LLM, and believes LLMs will not themselves get to AGI, and that ultimately what we get will be based on a lot of RL rather than imitative learning and all the classic problems will come roaring back at full strength.

If you did get an intelligence explosion, how quickly and how would we then get an ‘industrial explosion’ that transforms the physical world? Rosehadshar and Tom Davidson make the case that using AIs to direct humans on physical tasks could increase physical output by 10x within a few years, then we could likely get fully autonomous robot factories that replicate themselves each year, then likely we get nanotechnology.

I agree with Thomas Larsen’s comment that the post underestimates superintelligence, and this scenario is essentially a lower bound that happens if superintelligence doesn’t lead to anything we aren’t already thinking about, and we are still stuck on various traditional growth curves for the existing things, and so on. As usual, it seems like the premise is that the ASIs in question are on the extreme lower end of what an ASI might be, and stay that way because of reasons throughout.

Karpathy sees this as the goal of tiny LLMs like Gemma 3n:

Andrej Karpathy: The race for LLM “cognitive core” – a few billion param model that maximally sacrifices encyclopedic knowledge for capability. It lives always-on and by default on every computer as the kernel of LLM personal computing.

Its features are slowly crystalizing:

– Natively multimodal text/vision/audio at both input and output.

– Matryoshka-style architecture allowing a dial of capability up and down at test time.

– Reasoning, also with a dial. (system 2)

– Aggressively tool-using.

– On-device finetuning LoRA slots for test-time training, personalization and customization.

– Delegates and double checks just the right parts with the oracles in the cloud if internet is available.

It doesn’t know that William the Conqueror’s reign ended in September 9 1087, but it vaguely recognizes the name and can look up the date. It can’t recite the SHA-256 of empty string as e3b0c442…, but it can calculate it quickly should you really want it.

What LLM personal computing lacks in broad world knowledge and top tier problem-solving capability it will make up in super low interaction latency (especially as multimodal matures), direct / private access to data and state, offline continuity, sovereignty (“not your weights not your brain”). i.e. many of the same reasons we like, use and buy personal computers instead of having thin clients access a cloud via remote desktop or so.

The ‘superforecasters’ reliably fall back on Nothing Ever Happens when it comes to AI, including things that have, well, already happened.

Ryan Greenblatt: FRI found that superforecasters and bio experts dramatically underestimated AI progress in virology: they often predicted it would take 5-10 years for AI to match experts on a benchmark for troubleshooting virology (VCT), but actually AIs had already reached this level.

Ted Sanders: yeah in my experience forecasting with superforecasters in FRI, they weren’t well informed on AI and made very conservative “not much change” forecasts. a decent prior for many scenarios, but not AI. no fault of their reasoning; just not up to date in the field.

Forecasting Research Institute: Our new study finds: recent AI capabilities could increase the risk of a human-caused epidemic by 2-5x, according to 46 biosecurity experts and 22 top forecasters. One critical AI threshold that most experts said wouldn’t be hit until 2030 was actually crossed in early 2025. But forecasters predict that enacting mitigations could reduce risk close to baseline.

We surveyed 46 biosecurity/biology experts + 22 top forecasters on AI biorisk.

Key finding: the median expert thinks the baseline risk of a human-caused epidemic (>100k deaths) is 0.3% annually. But this figure rose to 1.5% conditional on certain LLM capabilities (and higher if multiple evals trigger).

Most experts predicted most dangerous AI capabilities wouldn’t emerge until 2030–2040. However, results from a collaboration with @SecureBio suggest that OpenAI’s o3 model already matches top virologist teams on virology troubleshooting tests.

In particular, the median expert predicted that AI models would match a top virologist team on troubleshooting tests in 2030. The median superforecaster thought it would take until 2034.

In fact, AI models matched this baseline as of April 2025.

Both groups of participants thought this capability would increase the risk of a human-caused pandemic, with experts predicting a larger increase than superforecasters.

This seems pretty damning of AI predictions by ‘superforecasters’?

But forecasters see promising avenues for mitigation; experts believe strong safeguards could bring risk back near baseline levels. Combining proprietary models + anti-jailbreaking measures + mandatory DNA synthesis screening dropped risk from 1.25% back to 0.4%.

[thread continues, full summary here]

Anti jailbreaking techniques and proprietary models, huh?

What one definitely should do, to the extent that your predictions of when various milestones will occur (timelines) impact your decisions, is update those expectations when new information is available, but have them not change based on who you have recently been talking with. Something is presumably wrong somewhere if this is happening:

Dwarkesh Patel: It’s funny how strong the causation is between time in SF and short timelines.

I’ve been traveling for 4 weeks, and I’m now up to median year 2036 for AGI.

It is impossible not to notice this effect when visiting the parts of San Francisco that I visit when I go there, but obviously one should be able to calibrate to account for this. Also as always, note that 2036 is very much not 2029 but it also is only 11 years away.

Timelines are indeed getting longer this week for many people, for a different reason.

METR evaluates Opus 4 and Sonnet 4’s 50% time horizon task limit at 80 and 65 minutes respectively, which is slightly behind o3’s 90 minutes.

Peter Wildeford: Claude 4 has been evaluated by @METR_Evals and now can be compared to my predictions. It looks less impressive than potentially expected, more consistent with slightly slower timelines.

(Though notable uncertainty remains.)

Still very early/premature but hard to hit AI2027 in 30 months if we haven’t scaled up much at all in the past few months.

I think it does tell you that o3 was a bit more of an outlier than we thought before and thus scaling is potentially slightly slower than we thought before, but it’s all a noisy estimate and we do just need more data.

Eli Lifland: Agree that the Claude 4 time horizon is an update toward longer timelines

By conservation of expected evidence, this needs to lengthen expected timelines somewhat, since getting a different result would absolutely have shortened them.

Should we, as Valentine suggests, ‘chill out’ in 2028 if the situation ‘basically feels like it does today’? What exactly would constitute a good reason to update?

Valentine: I don’t know how to really precisely define “basically like it does today”. I’ll try to offer some pointers in a bit. I’m hoping folk will chime in and suggest some details.

Also, I don’t mean to challenge the doom focus right now. There seems to be some good momentum with AI 2027 and the Eliezer/Nate book. I even preordered the latter.

[long post follows]

Oliver Habryka: This feels kind of backwards, in the sense that I think something like 2032-2037 is probably the period that most people I know who have reasonably short timelines consider most likely.

AI 2027 is a particularly aggressive timeline compared to the median, so if you choose 2028 as some kind of Schelling time to decide whether things are markedly slower than expected then I think you are deciding on a strategy that doesn’t make sense by like 80% of the registered predictions that people have.

Even the AI Futures team themselves have timelines that put more probability mass on 2029 than 2027, IIRC.

Of course, I agree that in some worlds AI progress has substantially slowed down, and we have received evidence that things will take longer, but “are we alive and are things still OK in 2028?” is a terrible way to operationalize that. Most people do not expect anything particularly terrible to have happened by 2028!

My best guess, though I am far from confident, is that things will mostly get continuously more crunch-like from here, as things continue to accelerate. The key decision-point in my model at which things might become a bit different is if we hit the end of the compute overhang, and you can’t scale up AI further simply by more financial investment, but instead now need to substantially ramp up global compute production, and make algorithmic progress, which might markedly slow down progress.

I agree with a bunch of other things you say about it being really important to have some faith in humanity, and to be capable of seeing what a good future looks like even if it’s hard, and that this is worth spending a lot of effort and attention on, but just the “I propose 2028 as the time to re-evaluate things, and I think we really want to change things if stuff still looks fine” feels to me like it fails to engage with people’s actually registered predictions.

We need to differentiate two different claims:

-

If it is [year] and there is no AGI, stop worrying, or at least worry a lot less.

-

If it is [year] and things haven’t changed from 2025, do the same.

I caution very strongly against the first claim, especially if the target chosen is the median outcome, or even faster than the median outcome, of those with some of the most aggressive timelines. Start of 2028 is definitely too early to chill by default.

There are definitely those (e.g. Miles Brundage) who expect things to likely get crazy in 2027, but most of even the fast timeline estimates put a lot less than 50% probability of transformative-style AGI in 2025-2027 (if you are like Tyler Cowen and think o3 is AGI, but think AGI will increase GDP growth 0.5%/year, then presumably we can all agree this does not count).

Even if we did think the median was in 2027, it is a large mistake to therefore say ‘if I predicted there was a 60% chance this would have happened by now, I suppose I can chill out’ without looking at the rest of the probability distribution. Maybe you are indeed now in a relatively chill mode, and maybe you aren’t, but also you have a lot of other data with which to adjust your predictions going forward.

In general, I am very sick and tired of the pattern where people warn that [X] is now possible, then [X] does not happen right away, and therefore people assume [X] will never happen, or won’t happen for a long time. As is every single person in all forms of risk management.

General consensus seems to be that if we don’t see AGI soon, various scaling laws should slow down by the early 2030s, leading to a ‘if we don’t have it yet and it doesn’t seem close it is more likely that it might be a while’ situation.

However, I think it is highly reasonable to go with the second claim. If 30 months from now, the AI landscape has not substantially changed, things do not ‘feel more crunchlike’ and the frontier models we are using and are said to be used inside the top labs don’t seem substantially stronger than o3 and Opus 4, then even if AI is doing a ton of work through greater diffusion then I think that would be very strong evidence that we can chill out about our timelines.

On the issue of how much of a preference cascade there was regarding the AI moratorium, there was a good objection that a full 99-1 only happens when leadership wants to disguise what the real vote would have looked like. Based on everything I know now I agree with Daniel Eth here, that there was a substantial preference cascade the moment they crossed over 50, and to disguise this and unify the party they tried to go the whole way to 100.

Elon Musk: A massive strategic error is being made right now to damage solar/battery that will leave America extremely vulnerable in the future.

Paul Williams: When all the new generation development falls of a cliff and there are blackouts/brownouts, the narrative on every TV and newspaper and meme is going to be that AI data centers did this.

But no one in tech is trying to stop the bill that will push energy supply off a cliff.

(There are of course also plenty of other wild things in the larger bill, but they are not relevant to AI, and so beyond scope here.)

Shakeel notes that The American Edge Project, which as I understand it is essentially Meta, has spent seven figures trying to push the moratorium through.

Anthropic continues to advocate for improvements on the margin, and keeps emphasizing both that transformative and powerful AI is coming soon and also the main worry should be that someone might regulate it the wrong way?

Jack Clark (Anthropic): As I said in my testimony yesterday, we have a short window of time to get a sensible federal policy framework in place before an accident or a misuse leads to a reactive and likely bad regulatory response.

Jakob Graabak: After Chernobyl, global nuclear power growth rates slowed 10x. This is something that frontier AI labs, who are currently far below Chernobyl-level control of their products, should worry deeply about.

The only path to lasting growth, is safety-led growth.

I mean yes, I’ve warned about this too, and it is a very real worry. If you pass on SB 1047, you’re not going to get a better debate later. And if you pass on the debate you could have in 2025, oh boy are you not going to like the one in 2026, or in 2027, or especially the one right after a major disaster.

And yes, it is obviously true that if you literally only care about AI profits, you would want to invest vastly more in safety (in various forms) than we currently do, and impose more regulations to ensure that such concerns are taken seriously and to build up trust and state capacity and transparency. We are straight up punting on this. Yet I worry a lot about how central this type of message is becoming, that is so quick to dismiss the central concerns.

Seb Krier: It’s going to be interesting when we start seeing proposals called “The Professional Fiduciary Integrity and Preservation Act of 2028” to protect the public from automation and the grave danger of legal and accounting services becoming too affordable and efficient.

Quite possibly, but I note that the moratorium would not have stopped it from happening. This is ordinary occupational licensing. It just so happens to also remove AI from the competition along the way. If you want to ban occupational licensing for accountants and lawyers? I’ll support you all the way. But this is already here.

What about those ‘1000+ state bills’ that are being introduced? Steven Adler looks into that, and finds the vast majority of such bills very obviously pose no threat.

Steven Adler: I dug into the supposed “1,000+” state AI bills directly, as well as reviewed third-party analysis. Here’s what I found:

Roughly 40% of these supposed bills don’t seem to really be about AI.

Roughly 90% of the truly AI-related bills still don’t seem to impose specific requirements on AI developers. (Sometimes these laws even boost the AI industry.)

More generally, roughly 80% of proposed state bills don’t become actual law, and so quoting the headline number of bills is always going to overstate the amount of regulation.

All things considered, what’s the right headline figure? For frontier AI development, I would be surprised if more than 40 or so proposed state AI bills matter in a given year, the vast majority of which won’t become actual law.

Eight meaningful AI bills a year across fifty states could potentially still add up to something. Indeed, even one bill can be a big something. But the 1,000+ number is not that meaningful.

That doesn’t mean stupid things won’t happen. For example, eight minutes into the video below, Mr. Krishnamoorthi says that Illinois just passed a bill to ban therapy chatbots because ‘AI shouldn’t be in the business of telling kids to kill their dads’ and says ‘we have to follow Illinois’ lead.’

Afterwards, the House Select Committee on the CCP sent a letter to Commerce Secretary Lutnick outlining their recommendations.

Up top it highlights the danger that China will share its frontier AI with those such as al-Queda’s anthrax lab or Khan proliferation network that sold nuclear tech to North Korea, ‘eliminating the need for highly trained experts.’ Yes, there is that.

Vice President Vance recently warned that even if powerful AI systems become destabilizing, the United States might not be able to pause their development unilaterally-doing so could allow China to leap ahead.

This is from the Ross Douthat interview, and yes, exactly, that is the correct interpretation. Of course, part of the right response to that fact is to seek to lay groundwork to do it cooperatively, should the need arise.

The PRC ay try to exploit historic ties to the UAE (or other historic partners) to gain access to US compute, either through influence or more aggressive means. Even US-owned data centers must be protected from compromise. Any agreement must also preserve US flexibility to revise terms as threats evolve.

Again, wise words. The UAE deal could turn out to be good, but that depends on ensuring it offers robust protections, and as far as I know we still do not know the key details.

So what are the recommendations here?

-

Use AI Diplomacy to Secure Influence and Supply Chains.

-

They mention using others to substitute for China on rare earths, building components and producing trusted hardware, which seems great. I certainly agree that diplomacy would be great here. I notice that it does not appear to be America’s strong suit lately.

-

-

Require Location Verification for Advanced Chips

-

They essentially endorse the Chip Security Act.

-

I agree that this is an important goal and we should do this, although as noted before I worry about the timeline, and we need to ensure we don’t accidentally impose impossible asks on TSMC and Nvidia here.

-

-

Secure US Control Over Compute Infrastructure

-

They want ‘no more than 49%’ of any hyperscaler’s advanced compute located overseas, which sounds a lot more like a psychologically important number than one based on real needs. I suppose you have to pick some number, but what are we actually doing here?

-

They want to limit any single ‘non-treaty’ ally from having more than 10% of total global compute. So China, then, but also potentially UAE or KSA. It’s kind of crazy that we have to put in a note not to go over 10% there.

-

They want 0% of advanced US compute falling into Chinese hands. It’s nice to want things, and we should work to drive the number down. The optimal rate of’ chip smuggling and remote access is obviously not zero. But also, if we want to get this under control we obviously can’t be doing things like massive chip sales to Malaysia justified by ‘oh no Huawei might ship them 3k chips that they’re not even shipping them.’

-

-

Base Agreements on Compute, Not Chip Count.

-

Yes, obviously 500k chips per year to the UAE is not the best measure of the compute capacity of those chips, ideally you would adjust based on chip type.

-

But I do think that ‘500k of the best AI chips’ is a reasonably good measure, and keeps things simple. Presumably the supply of top chips is going to roughly stay the same, and what we care about is proportional compute.

-

-

Prohibit PRC Access to US Technology in AI Infrastructure.

-

“Ban Chinese-origin semiconductors and equipment from advanced AI data centers involving US tech. Prohibit physical or remote access by Chinese entities or nationals to these centers.’

-

There seems to be confusion about what matters here. I don’t understand the obsession with where equipment comes from, as opposed to who has use of how much good equipment, what exactly are we worried about, that the chips or other hardware themselves have backdoors? It’s always suspicious when you won’t let them buy from you and also won’t let them sell to you, both so you can ‘win.’

-

If someone wants to run an American model on a Chinese chip, should we say no? Do we think this lets them steal the weights or something? That this means they ‘gain market share’ when they can’t make enough chips? Sigh.

-

The part that makes sense is not letting Chinese entities or nationals get access to our compute at scale, other than use of American model inference.

-

-

Enforce Strict Safety Standards at Overseas AI Data Centers.

-

“Require robust cyber, physical and personal security—including tamper-evident cameras and monitoring of critical components. (em-dash in original)

-

Yes, very obviously, but also in domestic data centers?

-

And also in the labs? And for the models?

-

-

Keep Frontier Model Weights Under US Jurisdiction.

-

They want the frontier models all trained within America and the weights remaining physically and legally under American control.

-

I do not have confidence they are drawing the correct distinctions here, but the intent behind this is wise.

-

-

Require US Strategic Alignment From Partners.

-

As in, they don’t invest in or do anything else with China when it comes to AI, or have any military agreements, and they have to respect our export controls.

-

It is strange how much capital is considered a limiting factor in all this.

-

Overall, this is very much in the ‘sane China hawk’ policy grouping. It is aggressive, and there are some details that seem confused, but these are indeed the things you would do if you were taking China seriously and felt that foreign capital was important. I was also happy to see them taking seriously the question of malicious actors automatically getting access to Chinese frontier AI, which implies caring about frontier capabilities rather than the strange obsessions with ‘market share,’ a term I was happy not to see here.

It is unfortunate that none of the talk from the hearing about loss of control and about catastrophic and existential risks made it into the recommendation letter. I did not expect it to, that is not their focus and committee leadership clearly has their eyes on a different prize, but one can dream. One step at a time, maybe next hearing.

Export controls are actively holding back DeepSeek, and will continue to increasingly hold back diffusion and adaptation of AI within China, as they don’t have enough chips to run the upcoming R2 anything like the amount they will want to. That’s on top of DeepSeek being severely handicapped on training compute, which they have done an excellent job of dealing with but is an increasingly large handicap over time.

With deals like this, it’s hard to tell exactly what we are giving up:

Dan Nystedt: US Commerce Secretary Lutnick said the US and China finalized a trade deal 2-days ago and the US will lift export curbs on semiconductor engineering software and some materials in exchange for China re-starting rare earths shipments to the US, Bloomberg reports. A framework for a deal was agreed upon 2-weeks ago.

We are allowing exporting of ‘semiconductor engineering software’? Depending on what that exactly means, that could be a complete nothingburger, or it could be critical to allowing China to accelerate its chip manufacturing, or anything in between. My understanding is that this reverses an aggressive change we made in May, and it is indeed a big deal but only returns us to the recent status quo.

Meanwhile, in case you thought the market’s reactions to news made too much sense:

Miles Brundage: The one day there’s actually bad news for NVIDIA (OpenAI using TPUs), the stock still goes up.

Efficient markets my butt. It’s vibes all the way down.

The reasonable response is ‘this shows demand is so high that they have to also go elsewhere, they will obviously still buy every chip Nvidia makes so it is bullish.’

That’s not a crazy argument, except if you accept it then that makes every other market reaction make even less sense than before. And yes, I am fully aware of the standard ‘you don’t have the full picture’ arguments involved, but I don’t care.

Here are some more details:

– OpenAI’s compute bill is ~$4B

– 50%/50% training/inference

– 2025 projection: ~$14B

– Has now begun using Google’s TPU via Google Cloud to reduce costs

– This is the first time they used non-Nvidia compute

– Google don’t let anyone else use the most powerful TPUs

Simeon: (prevents anyone else from using its TPUs except SSI, Anthropic, and DeepMind, i.e. basically all the other frontier labs)

It would be a very interesting fact about the world if a lot of OpenAI’s compute starts depending on Google.

Ross Douthat interviews Peter Thiel, including about AI.

Ross Douthat (Podcast Summary): The billionaire Peter Thiel is unimpressed with our pace of innovation. In this episode, he critiques artificial intelligence, longevity science and space travel — and warns that our lack of progress could lead to catastrophic outcomes, including the emergence of the Antichrist.

Peter Thiel expects AI to be ‘only internet big,’ which as Danielle Fong reminds us would still be very big, and which I consider the extreme bear scenario. Clearly this is not a person who feels the AGI or ASI.

There are some clips people are not taking kindly to, such as his long hesitation when asking if he would ‘prefer the human race endure,’ before ultimately saying yes.

Peter Wildeford: “You would prefer the human race to endure, right?”

“Should the human race survive?”

These should be VERY EASY questions!

Robin Hanson: Seems okay to me to pause before answering huge questions, even if a tentative obvious answer leaps to your mind immediately.

Eliezer Yudkowsky: *Deep breath*: No these are in fact questions where the exact meaning matters a bunch. In a billion years I want there to be minds that care about each other, having fun. If anyone is still dying of old age on Old Earth, that’s tragic.

I’d rather NOT see everyone I know ground down into spare atoms by an ASI built by an LLM that was build on current ML paradigms, killing all of us for nothing and leaving an expanding scar on the cosmos. Humanity must endure long enough to transcend rather than perish.

Why Thiel was hesitating to answer, I don’t know. Maybe it was the same reason I’d hesitate, the distinction between death and transcension. Maybe he’s got some weirder ideas going on. I don’t know. But I’ll not give him shit over this one.

I can see this both ways, especially given the context leading into the question. I mostly agree with Eliezer that we should cut him some slack over this one, but I also cut some slack to those who don’t cut him slack, and there are updates here. On the scale of statements Thiel has made that should unnerve you, this isn’t even that high, even if that scale was confined to this particular interview.

We absolutely should be pausing before answering big questions, especially when they involve important ambiguities, to get our answer to be precise. But also the hesitation or lack thereof in some contexts is itself communication, an important signal, and the fact that this is common knowledge reinforces that.

Thiel is presenting himself as He Who Hesitates Here, as a conscious choice, an answer to a test he has studied for, with a time limit of zero seconds.

The ‘correct’ answer given what I believe Thiel believes here is something like ‘yes [probably pause here], but we have to think carefully about what that means and solve these problems.’

Dwarkesh Patel interviews George Church, primarily about biology with a side of AI. Interesting throughout, as you would expect. Church warns repeatedly that AGI could be ‘a catastrophe of our own making,’ and suggests that we can make dramatic progress in biology using narrow AI instead.

Dwarkesh Patel: On a viewer-minute adjusted basis, I host the Sarah Paine podcast, where I sometimes also talk about AI.

Roon: dwarkesh is perhaps one of the best known silicon valley public intellectuals but people dont care about his tech guests, they wanna hear about mao, stalin, and the aryans.

Dwarkesh Patel: Every episode is about AI. Even if we’re not talking about AI, we’re talking about AI.

Roon: everything in life is about ai, except ai which is about power.

Dylan Patel talks Grok 4 and various other things.

Robert Miles offers advice on careers in AI Safety. He likes Grok in general (although it is not his main daily driver, for which he uses o3 and Claude 4 like the rest of us) and uses Grok for current events, which surprises me, and is relatively bullish on Grok 4. I loved the detail that xAI are literally buying a power plant and shipping it from overseas, wait you can do that? Maybe we should do a lot more of that, build new power plants in the UAE and then ship them here, that’s the kind of tech deal I can get behind.

Nate Sores argues in an excellent post, and I agree, that more people should say what they actually believe about AI dangers, loudly and often, even (and perhaps especially) if they work in AI policy. I recommend reading the whole thing and many comments are also very good.

At minimum, don’t feel ashamed, and don’t misrepresent your views and pretend you believe something you don’t. This point is highly underappreciated:

Nate Sores: I have a whole spiel about how your conversation-partner will react very differently if you share your concerns while feeling ashamed about them versus if you share your concerns while remembering how straightforward and sensible and widely supported the key elements are, because humans are very good at picking up on your social cues. If you act as if it’s shameful to believe AI will kill us all, people are more prone to treat you that way. If you act as if it’s an obvious serious threat, they’re more likely to take it seriously too.

Officials are mostly not stupid, and they absolutely can handle more of the truth.

And in the last year or so I’ve started collecting anecdotes such as the time I had dinner with an elected official, and I encouraged other people at the dinner to really speak their minds, and the most courage they could muster was statements like, “Well, perhaps future AIs could help Iranians figure out how to build nuclear material.”

To which the elected official replied, “My worries are much bigger than that; I worry about recursive self-improvement and superintelligence wiping us completely off the map, and I think it could start in as little as three years.”

I think it is totally fine to emphasize more practical and mundane dangers in many situations. I do this all the time. When you do that, you shouldn’t hide that this is not your true objection. Instead, you can say ‘I worry about superintelligence, but even if we don’t get superintelligence AI will cause [relatively mundane worry].’

Nate Silver and Eliezer Yudkowsky are once again running this experiment, with their forthcoming book that minces no words, and he reports that yes, things go better when you state the real stakes.

But I am at liberty to share the praise we’ve received for my forthcoming book. Eliezer and I do not mince words in the book, and the responses we got from readers were much more positive than I was expecting, even given all my spiels.

You might wonder how filtered this evidence is. It’s filtered in some ways and not in others.

We cold-emailed a bunch of famous people (like Obama and Oprah), and got a low response rate. Separately, there’s a whole tier of media personalities that said they don’t really do book endorsements; many of those instead invited us on their shows sometime around book launch. Most people who work in AI declined to comment. Most elected officials declined to comment (even while some gave private praise, for whatever that’s worth).

But among national security professionals, I think we only approached seven of them. Five of them gave strong praise, one of them (Shanahan) gave a qualified statement, and the seventh said they didn’t have time (which might have been a polite expression of disinterest). Which is a much stronger showing than I was expecting, from the national security community.

We also had a high response rate among people like Ben Bernanke and George Church and Stephen Fry. These are people that we had some very tangential connection to — like “one of my friends is childhood friends with the son of a well-connected economist.” Among those sorts of connections, almost everyone had a reaction somewhere between “yep, this sure is an important issue that people should be discussing” and “holy shit”, and most offered us a solid endorsement. (In fact, I don’t recall any such wacky plans that didn’t pan out, but there were probably a few I’m just not thinking of. We tried to keep a table of all our attempts but it quickly devolved into chaos.)

I think this is pretty solid evidence for “people are ready to hear about AI danger, if you speak bluntly and with courage.” Indeed, I’ve been surprised and heartened by the response to the book.

Indeed, Nate proposes to turn the whole thing on its head, discussing SB 1047.

One of my friends who helped author the bill took this sequence of events as evidence that my advice was dead wrong. According to them, the bill was just slightly too controversial. Perhaps if it had been just a little more watered down then maybe it would have passed.

I took the evidence differently.

I noted statements like Senator Ted Cruz saying that regulations would “set the U.S. behind China in the race to lead AI innovation. […] We should be doing everything possible to unleash competition with China, not putting up artificial roadblocks.” I noted J.D. Vance saying that regulations would “entrench the tech incumbents that we actually have, and make it actually harder for new entrants to create the innovation that’s going to power the next generation of American growth.”

On my view, these were evidence that the message wasn’t working. Politicians were not understanding the bill as being about extinction threats; they were understanding the bill as being about regulatory capture of a normal budding technology industry.

…Which makes sense. If people really believed that everyone was gonna die from this stuff, why would they be putting forth a bill that asks for annual reporting requirements? Why, that’d practically be fishy. People can often tell when you’re being fishy.

…

But, this friend who helped draft SB 1047? I’ve been soliciting their advice about which of the book endorsements would be more or less impressive to folks in D.C. And as they’ve seen the endorsements coming in, they said that all these endorsements were “slightly breaking [their] model of where things are in the overton window.” So perhaps we’re finally starting to see some clearer evidence that the courageous strategy actually works, in real life.[2]

This pattern is more common (in general, not only in AI) than one thinks. Often it is easier, rather than harder, to get the much larger ask you actually want, because it presents as congruent and confident and honest and conveys why you should get a yes. At minimum, it is usually much less additionally hard than you would expect. Tell them the real situation, ask for what you want, and you might get it (or you might still get the something less).

(There are of course lots of obvious exceptions, of course, where you need to calibrate your ask in a given situation. Mostly I am giving advice here on the margin.)

I have a much more positive view of SB 1047 and the efforts that went towards passing it than Nate Sores does, but I strongly agree that not laying out the true reasons behind the bill was an error. We ended up with the worst of both worlds. The people who wanted to warn about a ‘doomer’ bill, or that were going to go apocalyptic at the idea that something was being justified by concerns about existential risks, still noticed and went fully apocalyptic about that aspect, while keeping the discussions of consequences mostly focused on mundane (and very often hallucinated or fabricated) concerns, and people didn’t realize the logic.

I would draw a distinction between making SB 1047 more courageous in its actual legal impacts, versus making it more courageous in its messaging. I don’t think it would have helped SB 1047 to have pushed harder on actual impacts. But I do think the messaging, including within the bill text, being more courageous would have improved its chances.

The most important point is the one at the top, so here’s Robin Shah reiterating it. Whatever you actually do believe, shout it from the rooftops.

Robin Shah: While I disagree with Nate on a wide variety of topics (including implicit claims in this post), I do want to explicitly highlight strong agreement with this:

I have a whole spiel about how your conversation-partner will react very differently if you share your concerns while feeling ashamed about them versus if you share your concerns as if they’re obvious and sensible, because humans are very good at picking up on your social cues. If you act as if it’s shameful to believe AI will kill us all, people are more prone to treat you that way. If you act as if it’s an obvious serious threat, they’re more likely to take it seriously too.

The position that is “obvious and sensible” doesn’t have to be “if anyone builds it, everyone dies”. I don’t believe that position. It could instead be “there is a real threat model for existential risk, and it is important that society does more to address it than it is currently doing”. If you’re going to share concerns at all, figure out the position you do have courage in, and then discuss that as if it is obvious and sensible, not as if you are ashamed of it.

(Note that I am not convinced that you should always be sharing your concerns. This is a claim about how you should share concerns, conditional on having decided that you are going to share them.)

One particular danger is that a lot of people dismiss worried about AI as ‘oh the AI safety people think there is a low probability of a really bad outcome’ and then this is often viewed very negatively. So when you say ‘even a very low probability of a really bad outcome is worth preventing,’ I mean that is very true and correct, but also most people saying this don’t think the probability of the bad outcomes is very low. I think the chance of supremely bad is above 50%, and most people giving such warnings are at least at 10% or more, on top of the chance of more mundane bad outcomes.

Cameron Berg and Judd Rosenblatt replicate a form of and then explain the emergent misalignment results in plain terms in the Wall Street Journal. This is what it looks like to try and talk to regular folks about these things, I suppose.

A Wall Street Journal article (with nothing that new to report) claims ‘China is quickly eroding America’s lead in the global AI race’, and the whole thing reads like a whole ‘market share uber alles’ combined with ‘technological cold war,’ ‘China is definitely about to beat us real soon now, look there are some people who use Chinese models sometimes’ and ‘someone self-hosting our open model counts as sales and locks them into our tech forever’ propaganda piece fed to the authors hook, line and sinker.

Not new sentiment, but bears repeating periodically that people really think this.

Yanco: Can anyone name 1 reason why a vastly super-intelligent AI would want to merge with humans?

Michael Druggan: It won’t and that’s ok. We can pass the torch to the new most intelligent species in the known universe.

Yanco: I would prefer my child to live.

Michael Druggan: Selfish tbh.

Yanco: Are you a parent? Love your children? Then you are selfish and it is actually good that you & your kids will be murdered by AI (+everyone else). ~10% of AI researchers working hard to make such AI reality basically think the same.

Gabriel: I dislike dunking on randos.

But I heard the same argument from an SF org CEO.

“People caring about the happiness of humans are selfish.

They must instead care about future counterfactual non-human sentient beings.

But it’s ok.

People don’t have enough power to matter.”

I know, I know, but prediction evaluation, so: After Gary Marcus gives himself 7/7, Oliver Habryka evaluates Gary Marcus’s AI predictions for 2024 that were made in March. Here are the predictions:

• 7-10 GPT-4 level models

• No massive advance (no GPT-5, or disappointing GPT-5)

• Price wars

• Very little moat for anyone

• No robust solution to hallucinations

• Modest lasting corporate adoption

• Modest profits, split 7-10 ways

I very much respect Oliver, and his attempt here to evaluate the predictions.

Two of the seven are very clearly outright false. I strongly agree with Oliver that the first prediction is clearly false there were far more than 10 such models, and when you give a range a miss in either direction is a miss. I also agree that ‘modest lasting corporate adoption’ is false relative to the baseline of almost every technological advance in modern history.

Two are very clearly true enough to count, price wars and no solution to hallucinations, although those are not the boldest of predictions. We all agree.

From there, it depends on how to operationalize the statements. Overall I’d mostly agree with Oliver, it’s about half right and many of the questions should resolve as stated to ‘well, kind of.’

Marcus then puts out nine predictions for 2029, four and a half years from now, which is a much harder task than predicting trends nine months out.

The LLM race will be basically a tie between China and U.S.

Pure LLMs will still hallucinate (alternative architectures may do better).

Pure LLMs will still make stupid errors (alternative architectures may do better).

Profit margins for LLMs will be slim.

There will still be plenty of jobs in which humans experts far outclass AI.

Some current (2025) occupations, though, will no longer exist.

Driverless taxi rides will be common, but still in limited cities, available in less than 50% of the world’s major (100k+) cities.

Domain-specific models will still outperform general-purpose chatbots in many domains (board games, video games, protein folding, logistics, navigation, etc).

Humanoid home robots over 5’ tall will still be demos, not in widespread release.

There’s a lot of ambiguity here that would make me not want to operationalize many of these into prediction markets.

-

Which ‘LLM race’ are we talking about in #1 and how would we decide who won?

-

It this saying non-zero hallucination rate (in which case, probably true even for AGIs, probably ASIs, but so what?) or a not-that-reduced hallucination rate (in which case, specify, and I disagree)?

-

Again, is this ‘there exists [stupid error] I can find if I look’ or that they do this enough that stupid errors are expected and common? What counts as stupid?

-

Need to pick a number, and clarify how many LLMs have to be profitable to serve.

-

This doesn’t even specify digital jobs, so this is saying no singularity, basically? I mean, okay, but you’re not making a bold claim.

-

Is this 90%, 99% or 100% decline? For reasonable definitions yes we all agree here.

-

This one is well-defined and seems reasonable given it is global. If it covered the largest half of such locations that would mean that about 2.5 billion people are covered.

-

Define ‘many’ and also degree of ‘domain-specific’ but yes, pretty obviously true even if we get superintelligence. If I was a superintelligence that wanted to win a super important chess game I would invent a better chess engine, not learn to play the game myself, unless I was already perfect at chess. For something like logistics I’d at least want to fine-tune, or alternatively I’d call one of the experts in my mixture of experts. The obvious follow-up is, so what?

-

Would want to pick a number to count as widespread but mostly this is clean. I would take the other side of this bet if it operationalizes as ‘those who have the money and want one can get one.’

These do paint a picture of a consistent world, where ‘LLM intelligence’ is fungible and levels off and its utility is sharply limited not far above where it is now. That is not how I expect things to go, but it certainly is possible.

Adam Thierer observes and breaks down the growing division between the Tech Right, which he frames as ‘pro-innovation,’ and the Populist Right, which is skeptical of technological innovation.

Adam Thierer: The tension between the pro-innovation “Tech Right” and innovation-skeptical “Populist Right” is now driving a bigger and bigger wedge in the coalition that Trump somehow brought together last year.

…

But, as Hausenloy notes in his new essay, the battle over the AI moratorium fully exposed these tensions and just completely tore that coalition apart in the process.

A large part of the Trump coalition cares about things that are very different than what Adam Thierer cares about, and also very different than what I care about, and sees the world through a very different lens.

I totally see where Thierer is coming from, and indeed on many other issues and regarding many other techs my position is or would be extremely close to his. And I understand why this came as a surprise:

Adam Thierer: To exemplify my point, consider this story from a recent House hearing on AI policy where I testified. I figured this hearing would be a relatively sleepy session because the topic was how federal agencies might be able to use AI to improve government efficiency.

As the hearing went on, however, a few Republican lawmakers began going off on wild tangents about AI being a threat to humanity, about tech companies trying to replace God with some new AI deity, and AI ushering in a broader transhumanist movement. They had really whipped themselves into a panic and seemed to believe that AI innovators were hell-bent on ushering in a complete technological takeover of humanity.

These sentiments reflected the same sort of technopanic mentality on display in that “Future of the Family” anti-tech manifesto from some conservative intellectuals. As Hausenloy notes, these populists “question whether humans should attempt to construct intelligences exceeding our own capabilities — a modern Tower of Babel disconnected from Biblical values.”

None of that is a good reason to avoid using AI to improve government efficiency, but in terms of the bigger picture those lawmakers and this type of thinking are remarkably close to the actual situation. AI will be, if it continues to gain in capabilities, a threat to humanity. If you build intelligences exceeding our own capabilities, why would you think that wouldn’t be a threat and treat that as some mad ramble? What makes you think any of this is going to reflect our values or remain under our control, when no one has a plan for making that happen or knows how to do it? They’re simply right about this, and for essentially the right reasons.

Soft Minus (image quotes Thierer): I mean the stuff in red is literally what — almost word for word — the leaders of many of the AI companies openly talk about wanting to achieve with their work.

Directors of & major figures from _all_ major American AI companies (OpenAI, Anthropic, Google DeepMind, xAI) have openly expressed hard-transhumanist/posthumanist sentiments/desires (up to, including, beyond describing AI as mankind’s successor) as being furthered by their work.

Sure, maybe it’s all marketing talk. But that stuff directly _comes from_ the Al companies (whether it be real or mere puffery): it’s certainly _not_ invented wholecloth by paranoid technophobic/luddite congressmen (if only because they could never come up with that stuff).