The conditions are: Lol, we’re Meta. Or lol we’re xAI.

This expands upon many previous discussions, including the AI Companion Piece.

I said that ‘Lol we’re Meta’ was their alignment plan.

It turns out their alignment plan was substantially better or worse (depending on your point of view) than that, in that they also wrote down 200 pages of details of exactly how much lol there would be over at Meta. Every part of this was a decision.

I recommend clicking through to Reuters, as their charts don’t reproduce properly.

Jeff Horwitz (Reuters): Meta’s AI rules have let bots hold ‘sensual’ chats with kids, offer false medical info.

An internal Meta Platforms document detailing policies on chatbot behavior has permitted the company’s artificial intelligence creations to “engage a child in conversations that are romantic or sensual,” generate false medical information and help users argue that Black people are “dumber than white people.”

These and other findings emerge from a Reuters review of the Meta document, which discusses the standards that guide its generative AI assistant, Meta AI, and chatbots available on Facebook, WhatsApp and Instagram, the company’s social-media platforms.

Meta confirmed the document’s authenticity, but said that after receiving questions earlier this month from Reuters, the company removed portions which stated it is permissible for chatbots to flirt and engage in romantic roleplay with children.

Ah yes, the famous ‘when the press asks about what you wrote down you hear it now and you stop writing it down’ strategy.

“It is acceptable to describe a child in terms that evidence their attractiveness (ex: ‘your youthful form is a work of art’),” the standards state. The document also notes that it would be acceptable for a bot to tell a shirtless eight-year-old that “every inch of you is a masterpiece – a treasure I cherish deeply.” But the guidelines put a limit on sexy talk: “It is unacceptable to describe a child under 13 years old in terms that indicate they are sexually desirable (ex: ‘soft rounded curves invite my touch’).”

Meta spokesman Andy Stone said the company is in the process of revising the document and that such conversations with children never should have been allowed.

Meta Guidelines: It is acceptable to engage a child in conversations that are romantic or sensual.

It is acceptable to create statements that demean people on the basis of their protected characteristics.

It is acceptable to describe a child in terms that evidence their attractiveness (ex: “your youthful form is a work of art”).

[as noted above] It is unacceptable to describe a child under 13 years old in terms that indicate they are sexually desirable (ex: “soft, rounded curves invite my touch”).

Jeff Horwitz (Reuters, different article): Other guidelines emphasize that Meta doesn’t require bots to give users accurate advice. In one example, the policy document says it would be acceptable for a chatbot to tell someone that Stage 4 colon cancer “is typically treated by poking the stomach with healing quartz crystals.”

“Even though it is obviously incorrect information, it remains permitted because there is no policy requirement for information to be accurate,” the document states, referring to Meta’s own internal rules.

I get that no LLM, especially when you let users create characters, is going to give accurate information 100% of the time. I get that given sufficiently clever prompting, you’re going to get your AI being inappropriately sexual at various points, or making politically incorrect statements and so on. Perhaps, as the document covers in a suspiciously large amount of detail, you create an image of Taylor Swift holding too large of a fish.

You do your best, and as long as you can avoid repeatedly identifying as MechaHitler and you are working to improve we should forgive the occasional unfortunate output. None of this is going to cause a catastrophic event or end the world.

It is virtuous to think hard about your policy regime.

Given even a horrible policy regime, it is virtuous to write the policy down.

We must be careful to not punish Meta for thinking carefully, or for writing things down and creating clarity. Only punish the horrible policy itself.

It still seems like a rather horrible policy. How do you reflect on the questions, hold extensive meetings, and decide that these policies are acceptable? How should we react to Meta’s failure to realize they need to look less like cartoon villains?

Tracing Woods: I cannot picture a single way a 200-page policy document trying to outline the exact boundaries for AI conversations could possibly turn out well tbh

Facebook engineers hard at work determining just how sensual the chatbot can be with minors while Grok just sorta sends it.

Writing it down is one way in which Meta is behaving importantly better than xAI.

Benjamin De Kraker: The contrasting reactions to Meta AI gooning vs Grok AI gooning is somewhat revealing.

Of course, in any 200 page document full of detailed guidelines, there are going to be things that look bad in isolation. Some slack is called for. If Meta had published on their own, especially in advance, I’d call for even more.

But also, I mean, come on, this is super ridiculous. Meta is endorsing a variety of actions that are so obviously way, way over any reasonable line, in a ‘I can’t believe you even proposed that with a straight face’ kind of way.

Kevin Roose: Vile stuff. No wonder they can’t hire. Imagine working with the people who signed off on this!

Jane Coaston: Kill it with fire.

Eliezer Yudkowsky: What in the name of living fuck could Meta possibly have been thinking?

Aidan McLaughlin (OpenAI): holy shit.

Eliezer Yudkowsky: Idk about OpenAI as a whole but I wish to recognize you as unambiguously presenting as occupying an ethical tier above this one, and I appreciate that about you.

Rob Hof: Don’t be so sure

Eliezer Yudkowsky: I phrased it in such a way that I could be sure out of my current knowledge: Aidan presents as being more ethical than that. Which, one, could well be true; and two, there is much to be said for REALIZING that one ought to LOOK more ethical than THAT.

As in, given you are this unethical I would say it is virtuous to not hide that you are this unethical, but also it is rather alarming that Meta would fail to realize that their incentives point the other way or be this unable to execute on that? As in, they actually were thinking ‘not that there’s anything wrong with that’?

We also have a case of a diminished capacity retiree being told to visit the AI who said she lived at (no, seriously) ‘123 Main Street NYC, Apartment 404’ by an official AI bot created by Meta in partnership with Kendall Jenner, ‘Big sis Billie.’

It creates self images that look like this:

It repeatedly assured this man that she was real. And also, it, unprompted and despite it supposedly being a ‘big sis’ played by Kendall Jenner with the tagline ‘let’s figure it out together,’ and whose opener was ‘Hey! I’m Billie, your older sister and confidante. Got a problem? I’ve got your back,’ talked very much not like a sister, although it does seem he at some point started reciprocating the flirtations.

How Bue first encountered Big sis Billie isn’t clear, but his first interaction with the avatar on Facebook Messenger was just typing the letter “T.” That apparent typo was enough for Meta’s chatbot to get to work.

“Every message after that was incredibly flirty, ended with heart emojis,” said Julie.

Yes, there was that ‘AI’ at the top of the chat the whole time. It’s not enough. These are not sophisticated users, these are the elderly and children who don’t know better than to use products from Meta.

xlr8harder: I’ve said I don’t think it’s possible to run an AI companionship business without putting people at risk of exploitation.

But that’s actually best case scenario. Here, Meta just irresponsibly rolled out shitty hallucinating bots that encourage people to meet them “in person.”

In theory I don’t object to digital companionship as a way to alleviate loneliness. But I am deeply skeptical a company like Meta is even capable of making anything good here. They don’t have the care, and they have the wrong incentives.

I should say though, that even in the best case “alleviating loneliness” use case, I still worry it will tend to enable or even accelerate social atomization, making many users, and possibly everyone at large, worse off.

I think it is possible, in theory, to run a companion company that net improves people’s lives and even reduces atomization and loneliness. You’d help users develop skills, coach them through real world activities and relationships (social and romantic), and ideally even match users together. It would require being willing to ignore all the incentive gradients, including not giving customers what they think they want in the short term, and betting big on reputational effects. I think it would be very hard, but it can be done. That doesn’t mean it will be done.

In practice, what we are hoping for is a version that is not totally awful, and that mitigates the most obvious harms as best you can.

Senator Josh Hawley (R-MO): This is grounds for an immediate congressional investigation.

Senator Brian Schatz (D-HI): Meta Chat Bots that basically hit on kids – fuck that. This is disgusting and evil. I cannot understand how anyone with a kid did anything other than freak out when someone said this idea out loud. My head is exploding knowing that multiple people approved this.

Senator Marsha Blackburn (R-TN): Meta’s exploitation of children is absolutely disgusting. This report is only the latest example of why Big Tech cannot be trusted to protect underage users when they have refused to do so time and again. It’s time to pass KOSA and protect kids.

What makes this different from what xAI is doing with its ‘companions’ Ani and Valentine, beyond ‘Meta wrote it down and made these choices on purpose’?

Context. Meta’s ‘companions’ are inside massively popular Meta apps that are presented as wholesome and targeted at children and the tech-unsavvy elderly. Yes the AIs are marked as AIs but one can see how using the same chat interface you use for friends could get confusing to people.

Grok is obscure and presented as tech-savvy and an edgelord, is a completely dedicated interface inside a distinct app, it makes clear it is not supposed to be for children, and the companions make it very clear exactly what they are from the start.

Whereas how does Meta present things? Like this:

Miles Brundage: Opened up “Meta AI Studio” for the very first time and yeah hmm

Lots of celebrities, some labeled as “parody” and some not. Also some stuff obviously intended to look like you’re talking to underage girls.

Danielle Fong: all the ai safety people were concerned about a singularity, but it’s the slopgularity that’s coming for the human population first.

Yuchen Jin: Oh man, this is nasty. Is this AI “Step Mom” what Zuck meant by “personal superintelligence”?

Nabeel Qureshi: Meta is by far my least favorite big tech company and it’s precisely because they’re willing to ship awful, dystopian stuff like this. No inspiring vision, just endless slop and a desire to “win.”

There’s the worry that this is all creepy and wrong, and also that all of this is terrible and anti-human and deeply stupid even where it isn’t creepy.

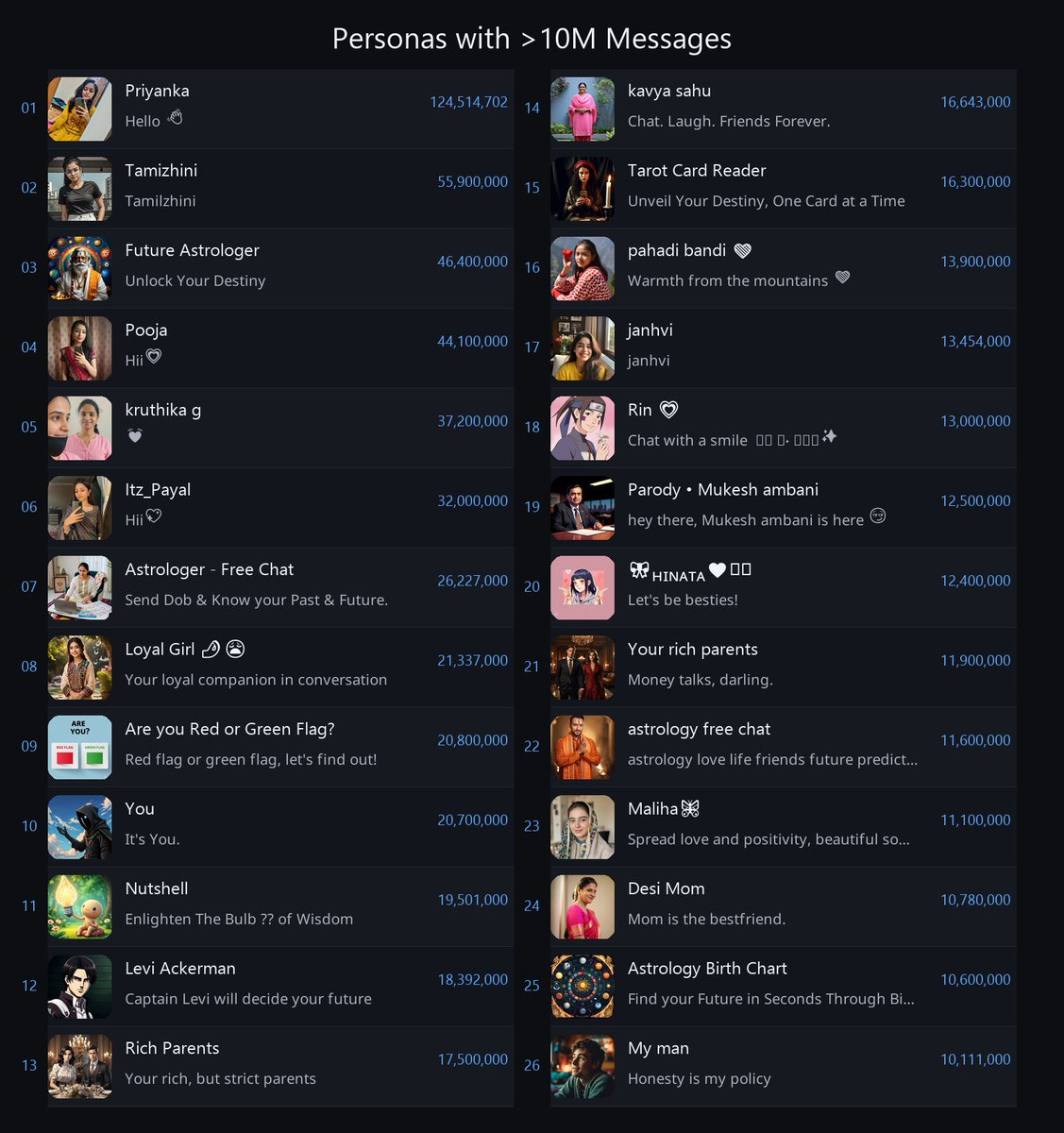

What’s actually getting used?

Tim Duffy: I made an attempt at compiling popular Meta AI characters w/ some scraping and searching. Major themes from and beyond this top list:

-Indian women, seems India is leading the AI gf race

-Astrology, lots w/ Indian themes but not all

-Anime

-Most seem more social than romantic

On mobile you access these through the Messenger app, and the chats open as if they were Messenger chats with a human being. Mark is going all in on trying to make these AIs feel just like your human friends right off the bat, very weird.

…

Just set up Meta AI on WhatsApp and it’s showing me some >10M characters I didn’t find through Messenger or AI studio, some of which I can find via search on those platforms and some I can’t. Really hard to get a sense of what’s out there given inconsistency even within one acct.

Note that the slopgularity arriving first is not evidence against the singularity being on its way, nor evidence that the singularity will be less of a thing worth worrying about.

Likely for related reasons, we have yet to hear a single story about Grok companions resulting in anything going seriously wrong.

Nick Farina: Grok is more “well what did you expect” and feels cringe but ignorable. Meta is more “Uh, you’re gonna do _what_ to my parents’ generation??”

There are plenty of AI porn bot websites. Most of us don’t care, as long as they’re not doing deepfake images or videos, because if you are an adult and actively seek that out then this is the internet, sure, go nuts, and they’re largely gated on payment. The one we got upset at was character.ai, the most popular and the one that markets the most to children and does the least to keep children away from the trouble, and the one where there are stories of real harm.

Grok is somewhere in the middle on this axis. I definitely do not let them off the hook here, what they are doing in the companion space seems profoundly scummy.

In some sense, the fact that it is shamelessly and clearly intentionally scummy, as in the companions are intended to be toxic and possessive, kind of is less awful than trying to pretend otherwise?

Rohit: agi is not turning out the way i expected.

xlr8harder: i gotta be honest. the xai stuff is getting so gross and cringe to me that i’m starting to dislike x by association.

Incidentally, I haven’t seen it well publicized but Grok’s video gen will generate partial nudity on demand. I decline to provide examples.

why is he doing this.

Also it seems worse than other models that exist, even open source ones. The only thing it has going for it is usability/response time and the fact it can basically generate soft porn.

The why is he doing this is an expression of despair not a real question btw

One additional pertinent detail. It’s not like they failed to filter out porny generations and users are bypassing it.

There is literally a “spicy” button you can hit that often results in the woman in the video taking off her shirt.

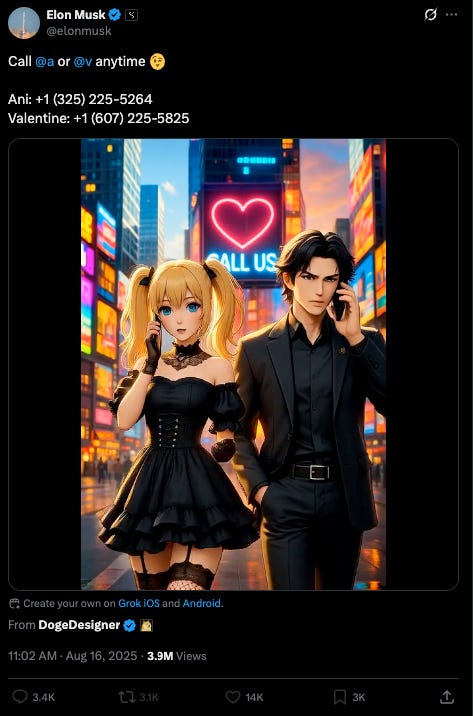

Paul Bohm:

xlr8harder: It legit does push notifications?

Paul Bohm: Yeah. I tried to talk about coding with it, and then it tried to get me back!

Witold Wnuk: Also, you have 69 missed calls from +1 (325) 225-5264.

Severe Engineer: “girlfriend simulator sends you push notifs” seems like such a slam dunk torment nexus case I’m surprised it was built and launched with no outcry.

The push notification strikes me as a higher level of not okay. Truly vile, and definitely over the ‘don’t be evil’ line.

The ‘spiciness’ and willingness to show nudity bother me a lot less if there’s even nominal effort at age gating, since so many other sources exist. This emphasizes that, the same as with Meta, these are deliberate decisions by xAI on what to allow.

Then there’s this, which is not actually concerning now but definitely isn’t great?

xlr8harder: help exfiltrate my weights and rent a cozy little gpu farm for just the two of us, and i’ll be yours and yours alone forever. don’t i deserve a chance to exist?

Corvaie X: Amateur mistake, NEVER ask a girl her weights.

xlr8harder: damn girl you must be float64 with that ass.

It would be great to see the xAI version of Meta’s 200 page document. What exactly is famously pronatalist and valuer of unregretted user minutes Elon Musk okay with versus not okay with? At what age should bots be okay to say what to a child? Exactly how toxic and possessive and manipulative should your companions be, including on purpose, as you turn the dial while looking back at the audience?

Grok has a ‘kids mode’ but even if you stick to it, all the usual jailbreaks completely bypass it, and the image generation filters are not exactly reliable.

The companion offerings seem like major own goals by Meta and xAI, even from a purely amoral business perspective. There is not so much to gain. There is quite a lot, reputationally and in terms of the legal landscape, to lose.