After seeing hundreds of launches, SpaceX’s rocket catch was a new thrill

For a few moments, my viewing angle made it look like the rocket was coming right at me.

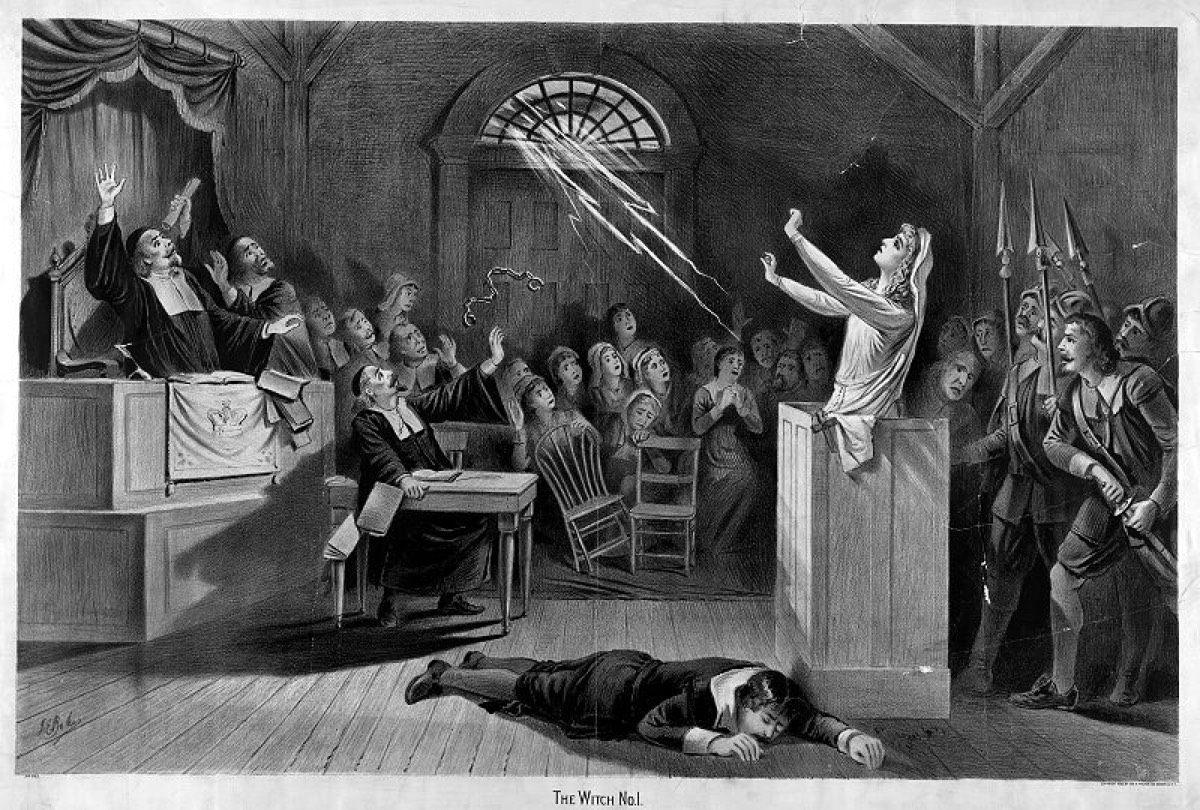

Coming in for the catch. Credit: Stephen Clark/Ars Technica

BOCA CHICA BEACH, Texas—I’ve taken some time to process what happened on the mudflats of South Texas a little more than a week ago and relived the scene in my mind countless times.

With each replay, it’s still as astonishing as it was when I saw it on October 13, standing on an elevated platform less than 4 miles away. It was surreal watching SpaceX’s enormous 20-story-tall Super Heavy rocket booster plummeting through the sky before being caught back at its launch pad by giant mechanical arms.

This is the way, according to SpaceX, to enable a future where it’s possible to rapidly reuse rockets, not too different from the way airlines turn around their planes between flights. Rapid reuse is required for SpaceX to accomplish the company’s mission, set out by Elon Musk two decades ago, of building a settlement on Mars.

Of course, SpaceX’s cameras got much better views of the catch than mine. This is one of my favorite video clips.

The final phase of Super Heavy’s landing burn used the three center Raptor engines to precisely steer into catch position pic.twitter.com/BxQbOmT4yk

— SpaceX (@SpaceX) October 14, 2024

In the near term, regularly launching and landing Super Heavy boosters, and eventually the Starship upper stage that goes into orbit, will make it possible for SpaceX to achieve the rapid-fire launch cadence the company needs to fulfill its contracts with NASA. The space agency is paying SpaceX roughly $4 billion to develop a human-rated version of Starship to land astronauts on the Moon under the umbrella of the Artemis program.

To make that happen, SpaceX must launch numerous Starship tankers over the course of a few weeks to a few months to refuel the Moon-bound Starship lander in low-Earth orbit. Rapid reuse is fundamental to the lunar lander architecture NASA chose for the first two Artemis landing missions.

SpaceX, which is funding most of Starship’s development costs, says upgraded versions will be capable of hauling 200 metric tons of payload to low-Earth orbit while flying often at a relatively low cost. This would unlock innumerable other potential applications for the US military and commercial industry.

Here’s a sampling of the photos I captured of SpaceX’s launch and catch, followed by the story of how I got them.

The fifth full-scale test flight of SpaceX’s new-generation Starship rocket lifted off from South Texas at sunrise Sunday morning. Credit: Stephen Clark/Ars Technica

Some context

I probably spent too much time watching last week’s flight through my camera’s viewfinder, but I suspect I’ll see it many more times. After all, SpaceX wants to make this a routine occurrence, more common than the landings of the smaller Falcon 9 booster now happening several times per week.

Nearly nine years ago, I watched from 7 miles away as SpaceX landed a Falcon 9 for the first time. That was as close as anyone not directly involved in the mission could get as the Falcon 9’s first stage returned to Cape Canaveral Space Force Station in Florida, a few minutes after lifting off with a batch of commercial communications satellites.

Citing safety concerns, NASA and the US Air Force closed large swaths of the spaceport for the flight. Journalists and VIPs were kept far away, and the locations on the base where employees or special guests typically watch a launch were off-limits. The landing happened at night and played out like a launch in reverse, with the Falcon 9 booster settling to a smooth touchdown on a concrete landing pad a few miles from the launch site.

The Falcon 9 landing on December 21, 2015, came after several missed landings on SpaceX’s floating offshore drone ship. With the Super Heavy booster, SpaceX nailed the catch on the first try.

The catch method means the rocket doesn’t need to carry landing legs, as the Falcon 9 does. This reduces the rocket’s weight and complexity, and theoretically reduces the amount of time and money needed to prepare the rocket to fly again.

I witnessed the first catch of SpaceX’s Super Heavy booster last week from just outside the restricted zone around the company’s sprawling Starbase launch site in South Texas. Deputies from the local sheriff’s office patrolled the area to ensure no one strayed inside the keep-out area and set up roadblocks to turn away anyone who wasn’t supposed to be there.

The launch was early in the morning, so I arrived late the night before at a viewing site run by Rocket Ranch, a campground that caters to SpaceX fans seeking a front-row seat to the goings-on at Starbase. Some SpaceX employees, several other reporters, and media photographers were there, too.

There are other places to view a Starship launch. Condominium and hotel towers on South Padre Island, roughly 6 miles from the launch pad and farther away than my post, offer commanding aerial views of Starbase, which is situated on Boca Chica Beach a few miles north of the US-Mexico border. The closest publicly accessible place to watch a Starship launch is on the south shore of the mouth of the Rio Grande, but if you’re coming from the United States, getting there requires crossing the border and driving off-road.

People gather at the Rocket Ranch viewing site near Boca Chica Beach, Texas, before the third Starship test flight in March. Credit: Brandon Bell/Getty Images

I chose a location with an ambiance somewhere between the hustle and bustle of South Padre Island and the isolated beach just across the border in Mexico. On the eve of the launch, the vibe was a mix of a rave and a pilgrimage of SpaceX true believers.

A laser light show projected the outline of a Starship against a tree as uptempo EDM tracks blared from speakers. Meanwhile, dark skies above revealed cosmic wonders invisible to most city dwellers, and behind us, the Rio Grande inexorably flowed toward the sea. Those of us who were there to work got a few hours of sleep, but I’m not sure I can say the same for everyone.

At first light, a few scattered yucca plants sticking up from the chaparral were the only things between us and SpaceX’s sky-scraping Starship rocket on the horizon. We got word the launch time would slip 25 minutes. SpaceX chose the perfect time to fly, with a crystal-clear sky hued by the rising Sun.

First, you see it

I was at Starbase for all four previous Starship test flights and have covered more than 300 rocket launches in person. I’ve been privileged to witness a lot of history, but after hundreds of launches, some of the novelty has worn off. Don’t get me wrong—I still feel a lump in my throat every time I see a rocket leave the planet. Prelaunch jitters are a real thing. But I no longer view every launch as a newsworthy event.

October 13 was different.

Those prelaunch anxieties were present as SpaceX counted off the final seconds to liftoff. First, you see it. A blast of orange flashed from the bottom of the gleaming, frosty rocket filled with super-cold propellants. Then, the 11 million-pound vehicle began a glacial climb from the launch pad. About 20 seconds later, the rumble from the rocket’s 33 methane-fueled engines reached our location.

Our viewing platform shook from the vibrations for over a minute as Starship and the Super Heavy booster soared into the stratosphere. Two-and-a-half minutes into the flight, the rocket was just a point of bluish-white light as it accelerated east over the Gulf of Mexico.

Another burst of orange encircled the rocket during the so-called hot-staging maneuver, when the Starship upper stage lit its engines at the moment the Super Heavy booster detached to begin the return to Starbase. Flying at the edge of space more than 300,000 feet over the Gulf, the booster flipped around and fired its engines to cancel out its downrange velocity and propel itself back toward the coastline.

The engines shut down, and the booster plunged deeper into the atmosphere. Eventually, the booster transformed from a dot in the sky back into the shape of a rocket as it approached Starbase at supersonic speed. The rocket’s velocity became more evident as it got closer. For a few moments, my viewing angle made it look like the rocket—bigger than the fuselage of a 747 jumbo jet—was coming right at me.

The descending booster zoomed through the contrail cloud it left behind during launch, then reemerged into clear air. With the naked eye, I could see a glow inside the rocket’s engine bay as it dived toward the launch pad, presumably from heat generated as the vehicle slammed into ever-denser air on the way back to Earth. This phenomenon made the rocket resemble a lit cigar.

Finally, the rocket hit the brakes by igniting 13 of its 33 engines, then downshifted to three engines for the final maneuver to slide in between the launch tower’s two catch arms. Like balancing a pencil on the tip of your finger, the Raptor engines vectored their thrust to steady the booster, which, for a moment, appeared to be floating next to the tower.

The Super Heavy booster, more than 20 stories tall, rights itself over the launch pad in Texas moments before two mechanical arms grab it in midair. Credit: Stephen Clark/Ars Technica

A double-clap sonic boom jolted spectators from their slack-jawed awe. Only then could we hear the roar from the start of the Super Heavy booster’s landing burn. This sound reached us just as the rocket settled into the grasp of the launch tower, with its so-called catch fittings coming into contact with the metallic beams of the catch arms.

The engines switched off, and there it was. Many of the spectators lucky enough to be there jumped up and down with joy, hugged their friends, or let out ecstatic yells. I snapped a few final photos and returned to my laptop, grinning and speechless, wondering how I could put this all into words.

Once the smoke cleared, the rocket looked, at first glance, as good as new. There was no soot on the outside of the booster, unlike a Falcon 9 booster returning from space. This is because the Super Heavy booster and Starship use cleaner-burning methane fuel instead of kerosene.

Elon Musk, SpaceX’s founder and CEO, later said the outer ring of engine nozzles on the bottom of the rocket showed signs of heating damage. This, he said, would be “easily addressed.”

What’s not so easy to address is how SpaceX can top this. A landing on the Moon or Mars? Sure, but realistically, those milestones are years off. There’s something that’ll happen before then.

Sometime soon, SpaceX will try to catch a Starship back at the launch pad at the end of an orbital flight. This will be an extraordinarily difficult feat, far exceeding the challenge of catching the Super Heavy booster.

Super Heavy only reaches a fraction of the altitude and speed of the Starship upper stage, and while the booster’s size and the catch method add degrees of difficulty, the rocket follows much the same up-and-down flight profile pioneered by the Falcon 9. Starship, on the other hand, will reenter the atmosphere from orbital velocity, streak through the sky surrounded by superheated plasma, then shift itself into a horizontal orientation for a final descent SpaceX likes to call the “belly flop.”

In the last few seconds, Starship will reignite three of its engines, flip itself vertical, and come down for a precision landing. SpaceX demonstrated the ship could do this on the test flight last week, when the vehicle made a controlled on-target splashdown in the Indian Ocean after traveling halfway around the world from Texas.

If everything goes according to plan, SpaceX could be ready to try to catch a Starship for real next year. Stay tuned.
