Rocket Lab chief opens up about Neutron delays, New Glenn’s success, and NASA science

“In the end of the day, NASA has to capture the public’s imagination.”

Peter Beck, founder and chief executive officer of Rocket Lab, during TechCrunch Disrupt in San Francisco on October 28, 2024. Credit: David Paul Morris/Bloomberg via Getty Images

The company that pioneered small launch has had a big year.

Rocket Lab broke its annual launch record with the Electron booster—17 successful missions this year, and counting—and is close to bringing its much larger Neutron rocket to the launch pad.

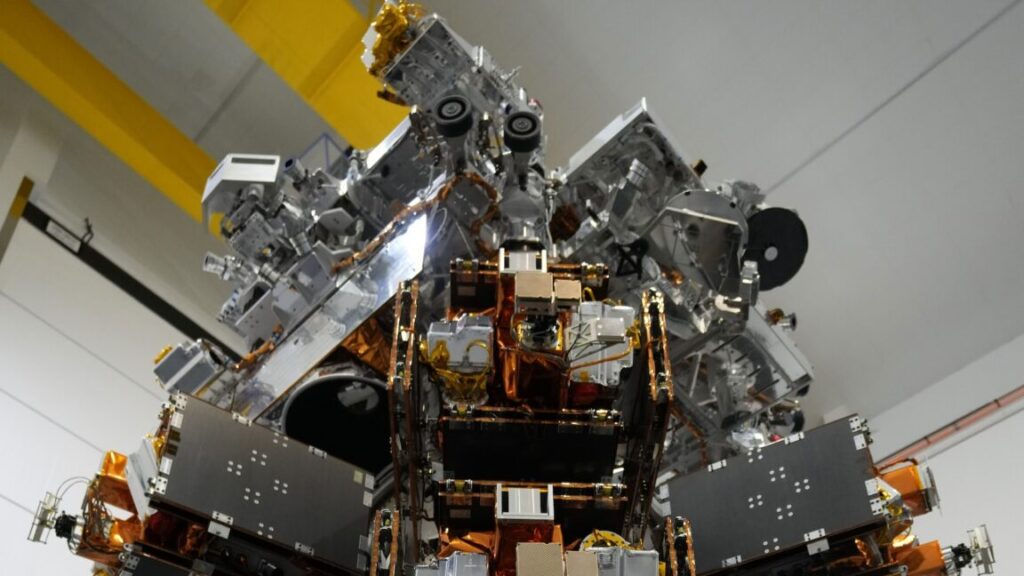

The company also expanded its in-space business, including playing a key role in supporting the landing of Firefly’s Blue Ghost mission on the Moon and building two small satellites just launched to Mars.

Overall, it has been quite a ride for the company founded nearly two decades ago in New Zealand by Peter Beck. A new book about the company’s origins and aspirations, The Launch of Rocket Lab, tells the story of the company’s rise in words and grand images.

Ars recently spoke with Beck about Rocket Lab’s past, present, and future. This interview has been edited lightly for clarity.

Ars: In reading through the book and considering the history of Rocket Lab, I’m continually amazed that a handful of engineers in the country with no space program, no space heritage, built the world’s second most accomplished commercial launch company. What do you attribute that success to?

Peter Beck: It’s hard to know. But there’s a few elements within Rocket Lab that have always remained steadfast, no matter what we do or how big we get. And I think a lot of space companies have tried to see how much they can get away with. And it turns out, in this industry, you just can’t get away with taking very many shortcuts at all. So I think that’s part of it. The attitude of our organization is like, nothing’s too big, nothing’s too hard. We just make it happen. The team works extremely hard. If you drive past the Rocket Lab car park on a Sunday, it looks just like the SpaceX car park on a Sunday. And, you know, the team is very mission-driven. They’re always fighting for a goal, which I think is important. And then, above anything, I just think we can never outspend Elon (Musk) and Jeff (Bezos). We have to out-hustle. And that’s just the reality. The Rocket Lab hustle comes down to just not accepting no as an answer. If a barrier comes up a lot of space companies, or a lot of companies in general, whether its regulatory or technical, it’s easy to submit to the problem, rather than just continue to attack it.

Ars: Electron keeps going. In fact, you’ve just flown a record 17th mission this year, and you continue to sign large deals. How has Electron survived the era of rideshare missions on the Falcon 9?

Beck: We’ve always had the thesis that there is a need for a dedicated small launch. You can put as many Bandwagons and as many Transporters as you want, and you can reduce the price to unsustainably low levels as long as you want. It doesn’t make any difference to us, because it’s a totally different product. As folks are building out constellations, it’s no use just getting dumped out in one orbit. So a lot of Electrons these days are just building out constellations for folks where they have optimized for a specific altitude and inclination and so forth. And we can hit those every time. And if you amortize the cost of launch over the actual lifetime of that constellation and the service that it can provide, it’s cheap, and it’s something rideshares can never deliver.

Ars: It’s surprising to me that after so many years and so many startups, there really isn’t a viable competitor in Electron’s class anywhere in the world.

Beck: It’s pretty hard to build a small rocket. I call it the pressure transducer equilibrium. A pressure transducer on a little rocket is a meaningful amount of mass. A pressure transducer on Neutron is totally irrelevant. Just throw 10 at them, and who cares? But on Electron, if you throw 10 pressure transducers at a problem, then you know, you’ve added a kilo. That’s a meaningful portion of the lift capacity of the vehicle. And there’s no super-magic store where you can go and buy a pressure transducer that scales with the size of the rocket. So you end up with a bunch of stuff that just doesn’t scale, that contributes meaningful mass to the vehicle. If you look at Electron’s payload performance, it’s really high for the size of that rocket. So that’s really hard to do because in an instance where you would throw 10 pressure transducers at a problem, we can only afford to throw one at Electron, but we still want the same redundancy and the same reliability and all of those kinds of things. So that just drives really, really difficult engineering solutions.

And then from a financial standpoint, it’s got a sticker price of $8.5 million, let’s call it. Your flight safety team doesn’t care if it’s a big rocket or a little rocket. Your range team doesn’t care if they’re opening a 12-inch valve or a 2-inch valve. All those teams just have to become ruthlessly efficient at doing that work. So if you go to a big rocket, you might have a flight safety team of 20 people. You come here, it has to be like three. So you have to find ways of really streamlining all those processes. And every little person and dollar and gram has to be ringed out.

Rocket Lab launches an Electron booster with a previously flown engine on Thursday. Credit: Rocket Lab

Ars: What’s going on with the Electron reuse program? My sense is that you’ve kind of learned what you needed to know and are moving on.

Beck: Yeah, that’s pretty much it. It was a hugely valuable learning tool, but if you look at an Electron recovery, we might recover sort of a million dollars worth of stage one booster. And of course, the more we make, the cheaper they get, because we’re continuing to scale so that it’s ever decreasing that return. Quite frankly, and honestly, it’s just like, do we have reusability and recovery teams working on something that returns a million dollars every time it flies? Or, do we have them working on Neutron, where it’s tens of millions of dollars every time you fly? So it’s just about, you know, directing the resource for the biggest bang for the buck.

Ars: I listened to your recent earnings call where you discussed Neutron’s development and delay into 2026. What are the biggest issues you face in getting Neutron over the finish line?

Beck: It would be actually easier if there was an issue, because then I could just say something blew up, or this is a problem. But there’s no real issues. It’s just that we’re not going to put something on the pad that doesn’t meet kind of the standard that’s made us successful. Say something might pass the qualification test, but if we see something in a strain gauge on the back of the panel, or something that we don’t understand, we just don’t move on. We’re not going to move on unless we understand every little element of what’s going on. Maybe I’m on some kind of spectrum for details, but that’s what’s kept us successful. It’s just a bigger rocket, and it’s got more unique features like hungry hippo (the payload fairing opening mechanism) and giant carbon structures. So, you know, it’s not like anything has shit the bed. It’s just a big machine, and there’s some new stuff, and we want to make sure we don’t lose the magic of what we created. A little bit of time now can save a huge amount of heartbreak later on.

Ars: Toward the end of the book, you say that Rocket Lab is best positioned to compete with SpaceX in medium-lift launch, and break up the Falcon 9 monopoly. What is your sense of the competitive landscape going forward? We just saw a New Glenn launch and land, and that was really impressive—

Beck: Bloody impressive. Jeff (Bezos) laid down a new bar. That was incredible. People forget that he’s been working on it for 22 years, but even so, that was impressive.

Ars: Yes, it’s been a journey for them. Anyway, there’s also Vulcan, but that’s only flown one time this year, so they’ve got a ways to go. Then Stoke and Relativity are working at it. What’s your view of your competition going forward?

Beck: I hate comparing it to aviation, but I call medium-class lifters the Boeing 737 of the industry. Then you got your A380s, which are your Starships and your New Glenns. And then you’ve got your Electrons, which are your private jets. And you know, if you look at the aviation sector, nobody comes in and just brings an airplane in and wipes everybody out, because there’s different needs and different missions. And just like there’s a 737 there’s an A320 and that’s kind of what Neutron is intending to be. We had a tremendous pull from our customers, both government and commercial, for alternatives to what’s out there.

The other thing to remember is, for our own aspirations, we need a high-cadence, reusable, low-cost, multi-ton lift capability. I think I’ve been clear that I think the large space companies of the future are going to be a little bit blurry. Are they a space company, or are they something else? But there’s one thing that is absolutely sure, that if you have multi-ton access to orbit in a reusable, low-cost way, it’s going to be very, very difficult to compete with if you’re someone who doesn’t have that capability. And if you look at our friends at SpaceX, yeah, Starlinks are great satellites and all the rest of it. But what really enabled Starlink was the Falcon 9. Launch is a difficult business. It’s kind of lumpy and deeply complex, but at the end of the day, it is the access to orbit. And, you know, having multi-ton access to orbit is just critical. If you’re thinking that you want to try and build one of the biggest space companies in the world, then you just have to have that.

Ars: Rocket Lab has expressed interest in Mars recently, both the Mars Telecommunications Orbiter and a Mars Sample Return mission. As Jared Isaacman and NASA think about commercial exploration of Mars, what would you tell them about what Rocket Lab could bring to the table?

Beck: I’m a great believer that government should do things for which it makes no sense for commercial entities to do, and commercial should do the things that it makes no sense for governments to do. Consider Mars Sample Return, we looked at that, and the plan was $11 billion and 20 years? It’s just, come on. It was crazy. And I don’t want to take the shine off. It is a deeply technical, deeply difficult mission to do. But it can be done, and it can be done commercially, and it can be done at a fraction of the price. So let industry have at it.

And look, Eric, I love planetary science, right? I love exploring the planets, and I think that if you have a space company that’s capable of doing it, it’s almost your duty for the knowledge of the species to go and do those sorts of things. Now, we’re a publicly traded company, so we have to make margin along the way. We’ve proven we can do that. Look at ESCAPADE. All up, it was like $50 million cost, launched, and on its way to Mars. I mean, that’s the sort of thing we need to be doing, right? That’s great bang for your buck. And you know, as you mentioned, we’re pushing hard on the MTO. The reality is that if you’re going to do anything on Mars, whether it’s scientific or human, you’ve got to have the comms there. It’s just basic infrastructure you’ve got to have there first. It’s all very well to do all the sexy stuff and put some humans in a can and send them off to Mars. That’s great. But everybody expects the communication just to be there, and you’ve got to put the foundations in first. So we think that’s a really important mission, and something that we can do, and something we can contribute to the first humans landing on Mars.

Rocket Lab’s Neutron rocket is shown in this rendering delivering a stack of satellites into orbit. Credit: Rocket Lab

Ars: You mentioned ESCAPADE. How’s your relationship with Jeff Bezos? I heard there was some tension last year because Rocket Lab was being asked to prepare the satellite for launch, even when it was clear New Glenn was not going to make the Mars window.

Beck: I know you want me to say yes, there is, but the honest truth is absolutely zero. I know David (Limp, Blue Origin’s CEO) super well. We’re great friends. Jeff and I were texting backwards and forwards during the launch. There’s just honestly none. And you know that they gave us a great ride. They were bang on the numbers. It was awesome. Yeah, sure, it would have been great to get there early. But it’s a rocket program, right? Nobody can show me a rocket program that turned up exactly on time. And yep, it may have been obvious that it might not have been able to launch on the first (window), but we knew there’s always other ways. Worst-case scenario, we have to go into storage for a little bit. These missions are years and years long. So what’s a little bit longer?

Ars: Speaking of low-cost science missions, I know Isaacman is interested in commercial planetary missions. Lots of $4 billion planetary missions just aren’t sustainable. If NASA commits to commercial development of satellite buses and spacecraft like it did to commercial cargo and crew, what could planetary exploration look like a decade from now?

Beck: I think that’d be tremendously exciting. One of the reasons why we did CAPSTONE was to prove that you can go to the Moon for $10 million. Now, we lost a lot of money on that mission, so that ultimately didn’t prove to be true. But it wasn’t crazy amounts, and we still got there miles cheaper than anybody else could have ever got there. And ESCAPADE, we have good margins on, and it’s just a true success, right? Touch wood to date, like we’ve got a long way to go, but success in the fact that the spacecraft were built, delivered, launched, and commissioned.

This is the thing. Take your billion-dollar mission. How many $50 million missions, or $100 million missions, could you do? Imagine the amount of science you can do. I think part of the reason why the public gets jaded with some of these science missions is because they happen once a decade, and they’ve got billions of dollars of price tags attached to them. It’s kind of transitorily exciting when they happen, but they’re so far apart. In the end of the day, NASA has to capture the public’s imagination, because the public are funding it. So it has to seem relevant, relevant to mum and dad at home. And you know, when mum and dad are home and it’s tough, and then they just hear billions of dollars and, you know, years of overrun and all the rest of it, how can they feel good about that? Whereas, if they can spend much less and deliver it on time and have a constant stream of really interesting missions in science, I think that it’s great for public justification. I think it’s great for planetary science, because obviously you’re iterating on your results, and it’s great for the whole community to just have a string of missions. And also, I think it’s great for US space supremacy to be blasting around the Solar System all the time, rather than just now and again.

Ars: Ok Pete, it’s November 18. How confident should we be in a Neutron launch next year? 50/50?

Beck: Hopefully better than 50/50. That would be a definite fail. We’re taking the time to get it right. I always caveat anything, Eric, that it’s a rocket program, and we’ve got some big tests in front of us. But to date, if you look at the program, it’s been super smooth; like we haven’t exploded tanks, we haven’t exploded engines. We haven’t had any major failure, especially when we’re pushing some new boundaries and some new technology. So I think it’s going really, really smoothly, and as long as it continues to go smoothly, then I think we’re in good shape.

Eric Berger is the senior space editor at Ars Technica, covering everything from astronomy to private space to NASA policy, and author of two books: Liftoff, about the rise of SpaceX; and Reentry, on the development of the Falcon 9 rocket and Dragon. A certified meteorologist, Eric lives in Houston.

Rocket Lab chief opens up about Neutron delays, New Glenn’s success, and NASA science Read More »