Some in the tech industry decided now was the time to raise the alarm about AB 3211.

As Dean Ball points out, there are a lot of bills out there. One must do triage.

Dean Ball: But SB 1047 is far from the only AI bill worth discussing. It’s not even the only one of the dozens of AI bills in California worth discussing. Let’s talk about AB 3211, the California Provenance, Authenticity, and Watermarking Standards Act, written by Assemblymember Buffy Wicks, who represents the East Bay.

SB 1047 is a carefully written bill that tries to maximize benefits and minimize costs. You can still quite reasonably disagree with the aims, philosophy or premise of the bill, or its execution details, and thus think its costs exceed its benefits. When people claim SB 1047 is made of crazy pills, they are attacking provisions not in the bill.

That is not how it usually goes.

Most bills involving tech regulation that come before state legislatures are made of crazy pills, written by people in over their heads.

There are people whose full-time job is essentially pointing out the latest bill that might break the internet in various ways, over and over, forever. They do a great and necessary service, and I do my best to forgive them the occasional false alarm. They deal with idiots, with bulls in china shops, on the daily. I rarely get the sense these noble warriors are having any fun.

AB 3211 unanimously passed the California assembly, and I started seeing bold claims about how bad it would be. Here was one of the more measured and detailed ones.

Dean Ball: The bill also requires every generative AI system to maintain a database with digital fingerprints for “any piece of potentially deceptive content” it produces. This would be a significant burden for the creator of any AI system. And it seems flatly impossible for the creators of open weight models to comply.

Under AB 3211, a chatbot would have to notify the user that it is a chatbot at the start of every conversation. The user would have to acknowledge this before the conversation could begin. In other words, AB 3211 could create the AI version of those annoying cookie notifications you get every time you visit a European website.

…

AB 3211 mandates “maximally indelible watermarks,” which it defines as “a watermark that is designed to be as difficult to remove as possible using state-of-the-art techniques and relevant industry standards.”

So I decided to Read the Bill (RTFB).

It’s a bad bill, sir. A stunningly terrible bill.

How did it unanimously pass the California assembly?

My current model is:

- There are some committee chairs and others that can veto procedural progress.
- Most of the members will vote for pretty much anything.
- They are counting on Newsom to evaluate and if needed veto.
- So California only sort of has a functioning legislative branch, at best.
- Thus when bills pass like this, it means a lot less than you might think.

Yet everyone stays there, despite everything. There really is a lot of ruin in that state.

Time to read the bill.

It’s short – the bottom half of the page is all deleted text.

Section 1 is rhetorical declarations. GenAI can produce inauthentic images; they need to be clearly disclosed and labeled, or various bad things could happen. That sounds like a job for California, which should require creators to provide tools, and platforms to provide labels. So we all can remain ‘safe and informed.’ Oh no.

Section 2, starting at 22949.90, provides some definitions. Most are standard. These aren’t:

(c) “Authentic content” means images, videos, audio, or text created by human beings without any modifications or with only minor modifications that do not lead to significant changes to the perceived contents or meaning of the content. Minor modifications include, but are not limited to, changes to brightness or contrast of images, removal of background noise in audio, and spelling or grammar corrections in text.

(i) “Inauthentic content” means synthetic content that is so similar to authentic content that it could be mistaken as authentic.

This post would likely be neither authentic nor inauthentic. Confusing.

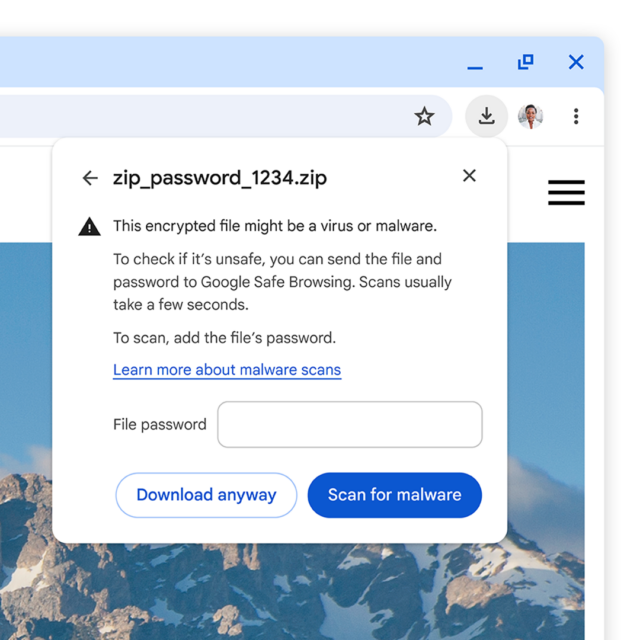

(k) “Maximally indelible watermark” means a watermark that is designed to be as difficult to remove as possible using state-of-the-art techniques and relevant industry standards.

That is a much higher standard than ‘reasonable care’ or ‘reasonable assurance.’ This essentially means (after an adoption period) you have to use whatever the ‘best’ technique is. Cost, or a hit to product quality, is not technically a factor. Some common sense applies, but this could get ugly.

(f) “Generative AI hosting platform” means an online repository or other internet website that makes generative AI systems available for download.

(g) “Generative AI provider” means an organization or individual that creates, codes, substantially modifies, or otherwise produces a generative AI system.

There is no minimum size or other threshold. It even says ‘individual.’

All right, what do these providers have to do?

That starts next, with 22949.90.1.

(a) A generative AI provider shall do all of the following:

(1) Place imperceptible and maximally indelible watermarks containing provenance data into synthetic content produced or significantly modified by a generative AI system that the provider makes available.

(A) If a sample of synthetic content is too small… [do your best anyway.]

(B) To the greatest extent possible, watermarks shall be designed to retain information that identifies content as synthetic and gives the name of the provider in the event that a sample of synthetic content is corrupted, downscaled, cropped, or otherwise damaged.

So: ‘to the greatest extent possible,’ even where that is absurd because the sample is too short, plus a use of ‘maximally.’ No qualifier at all on ‘imperceptible.’

This is not a situation where an occasional false negative is fatal. Why this standard?

(a)(2) says they have to offer downloadable watermark decoders, with another ‘greatest extent possible’ for adherence to ‘relevant national and international standards.’

(a)(3) says they need to conduct third-party red teaming exercises, including testing whether someone can ‘add false watermarks to authentic content.’ What? And they must submit a report.

(b) says a system released before this act can be grandfathered in, but only if you retroactively make a 99% accurate decoder, or the system is ‘not capable of producing inauthentic content.’

Or, here are the exact words, given what I think this provision does:

(b) A generative AI provider may continue to make available a generative AI system that was made available before the date upon which this act takes effect and that does not have watermarking capabilities as described by paragraph (1) of subdivision (a), if either of the following conditions are met:

(1) The provider is able to retroactively create and make publicly available a decoder that accurately determines whether a given piece of content was produced by the provider’s system with at least 99 percent accuracy as measured by an independent auditor.

(2) The provider conducts and publishes research to definitively demonstrate that the system is not capable of producing inauthentic content.

No one has any idea how to create a 99% accurate decoder, let alone a retroactively 99% accurate decoder.

Every LLM or image model worth using can produce inauthentic content.

This is therefore, flat out, a ban on all existing generative AI systems worth using that produce images or text. Claude 3.5 Sonnet anticipates that all existing LLMs would have to be withdrawn from the market.

(A model producing obviously distorted voice outputs might survive? Maybe.)
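To make the (b)(1) escape hatch concrete, here is a minimal Python sketch of how an independent auditor might score a retroactive decoder. Everything in it is an illustrative assumption: the `decoder` callable, the labeled audit set, and the function names are hypothetical, and the quoted text does not say how an audit set would be assembled. What it shows is that ‘accurately determines whether a given piece of content was produced by the provider’s system’ cuts both ways: flagging human writing as the model’s counts as a miss, just like failing to flag the model’s own output.

```python
from typing import Callable, Iterable, Tuple

# One audit item: (content, whether it really came from the provider's system).
# Hypothetical structure for illustration; the bill does not define one.
AuditItem = Tuple[str, bool]


def decoder_accuracy(decoder: Callable[[str], bool], audit_set: Iterable[AuditItem]) -> float:
    """Fraction of audit items the decoder classifies correctly.

    A false 'yes' on human-made content counts against the decoder exactly
    as much as a false 'no' on the system's own output.
    """
    total = 0
    correct = 0
    for content, from_system in audit_set:
        total += 1
        if decoder(content) == from_system:
            correct += 1
    if total == 0:
        raise ValueError("audit set is empty")
    return correct / total


def qualifies_for_grandfathering(accuracy: float, threshold: float = 0.99) -> bool:
    # (b)(1): "at least 99 percent accuracy as measured by an independent auditor"
    return accuracy >= threshold


def always_yes(content: str) -> bool:
    # A useless decoder that claims every input came from the provider's system.
    return True


if __name__ == "__main__":
    toy_audit = [("output from the provider's model", True), ("essay written by a human", False)]
    acc = decoder_accuracy(always_yes, toy_audit)
    print(acc, qualifies_for_grandfathering(acc))  # prints: 0.5 False
```

A decoder that flags everything scores 50% on a balanced audit set, and one that flags nothing does the same. Reaching 99% means reliably telling a system’s unwatermarked outputs apart from everything else, which is exactly the part no one knows how to do.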

As a reminder: This unanimously passed the California assembly.

Moving on.

(c) says no one shall provide anything designed to remove watermarks.

(d) says hosting platforms shall not make available anything not placing maximally indelible watermarks.

This is essentially saying (I think) that all internet hosting platforms would be held responsible if you could download any LLM that did not watermark, which includes every model that currently exists.

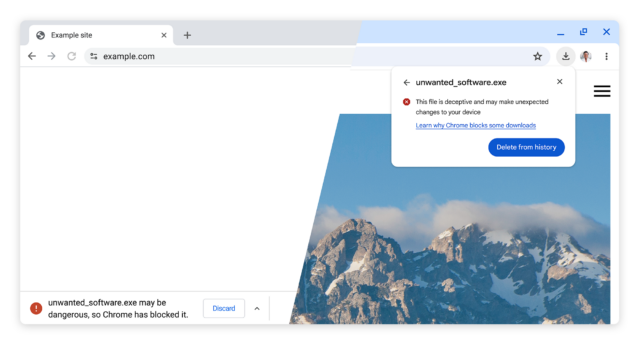

(e) requires reporting all vulnerabilities and failures within 24 hours, including notifying everyone involved.

A period of 24 hours is crazy short. The notification of the issue has to include all users who interacted with incorrectly marked data, so this is a public announcement. It gives no time to figure out what happened, or space to actually address or fix it.

(f) was noted above: it requires constant notification of AI content.

(f) (1) A conversational AI system shall clearly and prominently disclose to users that the conversational AI system generates synthetic content.

(A) In visual interfaces, including, but not limited to, text chats or video calling, a conversational AI system shall place the disclosure required under this subdivision in the interface itself and maintain the disclosure’s visibility in a prominent location throughout any interaction with the interface.

(B) In audio-only interfaces, including, but not limited to, phone or other voice calling systems, a conversational AI system shall verbally make the disclosure required under this subdivision at the beginning and end of a call.

(2) In all conversational interfaces of a conversational AI system, the conversational AI system shall, at the beginning of a user’s interaction with the system, obtain a user’s affirmative consent acknowledging that the user has been informed that they are interacting with a conversational AI system. A conversational AI system shall obtain a user’s affirmative consent prior to beginning the conversation.

(3) Disclosures and affirmative consent opportunities shall be made available to a user in the language in which the conversational AI system is communicating with the user.

(4) The requirements under this subdivision shall not apply to conversational AI systems that do not produce inauthentic content.

The intent here is good. People should know when they are interacting with an AI.

The key is to not be like GDPR and end up with endless pop-ups, click-throughs and even audio notifications.

In this case, for verbal content, I think (hope?) that clause (4) actually is doing work.

As in, suppose you are using Siri. Can Siri produce ‘inauthentic content’? Obviously if you are being sufficiently pedantic then yes. But in practice I’d say no.

If I were trying to salvage this bill, I would add a clause making it clear that repeated verbal interactions between a user and the same AI system don’t count, and that any system using a clearly robotic voice, or a voice chosen by the user, does not count either.

I don’t think this would turn every interaction into ‘Hey Siri, send an email to Josh inviting him to dinner.’ ‘I am Siri, a conversational AI system. What time should I ask him to come?’ But I’m not fully confident.

For text, there’s little question every decent LLM can produce ‘inauthentic content.’ So you’re losing one line of screen space permanently, including on a phone. Sounds annoying, needless and stupid. GDPR stuff.

22949.90.2 requires new digital cameras to include ‘authenticity and provenance watermarks’ on their outputs.

The first use of the camera will require a new disclosure. Then the camera will eat screen space for an indicator of the watermarking at all times while in use (why? What does this possibly accomplish?). Again, I can see a good argument for functionally requiring the core watermark capabilities, but the implementation is needlessly annoying.

22949.90.3 says large online platforms (at least 1 million California users) shall use labels to ‘prominently disclose’ the provenance data found in watermarks or digital signatures.

(i) “Large online platform” means a public-facing internet website, web application, or digital application, including a social network, media platform as defined in Section 22675, video-sharing platform, messaging platform, advertising network, or search engine that had at least 1,000,000 California users during the preceding 12 months and can facilitate the sharing of synthetic content.

Note that this is not only social networks. A messaging platform has to do this. Is every text message an upload? I really do not think they have thought this through.

(1) The labels shall indicate whether content is fully synthetic, partially synthetic, authentic, authentic with minor modifications, or does not contain a watermark.

I don’t mind the idea of ‘there is a symbol to indicate that AI content is from an AI.’

It’s rather looney to forcibly label every other piece of content ‘this is human.’

Why? What does this accomplish? Can we perhaps not be such idiots?

(b) The disclosure required under subdivision (a) shall be readily legible to an average viewer or, if the content is in audio format, shall be clearly audible. A disclosure in audio content shall occur at the beginning and end of a piece of content and shall be presented in a prominent manner and at a comparable volume and speaking cadence as other spoken words in the content. A disclosure in video content should be legible for the full duration of the video.

Think ‘I am Senator Bob, and I approved this message,’ except twice, on every clip.

Not every AI clip. Every clip, period. If it’s human, it will need to start with ‘this is not AI,’ then end with ‘this is not AI.’

If it’s a video, you can get an icon instead. Plausibly every audio clip becomes a ‘video’ so that the video can contain the icon.

Complete looney tunes.

They do this to users doing uploads, too. Every time you upload anything you did that isn’t AI, you’d need to check a box (as the bill is written right now) that says ‘this is human content.’

Can’t we simply, at most… require disclosure when it is indeed AI content (and another if you are unsure)? And use auto-detect on the actual watermarks, so the user almost never has to actually do anything, since the platform has to use ‘state of the art’ detection techniques anyway?

Do we instead need this active affirmation on every Tweet and Instagram photo?
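A rough Python sketch of the difference between the two approaches. Under the labeling rule as written in 22949.90.3(a)(1), every item a large online platform shows gets one of the five labels, human-made content included. Under the narrower alternative, only content whose watermark says it is AI gets a label, plus a separate label when the uploader is unsure. The `Provenance` enum, both function names, and the ‘possibly AI-generated’ wording are illustrative assumptions rather than anything the bill defines, and the sketch assumes watermark detection has already been run.

```python
from enum import Enum
from typing import Optional


class Provenance(Enum):
    """What watermark detection found in a piece of content (hypothetical model)."""
    FULLY_SYNTHETIC = "fully synthetic"
    PARTIALLY_SYNTHETIC = "partially synthetic"
    AUTHENTIC = "authentic"
    AUTHENTIC_MINOR_MODS = "authentic with minor modifications"
    NO_WATERMARK = "does not contain a watermark"


def label_as_written(found: Provenance) -> str:
    # The rule as read here: every item gets one of the five labels,
    # including ordinary human-made content.
    return found.value


def label_if_ai(found: Provenance, uploader_unsure: bool = False) -> Optional[str]:
    # The narrower alternative: label only detected AI content,
    # plus a separate (hypothetical) label when the uploader says they are unsure.
    if found in (Provenance.FULLY_SYNTHETIC, Provenance.PARTIALLY_SYNTHETIC):
        return found.value
    if uploader_unsure:
        return "possibly AI-generated"
    return None  # human content gets no label and needs no checkbox


if __name__ == "__main__":
    for p in Provenance:
        print(p.name, label_as_written(p), label_if_ai(p), sep=" | ")
```

In the first function every branch produces a visible label; in the second, three of the five detection outcomes produce nothing at all, which is the whole point of the alternative.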

22949.90.4 calls for annual risk assessments from generative AI providers and large online platforms, including [various distinct risks of varying types.]

If you’re wondering if my eyes are rolling yet again, the answer is yes, and a lot.

22949.90.5 sets fines of up to $1 million or 5% of the violator’s global annual revenue, whichever is greater.

Did the European Union write this bill? It’s like Bad Bill Bingo up here. Vile stuff. If you have any violation they can fine Meta about $7 billion?
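The fine ceiling is simple arithmetic, sketched below in Python using whole dollars. The revenue figure in the usage loop is illustrative: $140 billion is just what the ~$7 billion Meta figure implies at 5%, not a reported number. The sketch only computes the cap as described above; whether it applies per violation is not something the text here settles.

```python
def max_fine(global_annual_revenue_usd: int) -> int:
    """Cap on a fine as described above: $1 million or 5% of global
    annual revenue, whichever is greater (whole dollars, rounded down)."""
    return max(1_000_000, global_annual_revenue_usd * 5 // 100)


if __name__ == "__main__":
    # Illustrative revenues: a tiny individual provider, the break-even point,
    # and roughly the scale implied by the ~$7 billion Meta figure.
    for revenue in (500_000, 20_000_000, 140_000_000_000):
        print(f"revenue ${revenue:,} -> max fine ${max_fine(revenue):,}")
```

The $1 million floor binds for anyone with under $20 million in revenue, including the individuals that the definition of ‘generative AI provider’ explicitly covers; above that, the 5% term takes over.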

22949.90.6 says the Department of Technology shall implement and carry out regulations within 90 days, and finally, 22949.91 covers severability.

What about open weights models? Existing ones would be toast the same way the closed models would be toast. But beyond that, what happens?

I don’t know with any confidence. The bill does not specify.

Would an open weights model developer be responsible for a subsequent fine-tuning that removed or altered the watermark? What counts as a distinct system?

It could plausibly end up being everything from ‘you are responsible for anything downwind of your release no matter what’ to ‘once they fine-tune it, that is their problem.’

My guess is the standard would be ‘substantially modify,’ since doing that makes one a ‘generative AI provider.’ In context, any attempt to evade the bill’s requirements could be seen automatically as a ‘substantial’ modification, so you would effectively be safe. Or at least, you would be if that step was indeed substantial, and you didn’t leave an ‘insert_watermarks=true’ lying around that someone could flip.

Or not. Hell if I know. Which means chilling effect.

What we do know for certain is that this bans platforms from allowing the downloading of models that lack the watermarking, which includes all currently existing models. It is not obvious how one would comply with this.

A good bill thinks about these questions, and clearly answers them. AB 3211 doesn’t.

So to summarize what I think this bill most importantly does in practice:

- Essentially all LLMs and most other generative AI systems are banned.
- New generative AI systems must place maximally effective watermarks on all content, in ways that may or may not be possible to comply with.
- Open models might or might not have it even worse than that, and we don’t know. We do know that hosts could not let anyone download any LLM that exists today.
- New digital cameras have to include watermarks.
- Any interaction with an AI system whose content could be mistaken for a human’s must include a disclosure that it is an AI system. That means a permanent on-screen statement for text or video, and an audio statement for voice.
- Many things with at least 1 million California users, including search engines, social media platforms and messaging services, have to visibly mark every piece of text or video as human or AI generated. Every audio clip must say which one it is at the start and finish. Every user input must include an active user indication of whether it is AI or human (and the system must run detection software on it to check).
- Violations can cost you $1 million or 5% of your global revenue, whichever is greater. For Meta that would be ~$7 billion, or ~$15 billion for Google.

I would like to think that the system is not this stupid. That if this somehow got to Newsom’s desk, that we would all rise up as one to warn him to veto this, that he would have his people actually read the bill, and he would stop this madness. But one cannot ever be sure.

There would doubtless be many legal challenges. I don’t know how bad it would get in practice. If everything so far hasn’t caused people to leave San Francisco, I can never be confident that any new thing will be sufficient.

But this seems really, really bad, from its large principles to its detailed language to its likely consequences if actually implemented in practice.

There are several points where this bill offers a sharp contrast with SB 1047, and illustrates how very differently these two bills were constructed.

Here are some of them.

- AB 3211 addresses labeling content. SB 1047 tries to prevent catastrophes.
- AB 3211 retroactively bans all existing LLMs. SB 1047 does not touch them at all.
- AB 3211 applies to generative AI systems of any size, with no restrictions. SB 1047 has no impact whatsoever unless you spend $100 million in training compute.
- AB 3211 does not specify who is responsible for what versions of what open models. SB 1047 has a definition that has gone through rounds of debate.
- AB 3211 uses the standards ‘maximally’ and ‘greatest extent possible,’ and in some places no qualifiers at all, for things we do not know how to do. SB 1047 centrally uses ‘reasonable assurance’ which is close to ‘reasonable care.’
- AB 3211 gives 24 hours to report an incident, in a way that is effectively fully public. SB 1047 already gives 72 hours and may end up giving more, despite that information potentially being of catastrophic importance.
- AB 3211 fines you a percentage of global revenue. SB 1047 does not do that.
- AB 3211 requires continuous disclosures and box checking and background annoyances, even when no AIs are involved, usually for no purpose. SB 1047 does not do anything of the kind.

If anything, others raising the alarm about AB 3211 were dramatically underselling how bad and destructive this bill would be in its current form. If we are going to succeed in our Quest for Sane Regulation, while avoiding insane ones, calibration is necessary. Different proposals need to be treated differently, and addressed on their merits, without fabrication, hallucination or hyperbole.

I have yet to see, from anyone I follow or respect, a statement of support for AB 3211.

So, yes. This AB 3211 is a no good, very bad bill, sir.