In honor of San Francisco failing its housing target and becoming subject to SB 423, this special edition of housing deals with various state and local developments. It is presumed (although plans never survive contact with the enemy) that SB 423 will greatly streamline getting housing built, to the tune of several thousand units per year.

Oakland rents falling most of all large US cities after building more housing. No way.

Court says UCSF is exempt from local height and zoning restrictions because it’s an educational institution serving the public good. I notice this ruling confuses me, especially because the building proposed is a hospital. That seems like something where zoning should apply. Or else you could say all hospitals are public goods, and I would agree.

San Francisco mayor London Breed vows to veto any and all anti-housing legislation, says San Francisco must be a housing leader, a city of yes.

Well, that is going to take a lot more than vetoing new laws.

What seems to be her plan for accomplishing this noble goal?

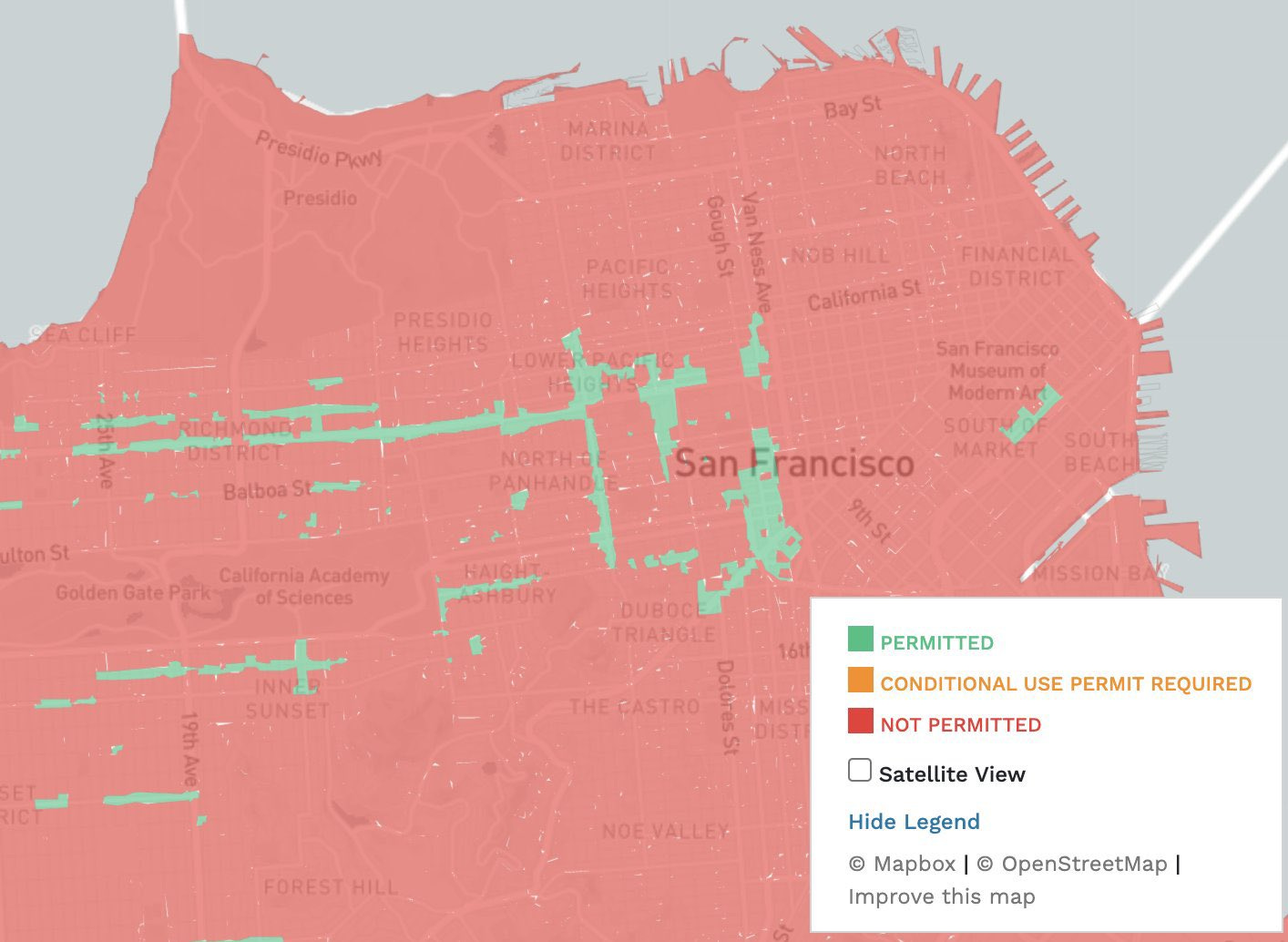

Since the current situation seems more like this?

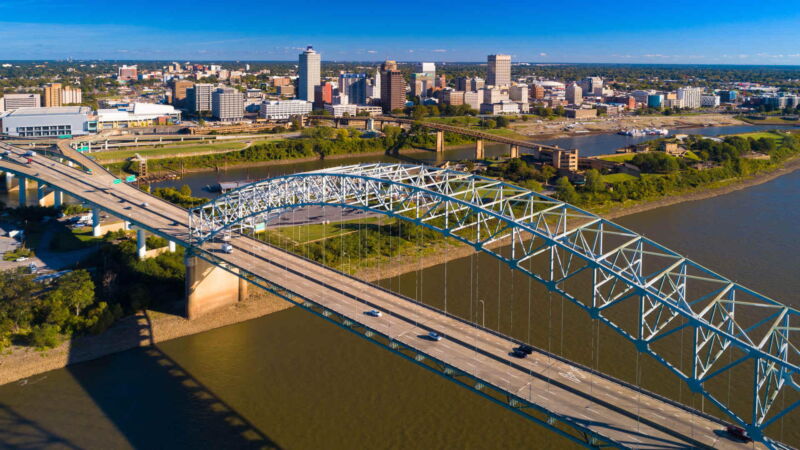

Hayden Clarkin: Given an 8-3 vote tonight by San Francisco’s leaders to downzone the wealthy waterfront neighborhoods to protect the views of millionaire homeowners, I figure I’d show a visual representation of the amount of housing the city approved in January. Yes, it’s in red, look closely.

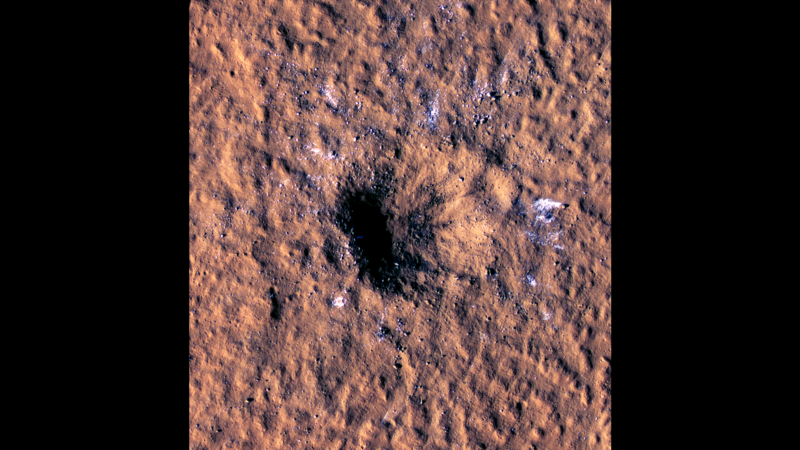

This photograph makes it very clear that whatever the area in question is about, building housing is not it. That is a huge amount of land on which building high would be highly profitable and no one can build.

This next photograph is also illustrative.

Hayden Clarkin: Don’t have the eyes of a hawk? No problem.

Sachin Agarwal: Eight Supervisors voted to save Aaron Peskin’s view. Unbelievable. Every one of these people has got to go.

London Breed: Today is a setback in our work to get to yes on housing. But I will not let this be the first step in a dangerous course correction back towards being a city of no. We will not move backward.

My statement on the Board of Supervisors downzoning vote.

I appreciate that she gets it in principle, but saying boo is not a strategy. What you get in practice, then, is:

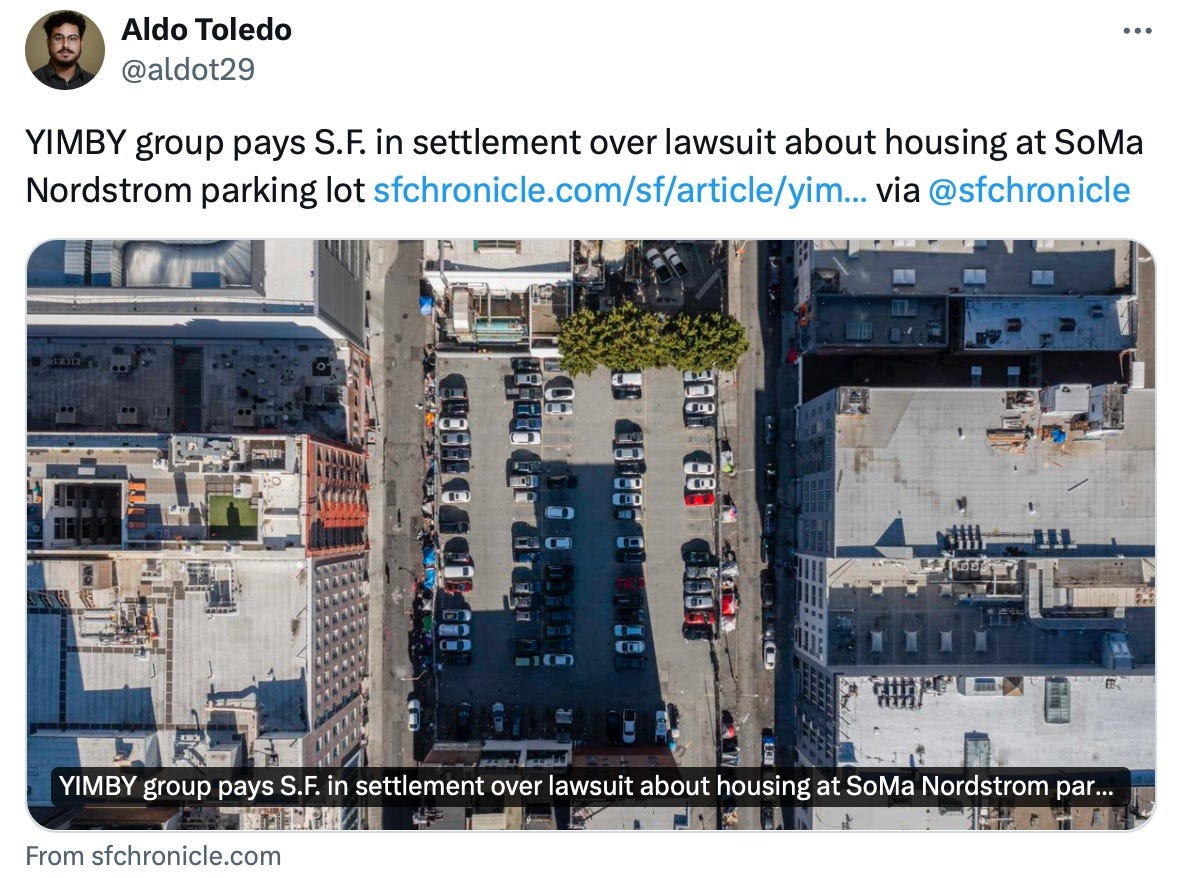

Gianmatteo Costanza: YIMBY action lost this lawsuit re 469 Stevenson parking lot? How disappointing.

Garry Tan: The worthless SF bureaucrats are hard to beat. “Historic Nordstrom parking lot” is preserved, instead of building 495 new units of housing in a transit corridor (23% affordable) …

Corrupt nonprofit TODCO, Aaron Peskin and Dean Preston and their NIMBY agenda won this time.

sp6=Underrated: Will @California_HCD do anything about this? #sanfrancisco permitted 1 housing unit in February, bringing the total to 7 for the year. And 1,143 under this housing element.

…

I seriously don’t know why @CAgovernor even bothers talking about housing if he’s not going to intervene in a city that thumbs its nose at statewide housing targets.

San Francisco needs 82,069 units of housing under the RHNA formula (which is too low but besides the point). #sanfrancisco has only permitted >5k houses in 1 year since 1980.

@GavinNewsom you worked here. You know SF will never build willingly.

Armand Domalewski: San Francisco permitted ONE UNIT OF HOUSING in February and we have someone launching a Mayoral campaign on Saturday centered on the premise that this is too much.

So London Breed’s plan is, despite this, to rezone the city for more housing.

In particular, her plan is to upzone some places from 3 stories to 8 stories, and to reduce or avoid super-tall 50-story buildings elsewhere, partly because with current interest rates those get expensive.

All right. How is she going to do that?

She is going to write a letter politely asking the San Francisco Planning Commission to rezone for more housing especially around transit stops, instead of doing their best not to.

London Breed (link includes text of letter): We need a future that includes housing for all San Franciscans. This past week, I directed Planning to revise our proposed citywide rezoning plan so we see actual construction spread across the entire City.

Rezoning is an iterative process. We have time before our January 2026 deadline to propose a rezoning that is far bolder than the City’s current draft, and results in actually building new homes.

But what’s most important is that San Francisco can, and should, adopt a rezoning plan that results in far more housing than the current draft. This is the only way to meet our CA state requirements, and this is what we committed to do when we passed the Housing Element.

My North Star on housing has always been grounded in being ambitious, data-driven, and embracing change. These are my San Francisco values. Let’s redo the math and re-up our commitment to housing.

I’m excited to see what the talented staff at the Planning Department returns in response to my letter, and this renewed call to recalculate our zoning plan based on the actual probability of where development will actually occur.

We have until January 2026 to pass a robust, citywide rezoning that will be the most significant change to San Francisco housing since parts of this city were downzoned in the 1970s. It’s time to lean into this historic moment and see this challenge as an opportunity.

The San Francisco Planning Commission is not interested in a plan to build more housing. They are interested in a plan to not build more housing. To build as much housing as Mark Zuckerberg paid attention to the Social Network’s legal proceedings.

How is it going in the meantime?

sp6r=underrated: #sanfrancisco housing permits through 15 months of the current RHNA cycle.

15 Permits this calendar year. 1,151 total.

I have no idea why SF’s nimbys feel so down. You guys are winning.

I don’t know why anyone brags about yimby Sacramento laws. They aren’t doing anything.

Perhaps one place to start on building more housing would be to not give money to organizations whose central purpose is to stop housing construction? As in TODCO, a SF ‘affordable housing nonprofit’ that works to block the construction of any new affordable housing while reducing upkeep on its existing buildings and providing no new affordable housing. Why play into such a racket?

It is not only housing. Remember that story about how so many women dreamed of opening a 24 hour coffee shop and bookstore with a cat?

It is legal in the areas in green.

But wait! What is that I hear?

Things are about to get feisty. Could be huge.

Scott Wiener (yep, same guy from SB 1047): Today, San Francisco goes from the slowest in CA to approve new homes to one of the fastest. Why?

Because my new expanded housing permit streamlining law, SB 423, takes effect in San Francisco. SF is the 1st city in CA it’s in effect.

It’s super hard to build new homes in SF, partly b/c we made getting permits a chaotic, politicized, long process. We had the longest housing approval time in CA (26 months on average).

Under SB 423, that timeline is now capped at 6 months.

Permit streamlining is already in use across California under SB 35, the 1st law I authored as Senator. But it’s mainly used for 100% affordable housing, which is a small fraction of total homes. SB 423 expands SB 35’s successful streamlining to the vast majority of new homes.

San Francisco needs streamlined housing approvals badly: Last year, the state housing agency found that 18 SF housing policies and practices violated state law.

But we’re not stopping here—if cities don’t meet their housing goals, more will start triggering SB 423 next year 😈

Grow SF: San Francisco needs to build 82,000 units of housing in the next eight years. Since our Board of Supervisors can’t get the job done, the state has taken over control. It’s time to build.

SF Chronicle: S.F. being subject to SB 423 means that most proposed housing projects will not require approval from the Planning Commission and therefore won’t be able to be appealed to the Board of Supervisors.

Using the ‘ask Claude’ principle, I get a prediction of several thousand additional units of housing built per year, accelerating to close to 5,000 new units per year in the long term (e.g. 5+ years out). Presumably the city will do its best to prevent anything from actually being built.
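As a rough back-of-the-envelope check on how far off the pace things are, here is a minimal sketch using only figures quoted above: 82,069 units over an eight-year cycle, 1,151 permits in the first 15 months, and the roughly 5,000 per year long-run prediction. The variable and function names are mine, and it assumes permits accrue evenly over time, which they surely do not.

```python
# Back-of-the-envelope pace check using figures quoted in this post.
# Assumption: permits accrue evenly over time (a simplification).

RHNA_TARGET_UNITS = 82_069   # units required over the cycle
CYCLE_YEARS = 8              # length of the RHNA cycle
PERMITS_SO_FAR = 1_151       # permits through the first 15 months
MONTHS_ELAPSED = 15
PREDICTED_LONG_RUN = 5_000   # predicted additional units per year, long term


def annual_pace(units: float, months: float) -> float:
    """Convert a unit count over some number of months to units per year."""
    return units * 12 / months


required_per_year = RHNA_TARGET_UNITS / CYCLE_YEARS
actual_per_year = annual_pace(PERMITS_SO_FAR, MONTHS_ELAPSED)

print(f"Required pace: {required_per_year:,.0f} units/year")
print(f"Actual pace:   {actual_per_year:,.0f} units/year")
print(f"Actual as share of required: {actual_per_year / required_per_year:.1%}")
print(f"Predicted long-run gain as share of required: "
      f"{PREDICTED_LONG_RUN / required_per_year:.1%}")
```

On these assumptions the city is permitting at under a tenth of the required pace, and even the optimistic long-run prediction covers only about half of it.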

Los Angeles city planning department proposal would revise zoning codes to make room for up to 250,000 new homes. It would also expand the adaptive reuse program, which currently applies only to things built before 1974, to cover anything built more than 15 years ago (so 2009), or 5 years ago with a conditional use permit. They have about 180,000 homes worth of empty offices.

Meanwhile Los Angeles is dealing with the consequences of ED1, an emergency declaration that the city needs more affordable housing. It turned out that no, they did not actually want lots of new affordable housing. I am not sure exactly why not, but there are limits, and they are declaring them.

Jake: We’re doing the entitlements for a 39 unit ED1 project in LA, and here is where we are at:

- Case was submitted about 2 months ago
- We received a hold letter from the city
- We addressed every change/correction with the architect
- We resubmitted to the city
- They deemed the case complete
- They confirmed they are working on the letter of determination, which they will be issuing shortly
- We then receive a letter of non compliance
- We say WTF

So we get on a call with the city planner to find out what is going on, and it turns out their interpretation of state density bonus law has changed, and they will no longer be granting more than 5 incentives and 1 waiver for these projects.

Their stance must have changed in the last week. It’s a strong stance though. They made it very clear that this is the position of the city.

My advice to those working on these types of projects is to be proactive, and get in touch with your city planner to discuss what the best option is moving forward.

Toby Muresianu: Unbelievable but true: If you want to build exactly the 100% low-income Affordable housing the city declared an emergency need, years into a crisis, on land you own. They can’t simply tell you how much you’re allowed to build & may change their mind months after they do.

Mishka: LA deputy mayor of housing told NIMBY crowd @ UCLA’s land use law conference a few months ago that they were going to water down ED1 because it was getting too much housing production done, too soon. Citing a story of a dryer vent ‘blowing directly out onto a single family home.’

Los Angeles wrote an “emergency declaration” to address the housing crisis that somewhat accidentally *actually unleashed a torrent of applications to build* and now the city is furiously backpedaling.

There’s lots of room to disagree about specifics + genuine uncertainty about which policies generate lots of new units.

But the fundamental question for politicians to ask themselves is do they, in fact, want to make housing less scarce? If not it would be better to say so.

That’s right. The problem was that there was too much purely affordable housing being built. Can’t have that.

Here is a dashboard of currently proposed ED1 projects. Joe Cohen is hard at work pointing out exactly how illegal are various attempts to deny the permits.

Wall Street Journal notices Austin’s rents are down 7%, frames this as bad news. Then we have this, saying rents declined 12.5% in Austin in December, describing this great news as a ‘nosedive.’

Similarly:

Hayden Donnell: Auckland’s housing market has been struck by a disastrous plague of affordability, to the point that some buyers might even be *pauses to retch violently* getting bargains.

Not content with their several other efforts I have noticed recently, the Minnesota Democrats propose requiring electric vehicle parking and charging stations for all new homes. Why not use ‘electric’ as a reason to massively increase parking requirements?

I suppose you need that parking spot once you have driven away Uber and Lyft.

Jared Polis signs a new bill and embraces strong YIMBY rhetoric, calls for More Housing Now. The bill he signed prohibits discriminatory residential occupancy limits, one of the lowest hanging of fruits. Let people live in houses. So of course the top two comments are:

Monthly Earth Day – Crypto Whales NFT: This is ridiculous…..we can all read between the lines on the purpose behind this! 😡

Ariesangel1329: You want more housing yet you’re not deporting the EXTRA 50,000 illegal aliens that taxpayers are now paying for which includes housing. So in reality this will now allow 30 people in a 2 bedroom. The single-family neighborhoods are now gone. NWO plans are happening in CO.

It’s rough out there. Here is an op-ed on the subject from the Denver Post Editorial Board, arguing that the combination of ADUs and ending occupancy limits are excellent steps in the right direction.

This was only one of six major land-use bills signed by Polis this cycle. In addition to ADUs, we get increased density around bus and train stops, we eliminate many parking minimums, a new ‘right of first refusal’ for the government to buy properties to turn them into affordable housing (seems like an invitation to incinerate cash but not that destructive I guess), lifting occupancy restrictions (underrated), and requiring housing need assessments. Not bad.

But then Polis goes and makes a much bigger problem elsewhere? What is this?

Merrill Stillwell: Big shift in Colorado with a light form of rent control. This does not have the formal process in other States but it does open up lease renewals to legal scrutiny (including for unreasonable rent increases).

Sam Dangremond: Happy 4/20 to my fellow Colorado housing providers – and guess what… you’re now subject to a type of rent control!

Yesterday, @jaredpolis signed into law HB24-1098, the “For Cause Evictions” bill sponsored by (among others) Pueblo’s @NickForCO.

This law makes it so that existing tenants cannot be “termed out” on a lease, and must be allowed to continue to rent a property UNLESS certain conditions apply.

One of those conditions is if the tenant refuses to sign a new lease “with reasonable terms.”

So, how much rent increase is still “reasonable”? Who knows! We’ll be spending lots of lawyer fees to find out.

The law even specifically says that we can’t try to get around being forced to continue to rent to a tenant by engaging in “retaliatory rent increases.”

But what’s this we see over here…? This law of course explicitly prohibits discriminatory and retaliatory rent increases, in accordance with existing law… but they also somehow snuck in a ban on “unconscionable” rent increases as well!

How much rent increase is “unconscionable”? Who knows!

Morgan: This is awesome! Now landlords can’t refuse to re-rent to you just so that they can jack up the prices for more profit.

Real Estate Ranger: I would prefer rent control over this lol.

Sam Dangremond: I had the same thought. An outright rent control regime at least gives owners certainty about what we can or can’t do.

Moses Kagan: Caused me to walk away from a sale process we were spending a bunch of time on.

They are very stupid.

Honestly don’t know how you underwrite a value-add MF deal in CO, at least until there are some court decisions about what constitutes “reasonable.” I am particularly concerned that a court will look at the incremental return on capex for a given unit, rather than property-wide returns.

I don’t get why Polis did not know better. This is a disaster.

The good news is it could have been worse. According to the summary, you can choose to live in the property or have a family member do so, or put the property up for sale.

Still, ‘sign a lease on reasonable terms’ is not something you ever want to be writing into a law. You need to define what are reasonable terms, at least to the extent of creating a generous safe harbor. Otherwise, this is rent control.

One obvious response to this is that a lot of properties might change hands a lot more. If the only way to evict a tenant is to sell the property, and the tenant is paying below market price and you can’t raise the rent? Well, guess what.

The lack of permission for a 24 hour bookstore is not unique to San Francisco.

Bernoulli Defect: It’s literally illegal to open a 24 hour coffeeshop (with a cat) in the UK without a council issued license.

These regularly get denied for spurious reasons and are a major reason London’s nightlife is below par.

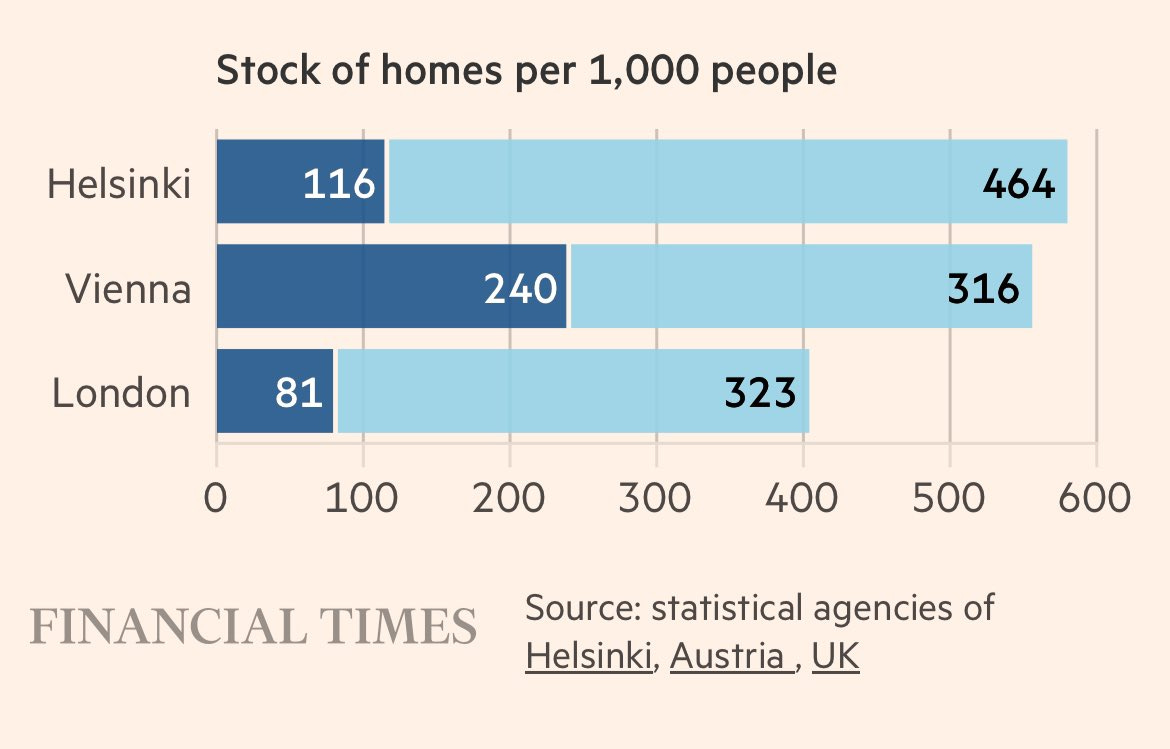

Iron Economist (May 17): Great article by @jburnmurdoch in the FT today. People really don’t get the scale of the UK problem. If we want to have outcomes like the good places we really do need to expand the total stock by 25-35%.

Simo: London – a city of 9 million people with the housing stock of a city of 6.5 million. In that context, the crazy numbers you see for rent start to make sense.

You would need to push more than that to get to normal levels, because there is tons of latent demand to live in London.
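To put a rough number on the London gap, here is a minimal sketch using Simo’s two figures. It assumes, purely for illustration, that housing stock should scale in proportion to population; the function name is mine.

```python
def required_stock_expansion(population_millions: float,
                             stock_sized_for_millions: float) -> float:
    """Fractional increase in housing stock needed for the stock to match
    the population, assuming stock scales linearly with population."""
    return population_millions / stock_sized_for_millions - 1


# Simo's figures: 9 million people, housing stock sized for 6.5 million.
london_gap = required_stock_expansion(9.0, 6.5)
print(f"London stock expansion needed: {london_gap:.1%}")
```

That comes out a bit above the top of the 25-35% range quoted for the UK as a whole, and that is before counting any of the latent demand.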

Following up on British growth deterioration, Tyler Cowen notes Claude 3 estimates 12%-15% of British GDP is land rent, and he estimates NIMBY issues account for about 15% of their GDP shortfall. I continue to think the counterfactual cost is vastly higher, if we compare to actually building to demand. Lowering the cost of housing would radically alter competitiveness and supercharge everything else, if it were fully fixed. That does not tell us what would be available from practical forward-looking marginal changes. My guess is still quite a lot.

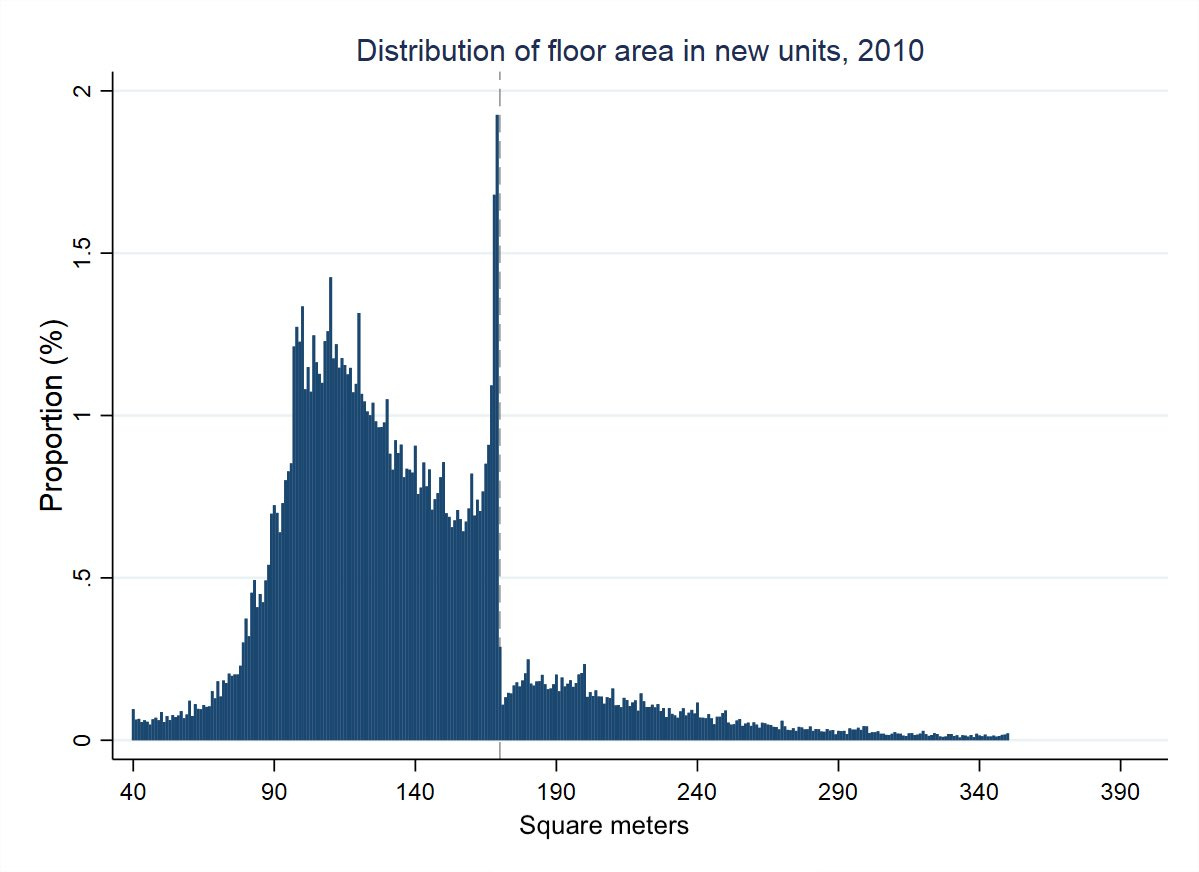

Alec Stapp: Guess the floor area threshold at which French law requires a licensed architect to establish the plans for a new home.

A reasonable extrapolation is this means 75% of square footage above 170 m² (about 1,830 sq ft) was not built due to this requirement. That is one hell of a cost, well in excess of what the architect could plausibly cost themselves, especially if you presume that having one should be a benefit. So this must somehow be a much bigger deal than that, presumably around regulatory uncertainty and delays and that architects are absolute pains in the ass.

The Chinese real estate boom was easy to see, but no one wanted to stop it, says The Wall Street Journal’s Rebecca Feng. Well, one person named Xi did not want to stop it, until Xi suddenly did want to stop it. Then it stopped.

There is a difference between noticing absurdity and wanting it to stop or to bet against it, and being able to pull any of that off. The story here is a lot of people assuming Xi would not want to stop it, or at least being sufficiently worried that Xi would choose not to stop it. Yes, the investments clearly made no economic sense, and were in some sense absurd.

But we all know the market can stay crazy longer than you can stay solvent. For the Chinese government doubly so. These were not easy short bets to make.

What this doubles down on is that when there is what we will later call a historic real estate bubble, to the extent that ‘bubble’ is a thing, it is going to be rather obvious that a bubble is present. China recently, America in the 2000s, Japan before that, and so on. These were not subtle situations. These were very obvious, absurd situations. You might not be able to profitably bet on a reckoning, but if you buy in near the top that is on you.

Supply of rental homes up 240% and real rents down 34% from when Milei took office to May 20. That is what happens when you dismantle rent control in a country with extreme inflation, meaning that the resulting rents could easily get absurd in all directions.

Taiwan has one of the most severe housing crises. The post goes into detail on how that happened, which is of course a lot of restrictions on building housing.

Sacramento adopts new general pro-housing plan, mayor claims it makes it the most pro-housing city in the country. He correctly points out this is highly progressive, in the sense that this advances things progressives care about, although it also helps with things conservatives care about.

Salt Lake City exploring reducing minimum lot sizes, from 5,000 square feet to 1,400.

Here’s a building in Washington, DC that’s zoned for that.

Arizona legislature does it.

Welcoming Neighbors Network: The hits keep on coming in Arizona! HB 2721, which legalizes up to fiveplexes on all residential lots in larger cities just passed the AZ House of Representatives with a big bipartisan vote of 36 to 18.

There was talk that the governor would not sign, but Katie Hobbs came through, which now makes four pro-housing bills in Arizona.

She did veto another law, HB 2570:

Dennis Welch: Governor Hobbs vetoes the bipartisan “Arizona Starter Homes Act” (HB2570) that would have limited the zoning authority of most of the state’s cities and towns.

Daryl Fairweather: This logic makes no sense to me. Why is the Department of Defense telling the Governor of Arizona to block zoning for starter homes?