SpaceX’s unmatched streak of perfection with the Falcon 9 rocket is over

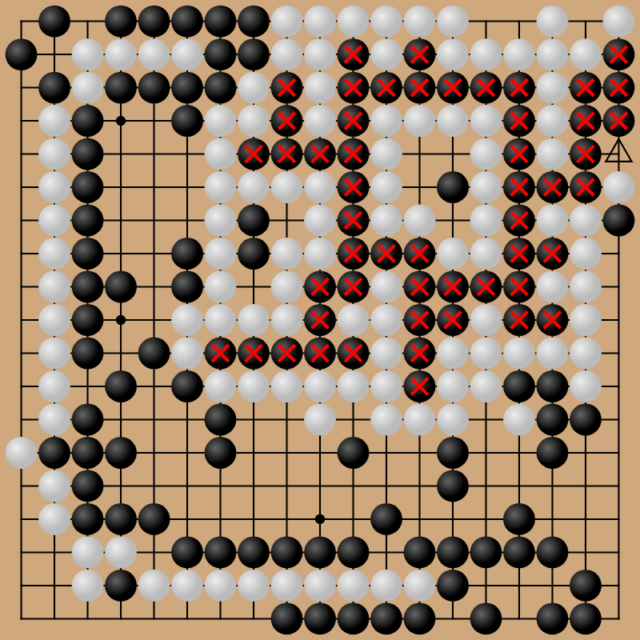

Numerous pieces of ice fell off the second stage of the Falcon 9 rocket during its climb into orbit from Vandenberg Space Force Base, California.

SpaceX

A SpaceX Falcon 9 rocket suffered an upper stage engine failure and deployed a batch of Starlink Internet satellites into a perilously low orbit after launch from California Thursday night, the first blemish on the workhorse launcher’s record in more than 300 missions since 2016.

Elon Musk, SpaceX’s founder and CEO, posted on X that the rocket’s upper stage engine failed when it attempted to reignite nearly an hour after the Falcon 9 lifted off from Vandenberg Space Force Base, California, at 7:35 pm PDT (02:35 UTC).

Frosty evidence

After departing Vandenberg to begin SpaceX’s Starlink 9-3 mission, the rocket’s reusable first stage booster propelled the Starlink satellites into the upper atmosphere, then returned to Earth for an on-target landing on a recovery ship parked in the Pacific Ocean. A single Merlin Vacuum engine on the rocket’s second stage fired for about six minutes to reach a preliminary orbit.

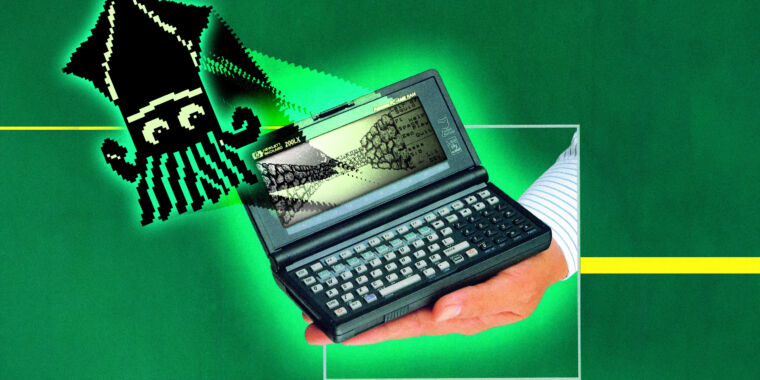

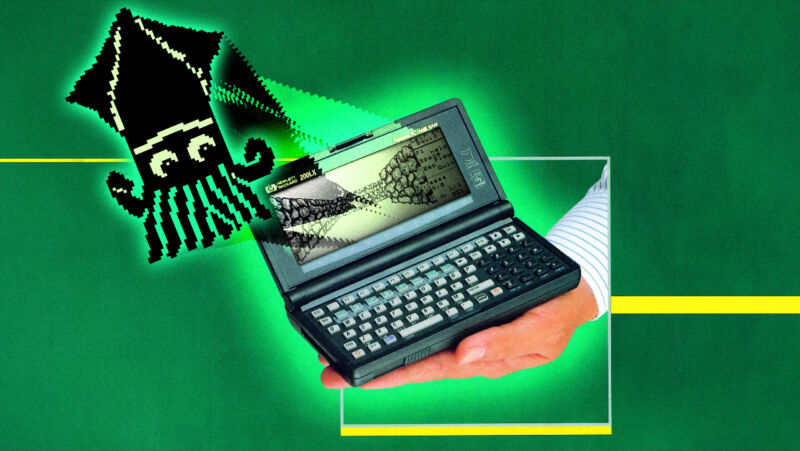

A few minutes after liftoff of SpaceX’s Starlink 9-3 mission, veteran observers of SpaceX launches noticed an unusual build-up of ice around the top of the Merlin Vacuum engine, which consumes a propellant mixture of super-chilled kerosene and cryogenic liquid oxygen. The liquid oxygen is stored at a temperature of several hundred degrees Fahrenheit below zero.

Numerous chunks of ice fell away from the rocket as the upper stage engine powered into orbit, but the Merlin Vacuum, or M-Vac, engine appeared to complete its first burn as planned. A leak in the oxidizer system or a problem with insulation could lead to ice accumulation, although the exact cause, and its possible link to the engine malfunction later in flight, will be the focus of SpaceX’s investigation into the failure.

A second burn with the upper stage engine was supposed to raise the perigee, or low point, of the rocket’s orbit well above the atmosphere before releasing 20 Starlink satellites to continue climbing to their operational altitude with their own propulsion.

“Upper stage restart to raise perigee resulted in an engine RUD for reasons currently unknown,” Musk wrote in an update two hours after the launch. RUD (rapid unscheduled disassembly) is a term of art in rocketry that usually signifies a catastrophic or explosive failure.

“Team is reviewing data tonight to understand root cause,” Musk continued. “Starlink satellites were deployed, but the perigee may be too low for them to raise orbit. Will know more in a few hours.”

Telemetry from the Falcon 9 rocket indicated it released the Starlink satellites into an orbit with a perigee just 86 miles (138 kilometers) above Earth, roughly 100 miles (150 kilometers) lower than expected, according to Jonathan McDowell, an astrophysicist and trusted tracker of spaceflight activity. Detailed orbital data from the US Space Force was not immediately available.

Ripple effects

While ground controllers scramble to salvage the 20 Starlink satellites, SpaceX engineers have begun probing what went wrong with the second stage’s M-Vac engine. For SpaceX and its customers, the investigation into the rocket malfunction is likely the more pressing matter.

SpaceX could absorb the loss of 20 Starlink satellites relatively easily. The company’s satellite assembly line can produce 20 Starlink spacecraft in a few days. But the Falcon 9 rocket’s dependability and high flight rate have made it a workhorse for NASA, the US military, and the wider space industry. An investigation will probably delay several upcoming SpaceX flights.

This was the first in-flight failure for SpaceX’s Falcon rocket family since June 2015, ending a streak of 344 consecutive successful launches.

A lot of unusual ice was observed on the Falcon 9’s upper stage during its first burn tonight, some of it falling into the engine plume. https://t.co/1vc3P9EZjj pic.twitter.com/fHO73MYLms

— Stephen Clark (@StephenClark1) July 12, 2024

Depending on the cause of the problem and what SpaceX must do to fix it, it’s possible the company can recover from the upper stage failure and resume launching Starlink satellites soon. Most of SpaceX’s launches aren’t for external customers; instead, they deploy satellites for the company’s own Starlink network. This gives SpaceX unique flexibility to return to flight quickly with the Falcon 9 without needing to satisfy customer concerns.

The Federal Aviation Administration, which licenses all commercial space launches in the United States, will require SpaceX to conduct a mishap investigation before resuming Falcon 9 flights.

“The FAA will be involved in every step of the investigation process and must approve SpaceX’s final report, including any corrective actions,” an FAA spokesperson said. “A return to flight is based on the FAA determining that any system, process, or procedure related to the mishap does not affect public safety.”

Two crew missions are supposed to launch on SpaceX’s human-rated Falcon 9 rocket in the next six weeks, but those launch dates are now in doubt.

The all-private Polaris Dawn mission, commanded by billionaire Jared Isaacman, is scheduled to launch on a Falcon 9 rocket on July 31 from NASA’s Kennedy Space Center in Florida. Isaacman and three commercial astronaut crewmates will spend five days in orbit on a mission that will include the first commercial spacewalk outside their Crew Dragon capsule, using new pressure suits designed and built by SpaceX.

NASA’s next crew mission with SpaceX is slated to launch from Florida aboard a Falcon 9 rocket around August 19. This team of four astronauts will replace a crew of four who have been on the International Space Station since March.

Some customers, especially NASA’s commercial crew program, will likely want to see the results of an in-depth inquiry and require SpaceX to string together a series of successful Falcon 9 flights with Starlink satellites before clearing their own missions for launch. SpaceX has already launched 70 flights with its Falcon family of rockets since January 1, an average cadence of one launch every 2.7 days, more than the combined number of orbital launches by all other nations this year.

With this rapid-fire launch cadence, SpaceX could quickly demonstrate the fitness of any fixes engineers recommend to resolve the problem that caused Thursday night’s failure. But investigations into rocket failures often take weeks or months. It was too soon, early on Friday, to know the true impact of the upper stage malfunction on SpaceX’s launch schedule.
