With another record broken, the world’s busiest spaceport keeps getting busier

It’s not just the number of rocket launches, but how much stuff they’re carrying into orbit.

With 29 Starlink satellites onboard, a Falcon 9 rocket streaks through the night sky over Cape Canaveral Space Force Station, Florida, on Monday night. Credit: Stephen Clark/Ars Technica

CAPE CANAVERAL, Florida—Another Falcon 9 rocket fired off its launch pad here on Monday night, taking with it another 29 Starlink Internet satellites to orbit.

This was the 94th orbital launch from Florida’s Space Coast so far in 2025, breaking the previous record for the most satellite launches in a calendar year from the world’s busiest spaceport. Monday night’s launch came two days after a Chinese Long March 11 rocket lifted off from an oceangoing platform on the opposite side of the world, marking humanity’s 255th mission to reach orbit this year, a new annual record for global launch activity.

As of Wednesday, a handful of additional missions have pushed the global figure this year to 259, putting the world on pace for around 300 orbital launches by the end of 2025. This will more than double the global tally of 135 orbital launches in 2021.
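For readers who want to check the pace figure, here is a rough sketch of the arithmetic using a simple linear extrapolation. The cutoff date is an assumption made for illustration, since the text above says only "Wednesday," and the function name is hypothetical. A straight-line projection also ignores any seasonal variation in launch rates.

```python
from datetime import date


def project_year_end(launches_so_far: int, as_of: date) -> float:
    """Linearly extrapolate a year-to-date launch count to a full-year total."""
    days_elapsed = as_of.timetuple().tm_yday
    days_in_year = date(as_of.year, 12, 31).timetuple().tm_yday
    return launches_so_far * days_in_year / days_elapsed


# ASSUMPTION: the article gives no calendar date for "Wednesday";
# a mid-November cutoff is used here purely for illustration.
ASSUMED_CUTOFF = date(2025, 11, 12)

projected = project_year_end(259, ASSUMED_CUTOFF)
print(f"Projected 2025 total: about {projected:.0f} launches")

# The 2021 tally quoted above is 135, so "more than double" means above 270.
print("More than double 2021:", projected > 2 * 135)
```

Under that assumed date, the projection lands close to 300, in line with the figure quoted above.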

Routine vs. complacency

Waiting in the darkness a few miles away from the launch pad, I glanced around at my surroundings before watching SpaceX’s Falcon 9 thunder into the sky. There were no throngs of space enthusiasts anxiously waiting for the rocket to light up the night. No line of photographers snapping photos. Just this reporter and two chipper retirees enjoying what a decade ago would have attracted far more attention.

Go to your local airport and you’ll probably find more people posted up at a plane-spotting park at the end of the runway. Still, a rocket launch is something special. On the same night that I watched the 94th launch of the year depart from Cape Canaveral, Orlando International Airport saw the same number of airplane departures in just three hours.

The crowds still turn out for more meaningful launches, such as a test flight of SpaceX’s Starship megarocket in Texas or Blue Origin’s attempt to launch its second New Glenn heavy-lifter here Sunday. But those are not the norm. Generations of aerospace engineers were taught that spaceflight is not routine for fear of falling into complacency, leading to failure, and in some cases, death.

Compared to air travel, the mantra remains valid. Rockets are unforgiving, with engines operating under extreme pressures, at high thrust, and unable to suck in oxygen from the atmosphere as a reactant for combustion. There are fewer redundancies in a rocket than in an airplane.

The Falcon 9’s established failure rate is less than 1 percent, well short of any safety standard for commercial air travel but good enough to make it the most successful orbital-class rocket in history. Given the Falcon 9’s track record, SpaceX seems to have found a way to overcome the temptation for complacency.

A Chinese Long March 11 rocket carrying three Shiyan 32 test satellites lifts off from waters off the coast of Haiyang in eastern China’s Shandong province on Saturday. Credit: Guo Jinqi/Xinhua via Getty Images

Following the trend

The upward trend in rocket launches hasn’t always been the case. Launch numbers were steady for most of the 2010s, following a downward trend in the 2000s, with as few as 52 orbital launches in 2005, the lowest number since the nascent era of spaceflight in 1961. There were just seven launches from here in Florida that year.

The numbers have picked up dramatically in the last five years as SpaceX has mastered reusable rocketry.

It’s important to look at not just the number of launches but also how much stuff rockets are actually putting into orbit. More than half of this year’s launches were performed using SpaceX’s Falcon 9 rocket, and the majority of those deployed Starlink satellites for SpaceX’s global Internet network. Each spacecraft is relatively small in size and weight, but SpaceX stacks up to 29 of them on a single Falcon 9 to max out the rocket’s carrying capacity.

All this mass adds up to make SpaceX’s dominance of the launch industry appear even more absolute. According to analyses by BryceTech, an engineering and space industry consulting firm, SpaceX has launched 86 percent of all the world’s payload mass over the 18 months from the beginning of 2024 through June 30 of this year.

That’s roughly 2.98 million kilograms of the approximately 3.46 million kilograms (3,281 of 3,819 tons) of satellite hardware and cargo that all the world’s rockets placed into orbit during that timeframe.
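The share and the unit conversion can be checked with a few lines of arithmetic. This is a minimal sketch that assumes "tons" here means US short tons (2,000 pounds each), which the text does not state outright. The inputs are the rounded kilogram figures quoted above, so the results differ slightly from the unrounded tonnages.

```python
# ASSUMPTION: "tons" in the text is read as US short tons (2,000 lb).
KG_PER_SHORT_TON = 907.18474

spacex_kg = 2.98e6  # SpaceX payload mass, rounded figure from the text
world_kg = 3.46e6   # worldwide payload mass, rounded figure from the text


def kg_to_short_tons(kg: float) -> float:
    """Convert kilograms to US short tons."""
    return kg / KG_PER_SHORT_TON


share = spacex_kg / world_kg
print(f"SpaceX share of payload mass: {share:.1%}")
print(f"SpaceX: about {kg_to_short_tons(spacex_kg):,.0f} short tons")
print(f"World:  about {kg_to_short_tons(world_kg):,.0f} short tons")
```

The ratio rounds to the 86 percent cited by BryceTech, and both tonnages come out within a few tons of the figures in parentheses, a gap that reflects rounding in the kilogram values.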

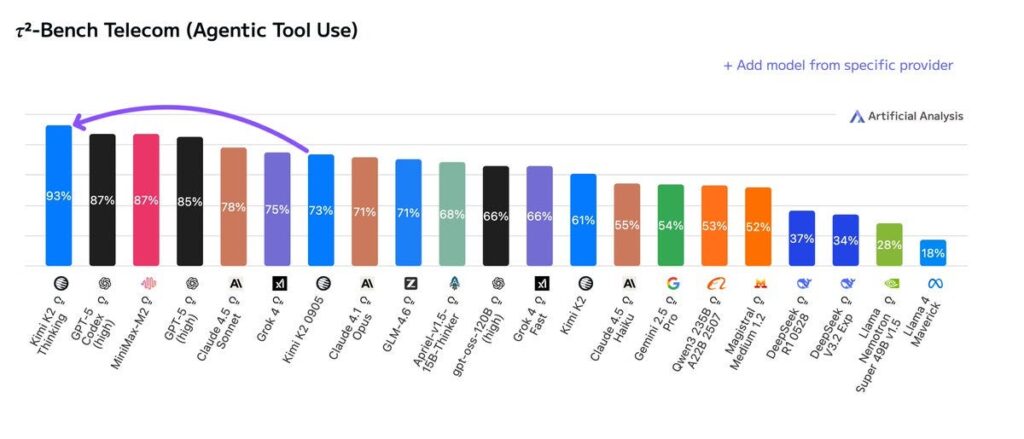

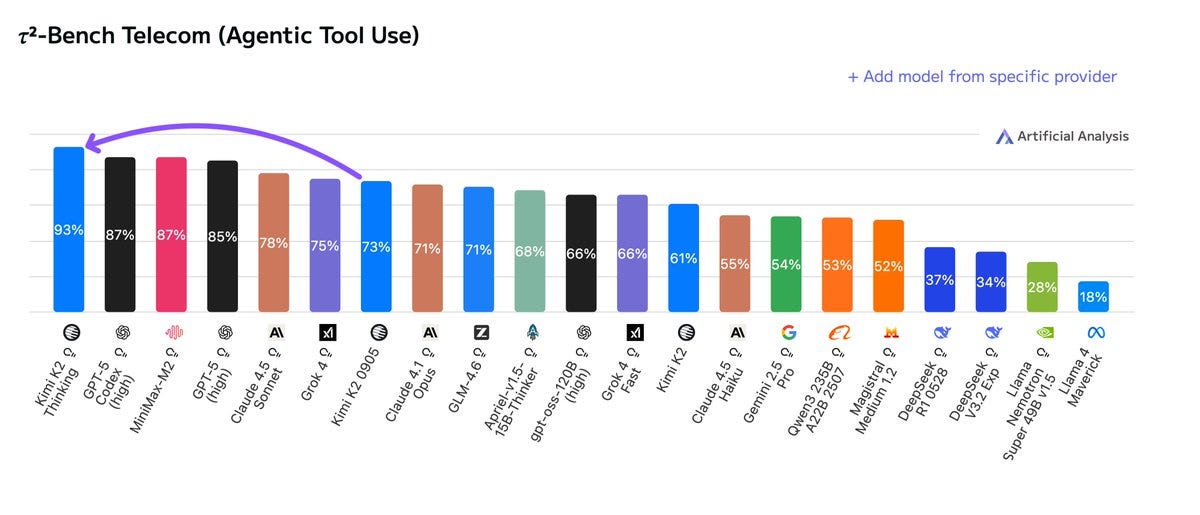

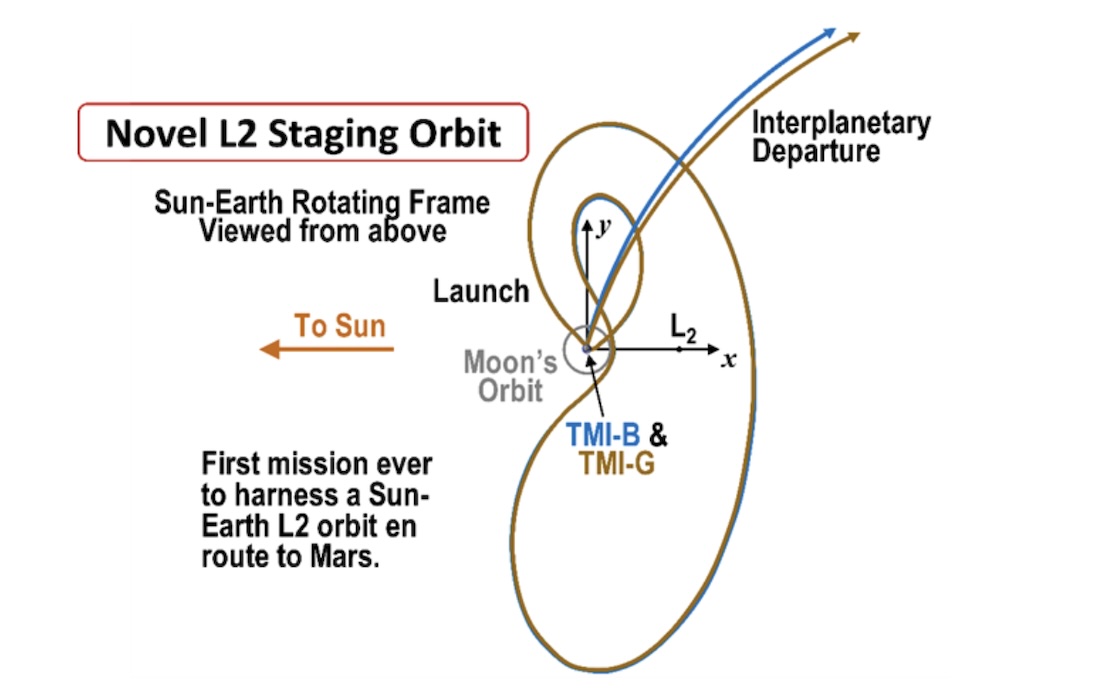

The charts below were created by Ars Technica using publicly available launch numbers and payload mass estimates from BryceTech. The first illustrates the rising launch cadence at Cape Canaveral Space Force Station and NASA’s Kennedy Space Center, located next to one another in Florida. Launches from other US-licensed spaceports, primarily Vandenberg Space Force Base, California, and Rocket Lab’s base at Māhia Peninsula in New Zealand, are also on the rise.

These numbers represent rockets that reached low-Earth orbit. We didn’t include test flights of SpaceX’s Starship rocket in the chart because all of its launches have intentionally flown on suborbital trajectories.

In the second chart, we break down the payload upmass to orbit from SpaceX, other US companies, China, Russia, and other international launch providers.

Launch rates are on a clear upward trend, while SpaceX has launched 86 percent of the world’s total payload mass to orbit since the beginning of 2024. Credit: Stephen Clark/Ars Technica/BryceTech

Will it continue?

It’s a good bet that payload upmass will continue to rise in the coming years, with heavy cargo heading to orbit to further expand SpaceX’s Starlink communications network and build out new megaconstellations from Amazon, China, and others. The US military’s Golden Dome missile defense shield will also have a ravenous appetite for rockets to get it into space.

SpaceX’s Starship megarocket could begin flying to low-Earth orbit next year, and if it does, SpaceX’s preeminence in delivering mass to orbit will remain assured. Starship’s first real payloads will likely be SpaceX’s next-generation Starlink satellites. These larger, heavier, more capable spacecraft will launch 60 at a time on Starship, further stretching SpaceX’s lead in the upmass war.

But Starship’s arrival will come at the expense of the workhorse Falcon 9, which lacks the capacity to haul the next-gen Starlinks to orbit. “This year and next year I anticipate will be the highest Falcon launch rates that we will see,” said Stephanie Bednarek, SpaceX’s vice president of commercial sales, at an industry conference in July.

SpaceX is on pace for between 165 and 170 Falcon 9 launches this year, with 144 flights already in the books for 2025. Last year’s total for Falcon 9 and Falcon Heavy was 134 missions. SpaceX has not announced how many Falcon 9 and Falcon Heavy launches it plans for next year.

Starship is designed to be fully and rapidly reusable, eventually enabling multiple flights per day. But that’s still a long way off, and it’s unknown how many years it might take for Starship to surpass the Falcon 9’s proven launch tempo.

A Starship rocket and Super Heavy booster lift off from Starbase, Texas. Credit: SpaceX

In any case, with Starship’s heavy-lifting capacity and upgraded next-gen satellites, SpaceX could match an entire year’s worth of new Starlink capacity with just two fully loaded Starship flights. Starship will be able to deliver 60 times more Starlink capacity to orbit than a cluster of satellites riding on a Falcon 9.

There’s no reason to believe SpaceX will be satisfied with simply keeping pace with today’s Starlink growth rate. There are emerging market opportunities in connecting satellites with smartphones, space-based computer processing and data storage, and military applications.

Other companies have medium-to-heavy rockets that are either new to the market or soon to debut. These include Blue Origin’s New Glenn, now set to make its second test flight in the coming days, with a reusable booster designed to facilitate a rapid-fire launch cadence.

Despite all of the newcomers, most satellite operators see a shortage of launch capacity on the commercial market. “The industry is likely to remain supply-constrained through the balance of the decade,” wrote Caleb Henry, director of research at the industry analysis firm Quilty Space. “That could pose a problem for some of the many large constellations on the horizon.”

United Launch Alliance’s Vulcan rocket, Rocket Lab’s Neutron, Stoke Space’s Nova, Relativity Space’s Terran R, and Firefly Aerospace and Northrop Grumman’s Eclipse are among the other rockets vying for a bite at the launch apple.

“Whether or not the market can support six medium to heavy lift launch providers from the US alone—plus Starship—is an open question, but for the remainder of the decade launch demand is likely to remain high, presenting an opportunity for one or more new players to establish themselves in the pecking order,” Henry wrote in a post on Quilty’s website.

China’s space program will need more rockets, too. That nation’s two megaconstellations, known as Guowang and Qianfan, will have thousands of satellites, requiring a significant uptick in Chinese launches.

Taking all of this into account, the demand curve for access to space is sure to continue its upward trajectory. How companies meet this demand, and with how many discrete departures from Earth, isn’t quite as clear.
