Protection from COVID reinfections plummeted from 80% to 5% with omicron

“The short-lived immunity leads to repeated waves of infection, mirroring patterns observed with common cold coronaviruses and influenza,” Hiam Chemaitelly, first author of the study and assistant professor of population health sciences at Weill Cornell Medicine-Qatar, said in a statement. “This virus is here to stay and will continue to reinfect us, much like other common cold coronaviruses. Regular vaccine updates are critical for renewing immunity and protecting vulnerable populations, particularly the elderly and those with underlying health conditions.”

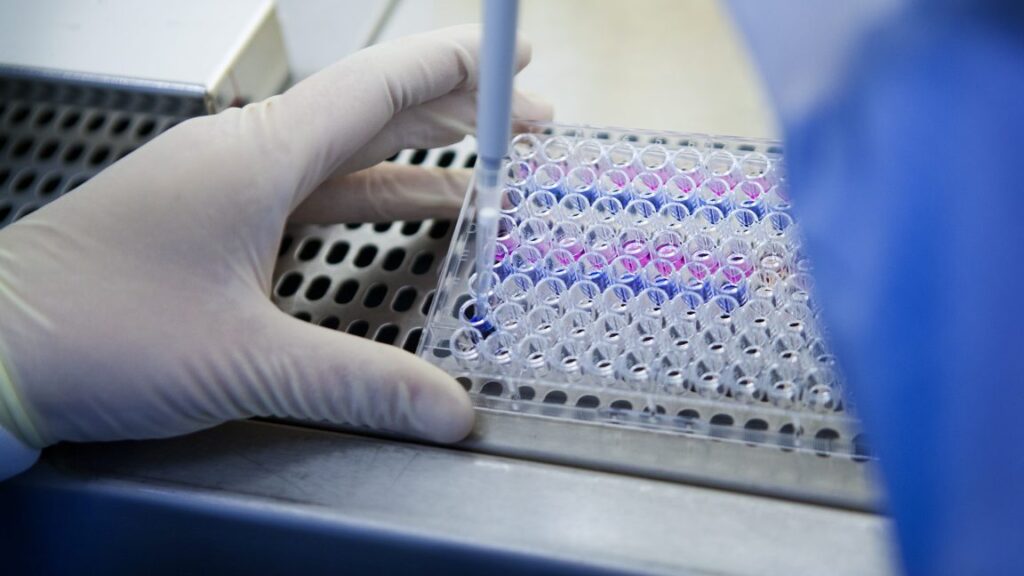

Chemaitelly and colleagues speculate that the change reflects a shift in the evolutionary pressures the virus faced. In the early stages of the global crisis, the virus evolved and spread by becoming more transmissible. Then, as the virus lapped the globe and populations began building up immunity, it came under pressure to evade that immunity.

However, the fact that the researchers did not find a similar drop in protection against severe, deadly COVID-19 suggests that the evasion likely targets only certain components of our immune system. Generally, neutralizing antibodies, which can block viral entry into cells, are the primary protection against non-severe infection. Protection against severe disease, on the other hand, relies on cellular mechanisms, such as memory T cells, which appear unaffected by the pandemic shift, the researchers write.

Overall, the study “highlights the dynamic interplay between viral evolution and host immunity, necessitating continued monitoring of the virus and its evolution, as well as periodic updates of SARS-CoV-2 vaccines to restore immunity and counter continuing viral immune evasion,” Chemaitelly and colleagues conclude.

In the US, the future of annual vaccine updates may be in question, however. Prominent anti-vaccine advocate and conspiracy theorist Robert F. Kennedy Jr. is poised to become the country’s top health official, pending Senate confirmation next week. In 2021, as omicron was rampaging through the country for the first time, Kennedy filed a petition with the Food and Drug Administration to revoke access and block approval of all current and future COVID-19 vaccines.
