Judge mulls sanctions over Google’s “shocking” destruction of internal chats

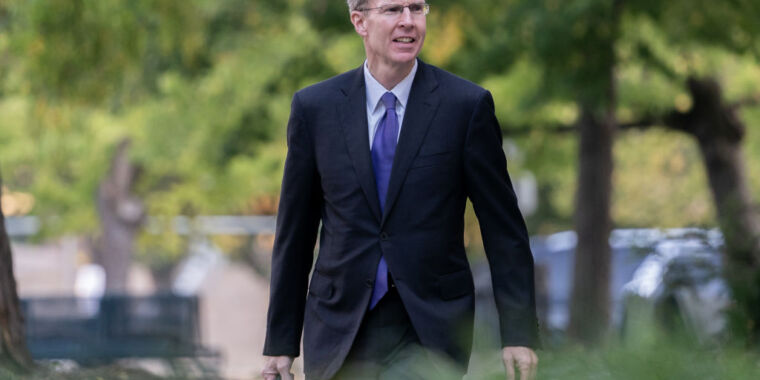

Kenneth Dintzer, litigator for the US Department of Justice, exits federal court in Washington, DC, on September 20, 2023, during the antitrust trial to determine if Alphabet Inc.’s Google maintains a monopoly in the online search business.

Near the end of the second day of closing arguments in the Google monopoly trial, US district judge Amit Mehta weighed whether sanctions were warranted over what the US Department of Justice described as Google’s “routine, regular, and normal destruction” of evidence.

Google was accused of enacting a policy instructing employees to turn chat history off by default when discussing sensitive topics, including Google’s revenue-sharing and mobile application distribution agreements. These agreements, the DOJ and state attorneys general argued, work to maintain Google’s monopoly over search.

According to the DOJ, Google destroyed potentially hundreds of thousands of chat sessions, not just during the DOJ’s investigation but also during the litigation. Google stopped the practice only after the DOJ discovered the policy. DOJ attorney Kenneth Dintzer told Mehta on Friday that the DOJ believed the court should “conclude that communicating with history off shows anti-competitive intent to hide information because they knew they were violating antitrust law.”

Mehta at least agreed that “Google’s document retention policy leaves a lot to be desired,” expressing shock and surprise that a company as large as Google would ever adopt such a policy as a best practice.

Google’s attorney Colette Connor told Mehta that the DOJ should have been aware of Google’s policy long before the DOJ challenged the conduct. Google had explicitly disclosed the policy to Texas’ attorney general, who was involved in DOJ’s antitrust suit over both Google’s search and adtech businesses, Connor said.

Connor also argued that Google’s conduct wasn’t sanctionable because there is no evidence that any of the missing chats would’ve shed any new light on the case. Mehta challenged this somewhat, telling Connor, “We just want to know what we don’t know. We don’t know if there was a treasure trove of material that was destroyed.”

During rebuttal, Dintzer told Mehta that Google’s decision to tell Texas about the policy, but not the federal government, did not satisfy its disclosure obligation under the Federal Rules of Civil Procedure in the case. That rule says that “only upon finding that the party acted with the intent to deprive another party of the information’s use in the litigation may” the court “presume that the lost information was unfavorable to the party.”

The DOJ has asked the court to make that ruling and issue four orders sanctioning Google. They want the court to order the “presumption that deleted chats were unfavorable,” the “presumption that Google’s proffered justification” for deleting chats “is pretextual” (concealing Google’s true rationale), and the “presumption that Google intended” to delete chats to “maintain its monopoly.” The government also wants a “prohibition on argument by Google that the absence of evidence is evidence of adverse inference,” which would stop Google from arguing that the DOJ is just assuming the deleted chats are unfavorable to Google.

Mehta asked Connor if she would agree that, at “minimum,” it was “negligent” of Google to leave it to employees to preserve chats on sensitive discussions, but Connor disagreed. She argued that “given the typical use of chat,” Google’s history-off policy was “reasonable.”

Connor told Mehta that the DOJ must prove that Google intended to hide evidence for the court to order sanctions.

That intent could be demonstrated another way, Mehta suggested, recalling that “Google has been very deliberate in advising employees about what to say and what not to say” in discussions that could indicate monopolistic behavior. That included telling employees, “Don’t use the term markets,” Mehta told Connor, asking whether that kind of conduct could be interpreted as evidence of Google’s intent to hide evidence.

But Connor disagreed again.

“No, we don’t think you can use it as evidence,” Connor said. “It’s not relevant to the claims in this case.”

But during rebuttal, Dintzer argued that there was evidence of its relevance. He said that testimony from Google employees showed that Google’s chat policy “was uniformly used as a way of communicating without creating discoverable information,” a deliberate effort to hide the alleged antitrust violations.
