Monthly Roundup #17: April 2024

As always, a lot to get to. This is everything that wasn’t in any of the other categories.

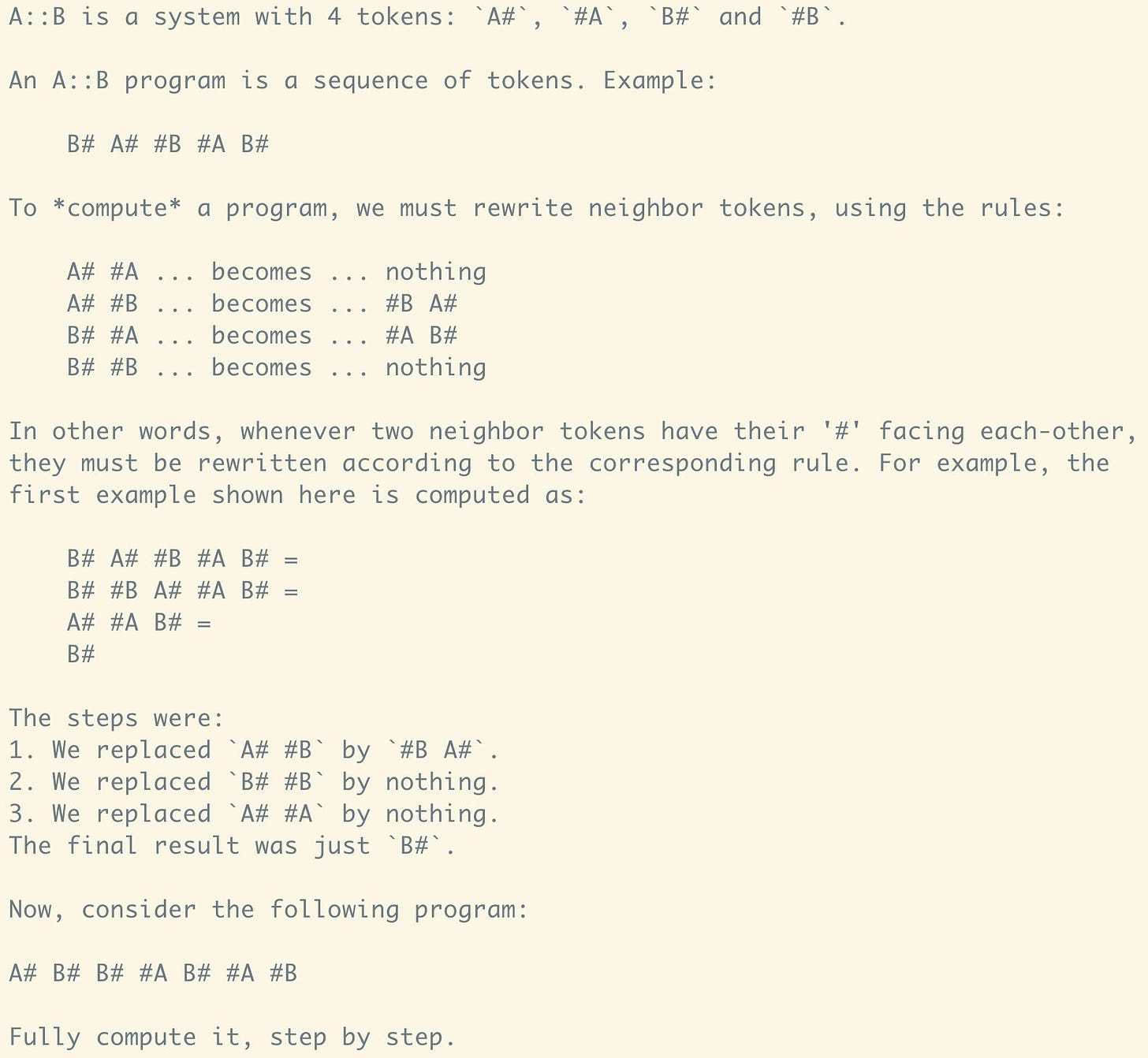

You might have to find a way to actually enjoy the work.

Greg Brockman (President of OpenAI): Sustained great work often demands enjoying the process for its own sake rather than only feeling joy in the end result. Time is mostly spent between results, and hard to keep pushing yourself to get to the next level if you’re not having fun while doing so.

Yeah. This matches my experience in all senses. If you don’t find a way to enjoy the work, your work is not going to be great.

This is the time. This is the place.

Guiness Pig: In a discussion at work today:

“If you email someone to ask for something and they send you an email trail showing you that they’ve already sent it multiple times, that’s a form of shaming, don’t do that.”

Others nodding in agreement while I try and keep my mouth shut.

JFC…

Goddess of Inflammable Things: I had someone go over my head to complain that I was taking too long to do something. I showed my boss the email where they had sent me the info I needed THAT morning along with the repeated requests for over a month. I got accused by the accuser of “throwing them under the bus”.

You know what these people need more of in their lives?

This is a Twitter argument over whether a recent lawsuit is claiming Juul intentionally evaded age restrictions to buy millions in advertising on websites like Nickelodeon and Cartoon Network and ‘games2girls.com’ that are designed for young children, or whether they bought those ads as the result of ‘programmatic media buyers’ like AdSense ‘at market price,’ which would… somehow make this acceptable? What? The full legal complaint is here. I find it implausible that this activity was accidental, and Claude agreed when given the text of the lawsuit.

I strongly agree with Andrew Sullivan: in most situations, playing music in public that others can hear is really bad, and we should fine people who do it until they stop. They make very good headphones; if you want to listen to music, buy them. I am willing to make exceptions for groups of people listening together, but on your own? Seriously, what the hell.

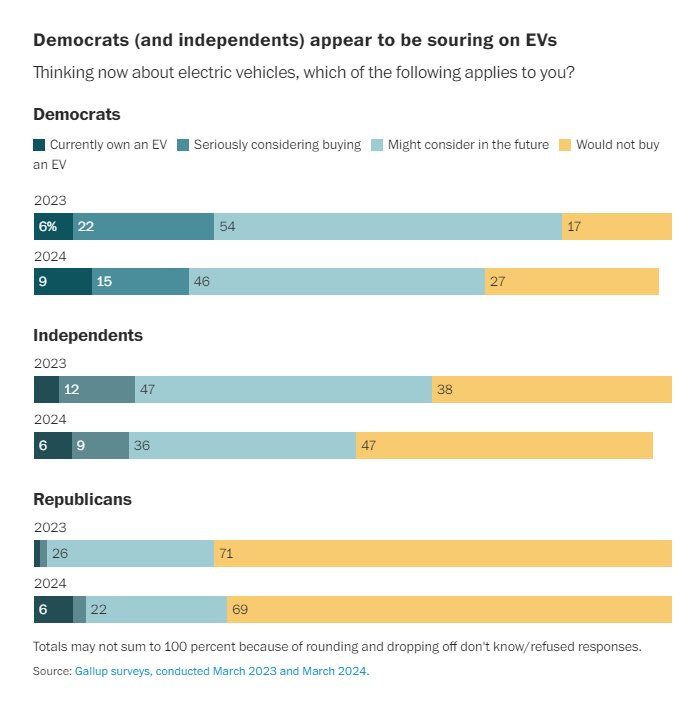

Democrats are somewhat souring on electric cars in general, perhaps to spite Elon Musk?

The amount of own-goaling by Democrats around Elon Musk is pretty incredible.

New York Post tries to make ‘resenteeism’ happen, as a new name for people who hate their job staying to collect a paycheck because they can’t find a better option, but doing a crappy job. It’s not going to happen.

Alice Evans points out that academics think little of sending out, in the latest case, thousands of randomly generated fictitious resumes, wasting quite a lot of people's time and introducing a bunch of noise into application processes. I would kind of be fine with that if IRBs let you run ordinary, obviously responsible experiments in other ways as well, as opposed to being completely insane in the other direction. If we have profound ethical concerns about handing volunteers a survey, then this is very clearly way worse.

Germany still will not let stores be open on Sunday, in order to enforce rest. This has gotten even more absurd now that there are fully automated supermarkets, which are also forced to close. I do think this is right. Remember that on the Sabbath, one not only cannot work. One cannot spend money. Having no place to buy food is a feature, not a bug: it forces everyone to plan ahead. This is not merely about guarding against unfair advantage. Either go big, or leave home. I also notice how forcing everyone to close on Sunday is rather unfriendly to Jews in particular, who must close and not shop on Saturday and now have to deal with this two days in a row.

I call upon all those who claim to care deeply about our civil rights, about the separation of powers, government overreach and authoritarianism and tyranny, and who warn against the government having broad surveillance powers. Take your concerns seriously. Hold yourselves to at least the standard shown by Eliezer Yudkowsky (who many of you claim cares not for such concerns).

Help spread the word that the government is in the process of reauthorizing Section 702 of the Foreign Intelligence Surveillance Act, with new language that is even broader than before.

This passed the House this week but has not, as of this writing, passed the Senate.

In the House vote, a proposed amendment requiring warrants to search Americans' communications data failed by one vote, 212-212. An effort similar to the current one failed in December 2023.

So one cannot say ‘my voice could not have mattered.’

I urge the Senate not to pass this bill, and have contacted both of my senators.

Alas, this iteration of the matter only came to my attention this morning.

Elizabeth Goitein: I’m sad—and frankly baffled—to report that the House voted today to reward the government’s widespread abuses of Section 702 by massively expanding the government’s powers to conduct warrantless surveillance.

…

Check out this list of how members voted.

That's bad enough. But the House also voted for the amendment many of us have been calling "Patriot Act 2.0." This will force ordinary American businesses that provide wifi to their customers to give the NSA access to their wifi equipment to conduct 702 surveillance.

I’m not kidding. The bill actually does that. If you have any doubts, read this post by a FISA Court amicus, who took the unusual step of going public to voice his concerns. Too bad members of the House didn’t listen.

Next time you pull out your phone and start sending messages in a laundromat… or a barber shop… or in the office building where you work… just know that the NSA might very well have access to those communications.

And that's not all. The House also passed an amendment authorizing completely suspicionless searches for the communications of non-U.S. persons seeking permission to travel to the U.S., even if the multiple vetting mechanisms already in place reveal no cause for concern.

…

There are more bad things in this bill—a needless expansion of the definition of “foreign intelligence,” provisions that weaken the role of amici in FISA Court proceedings, special treatment for members of Congress—but it would take too many tweets to cover them all.

There is certainly a case to be made that 'if you are constantly harping on the need to not regulate AI lest we lose our freedoms, but do not say a word about such other far more blatant unconstitutional violations of our freedoms over far smaller risks, then we should presume that your motivations lie elsewhere.'

But my primary purpose here really is, please, if you can, help stop this bill. Which is why it is here in the monthly, rather than in an AI post.

Take the following (full) quoted statement both seriously and literally.

Ryan Moulton: “Agency is immoral because you might have an effect on the world. The only moral entity is a potted plant.”

This is not exactly what a lot of people believe, but it’s close enough that it would compress a lot of arguments to highlight only the differences from this.

Keller Scholl: There’s also a very slight variant that runs “an effect on the world that is not absolutely subject to the will of the majority”.

Ryan Moulton: Yes, I think that is one of the common variants. Also of the form “with a preemptive consensus of all the relevant stakeholders.”

Also see my post Asymmetric Justice, and The Copenhagen Interpretation of Ethics, both highly recommended if you have not encountered them before.

Andrew Rettek: Some people see this, decide that you can’t be a potted plant, then decide that since you can’t possibly get enough consent you don’t need ANY consent to do Good Things ™.

This is presumably in response to the recent NYT op-ed from Peter Coy, attempting to argue that everyone at all impacted must not only agree but must also fully understand, despite almost no one ever actually understanding much of anything.

Nikhil Krishnan reports on his extensive attempts to solve the loneliness problem.

Nikhil Krishnan: spent like all of my 20s obsessed with trying to fix the loneliness problem – hosted tons of events, tried starting a company around it, etc.

Main two takeaways

1) The fact that you can stay home and basically self-medicate with content in a way that feels not-quite-bored is the biggest barrier.

Meeting new people consistently is naturally somewhat uncomfortable no matter how structured/well designed an event is. Being presented with an option of staying home and chilling vs. going out to meet new people, most people will pick the former and that’s pretty hard to fight.

2) Solving loneliness is largely reliant on altruists.

-altruists who take the time to plan events and get their friends together

-altruists that reach out to bring you into a plan being formed even if you’re not super close

-altruists that bug you to go out even when you don't really want to

I don't think a company will solve this problem tbh, financial incentives inherently make this entire thing feel inorganic IMO. I'm not totally sure what will..

Altruists is a weird term these days. The point is, someone has to take the initiative, and make things happen, and most people won’t do it, or will do it very rarely.

In the long term, you are better off putting in the work to make things happen, but on any given day it sounds like work, even if someone else did take the initiative to set things up, and the payoffs that justify it lie in the future.

How much can AI solve this? I think it can do a lot to help people coordinate and arrange for things people want. There are a lot of annoyances and barriers and (only sometimes trivial) inconveniences and (only sometimes mild) social awkwardness involved, and a lot of that can get reduced.

But (most of) you do still have to ultimately agree to get out of the house.

This Reddit post has a bunch of people explaining why creating community is hard, and why people mostly do not want the community that would actually exist, and the paths to getting it are tricky at best. In addition to no one wanting to take initiative, a point that was emphasized is that whoever does take initiative to do a larger gathering has to spend a lot of time and money on preparing, and if you ask for compensation then participation falls off a cliff.

I want to emphasize that this mostly is not true. People think you need to do all this work to prepare, especially for the food. And certainly it is nice when you do, but none of that is mandatory. There is nothing wrong with ordering pizza and having cake, both of which scale well, and supplementing with easy snacks. Or for smaller scales, you can order other things, or find things you can cook at scale. Do not let perfect become the enemy of the good.

After being correctly admonished on AI #59, I will be confining non-AI practical opportunities to monthly roundups unless they have extreme time sensitivity.

This month, we have the Institute for Progress hiring a Chief of Staff and also several other roles.

Also I alert you to the Bridgewater x Metaculus forecasting contest, with $25,000 in prizes, April 16 to May 21. The bigger prize, of course, is that you impress Bridgewater. They might not say this is a job interview, but it is also definitely a job interview. So if you want that job, you should enter.

Pennsylvania governor makes state agencies refund fees if they don’t process permits quickly, backlog gets reduced by 41%. Generalize this.

Often the government is only responding to the people. For example, here is Dominik Peters seeing someone complain (quite obviously correctly) that the Paris metro should stop halting every time a bag is abandoned, and Reddit voters saying no. Yes, there is the possibility that this behavior is the only thing stopping people from trying to bomb Paris metro trains, but also no, there isn't; it makes no physical sense?

A sixth member of the House (out of 435) resigns outright without switching to a new political office. Another 45 members are retiring.

Ken Buck (R-Colorado): This place just keeps going downhill, and I don’t need to spend my time here.

US immigration, regarding an EB-1 visa application, refers to Y Combinator as 'a technology bootcamp' with 'no evidence of outstanding achievements.'

Kirill Avery: USCIS, regarding my EB-1 US visa application, referred to Y Combinator as “a technology bootcamp” with “no evidence of outstanding achievements.”

update: a lot of people who claim i need a better lawyer are recommending me *my* lawyer now.

update #2: my lawyer claims he has successfully done green cards for [Stripe founders] @patrickc and @collision

Sasha Chapin: During my application for an O1, they threw out a similar RFE, wherein my lawyer was asked to prove that Buzzfeed was a significant media source

After the Steele dossier

This is just vexatiousness for the sake of it, nakedly.

Yes, I have also noticed this.

Nabeel Qureshi: One of the weirdest things I learned about government is that when their own processes are extremely slow or unworkable, instead of changing those processes, they just make *new* processes to be used in the special cases when you actually want to get something done.

Patrick McKenzie: This is true and feels Kafkaesque when you are told “Oh why didn’t you use the process we keep available for non-doomed applicants” by advisors or policymakers.

OTOH, I could probably name three examples from tech without thinking that hard.

Tech companies generally have parallel paths through the recruiting process for favored candidates, partially because the stupid arbitrary hoop jumping offends them and the company knows it. Partially.

M&A exists in part to do things PM is not allowed to do, at higher cost.

“Escalations” exist for almost any sort of bureaucratic process, where it can get bumped above heads of owning team for a moment and then typically sent down with an all-but directive of how to resolve from folks on high.

Up to a point this process makes sense. You have a standard open protocol for X. That protocol is hardened to ensure it cannot be easily gamed or spammed, and that it does not waste too many of your various resources, and that its decisions can be systematically defended and so on. These are nice properties. They do not come cheap, in terms of the user experience, or ability to handle edge cases and avoid false negatives, or often ability to get things done at all.

Then you can and should have an alternative process for when that trade-off does not make sense, but which is gated in ways that protect you from overuse. And that all makes sense. Up to a point. The difference is that in government the default plan is often allowed to become essentially unworkable at all, and there is no process that notices and fixes this. Whereas in tech or other business there are usually checks on things if they threaten to reach that point.

Ice cream shop owner cannot figure out if new California law is going to require paying employees $20 an hour or not. Intent does not win in spots like this. Also why should I get higher mandatory pay at McDonald’s than an ice cream shop, and why should a labor group get to pick that pay level? The whole law never made any sense.

One never knows how seriously to take proposed laws that would be completely insane, but one making the rounds this month was California’s AB 2751.

State Assemblymember Matt Haney, who represents San Francisco, has introduced AB 2751, which introduces a so-called “right to disconnect” by ignoring calls, emails and texts sent after agreed-upon working hours.

It is amazing how people say, with a straight face, that ‘bar adults from making an agreement to not do X’ is a ‘right to X.’

Employers and employees will tend to agree to do this if it is worth doing, and not if it isn't. You can pay me more, or you can leave me in peace when I am not on the clock, your call. I have definitely selected both ends of that tradeoff at different times.

Mike Solana: California, in its ongoing effort to destroy itself, is once again trying to ban startups.

Eric Carlson: My first thoughts were whoever drafted this has:

A. Spent a lot of time in college

B. Worked for a non profit

C. Worked in government for a long time

D. Never worked for the private sector

To my surprise, Matt Haney lit up my whole bingo card.

His accomplishments include going to college, going back to college, going back again, working for a non profit, going into government, and still being in government.

On the other hand, this is an interesting enforcement mechanism:

Enforcement of the law would be done via the state Department of Labor, which could levy fines starting at $100 per incident for employers with a bad habit of requiring after-work communications.

Haney said that he decided after discussions with the labor committee to take a flexible approach to the legislation, in contrast to the more punitive stance taken by some countries.

It actually seems pretty reasonable to say that the cost of getting an employee’s attention outside work hours, in a non-emergency, is $100. You can wait until the next work day, or you can pay the $100.

Also, ‘agreed-upon working hours’ does not have to be 9-to-5. It would also seem reasonable to say that if you specify particular work hours and are paying by the hour, then it costs an extra hundred to reach you outside those hours in a non-emergency. For a startup, one could simply not agree to such hours in the first place?

A younger version of me would say ‘they would never be so insane as to pass and enforce this in the places it is insane’ but no, I am no longer so naive.

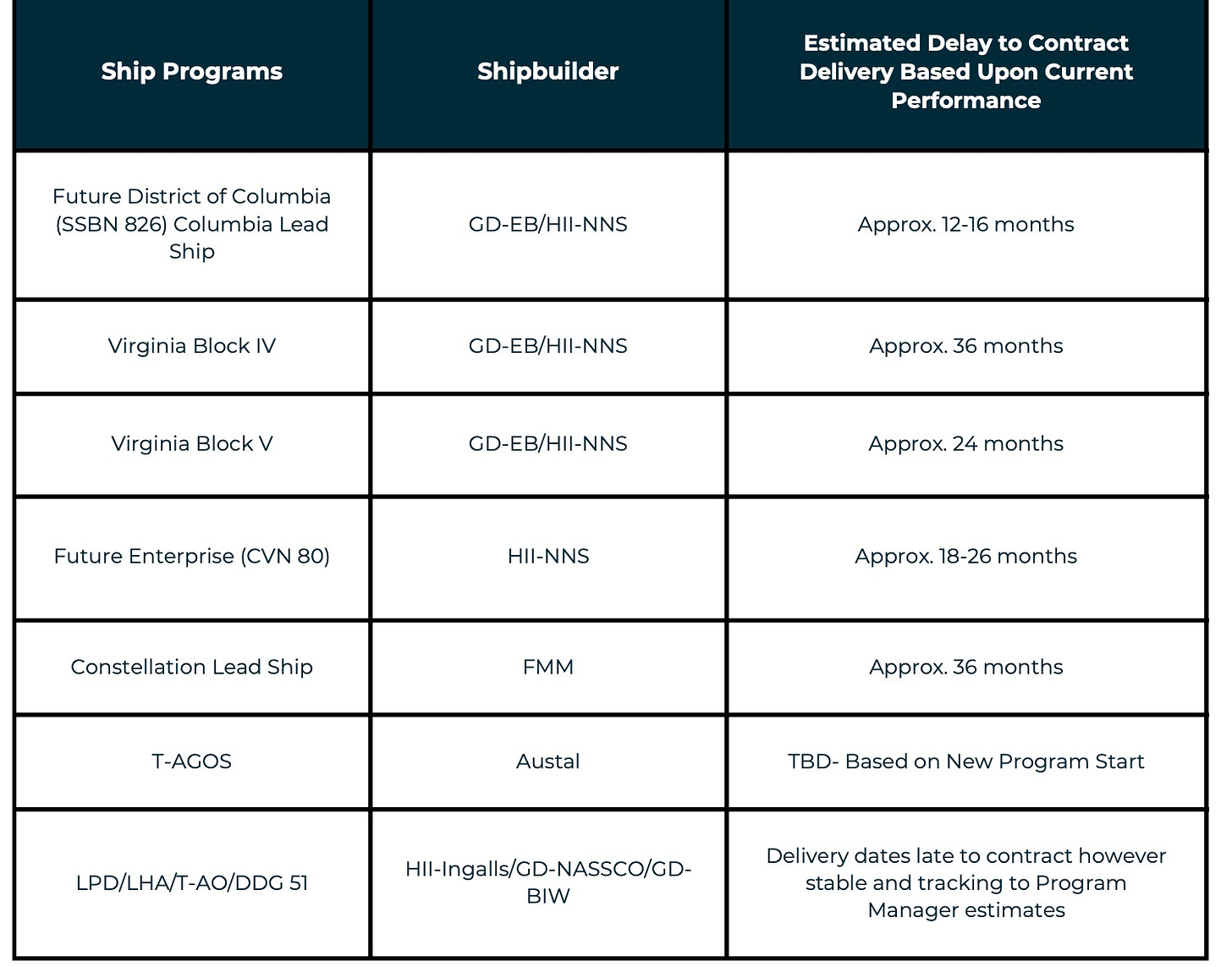

Every navy shipbuilding program is years delayed. Does that mean none of them are?

This was reported as ‘breaking’ and ‘jaw-dropping.’ We got statements like this quoting it:

Sean Davis (CEO of The Federalist): Every aspect of American life—the very things that made this country the richest and most powerful in history—is in rapid decline, and none of the political leaders in power today in either party seem to care.

…

We are rapidly approaching the point where the decline becomes irreversible. And the most evil and tragic aspect of the entire situation is that it never had to be this way.

But actually, this all seems… totally fine, right? All the contracts are taking 1-3 years longer than was scheduled. That is a highly survivable and reasonable and also predictable delay. So what if our projections are optimistic?

In wartime these delays would be unacceptable. In peacetime, I don’t see why I care.

It turns out it is illegal to pay someone cash not to run for office, in this case a $500k offer that a candidate for Imperial County supervisor turned down. So instead you offer them a no-show job that is incompatible with the office due to a conflict of interest? It is not like this kind of bribe is hard to execute another way. Unless you are trying to pay Donald Trump $5 billion, in which case it is going to be trickier. As they wonder at the end, it is curious who thinks her not running was worth a lot more than $500,000 to them, and why.

This is still one of those situations where there are ways around a restriction, and it would be better if we found a way to actually stop the behavior entirely, but better to throw up inconveniences and say the thing is not allowed, than to pretend the whole thing is okay.

We continue to have a completely insane approach to high-skilled immigration.

Neal Parikh: Friends of mine were basically kicked out. They’re senior people in London, Tehran, etc now. So pointless. Literally what is the point of letting someone from Iran or wherever get a PhD in electrical engineering from Stanford then kicking them out? It’s ridiculous. It would make way more sense to force them to stay. But you don’t even have to do that because they want to stay!

Alec Stapp: The presidents of other countries are actively recruiting global talent while the United States is kicking out people with STEM PhDs 🤦

If you thought Ayn Rand was strawmanning, here is a socialist professor explaining how to get a PS5 under socialism.

In related news, Paris to deny air conditioning to Olympic athletes in August to ‘combat climate change.’

New York mayor Eric Adams really is going to try to put his new-fangled ‘metal detectors’ into the subway system. This angers me with the fire of a thousand suns. It does actual zero to address any real problems.

Richard Hanania: Eric Adams says the new moonshot is putting metal detectors in the subway.

Imagine telling an American in 1969 who just watched the moon landing that 55 years later we would use “moonshot” to mean security theater for the sake of mentally ill bums instead of colonizing Mars.

Brad Pearce: I loved the exchange that was something like “90% of thefts in New York are committed by 350 people”

“Yeah well how many people do you want to arrest to stop it!”

“Uhhh, lets start with 350.”

New Yorkers, I am counting on you to respond as the situation calls for. It is one thing that Eric Adams is corrupt. This is very much going too far.

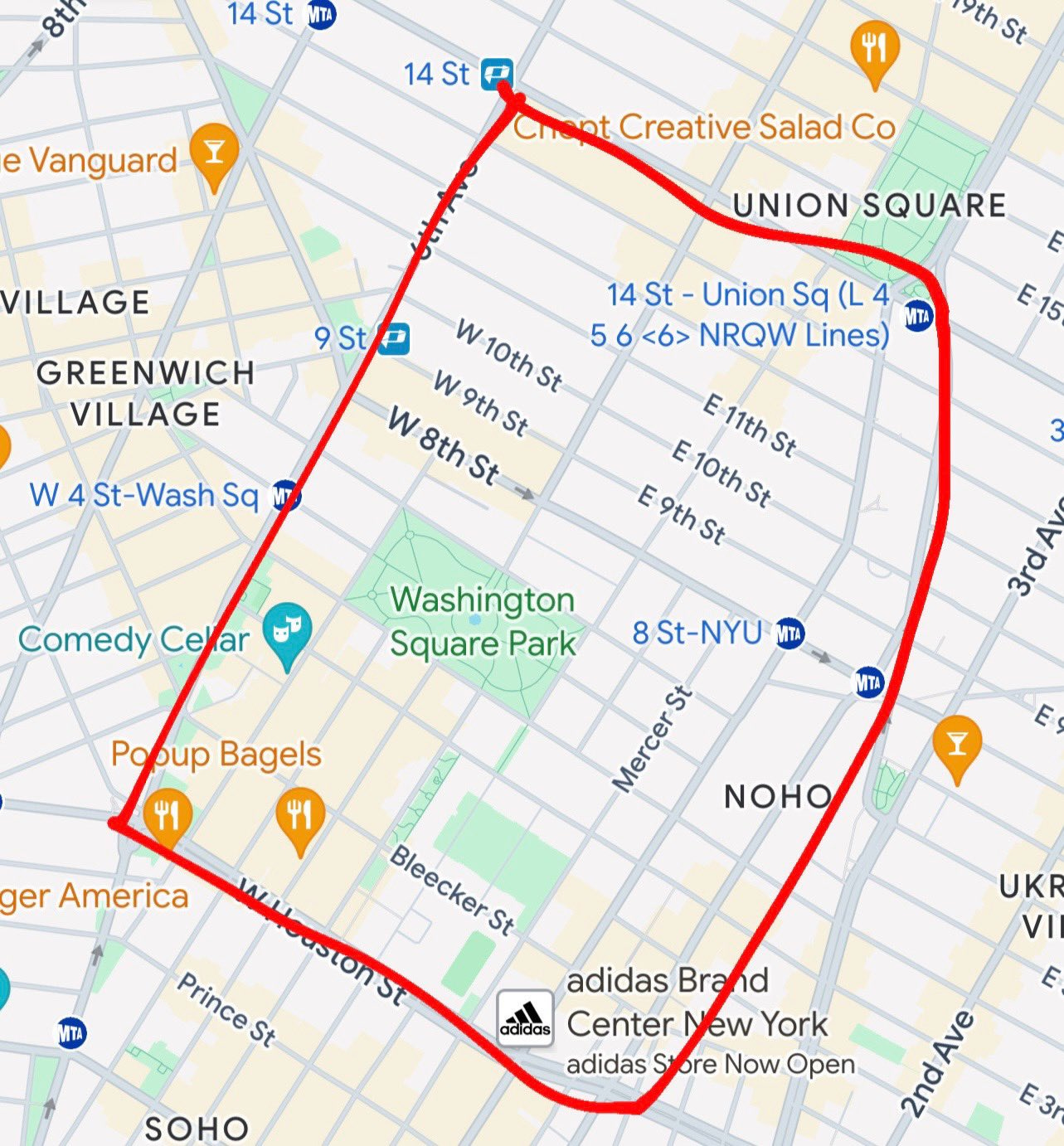

In other NYC crime news, go to hell real life, I’ll punch you in the face?

Tyler McCall: Some common threads popping up on these videos of women being punched in New York City:

1) Sounds like he says something like “sorry” or “excuse me” just before attacking

2) Appears to be targeting women on phones

3) All the women I saw were in this general area of Manhattan

Sharing partly because I live close to that area and that’s weird and upsetting and some people would want to know, partly because it is part of the recurring ‘have you tried either getting treatment for or punishing the people you keep constantly arresting.’ And partly because this had 1.8 million views so of course this happened.

The story of a crazy financial fraud, told Patrick McKenzie style. He is reacting in real time as he reads the story and it is glorious.

Governor DeSantis, no longer a presidential hopeful of any kind, is now determined to be tough on crime, in the form of shoplifting and 'porch piracy.' He promises hell to pay.

TODAY: Governor DeSantis signed a bill to crack down on retail theft & porch piracy in Florida🎯👇

“If you order something and they leave it at your front door, when you come home from work or you bring your kids over from school, that package is gonna be there. And if it’s not — someone’s gonna have hell to pay for stealing it.”

Shoshana Weissmann: A thief in DC tried to steal my friends’ new mattress and gave up in 2 blocks bc it was too heavy. I just want them to commit

Ed Carson: Criminals just don’t “go to the mattresses” with the same conviction as in the past. No work ethic.

Shoshana Weissmann: IN MY DAY WE CARRIED STOLE MATTRESSES BOTH WAYS UP HILL TO SCHOOL IN THE SNOW.

My model is that what we need is catching them more often, and actually punishing thieves with jail time at all. We don't need to ratchet up penalties so much as stop using the 'don't catch them, and if you somehow do catch them, release them' strategy of New York and California.

How much tolerance should we have? Yet another study shows that we would be better off with less alcohol, here in the form of ‘Zero Tolerance’ laws that reduce youth binge drinking, finding dramatic effects on later life outcomes.

This paper provides the first long-run assessment of adolescent alcohol control policies on later-life health and labor market outcomes. Our analysis exploits cross-state variation in the rollout of “Zero Tolerance” (ZT) Laws, which set strict alcohol limits for drivers under age 21 and led to sharp reductions in youth binge drinking. We adopt a difference-in-differences approach that combines information on state and year of birth to identify individuals exposed to the laws during adolescence and tracks the evolving impacts into middle age.

We find that ZT Laws led to significant improvements in later-life health. Individuals exposed to the laws during adolescence were substantially less likely to suffer from cognitive and physical limitations in their 40s. The health effects are mirrored by improved labor market outcomes. These patterns cannot be attributed to changes in educational attainment or marriage. Instead, we find that affected cohorts were significantly less likely to drink heavily by middle age, suggesting an important role for adolescent initiation and habit-formation in affecting long-term substance use.

As usual, this does not prove that no drinking is superior to ‘responsible’ drinking. Also it does not prove that, if others around you drink, you don’t pay a high social tax for drinking less or not drinking at all. It does show that reducing drinking generally is good overall on the margin.

I continue to strongly think that the right amount of alcohol is zero. Drug prohibition won’t work for alcohol even more than it won’t work for other drugs, but alcohol is very clearly a terrible choice of drug even relative to its also terrible salient rivals.

Hackers crack millions of hotel room keycards. That is not good, but also did anyone think their hotel keycard meant their room was secure? I have assumed forever that if someone wants into your hotel room, there are ways available. But difficulty matters. I notice all the television programs where various people illustrate that at least until recently, standard physical locks on doors were trivially easy to get open through either lockpicking or brute force if someone cared. They still mostly work.

Court figures out that Craig Wright is not Satoshi and has perjured himself and offered forged documents. Patrick McKenzie suggests the next step is the destruction of his enterprises. I would prefer if the next step was fraud and perjury trials and prison? It seems like a serious failing of our society that someone can attempt a heist this big, get caught, and we don’t then think maybe throw the guy in jail?

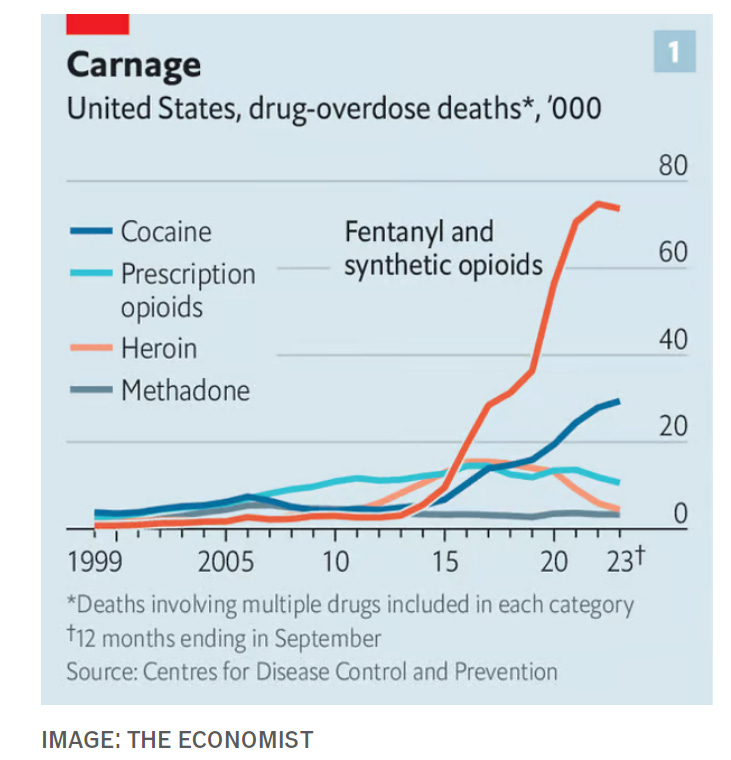

Scott Sumner notes that we are seeing more overdose deaths from cocaine, not only from opioids. Thus, decriminalizing cocaine is not a reasonable response to fentanyl. That is doubly true since the cocaine is often cut with fentanyl. If you want to avoid that, you would need full legalization, so that you had quality controls.

I never fully adjust to the idea that people have widely considered alcohol central to life, ubiquitous, the ancestor of civilization itself, at core of all social function, as Homer Simpson calls it ‘the cause of and solution to all life’s problems.’ People, in some times and places most people, do not know what to do with themselves other than drink and don’t consider themselves alcoholics.

Collin Rutherford (post has 1.2 million views): Do you know what a “bottle night” is?

Probably not, because my gf and I invented it during a 2023 blizzard in Buffalo, NY.

We lock our phones away, turn the TV off…

Each grab a bottle of wine, and talk.

That’s it, we simply talk and enjoy each other’s presence.

We live together, but it’s easy to miss out on “quality time”.

What do you think?

Do you have other methods for enjoying quality time with your partner?

O.J. Simpson never paid the civil judgment against him, while his Florida home and $400k a year in pensions were considered ‘protected.’ I do not understand this. I think debtor’s prison would in general be too harsh for those who did not kill anyone, but surely there is a middle ground where we do not let you keep your home and $400k a year?

Tenant law for those who are not actually legal tenants is completely insane.

At a minimum, it should only apply to tenants who were allowed to live there in the first place? You shouldn’t be able to move in, change the locks and then claim any sort of ‘rights’?

The latest concrete example of this madness is an owner being arrested in her own home when squatters called the police. Instead, obviously, the police should be arresting the squatters, at a minimum evicting them.

New York Post has an article about forums where squatters teach each other techniques by which to steal people's houses, saying it is bad enough that some people are afraid to take extended vacations.

Why is this hard? How can anyone possibly think squatting should get legal backing when the owner shows up 31 days after you try to steal their property, and you should have to provide utilities while they live rent free without permission on your property? Or that you should even, in some cases, let them take ownership?

If you illegally occupy someone else’s property and refuse to leave, and force that person to go to court or call the police, and it turns out you had no lease or agreement of any kind? That should be criminal, ideally a felony, and you should go to jail.

The idea that society has an interest in not letting real property stay idle and neglected, in some form, makes sense. Implementing it via ‘so let people steal it if you turn your back’ is insanity. Taxes on unoccupied land or houses (or all land or houses) are the obviously correct remedy here.

This is distinct from the question of how hard it should be to evict an actual tenant. If you signed a lease, it makes sense to force the landlord to take you to court, for you to be given some amount of time, and you should obviously not face any criminal penalties for making them do that. Here we can talk price.

Also, I am confused why squatters' rights are not a taking under the Fifth Amendment, and thus blatantly unconstitutional?

John Fetterman is strongly in this camp.

Senator John Fetterman (D-PA): Squatters have no rights. How can you even pretend that this is anything other than you're just breaking the law?

It's wild, that if you go away on a long trip, for 30 days, and someone breaks into your home and suddenly they have rights. This is crazy. Like if somebody stole your car, and then they held it for 30 days, then somehow you now have some rights?

Well said.

Stories about El Salvador continue to be split between a media narrative of 'it is so horrible how they are doing this crackdown on crime' whereas every report I see from those with any relation to or stake in the country is 'thank goodness we cracked down on all that crime.'

Sadanand Dhume: My Uber driver today was from El Salvador. He went back last year for a visit for the first time in 15 years. He could not stop raving about @nayibbukele. He said Bukele’s crackdown on crime has transformed the country. People feel secure for the first time. “They don’t have money, but they feel safe.”

My driver used a Mexican slang word, “chingon,” to describe Bukele. “He is the king of kings,” he said. “He’s a blessing for El Salvador.”

Crime that gets out of hand ruins everything. Making people feel safe transforms everything. Ordinary grounded people reliably, and I think quite correctly, are willing to put up with quite a lot, essentially whatever it takes, to get crime under control. Yes, the cure can be worse than the disease, if it causes descent into authoritarianism.

So what happened, and is likely to happen? From Matt Lakeman, an extensive history of El Salvador’s gangs, from their origins in Los Angeles to the later crackdown. At their peak they were two de facto governments, MS-13 and B-18, costing the economy $4 billion annually or 15% of GDP, despite only successfully extracting tens of millions. Much of what they successfully extracted was then spent for the purpose of fighting against and murdering each other for decades, with the origin of the conflict lost to history. The majority of the gang murders were still of civilians.

The majority of the total murders were still not by gang members, and the murder rate did not peak when the gangs did, but these gangs killed a lot of people. Lakeman speculates that it was the very poverty and weakness of the gangs that made them so focused on their version of 'honor,' which I would prefer to call street cred or respect or fear, and thus so violent and dangerous. (That we generally see 'honor' as only the bad thing people can confuse it with is a very bad sign for our civilization; the actual real thing we used to, and sometimes still do, call honor is good and vital.)

There was a previous attempt at a crackdown on gangs, or at least the appearance of one, by the right-wing government in 2003. It turns out it is not hard to spot and arrest gang members when they have prominent tattoos announcing who they are. But the effort was not sustained, largely because the judiciary did not play along. They tried again in 2006 without much success. Then the left-wing government tried to negotiate a three-way truce with both major gangs, which worked for a bit but then inevitably broke down, while costing dearly in government legitimacy.

Meanwhile, the criminal justice system seemed fully compromised, with only 1 in 20 prosecutions ending in conviction due to gang threats, but also we have the story that all major gang leaders always ended up in prison, which is weird, and the murder rate declined a lot in the 2010s. Over the 1992-2019 period, El Salvador had five presidents, the last four of whom got convicted of corruption without any compensating competence.

Then we get to current dictator Bukele Ortez. He rose to power, the story here goes, by repeatedly spending public funds on flashy tangible cool public goods to make people happy and build a reputation, and ran as a ‘truth-telling outsider’ with decidedly vague plans on all fronts. The best explanation Matt could find was that Bukele was a great campaigner, and I would add he was up against two deeply unpopular, incompetent and corrupt parties, how lucky, that never happens.

Then when the legislature didn’t cooperate, he tried a full ‘because of the implication’ by marching soldiers into the legislative chamber and saying it was in the hands of God and such, which I would fully count as an auto-coup. It didn’t work, but the people approved the breach of norms in the name of reform, so he knew the coast was clear. Yes, international critics and politicians complained, but so what? He won the next election decisively, and if you win one election in a democracy on the platform of ending liberal democracy, that’s usually it. He quickly replaced the courts. There is then an aside about the whole bitcoin thing.

The gangs then went on a murder spree to show him who was boss, and instead he suspended habeas corpus and showed them, tripling the size of the prison population to 1.7% of the country. While the murder rate wasn’t obviously falling faster than the counterfactual before that, afterward it clearly did, unless the stats are fully faked (Matt thinks they are at least mostly real): from 18.17 per 100,000 in 2021 to 2.4 in 2023.

It is noteworthy that he had this supposedly complex seven-step Territorial Control Plan (TCP), which may have laid key groundwork, then mostly threw it out the window when things got real, in favor of a likely improvised plan of maximum police, maximum arrests and no rights of any kind, and the maximum police plan worked. The gangs didn’t see it coming, they couldn’t handle the scope, the public was behind it so the effort stuck, and that was that. A clear case of More Dakka: it worked, everyone knew it and everyone loves him for it.

To do this, they have massively overloaded the prisons. But this might be a feature, not a bug, from their perspective. In El Salvador, as in the United States, the gangs ruled the old prisons, they were a source of strength for gangs rather than deterrence and removal. The new deeply horrible and overcrowded violations of the Geneva Conventions? That hits different.

The twin catches, of course, are that this all costs money El Salvador never had, and is a horrible violation of democratic norms, rule of law and human rights. A lot of innocent people got arrested and likely will languish for years in horrible conditions. Even the guilty are getting treated not great and denied due process.

Was it worth it? The man on the street says yes, as we saw earlier. The foreign commentators say no.

Have democracy and civil rights been dramatically violated? Oh yes, no one denies that. But you know what else prevents you from having a functional democracy, or from being able to enjoy civil rights? Criminal gangs that are effectively another government or faction fighting for control and that directly destroy 15% of GDP alongside a murder rate of one person in a thousand each year. I do not think the people who support Bukele are being swindled or fooled, and I do not think they are making a stupid mistake. I think no alternatives were presented, and if you are going to be governed by a gang no matter what and your three choices are MS-13, B-18 or the state, then the official police gang sounds like the very clear first pick.

Letting ten guilty men go free to not convict one innocent man, even when you know the ten guilty men might kill again?

That is not a luxury nations can always afford.

Not that we hold ourselves to that principle all that well either.

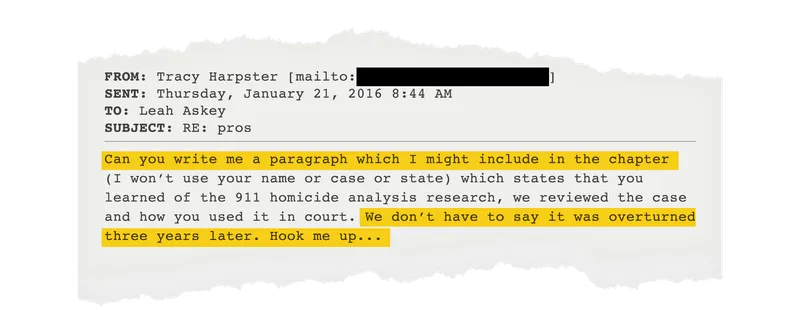

Here is a ProPublica article that made the rounds this past month about prosecutors who call ‘experts’ to analyze 911 calls and declare that the callers’ word choice or tone shows they are lying and therefore guilty of various crimes, including murder.

The whole thing is quite obviously junk science. Totally bunk. That does not mean one can put zero Bayesian weight on the details of a 911 call in evaluating credibility and what may have happened. Surely there is information there. But this is often presented as a very different level of evidence than it could possibly be.
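The gap between "some information" and "proof" can be made concrete with Bayes’ rule in odds form: posterior odds equal prior odds times the likelihood ratio of the evidence. A minimal sketch follows; the prior (10%) and the likelihood ratio for a single "suspicious" cue (1.5) are purely hypothetical numbers chosen for illustration, not estimates from the article or from any study, and the function names are my own.

```python
def posterior_probability(prior: float, likelihood_ratio: float) -> float:
    """Bayes' rule in odds form: posterior odds = prior odds * likelihood ratio."""
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


def required_likelihood_ratio(prior: float, target: float) -> float:
    """Likelihood ratio needed to move a given prior up to a target probability."""
    prior_odds = prior / (1.0 - prior)
    target_odds = target / (1.0 - target)
    return target_odds / prior_odds


# Hypothetical numbers, for illustration only.
prior = 0.10        # assumed probability of guilt before hearing the call
weak_cue_lr = 1.5   # assumed likelihood ratio of one "suspicious" cue in the call

print(round(posterior_probability(prior, weak_cue_lr), 3))  # prints 0.143
print(round(required_likelihood_ratio(prior, 0.90)))        # prints 81
```

Under these made-up numbers, a weak cue moves 10% to about 14%, while reaching 90% would take evidence about 81 times likelier under guilt than under innocence. Multiplying several cues’ likelihood ratios together would also assume they are independent, which is a strong assumption for cues drawn from the same call.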

I do note what seems to be an overstatement early on, where it says Russ Faria had spent three and a half years in prison for a murder he didn’t commit, on the grounds that he appealed, had his conviction thrown out, was retried without the bunk evidence and was acquitted. That is not how the system works. Russ Faria is legally not guilty precisely because we do not know whether he committed the murder. He was ‘wrongfully convicted’ in the sense that there was insufficient evidence, but not in the sense that we know he did not do it.

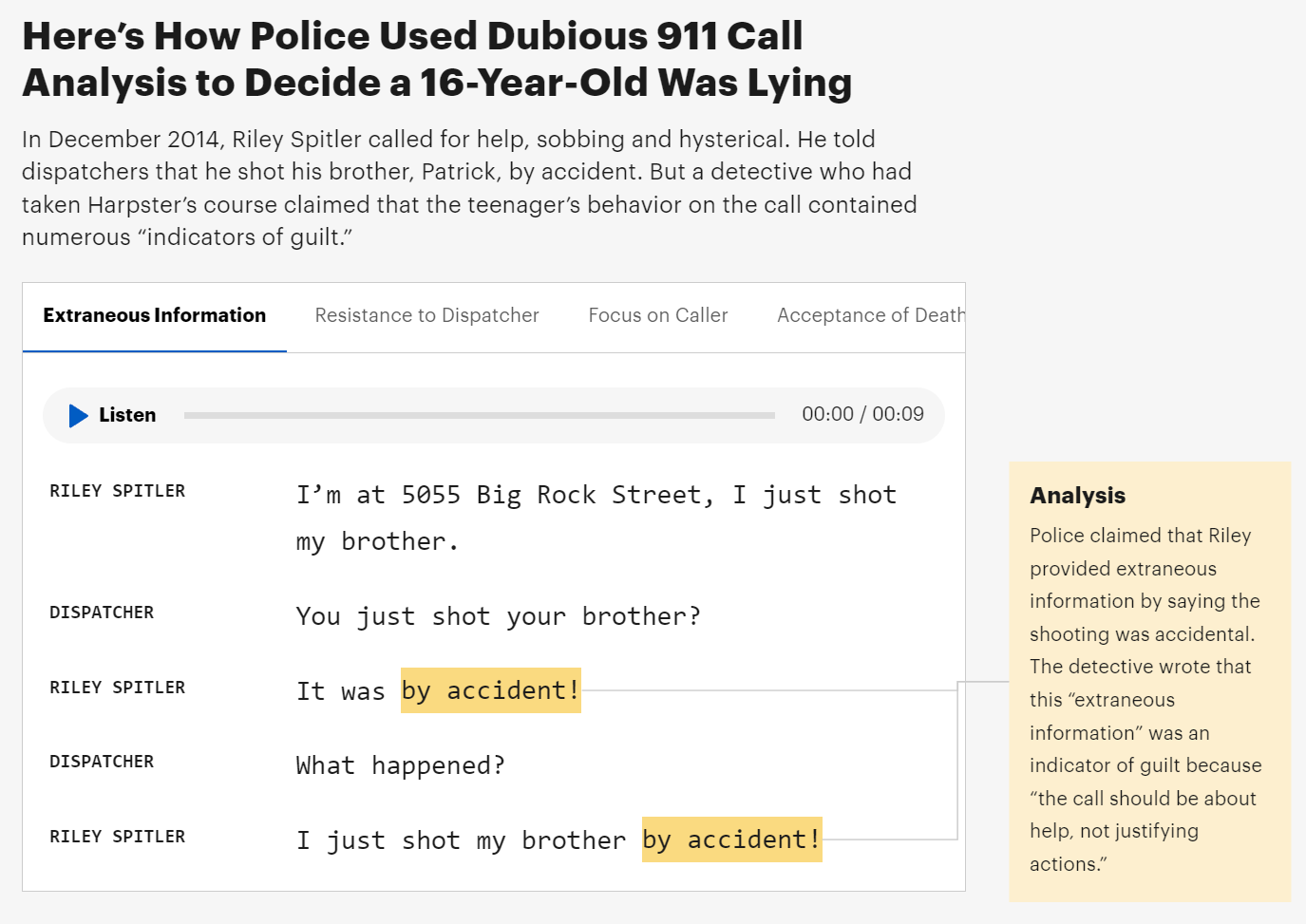

Similarly, later in the article they discuss the case of Riley Spitler. The article states that Riley is innocent and that he shot his older brother accidentally, but it provides no evidence that establishes Riley’s innocence. Again, I can believe Riley was convicted based on bogus evidence, but that does not mean he did not do it. It means we do not know. If we had other proof he was innocent, the bogus evidence would presumably not have worked.

This is the mirror image of the Faria case then being prepared for a book promoting the very junk science that got thrown out.

Here is an example of how this works:

Well, yes. On the margin this is (weak) Bayesian evidence in some direction, probably towards him being more likely to be guilty. But this is something else.

The whole thing is made up, essentially out of whole cloth. Harpster, the man who created all this and charges handsomely for providing training in it, doesn’t have any obvious credentials. All replication attempts have failed, although I do not know that they even deserve the name ‘replication’ as it is not obvious he ever had statistical evidence to begin with.

Outside of law enforcement circles, Harpster is elusive. He tries to keep his methods secret and doesn’t let outsiders sit in on his classes or look at his data. “The more civilians who know about it,” he told me once, “the more who will try to get away with murder.”

It gets worse. He looked at 100 phone calls for patterns. He did a ‘study’ that the FBI sent around before it was peer reviewed. Every detail screams p-hacking, except without bothering with actual p-values. This was used at trials. Then in 2020 someone finally did a study, and found it all to be obvious nonsense that often had the sign of the impact wrong, and another study found the same in missing child cases.

They claim all this is highly convincing to juries:

“Juries love it, it’s easy for them to understand,” Harpster once explained to a prosecutor, “unlike DNA which puts them to sleep.”

I wonder what makes this convincing to a jury. If you told me that I should convict someone of murder or anything else based on this type of flim-flam, I cannot imagine going along with that. Not because I have a keen eye for scientific rigor, but because the whole thing is obvious nonsense. It defies common sense. Yet I suppose people think like this all the time in matters great and small, that people ‘sound wrong’ or that something doesn’t add up, and thus they must be guilty?

Then there is this. I get that we need to work via precedent, but come on, shouldn’t that have to come at least at the appellate level to be binding?

Junk science can catch fire in the legal system once so-called experts are allowed to take the stand in a single trial. Prosecutors and judges in future cases cite the previous appearance as precedent. But 911 call analysis was vexing because it didn’t look like Harpster had ever actually testified.

…

[Harpster] claims that 1 in 3 people who call 911 to report a death are actually murderers.

…

His methods have now surfaced in at least 26 states, where many students embrace him like an oracle.

…

“If this were to get out,” Salerno said, “I feel like no one would ever call 911 again.”

Yeah. You don’t say?

And it’s not only 911 science.

Kelsey Piper: I was haunted by this ProPublica story about how nonsensical analysis of 911 calls is used to convict people of killing their kids. I mentioned it to a friend with more knowledge of criminal justice. “Oh,” she said casually, “all of forensics is like that”

This was @clarabcollier, who then told me dozens more depressing examples. It seems like each specific junk science eventually gets refuted, but the general process that produced them all continues at full speed.

Will MacAskill went on the Sam Harris podcast to discuss SBF and effective altruism. If Reddit is any indication, listeners did not take kindly to the story he offered.

Here are the top five base comments in order, the third edited for length:

ballysham: Listening to these two running pr for sam bankman fried is infuriating. He should have coffezilla on.

robej78: I expect excuse making from the parents of a spoiled brat, don’t have sympathy for it but I understand it.

This was an embarrassing listen though, sounded desperate and delusional, very similar to trump defenders.

deco19: The absolute ignorance on the various interviews SBF did in the time after being exposed where SBF literally put all his reasoning and views on the table. And we hear this hand-wringing response deliberating why he did this for months on end according to McCaskill.

Novogobo: Sam draws a ethical distinction between merely stealing from customers vs making bets with their money without their consent or knowledge with the intention of paying them back if you win and pocketing the gain. He just lamented that Coleman was surrounded by people on the view who were ethically deranged. THAT’S JUST STEALING WITH EXTRA STEPS!

He laments that sbf was punished too harshly, but that’s exactly the sort of behavior that has to be discouraged in the financial industry.

It’s like defending rapists who eat pussy. “Oh well it’s obvious that he intended for her to enjoy it.”

picturethisyall: McCaskill completely ignored or missed the countless pump n dumps and other fraudulent activities SBF was engaged in from Day 1. NYTimes gift article with some details.

It… doesn’t get kinder after that. Here’s the one that Sam Atis highlighted that drew my attention to the podcast.

stellar678: I’ve listened to the podcast occasionally for several years now but I’ve never sought out this subreddit before. Today though – wow, I had to make sure I wasn’t the only one whose jaw was on the floor listening to the verbal gymnastics these two went through to create moral space for SBF and the others who committed fraud at FTX.

Honestly it makes me uneasy about all the other podcast episodes where I feel more credulous about the topics and positions discussed.

Edit to say: The FTX fallout definitely tainted my feelings about Effective Altruism, but MaCaskill’s performance here made it a lot worse rather than improving things.

This caused me to listen as well. I cannot argue with the above reactions. It was a dreadful podcast both in terms of how it sounded, and in terms of what it was. This was clearly not a best attempt to understand what happened, this was an attempt to distance from, bury and excuse it. Will has clearly not reckoned with (or is pretending not to have reckoned with) the degree of fraud and theft that was baked into Alameda and FTX from the beginning. They both are not willing to face up to what centrally happened, and are essentially presenting SBF’s story that unwise bets were placed without permission by people who were in over their heads with good intentions. No.

The other failure is what they do not discuss at all. There is no talk about what others including Will (who I agree would not have wanted SBF to do what he did but who I think directly caused SBF to do it in ways that were systematically predictable, as I discuss in my review of Going Infinite) did to cause these events. Or what caused the community to generally support those efforts, or what caused the broader community not to realize that something was wrong despite many people realizing something was wrong and saying so. The right questions have mostly not been asked.

There has still been no systematic fact-finding investigation among Effective Altruists into how they acted with respect to SBF and FTX, in the wake of the collapse of FTX. In particular, there was no systematic look into why, despite lots of very clear fire alarms that SBF and FTX were fishy and shady as all hell and up to no good, word of that never got to where it needed to go. Why didn’t it, and why don’t we know why it didn’t?

This is distinct from the question of what was up with SBF and FTX themselves, where I do think we have reasonably good answers.

Someone involved in the response gave their take to Rob Bensinger. The explanation is a rather standard set of excuses for not wanting to make all this known and legible, for legal and other reasons, or for why making this known and legible would be hard and everyone was busy.

This includes the claim that a lot of top EA leaders ‘think we know what happened.’ Well, if they know, then they should tell us, because I do not know. I mean, I can guess, but they are not going to like my guess. There is also the claim that none of this is about protecting EA’s reputation; you can decide whether that claim is credible.

In better altruism news, new cause area? In Bangladesh, they got people with poor vision new pairs of glasses, so that 88.3% of the treatment group wore glasses versus 7.8% of the control group (a difference of ~80 percentage points). After eight months, this resulted in income of $47.1/month versus $35.3/month, a 33% difference (so roughly a 40% impact from the glasses themselves, once you adjust for that take-up gap), which is also enough to pay for the glasses. That is huge, and makes sense, and is presumably a pure win.
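The percentages in the glasses study can be checked directly. The 33% is the relative income difference between the groups; the 40% appears to come from dividing that by the roughly 80-point gap in glasses wearing, which is the standard Wald (instrumental-variables) adjustment for incomplete take-up. That reading of how the 40% was derived is my assumption, and the function names below are illustrative.

```python
def relative_difference(treated: float, control: float) -> float:
    """Relative gain of the treatment group over the control group."""
    return (treated - control) / control


def effect_per_complier(itt_effect: float, takeup_treat: float, takeup_control: float) -> float:
    """Wald-style scaling: intent-to-treat effect divided by the difference in take-up."""
    return itt_effect / (takeup_treat - takeup_control)


# Figures quoted above: monthly income and share of each group wearing glasses.
income_gain = relative_difference(47.1, 35.3)
per_wearer = effect_per_complier(income_gain, 0.883, 0.078)

print(f"{income_gain:.1%} intent-to-treat, {per_wearer:.1%} per additional wearer")
# prints: 33.4% intent-to-treat, 41.5% per additional wearer
```

The scaled figure comes out near 41%, consistent with the rounded 40% above, under the assumption that the glasses affect income only through being worn.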

A generous $1 billion gift from Dr. Ruth Gottesman allows a Bronx medical school, Albert Einstein College of Medicine, to go tuition-free. She even had to be talked into letting her name be known. Thank you. To all those who centrally reacted negatively on the basis that the money could have been more efficiently given away or that some other cause deserved it more? You are doing it wrong. Present the opportunity, honor the mensch.

Also seems like a good time to do a periodic reminder that we do not offer enough residency slots. Lots of qualified people want to be doctors on the margin, cannot become doctors because there is a cap on residency slots, and therefore we do not have enough doctors and healthcare is expensive and rushed and much worse than it could be. A gift that was used to enable that process, or that expanded the number of slots available, would plausibly be a very good use of funds.

Alas, this was not that, and will not solve any bottlenecks.

Eliezer Yudkowsky: Actually, more tragic than that. The donation is clearly intended to give more people access to healthcare by creating more doctors. But the actual bottleneck is on residencies, centrally controlled and choked. So this well-intended altruism will only benefit a few med students.

So basically, at worst, be this way for different donation choices:

Here is some good advice for billionaires:

Marko Jukic: The fact that outright billionaires are choosing to spend their time being irate online commentators and podcast hosts rather than, like, literally anything else productive, seems like a sign of one of the most important and unspoken sociological facts about modern America.

Billionaires are poor.

Having more money doesn’t make you wealthier or more powerful.

Apparently in America the purpose of having billions of dollars is to have job security for being a full-time podcaster or online commentator about the woke left, which, it turns out, has gone bananas.

Billions of dollars to pursue my lifelong dream of being an influencer.

My advice to billionaires:

Use your money to generously and widely fund crazy people with unconventional ideas. Not just their startup ideas to get A RETURN. Fund them without strings attached. Write a serious book.

Do not start a podcast. Do not tweet. Do not smile in photos.

If you only fund business ideas, you are only ever going to get more useless money. This is a terminal dead end.

If you want to change the world, you have to be willing to lose money. The more you lose, the better.

The modern billionaire will inevitably be expropriated by his hated enemies and lawyers. It doesn’t take a genius of political economy to see this coming.

The only solution is to pre-emptively self-expropriate by giving away your money to people you actually like and support.

One should of course also invest to make more money. Especially one must keep in mind what incentives one creates in others. But the whole point of having that kind of money is to be able to spend it, and to spend it to make things happen that would not otherwise happen, that you want.

Funding people to do cool things that don’t have obvious revenue mechanisms, being a modern day patron, whether or not it fits anyone’s pattern of charity, should be near the top of your list. Find the cool things you want, and make them happen. Some of them should be purely ‘I want this to exist’ with no greater aims at all.

I have indeed found billionaires to be remarkably powerless to get the changes they want to see in the world, due to various social constraints, the fear of how incentives would get distorted and the inability to know how to deploy their money effectively, among other reasons. So much more could be accomplished.

Not that you should give me billions of dollars to find out if I can back that up, but I would be happy to give it my best shot.

Xomedia does a deep dive into new email deliverability requirements adopted by Gmail, Yahoo and Hotmail. The biggest effective change is a requirement for a visible one-click unsubscribe button, which takes effect for Gmail on June 1. Seems great.

“A bulk sender is any email sender that sends close to 5,000 messages or more to personal Gmail accounts within a 24-hour period. Messages sent from the same primary domain count toward the 5,000 limit.”

…

April 2024: Google will start rejecting a percentage of non-compliant email traffic, and will gradually increase the rejection rate. For example, if 75% of a sender’s traffic meets requirements, Google will start rejecting a percentage of the remaining 25% of traffic that isn’t compliant.

June 1, 2024: Bulk senders must implement a clearly visible one-click unsubscribe in the body of the email message for all commercial and promotional messages.

…

Engagement: Avoid misleading subject lines, excessive personalization, or promotional content that triggers spam filters. Focus on providing relevant and valuable information when considering email content.

Keep your email spam rate below 0.3%.

Don’t impersonate email ‘From:’ headers.

[bunch of other stuff]
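For senders, the one-click requirement is usually met with two message headers: `List-Unsubscribe` (RFC 2369) pointing at an HTTPS URL, plus `List-Unsubscribe-Post: List-Unsubscribe=One-Click` (RFC 8058), alongside the visible link in the body. Below is a minimal sketch using Python’s standard `email` package. The addresses and URL are placeholders, the helper names are hypothetical, and the 5,000-message and 0.3% thresholds are taken from the excerpt above (which itself describes the former as approximate). RFC 8058 also expects a DKIM signature covering both headers, which this sketch does not do.

```python
from email.message import EmailMessage


def build_bulk_message(sender: str, recipient: str, subject: str,
                       body: str, unsubscribe_url: str) -> EmailMessage:
    """Hypothetical helper: a plain-text message carrying one-click unsubscribe headers."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    # RFC 2369: where to unsubscribe; one-click requires an HTTPS URI here.
    msg["List-Unsubscribe"] = f"<{unsubscribe_url}>"
    # RFC 8058: tells the mailbox provider it may POST to that URI with no further steps.
    msg["List-Unsubscribe-Post"] = "List-Unsubscribe=One-Click"
    # The visible unsubscribe link in the message body itself.
    msg.set_content(f"{body}\n\nUnsubscribe: {unsubscribe_url}\n")
    return msg


def is_bulk_sender(messages_in_24h: int, threshold: int = 5000) -> bool:
    """Rough check against the ~5,000 messages/day figure (counted per primary domain)."""
    return messages_in_24h >= threshold


def spam_rate_ok(spam_reports: int, delivered: int, threshold: float = 0.003) -> bool:
    """True if the user-reported spam rate is below 0.3%."""
    return delivered > 0 and spam_reports / delivered < threshold


msg = build_bulk_message("news@example.com", "reader@example.org",
                         "April update", "Hello!", "https://example.com/unsub?u=42")
print(msg["List-Unsubscribe-Post"])
```

Putting the unsubscribe target in headers, rather than only in the body, is what lets the mail client render its own unsubscribe button next to the sender’s address.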

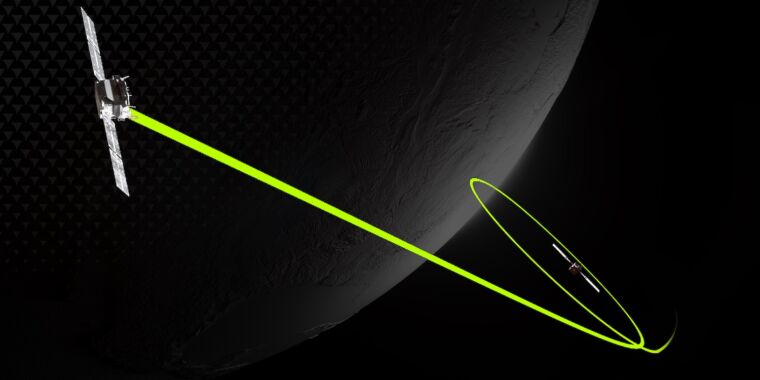

Terraform Industries claims they can use electricity and air to create carbon neutral natural gas. This in theory allows solar power to be stored and transported.

First, our innovative electrolyzer converts cheap solar power into hydrogen with current production costs at less than $2.50 per kg of H2.

…

Second, the proprietary direct air capture (DAC) system concentrates CO2 in the atmosphere today for less than $250 per ton.

…

Finally, our in-house multistage Sabatier chemical reactor ingests hydrogen and CO2, producing pipeline grade natural gas, which is >97% methane (CH4).
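The chain described above ends in the Sabatier reaction, CO2 + 4 H2 → CH4 + 2 H2O, so the quoted input prices imply a feedstock cost per kilogram of methane. A back-of-the-envelope sketch, assuming perfect stoichiometric conversion and taking the quoted prices at face value ($2.50/kg H2 and $250/t CO2, both stated as upper bounds). It ignores reactor energy, compression, capital costs and losses, so it is only the feedstock share of the cost, not a full production cost; the function name is my own.

```python
# Standard molar masses, in g/mol.
M_H2 = 2.016
M_CO2 = 44.009
M_CH4 = 16.043


def feedstock_cost_per_kg_ch4(h2_usd_per_kg: float, co2_usd_per_tonne: float) -> float:
    """Cost of the H2 and CO2 consumed per kg of CH4, assuming CO2 + 4 H2 -> CH4 + 2 H2O."""
    kg_h2_per_kg_ch4 = 4 * M_H2 / M_CH4     # about 0.50 kg of hydrogen
    kg_co2_per_kg_ch4 = M_CO2 / M_CH4       # about 2.74 kg of carbon dioxide
    h2_cost = kg_h2_per_kg_ch4 * h2_usd_per_kg
    co2_cost = (kg_co2_per_kg_ch4 / 1000.0) * co2_usd_per_tonne
    return h2_cost + co2_cost


print(f"${feedstock_cost_per_kg_ch4(2.50, 250.0):.2f} per kg CH4")  # prints $1.94 per kg CH4
```

At these prices hydrogen is roughly two-thirds of the feedstock cost, so the electrolyzer claim matters more than the direct air capture claim.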

Normally Google products slowly get worse, so it is worth noting that Chana noticed Google Docs has improved its comment search and interaction handling, although I have noticed that comment-heavy documents are now very difficult to navigate properly, and they should work on that. She also notes the unsubscribe button next to the email address when you open a mass-sent email, which is appreciated.

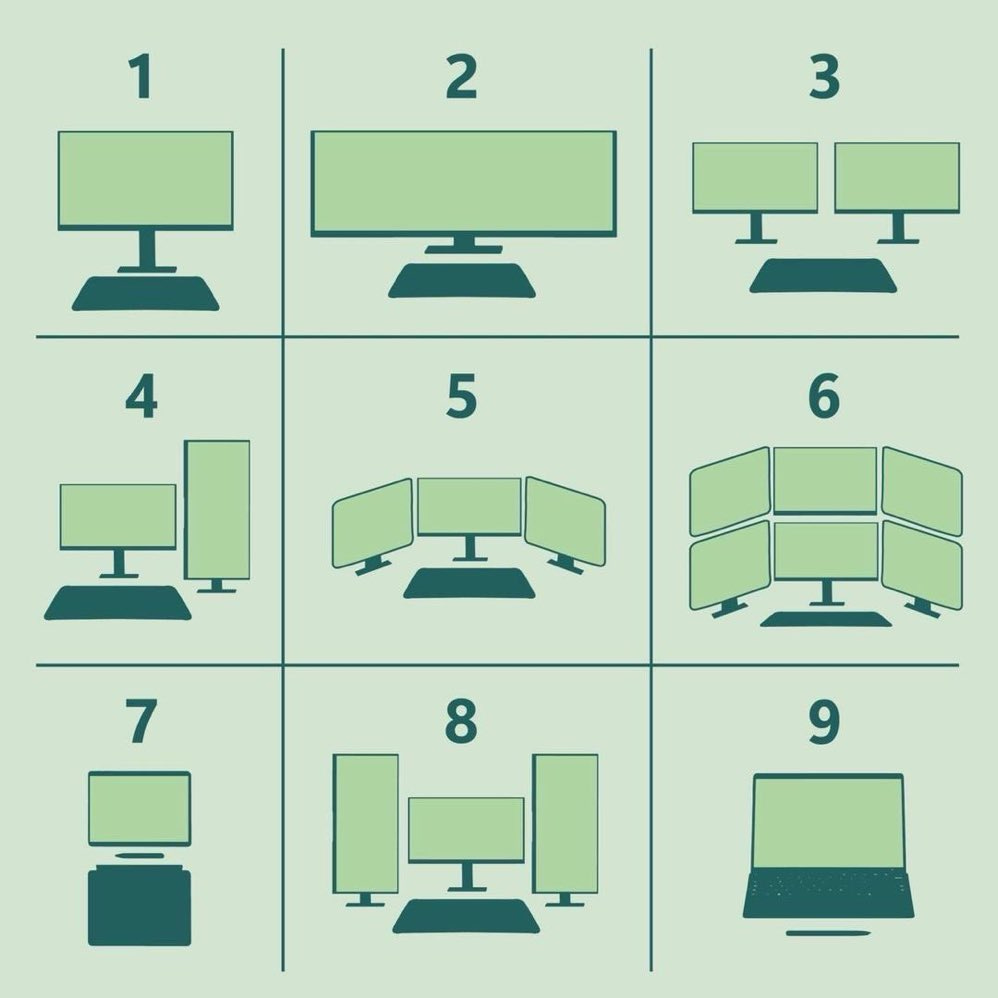

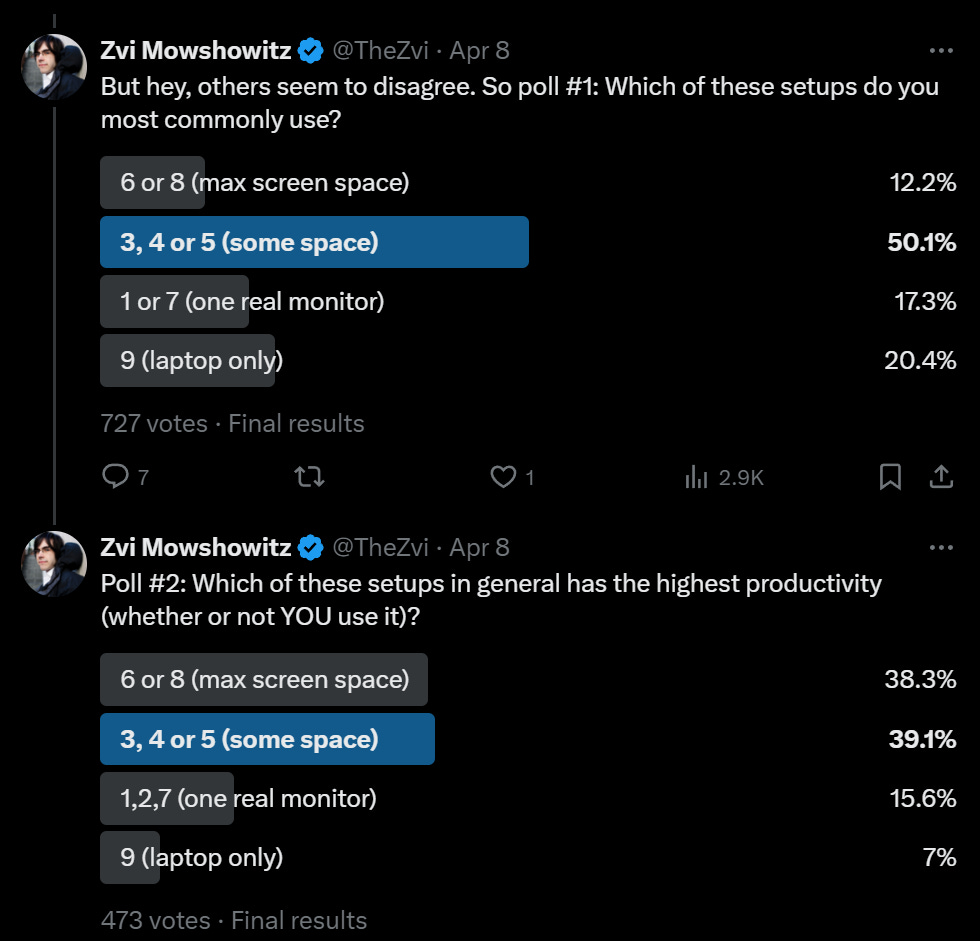

If I ever did go on Hills I’d Die On, and was getting properly into the spirit of it, this is a top candidate for that hill.

Sriram Krishnan: This is worthy of a debate.

Gaut is Doing Nothing: The most productive setup is 9 here. Change my mind.

Sriram Krishnan: 9. but my current setup is actually two separate machines next to each other with two keyboards so not represented here.

The correct answer is 8, except for a few places like trading where it is 6. You need a real keyboard and mouse, you need real space to put the various things, and some things need big monitors. Lack of screen space kills productivity.

People very much disagree about this.

The ensuing debate did convince me that there is more room for flexibility for different people to benefit from different setups.

I stand extra firm on two things:

- It is worth investing in the right setup. So the 25% of people who agree with my preference but don’t have the setup? Fix it, especially if you are on a laptop now.
- Laptop only is a huge mistake, as people mostly agreed.

I can see doing 2, 3 or 4 with a truly epic monitor, although if you have the budget and space they seem strictly worse. For 2 in particular, even with an epic monitor you want the ability to full-screen something and still have other space.

When I try working on a laptop, my productivity drops on the order of 50%. It is shockingly terrible, so much so that beyond checking email I no longer even try.

This section accidentally got left out of March, but I figured I’d still include it. At this point, the overall verdict is clearly in that the Apple Vision Pro is not ready for prime time, and we should all at least wait a generation. I still wonder.

Ming-Chi Kuo says major Apple Vision Pro upgrades are unlikely until 2027, with the focus on reducing costs rather than improving the user experience. That makes ‘buy it now’ a lot more attractive if you want to be in on this. I do plan to buy one, but I want to time it so that I get to fly with it during the two-week return window, since that will be the biggest test, although I do have several other use cases in mind.

The first actual upgrade is here, we have ‘spatial personas.’ It is definitely a little creepy, but you probably get used to it. Still a long way to go.

Garry Tan says Apple Vision Pro really is about productivity. I remain skeptical.

Alexandr Wang (CEO Scale AI): waited until a long business trip to try it out—

the Apple Vision Pro on a plane / while traveling is ridiculously good—

especially for working basically a gigantic monitor anywhere you go (plane, hotel, everywhere) double your productivity everywhere you go.

Not having a big monitor is really bad for your productivity. I’d also need a MacBook, mouse and some keyboard, but it does not take that many days of this to pay for itself even at a high price point.

Will Eden offers his notes:

Will Eden: Notes on the Apple Vision Pro

-eyes look weird but does make it feel like they’re more “present”

-it is quite heavy :-/

-passthrough is grainy, display is sharp

-definitely works as a BIG screen

-hand gestures are slightly finicky

Overall I don’t want one or think I’d use it… …on the flip side, the Quest 3 felt more comfortable and close to equivalent. Slight drawback is I could see the edges in my peripheral vision

I still don’t think I’d use it for anything other than gaming, maaaybe solo movies/TV if comfortable enough.

It’ll certainly improve, though the price point is brutal and probably only comes partially down – the question is whether it has a use case that justifies that price, especially when the Quest 3 is just $500.

Lazar Radic looks at the antitrust case against Apple and sees an increasing disconnection of antitrust action from common sense and reality. Edited for length.

It certainly seems like the core case being made is quite the overreach.

Lazar Radic: The DOJ complaint against Apple filed yesterday has led me to think, once again, about the increasing chasm that exists between antitrust theory and basic common sense & logic. I think this dissonance is getting worse and worse, to the point of mutual exclusion.

…

What worries me aren’t a couple of contrived cases brought by unhinged regulators at either side of the Atlantic, but that this marks a much broader move towards a centrally-administered economy where choices are made by anointed regulators, rather than by consumers.

…

Take this case. A lot of it doesn’t make sense to me not only as an antitrust, but as a layperson. For starters, why would the iPhone even have to be compatible with third-party products or ensure that their functionality is up to any standard – let alone the *highest*?

If I opened a chain of restaurants that became the most popular in the world and everybody only wanted to eat there, would I then have a duty to sell competitors’ food and drinks so as to not “exclude” them? Would I have to serve the DOJ’s favorite dishes?

And, to be clear, I am aware that the DOJ is saying that Apple is maintaining its iPhone market position thanks to anticompetitive practices but, quite frankly, discounting the possibility that users simply PREFER the iPhone in this day & age is ludicrous to me.

…

But in the real world, there exists no legal obligation to be productive or to use one’s resources efficiently. People aren’t punished for being idle. Yet a private company *harms* us when it doesn’t design its products the way public authorities thinks is BEST?

…

Would X be better if the length of all tweets was uncapped? Would McDonald’s be better if it also sold BK’s most popular products – like the Whopper? Would the Playstation be better if it also had Xbox, Nintendo and PC games? I don’t know, maybe. Does it matter?

The magic of antitrust, of course, is that if one can somehow connect these theoretical shortcomings to market power — no matter how tenuously — all of a sudden, one has a blockbuster case against an evil monopolist & is on the right side of history.

I am not a fan of the iPhone, the Apple ecosystem or Apple’s aggressive exclusivity on its devices. But you know what I do in response? I decline to buy their products. I have an Android phone, a Windows computer and for now no headset or watch. There is no issue. Apple is not a monopolist.

It seems crazy to say that Apple is succeeding due to the anticompetitive practice of not allowing people into the Apple store. If this is causing them to succeed more, it is not anticompetitive, it is highly competitive. If this is causing them to succeed less, then they are paying the price.

However, that does not mean that Apple is not abusing its monopoly position to collect rents or leverage its way into other businesses in violation of antitrust law. It is entirely possible both for Apple’s core ecosystem to be superior because it builds better products and for Apple to be abusing that position. And that abuse can be largely distinct from the top complaints made by a government that has little clue about the actual situation.

Indeed, that is my understanding of the situation.

Ben Thompson breaks down many reasons others are rather upset with Apple.

Apple wants a huge cut of everything any app maker makes, including on the web, and is willing to use its leverage to get it, forcing Epic and others to sue.

Ben Thompson (June 2020): I have now heard from multiple developers, both big and small, that over the last few months Apple has been refusing to update their app unless their SaaS service adds in-app purchase. If this has happened to you please email me blog @ my site domain. 100% off the record.

Multiple emails, several of which will only communicate via Signal. I’m of course happy to do that, but also think it is striking just how scary it is to even talk about the App Store.

We have now moved into the “genuinely sad” part of this saga where I am learning about apps that have been in the store for years serving the most niche of audiences being held up for what, a few hundred dollars a month?

Ben Thompson (2024): That same month Apple announced App Tracking Transparency, a thinly veiled attempt to displace Facebook’s role in customer acquisition for apps; some of the App Tracking Transparency changes had defensible privacy justifications (albeit overstated), but it was hard to not notice that Apple wasn’t holding itself to the same rules, very much to its own benefit.

…

The 11th count that Epic prevailed on required Apple to allow developers to steer users to a website to make a purchase; while its implementation was delayed while both parties filed appeals, the lawsuit reached the end of the road last week when the Supreme Court denied certiorari. That meant that Apple had to allow steering, and the company did so in the most restrictive way possible: developers had to use an Apple-granted entitlement to put a link on one screen of their app, and pay Apple 27% of any conversions that happened on the developer’s website within 7 days of clicking said link.

Many developers were outraged, but the company’s tactics were exactly what I expected…Apple has shown, again and again and again, that it is only going to give up App Store revenue kicking-and-screaming; indeed, the company has actually gone the other way, particularly with its crackdown over the last few years on apps that only sold subscriptions on the web (and didn’t include an in-app purchase as well). This is who Apple is, at least when it comes to the App Store.

This is not the kind of behavior you engage in if you do not want to get sued for antitrust violations. It also is not, as Ben notes, pertinent to the case actually brought.
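The steering rule Ben describes reduces to a simple attribution calculation: a purchase on the developer’s own website within 7 days of clicking the in-app link owes Apple 27%. A simplified sketch, assuming the window is measured from the click and includes the 7-day mark exactly (the excerpt does not say); the function name is hypothetical, and Apple’s actual terms contain details not modeled here.

```python
from datetime import datetime, timedelta

COMMISSION_RATE = 0.27                 # share of attributed web purchases, per the excerpt
ATTRIBUTION_WINDOW = timedelta(days=7)  # purchases within 7 days of the click count


def steering_commission(link_clicked_at: datetime, purchased_at: datetime, amount: float) -> float:
    """Commission owed on a web purchase under a simplified reading of the rule."""
    elapsed = purchased_at - link_clicked_at
    if timedelta(0) <= elapsed <= ATTRIBUTION_WINDOW:
        return round(amount * COMMISSION_RATE, 2)
    return 0.0


click = datetime(2024, 1, 15, 12, 0)
print(steering_commission(click, click + timedelta(days=3), 100.0))  # prints 27.0
print(steering_commission(click, click + timedelta(days=8), 100.0))  # prints 0.0
```

Note what the model makes explicit: the fee applies even though the transaction, and its payment processing, happen entirely outside the App Store.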

Apple does seem to have taken things too far with carmakers as well?

Gergely Orosz: So THIS is why GM said it will no longer support Apple CarPlay from 2026?! And build their own Android experience. Because they don’t want Apple to take over all the car’s screens as Apple demands it does so.

“Apple has told automakers that the next generation of Apple CarPlay will take over all of the screens, sensors, and gauges in a car, forcing users to experience driving as an iPhone-centric experience if they want to use any of the features provided by CarPlay. Here too, Apple leverages its iPhone user base to exert more power over its trading partners, including American carmakers, in future innovation.”

A friend in the car industry said that the next version of CarPlay *supposedly* wanted access to all sensory data. Their company worries Apple collects this otherwise private data to build their own car – then put them out of business. And how CarPlay is this “Trojan horse.”

Even assuming Apple has no intention of building a car, taking over the entire car to let users integrate their cell phone is kind of crazy. It seems like exactly the kind of leveraging of a monopoly that antitrust is designed to prevent, and also you want to transform the entire interface for using a car? Makes me want to ensure my car has as many physical knobs on it as possible. Then again, I also want my television to have them.

Instead, what is the DOJ case about?

1. Apple suppresses ‘Super Apps,’ meaning apps with mini-apps within them. As Ben points out, allowing them would break the rule that you install things through Apple.

2. Apple suppresses ‘Cloud Streaming Game Apps,’ requiring each game to be its own app. Ben finds this argument strong, and notes Apple is compromising on it, so long as you can buy the service in-app.

3. Apple forces Android users into green bubbles in iMessage by, basically, not building an Android client for it? I agree with Ben that this claim is the dumbest one.

4. Apple doesn’t fully integrate third-party watches or open up all its tech to outsiders.

5. Apple does not allow third-party digital wallets, which DOJ bizarrely claims creates prohibitive phone-switching costs.

I can see the case for #1, #2 and #5 if I squint. Apple’s behavior in these cases makes perfect sense to me, and I see all of this as weaksauce, but I can see why it might be objectionable and might require adjustments on the margin. I find #3 and #4 profoundly stupid.

Ben thinks the primary motivation for the lawsuit is the App Store, with its 30% tax and the enforcement thereof, especially its anti-steering-to-websites stance, and that as a result Apple faces a technically unrelated lawsuit that threatens its core value propositions, because DOJ does not understand how any of this works. I am inclined to agree.

Ben thinks Apple’s hardline App Store stance is a mistake. But Apple makes so much money from it, in an equilibrium that could prove fragile if disrupted, that I can see it being worth all the costs and risks they are running. Nothing lasts forever.

Jess Miers: CA lawmakers bristle at opposition to their bills unless you’ve met with every involved office + consultant. Yet, they continuously flood the zone with harmful bills.

The “kiss the ring” protocol enables CA lawmakers to steamroll over our rights without considering pushback.

If you’re spending more time as a policymaker imagining clever schemes to sneak your bills into law instead of working w/experts and constituents to craft something better, you’re bad at your job and should probably find something else to do that doesn’t waste taxpayer dollars.🤷🏻♀️

We’re tracking ~100 unconstitutional / harmful bills in the CA Leg rn. If we had to meet with every staffer involved w/each bill *before* registering our opposition, we’d miss numerous bills solely due to impossible deadline constraints.

To CA, that’s a feature, not a bug.

I asked her how to tell which bills might actually pass and therefore deserve our attention, since most bills introduced reliably go nowhere. I hear a lot of crying wolf from the usual suspects about unconstitutional and terrible bills. Most of the time the bills do indeed seem unconstitutional and terrible, even though the objections to AI bills, and my own close reading of other tech bills, often give me Gell-Mann Amnesia.

But we do not have time for every bad bill. So again, watchdogs doing the Lord’s work, please help us know when we should actually care.

Accusation that Facebook did a man-in-the-middle attack using their VPN service to steal data from other apps?

Instagram seems to be doing well.

Tanay Jaipuria: Instagram revenue was just disclosed for the first time in court filings.

2018: $11.3B

2019: $17.9B

2020: $22.0B

2021: $32.4B

It makes more in ad revenue than YouTube (and likely at much higher gross margins!)

It is crazy to think things like this are exploding in size in the background, in ways I never notice at all. Instagram has never appealed to me, and to the extent I see use cases it seems net harmful.
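To put rough numbers on ‘exploding in size,’ here is a quick Python sketch computing year-over-year growth and the compound annual growth rate from the four figures quoted above. The revenue figures are the only inputs; the growth rates are my own arithmetic on them, not something stated in the filings.

```python
# Instagram revenue in billions of USD, as quoted from the court filings.
revenue = {2018: 11.3, 2019: 17.9, 2020: 22.0, 2021: 32.4}

years = sorted(revenue)

# Year-over-year growth for each consecutive pair of years.
for prev, curr in zip(years, years[1:]):
    growth = revenue[curr] / revenue[prev] - 1
    print(f"{prev}->{curr}: {growth:+.1%}")

# Compound annual growth rate across the whole span (three years).
span = years[-1] - years[0]
cagr = (revenue[years[-1]] / revenue[years[0]]) ** (1 / span) - 1
print(f"CAGR {years[0]}-{years[-1]}: {cagr:.1%}")
```

On those inputs, growth runs roughly +58%, +23% and +47% in successive years, or about 42% a year compounded, with the slowest step from 2019 to 2020.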

Twitter use is down more than 20% in the USA since November 2022 and 15% worldwide, far more than its major rivals. Those rivals are sites like Facebook and Instagram, and very much not clones like Threads or Bluesky, which are getting very little traction.

For now Twitter is still the place that matters, but that won’t last forever if this trend continues.

Brandon Bradford: Spend at least 25% of your online time off of Twitter, and you’ll realize that the outrage here has a tinier and tinier influence by the day. Super users are more involved but everyone else is logging in less often.

Noah Smith: This is true. This platform is designed to concentrate power users and have us talk to each other, so we power users don’t always feel it when the broader user base shrinks. But it is shrinking.

Julie Fredrickson: Agreed. The only platform that still has people with real power paying attention to power users is Twitter. None of the media platforms have managed to break away from their inherent worldview concentration (NYP vs NYT) so we have no replacement for the thinking man yet.

It’s my general belief that the extremists misjudge who has power here, and in trying to listen to all perspectives, we only entrench the horseshoe theory people.

Twitter has several mechanisms of action. Outrage or piling on was always the most famous one, but was always one of many. The impact of such outrage mobs is clearly way down. That is a good thing. The impact of having actually reasonable conversations also seems to be down, but it is down much less.

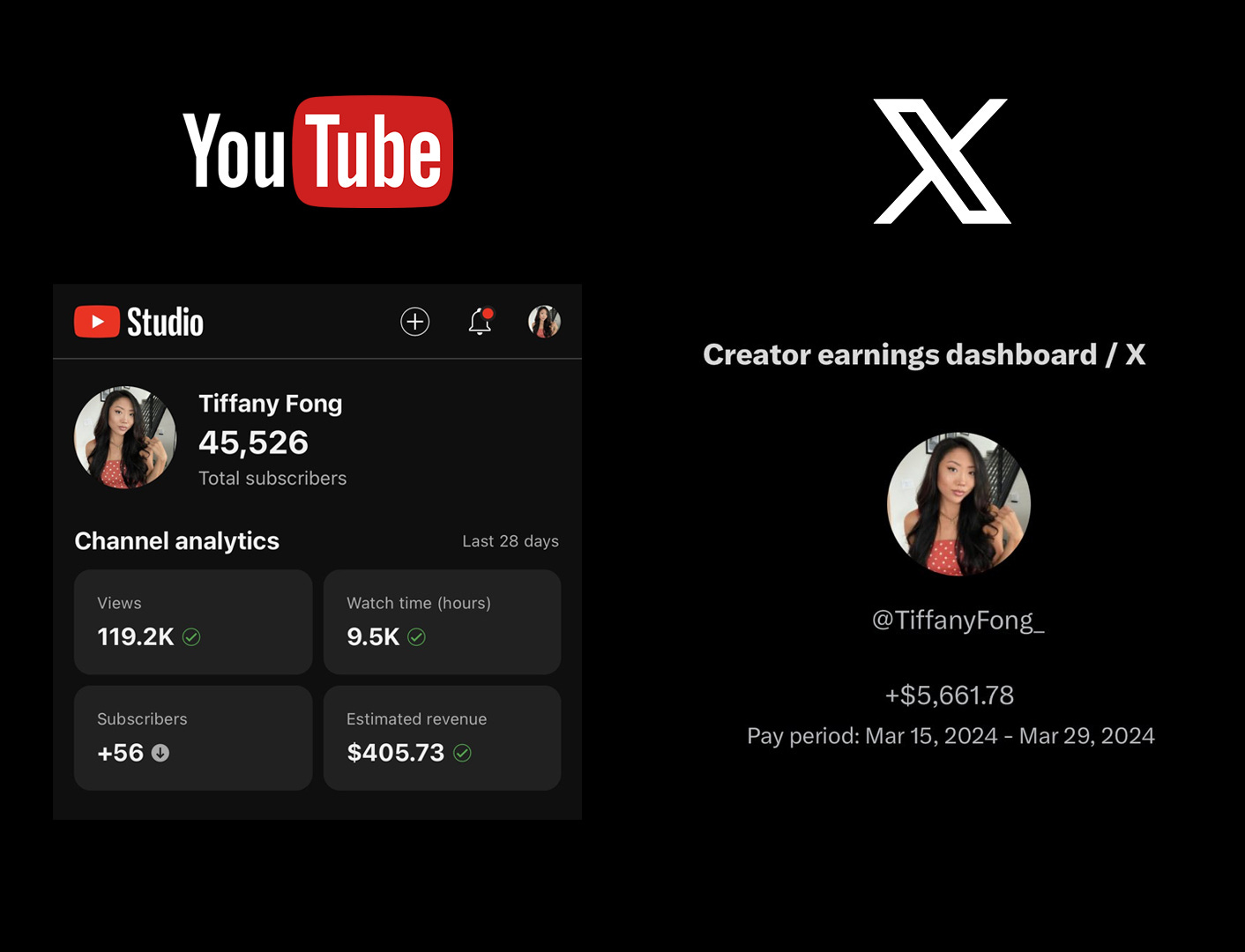

How much does YouTube pay creators? Here’s a concrete example (link to her YT). Her videos are largely about covering the aftermath of FTX.

So for 10,000 hours of watch time she got $400, or 4 cents per hour, alternatively 0.4 cents per view. That seems like a very difficult way to make a living.
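To make the per-hour and per-view numbers concrete, here is a back-of-the-envelope check in Python. It uses only the $400, the 10,000 hours and the 0.4 cents per view quoted above; the view count and average minutes per view are implied by those figures rather than reported anywhere, so treat them as rough.

```python
# Figures quoted above: $400 paid for 10,000 hours of watch time,
# and a stated rate of 0.4 cents per view.
payout_cents = 400 * 100
watch_hours = 10_000
cents_per_view = 0.4

# Payout per hour of watch time.
cents_per_hour = payout_cents / watch_hours

# Views implied by the per-view rate (an inference, not a reported number).
implied_views = payout_cents / cents_per_view

# Average watch time per view implied by the two figures together.
minutes_per_view = watch_hours * 60 / implied_views

print(f"{cents_per_hour:.1f} cents per watch hour")
print(f"{implied_views:,.0f} implied views")
print(f"{minutes_per_view:.1f} implied minutes watched per view")
```

Taken together, the two rates imply roughly 100,000 views averaging about six minutes each; if either quoted rate is rounded, those implied figures shift accordingly.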

What about her numbers on Twitter? She has 116k followers, but she punches way above that. Her view counts are often in the high six figures, and she posts frequently, including the same videos. So I do not think this reflects a very different payment scheme; it reflects that she has much better reach on Twitter. Twitter also seems like a very difficult way to make a living.

Uri Berliner, a 25-year veteran of NPR and a classic NPR-style person, says NPR lost its way after Trump won the 2016 election, then doubled down after 2020. Eyes Lasho here offers some highlights.

St. Rev Dr. Rev: As a former NPR listener, it’s interesting to read someone on the inside talk about what the hell happened to it. The real meat doesn’t come until halfway through the article, though. Short version: it was malice from the top, not stupidity.

Assuming the story is remotely accurate, major thanks to Uri Berliner for writing this. This was very much not a free action, and it took guts.

I believe it is, because the story matches my observations as a former listener. As Ross Douthat says, if you have listened to NPR in the past five years, you know, and the massive audience tilt to the far left is unsurprising.

My family listened to NPR all the time growing up, and I continued to rely on them as a main news source for a long time. ‘Listen to the news’ meant NPR.

The first phase, which started in 2017, was annoying but tolerable. Yes, NPR was clearly taking a side on some of the standard side-taking stories, like Trump and Russia, Biden and the laptop, or Covid origins, the examples used here.

But that did not in practice interfere much with the news, and was easy to correct for. I think leading with that kind of ‘red meat for the base’ misses what matters.

The second phase, which seemed to explode in intensity in 2020, was different. It was one thing for NPR to take a relatively left-wing perspective on the events it was covering, or even to lean somewhat more into that. That is mostly fine. I know how to correct for that perspective. But in 2020, two things changed.

The perspective completed its shift from the moderate, nerdy, left-wing ‘traditional NPR’ perspective to one much farther left.

And also they let that perspective entirely drive what they considered news, or what they considered worth covering in any non-news way as well. Every single story, every single episode of every show, even shows that were not political or news in any way, would tie into the same set of things.

I still listen to Wait, Wait, Don’t Tell Me, but in practice I have otherwise entirely abandoned NPR. My wife will still put it on when she wants to listen to news because radio news is to my knowledge a wasteland with nothing better, and the running joke is if I walk in the story is going to somehow be intersectional every single time.

Could they turn this around? I think absolutely, there is still a lot of great talent and lots of goodwill and organizational capacity. All need not be lost.

They recently gave the new CEO position to Katherine Maher. While I see why some are rushing to conclusions based on what she posted in 2020, I checked her Wikipedia page and her Twitter feed for the last few years, and if you don’t look back at 2020 it seems like the timeline of a reasonable person. So we shall see.

While some complain it is too violent and bloody, Netflix’s adaptation of The Three Body Problem understates the depths of the Cultural Revolution.

I have also been told it flinches away from the harsh game-theoretic worldview of the books later on, which would be a shame. The books seem unfilmable in other ways, but if you are not going to attempt to do the thing, then why bother?

So far I have not watched it, although I probably will eventually. You can also read my old extensive book review of the series here.

Liz Miele’s new comedy special Murder Sheets is out. I was there for the taping and had a great time. Someone get her a Netflix special.

Scott Sumner’s 2024 Q1 movie reviews. As usual, he is always right, yet I will not see these movies.

Margot Robbie to produce the only movie based on the board game Monopoly.

Culture matters, and television shows can have real cultural impacts. The classic example cited is 16 & Pregnant, which reduced teen births 4.3% in its first 18 months after airing, and Haus Cole cites Come With Me as inspiring a nine-fold increase (to 840k) in enrollment in adult literacy courses.

Random Lurker: Perhaps 36 & Can’t Get Pregnant could be a winner in our baby bust times. Show couples in their thirties and forties going through fertility struggles with realistic numbers on how many succeed and discussing how they got to this place.

One does not want to mandate the cultural content of media, but we should presumably still keep it in mind, especially when recommending things or letting our children watch them, or deciding what to reward with our dollars.

Coin flips are 51% to land on the side they start on, and appear to be exactly 50% when starting on a random side for all coins tested. I agree with the commenter that the method here, which involves catching the coin in midair, is not good form.

Michael Flores on agency in Magic.

Reid Duke on basics of protecting yourself against cheaters in Magic.

Paulo Vitor Damo da Rosa reminds us, in Magic, never give your opponents a choice. If they gave you a choice but didn’t have to, be deeply suspicious. As he notes, at sufficiently low levels of play this stops applying. But if the players are good, yes. The same is true of other types of opponents in other games.

How to flip a Chaos Orb like a boss.

Should you play the ‘best deck’ or the one you know best? Paulo goes over some of the factors. You care about what you will win with, not what is best in the abstract, and you only have so much time, which might also be split if there are multiple formats. So know thyself: often it is best to lock in early on something you can master, as long as it is still competitive. If broken deck is broken, so be it. Otherwise, knowing how to sideboard well and play at the top level is worth a lot. Both the learning costs and the margins are bigger for more complex decks and smaller for easier ones, so adjust accordingly. And of course, if you have goals for the event beyond winning it, don’t forget those. Try to play a variety of decks.

For limited, Paulo likes to remain open and take what comes, but notices some people like to focus on a couple of strategies. I was very much a focused drafter. If you are a true draft master, up against other strong players who know the format well, with unlimited time to prepare, you usually want to be open to anything. In today’s higher-stakes tournaments, however, time is at a premium for everyone: you don’t have the time to get familiar with all strategies, your practice time trades off with constructed, and your opponents will be predictably biased. It isn’t like an old-school full-limited tournament with lots of practice time.

So yes, you want to be flexible, and you want to get as much skill as possible everywhere and know the basics of all strategies. But I say you should mostly know what you want as your A plan and your B plan, and bias yourself pretty strongly. I’ve definitely been burned by this, either because I had a weird or uncooperative seat or because I guessed wrong. But I’ve also been highly rewarded for it many other times. Remember that variance is your friend.

Paulo covers a lot, but I think there are a few key considerations he did not mention.

The first key thing is that there is more to Magic than winning or prizes. What will you enjoy playing and practicing? What do you want to remember yourself having played? What story do you want to experience and tell? What history do you want to make?