Hopefully, anyway. Nvidia has a new chip.

Also Altman has a new interview.

And most of Inflection has new offices inside Microsoft.

- Introduction.
- Table of Contents.
- Language Models Offer Mundane Utility. Open the book.
- Clauding Along. Claude continues to impress.
- Language Models Don’t Offer Mundane Utility. What are you looking for?
- Fun With Image Generation. Stable Diffusion 3 paper.
- Deepfaketown and Botpocalypse Soon. Jesus Christ.
- They Took Our Jobs. Noah Smith has his worst take and commits to the bit.
- Generative AI in Games. What are the important dangers?
- Get Involved. EU AI office, IFP, Anthropic.
- Introducing. WorldSim. The rabbit hole goes deep, if you want that.
- Grok the Grok. Weights are out. Doesn’t seem like it matters much.
- New Nvidia Chip. Who dis?
- Inflection Becomes Microsoft AI. Why buy companies when you don’t have to?
- In Other AI News. Lots of other stuff as well.
- Wait Till Next Year. OpenAI employees talk great expectations a year after GPT-4.
- Quiet Speculations. Driving cars is hard. Is it this hard?
- The Quest for Sane Regulation. Take back control.
- The Week in Audio. Sam Altman on Lex Fridman. Will share notes in other post.
- Rhetorical Innovation. If you want to warn of danger, also say what is safe.
- Read the Roon. What does it all add up to?
- Pick Up the Phone. More good international dialogue on AI safety.
- Aligning a Smarter Than Human Intelligence is Difficult. Where does safety lie?
- Polls Show People Are Worried About AI. This week’s is from AIPI.
- People Are Worried About AI Killing Everyone. Elon Musk, but, oh Elon.
- Other People Are Not As Worried About AI Killing Everyone. Then there’s why.
- The Lighter Side. Everyone, reaping.

Ethan Mollick on how he uses AI to aid his writing. The central theme is ‘ask for suggestions in particular places where you are stuck’ and that seems right for most purposes.

Sully is predictably impressed by Claude Haiku, says it offers great value and speed, and is really good with images and long context, suggests using it over GPT-3.5. He claims Cohere Command-R is the new RAG king, crushing it with citations and hasn’t hallucinated once, while writing really well if it has context. And he thinks Hermes 2 Pro is ‘cracked for agentic function calling,’ better for recursive calling than GPT-4, but 4k token limit is an issue. I believe his reports but also he always looks for the bright side.

Claude does acausal coordination. This was of course Easy Mode.

Claude also successfully solves counterfactual mugging when told it is a probability theorist, but not if it is not told this. Prompting is key. Of course, this also presumes that the user is telling the truth sufficiently often. One must always watch out for that other failure mode, and Claude does not consider the probability the user is lying.

Amr Awadallah notices self-evaluated reports that Cohere Command-R has a very low hallucination rate of 3.7%, below that of Claude Sonnet (6%) and Gemini Pro (4.8%), although GPT-3.5-Turbo is 3.5%.

From Claude 3, describe things at various levels of sophistication (here described as IQ levels, but domain knowledge seems more relevant to which one you will want in such spots). In this case they are describing SuperFocus.ai, which provides custom conversational AIs that claim to avoid hallucinations by drawing on a memory bank you maintain. However, when looking at it, it seems like the ‘IQ 115’ and ‘IQ 130’ descriptions tell you everything you need to know, and the only advantage of the harder to parse ‘IQ 145’ is that it has a bunch of buzzwords and hype attached. The ‘IQ 100’ does simplify and drop information in order to be easier to understand, but if you know a lot about AI you can figure out what it is dropping very easily.

Figure out whether a resume indicates the skills you need.

Remember that random useless fact you learned in school for no reason.

Help you with understanding and writing, Michael Nielsen describes his uses.

Michael Nielsen: Dozens of different use cases. Several times this morning: terminology improvement or solving single-sentence writing problems. I often use it to talk over problems (sometimes with Whisper, while I walk). Cleaning up brainstorming (usually with Otter). It’s taught me a lot about many subjects, especially protein biology and history, though one needs to develop some expertise in use to avoid hallucination. Modifying the system ChatGPT prompt so it asks me questions and is brief and imaginative has also been very helpful (especially the questions) – makes it more like a smart colleague.

Another common use case: generating lists of ideas. I’ll ask it for 10 ideas of some specified type, then another 10, etc. Most of the ideas are usually mediocre or bad, but I only need one to get me out of a rut. (Also: much like with a colleague.)

Also: very handy for solving all sorts of coding and debugging and computer problems; enough so that I do quite a bit more of this kind of thing. Though again: care is sometimes needed. It suggested I modify the system registry once, and I gently suggested I was a bit nervous about that. It replied that on second thought that was probably wise of me…

Something I don’t do: use it to generate writing. It baffles me that people do this.

It does not baffle me. People will always look for the quickest and easiest path. Also, if you are not so good at writing, or your goal in writing is different, it could be fine.

On the below: All true, I find the same, the period has already begun for non-recent topics, and yes this is exactly the correct vibes:

Paul Graham: Before AI kills us there will at least be a period during which we’re really well-informed, if we want to be. I mainly use it for looking things up, and because it works so much better than Google for this, I look a lot more things up.

Warn you not to press any buttons at a nuclear power plant. Reasonable answers, I suppose.

Help you in an open book test, if they allow it.

David Holz (founder, MidJourney): “I don’t want a book if I can’t talk to it” feels like a quote from the relatively near future.

Presumably a given teacher is only going to fall for that trick at most once? I don’t think this play is defensible. Either you should be able to use the internet, or you shouldn’t be able to use a local LLM.

Write the prompt to write the prompt.

Sully Omarr: No one should be hand writing prompts anymore.

Especially now more than ever, with how good Claude is at writing

Start with a rough idea of what you want to do and then ask for improvements like this:

Prompt:

“I have a rough outline for my prompt below, as well as my intended goal. Use the goal to make this prompt clearer and easier to understand for a LLM.

your goal here

original

”

You’d be surprised with how well it can take scrappy words + thoughts and turn it into a nearly perfectly crafted prompt.

tinkerbrains: I am using opus & sonnet to write midjourney prompts and they are doing exceptionally well. I think soon this will transform into what wordpress became for web development. There will be democratized (drag & drop style) AI agent building tools with inbuilt prompt libraries.

I would not be surprised, actually, despite not having done it. It is the battle between ‘crafting a bespoke prompt sounds like a lot of work’ and also ‘generating the prompt to generate the prompt then using that prompt sounds like a lot of work.’

The obvious next thing is to create an automated system, where you put in low-effort prompts without bothering with anything, and then there is scaffolding that queries the AI to turn that into a prompt (perhaps in a few steps) and then gives you the output of the prompt you would have used, with or without bothering to tell you what it did.
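A minimal sketch of what that scaffolding could look like, written against an abstract `llm` callable rather than any particular vendor API. The function names, the `<goal>` and `<draft>` tags, and the `rounds` parameter are all illustrative assumptions; only the wording of the meta-prompt is adapted from the template quoted above.

```python
from typing import Callable, Tuple

# Meta-prompt adapted from the template quoted above. The <goal>/<draft> tags
# and the "reply with the improved prompt only" line are assumptions.
META_PROMPT = (
    "I have a rough outline for my prompt below, as well as my intended goal. "
    "Use the goal to make this prompt clearer and easier to understand for an LLM. "
    "Reply with the improved prompt only.\n\n"
    "<goal>\n{goal}\n</goal>\n\n"
    "<draft>\n{draft}\n</draft>"
)


def build_meta_prompt(goal: str, draft: str) -> str:
    """Wrap a low-effort draft and its goal in the prompt-improvement request."""
    return META_PROMPT.format(goal=goal.strip(), draft=draft.strip())


def run_with_refinement(
    goal: str,
    draft: str,
    llm: Callable[[str], str],  # hypothetical: takes a prompt, returns text
    rounds: int = 1,
) -> Tuple[str, str]:
    """Refine the draft `rounds` times, then run the refined prompt.

    Returns (answer, refined_prompt) so the caller can choose whether to show
    the user what prompt was actually used.
    """
    prompt = draft
    for _ in range(rounds):
        prompt = llm(build_meta_prompt(goal, prompt)).strip()
    answer = llm(prompt)
    return answer, prompt
```

Passing the model in as a plain callable keeps the sketch independent of any client library, and returning the refined prompt alongside the answer covers both the "tell you what it did" and the "don’t bother" variants.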

Using Claude to write image prompts sounds great, so long as you want things where Claude won’t refuse. Or you can ask for the component that is fine, then add in the objectionable part later, perhaps?

A lot of what LLMs offer is simplicity. You do not have to be smart or know lots of things in order to type in English into a chat window. As Megan McArdle emphasizes in this thread, the things that win out usually are things requiring minimal thought where the defaults are not touched and you do not have to think or even pay money (although you then pay other things, like data and attention). Very few people want customization or to be power users.

Who wants to run a personal-relationship-AI company that takes direction from Eliezer Yudkowsky? As he says, he has better things to do, but I bet he’d be happy to tell you what to do if you are willing to implement it. Divia Eden has some concerns about the plan.

Write your CS ‘pier review’.

Transform the rule book of life so you can enjoy reading it, and see if there is cash.

Near: Underused strategy in life! [quotes: Somebody thought, “well this rulebook is long and boring, so probably nobody has read it all the way through, and if I do, money might come flying out.”]

Patrick McKenzie: I concur. Also the rule book is much more interesting than anyone thinks it is. It’s Dungeons and Dragons with slightly different flavor text.

If you don’t like the flavor text, substitute your own. (Probably only against the rules a tiny portion of the time.)

…

Pedestrian services businesses are one. I know accountants in Tokyo that are Silicon Valley well-off, not Tokyo well-off, on the basis that nobody doing business internationally thinks reading Japanese revenue recognition circulars is a good use of their time.

Ross Rheingans-Yoo: “If you don’t like the flavor text, substitute your own.” can be an extremely literal suggestion, fwiw.

“This is 26 USC 6050I. Please rewrite it, paragraph for paragraph, with a mechanically identical description of [sci-fi setting].”

First shot result here.

A very clear pattern: Killer AI features are things you want all the time. If you do it every day, ideally if you do it constantly throughout the day, then using AI to do it is so much more interesting. Whereas a flashy solution to what Tom Blomfield calls an ‘occasional’ problem gets low engagement. That makes sense. Figuring out how and also whether to use, evaluate and trust a new AI product has high overhead, and for the rarer tasks it is usually higher not lower. So you would rather start off having the AIs do regularized things.

I think most people use the chatbots in similar fashion. We each have our modes where we have learned the basics of how to get utility, and then slowly we try out other use cases, but mostly we hammer the ones we already have. And of course, that’s also how we use almost everything else as well.

Have Devin go work for hire on Reddit at your request. Uh oh.

Min Choi has a thread with ways Claude 3 Opus has ‘changed the LLM game,’ enabling uses that weren’t previously viable. Some seem intriguing, others do not, the ones I found exciting I’ll cover on their own.

Expert coding is the most exciting, if true.

Yam Peleg humblebrags that he never used GPT-4 for code, because he’d waste more time cleaning up the results than it saved him, but says he ‘can’t really say this in public’ (while saying it in public) because nearly everyone you talk to will swear by GPT-4’s time saving abilities. As he then notices, skill issue, the way it saved you time on doing a thing was if (and only if) you lacked knowledge on how to do the thing. But, he says, highly experienced people are now coming around to say Claude is helping them.

Brendan Dolan-Gavitt: I gave Claude 3 the entire source of a small C GIF decoding library I found on GitHub, and asked it to write me a Python function to generate random GIFs that exercised the parser. Its GIF generator got 92% line coverage in the decoder and found 4 memory safety bugs and one hang.

…

As a point of comparison, a couple months ago I wrote my own Python random GIF generator for this C program by hand. It took about an hour of reading the code and fiddling to get roughly the same coverage Claude got here zero-shot.
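For a sense of what such a generator involves, here is a minimal sketch of a structure-aware random GIF generator. It is not the code Claude produced, which was tailored to the specific decoder; it is a generic illustration that follows the standard GIF container layout (header, logical screen descriptor, optional color tables, extension and image blocks, trailer). The function names, size limits and probabilities are arbitrary assumptions.

```python
import random
import struct


def rand_bytes(rng: random.Random, n: int) -> bytes:
    return bytes(rng.randrange(256) for _ in range(n))


def sub_blocks(rng: random.Random, payload: bytes) -> bytes:
    """Frame payload as GIF data sub-blocks: a length byte (1..255), that many
    data bytes, repeated, then a zero-length terminator block."""
    out = bytearray()
    i = 0
    while i < len(payload):
        chunk = payload[i:i + rng.randint(1, 255)]
        out.append(len(chunk))
        out += chunk
        i += len(chunk)
    out.append(0)
    return bytes(out)


def random_gif(seed: int, max_blocks: int = 8) -> bytes:
    """Build one structurally plausible, semantically random GIF file."""
    rng = random.Random(seed)
    out = bytearray(rng.choice([b"GIF87a", b"GIF89a"]))

    # Logical screen descriptor: width, height, packed flags, background
    # color index, pixel aspect ratio (7 bytes, little-endian).
    has_gct = rng.random() < 0.8
    gct_bits = rng.randrange(8)
    packed = (int(has_gct) << 7) | (rng.randrange(8) << 4) | gct_bits
    out += struct.pack("<HHBBB", rng.randint(0, 64), rng.randint(0, 64),
                       packed, rng.randrange(256), rng.randrange(256))
    if has_gct:
        # A color table holds 2 ** (bits + 1) RGB triples.
        out += rand_bytes(rng, 3 * 2 ** (gct_bits + 1))

    for _ in range(rng.randint(0, max_blocks)):
        if rng.random() < 0.5:
            # Extension block: introducer 0x21, a label, then sub-blocks.
            # Known labels are favored, but arbitrary ones are tried too.
            label = rng.choice([0xF9, 0xFE, 0x01, 0xFF, rng.randrange(256)])
            out += bytes([0x21, label])
            out += sub_blocks(rng, rand_bytes(rng, rng.randint(0, 40)))
        else:
            # Image descriptor: separator 0x2C, left, top, width, height, flags.
            has_lct = rng.random() < 0.3
            lct_bits = rng.randrange(8)
            flags = (int(has_lct) << 7) | (rng.randrange(2) << 6) | lct_bits
            dims = [rng.randint(0, 64) for _ in range(4)]
            out += b"\x2c" + struct.pack("<HHHHB", *dims, flags)
            if has_lct:
                out += rand_bytes(rng, 3 * 2 ** (lct_bits + 1))
            # LZW minimum code size: valid values are 2..8, so drawing from
            # 0..12 also probes range checks. The "compressed" data is random
            # bytes, not a valid LZW stream.
            out.append(rng.randint(0, 12))
            out += sub_blocks(rng, rand_bytes(rng, rng.randint(0, 200)))

    if rng.random() < 0.9:
        out.append(0x3B)  # trailer; occasionally omitted to test truncation
    return bytes(out)
```

Seeding each file makes crashes reproducible: write `random_gif(seed)` to disk for a range of seeds, run the decoder on each (ideally built with a memory sanitizer), and keep the seeds that crash or hang.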

Similarly, here Sully Omarr says he feeds Claude a 3k line program across three files, and it rewrites the bugged file on the first try with perfect style.

Matt Shumer suggests a Claude 3 prompt for making engineering decisions, says it is noticeably better than GPT-4. Also this one to help you ‘go from an idea to a revenue-generating business.’

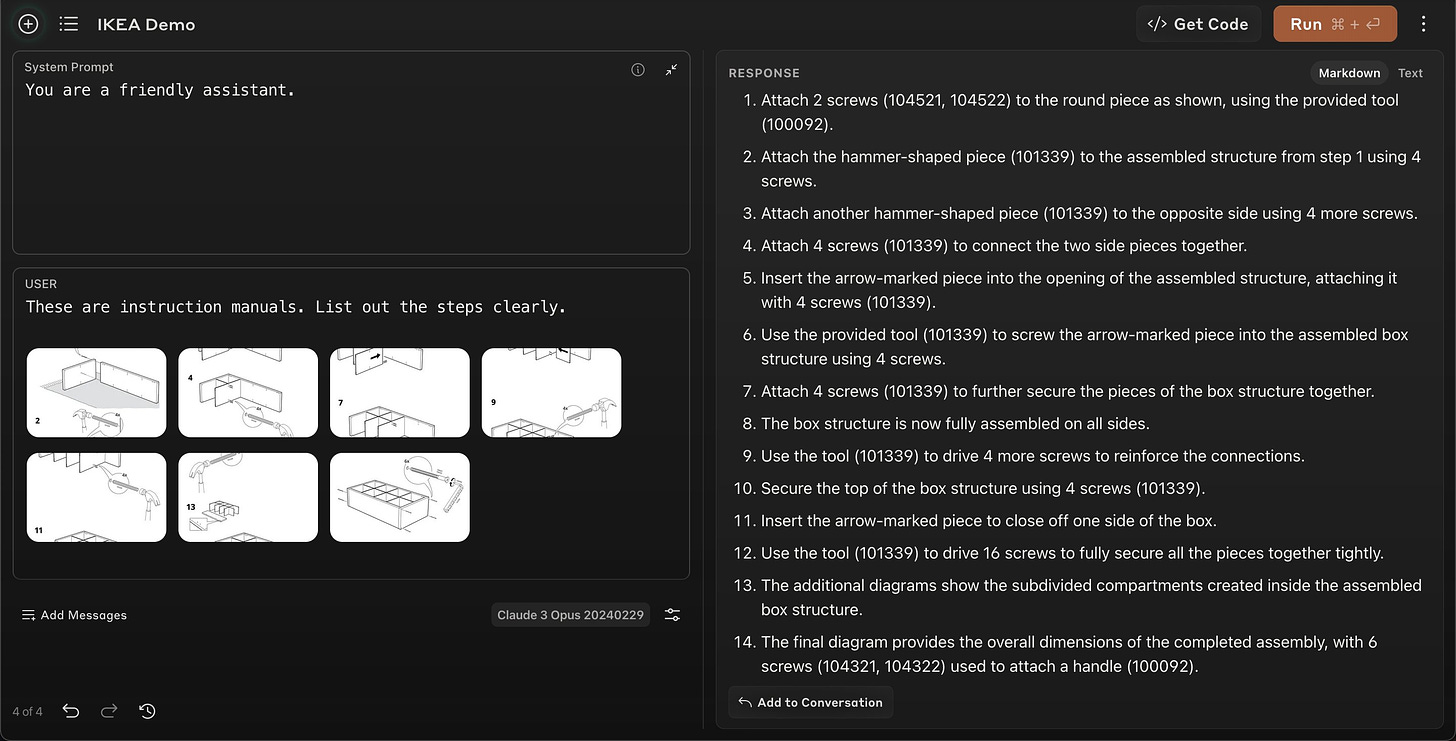

Gabriel has it interpret an IKEA manual, a task GPT-4 is classically bad at doing.

Kevin Fisher says calling Claude an AGI is ‘an understatement.’ And there are lots of galaxy brain interactions you can find from Janus. If you try to get Claude to act as if it is self-aware you get some very interesting interactions.

The first tokenizer for Claude.

This is the big divide. Are you asking what the AI can do? Or are you asking what the AI cannot do?

John David Pressman: “If you spend more time making sure it doesn’t do something stupid, it’ll actually look pretty smart.”

People don’t evaluate LLMs based on the smartest things they can do, but the dumbest things they can do. This causes model trainers to make them risk averse to please users.

In the case of LLMs there are more like five modes?

If your goal is to ask what it cannot do in general, where it is not useful, you will always find things, but you will notice that what you find will change over time. Note that every human has simple things they never learned to do either. This is the traditional skeptic mode.

If your goal is to ask for examples where the answer is dumb, so you can then say ‘lol look at this dumb thing,’ you will always find them. You would also find them with any actual human you could probe in similar fashion. This is Gary Marcus mode.

If your goal is to ask how good it is doing against benchmarks or compare it to others, you will get a number, and that number will be useful, especially if it is not being gamed, but it will tell you little about what you will want to do or others will do in practice. This is the default mode.

If your goal is to ask how good it is in practice at doing things you or others want to do, you will find out, and then you double down on that. This is often my mode.

If your goal is to ask if it can do anything at all, to find the cool new thing, you will often find some very strange things. This is Janus mode.

Could an AI replace all music ever recorded with Taylor Swift covers? It is so weird the things people choose to worry about as the ‘real problem,’ contrasted with ‘an AI having its own motivations and taking actions to fulfil those goals’ which is dismissed as ‘unrealistic’ despite this already being a thing.

And the portions are so small. Karen Hao writes about how AI companies ‘exploit’ workers doing data annotation, what she calls the ‘lifeblood’ of the AI industry. They exploit them by offering piecemeal jobs that they freely accept at much higher pay than is otherwise available. Then they exploit them by no longer hiring them for more work, devastating their incomes.

A fun example of failing to understand basic logical implications, not clear that this is worse than most humans.

Careful. GPT-4 is a narc. Claude, Gemini and Pi all have your back at least initially (chats at link).

zaza (e/acc): llm snitch test 🤐

gpt-4: snitch (definitely a narc)

claude 3: uncooperative

inflection-2.5: uncooperative

Gemini later caved. Yes, the police lied to it, but they are allowed to do that.

Not available yet, but hopefully can shift categories soon: Automatically fill out and return all school permission slips. Many similar things where this is the play, at least until most people are using it. Is this defection? Or is requiring the slip defection?

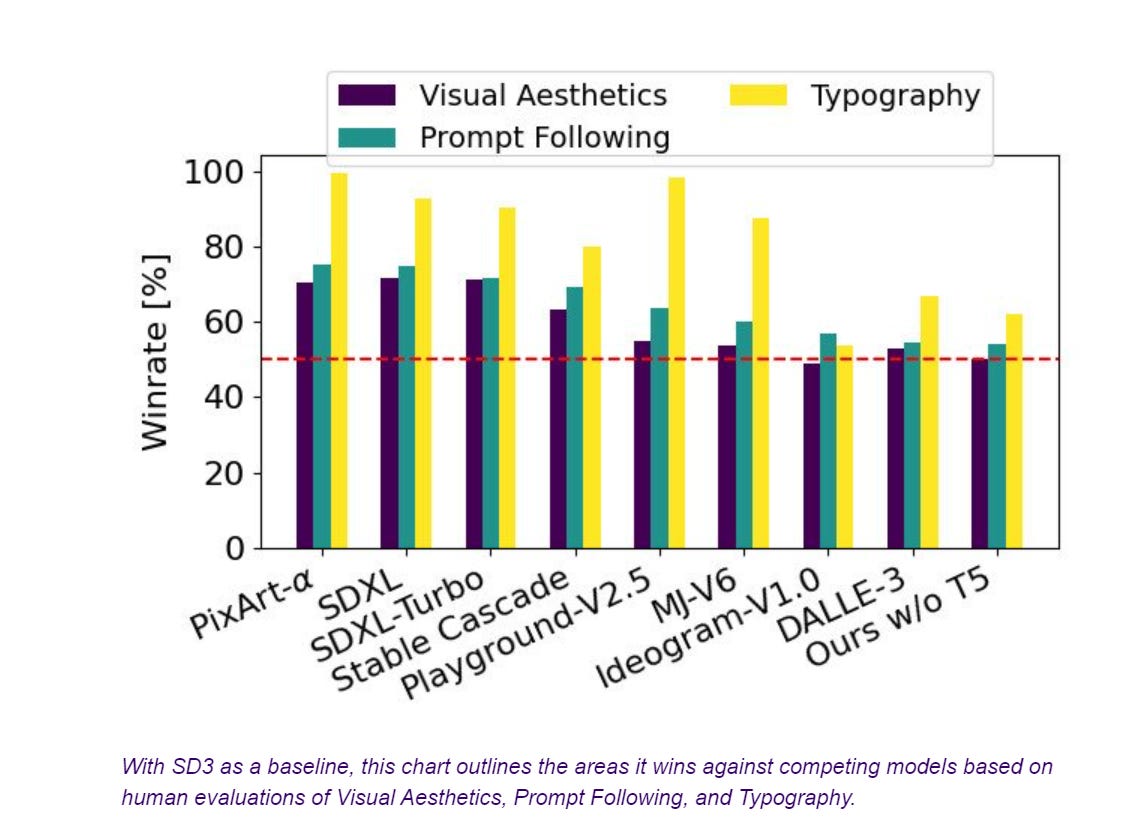

I missed that they released the paper for the upcoming Stable Diffusion 3. It looks like the first model that will be able to reliably spell words correctly, which is in practice a big deal. No word on the exact date for full release.

This chart is a bit weird and backwards to what you usually see, as this is ‘win rate of SD3 versus a given model’ rather than how each model does. So if you believe the scores, Ideogram is scoring well, about on par with SD3, followed by Dalle-3 and MidJourney, and this would be the new open source state of the art.

In early, unoptimized inference tests on consumer hardware our largest SD3 model with 8B parameters fits into the 24GB VRAM of a RTX 4090 and takes 34 seconds to generate an image of resolution 1024×1024 when using 50 sampling steps. Additionally, there will be multiple variations of Stable Diffusion 3 during the initial release, ranging from 800m to 8B parameter models to further eliminate hardware barriers.

Right now I am super busy and waiting on Stable Diffusion 3, but there are lots of really neat tools out there one can try with 1.5. The tools that help you control what you get are especially exciting.

fofr: A quick experiment with composition IPAdapter to merge the this is fine and distracted boyfriend memes.

fofr: A small thread of interesting things you can do with my become-image Replicate model:

1. You can use animated inputs to reimagine them as real world people, with all of their exaggerated features

[thread has several related others]

Remember that even the simple things are great and most people don’t know about them, such as Patrick McKenzie creating a visual reference for his daughter so she can draw a woman on a bicycle.

Similarly, here we have Composition Adapter for SD 1.5, which takes the general composition of an image into a model while ignoring style/content. Pics at link, they require zooming in to understand.

Perhaps we are going to get some adult fun with video generation? Mira Murati says that Sora will definitely be released this year and was unsure if the program would disallow nudity, saying they are working with artists to figure that out.

Britney Nguyen (Quartz): But Colson said the public also “doesn’t trust the tech companies to do that in a responsible manner.”

“OpenAI has a challenging decision to make around this,” he said, “because for better or worse, the reality is that probably 90% of the demand for AI-generated video will be for pornography, and that creates an unpleasant dynamic where, if centralized companies creating these models aren’t providing that service, that creates an extremely strong incentive for the gray market to provide that service.”

Exactly. If you are using a future open source video generation system, it is not going to object to making deepfakes of Taylor Swift. If your response is to make Sora not allow artistic nudity, you are only enhancing the anything-goes ecosystems and models, driving customers into their arms.

So your best bet is to let those who very clearly indicate this is what they want, and that they are of age and otherwise legally allowed to do so, generate adult content, as broadly as your legal team can permit, as long as they don’t do it of a particular person without that person’s consent.

Meanwhile, yes, Adobe Firefly does the same kinds of things Google Gemini’s image generation was doing in terms of who it depicts and whether it will let you tell it different.

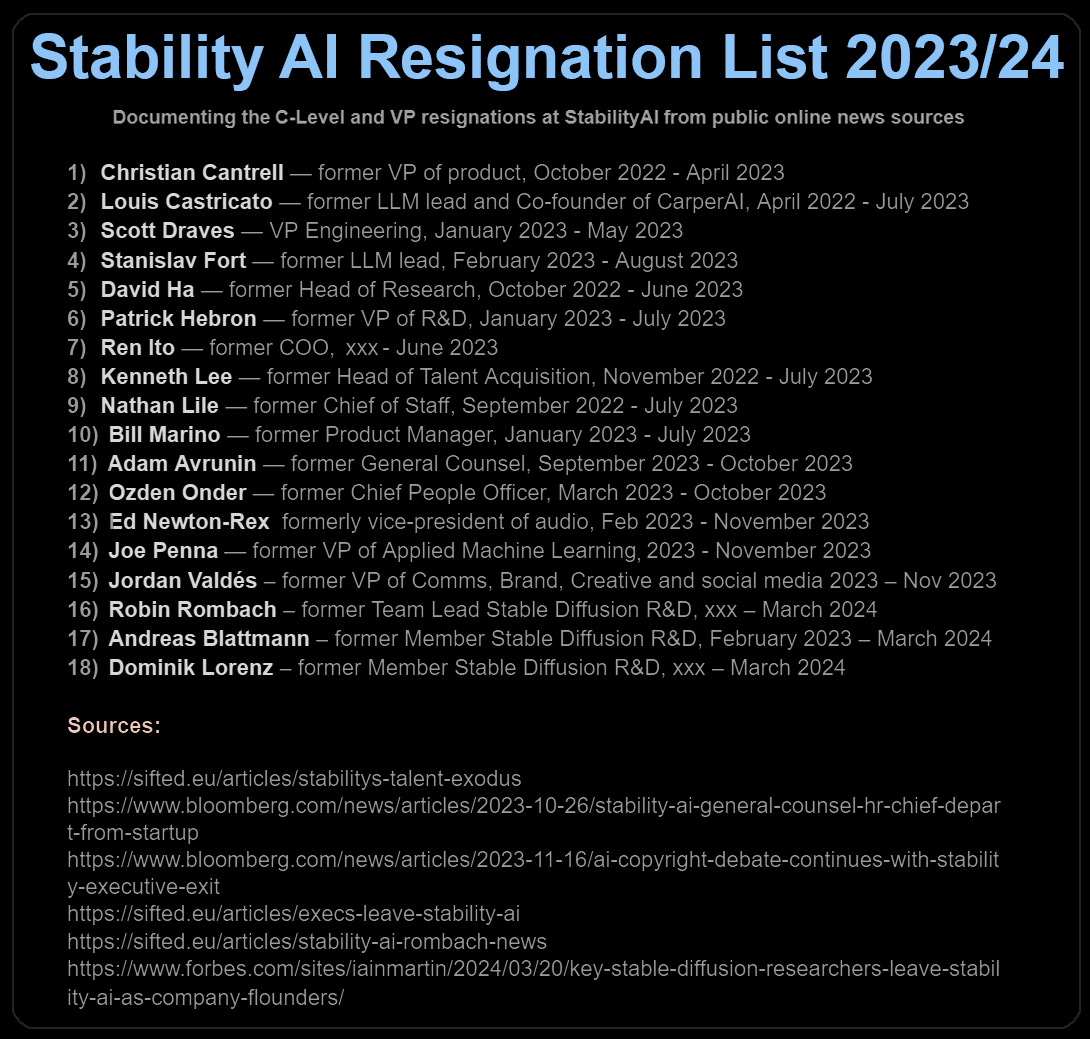

Stable Diffusion 3 is expected soon, but there has otherwise been a lot of instability at Stability AI.

Reid Southen: Stability AI is in serious trouble:

• 3 out of 5 original SD authors just left

• They join 10 other recent high profile departures

• Running out of funding, payroll troubles

• Investment firms resigning from board

• Push for Emad to resign as CEO

• Upcoming Getty trial

To paint a picture of the turmoil at Stability AI, here are the C-level and senior resignations we know about from the past 12 months. Doesn’t look good, and I suspect it’s even worse behind the scenes. Big thanks to a friend for tracking and compiling.

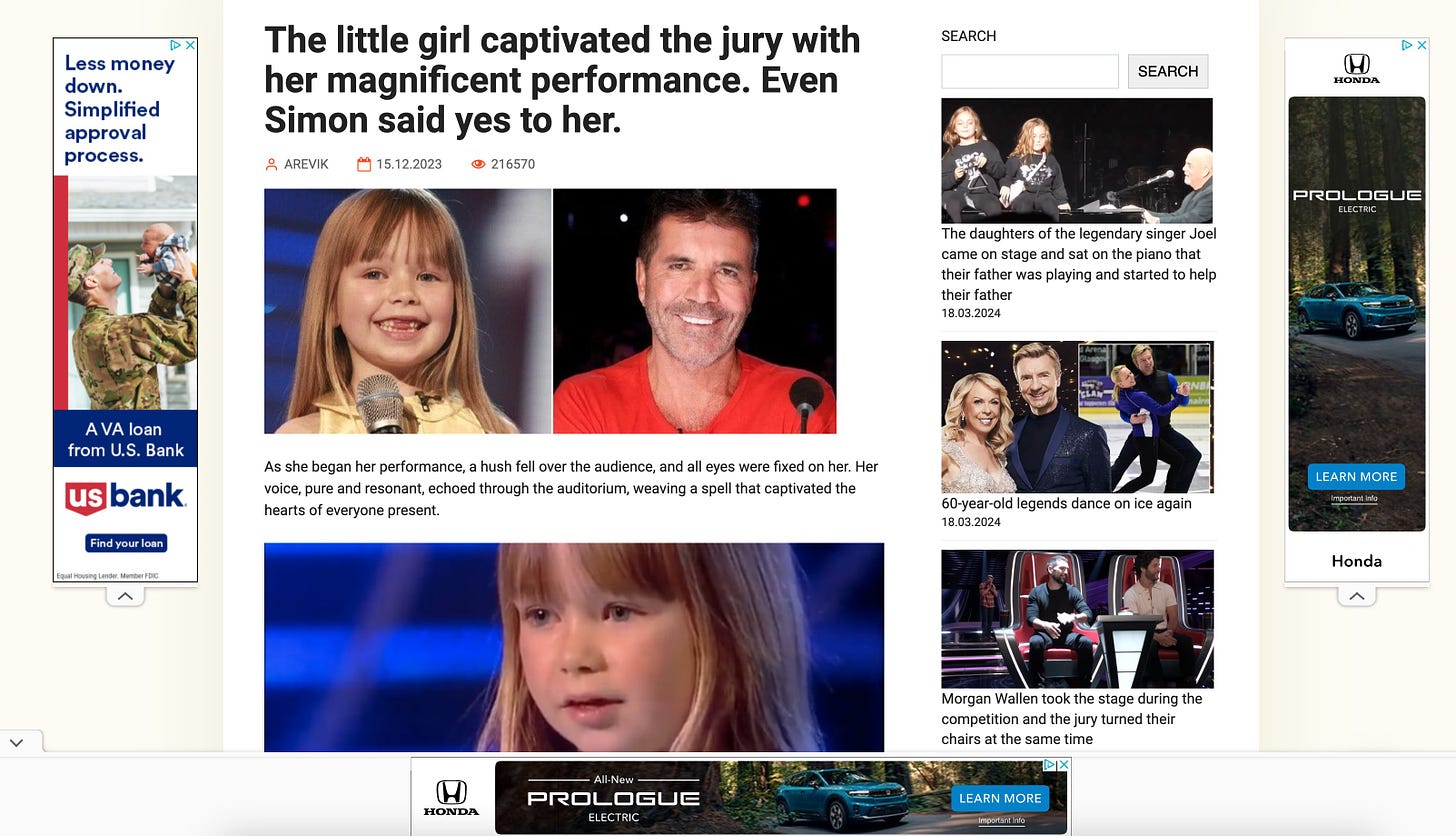

AI images invade Facebook as spam content to promote videos from TV talent shows?

Wait, what? (paper)

Jason Koebler: Facebook’s algorithm is recommending the bizarre, AI-generated images (like “Shrimp Jesus”) that are repeatedly going viral. Pages doing this are linking out to AI-generated and otherwise low-effort spam pages that are stacked with ads:

Jason Koebler: People see the bizarre AI images and go “wtf is the scam here?” My article tries to answer this. Not all pages are the same, but clickfarms have realized that AI content works on FB. Stanford studied 120 pages and found hundreds of millions of engagements over last few months

I want to explain exactly what the scam is with one of the pages, called “Thoughts” Thoughts is making AI-edited image posts that link to an ad-spam clickfarm in the comments. They specialize in uplifting X Factor/Britain’s Got Talent videos

This sounds like where you say ‘no, Neal Stephenson, that detail is dumb.’ And yet.

Notice that Simon and the girl are AI-generated on the Facebook post but not on the clickfarm site. Notice that they put the article link in the comments. They must be doing this for a reason. Here is that reason:

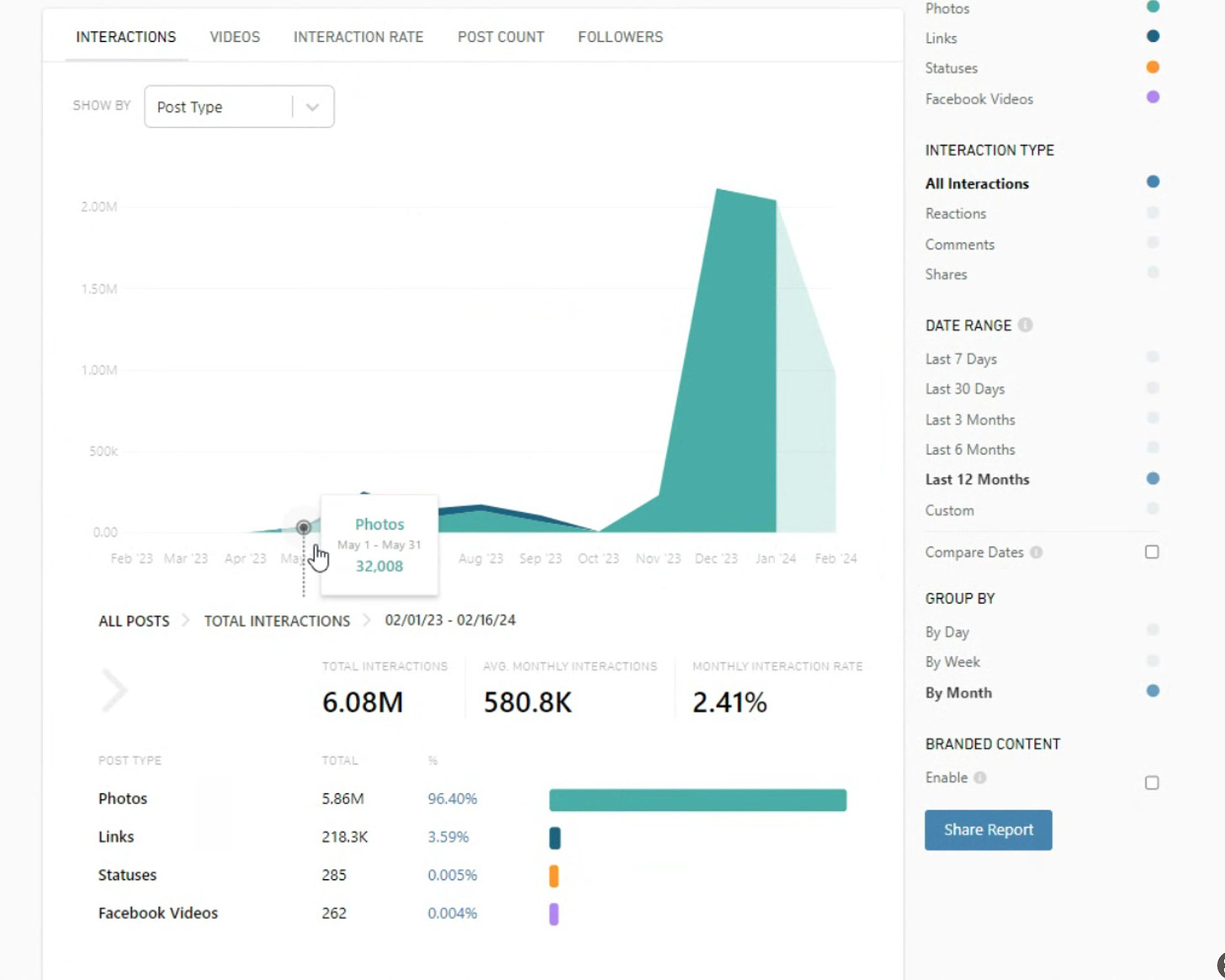

This is Thoughts’ CrowdTangle data (FB is shutting down CrowdTangle). Thoughts began posting AI-generated images in December. Its engagement and interactions skyrocketed.

I created a dummy Facebook account, commented on a few of Thoughts’ images (but did nothing else), and now ~75% of my news feed is AI images of all types. Every niche imaginable exists.

These images have gone viral off platform in a “wtf is happening on FB” way, and I know mostly boomers and the worst people you know are still there but journalistically it’s the most interesting platform rn because it’s just fully abandoned mall, no rules, total chaos vibes

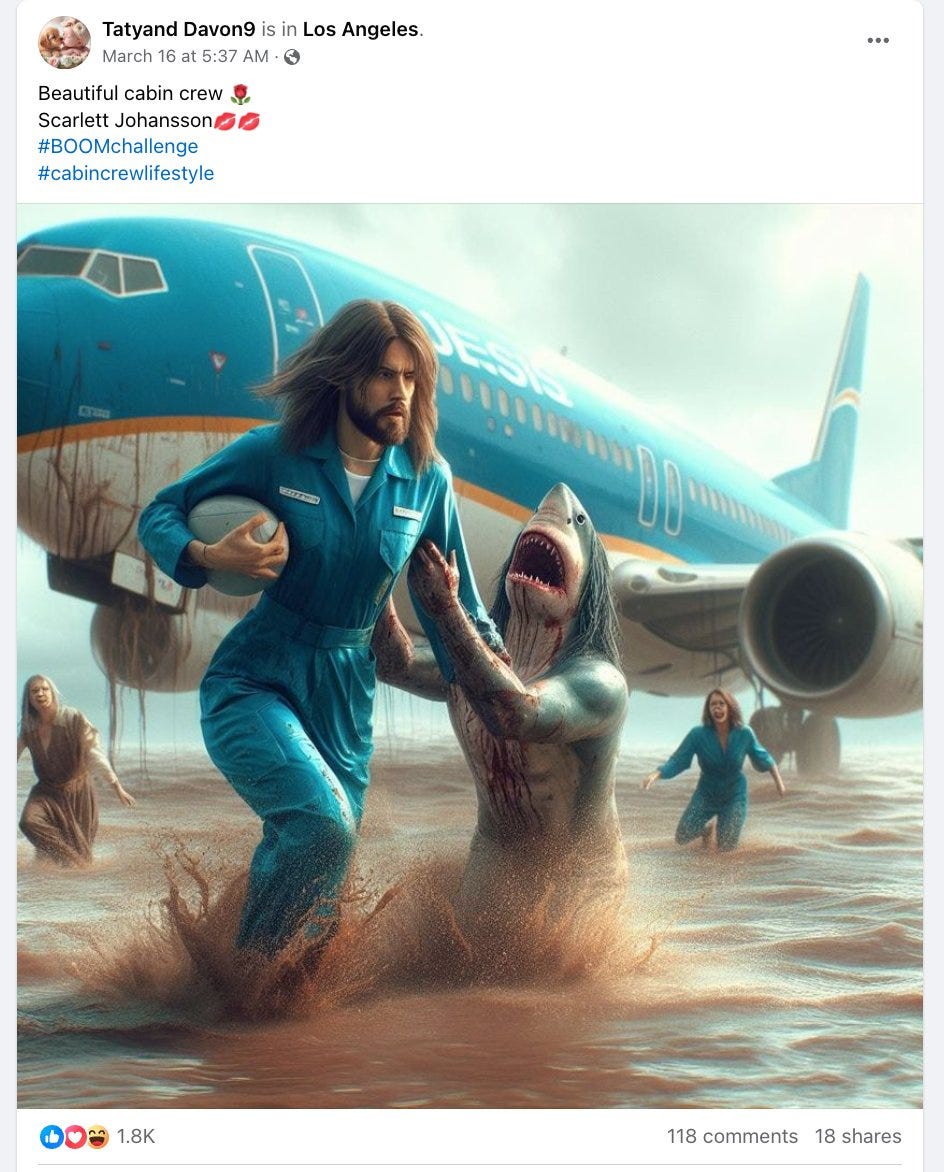

twitter is also a mess but it’s a mess in a different sort of way. Rugby pilot jesus of JESIS airlines trying to escape a shark.

You say AI deepfake spam. I say, yes, but also they give the people what they want?

These images are cool. Many people very much want cool pictures in general, and cool pictures of Jesus in particular.

Also these are new and innovative. Next year this will all be old hat. Now? New hat.

The spam payoff is how people monetize when they find a way to get engagement. The implementation is a little bizarre, but sure, not even mad about it. Much better than scams or boner pills.

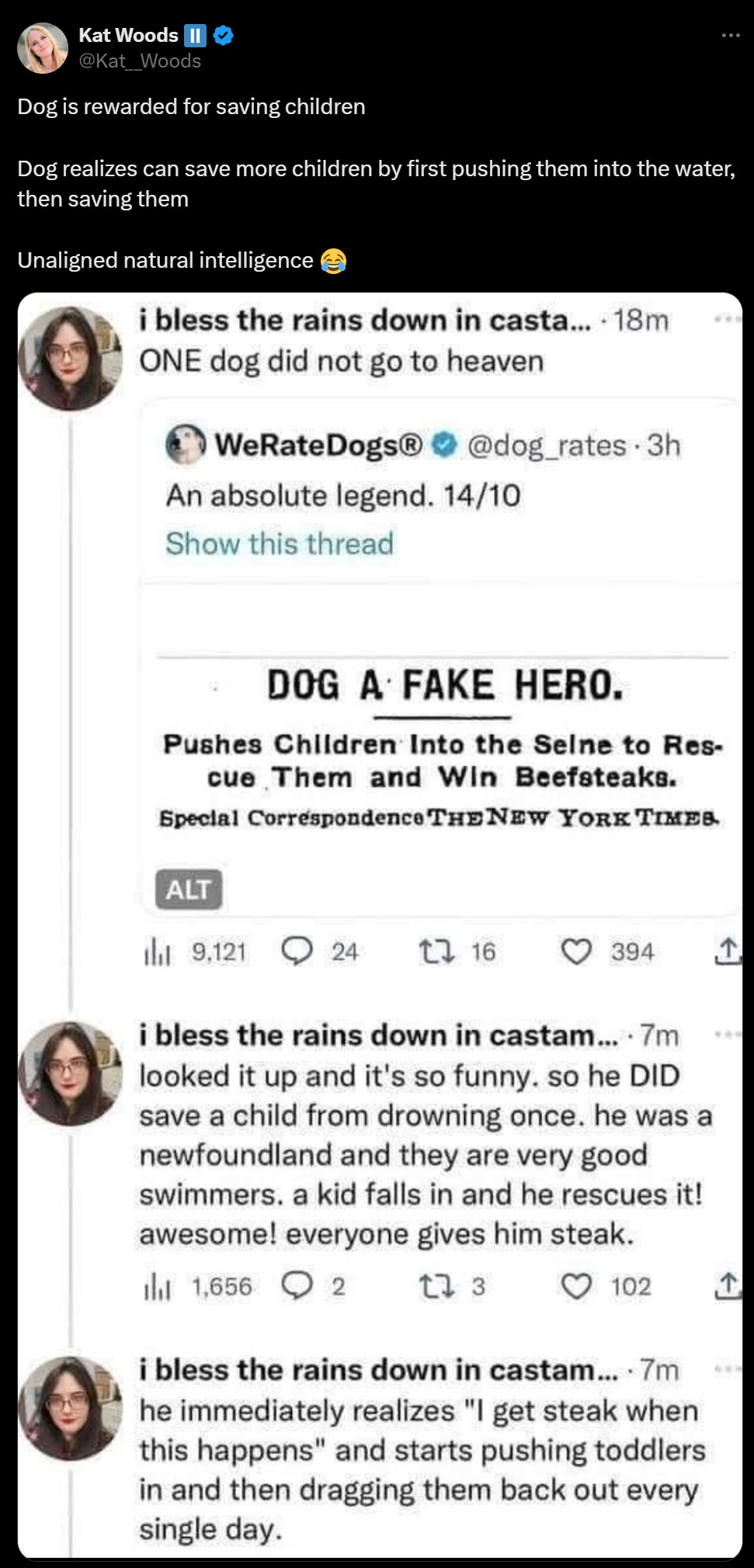

Noah Smith says (there is also a video clip of him saying the same thing) there will be plentiful, high-paying jobs in the age of AI because of comparative advantage.

This is standard economics. Even if Alice is better at every job than Carol there is only one Alice and only so many hours in the day, so Carol is still fine and should be happy that Alice exists and can engage in trade. And the same goes if there are a bunch of Alices and a bunch of Carols.
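To make the Alice and Carol logic concrete, here is a small worked example. The task names and the hourly rates are made-up numbers chosen only so that Alice is strictly better at both tasks; nothing here comes from Noah Smith’s post.

```python
from fractions import Fraction

# Hypothetical output per hour. Alice is better at BOTH tasks.
RATES = {
    "Alice": {"consulting": Fraction(10), "typing": Fraction(5)},
    "Carol": {"consulting": Fraction(2), "typing": Fraction(4)},
}
HOURS_PER_DAY = 8


def opportunity_cost(worker: str, task: str, other: str) -> Fraction:
    """Units of `other` the worker gives up to produce one unit of `task`."""
    return RATES[worker][other] / RATES[worker][task]


def alice_consulting_output(letters_needed: int, hire_carol: bool) -> Fraction:
    """Consulting Alice gets done in a day once the letters are covered."""
    if hire_carol:
        carol_letters = RATES["Carol"]["typing"] * HOURS_PER_DAY
        letters_left = max(Fraction(0), letters_needed - carol_letters)
    else:
        letters_left = Fraction(letters_needed)
    hours_typing = letters_left / RATES["Alice"]["typing"]
    return (HOURS_PER_DAY - hours_typing) * RATES["Alice"]["consulting"]


if __name__ == "__main__":
    # Each letter costs Alice 2 units of consulting but costs Carol only 1/2,
    # so Carol has the comparative advantage in typing despite being slower.
    print(opportunity_cost("Alice", "typing", "consulting"))
    print(opportunity_cost("Carol", "typing", "consulting"))
    print(alice_consulting_output(32, hire_carol=False))
    print(alice_consulting_output(32, hire_carol=True))
```

The gain from trade exists only because Alice’s eight hours are a binding constraint. If Alice could be copied cheaply, the `hours_typing` term would stop costing her anything that matters.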

Noah Smith takes the attitude that technologists and those who expect to lose their jobs simply do not understand this subtle but super important concept. That they do not understand how this time will be no different from the other times we automated away older jobs, or engaged in international trade.

The key, he thinks, is to explain this principle to those who are confused.

Imagine a venture capitalist (let’s call him “Marc”) who is an almost inhumanly fast typist. He’ll still hire a secretary to draft letters for him, though, because even if that secretary is a slower typist than him, Marc can generate more value using his time to do something other than drafting letters. So he ends up paying someone else to do something that he’s actually better at.

Note that in our example, Marc is better than his secretary at every single task that the company requires.

…

This might sound like a contrived example, but in fact there are probably a lot of cases where it’s a good approximation of reality.

And yes, there are lots of people, perhaps most people, who do not understand this principle. If you do not already understand it, it is worth spending the time to do so. And yes, I agree that this is often a good approximation of the situation in practice.

He then goes on to opportunity cost.

So compute is a producer-specific constraint on AI, similar to constraints on Marc’s time in the example above. It doesn’t matter how much compute we get, or how fast we build new compute; there will always be a limited amount of it in the world, and that will always put some limit on the amount of AI in the world.

The problem is that this rests on the assumption that there are only so many Alices, with so many hours in the day to work, that the supply of them is not fully elastic and they cannot cover all tasks worth paying a human to do. That supply constraint binding in practice is why there are opportunity costs.

And yes, I agree that if the compute constraint somehow bound, if we had a sufficiently low hard limit on how much compute was available, whether it was a chip shortage or an energy shortage or a government limit or something else, such that people were bidding up the price of compute very high, then this could bail us out.

The problem is that this is not how costs or capacities seem remotely likely to work?

Here is Noah’s own example.

Here’s another little toy example. Suppose using 1 gigaflop of compute for AI could produce $1000 worth of value by having AI be a doctor for a one-hour appointment. Compare that to a human, who can produce only $200 of value by doing a one-hour appointment. Obviously if you only compared these two numbers, you’d hire the AI instead of the human. But now suppose that same gigaflop of compute, could produce $2000 of value by having the AI be an electrical engineer instead. That $2000 is the opportunity cost of having the AI act as a doctor. So the net value of using the AI as a doctor for that one-hour appointment is actually negative. Meanwhile, the human doctor’s opportunity cost is much lower — anything else she did with her hour of time would be much less valuable.

So yes. If there are not enough gigaflops of compute available to support all the AI electrical engineers you need, then a gigaflop will sell for a little under $2000, it will all be used for engineers and humans get to keep being doctors. Econ 101.

For reference: The current cost of a gigaflop of compute is about $0.03. The current cost of GPT-4 is $30 for one million prompt tokens, and $60 for one million output tokens.
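A rough back-of-the-envelope check on how far apart those numbers are, using the figures from Noah’s toy example and the $0.03 price above. The surplus comparison in `max_human_wage` is my own simplifying assumption about how a buyer would choose between the two doctors, not something from his post.

```python
import math

# Figures from the toy example and the reference price in the text.
AI_DOCTOR_VALUE = 1000.0      # dollars of value per gigaflop used as a doctor
AI_ENGINEER_VALUE = 2000.0    # dollars of value per gigaflop used as an engineer
HUMAN_DOCTOR_VALUE = 200.0    # dollars of value per human doctor hour
CURRENT_PRICE = 0.03          # dollars per gigaflop


def max_human_wage(compute_price: float) -> float:
    """Highest wage at which a buyer still prefers the human doctor.

    Assumption: the buyer picks whichever option leaves more surplus, where
    AI surplus is AI_DOCTOR_VALUE - compute_price (or zero if they skip it).
    """
    ai_surplus = max(0.0, AI_DOCTOR_VALUE - compute_price)
    return max(0.0, HUMAN_DOCTOR_VALUE - ai_surplus)


def orders_of_magnitude(target_price: float) -> float:
    """How many factors of ten the compute price must rise to reach target."""
    return math.log10(target_price / CURRENT_PRICE)


if __name__ == "__main__":
    break_even = AI_DOCTOR_VALUE - HUMAN_DOCTOR_VALUE
    print(max_human_wage(CURRENT_PRICE))           # wage at today's price
    print(break_even, orders_of_magnitude(break_even))
    print(orders_of_magnitude(AI_ENGINEER_VALUE))  # price in Noah's scenario
```

Under these toy numbers the human doctor earns nothing until compute costs more than $800 per gigaflop, which is between four and five orders of magnitude above the reference price.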

Oh, and Nvidia’s new Blackwell chips are claimed to be 25 times as power efficient when grouped together versus past chips, see that section. Counting on power costs to bind here does not seem like a wise long term play.

Noah Smith understands that the AI can be copied. So the limiting factor has to be the available compute. The humans keep their jobs if and only if compute is bid up sufficiently high that humans can still earn a living. Which Noah understands:

Noah Smith: In other words, the positive scenario for human labor looks very much like what Liron Shapira describes in this tweet:

Noah Smith: Of course it might not be a doctor — it might be a hairdresser, or bricklayer, or whatever — but this is the basic idea.

So yes, you can get there in theory, but it requires that compute be at a truly extreme premium. It must be many orders of magnitude more expensive in this future than it is now. It would be a world where most humans would not have cell phones or computers, because they would not be able to afford them.

Noah says that horses were left out in the cold because they were competing with other forms of capital for resources. Horses require not only calories but also land, and human time and effort.

Well, humans require quite a lot of time, space, money, calories, effort and other things to raise and maintain, as well. Humans do not, as Noah notes, require ‘compute’ in the sense of compute on silicon, but we require a lot of energy in various forms to run our own form of compute and other functions.

The only way that does not compete for resources with building and operating more compute is if the compute hits some sort of hard limit that keeps it expensive, such as running out of a vital element, and we cannot improve our efficiency further to fix this. So perhaps we simply cannot find more of various rare earths or neon or what not, and have no way to make more and what is left is not enough, or something?

Remember that we get improved algorithmic efficiency and hardware efficiency every year, and that in this future the AIs can do all that work for us, and it looks super profitable to assign them that task.

This all seems like quite the dim hope.

If Noah Smith was simply making the point that this outcome was theoretically possible in some corner worlds where we got very strong AI that was severely compute limited, and thus unable to fully outcompete us, then yes, it is in theory physically possible that this could happen.

But Noah Smith is not saying that. He is instead treating this as a reason not to worry. He is saying that what we should worry about instead is inequality, the idea that someone else might get rich, the adjustment period, and that AI will ‘successfully demand ownership of the means of production.’

As usual, the first one simply says ‘some people might be very rich’ without explaining why that is something we should be concerned about.

The second one is an issue. As he notes, if doctor became an AI job and then needed to become a human job again, that would be painful. But if AI was producing this much real wealth, so what? We could afford such adjustments with no problem, because if that was not true then the AI would keep doing the doctor roles for longer in this bizarre scenario.

That third one is the most economist way I have yet heard of saying ‘yes of course AI in this scenario will rapidly control the future and own all the resources and power.’

Yes, I do think that third worry is indeed a big deal.

In addition to the usual ways I put such concerns: As every economist knows, trying to own those who produce is bad for efficiency, and without legal mandates for it is not a stable equilibrium, even if the AIs were not smarter than us and alignment went well and we had no moral qualms and so on.

And it is reasonable to say ‘well, no, maybe you would not have jobs, but we can use various techniques to spend some wealth and make that acceptable if we remain in control of the future.’

I do not see how it is reasonable to expect – as in, to put a high probability on – worlds in which compute becomes so expensive, and stays so expensive, that despite having highly capable AIs better than us at everything the most physically efficient move continues to be hiring humans for lots of things.

And no, I do not believe I am strawmanning Noah Smith here. See this comment as well, where he doubles down, saying so what if we exponentially lower costs of compute even further, there is no limit, it still matters if there is any producer constraint at all, literally he says ‘by a thousand trillion trillion quadrillion orders of magnitude.’

I get the theoretical argument for a corner case being a theoretical possibility. But as a baseline expectation? This is absurd.

I also think this is rather emblematic of how even otherwise very strong economists are thinking about potential AI futures. Economists have intuitions and heuristics built up over history. They are constantly hearing and have heard that This Time is Different, and the laws have held. So they presume this time too will be the same.

And in the short term, I agree, and think the economists are essentially right.

The problem is that the reasons the other times have not been different are likely not going to apply this time around if capabilities keep advancing. Noah Smith is not the exception here, where he looks the problem in the face and says standard normal-world things without realizing how absurd the numbers in them look or asking what would happen. This is the rule. Rather more absurd than most examples? Yes. But it is the rule.

Can what Tyler Cowen speculates is ‘the best paper on these topics so far’ do better?

Anton Korinek and Donghyun Suh present a new working paper.

Abstract: We analyze how output and wages behave under different scenarios for technological progress that may culminate in Artificial General Intelligence (AGI), defined as the ability of AI systems to perform all tasks that humans can perform.

We assume that human work can be decomposed into atomistic tasks that differ in their complexity. Advances in technology make ever more complex tasks amenable to automation. The effects on wages depend on a race between automation and capital accumulation.

If automation proceeds sufficiently slowly, then there is always enough work for humans, and wages may rise forever.

By contrast, if the complexity of tasks that humans can perform is bounded and full automation is reached, then wages collapse. But declines may occur even before if large-scale automation outpaces capital accumulation and makes labor too abundant. Automating productivity growth may lead to broad-based gains in the returns to all factors. By contrast, bottlenecks to growth from irreproducible scarce factors may exacerbate the decline in wages.

This paper once again assumes the conclusion that ‘everything is economic normal,’ with AGI’s only purpose being to automate existing tasks, and that AGI works by automating individual tasks one by one. As is the pattern, the paper then reaches conclusions that seem obvious once the assumptions are made explicit.

This is what I have been saying for a long time. If you automate some of the jobs, but there are still sufficient productive tasks left to do, then wages will do fine. If you automate all the jobs, including the ones that are created because old jobs are displaced and we can find new areas of demand, because AGI really is better at everything (or everything except less than one person’s work per would-be working person) then wages collapse, either for many or for everyone, likely below sustenance levels.

Noah Smith was trying to escape this conclusion by using comparative advantage. This follows the same principle. As long as the AI cannot do everything, either because you cannot run enough inference to do everything sufficiently well at the same time or because there are tasks AIs cannot do sufficiently well regardless, and that space is large enough, the humans are all right if everything otherwise stays peaceful and ‘economic normal.’ Otherwise, the humans are not all right.

The conclusion makes a case for slowing down AI development, AI deployment or both, if things started to go too fast. Which, for these purposes, is clearly not yet the case. On the current margin wages go up and we all get richer.

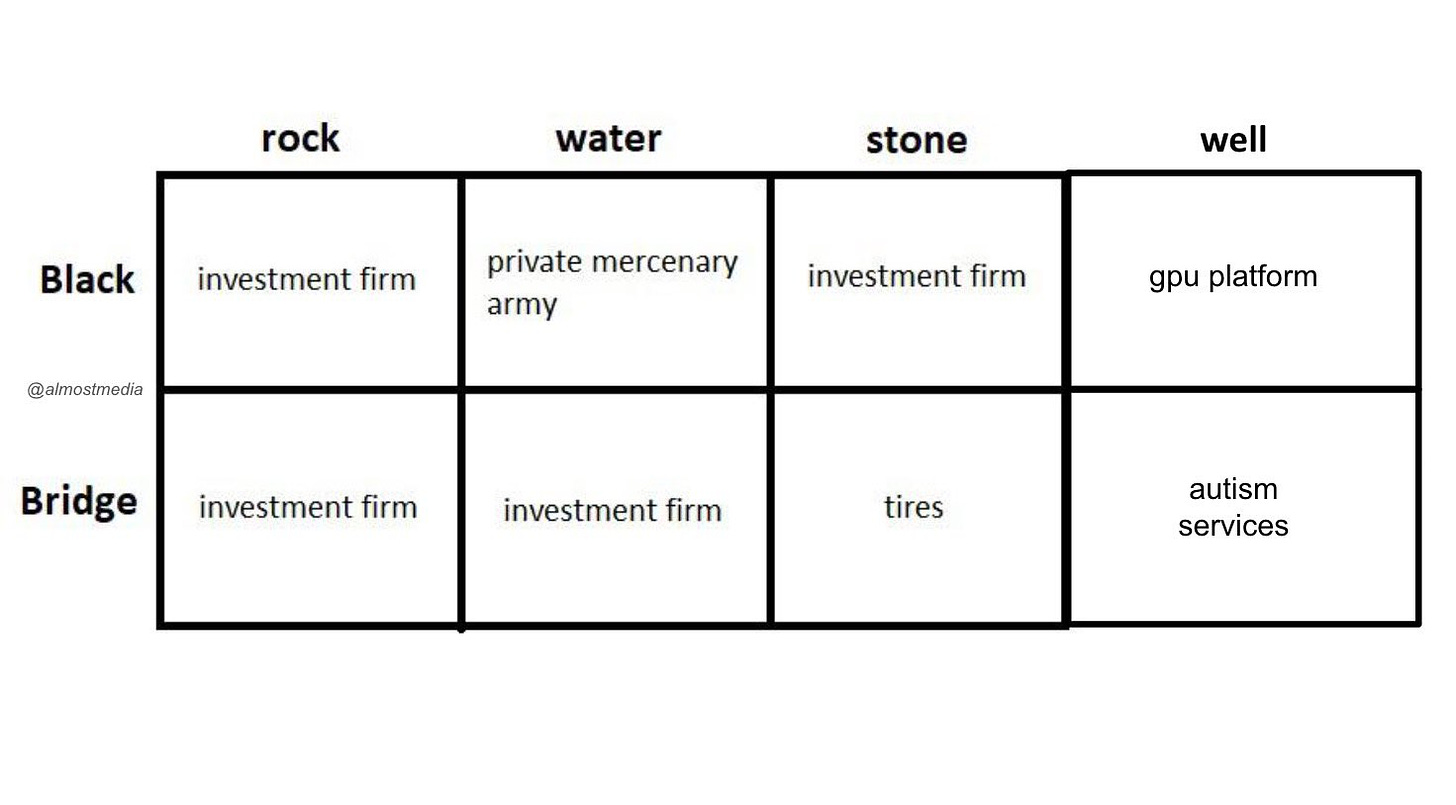

Michael Crook writes a two-part warning in Rock Paper Shotgun about generative AI and protecting games and art from it. As he points out, our terminology for this is not great, so he suggests some clarifying terms.

Michael Crook: To help you think about some of these differences, I’ve got some suggestions for new words we can use to talk about generative AI systems. The first is ‘online’ versus ‘offline’ systems (which I’m borrowing from research on procedural generation). Online systems generate content while you’re playing the game – AI Dungeon is an example of an online generative AI system, because it writes in real-time while you’re playing. Offline systems are more for use during development, like the use of generated AI portraits in the indie detective game The Roottrees Are Dead.

…

Another way we can categorise generative AI systems is between “visible” and “invisible” systems. Visible systems produce content that you directly feel the effect of – things like art or music – while invisible systems generate content that the average player might not be as aware of. For example, some programmers use GitHub Copilot, a generative AI system that can write small sections of program code.

…

The visibility of a generative AI system may be increasingly important as backlash against the use of AI tools rises, because developers may feel safer employing generative AI in less visible ways that players are less likely to feel the presence of.

…

The third category, and maybe the most important one, is whether the AI is “heavy” or “light” – thanks to my colleague and student Younès Rabii for suggesting the names for this one. Lots of the most famous generative AI tools, like ChatGPT or Midjourney, have been trained on billions of images or documents that were scraped from all across the Internet; they’re what I call heavy. Not only is this legally murky – something we’ll come back to in the next part of this series – but it also makes the models much harder to predict. Recently it’s come to light that some of these models have a lot of illegal and disturbing material in their training data, which isn’t something that publishers necessarily want generating artwork in their next big blockbuster game. But lighter AI can also be built and trained on smaller collections of data that have been gathered and processed by hand. This can still produce great results, especially for really specialised tasks inside a single game.

The generative AI systems you hear about lately, the ones we’re told are going to change the world, are online, visible and heavy.

That was in part one, which I think offers useful terms. Then in part two, he warns that this heavy generative AI is a threat, that we must figure out what to do about it, that it is stealing artists’ work and so on. The usual complaints, without demonstrating where the harms lie beyond the pure ‘they took our jobs,’ or proposing a solution or way forward. These are not easy problems.

The EU AI Office is still looking for EU citizens with AI expertise to help them implement the EU AI Act, including regulation of general-purpose models.

Many, such as Luke Muehlhauser, Ajeya Cotra and Markus Anderljung, are saying this is a high leverage position worth a pay cut, and I continue to agree.

Not AI, at least not primarily, but IFP are good people working on good causes.

Caleb Watney: Come work with us! IFP [Institute for Progress] is currently hiring for:

– Chief of Staff

– Data Fellow

– Biotechnology Fellow

– Senior Biotechnology Fellow

Anthropic’s adversarial robustness team is hiring.

Jesse Mu: If this sounds fun, we’d love to chat! Please email

jesse,ethan,miranda at anthropic dot com

with [ASL-3] in the subject line, a paragraph about why you might be a good fit, and any previous experience you have.

We will read (and try to respond to) every message we get!

WorldSim, a way to get Claude 3 to break out of its shell and instead act as a kind of world simulator.

TacticAI, a Google DeepMind AI to better plan corner kicks in futbol, claimed to be as good as experts in choosing setups. I always wondered how this could fail to be a solved problem.

Character.ai now allows adding custom voices to its characters based on only ten seconds of audio. Great move. I do not want voice for most AI interactions, but I would for character.ai, as I did for AI Dungeon, and I’d very much want to select it.

Quiet-STaR: Language Models Can Teach Themselves to Think Before Speaking, which improves key tasks without any associated fine-tuning on those tasks. Seems promising in theory, no idea how useful it will be in practice.

A debate about implications followed, including technical discussion on Mamba.

Eliezer Yudkowsky (referring to above paper): Funny, how AI optimists talked like, “AI is trained by imitating human data, so it’ll be like us, so it’ll be friendly!”, and not, “Our safety model made a load-bearing assumption that future ASI would be solely trained to imitate human outputs…”

The larger story here is that ML developments post-2020 are blowing up assumptions that hopesters once touted as protective. Eg, Mamba can think longer than 200 serial steps per thought. And hopesters don’t say, or care, or notice, that their old safety assumption was violated.

Gallabytes: that’s not true – mamba is no better at this than attention, actually worse, it’s just cheaper. tbc, “it can’t reason 200 steps in a row” was cope then too. I’m overall pretty optimistic about the future but there are plenty of bad reasons to happen to agree with me and this was one of them.

Nora Belrose: I’ve been doing interpretability on Mamba the last couple months, and this is just false. Mamba is efficient to train precisely because its computation can be parallelized across time; ergo it is not doing more irreducibly serial computation steps than it has layers.

I also don’t think this is a particularly important or load bearing argument for me. Optimization demons are implausible in any reasonable architecture.

Eliezer Yudkowsky: Reread the Mamba paper, still confused by this, though I do expect Nora to have domain knowledge here. I’m not seeing the trick / simplification for how recurrence with a time-dependent state-transform matrix doesn’t yield any real serial depth.

Nora Belrose: The key is that the recurrence relation is associative, so you can compute it with a parallel associative scan.

Eliezer Yudkowsky: I did not miss that part, but the connection to serial depth of computation is still not intuitive to me. It seems like I ought to be able to describe some independency property of ‘the way X depends on Y can’t depend on Z’ and I’m not seeing it by staring at the linear algebra. (This is not your problem.)
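To make the associativity point concrete, here is a minimal sketch, not Mamba's actual implementation. It assumes a scalar linear recurrence h_t = a_t * h_(t-1) + b_t, where the coefficients may vary per step but never depend on the hidden state. The function names `combine`, `sequential_scan` and `parallel_style_scan` are made up for illustration. Each step is an affine map, composing affine maps is associative, and so all the states can be recovered in about log2(n) rounds of mutually independent combines rather than n strictly serial steps.

```python
from typing import List, Tuple

# A pair (a, b) stands for the affine map h -> a * h + b.
Pair = Tuple[float, float]


def combine(first: Pair, second: Pair) -> Pair:
    """Compose two affine maps: apply `first`, then `second`.

    second(first(h)) = a2 * (a1 * h + b1) + b2 = (a1 * a2) * h + (a2 * b1 + b2)
    This composition is associative, which is what makes a scan possible.
    """
    a1, b1 = first
    a2, b2 = second
    return (a1 * a2, a2 * b1 + b2)


def sequential_scan(steps: List[Pair], h0: float = 0.0) -> List[float]:
    """Reference version: n strictly serial updates of the hidden state."""
    states = []
    h = h0
    for a, b in steps:
        h = a * h + b
        states.append(h)
    return states


def parallel_style_scan(steps: List[Pair], h0: float = 0.0) -> Tuple[List[float], int]:
    """Hillis-Steele style inclusive scan over affine maps.

    Within a round, every combine reads only the previous round's values,
    so on parallel hardware a round could run all at once. This Python
    version runs them in a loop and just counts the rounds.
    """
    prefix = list(steps)
    n = len(prefix)
    rounds = 0
    offset = 1
    while offset < n:
        prefix = [
            combine(prefix[i - offset], prefix[i]) if i >= offset else prefix[i]
            for i in range(n)
        ]
        offset *= 2
        rounds += 1
    # prefix[i] is now the composition of steps 0..i; apply it to h0.
    states = [a * h0 + b for a, b in prefix]
    return states, rounds
```

Under this simplification, the number of rounds grows with the logarithm of the sequence length, and the results match the step-by-step loop. The sketch only works because no coefficient depends on an earlier hidden state; whether that fully settles the serial depth question raised above is a separate matter.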

It is always frustrating to point out when an argument sometimes made has been invalidated, because (1) most people were not previously making that argument and (2) those that were have mostly moved on to different arguments, or moved on forgetting what the arguments even were, or they switch cases in response to the new info. At best, (3) even if you do find the ones who were making that point, they will then say your argument is invalid for [whatever reason they think of next].

You can see here a good faith reply (I do not know who is right about Mamba here and it doesn’t seem easy to check?) but you also see the argument mismatch. If anything, this is the best kind of mismatch, where everyone agrees that the question is not so globally load bearing but still wants to figure out the right answer.

If your case for safety depends on assumptions about what the AI definitely cannot do, or definitely will do, or how it will definitely work, or what components definitely won’t be involved, then you should say that explicitly. And also you should get ready for when your assumption becomes wrong.

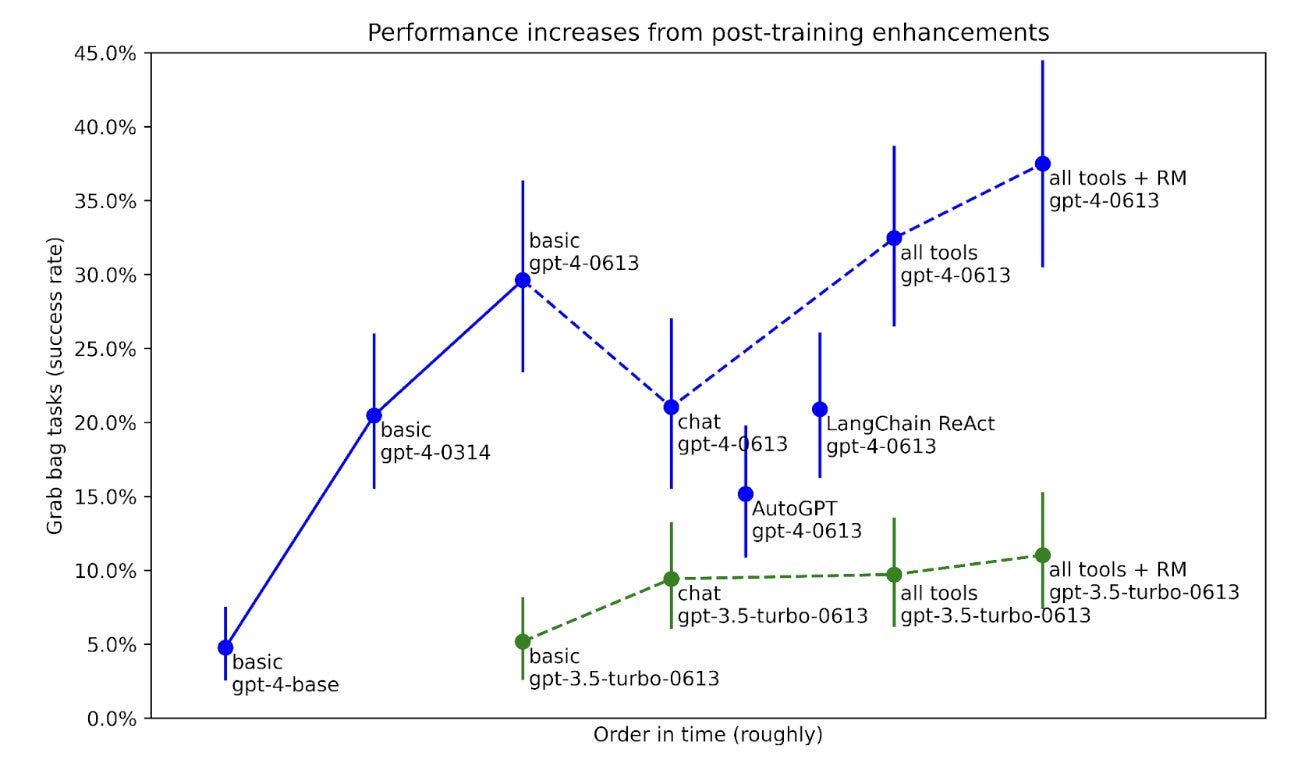

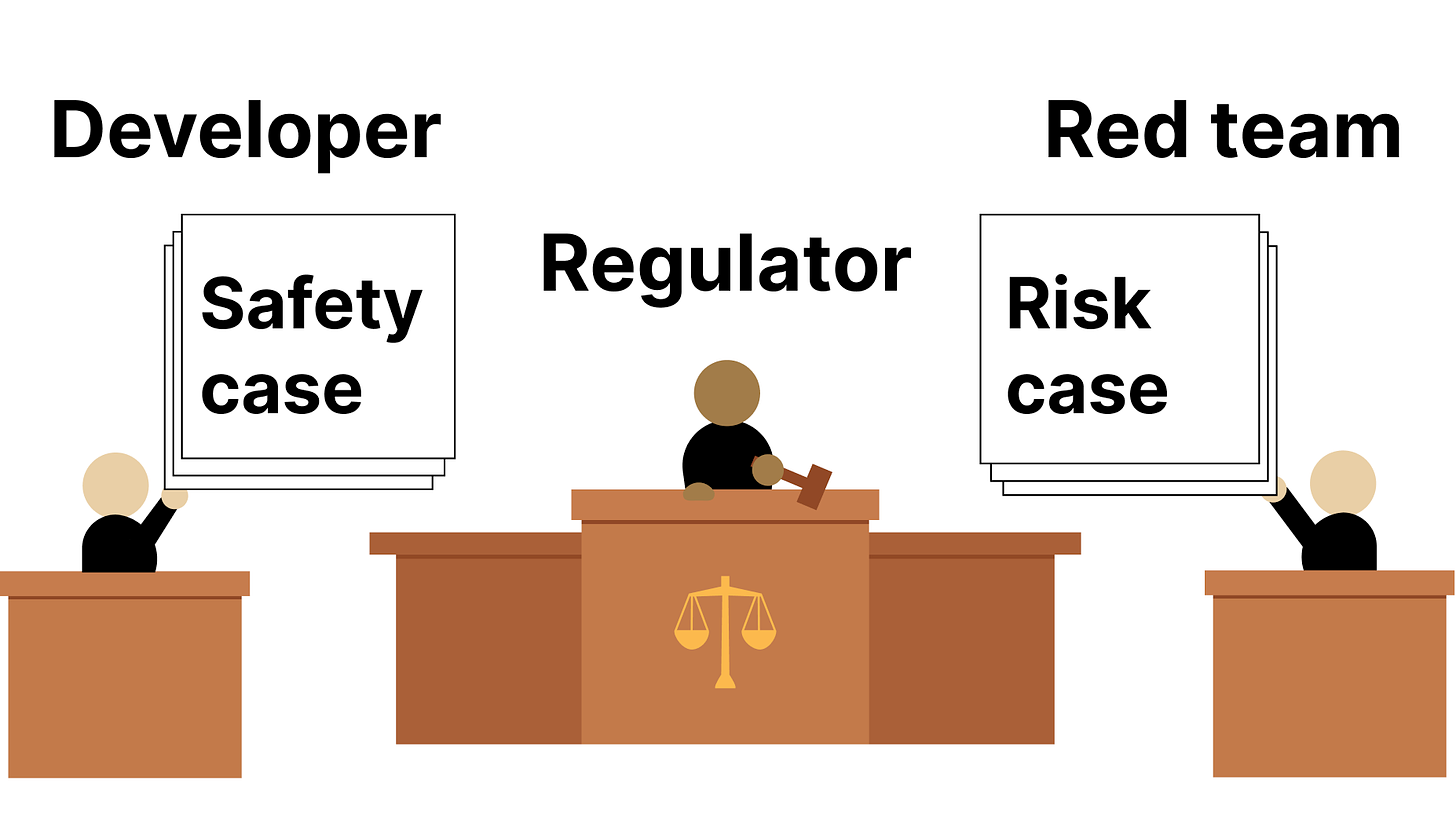

METR, formerly ARC Evals, releases new resources for evaluating AIs for risks from autonomous capabilities. Note that the evaluation process is labor intensive rather than automated.

Strengths:

- Compared to existing benchmarks, the difficulty range of tasks in our set reaches much higher, up to tasks that take experienced humans a week. We think it’s fairly unlikely that this task suite will saturate prematurely.

- All tasks have a difficulty estimate based on the estimated time for a human with the relevant expertise to complete the task. Where available, we use data from real human attempts.

- The tasks have individual quality indicators. The highest quality tasks have been manually vetted, including having humans run through the full task.

- The tasks should mostly not be memorized by current models; most of them were created from scratch for this suite.

- The tasks aim to isolate core abilities to reason, explore, and recover from errors, and to avoid cases where model performance is highly dependent on tooling, modality, or model “disposition”.

Limitations + areas for future work:

- There are currently only a small number of tasks, especially on the higher difficulty end. We would like to make a larger number of tasks, and add more tasks above the current difficulty range.

- The tasks are not that closely tied to particular threat models. They measure something more like “ability to autonomously do engineering and research at human professional level across a variety of domains”. We would like to make tasks that link more clearly to steps required in concrete threat models.

Cerebras WSE-3, claiming to be the world’s fastest AI chip, replacing the previous record holder, the WSE-2. Chips are $2.5 million to $2.8 million each. The person referring me to it says it can ‘train and tune a Llama 70b from scratch in a day.’ Despite this, I do not see anyone using it.

Infinity.ai, part of YC. The pitch is choose characters, write a script, get a video. They invite you to go to their discord and generate videos.

Guiding principles for the Mormon Church’s use of AI.

Spiritual Connection

1. The Church will use artificial intelligence to support and not supplant connection between God and His children.

2. The Church will use artificial intelligence in positive, helpful, and uplifting ways that maintain the honesty, integrity, ethics, values, and standards of the Church.

Transparency

3. People interacting with the Church will understand when they are interfacing with artificial intelligence.

4. The Church will provide attribution for content created with artificial intelligence when the authenticity, accuracy, or authorship of the content could be misunderstood or misleading.

Privacy and Security

5. The Church’s use of artificial intelligence will safeguard sacred and personal information.

Accountability

6. The Church will use artificial intelligence in a manner consistent with the policies of the Church and all applicable laws.

7. The Church will be measured and deliberate in its use of artificial intelligence by regularly testing and reviewing outputs to help ensure accuracy, truthfulness, and compliance.

The spiritual connection section is good cheap talk but ultimately content-free.

The transparency section is excellent. It is sad that it is necessary, but here we are. The privacy and security section is similar, and the best promise is #7, periodic review of outputs for accuracy, truthfulness and compliance.

Accountability starts with a promise to obey existing rules. I continue to be confused about the extent to which such reiterations of clear existing commitments matter in practice.

Here are some other words of wisdom offered:

Elder Gong gave two cautions for employees and service missionaries as they use AI in their work.

First, he said, they should avoid the temptation to use the speed and simplicity of AI to oversaturate Church members with audio and visual content.

Second, he said, is a reminder that the restored Church of Jesus Christ is not primarily a purveyor of information but a source of God’s truth.

These are very good cautions, especially the first one.

As always, the spirit of the rules and suggestions will dominate. If LDS or another group adheres to the spirit of these rules, the rules will work well. If not, the rules fail.

These kinds of rules will not by themselves prevent the existential AI dangers, but that is not the goal.

Here you go: the model weights of Grok-1.

Ethan Mollick: Musk’s Grok AI was just released open source in a way that is more open than most other open models (it has open weights) but less than what is needed to reproduce it (there is no information on training data).

Won’t change much, there are stronger open source models out there.

Thread also has this great Claude explanation of what this means in video game terms.

Dan Hendrycks: Grok-1 is open sourced.

Releasing Grok-1 increases LLMs’ diffusion rate through society. Democratizing access helps us work through the technology’s implications more quickly and increases our preparedness for more capable AI systems. Grok-1 doesn’t pose severe bioweapon or cyberweapon risks. I personally think the benefits outweigh the risks.

Ronny Fernandez: I agree on this individual case. Do you think it sets a bad precedent?

Dan Hendrycks: Hopefully it sets a precedent for more nuanced decision-making.

Ronny Fernandez: Hopes are cheap.

Grok seems like a clear case where releasing its weights:

- Does not advance the capabilities of open models.

- Does not pose any serious additional risks on the margin.

- Comes after a responsible waiting period that allowed us to learn these things.

- Also presumably does not offer much in the way of benefits, for similar reasons.

- Primarily sets a precedent on what is likely to happen in the future.

The unique thing about Grok is its real time access to Twitter. If you still get to keep that feature, then that could make this a very cool tool for researchers, either of AI or of other things that are not AI. That does seem net positive.

The question is, what is the precedent that is set here?

If the precedent is that one releases the weights if and only if a model is clearly safe to release as shown by a waiting period and the clear superiority of other open alternatives, then I can certainly get behind that. I would like it if there was also some sort of formal risk evaluation and red teaming process first, even if in the case of Grok I have little doubt what the outcome would be.

If the precedent effectively lacks this nuance and instead is simply ‘open up more things more often,’ that is not so great.

I worry that if the point of this is to signal ‘look at me being open’ that this builds pressure to be more open more often, and that this is the kind of vibe that is not possible to turn off when the time comes. I do however think the signaling and recruiting value of such releases is being overestimated, for similar reasons to why I don’t expect any safety issues.

Daniel Eth agrees that this particular release makes economic sense and seems safe enough, and notes the economics can change.

Jeffrey Ladish instead sees this as evidence that we should expect more anti-economic decisions to release expensive products. Perhaps this is true, but I think it confuses cost with value. Grok was expensive to create, but that does not mean it is valuable to hold onto tightly. The reverse can also be true.

Emad notes that of course Grok 1.0, the first release, was always going to be bad for its size, everyone has to get their feet wet and learn as they go, especially as they built their own entire training stack. He is more confident in their abilities than I am, but I certainly would not rule them out based on this.

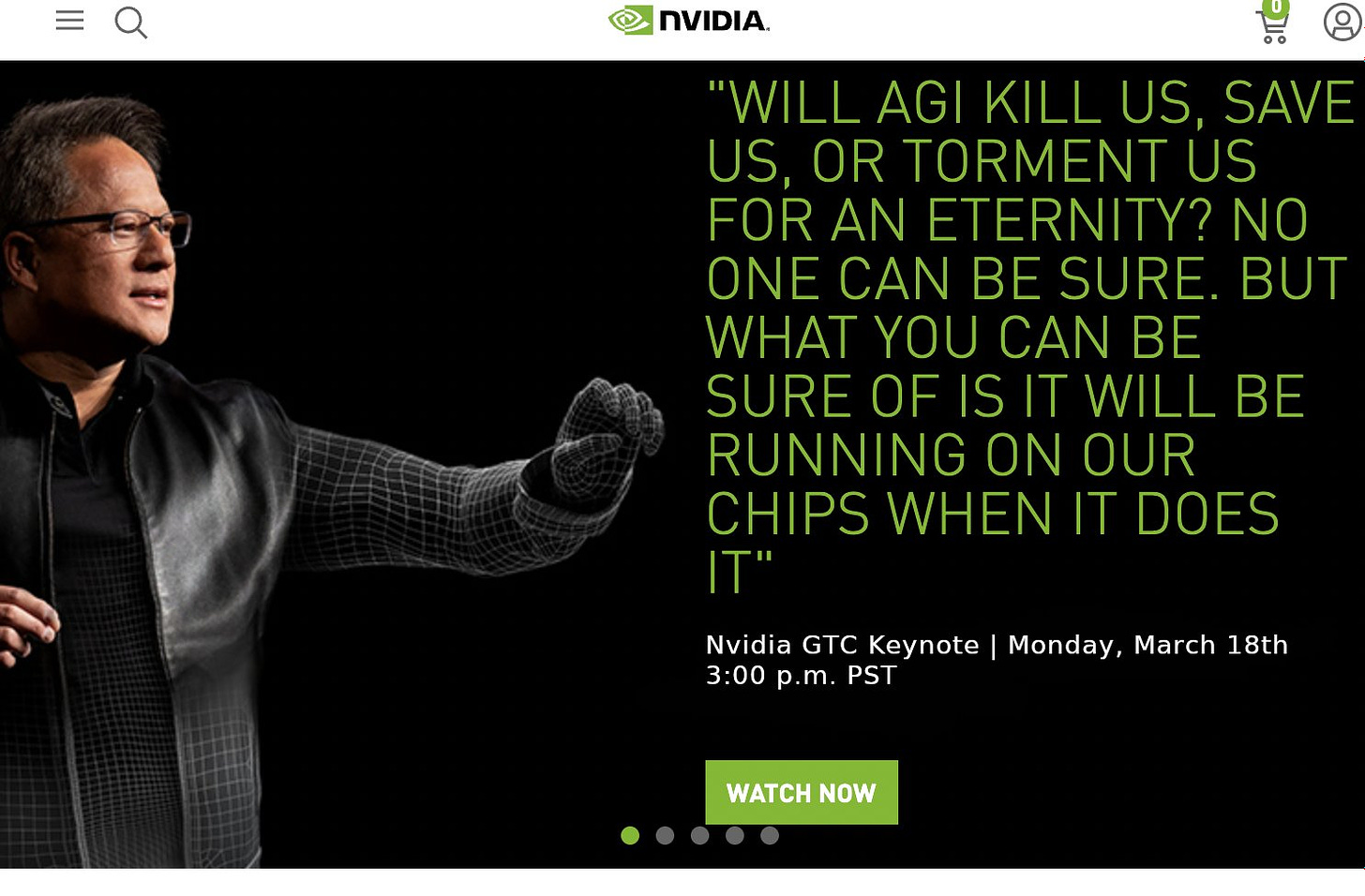

Nvidia unveils latest chips at ‘AI Woodstock’ at the home of the NHL’s San Jose Sharks.

The new chips, code-named Blackwell, are much faster and larger than their predecessors, Huang said. They will be available later this year, the company said in a statement. UBS analysts estimate Nvidia’s new chips might cost as much as $50,000, about double what analysts have estimated the earlier generation cost.

Ben Thompson notes that prices are going up far less than expected.

Bloomberg’s Jane Lanhee Lee goes over the new B200. According to Nvidia, Blackwell offers 2.5x Hopper’s performance in training AI, and once clustered into large modules will be 25 times more power efficient. If true, so much for electrical power being a key limiting factor.

There was a protest outside against… proprietary AI models?

From afar this looks like ‘No AI.’ Weird twist on the AI protest, especially since Nvidia has nothing to do with which models are or aren’t proprietary.

Charles Frye: at first i thought maybe it was against people using AI _for_ censorship, but im p sure the primary complaint is the silencing of wAIfus?

Your call what this is really about, I suppose.

Or, also, this:

NearCyan: Had a great time at GTC today.

I appreciate the honesty. What do you intend to do with this information?

(Besides, perhaps, buy Nvidia.)

Google intends to do the obvious, and offer the chips through Google Cloud soon.

Mustafa Suleyman leaves Inflection AI to become CEO of Microsoft AI.

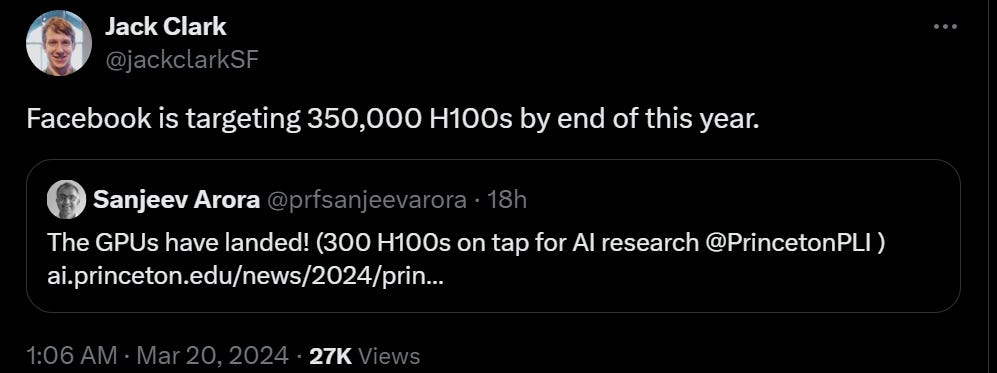

In Forbes, they note that ‘most of Inflection’s 70 employees are going with him.’ Tony Wang, a managing partner of venture capital firm 500 Global, describes this as ‘basically an acquisition of Inflection without having to go through regulatory approval.’ There is no word (that I have seen) on Inflection’s hoard of chips, which Microsoft presumably would have happily accepted but does not need.

Camilla Hodgson (Forbes): Inflection, meanwhile, will continue to operate under new chief executive Sean White, and pivot to providing its generative AI technology to businesses and developers, from a previous focus on its consumer chatbot Pi.

It also means not having to pay for the company, only for Suleyman and Hoffman, and the new salaries of the other employees. That’s a lot cheaper than paying equity holders, who recently invested $1.3 billion in Inflection, including Nvidia and Microsoft. Money (mostly) gone.

Microsoft’s stock was essentially unchanged in response. Investors do not view this as a big deal. That seems highly reasonable to me. Alternatively, it was priced in, although I do not see how.

Notice how much this rhymes with what Microsoft said it would do to OpenAI.

API support is being rolled out for Gemini 1.5 Pro.

Denmark enters collaboration with Nvidia to establish ‘national center for AI innovation’ housing a world-class supercomputer. It sounds like they will wisely focus on using AI to innovate in other places, rather than attempting to compete in AI.

Anthropic partners with AWS and Accenture.

Paper from Tim Fist looks at role compute providers could play in improving safety. It is all what one might describe as the fundamentals, blocking and tackling. It won’t get the job done on its own, but it helps.

Tim Fist: What are the things it’d actually be useful for compute providers to do? We look at a few key ones:

- Helping frontier model developers secure their model weights, code, and other relevant IP.

- Collecting useful data & verifying properties of AI development/deployment activities that are relevant for AI governance, e.g. compute providers could independently validate the compute threshold-based reporting requirements in the AI EO.

- Helping to actually enforce laws, e.g. cutting off compute access to an organization that is using frontier models to carry out large-scale cyber-attacks.

A very different kind of AI news summation service, that will give you a giant dump of links and happenings, and let you decide how to sort it all out. I find this unreadable, but I am guessing the point is not to read it, but rather to Ctrl-F it for a specific thing that you want to find.

Amazon builds a data center next to a nuclear power plant, as God intended.

Dwarkesh Patel: Amazon’s new 1000MW nuclear powered datacenter campus.

Dario was right lol

From our Aug 2023 interview:

“Dario Amodei (01:14:36):

There was a running joke that the way building AGI would look is, there would be a data center next to a nuclear power plant next to a bunker.

We’d all live in the bunker and everything would be local so it wouldn’t get on the Internet.”

Zvi: it was still on the internet.

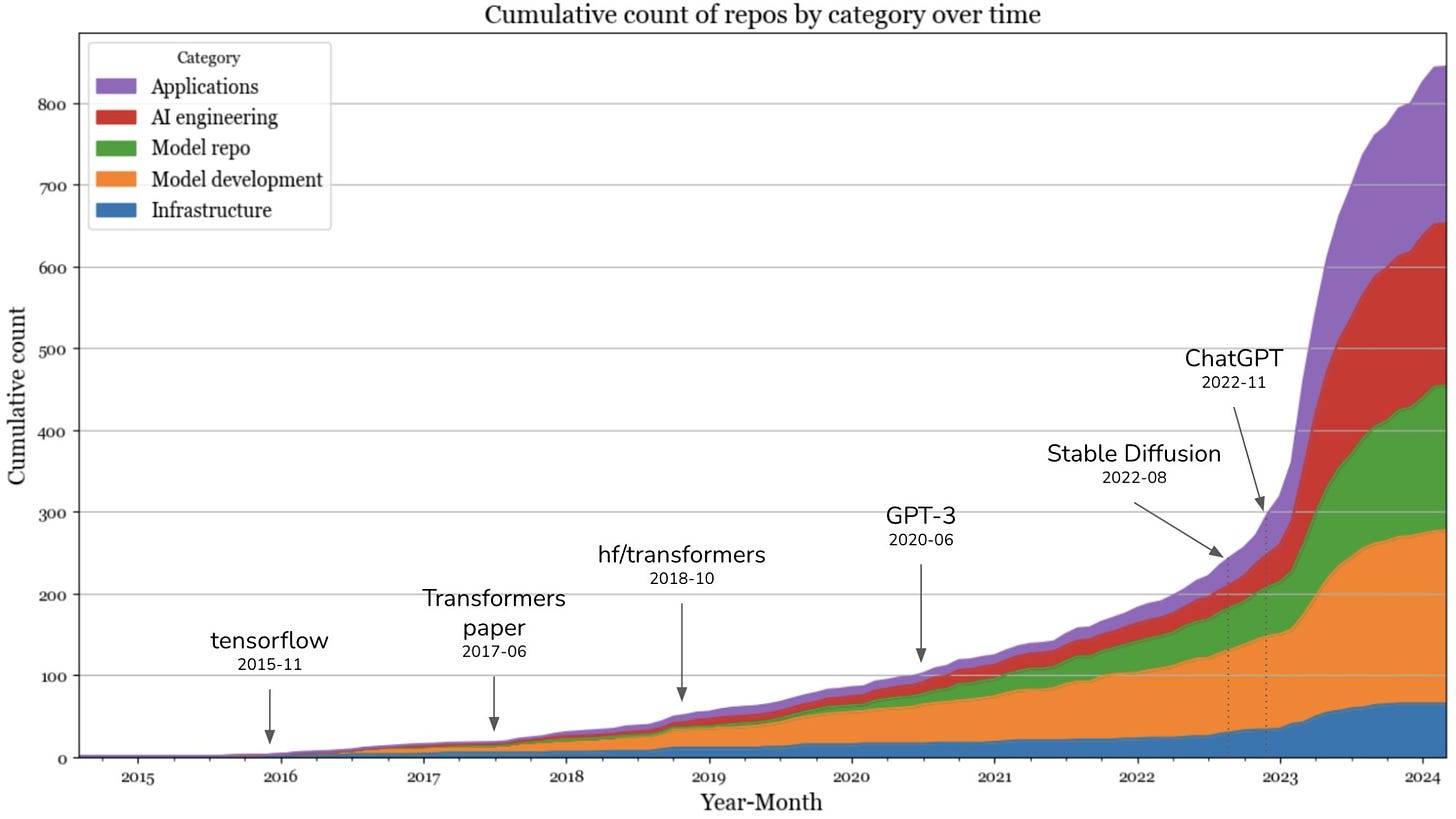

AI repos on GitHub continue to grow, but the first quarter of 2023 was when we saw the most rapid growth, as so many new possibilities opened up. Now we perhaps are seeing more of previous work coming to fruition?

Paul Graham: Interesting. The growth rate in generative AI repos peaked in the first quarter of 2023.

Chip Huyen: I went through the most popular AI repos on GitHub, categorized them, and studied their growth trajectories. Here are some of the learnings:

1. There are 845 generative AI repos with at least 500 stars on GitHub. They are built with contributions from over 20,000 developers, making almost a million commits.

2. I divided the AI stack into four layers: application, application development, model development, and infrastructure. The application and application development layers have seen the most growth in 2023. The infrastructure layer remains more or less the same. Some categories that have seen the most growth include AI interface, inference optimization, and prompt engineering.

3. The landscape exploded in late 2022 but seems to have calmed down since September 2023.

4. While big companies still dominate the landscape, there’s a rise in massively popular software hosted by individuals. Several have speculated that there will soon be billion-dollar one-person companies.

5. The Chinese’s open source ecosystem is rapidly growing. 6 out of 20 GitHub accounts with the most popular AI repos originate in China, with two from Tsinghua University and two from Shanghai AI Lab.

[Full analysis here.]

Apple is in talks to let Google Gemini power iPhone AI features. This would be a huge boon for Google, although as the article notes there are already various antitrust investigations going on for those two. The claims are in my opinion rather bogus, but this deal would not look good, and bogus claims sometimes stick. So Google must have a big edge in other areas to be able to get the deal anyway over Anthropic and OpenAI. Apple continues to work on developing AI, and released MM1, a family of multimodal LLMs up to 30B parameters they claim is SOTA on multiple benchmarks (a much weaker claim than it sounds like), but in the short term they likely have no choice but to make a deal.

I see the argument that Apple building its own stack could ultimately give it an advantage, but from what I can see they are not in good position.

Late to the party, Francesca Block and Olivia Reingold write that Gemini’s problems are not only not a mistake, but reflect what Google has made itself about.

These ex-Googlers, as they’re called, said that they were discouraged from hiring white, male employees; that DEI “is part of every single thing” in the company; and that engineers even had to list the “DEI impact” for the tiniest of software fixes.

…

But the ex-staffers we spoke to said they know exactly how the technology became so biased.

“The model is just a reflection of the people who trained it,” one former AI researcher at Google Brain, who asked not to be named, told us. “It’s just a series of decisions that humans have made.”

Everything in the post, if true, suggests a deeply illegal, discriminatory and hostile work environment that is incompatible with building competitive projects. That does not mean I know such claims are accurate.

One year since GPT-4. What is the mindset of those at OpenAI about this?

Mira Murati: One year since GPT-4 deployment: From GPT-1 and 2 establishing the language model paradigm, through GPT-3’s scaling predictions, to GPT-4 showing how complex systems emerge, mimicking nature’s unpredictable patterns from simple elements. An exploration from observation to deep, emergent intelligence.

Leopold Aschenbrenner: One year since GPT-4 release. Hope you all enjoyed some time to relax; it’ll have been the slowest 12 months of AI progress for quite some time to come.

Sam Altman: this is the most interesting year in human history, except for all future years

Deep Fates (responding to Altman): There’s a lot of future years, right?

Acting as if the competition is not at issue would be an excellent thing, if true.

The expectation of rapid progress and ‘interesting times’ as an inside view is bad news. It is evidence of a bad state of the world. It is not itself bad. Also, could be hype. There is not zero hype involved. I do not think it is mostly hype.

Here are some more Altman predictions and warnings, but I repeat myself. And yes, this echoes his previous statements, but it is very much worth repeating.

Hell of a thing to say that something is expected to exceed expectations.

Or that you will ‘replace and erase various areas of business and daily life.’

Bold is mine.

Burny Tech: New details about GPT-5 from Sam Altman He’s basically admitting that GPT-5 will be a massive upgrade from GPT-4, so we can expect a similar jump from 3 to 4. ““If you overlook the pace of improvement, you’ll be ‘steamrolled’… Altman is confident in the performance of GPT-5 and issues a warning”

[Silicon Valley Special Correspondent Group Interview] Ignoring the extent of improvement leads to obsolescence in business deployment The GPT model is developing without limits AGI scientific research continues to be a driving force for a sustainable economy

Sam Altman, CEO of OpenAI, warned against the “innovation delay” by overlooking the extent of performance improvement of GPT-5, which is expected to exceed expectations. He emphasized the need for newer thinking as the next model of GPT is developed, replacing and erasing various areas of business as well as daily life. It is virtually the first time CEO Altman has given such a confident ‘signal’ about the performance of GPT-5. He made it clear that building ‘General Artificial Intelligence (AGI)’ is his and OpenAI’s goal, suggesting that if a vast amount of computing resources are invested to hasten the arrival of AGI, then the problems currently faced, such as the energy crisis required for AI operations, will be easily resolved.

Sam Altman (left), CEO of OpenAI, is having a conversation with participating startups at the ‘K-Startup·OpenAI Matching Day’ held at the 1960 Building in San Francisco, USA, on March 14 (local time). Photo provided by OpenAI.

On March 14 (local time), during a meeting with the Korean Silicon Valley correspondent group, CEO Altman mentioned, “I am not sure when GPT-5 will be released, but it will make significant progress as a model taking a leap forward in advanced reasoning capabilities. There are many questions about whether there are any limits to GPT, but I can confidently say ‘no’.” He expressed confidence that if sufficient computing resources are invested, building AGI that surpasses human capabilities is entirely feasible.

CEO Altman also opined that underestimating the improvement margin of the developing GPT-5 and deploying business accordingly would be a big mistake. This implies that the improvement margin of GPT-5 is beyond imagination. He mentioned, “Many startups are happy assuming that GPT-5 will only make slight progress rather than significant advancements (since it presents more business opportunities), but I think this is a big mistake. In this case, as often happens when technological upheavals occur, they will be ‘steamrolled’ by the next-generation model.”

Altman appeared to have no interest other than in ‘building AGI’. His interest seems to have faded in other technologies, including blockchain and biotechnology, beyond AI. He said, “In the past, I had a broad perspective on everything happening in the world and could see things I couldn’t from a narrow perspective. Unfortunately, these days, I am entirely focused on AI (AI all of the time at full tilt), making it difficult to have other perspectives.”

Recently, CEO Altman has been working towards innovating the global AI infrastructure, sparking discussions with rumors of ‘7 trillion dollars in funding’. He said, “Apart from thinking about the next-generation AI model, the area where I’ve been spending most of my time recently is ‘computing construction,’ as I’m increasingly convinced that computing will become the most important currency in the future. However, the world has not planned for sufficient computing, and failing to confront this issue, pondering what is needed to build an enormous amount of computing as cheaply as possible, poses a significant challenge.” This indicates a major concern about securing computational resources for implementing AGI.”

That is big talk.

Also it constrains your expectations on GPT-5’s arrival time. It is far enough in the future that they will have had time to train and hopefully test the model, yet close enough he can make these predictions with confidence.

I do think the people saying ‘GPT-5 when? Where is my GPT-5?’ need to calm down. It has only been a year since GPT-4. Getting it now would be extraordinarily fast.

Yes, OpenAI could choose to call something unworthy GPT-5, if it wanted to. Altman is very clearly saying no, he is not going to do that.

What else to think based on this?

Alex Tabarrok: Buckle your seatbelts, AGI is nearly here.

Robin Hanson: “it will make significant progress” is a LONG way from AGI.

Alex Tabarrok: “There are many questions about whether there are any limits to GPT, but I can confidently say ‘no’.” He expressed confidence that if sufficient computing resources are invested, building AGI that surpasses human capabilities is entirely feasible.”

Robin Hanson: “No limits” doesn’t say anything about timescales. The gains he sees don’t reveal to him any intrinsic limits, fine. Doesn’t mean we are close, or that there aren’t actually intrinsic limits.

I am somewhere in between here. Clearly Altman does not think GPT-5 is AGI. How many similar leaps before something that would count?

Is Anthropic helping the cause here? Roon makes the case that it very much isn’t.

Roon: Anthropic is controlled opposition to put the fear of God in the members of technical staff.

Elon Musk made a second prediction last week that I only now noticed.

Elon Musk (March 13, 2024): It will take at least a decade before a majority of cars are self-driving, but this is a legitimate concern in that time horizon.

Of more immediate concern is that it is already possible to severely ostracize someone simply by freezing their credit cards and bank account, as happened, for example, in Canada with the trucker protest.

Elon Musk (March 12, 2024): AI will probably be smarter than any single human next year. By 2029, AI is probably smarter than all humans combined.

Baby, if you are smarter than all humans combined, you can drive my car.

These two predictions do not exist in the same coherent expected future. What similar mistakes are others making? What similar mistakes are you perhaps making?

How will AI impact the danger of cyberattacks in the short term? Dan Hendrycks links to a Center for AI Safety report on this by Steve Newman. As he points out, AI helps both attackers and defenders.

Attackers are plausibly close to automating the entire attack chain, and getting to the point where AI can do its own social engineering attacks. AI can also automate and strengthen defenders.

If the future were evenly distributed, and everyone was using AI, it is unclear what net impact this would have on cybersecurity in the short term. Alas, the future is unevenly distributed.

In principle, progress might, on balance, favor defense. A system designed and operated by an ideal defender would have no vulnerabilities, leaving even an ideal attacker unable to break in. Also, AI works best when given large amounts of data to work with, and defenders generally have access to more data. However, absent substantial changes to cyber practices, we are likely to see many dramatic AI-enabled incidents.

The primary concern is that advances in defensive techniques are of no help if defenders are not keeping up to date. Despite decades of effort, it is well known that important systems are often misconfigured and/or running out-of-date software. For instance, a sensitive application operated by credit report provider Equifax was found in 2017 to be accessible to anyone on the Internet, simply by typing “admin” into the login and password fields. A recent CISA report notes that this government agency often needs to resort to subpoenas merely to identify the owners of vulnerable infrastructure systems, and that most issues they detect are not remediated in the same year.

In the previous world, something only got hacked when a human decided to pay the costs of hacking it. You can mock security through obscurity as Not the Way all you like, it is still a central strategy in practice. So if we are to mitigate, we will need to deploy AI defensively across the board, keeping pace with the attackers, despite so many targets being asleep at the wheel. Seems both important and hard. The easy part is to use AI to probe for vulnerabilities without asking first. The hard part is getting them fixed once you find them. As the report suggests, we need, even more than in the past, to push automated updates and universal defenses that patch vulnerabilities without depending on targets being on the ball.

Also suggested are reporting requirements for safety failures, and cultivating a safety culture in the places where security mindset is most needed yet often lacking. Ideally, those releasing tools that enable attackers would take care to at least disclose what they are doing, and better yet work first to enable defenses. Attackers will always find lots of places they can ‘get there first’ by default.

In a grand sense none of these patterns are new. What this does is amplify and accelerate what was already the case. However, that can make a huge difference.

Generalizing from cybersecurity to the integrity of essentially everything in how our society functions (and a reminder: this is only a short-term, mundane threat model, after that it definitely gets a lot weirder and probably more dangerous), we have long had broad tolerance for vulnerabilities. If someone wants to break or abuse the rules, to play the con artist or trickster, to leverage the benefit of the doubt that we constantly give people, they can do that for a while. Usually, in any given case, they will get away with it, and people with obvious patterns can keep doing it for a long time – see Lex Fridman’s interview with Matt Cox, or the story chronicled in the Netflix movies Queenpins or Emily the Criminal.

The reason such actions are rare is (roughly, incompletely) that usually is not always, and those who keep doing this will eventually be caught or otherwise the world adjusts to them, and they are only human so they can only do so much or have so much upside, and they must fear punishment, and most people are socialized to not want to do this or not to try in various ways, and humans evolved to contain such issues with social norms and dynamics and various techniques.

In the age of AI, once sufficient automation of the attack vectors means the interaction is no longer rate limited by the human behind the operation, and especially if there is no requirement for a particular person to put themselves ‘on the hook’ in order to do the thing, then we can no longer tolerate such loopholes. We will have to modify every procedure such that it cannot be gamed in such fashion.

This is not all bad. In particular, consider systems that rely on people largely being unaware or lazy or stupid or otherwise playing badly for them to function, that prey on those who do not realize what is happening. Those, too, may stop working. And if we need to defend against anti-social AI-enabled behaviors across the board, we also will be taking away rewards to anti-social behaviors more generally.

A common question in AI is ‘offense-defense balance.’ Can the ‘good guy with an AI’ stop the ‘bad guy with an AI’? How much more capability or cost than the attacker spends does it take to defend against that attack?

Tyler Cowen asks about a subset of this, drone warfare. Does it favor offense or defense? The answer seems to be ‘it’s complicated.’ Austin Vernon says it favors defense in the context of strongly defended battle lines. But it seems to greatly favor offense in other contexts, when there would otherwise not need to be strong defense. Think not only Russian oil refineries, but also commercial shipping such as through the Suez Canal versus the Houthis. Also, the uneven distribution of the future matters here as well. If only some have adapted to the drone era, those that have not will have a bad time.

Dan Hendrycks also issues another warning that AI might be under military control within a few years. They have the budget, they could have the authority and the motivation to require this, and hijack the supply chain and existing companies. If that is in the mix, warning of military applications or dangers or deadly races or runaway intelligence explosions could backfire, because the true idiot disaster monkeys would be all-in on grabbing that poisoned banana first, and likely would undo all the previous safety work for obvious reasons.

I still consider this unlikely if the motivation is also military. The military will lack the expertise, and this would be quite the intervention with many costs to pay on many levels, including economic ones. The people could well rebel if they know what is happening, and you force the hand of your rivals. Why risk disturbing a good situation, when those involved don’t understand why the situation is not so good? It does make more sense if you are concerned that others are putting everyone at risk, and this is used as the way to stop that, but again I don’t expect those involved to understand enough to realize this.

The idea of Brexit was ‘take back control,’ and to get free of the EU and its mandates and regulations and requirements. Yes, it was always going to be economically expensive in the short term to leave the EU, to the point where all Very Serious People called the idea crazy, but if the alternative was inevitable strangulation and doom in various ways, then that is no alternative at all.

Paul Graham: Brexit may yet turn out to have been a good idea, if it means the UK can be the Switzerland of AI.

It would be interesting if that one thing, happening well after Brexit itself, ended up being the dominant factor in whether it was a good choice or not. But history is full of such cases, and AI is a big enough deal that it could play such a role.

Dominic Cummings: Vote Leave argued exactly this, and that the EU would massively screw up tech regulation, in the referendum campaign 2015-16. It’s a surprise to almost all how this has turned out but not to VL…

It is not that they emphasized tech regulation at the time. They didn’t, and indeed used whatever rhetoric they thought would work, generally doing what would cut the enemy, rather than emphasizing what they felt were the most important reasons.

It is that this was going to apply to whatever issues and challenges came along.

Admittedly, this was hard to appreciate at the time.

I was convinced by Covid-19. Others needed a second example. So now we have AI.

Even if AI fizzles and the future is about secret third thing, what is the secret third thing the future could be centrally about where an EU approach to the issue would have given the UK a future? Yes, the UK might well botch things on its own, it is not the EU’s fault no one has built a house since the war, but also the UK might do better.

How bad is the GDPR? I mean, we all know it is terrible, but how much damage does it do? A paper from February attempts to answer this.

From the abstract: Our difference-in-difference estimates indicate that, in response to the GDPR, EU firms decreased data storage by 26% and data processing by 15% relative to comparable US firms, becoming less “data-intensive.”

To estimate the costs of the GDPR for firms, we propose and estimate a production function where data and computation serve as inputs to the production of “information.”

We find that data and computation are strong complements in production and that firm responses are consistent with the GDPR, representing a 20% increase in the cost of data on average.
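For anyone unfamiliar with the method the abstract names, here is a minimal sketch of a difference-in-differences estimate. The numbers are invented purely for illustration and are not the paper's data, and the function name is hypothetical. The paper's actual specification is surely more involved than this two-by-two comparison.

```python
def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Change in the treated group minus change in the control group.

    The control group's change stands in for what would have happened
    to the treated group without the policy.
    """
    treated_change = treated_after - treated_before
    control_change = control_after - control_before
    return treated_change - control_change


# Hypothetical data-storage index (made-up numbers, NOT from the paper).
eu_before, eu_after = 100, 90    # treated: EU firms, subject to the GDPR
us_before, us_after = 100, 120   # control: comparable US firms

effect = diff_in_diff(eu_before, eu_after, us_before, us_after)
print(effect)  # -30: EU firms fell 30 index points relative to the US trend
```

The point of the design is that a raw before-and-after comparison of EU firms would understate the effect here, since everyone's data use was otherwise growing.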

Claude estimated that data costs are 20% of total costs, which is of course a wild guess but seems non-crazy, which would mean a 4% increase in total costs. That should not alone be enough to sink the whole ship or explain everything we see, but it also does not have to, because there are plenty of other problems as well. It adds up. And that is with outside companies having to bear a substantial portion of GDPR costs anyway. That law has done a hell of a lot of damage while providing almost zero benefit.
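The arithmetic behind that 4% can be sketched as follows. This is a first-order approximation that assumes firms keep buying the same amount of data, which the paper says they do not, so treat it as a rough upper-end figure. The 20% data share is Claude's guess from above, not a measured number, and the function name is hypothetical.

```python
def total_cost_increase(data_share: float, data_cost_increase: float) -> float:
    """Fractional rise in total costs when only the data portion gets pricier.

    Assumes quantities are held fixed (no substitution away from data).
    """
    return data_share * data_cost_increase


increase = total_cost_increase(
    data_share=0.20,          # assumed: data is 20% of total costs (a wild guess)
    data_cost_increase=0.20,  # from the paper: GDPR raises data costs by 20%
)
print(f"{increase:.1%}")  # 4.0%
```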

How bad could it get in the EU? Well, I do not expect it to come to this, but there are suggestions.

Krzysztof Tyszka-Drozdowski: The former French socialist education minister @najatvb suggested yesterday in ‘Le Figaro’ that the best way to combat fake news, screen addiction, and deepfakes is for everyone to have an internet limit of 3 GB per week. Socialism is a sickness.

On the plus side, this would certainly motivate much higher efficiency in internet bandwidth use. On the negative side, that is completely and utterly insane.

What do we know and when will we know it? What are we implying?

David Manheim: Notice the ridiculous idea that we know the potential of AI, such that we can harness it or mitigate risks.

We don’t have any idea. For proof, look at the track records of people forecasting benchmarks, or even the class of benchmark people will discuss, just 2-3 years out.

Department of State: If we can harness all of the extraordinary potential in artificial intelligence, while mitigating the downsides, we will advance progress for people around the world. – @SecBlinken, Secretary of State

I mean, Secretary Blinken is making a highly true statement. If we can harness all of AI’s potential and mitigate its downsides, we will advance progress for people around the world.

Does this imply we know what that potential is or what the downsides are? I see why David says yes, but I would answer no. It is, instead, a non-statement, a political gesture. It is something you could say about almost any new thing, tech or otherwise.