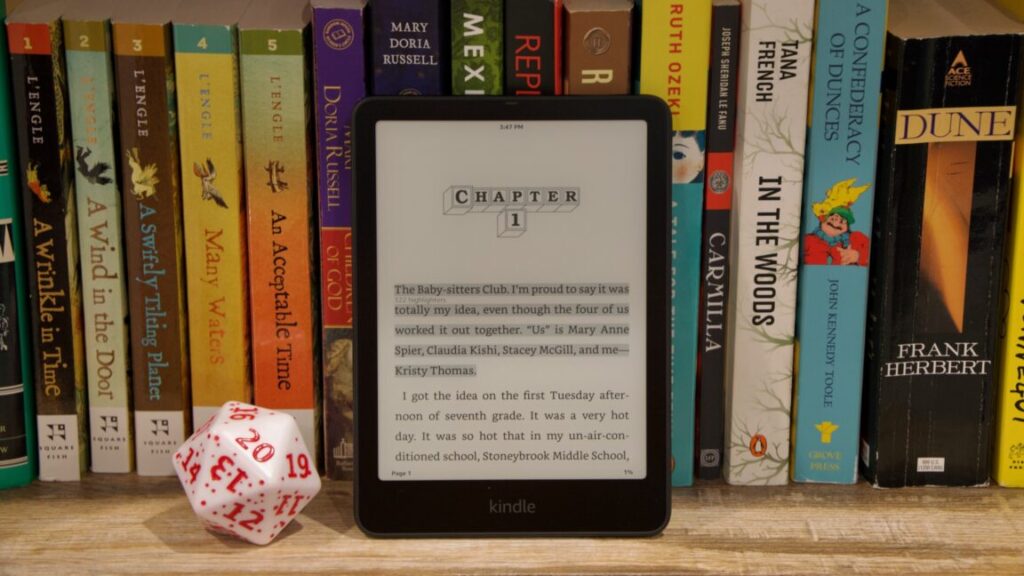

Review: Amazon’s 2024 Kindle Paperwhite makes the best e-reader a little better

A fast Kindle?

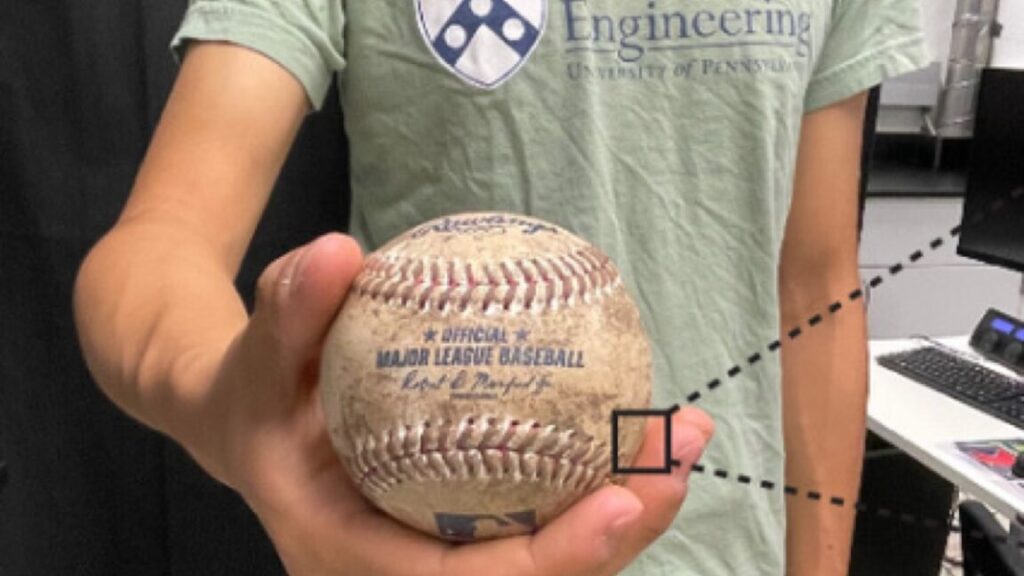

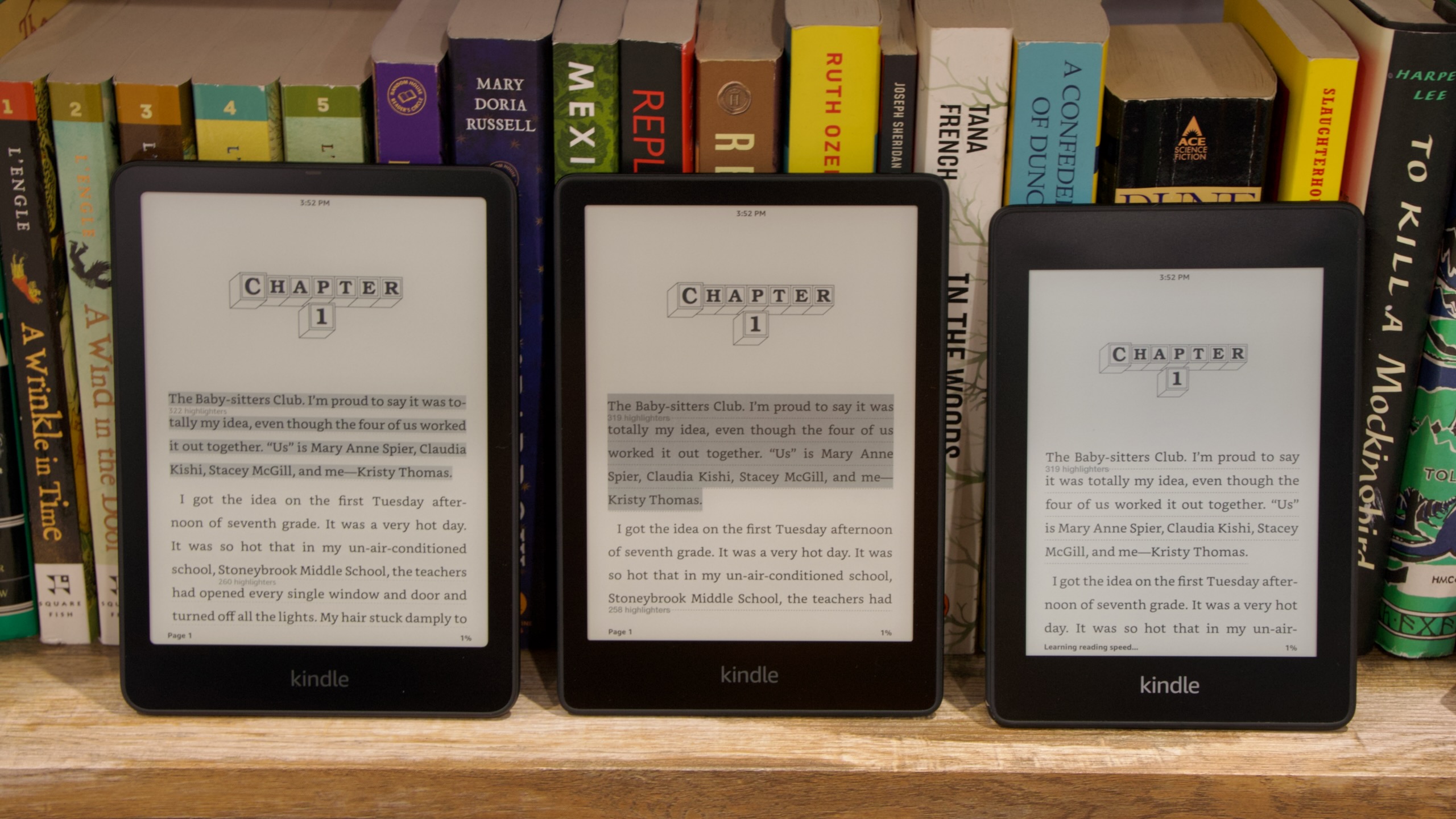

From left to right: 2024 Paperwhite, 2021 Paperwhite, and 2018 Paperwhite. Note not just the increase in screen size, but also how the screen corners get a little more rounded with each release. Credit: Andrew Cunningham

I don’t want to oversell how fast the new Kindle is, because an E-Ink screen still can’t compete with an LCD or OLED panel for smooth animations or UI responsiveness. But even compared to the 2021 Paperwhite, tapping buttons, opening menus, opening books, and turning pages all feel considerably snappier. It’s not quite instantaneous, but it’s free of the unexplained pauses and hesitation that longtime Kindle owners will be accustomed to. For those who type out notes in their books, even the onscreen keyboard feels fluid and responsive.

Compared to the 2018 Paperwhite (again, the first waterproofed model, and the last one with a 6-inch screen and micro USB port), the difference is night and day. The older Kindle still feels basically fine for reading books, but it can sometimes pause for so long when opening menus or switching between things that I wonder whether it’s still working or has locked up entirely.

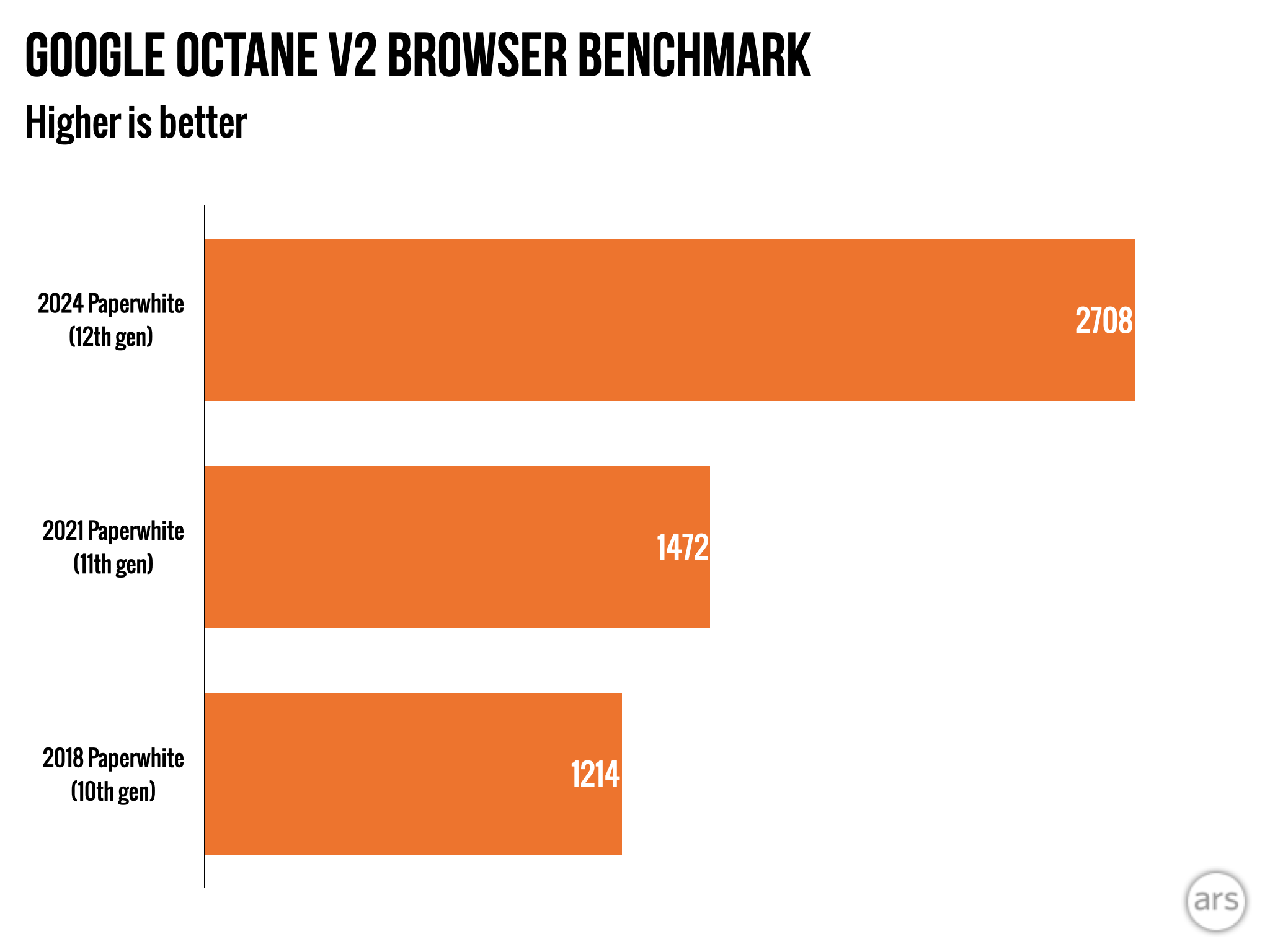

“Kindle benchmarks” aren’t really a thing, but I attempted to quantify the performance improvements by running some old browser benchmarks using the Kindle’s limited built-in web browser and Google’s ancient Octane 2.0 test—the 2018, 2021, and 2024 Kindles are all running the same software update here (5.17.0), so this should be a reasonably good apples-to-apples comparison of single-core processor speed.

The new Kindle is actually way faster than older models. Credit: Andrew Cunningham

The 2021 Kindle was roughly 30 percent faster than the 2018 Kindle. The new Paperwhite is nearly twice as fast as the 2021 Paperwhite, and well over twice as fast as the 2018 Paperwhite. That alone is enough to explain the tangible difference in responsiveness between the devices.

Turning to the new Paperwhite’s other improvements: compared side by side, the new screen is appreciably bigger, more noticeably so than the 0.2-inch size difference might suggest. The larger screen doesn’t make the Paperwhite much bigger overall, though the device is a tiny bit taller, enough to break compatibility with existing cases. But you only really appreciate the upgrade if you’re coming from one of the older 6-inch Kindles.
