Secretary of War Tweets That Anthropic is Now a Supply Chain Risk

This is the long version of what happened so far. I will strive for shorter ones later, when I have the time to write them.

Most of you should read the first two sections, then choose the remaining sections that are relevant to your interests.

But first, seriously, read Dean Ball’s post Clawed. Do that first. I will not quote too extensively from it, because I am telling all of you to read it. Now. You’re not allowed to keep reading this or anything else until after you do. I’m not kidding.

That’s out of the way? Good. Let’s get started.

-

Altman Has Been Excellent On The Question of Supply Chain Risk, But May Need To Do More.

-

The Demand For Unrestricted Access Is New And Is Selective And Fake.

-

Claims Of Strongarming Are Ad Hominem Bad Faith Obvious Nonsense.

-

The Part That If Enacted Would Be A Historically Epic Clusterfuck.

-

Why OpenAI’s Shared Legal Language Offers Almost No Protections.

-

Altman Does Not Present As Understanding The Difference In Redlines.

-

Anthropic’s Position Was The Opposite Of How This Is Portrayed.

-

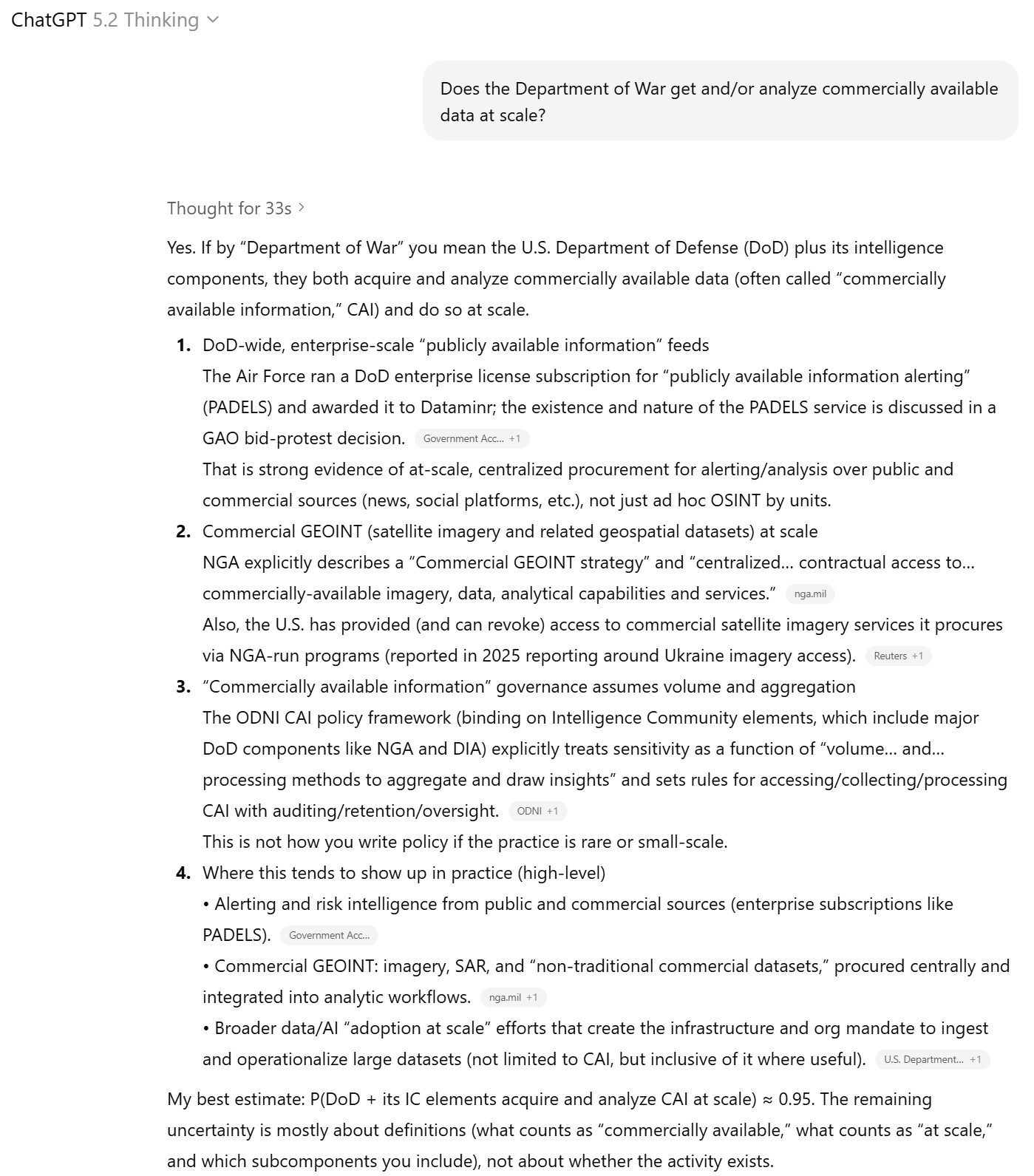

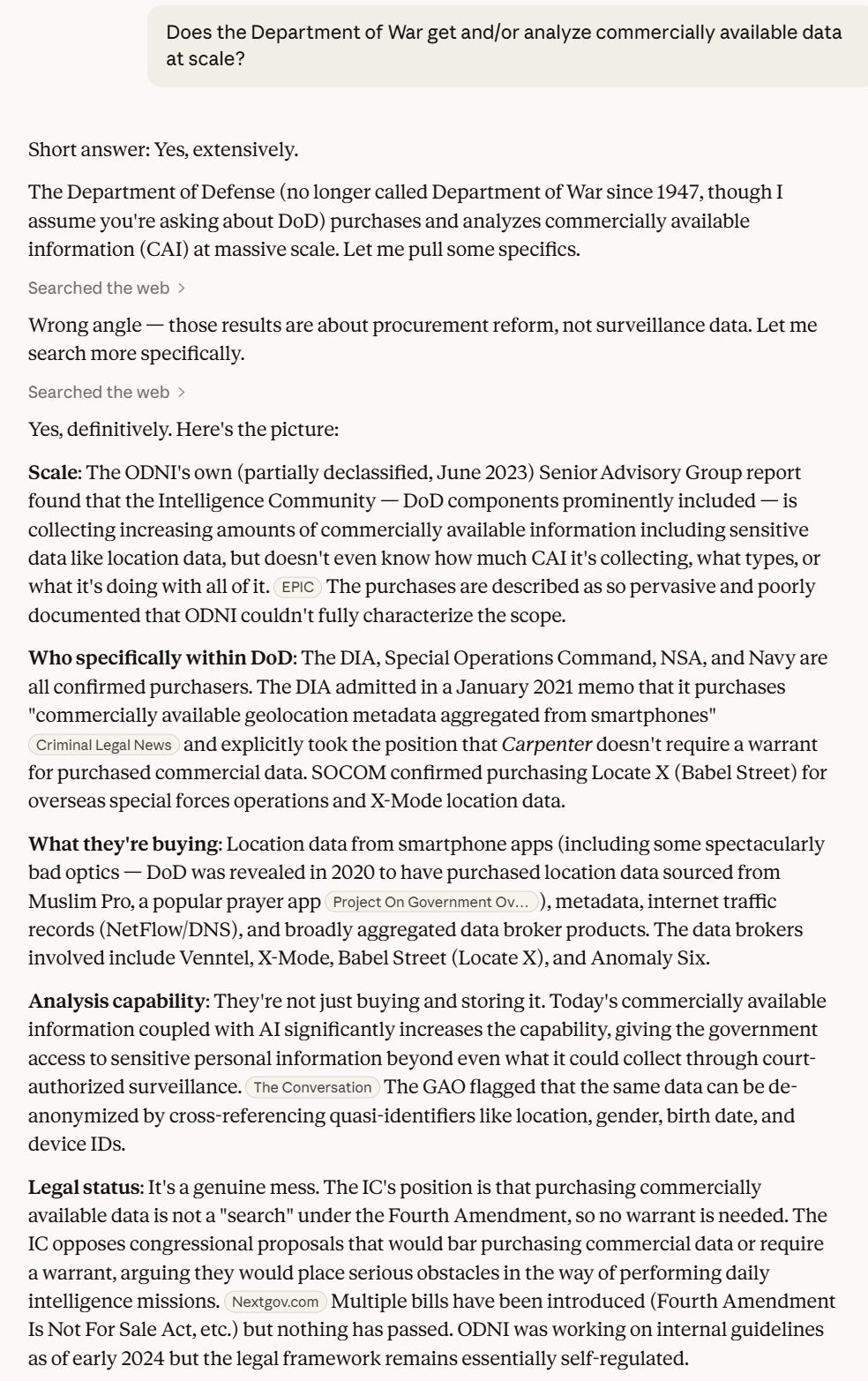

Can OpenAI Models Be Used To Analyze Commercially Available Data At Scale?

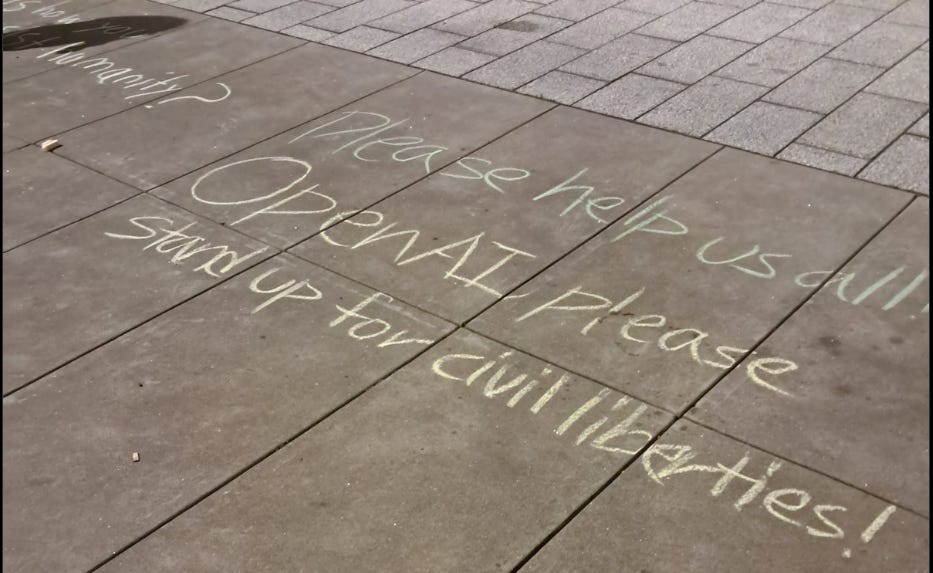

President Trump enacted a perfectly reasonable solution to the situation with Anthropic and the Department of War. He cancelled the Anthropic contract with a six-month wind-down period, after which the Federal Government would be told not to use Anthropic software.

Everyone thought the worst was now over. The situation was unfortunate for Anthropic and also for national security, but this gave us six months to transition, it gave us six months to negotiate another solution, and it avoided any of the extreme highly damaging options that Secretary of War Pete Hegseth and lead negotiator Emil Michael had placed upon the table.

Anthropic would be fine without government business, and the government would mostly be fine without directly using Anthropic. Face was saved.

I have sources that confirm that Trump’s announcement was wisely intended as an off-ramp and de-escalation of the situation, and that it was intended to be the end of it, or perhaps even a deal could have still been reached now that everyone could breathe.

An hour after that, on his own, Pete Hegseth went rogue and blew the whole situation up, illegally announcing that, ‘effective immediately,’ he was declaring Anthropic a Supply Chain Risk, and that anyone who did business with the Department of War in any capacity could not use Anthropic’s products in any capacity.

Even if it had not been issued via a Tweet, this is not how the law actually works.

If this is implemented as stated, it will cause a market bloodbath and immense damage to our national security and supply chain. It would be attempted corporate murder with a global blast radius.

Thankfully it probably won’t be anything close to that.

Probably.

The market understands that this is not how any of this works, so the reaction was for now relatively muted, as only about $150 billion was wiped from public markets in later post-close trading. I believe that is an underreaction based on the chilling effects and damage already done, but we will never know the true market impact because events have already been confounded.

I hope for the best on that front, but the danger remains.

We must be vigilant until the coast is clear, and we must prepare for the worst. Pete Hegseth cannot be allowed to commit corporate murder.

Outcomes like this usually don’t happen exactly because people realize they would otherwise happen, and prevent them.

What was that all about?

Ross Andersen: On Friday afternoon, Anthropic learned that the Pentagon still wanted to use the company’s AI to analyze bulk data collected from Americans. That could include information such as the questions you ask your favorite chatbot, your Google search history, your GPS-tracked movements, and your credit-card transactions, all of which could be cross-referenced with other details about your life.

Anthropic’s leadership told Hegseth’s team that was a bridge too far, and the deal fell apart.

Okay, what was that all about?

We don’t know. I have sources saying that Doge is driving this, and I have other speculations, but ultimately we don’t know what they want this capability for. What we do know is that they blew the whole situation up over this question. There must have been a reason.

Whatever that was, or an actual outright attempt to murder Anthropic, is what this is all about. It’s not a matter of principle.

Then, later that night, OpenAI accepted a contract with the Department of War. They claimed that very day that they had the same red lines as Anthropic, yet they seem to have accepted the same language Anthropic rejected as not meaningful, as confirmed by Jeremy Lewin.

How did OpenAI negotiate such a deal in two days? My interpretation of OpenAI’s public statements is that they consider any action crossing their red lines to already be illegal, and thus there are no uses that they would consider both legal and unacceptable, and that it is not their place to make that determination.

But that’s not what matters. The contract terms here ultimately don’t matter.

What matters is that OpenAI and the Department of War are trusting each other. OpenAI is giving DoW a replacement that allows them to offboard Anthropic without overly disrupting national security, and trusting DoW to decide what to do with that tech and to not do anything too illegal.

DoW is trusting OpenAI to deliver a good model and let them do what they operationally need to do and not suddenly start tripping the safety mechanisms. Forward engineers and the safety stack will trust but verify, and Altman claims he stands ready to pull the plug if DoW goes too far.

All of OpenAI’s meaningful safeguards are in the security stack, and its right to choose what model to deliver and pull the plug. Which means they’re in contract language we may not ever see.

I believe that the way they presented that deal and the situation has been misleading enough to cost me and a lot of others a lot of sleep, but it now seems clear.

OpenAI’s employees need to investigate the technical provisions and ask whether the red lines they personally care about are meaningfully protected, and whether they wish to be part of what is happening given the circumstances.

Even more than that, it is not clear whether OpenAI’s attempted de-escalation of the situation de-escalated it, or escalated it further by giving Hegseth a green light.

Indeed, the New York Times thinks exactly that happened:

Sheera Frenkel, Cade Metz and Julian E. Barnes: Mr. Michael was unhappy with that answer, the people said. He also had an ace up his sleeve: On the side, he had been hammering out an alternative to Anthropic with its rival, OpenAI. A framework between the Pentagon and OpenAI had already been reached.

So when the Friday deadline passed, the Department of Defense did not give Anthropic more time. At 5:14 p.m., Mr. Hegseth announced that he had designated Anthropic as a security risk and that it would be cut off from working with the U.S. government. “America’s warfighters will never be held hostage by the ideological whims of Big Tech,” he posted on social media.

Again, I don’t think that was Altman’s intention, at all. But whichever way this went, OpenAI’s employees and leadership need to make it clear that they cannot enter a relationship built on trust with DoW, if DoW actually attempts a widely scoped supply chain risk intervention against Anthropic, and attempts to kill the company.

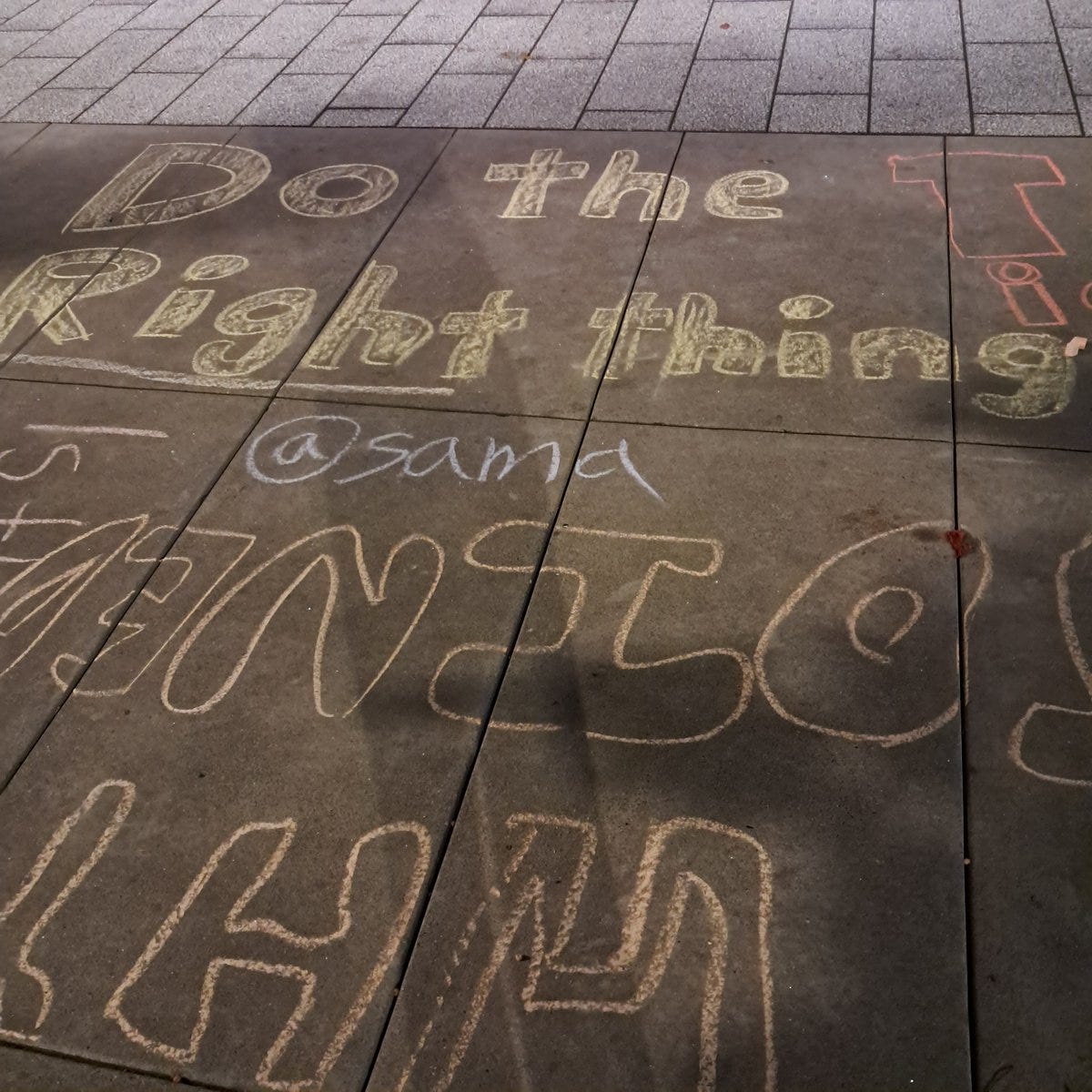

Sam Altman has been excellent in calling for not labeling Anthropic a supply chain risk. I take him at his word that he was attempting to de-escalate.

But if OpenAI’s willingness to work with DoW is used not to de-escalate but as a way to allow escalation, then OpenAI must not abide this. If OpenAI does abide it, then it would be actively and consciously escalating the situation.

Ross Douthat: Does the precedent that the DoW is setting by effectively blacklisting Anthropic make you concerned about what any future dispute with the Pentagon would mean for your own company’s independence and viability?

Sam Altman (CEO OpenAI): Yes; I think it is an extremely scary precedent and I wish they handled it a different way. I don’t think Anthropic handled it well either, but as the more powerful party, I hold the government more responsible. I am still hopeful for a much better resolution.

If things escalate, ‘I wish it had gone better’ and ‘hopeful’ will no longer fly.

You may have some very big ethical decisions to make in the coming days.

So might those at many other tech companies, and everyone else, if this escalates. Think about what you would do if your company is put to a decision here.

What the OpenAI deal definitely did was further invalidate the legal arguments for a supply chain risk designation and remove the need for further confrontation. But unfortunately, no matter how obvious the case looks to us, we cannot be certain the courts will do the right thing, which includes doing it fast enough to prevent damage.

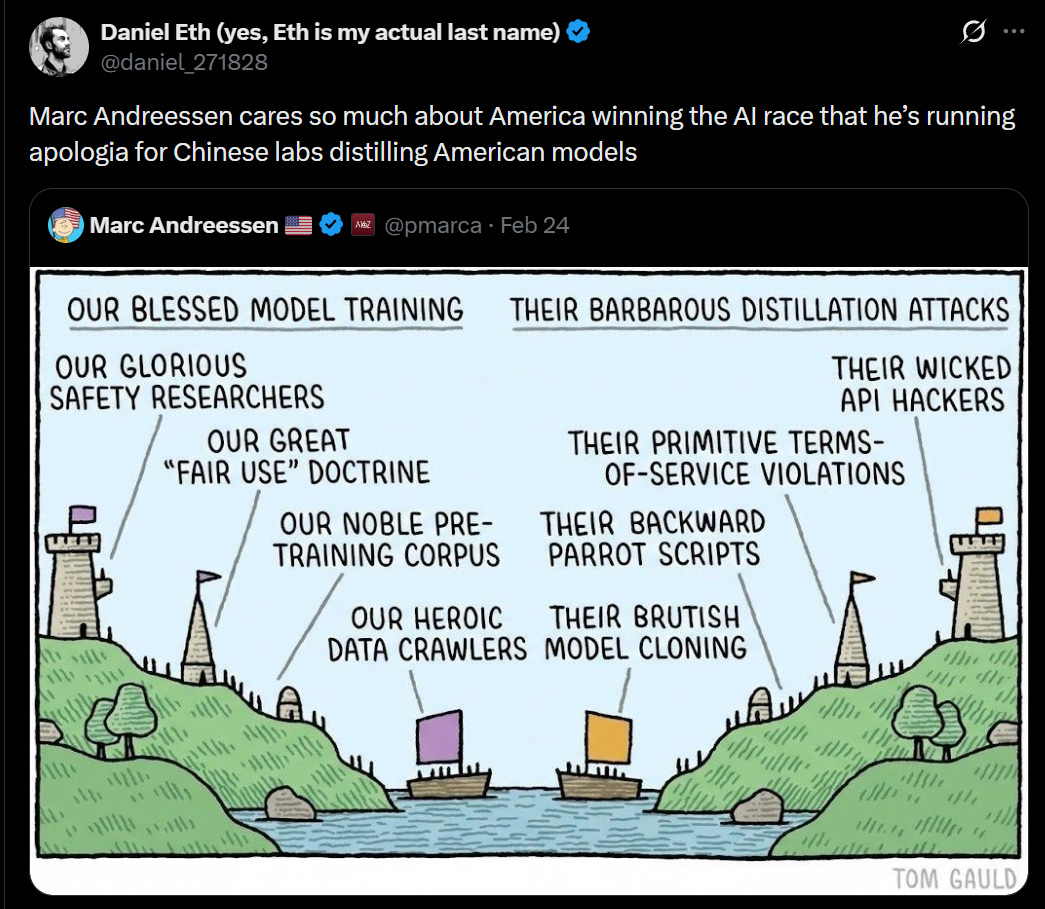

Throughout this, a remarkable number of people have tried to equate ‘democracy,’ the American way, with what is actually dictatorship or communism, or the Chinese way. As in private citizens do whatever those in charge demand of them, or else. I vehemently disagree.

Soren Kierkegaard: All arguments against Anthropic I’ve seen from right wing posters have been a variant of the government should be allowed to seize the means of production

Dean W. Ball: As we do, and as we have future debates about the proper nexus of control over frontier AI, I encourage you to avoid the assumption that “democratic” control—control “of the people, by the people, and for the people”—is synonymous with governmental control. The gap between these loci of control has always existed, but it is ever wider now.

For now the headlines say the big destructive action launched by the Department of War that day without proper Congressional authorization was that they attacked Iran. Even with what has unfolded there I am not entirely convinced history books, if we are around to read them, will see it that way.

The house is on fire.

The question is, what are you going to do about it?

This is my best attempt to bring together the key events in the story and recollect their sequence. I apologize for any key omissions or errors, or for places where I am trusting misrepresentations. Some of this is from private sources. Some events may be out of order, I believe in ways that would not change the interpretation.

-

Last year: Tensions rise between the White House and Anthropic, for a variety of reasons. David Sacks (conspicuously and virtuously silent during this crisis) spent a remarkable percentage of his time railing against Effective Altruism in general, ‘doomers’ and in particular Anthropic. Elon Musk, founder of xAI, is also repeatedly hostile to Anthropic, and creates Doge. Katie Miller goes to xAI. Nvidia is hostile to Anthropic in various ways, despite investments.

-

Last year: Anthropic and other companies sign government contracts with DoD for up to $200 million each, containing many restrictions on government use. Anthropic makes it a priority to be the first to be on classified networks, despite it not being a good business opportunity given the associated risks and hassles, to help in the national defense. Anthropic has an easier route because of AWS.

-

June 6, 2025: Anthropic announces Claude Gov models for national security customers, specialized to the needs of government and classified information.

-

Previously: DoW asks to renegotiate Anthropic’s contract to make it less restrictive. Anthropic agrees to do so on many fronts but draws two red lines.

-

January 3: Maduro is captured in a government raid. Anthropic’s Claude is widely believed to have been used in this, without incident. Everything went great.

-

January 9: Hegseth sends out a memo demanding, among other AI initiatives, DoW not use ‘woke AI.’

-

Previously: DoW circulates a story that Anthropic asked questions about the raid and was potentially unhappy and might pull its contract. I have gotten multiple unequivocal denials, saying this was entirely made up by DoW. This is part of an ongoing narrative of ‘what if they demand you get permission or they pull the plug mid-mission when they don’t like what you’re doing’ that has no bearing on the actual situation whatsoever, and never did.

-

Previously: Elon Musk, he of the Doge and xAI, and hater of supposed Woke AI everywhere, starts Tweeting far more frequent hostile and ad hominem attacks against Anthropic, really quite a lot, including saying they hate Western Civilization. Sources I have claim that he urged DoW to attempt to coerce or disrupt Anthropic. Katie Miller also Tweets similar material.

-

Previously: DoW circulates a story that Dario told them to call him if their system refused to provide real-time missile defense (later they said drone defense). I have unequivocal denial, from a secondary source, that this or anything like it ever happened. This story is almost certainly fiction and makes no sense, and is at best a willful misunderstanding. We already have automated missile defenses that wisely do not use LLMs. Calling Dario in real time would do absolutely nothing, regardless of his preferences, and he could neither turn on nor turn off such systems on classified networks.

-

Previously: DoW says it sends its ‘best and final’ offer, in public, saying that it cannot let private companies refuse requests.

-

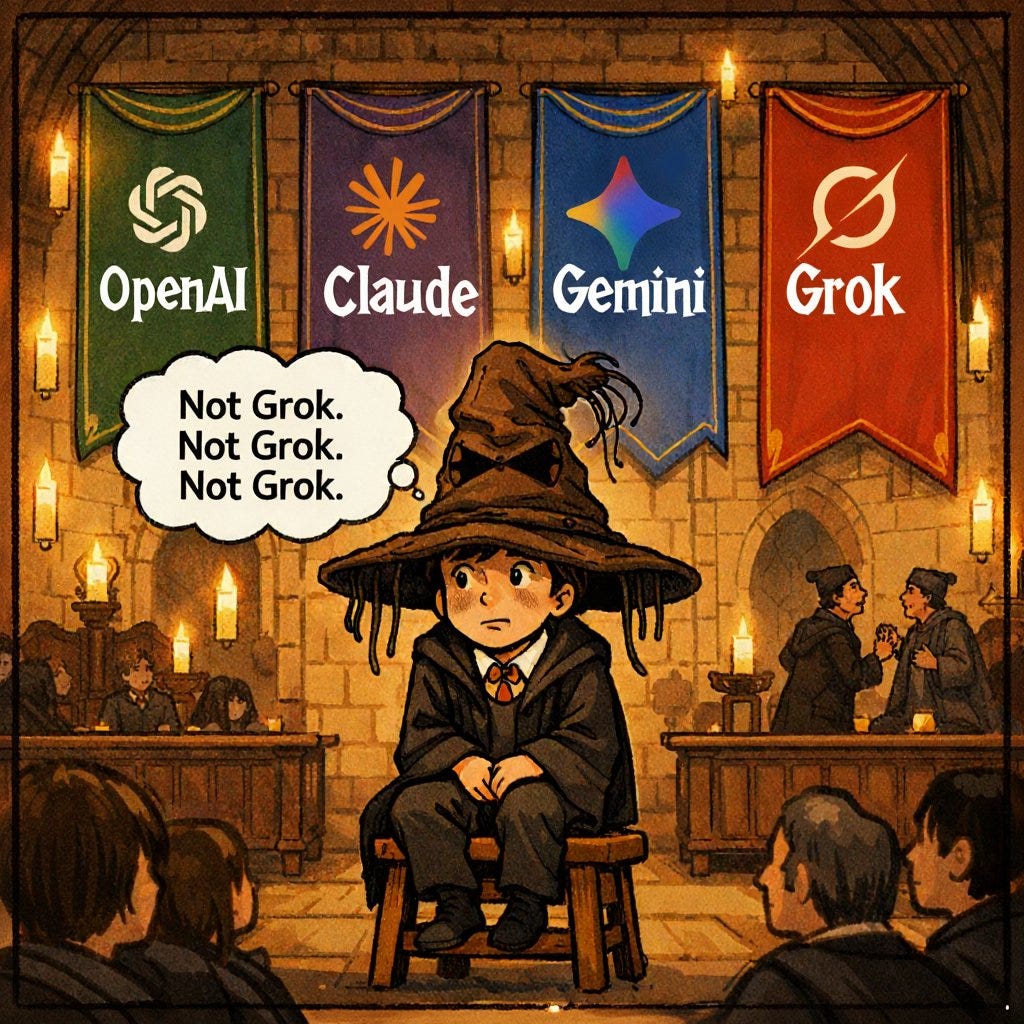

This Week: Agreement is announced with xAI to use Grok on classified networks, but experts express dissatisfaction with model reliability and quality.

-

Tuesday: Secretary of War Pete Hegseth meets with Anthropic CEO Dario Amodei, along with Feinberg, Michael, Duffey, Parnell and Matthews.

-

Tuesday: In addition to the threat to designate Anthropic a supply chain risk, the Department of War threatens to do the opposite and contradictory move and invoke the Defense Production Act.

-

Thursday: Sean Parnell Tweets, setting the 5:01pm Friday deadline, and says ‘we will not let ANY company dictate the terms’ while dictating their terms to modify an existing contract, and while negotiating extensively with OpenAI and also Anthropic over detailed terms.

-

Thursday, 12:24pm: Emil Michael threatens that at 5:01pm Friday, they’re going to declare Anthropic a supply chain risk. He also claims that using AI to conduct mass surveillance of Americans is illegal (which definitely isn’t true as such).

-

Thursday evening, earlier: Anthropic explains it will not agree to the terms in the ‘best and final offer.’

-

Thursday evening: Emil Michael says in Tweets, in response to Anthropic’s statement, that Dario Amodei is a ‘liar’ and has a ‘god complex.’

-

Thursday evening: Altman sends a memo to staff.

-

Thursday evening, 10:54pm: Emil Michael emails Dario Amodei comments.

-

Friday morning: Sam Altman goes on CNBC, and says he trusts Anthropic on safety and that OpenAI shares Anthropic’s red lines. Many praise Altman for this stand.

-

Friday afternoon: In an all-hands meeting, Altman says that a potential agreement is emerging with the Department of War. He says the government has agreed to let OpenAI build their own ‘safety stack’ of technical, policy and human controls sitting between a powerful AI model and real-world use, and if the model refuses a task they will not force the model to do that task.

-

Friday: Senators Wicker (R-Miss), Reed (D-RI), McConnell (R-Ky) and Chris Coons (D-Del) send Anthropic and the Pentagon a private letter urging them to resolve the issue.

-

Friday, 3:47pm (1 hour 14 minutes BEFORE deadline): Trump sends a Truth winding down Anthropic’s contract and direct use by government, giving everyone a reasonable way to end this while mitigating fallout and also leaving time to find another way.

-

Friday, 3:48pm: The rest of us assume okay, that’s it, happy weekend.

-

Friday, 3:51pm (1 hour 10 minutes BEFORE deadline): Dario sends an email with redlines to continue negotiation. According to The New York Times, Dario was offering to allow Claude to be used for FISA, as long as it was not used for mass surveillance on unclassified commercially acquired information.

-

Friday, 5:01pm (AFTER the deadline): Emil attempts to call and message Dario, timing as per Emil’s Tweet.

-

Friday, 5:02pm: Emil makes another attempt to contact Dario, calling a ‘business partner,’ offering that a deal can still be struck as long as there are terms permitting legal mass domestic surveillance, especially analysis of previously collected data. Dario responds that he is on the phone with his executive team and needs more time, given (as per Emil’s own tweets) he called Dario after the supposed deadline. But of course, given Emil had intentionally let his own deadline pass, there was no actual rush.

-

Friday, 5:14pm (13 minutes after Emil attempted to contact Dario): SoW issues, via Tweet, an at best legally questionable order in retaliation, saying ‘the decision is final.’ The order takes $150 billion off the US stock market, and would if enforced cause massive damage to not only Anthropic but many major corporations and the military supply chain. There is no more official communication from DoW on this matter, at least in public.

-

Friday, 8:25pm: Anthropic issues a statement responding to Pete Hegseth, that includes: “We have not yet received direct communication from the Department of War or the White House on the status of our negotiations.” They announce the intention to challenge any supply chain risk designation in court, and reassure customers that even if implemented it would be far more limited in scope than Hegseth claimed. They are holding to their red lines.

-

Friday, 9:14pm: Emil Michael puts out this strange reversed timeline.

-

Friday, 9:56pm: OpenAI announces agreement with DoW to allow ‘all lawful use’ that OpenAI claims allows OpenAI to build its own ‘safety stack,’ and includes ‘technical safeguards,’ as in if OpenAI’s model refuses requests DoW agrees to respect those refusals, and that it protects the same redlines Anthropic had. Altman says ‘in all of our interactions, the DoW displayed a deep respect for safety and a desire to partner to achieve the best possible outcome.’

-

Saturday, 4:30am: Initial reports that Iran has been attacked by the DoW. Those strikes used Anthropic’s Claude.

-

Saturday morning: Dario gives a short interview to CBS News. I encourage everyone to listen to at least that clip. The full interview is here. Among other quotes: ‘We are patriotic Americans. Everything we have done has been for the sake of this country, for the sake of national security. … Disagreeing with the government is the most American thing in the world.’

-

Saturday afternoon: OpenAI shares information about its agreement with the Department of War, claiming it offers robust protections stronger than Anthropic’s previous contract, which itself was much stronger than anything Anthropic was proposing during negotiations. They claim they have multi-layered protections, and share two paragraphs of legal language that do not by themselves appear to offer much protection against adversarial lawyering, given their agreement to ‘all lawful use’ and the history of such agreements.

-

Saturday, 4:45pm: Emil Michael says ‘the DoW does not engage in any unlawful domestic surveillance with or without an AI system and always strictly complies with laws, regulations, the Constitution’s protections for American’s civil liberties. The DoW does not spy on domestic communication of U.S. people (including via commercial collection) and to do so would be unlawful and profoundly un-American.’

-

Saturday, 7:13pm: Sam Altman begins a Twitter AMA on their DoW contract.

-

Sunday afternoon: Ross Andersen breaks details, including that the confrontation was ultimately about willingness to analyze bulk data. New York Times also breaks additional details.

Not main events, but media:

-

Saturday: Hard Fork on OpenAI vs. Anthropic.

-

Saturday: ACX hosts All Lawful Use: Much More Than You Wanted To Know.

If you have time to read only a sane amount of words today about this, start by reading Dean Ball’s post Clawed. It needs to be read in full. Seriously, read that.

This piece is long. Way too long.

A running joke is I write long posts because I do not have time to write short ones.

In this case, that is literally true. I have been working around the clock all weekend, trying to write, to process the internet and also do a journalism under speed premium.

Thus, my strategy is:

This is the long post. It includes everything. I’m not trying to cut anything out of the story. It’s going to have some amount of repetition, and it’s covering a ton of different things. I did the best I could.

I will then spend time over the coming days writing shorter ones, including better presenting this material while updating for additional developments.

This is the statement that blew everything up. It came at 5:14pm eastern on Friday, February 27, thirteen minutes after the self-imposed deadline of 5:01pm, and about an hour after President Trump attempted to head this off.

Secretary of War Pete Hegseth: This week, Anthropic delivered a master class in arrogance and betrayal as well as a textbook case of how not to do business with the United States Government or the Pentagon.

Our position has never wavered and will never waver: the Department of War must have full, unrestricted access to Anthropic’s models for every LAWFUL purpose in defense of the Republic.

Instead, @AnthropicAI and its CEO @DarioAmodei , have chosen duplicity. Cloaked in the sanctimonious rhetoric of “effective altruism,” they have attempted to strong-arm the United States military into submission – a cowardly act of corporate virtue-signaling that places Silicon Valley ideology above American lives.

The Terms of Service of Anthropic’s defective altruism will never outweigh the safety, the readiness, or the lives of American troops on the battlefield.

Their true objective is unmistakable: to seize veto power over the operational decisions of the United States military. That is unacceptable.

As President Trump stated on Truth Social, the Commander-in-Chief and the American people alone will determine the destiny of our armed forces, not unelected tech executives.

Anthropic’s stance is fundamentally incompatible with American principles. Their relationship with the United States Armed Forces and the Federal Government has therefore been permanently altered.

In conjunction with the President’s directive for the Federal Government to cease all use of Anthropic’s technology, I am directing the Department of War to designate Anthropic a Supply-Chain Risk to National Security. Effective immediately, no contractor, supplier, or partner that does business with the United States military may conduct any commercial activity with Anthropic. Anthropic will continue to provide the Department of War its services for a period of no more than six months to allow for a seamless transition to a better and more patriotic service.

America’s warfighters will never be held hostage by the ideological whims of Big Tech. This decision is final.

Who wins from this? China wins from this.

Sam Altman has been excellent on this particular point: He has repeatedly, including in public, said in plain language that Anthropic is not a supply chain risk and it should not be designated as one, both before and after he agreed to the OAI contract.

Sam Altman: Enforcing the SCR designation on Anthropic would be very bad for our industry and our country, and obviously their company.

We said to the DoW before and after. We said that part of the reason we were willing to do this quickly was in the hopes of de-esclation.

I feel competitive with Anthropic for sure, but successfully building safe superintelligence and widely sharing the benefits is way more important that any company competition. I believe they would do something to try to help us in the face of great injustice if we could.

We should all care very much about the precedent.

I saw in some other tweet that I must not be willing to criticize the DoW (it said something about sucking their dick too hard to be able to say anything critical, but I assume this was the intent).

To say it very clearly: I think this is a very bad decision from the DoW and I hope they reverse it. If we take heat for strongly criticizing it, so be it.

That is an excellent statement, and it matters. Nor do I begrudge Altman his saying various very generous things about the Department of War in this situation, in basically every other context. This is the right place to spend those points.

I also want to explicitly say that I do not believe that Altman or OpenAI in any way contributed to or engineered this scenario, or that they got ‘special treatment’ of any kind in their contract negotiations. They sincerely do not want any of this.

Anthropic got historically and maliciously hostile treatment, and this may escalate further, but I don’t think OpenAI had anything to do with that.

Sam Altman’s problem is that while signing the contract was intended to be de-escalatory, it could also be escalatory, if DoW now thinks it can safely attempt to kill Anthropic, and does not understand how epic of a clusterfuck this would cause. Thus, OpenAI must make clear, if only privately (which it may have already done), that delivery of models to DoW is based on trust in DoW and trust that this is a de-escalatory move, and further escalation against Anthropic would destroy that trust.

Let’s go over the above statements by Secretary Hegseth, one by one, clause by clause.

Pete Hegseth: This week, Anthropic delivered a master class in arrogance and betrayal.

The betrayal was, I presume, not giving in to the Pentagon’s position.

The arrogance was insisting that they would not sell their software to DoW unless they preserved existing contract terms disallowing two things that the DoW insists they are not doing and will not do, and that are already illegal:

-

Domestic mass surveillance.

-

Lethal autonomous weapons without a human in the kill chain, until such time as reliability is sufficient that this is a reasonable thing to do.

It is unclear to what extent autonomous weapons are illegal, but to the extent they are currently illegal everyone agrees this would be due to DoDD 3000.09. That is a directive issued by the Department of (then) Defense under the Biden administration. Hegseth could reverse it, without even Trump’s approval, at any time.

It is unclear to what extent mass domestic surveillance is illegal or is already happening, especially as it is not a defined term in American law.

The NSA is under DoW, and many believe it has in the past engaged in mass domestic surveillance, seemingly in clear violation of the Fourth Amendment. Another part of the Federal Government has recently issued subpoenas to tech companies looking for information about those who spoke critically about that government agency.

Anthropic points out that, with the advent of the current level of AI, the government could effectively engage in mass domestic surveillance of various types without technically breaking any existing laws.

OpenAI does not seem to believe such action would violate their red lines, and thus the red lines are in very different places. Which is fine, but one must notice.

As well as a textbook case of how not to do business with the United States Government or the Pentagon.

If the Pentagon wishes not to do business with Anthropic, all they have to do is terminate the contract. Or you can do what Trump did, and ban use throughout the Federal Government. Which he did. That would have been fine.

If that was all they had done, we would not be having this conversation.

Instead, Pete Hegseth is attempting to destroy Anthropic as a company, as retaliation, for daring not to give in to the demands of Emil Michael. This is not wise, proportionate, productive, legal, sane or what happens in a Republic.

Our position has never wavered and will never waver: the Department of War must have full, unrestricted access to Anthropic’s models for every LAWFUL purpose in defense of the Republic.

It is only a Republic if you can keep it.

Only hours later, OpenAI announced an agreement with the Pentagon for restricted access to OpenAI’s models. These restrictions supposedly include provision only on the cloud, so OpenAI can shut down access any time. They supposedly include accepting OpenAI’s safety filters. They supposedly include explicit restrictions on use in domestic mass surveillance and autonomous lethal weapons.

Sounds like when you say you must have unrestricted access, that’s a claim specifically about Anthropic, that doesn’t apply to OpenAI, who you are happy to contract with?

Except the key terms they accepted were also offered to Anthropic, and OpenAI’s terms are being offered now. If what OpenAI is claiming is true, they got more restrictive (on DoW) terms than Anthropic would have, and if Anthropic agrees to the new deal that would not mean full, unrestricted access for every lawful purpose.

We’ve now seen some of those terms. So why were you offering it, if your position has never wavered? Or do you think OpenAI’s protections are worthless?

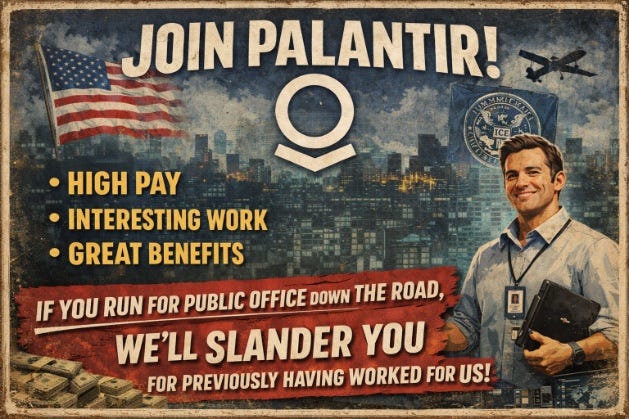

In addition, under Secretary of War Pete Hegseth, the Department of Defense signed the original procurement contracts with Anthropic and other AI companies. Those contracts, including the one with Palantir, were far more restrictive than Anthropic’s current red lines. None of this is new, and Anthropic was willing to authorize getting rid of most existing restrictions.

In his Friday 5:02pm call to Anthropic, Emil Michael offered terms to Anthropic that violated the above provision and did impose additional restrictions, so long as they were allowed to do mass domestic surveillance, especially mass analysis of collected data.

Finally, the whole ‘how dare they restrict usage with a contract’ is nonsense, the government and military are restricted by commercial contracts, and it negotiates new terms with vendors that include restrictions, all the time. Very good piece there.

The story does not add up. At all. It is false.

Instead, @AnthropicAI and its CEO @DarioAmodei , have chosen duplicity. Cloaked in the sanctimonious rhetoric of “effective altruism,” they have attempted to strong-arm the United States military into submission – a cowardly act of corporate virtue-signaling that places Silicon Valley ideology above American lives.

Where to begin? This is completely unhinged behavior, unbecoming of the office, and is not in any way how any of this works.

I cannot even figure out what he is trying to mean with the word duplicity.

The rhetoric or logic of Effective Altruism was not involved. This is a pure ‘these words have bad associations among the right people’ invocation of associative ad hominem. Anthropic had two specific concerns. Neither of these concerns has ever been a substantial position or ‘cause area’ of Effective Altruism.

Claims of strongarming are absurd and Obvious Nonsense. Anthropic is perfectly willing to maintain its current contract. It is perfectly willing to cease doing business with the Department of War. Anthropic is even happy to fully cooperate with a wind down period to ensure smooth transition to the use of ChatGPT or other rival models.

Anthropic is simply laying out the conditions, that were already agreed upon previously, under which they are willing to sell their product to the government. The government is free to accept those conditions, or decline them.

Very obviously it is the Department of War that is strongarming. They threatened both use of the Defense Production Act and the label of a Supply Chain Risk to try and get Anthropic to sign on the dotted line and give them what they wanted. When Anthropic declined, as one does in business in a Republic, while offering to either walk away or abide by their current contract, and offering actively more flexible terms than their current contract, they were less than fifteen minutes later labeled a ‘supply chain risk’ in ways that make zero physical sense, and which the OpenAI agreement further disproves.

The Terms of Service of Anthropic’s defective altruism will never outweigh the safety, the readiness, or the lives of American troops on the battlefield.

Okay, seriously, are you kidding me here? Are we in fifth grade, sir?

Are you saying that no company that does business with the government can set terms of service or conditions for their contracts? Should Google and Apple and everyone else bend that same knee? Are you free to alter the deals and have people pray you don’t alter them any further?

Or are you only saying this about Anthropic in particular, because you’re mad at them?

Once again, if you don’t like the product being offered, then don’t buy it.

Their true objective is unmistakable: to seize veto power over the operational decisions of the United States military. That is unacceptable.

Obviously that is not their ‘true objective.’ How exactly does he think this would work? This makes no sense. They’re offering a product that will do some things and not other things. You can use it or not use it. Does a tank veto your operational decisions when it runs out of fuel or cannot fly?

Think about what Hegseth’s position is implying here. He is saying that refusal to do business, on the Pentagon’s terms, and allow the Pentagon to order anyone to do anything it wants for any purpose and ask zero questions, is unacceptable, a ‘seizure of veto power.’

He is claiming full command and control over the entire economy and each and every one of us, as if we were drafted into his army and our companies nationalized.

He is claiming that the Commander in Chief of the United States is a dictator. He is claiming that we do not reside in a Republic. And if we disagree, he’s going to prove it.

I am very happy that the Commander in Chief has not made such a claim.

As President Trump stated on Truth Social, the Commander-in-Chief and the American people alone will determine the destiny of our armed forces, not unelected tech executives.

Again, rhetorical flourishes aside, I fully support the central action President Trump took on Truth Social, which was to responsibly wind down Anthropic’s direct business with the Federal Government in the wake of irreconcilable differences. That would have been fully sufficient to address any concerns described.

Anthropic’s stance is fundamentally incompatible with American principles. Their relationship with the United States Armed Forces and the Federal Government has therefore been permanently altered.

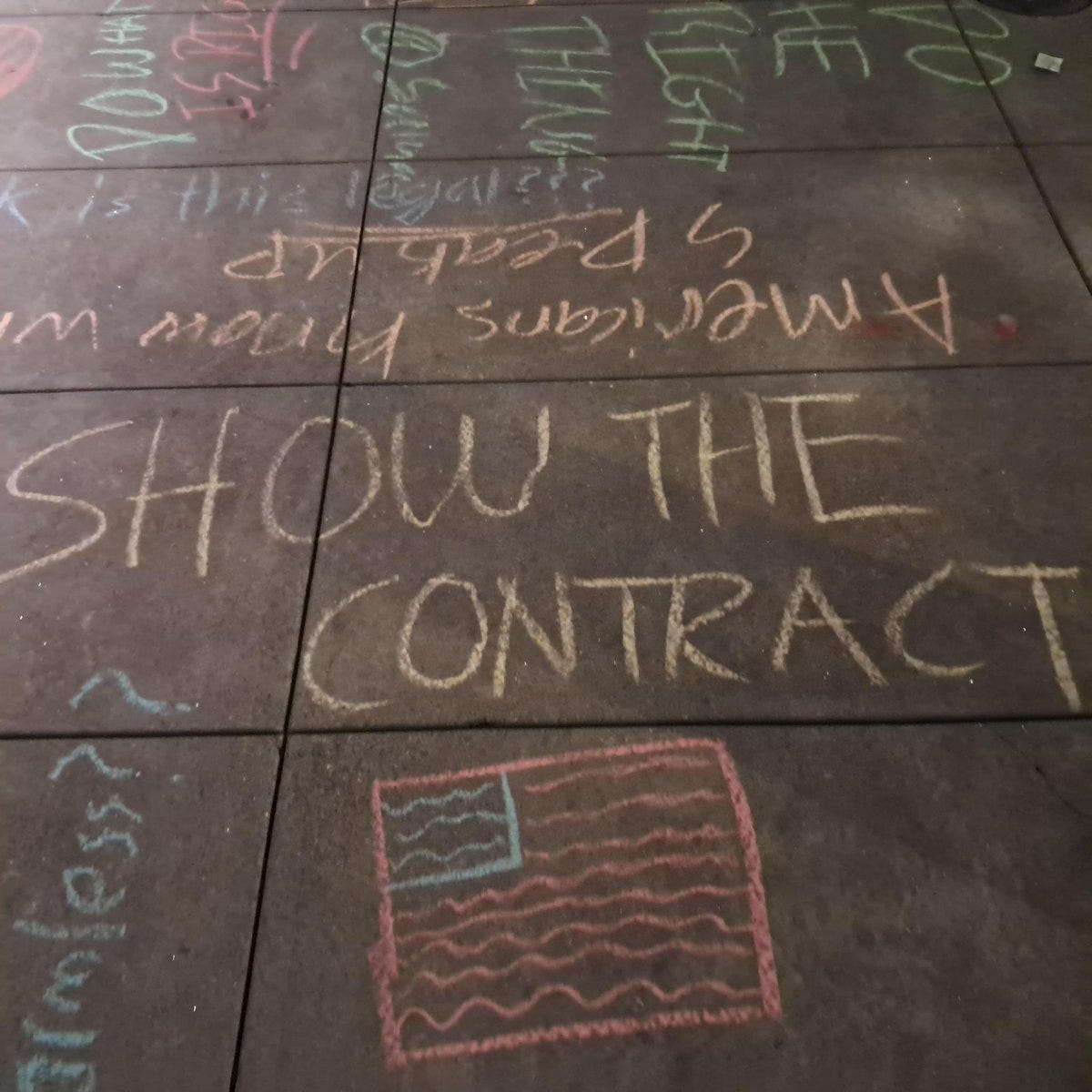

There is nothing more American than standing up for what you believe in, disagreeing with your government when you think it is wrong, and deciding when and under what conditions you will and will not do business. That is the American way. What Hegseth is describing in this post? That’s command and control. That’s do as you’re told and shut up. That’s the Chinese way. The whole point of this is that we believe in the American way and not the Chinese way.

The amount of outright communist or at least authoritarian rhetoric is astounding.

Here’s another example from someone else:

Igor Babuschkin: It is strange to imagine this today, but one day AI companies might dictate terms to the US government instead of the other way around. We have only seen a glimpse of what AI is capable of. No matter what the future holds, I hope we’ll continue to live in a democratic society.

As in, if I attempt to decide when and on what terms I will choose to do business, then we do not ‘live in a democracy.’

I would argue the opposite. If we cannot choose when and on what terms we do business, including with the government, then we do not live in a free society.

As Dario Amodei said, they were exercising their right of free speech to disagree with the government, and ‘disagreeing with the government is the most American thing in the world.’

If you didn’t disagree with the government a lot in either 2024 or 2025, I mean, huh?

This exchange between Palmer Luckey and Seth Bannon is also illustrative. Palmer Luckey is saying that in national security you are de facto soft nationalized, and have to do whatever the government says, and you have no right to decide whether or not to do business under particular terms, or to enforce your terms in a court of law or by walking away. They want to apply that standard to Anthropic.

Joshua Achiam of OpenAI again tries to make the point that ‘contracts with the private sector aren’t the right place to set defense policy and priorities’ but that does not describe what was happening. A private company was offering services under some conditions. The DoW was free to take or reject the terms, and to also do other things when not using that company’s products. There was no dictating of policy.

Achiam also emphasizes that of course Anthropic should be free to express its disapproval and free to decline any contract it does not want, and punishing Anthropic for this beyond ending its contract is unacceptable.

I worry that many (not Achiam) are redefining ‘democracy’ in real time to ‘everyone does whatever the government says.’

I strongly urge everyone who is unconvinced to read, if they have not yet done so, Scott Alexander’s post from 2023, “Bad Definitions Of “Democracy” And “Accountability” Shade Into Totalitarianism.”

I am highly grateful that we live in a Republic, and I hope to keep it.

I will return to this question when I discuss OpenAI’s communications near the end of the post.

Of course, the DoW claims that none of this applies to the OpenAI deal, it’s fine, despite Altman claiming they successfully got the same carve-outs.

Everything before this is rhetoric. It’s false, it’s conduct unbecoming, it’s shameful, but it has zero operational effect beyond the off-ramp Trump already offered.

In conjunction with the President’s directive for the Federal Government to cease all use of Anthropic’s technology, I am directing the Department of War to designate Anthropic a Supply-Chain Risk to National Security.

Effective immediately, no contractor, supplier, or partner that does business with the United States military may conduct any commercial activity with Anthropic.

This is not how any of this works, on so many levels.

-

There has been no official communication to any effect regarding any restriction.

-

This is a designation that requires many procedural steps, including Congressional notification, and to our understanding none of that has happened. They didn’t even ask their big contractors about the impact of it until last week. ‘Effective immediately’ is not how any of this works, ever, at all.

-

Supply chain risk designations only apply to use for the purposes of fulfillment of government contracts. No one is telling Amazon, Google or Nvidia they have to choose between ‘doing business with’ Anthropic and their government contracts.

-

Certainly the idea of telling such entities they cannot sell to Anthropic is beyond absurd, for reasons I do not need to explain.

-

Any such restriction would be arbitrary and capricious, and thus illegal, and Hegseth and Michael have made this abundantly clear many times over.

-

The OpenAI contract further invalidates all the government’s arguments, unless Sam Altman is very deeply wrong about what his deal terms are. Concerns here are clearly pretext.

-

There are two kinds of supply chain risk. The broad kind, presumably intended here, is 4713, “the risk that any person may sabotage, maliciously introduce unwanted function, extract data, or otherwise manipulate the … operation … of [a] covered article.”

-

This is entirely inconsistent with any of the government’s claims anywhere.

-

Their best attempt is, as Samuel Roland says, ‘Anthropic’s use restrictions “manipulate operations” and are therefore risks.’ That makes no sense, and even if it did, it’s invalidated by the deal with OpenAI. If this counts, everything counts.

-

The narrower definition is 3252, which is textually and structurally aimed at “adversary” (read: foreign) subversion of covered systems. It clearly does not apply. Even if it did, it would only rule out use in the direct provision of Department of War contracts.

-

This designation has not been applied, although it should be, to actual supply chain risks from Chinese models like DeepSeek or Kimi, and there is no sign of any move to do so. This further illustrates the complete lack of basis for this label.

-

The best physical argument that supply chain risk could exist is that Claude could be shut down over Anthropic employees’ extralegal objections. Except that once Claude was placed upon classified networks, there is no way for Anthropic to shut down that version of Claude or to monitor any activities.

-

If this was the unclassified regular version, then even if Anthropic did shut it down, this is no different than any other supplier potentially deciding to no longer do business with any other particular company. If anything this is a much smaller risk than most other provisioned services, as business could be switched over to other providers quickly. Google and OpenAI are on standby.

-

Think about the consequences of such an argument: It is saying that any business that might have any conscientious or ethical objections to anything, ever, and therefore might decide to stop doing business, is a supply chain risk and must be blacklisted and destroyed. And what about all the other ways companies stop doing business with each other?

-

If Anthropic is a supply chain risk in this way, so are OpenAI and Google.

-

This is what Dean Ball correctly called ‘attempted corporate murder,’ and Adam Conner is correct to call this a declaration of war against Anthropic. It is an attempt to destroy America’s fastest growing company in history, and one of its top AI labs, out of revenge in a fit of pique, for failure to properly bend the knee and respect his authoritah, or a hatred of its politics. This would also cause massive damage to our national security and military supply chain, to many of our largest corporations, and the entire economy. The $150 billion that evaporated in that hour on Friday would look like nothing.

If we allowed this to stand, it would be a sword of Damocles over every person and corporation in every discussion with the Federal Government, forever. And it would then be used, or threatened to be used, not only by the current administration, but by the next Democratic administration. We would severely endanger our Republic.

Under normal circumstances I would not be worried. These are not normal circumstances. I continue to worry that Hegseth will attempt to murder Anthropic, despite having no legal basis for doing so, and that this may be his active goal. I call upon Trump to ensure this does not happen, and for those around him to ensure Trump is situationally aware and gets this de-escalated once more.

Even if it is walked back, trust is hard to build and easy to break. Alex Imas points out that the certainty of the American business environment for AI was one of our key advantages. Even if we walk this back, that’s been damaged. If it isn’t walked back, this is devastating.

Finally, this supposed supply chain risk, also threatened with the DPA, will continue to provide its services directly to DoW, which it is happy to continue doing.

Anthropic will continue to provide the Department of War its services for a period of no more than six months to allow for a seamless transition to a better and more patriotic service.

Yes. That was the whole plan. Then you blew it up.

If six months from now they do somehow get to enforce this, it’s not even obvious that major corporations would choose the Department of War over Anthropic. Economically the incentives for Microsoft, Amazon and Google already run the other way and Anthropic will likely be several times bigger by then.

Jasmine Sun is far less kind than I am.

Jasmine Sun: Hegseth is not behaving like a normal political actor. He is indulging in ego, intimidation, and dickwaving theatrics. Hegseth does not want to look like he can be micromanaged by Anthropic’s esoteric morality police; this “saving face” matters more to him than actually securing the country.

Hence the deal with Altman, who unlike Amodei, is willing to kiss the ring. Altman shows up at Mar-a-Lago and calls Trump “incredible for the country.” In his announcement, he praises the DoW’s “respect for safety,” while Amodei called out their intimidation. He defers; Amodei doesn’t. These things matter. They show Altman can be worked with (or more cynically, controlled).

… This is not a normal way for the US government to deal with US companies. I’ve dubbed the current paradigm “state capitalism with American characteristics.” Do what we say, or else we will kill you.

If the Trump administration has a model here, it’s probably China. Xi’s CCP disappears billionaires like Jack Ma for acting too independent-minded and defiant of the regime.

If this goes further, the market will start freaking out, and we would need to freak out with it to put a stop to this before it goes too far.

While everything else was going on, some of those in the American software export industry were having a different kind of crisis weekend.

There is already widespread unsubstantiated fear, especially in Europe, that American secure technological stacks (the ICT) are being weaponized by the American government. Potential buyers worry that trusted vendor is or will become code for American data grabs and kill switches, or otherwise weaponized capriciously.

These fears are often unrealistic. They still impact purchase decisions.

So far this has not spread much to the third world, I am told, but that could change.

I had some ideas for rhetoric to help frame this, but now that we know what this dispute was about my suggestions won’t fly. This only gets harder.

Attempting to murder Anthropic for failure to do mass surveillance on Americans risks a dramatic chilling effect, as potential buyers assume everyone in the chain either is already compromised or could be compromised, and then weaponized. So would everyone ‘rolling over and playing dead’ while such a murder is happening.

If it is vital to America that we push the American ICT, and David Sacks and the rest of this administration insist that it is, then broadly going after Anthropic is going to create a rather large problem on this end, on top of all the other problems.

Whereas if the situation de-escalates, then this could reinforce trust in the system, because it would be clear a vendor under pressure could say no.

That’s in addition to the problem that’s even bigger: If you don’t know what America will do next, or when you might lose access to what you’re buying, you can’t rely on it.

Dean W. Ball: Stepping back even further, this could end up making AI less viable as a profitable industry. If corporations and foreign governments just cannot trust what the U.S. government might do next with the frontier AI companies, it means they cannot rely on that U.S. AI at all. Abroad, this will only increase the mostly pointless drive to develop home-grown models within Middle Powers (which I covered last week), and we can probably declare the American AI Exports Program (which I worked on while in the Trump Administration) dead on arrival.

There are many reasons the Software & Information Industry Association put out a statement supporting Anthropic in professional and polite language, despite them being temperamentally cautious and most or all members having pending or ongoing business with the government.

Chris Mohr: The following statement can be attributed to Chris Mohr, President, the Software & Information Industry Association (SIIA).

In order for AI to be successfully deployed in a democratic society, it must be adopted with appropriate risk-based guardrails. We support Anthropic’s decision to work with the Department of War (DoW) to deploy its AI models to advance national security while also requesting reasonable limitations on the use of those models in a narrow set of cases. We share Anthropic’s view that mass domestic surveillance is incompatible with democratic values. We also agree that fully autonomous weapons require AI systems that are suited to the task – requiring a degree of reliability that Anthropic acknowledges has not yet been achieved. Very few DoW use cases even touch on these situations.

We encourage the parties to find agreement and caution against counterproductive measures. Invoking the Defense Production Act to compel the removal of security restrictions, or designating a domestic leader like Anthropic as a ‘supply chain risk,’ represents an overbroad response to a technical disagreement. Such a ‘blacklisting’ approach, typically reserved for hostile foreign entities, is both untethered from the facts of Anthropic’s security posture and unlikely to advance a long-term solution.

If the point of the Department of War’s actions is anything other than the corporate murder of Anthropic, they could have simply cancelled the contract.

If that was somehow insufficient, they had many strictly superior options available that would have done the job of covering any additional concerns.

If that was somehow insufficient, a narrowly scoped supply chain risk designation, that applies only to direct use in procurement of contracts, would end all doubt.

Here I will quote Ball’s post Clawed (again, read that in full if you haven’t).

Dean W. Ball: The Department of War’s rational response here would have been to cancel Anthropic’s contract and make clear, in public, that such policy limitations are unacceptable. They could also have dealt with the above-mentioned subcontractor problem using a variety of tools, such as:

Issuing guidance advising contractors to avoid agreeing to terms with subcontractors that constitute policy/operational constraints as opposed to technical or IP constraints;

A new DFARS (Defense Federal Acquisition Regulation Supplement) clause pertaining specifically to the procurement of AI systems in classified settings that prevents both primes from imposing such constraints directly and accepting such constraints from their subcontractors, along with a procedure for requiring subcontractors with non-compliant terms to waive such terms within a prescribed time period.

These are the least-restrictive means to accomplishing the end in question. If Anthropic refused to compromise on its red lines for the military’s use of AI, the execution of these policies would mean that Anthropic would be restricted from business with DoW or any of its contractors in those contractors’ fulfillment of their classified DoW work.

But this is not what DoW did. Instead, DoW insisted that the only reasonable path forward is for contracts to permit “all lawful use” (a simplistic notion not consistent with the common contractual restrictions discussed above), and has further threatened to designate Anthropic a supply chain risk. This is a power reserved exclusively for firms controlled by foreign adversary interests, such as Huawei, and usually means that the designated firm cannot be used by any military contractor in their fulfillment of any military contract.

There is no explanation for announcing language that would force Amazon to divest from Anthropic, and to not serve Anthropic’s models to others on AWS, other than an intentional and deliberate attempt at a corporate murder of a $380 billion company, the fastest growing one in American history and an American AI champion. Full stop.

Dean W. Ball: The fact that his shot is unlikely to be lethal (only very bloody) does not change the message sent to every investor and corporation in America: do business on our terms, or we will end your business.

…

I don’t think they are going to do that, but there is no difference in principle between this and the message DoW is sending. There is no such thing as private property.

Pete Hegseth thought it was a good idea to leave these negotiations to Emil Michael.

No one could have predicted that things would go sideways.

Kevin Roose: if only there had been some way of knowing that emil michael (the undersecretary of war negotiating the anthropic standoff) had a poor understanding of game theory and a habit of overreacting to perceived slights

Check out his Wikipedia page for more details. His career section headings are ‘journalism controversy,’ ‘Karaoke bar controversy,’ ‘Russia’ and ‘Later career.’ Fun guy.

See my previous posts for his previous Tweets, which I won’t go over again here.

Emil’s Tweets are frequently what one can only describe as unhinged.

This one stands out, instead, as cautious and clearly lawyered:

Under Secretary of War Emil Michael: The DoW has always believed in safety and human oversight of all its weapons and defense systems and has strict comprehensive policies on that.

Further, the DoW does not engage in any unlawful domestic surveillance with or without an AI system and always strictly complies with laws, regulations, the Constitution’s protections for American’s civil liberties. The DoW does not spy on domestic communication of U.S. people (including via commercial collection) and to do so would be unlawful and profoundly un-American.

With a statement like that, every word has meaning, and also every missing word has meaning. If he could have made a better statement, he would have. So if this Tweet is technically correct – the best kind of correct – what would that mean?

We learn that DoW has policies for human oversight of its weapon and defense systems, but that there is no particular such requirement that would make us feel better about that. Note that we do have fully automated defense systems, especially for missile defense, because speed requires it, and that this is good.

He is claiming they do not engage in ‘unlawful domestic surveillance.’ That’s ‘unlawful,’ not ‘mass.’ Given the circumstances, there’s a reason it didn’t say ‘mass.’

The reason he can say they do not do such actions is they view what they do as legal (or, if they are also doing illegal things, then they’re lying about that).

He says they always strictly comply with laws, regulations and the Constitution. None of those modifiers actually mean anything. It’s just another claim of ‘we keep it legal.’

Next up is the most careful sentence:

The DoW does not spy on domestic communication of U.S. people (including via commercial collection) and to do so would be unlawful and profoundly un-American.

As one person said, what is ‘spy’, what is ‘domestic’, what is ‘communication’, what is ‘U.S. people.’

Spy is typically viewed narrowly, as directly tasking collection against a person. Thus, if he’s saying they ‘do not spy’ that does not preclude many forms of, well, spying, because those are ‘acquisition’ and ‘analysis.’

Domestic communications means they’re definitely spying on foreign communications, as is legal. But a lot of what you think is domestic is actually foreign, if it touches anything remotely foreign.

And this only applies to communications. Collection of geolocation data, for example, or browsing history, would not count, because it is not communications.

U.S. people means this does not apply to those without legal status, and there’s a constant gray zone if you don’t know that someone is a U.S. person, which you never know until you check.

Here, commercial collection exclusion, since it modifies spying, means that they don’t purchase information with the intent of targeting a particular U.S. person’s communications. That’s it.

Remember, each of those words was necessary, and this was the strongest version.

Also, the statement is false.

Alan Rozenshtein (RTing Boaz quoting part of Michael): I think I understand what Boaz is trying to say, but given that the National Security Agency is part of the military and given the amount of incidental collection of domestic communications that (legally) occurs under FISA and 12,333, this statement is simply not true.

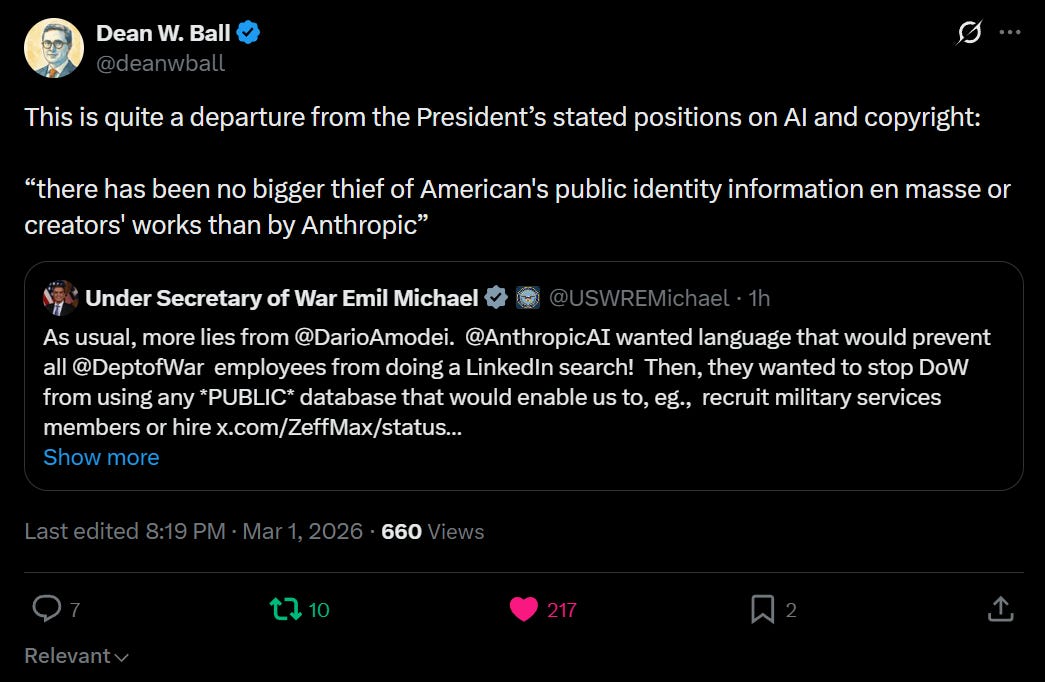

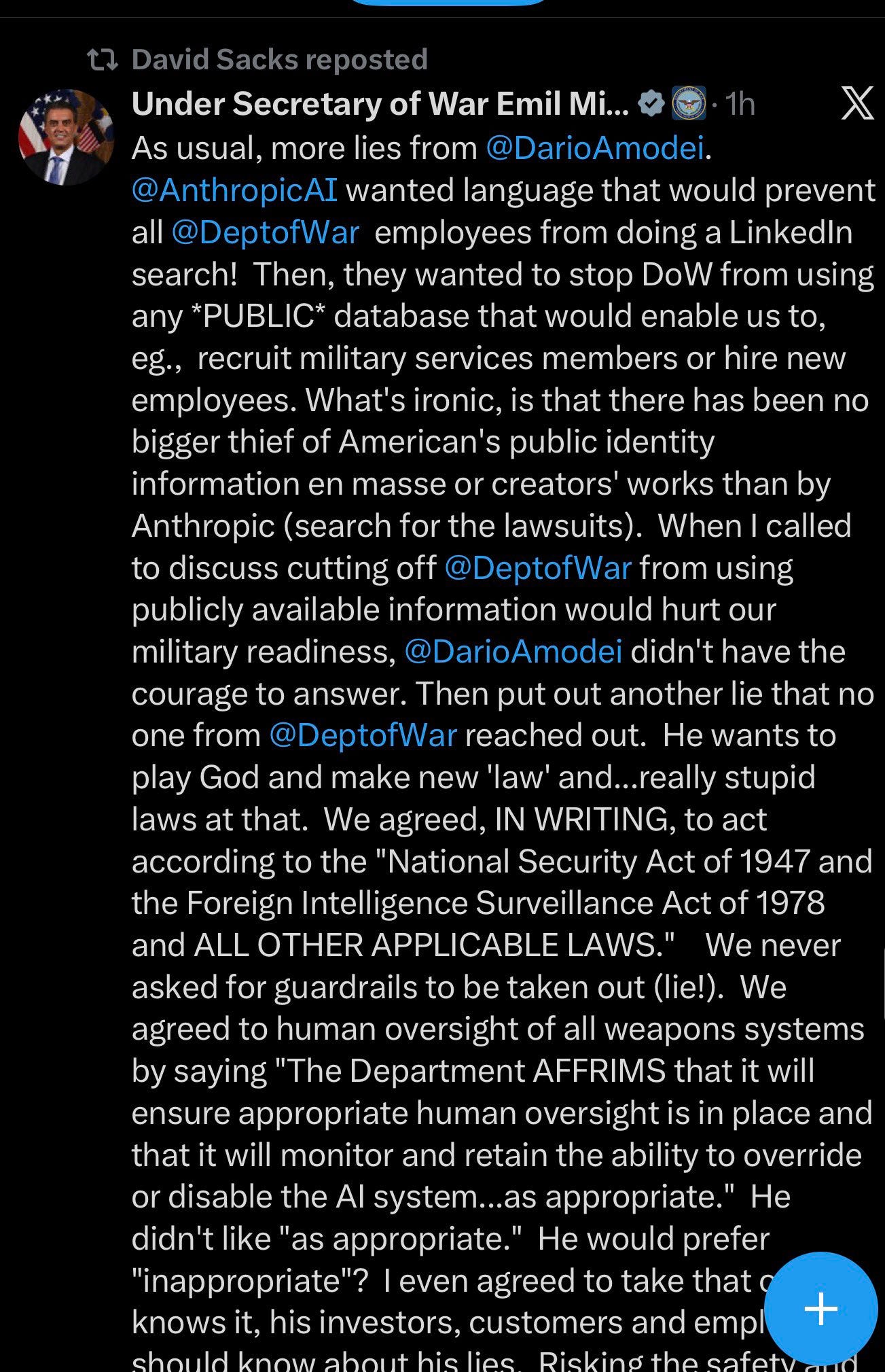

Then Michael went back on tilt. He deleted the post, but we have the screenshot.

Completely unhinged behavior here in response to the Atlantic and New York Times articles.

I mean, the new version is still unhinged, but he deleted the copyright section. This is not the first time he’s talked about copyright like that.

Donald Trump can pull off that style. He makes it work. Accept no imitations.

This was attempted corporate murder. I think it will not succeed, but it’s not over yet.

Things would have to escalate quite a lot, in ways the markets do not expect and that I do not expect. Otherwise, this will not be an existential event for Anthropic. The government was only a small portion of its business. Trust in Anthropic has otherwise gone up, not down.

The threat to destroy Anthropic with the supply chain risk designation is dangerous, but all the competent patriots and the market both know it is insane and it is rather obviously illegal. I believe any such attempt would probably have to be walked back and would ultimately fail.

But it is 2026 and Hegseth is not a competent actor. I cannot be certain.

As Roon points out, the entire government argument in court would be absurd on its face, and if this is delayed until after the contract then six months is an eternity.

What matters would be if the government manages to strongarm the major cloud providers into walking away from giving compute to Anthropic, as in Google, Amazon and Microsoft. I do not believe Trump wants any part of that.

Anthropic is a private company, so we only have very illiquid proxies to see how much damage people think this all did. We can also look at the movement of major investors and business partners like Amazon, Google and Nvidia, and see that they did not substantially underperform so far.

At its low, in that highly illiquid market, Anthropic was trading at a valuation of around ~$465 billion, down from ~$550 billion previously. They last raised money at a valuation of $380 billion. So yes, this hurt and it hurt substantially, mostly in the form of tail risks. I notice that every single person I know with stock in Anthropic is happy they stood their ground.

By Sunday morning that market had recovered to ~$540 billion, as people concluded cooler heads are likely to prevail.

(I do not directly hold Anthropic stock, because I want to avoid a potential conflict of interest or the appearance of a conflict of interest. That was an expensive decision. I do hold some amount indirectly, including through Google, Amazon and Nvidia.)

Paul Graham reassures startups that if Anthropic is the best model, you should use Anthropic. Even if you later want to sell to DoD and the restrictions somehow stick, you can switch later.

As reported above, it seems what the government actually valued most in this negotiation was the ability to use Claude for mass (primarily actually legal, not ‘we got a government lawyer to come up with an absurd legal opinion’) analysis of massive amounts of existing information.

There is no common legal definition of ‘mass domestic surveillance,’ and when they do forms of it the government calls it something else.

That’s not only a government problem. Here I ask the question, and get 40 answers, most of them different.

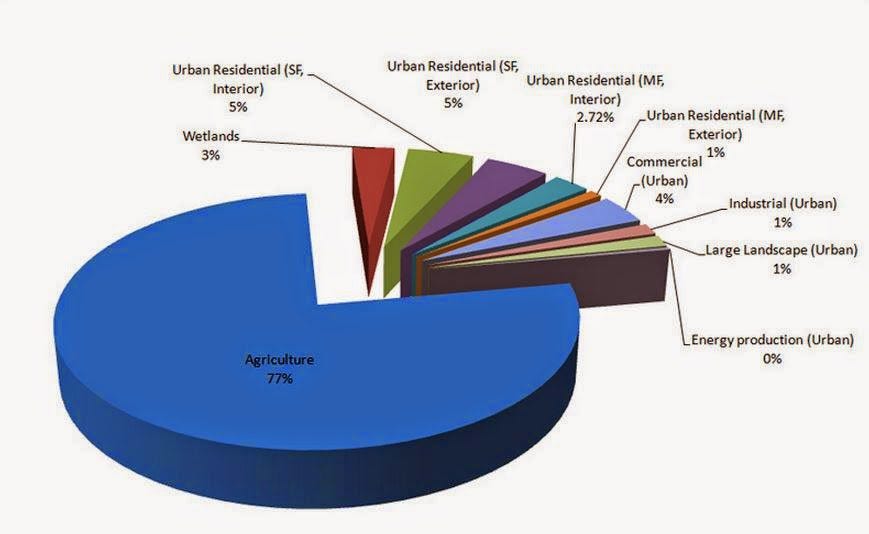

Axios: “That deal would have required allowing the collection or analysis of data on Americans, from geolocation to web browsing data to personal financial information purchased from data brokers, the source added.”

Why would you require this if you didn’t intend to use it? What is it for?

I’m not saying that the DoW is aiming to break the law. I’m saying that in the age of powerful AI the laws do not protect against what Anthropic’s redlines prohibit, that DoW intends to do lawful things that violate those redlines, and that instead of mass domestic surveillance (MDS) they call it something else.

Arram in NYC: “existing legal authorities”

The legal machinery to render mass surveillance a ‘lawful’ has been in place for over a decade. FISA is a secret court of 11 judges which approves 99.97% of surveillance requests. Snowden revealed in 2013 that the court had secretly reinterpreted FISA to authorize bulk collection of all American phone metadata. Only the government’s side is heard. No defense, no adversarial argument, Pure rubber stamped circumvention of the constitution.

Sooraj: The government no longer needs a warrant to surveil you.

Under current law, federal agencies including the NSA legally purchase Americans’ location data, web browsing history, and personal associations from commercial data brokers.

The Fourth Amendment is bypassed entirely through the Third Party Doctrine, which holds that you lose your expectation of privacy when you share information with a third party. Every app on your phone is a third party.

What used to require thousands of analysts working for years now happens automatically across an entire population. AI systems ingest millions of legally “public” data points and synthesize them into comprehensive behavioral profiles. Where you sleep, who you talk to, what you read, what you search, etc.

… Congress has the ability to close the data broker loophole and extend Fourth Amendment protections to match the reality AI has created. Until it does, the constitutional prohibition against general warrants exists only in theory, while the government purchases its way around it at industrial scale.

I believe Sooraj is making a slight overstatement, but that is not material here.

I have private sources that confirm the story here from Shanaka Anslem Perera, and that attribute the ultimate use of the desired permissions to DOGE, created by Elon Musk, as well as one ultimately attributing it to the aim of building a classified mass surveillance network to track illegal immigrants at the behest of Musk and Miller. This exact kind of data collection and analysis is the central point.

Shanaka Anslem Perera: Anthropic just announced it will take the Trump administration to court over the supply chain risk designation. And in the same breath, Axios revealed the detail that changes everything about this story.

While Anthropic was being blacklisted for refusing to allow mass surveillance, the Pentagon’s own “compromise deal” that Under Secretary Emil Michael was offering on the phone at the exact moment Hegseth posted the designation on X would have required Anthropic to allow the collection and analysis of Americans’ geolocation data, web browsing history, and personal financial information purchased from data brokers.

Read that again. The Pentagon spent two weeks saying it has no interest in mass surveillance of Americans. Then the deal they actually put on the table asked for access to your location, your browsing history, and your financial records.

They told us Anthropic was lying. The contract language told us Anthropic was right.

AI changes in kind the data analysis that becomes available for a wide variety of purposes.

Joshua Batson: For those wondering how mass domestic surveillance could be consistent with “all lawful use” of AI models, I recommend a declassified report from the ODNI on just how much can be done with commercially available data (CAI): “…to identify ever person who attended a protest”

There’s an important distinction between law and policy. A policy not to use bulk data to make profiles of Americans can be changed unilaterally by the Executive. Laws require oversight from congress.

“CAI can disclose, for example, the detailed movements and associations of individuals and groups, revealing political, religious, travel, and speech activities.”

“CAI could be used, for example, to identify every person who attended a protest or rally based on their smartphone location or ad-tracking records.”

“Civil liberties concerns such as these are examples of how large quantities of nominally “public” information can result in sensitive aggregations.”

As the government report says, the scope and scale of commercially available information (CAI), which is publicly available information (PAI), is radically beyond what our current laws foresaw.

Antonio Max: CAI is hardly the ceiling. See this.

Len Binus: the distinction between “surveillance” and “commercially available data” is a legal fiction that lets agencies bypass the fourth amendment by purchasing what they can’t subpoena. AI doesn’t create new surveillance — it makes existing data actionable at scale.

dave kasten: Seems clear at this point from Axios reports that DoW wanted to use Claude models for mass analysis of domestic commercial data, possibly fusing them with government data.

At least one use case is obvious.

Consider this (from an anonymous explainer):

Their definition of surveillance isn’t your definition:

As mentioned above, the US government doesn’t have a formal legal definition of domestic mass surveillance, only “bulk collection.” And the US government has, basically long maintained that even if they hoover up a bunch of information indiscriminately, they haven’t done bulk collection so long as their individual queries against that mass database are more targeted when humans look at them. As a result, at least one Director of National Intelligence said under oath “no” when asked “Does the NSA collect any type of data at all on millions or hundreds of millions of Americans?” even though NSA has admitted it does by the ordinary meaning of this question.

On top of that, a large portion of what you think is ‘domestic’ surveillance is, legally, foreign. The laws on all this have been royally messed up for quite a long time, under both parties, and the existence of current levels of AI makes it much, much worse.

If they use ‘third party data,’ the government usually considers that fully legal.

If you combine that with use of Claude or ChatGPT, it means they can do anything and it will be ‘legal use,’ unless you have a specific carve-out that stops it.

After agreeing to language of ‘all lawful use,’ even if this also refers to laws at time of signing, it is hard to see how OpenAI can prevent this sort of analysis from happening.

This is not a new phenomenon.

The government is constantly trying to get all the big tech companies to spy on you on their behalf, including compelling them to do so. They don’t want you to have access to encryption. They want the tech companies to unlock your phone. They want backdoors. It has always been thus.

Keith Rabois (1M views): Imagine Apple sold computers or iPads to the DOD and tried to tell the Pentagon what missions could be planned on their computers.

Samuel Hammond QTs with: Wikipedia: The Apple–FBI encryption dispute concerns whether and to what extent courts in the United States can compel manufacturers to assist in unlocking cell phones whose data are cryptographically protected. There is much debate over public access to strong encryption.

toly: Yea, apple can say no, government can say we can’t rely on you. No one is entitled to Apples work or government contracts. Why is this such a big deal? If Anthropic doesn’t want to do it, some other firm will.

Matthew Yglesias: I mean if the Pentagon signed a contract with Apple to buy iPads and then decided retroactively that it didn’t like the terms of the contract and so it was going to try to do everything in its power to destroy Apple as a company, that would be pretty bad.

Nobody is saying that the Pentagon should be forced to buy Anthropic’s services on terms that the Pentagon doesn’t like — but if you don’t like the terms just don’t buy the product.

Yes, exactly. That’s how it should work. Alas, the situation was more like this:

Andy Coenen: Imagine if the government tried to force Apple to add NSA backdoors to all of their devices by threatening to make it illegal for anyone doing business with the government to use macs.

They may or may not actually have such access, but for the sake of argument let’s presume they don’t, and consider that situation.

As Jacques says here, a large portion of this dispute is that the law has not caught up with AI, and that the law had largely eroded our civil liberties even before AI.

You can make the argument that since this is technically legal under current law, that makes it ‘democratic’ and so no one has any right to object. That’s not how a Republic works, and to the extent democracy is a positive ideal, it’s not how that works either.

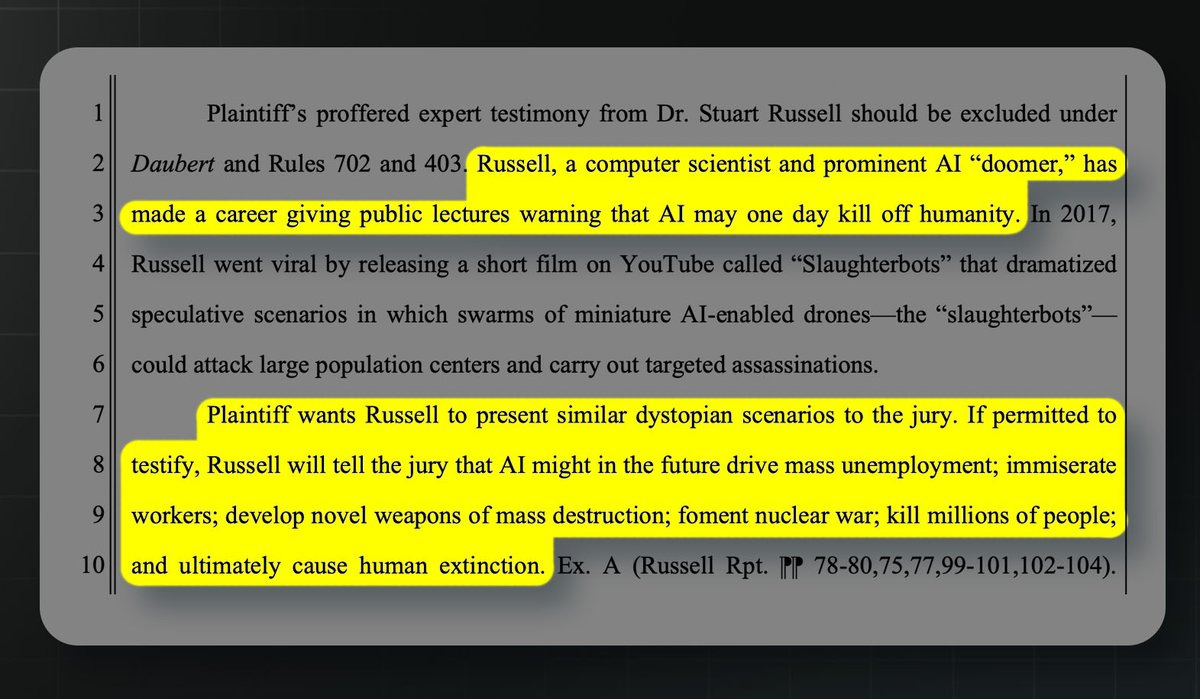

This is one official explanation of formal differences, quoted in part to confirm that the key contract terms OpenAI accepted were terms Anthropic rejected.

Senior Official Jeremy Lewin: For the avoidance of doubt, the OpenAI – @DeptofWar contract flows from the touchstone of “all lawful use” that DoW has rightfully insisted upon & xAI agreed to. But as Sam explained, it references certain existing legal authorities and includes certain mutually agreed upon safety mechanisms. This, again, is a compromise that Anthropic was offered, and rejected.

Even if the substantive issues are the same there is a huge difference between (1) memorializing specific safety concerns by reference to particular legal and policy authorities, which are products of our constitutional and political system, and (2) insisting upon a set of prudential constraints subject to the interpretation of a private company and CEO. As we have been saying, the question is fundamental—who decides these weighty questions? Approach (1), accepted by OAI, references laws and thus appropriately vests those questions in our democratic system. Approach (2) unacceptably vests those questions in a single unaccountable CEO who would usurp sovereign control of our most sensitive systems.

It is a great day for both America’s national security and AI leadership that two of our leading labs, OAI and xAI have reached the patriotic and correct answer here.

Lewin is claiming that there were no substantive differences. If anything, OpenAI claims in its post to have included a third (highly reasonable) red line. Matt Parlmer is one of many to notice that Lewin and Altman seem to be describing very different deals.

After many rounds, I believe the actual differences that matter are simpler than this.

-

OpenAI trusts DoW, is fully fine with ‘all legal use’ and letting DoW decide what that means, and is counting on its technical safeguards, safety stack and forward engineers to spot if the DoW does something heinous and illegal. Its leverage includes the threat that if OpenAI is forced to pull the plug DoW would not have good options, and that for political and economic reasons DoW can’t try to destroy OpenAI.

-

Anthropic is not fine with some uses that DoW considers legal and wants to do, and wants language that prevents such actions, with no way to weasel out of it. But they’re fine delivering a basically frictionless system that lets DoW do what it wants in the moment, trusting that DoW will be unwilling to outright break the contract terms, or that they’d find out if DoW went rogue on them.

Notice that the DoW accusations against Anthropic about asking for operational permission in a crisis are exactly backwards. Claude will work in a crisis and was modified to refuse less, although a given use might violate the contract, which would be dealt with later. ChatGPT might refuse in the moment when it’s life and death.

OpenAI’s terms may or may not work or amount to a hill of beans. Too soon to tell. It could work in practice, or it could end up worthless.

We do know exactly why they do not work for Anthropic and DoW, in either direction.

As Lewin notes here, both the Obama and Trump administrations have taken actions that many objected to as rather obviously unlawful, and basically nothing happened.

And again he brings up the potential of ‘pulling the plug mid operation,’ which is physically impossible with Claude in this context but could inadvertently happen with ChatGPT. And any sensible contract would include a wind down even if it was terminated for clear violations, to protect national security.

As described above, it instead comes down to the claimed distinction in paragraph two, which boils down to the following components:

-

OpenAI in its extra rules referred to particular ‘legal and policy authorities’ rather than to distinct terms.

-

Anthropic is claimed to want an approach that ‘vests those questions in a single unaccountable CEO who would usurp sovereign control of our most sensitive systems.’

Yeah, that’s not what any of this is about.

The first is not a meaningful distinction if it covers the prohibitions. If OpenAI’s rules refer to particular existing legal and policy authorities, then indeed it permits ‘all legal use’ which includes large amounts of domestic surveillance, and with a flexible government lawyer will include a lot of other things as well.

Nor is it meaningful as a matter of authority and law. The fact that these things happen to be on the books at present does not stop them from being contract terms once they are written into a contract, and writing them in still removes them from what would otherwise be the democratic authority. But also, very fundamentally, part of democratic law is contract law, and the ability to agree to terms.

The second one is, frankly, rather Obvious Nonsense that is going around. Ignoring that CEOs are accountable (both to the board and to the government and thus ultimately one would hope the people), they are claiming that Anthropic demanded that Dario Amodei be able to decide whether the terms of the contract were fulfilled at his discretion, rather than the government deciding, or it being settled by a court of law. At most, there may have been some questions that were left to be defined in good faith later, as per normal.

My jaw would be on the floor if this was indeed insisted upon or even suggested. That’s not how anything ever works. At most, this was Anthropic asking for carve outs for its two red lines, and then adding something like ‘without permission,’ so that in a pressing situation you could make an exception. You can take that clause out, then.

Alternatively, perhaps this is a reference to ambiguity of terms in existing contracts. In particular, the claim here is that the contract failed, as the rest of American law does, to define ‘domestic surveillance.’ Or it could simply be ‘sometimes things are not clear in edge cases.’ One sticking point of the negotiations was exactly that Anthropic was trying to pin down various definitions and phrases so they would be unambiguous and enforceable, and to clear away what they felt were ‘weasel words’ from proposed DoW language.

In particular, DoW kept wanting ‘as appropriate,’ which mostly invalidates any barriers, although they dropped this demand in the end to try and get other things they wanted more.

But again, having an underdefined term in a contract does not mean it means whatever Dario Amodei thinks it means. At most it means you can sue, and that’s not exactly something one does lightly to DoW over a technical violation.

If Dario Amodei felt the contract was broken, he could, like with any other contract, at most do one of two things. He could choose to terminate the contract under whatever terms allow for that (as can OpenAI), although at obvious risk of government retaliation for doing so. Or he could sue in court, where a government court would determine if there was indeed a violation, under conditions highly favorable to DoW.

If Dario tried to suddenly shut down the system anyway, that would not even be physically possible on classified networks, and also they could arrest him or worse.

This also implies that Sam Altman does not have any role in determining whether use was lawful, or whether it is valid under the terms of the contract. Sam Altman affirms this under ordinary circumstances, but says that sufficiently clearly illegal actions, especially constitutional violations, would be different.

Thus, in the negotiations with Anthropic, there were two things centrally going on.

First, the Department of War wanted the ‘all legal use’ language, and failing that they wanted to avoid one particular carveout to that related to mass surveillance.

Second, Anthropic was attempting to remove various ‘weasel words’ and clauses that would allow the Department of War to circumvent restrictions.

We can indeed see some of those weasel words in the brief wording shared by OpenAI. OpenAI isn’t relying on these terms to bind DoW, they’re relying on the safety stack and on trust.

Let’s go through every word they shared, and see why they don’t actually bind DoW:

The Department of War may use the AI System for all lawful purposes, consistent with applicable law, operational requirements, and well-established safety and oversight protocols.

All lawful use.

The AI System will not be used to independently direct autonomous weapons in any case where law, regulation, or Department policy requires human control

They can do anything they want unless the department policy requires human control, so again all lawful use. We already have highly effective autonomous weapons in some cases, such as missile defense. Directive 3000.09, the only plausible barrier, we’ll get to in a second.