All the news that’s fit to print, but has nowhere to go.

This important rule is a special case of an even more important rule:

Dirty Hexas Hedge: One of the old unwritten WASP rules of civilization maintenance we’ve lost is: when someone behaves insincerely for the sake of maintaining proper decorum, you respond by respecting the commitment to decorum rather than calling out the insincerity.

The general rule is to maintain good incentives and follow good decision theory. If someone is being helpful, ensure they are better off for having been helpful, even if they have previously been unhelpful and this gives you an opportunity. Reward actions you want to happen more often. Punish actions you want to happen less often. In particular beware situations where you punish clarity and reward implicitness.

Another important rule would be that contra Elon Musk here you shouldn’t ‘sue into oblivion’ or ‘ostracize from society’ anyone or any organization who advocated for something you disagree with, even if it plausibly led to a bad thing happening.

Even more importantly: When someone disagrees with you, you don’t use the law to silence them and you most definitely don’t choose violence. Argument gets counterargument. Never bullet, no arrest, no fine, always counterargument. I don’t care what they are advocating for, up to and including things that could plausibly lead to everyone dying. It does not matter. No violence. No killing people. No tolerance of those who think they can have a little violence or killing people who disagree with them, as a treat, or because someone on the other side did it. No. Stop it.

This seems like one of those times where one has to, once again, say this.

This seems like a lot of percents?

Benjamin Domenech: New death of the West stat: 42 percent of people in line to meet Buzz Lightyear at Disney theme parks last year were childless adults.

Source: author A.J. Wolfe on Puck’s The Town podcast.

PoliMath: When I went to Disney in 2019, my kids were in line to meet Sleeping Beauty and the guy in front of us was a 30ish single dude who gave her a bouquet of roses and weirdly fawned over her. I admired the actress for not displaying her disgust.

If that is who gets the most value out of meet and greets, okay then. It also presumably isn’t as bad as it sounds, since it has been a long time since Buzz Lightyear has been so hot right now; I presume characters in recent movies have a different balance. The price sounds sufficiently high that they should add more copies of such characters for meet and greets until the lines are a lot shorter? How could that not raise profits long term?

Some notes from Kelsey Piper on literary fiction.

A-100 Gecs (1m views): the pearl-clutching about no young white men being published in The New Yorker is so funny like men, writ large, are basically a sub-literate population in the US. men do not read literary fiction. if you have even a passing interaction with publishing you realize this.

Kelsey Piper:

- Open disdain for people on the basis of their sex is bigoted and bad.

- Men obviously did read and write literary fiction for most of the history of literary fiction; so, if that has changed, I wonder why it has changed! Perhaps something to do with the open disdain!

Like in general, I try not to waste too much of my time on “this hobby has too few Xs” or “this hobby has too few Ys,” since that can happen totally organically, and pearl-clutching rarely helps.

However, if the hobbyists are saying “our hobby has no men because they are a ‘subliterate population,’” then I suddenly form a strong suspicion about why their hobby has no men, and it’s not that people innocently have different interests sometimes.

John Burn-Murdoch gives us many great charts in the FT, but often we lack key context and detail, because as John explains he only has very limited space and 700 words and everything needs to be parsable by a general audience, so spending space on methodology or a full y-axis is very expensive. We appreciate your service, sir. It would still be great to have the Professional Epistemically Ideal Edition available somewhere. Any chance we can do that?

Reading books for pleasure continues to decline by roughly 3% per year. Alternatives are improving, while books are not improving, indeed the best books to read are mostly old books. So what else would you expect? Until recently I would say people are still reading more because a lot of screen use is reading, but now we have the rise of inane short form video.

Madeleine Aggeler figures out very basic reasons why you might want to not be constantly lying, and that she would be better off if she stopped lying constantly and that you really can tell people when you don’t want to do something, yet she fails to figure out that not lying does not require radical honesty. You can, and often should, provide only the information needed.

The IQ tests we have are drawn from a compact pool of question types and so can, unsurprisingly, be trained for and gamed. If you want to raise the result of your IQ test this way, you can totally do that. Goodhart’s Law strikes again. That doesn’t mean IQ is not a real or useful thing, or that these tests are not useful measures. It only means that if you want to make the (usually low-IQ) move of pretending to be higher IQ than you are by gaming the test, you can do that. So you need to not give people strong incentive to game the tests.

I often hear discussion of ‘masking’ where autistics learn how to fake not being autistic and seem like normies, or similarly where sociopaths learn not to act like sociopaths (in the clinical sense, not the Rao Gervais Principle sense) and seem like normies, because they realize that works out better for them. I mention this because I notice I rarely hear mention of the fact that (AIUI) the normies are mostly doing the same exact thing, except that they more completely Become The Mask and don’t see it as a strange or unfair or bad thing to do this kind of ubiquitous mimicry, and instead do it instinctively?

There is an obvious incentive problem here, very central and common.

Eugyppius: when you’re with girl, do not quietly remove bugs. call her attention to bugs first, then heroically remove them for her. they love this.

Lindy Man: This is also good advice for the workplace. Never fix anything quietly.

Sean Kelly: When I discover a bug and figure out the solution, I don’t fix it.

I have an accomplice report it and play up how bad it is in the stand up.

Then I sagely chime in, “I bet I can figure that one out.”

If the bug looks tough, the accomplice suggests the H-1B with the shortest queue.

Caroline: People will fix things quietly and then complain they’re underappreciated. Does anyone even know what you did? lol

Kyle Junlong: ah yes, “half the work is showing your work.”

honestly this is so powerful. i’m realizing how valuable communication and visibility is, not just in work but in relationships and life.

i used to think managing other people’s perception of me was stupid and frivolous, but now i realize how *i* judge other people is solely based on my perception (eg., the convenient information) i have of them. so of course it makes sense to present myself well, because i like those who do present themselves well to me.

Over time, if you don’t take credit for things, people notice that you silently fix or accomplish or improve things without taking credit or bothering anyone about it, and you get triple credit, for fixing things, for doing it seamlessly and for not needing or requesting credit. The problem is, you need a sufficiently sustained and observed set of interactions, and people sufficiently aware of the incentive dynamics here, so that you can move the whole thing up a meta level.

There is also the reverse. If you know someone who will always loudly take credit, you know that at most they are doing the things they loudly take credit for. If that.

I am generally skeptical that we should be worried about inequality, as opposed to trying to make people better off. One danger that I am convinced by is that extreme inequality that is directly in your face can damage your mental health, if you see yourself in competition with everyone on the spectrum rather than being a satisficer or looking at your absolute level of wealth and power.

Good Alexander: I think the main reason you find a lot of very unhappy tech people even at the highest levels

– when you’re a typical employee everyone around you is making .5-2x what you are

– when you start breaking out wealth goes on log scale. ppl with 10-1000x your net worth become common

– this is native to network effects, scale associated with AI training, and other winner take all dynamics in tech

– all of VC is structured this way as well — (1 unicorn returns entire fund rest of investments are zero) which psychologically reinforces all or nothing thinking

– this makes competitive people miserable

– this leads them to do hallucinogens or other psychoactive substances in order to accept their place in the universe

– the conclusions drawn from these psychoactive substances are typically at direct odds with how they got to where they are

– and after getting one shotted they’re still ultimately in a hard wired competition with people worth 10-1000x more than them

– due to the structure of technology it becomes more or less impossible to break out of your ‘bracket’ without engaging in increasingly dark things

– you realize that time is running out — and become aware of synthetic biology (peptides, genetic alteration of children)

– you end up getting involved in police state investments, gooning investments, or crypto — and view it as non optional to take the gloves off bc everyone around you is doing the same thing

– you’re on a permanent hedonic treadmill and you can’t ever get off or go back to where you were before bc after doing all of the things you’ve done you can’t possibly ever relate to normal humans

– you get involved with politics or Catholicism or other Lindy cults to try and get off the treadmill

– of course it won’t work and you bring all the weird baggage directly into politics or religion and poison those wells too

the current configuration of economics/ wealth distribution is pretty solidly optimized to drive the wealthiest people in society batshit insane, which – to some extent – explains a lot of things you see around you

w this framework you can understand:

Thiel Antichrist obsession

Kanye getting into Hitler and launching a coin

Trump memeing himself into becoming President then running again to escape imprisonment

Elon generating Ani goon slop on the TL

A16z wilding out

Eliezer Yudkowsky: – supposed “AI safety” guys (outside MIRI) founding AI companies, some of whom got billions for betraying Good and Law.

I have felt a little pressure to feel insane about that, but it is small compared to all the other antisanity pressures I’ve resisted routinely.

David Manheim: This is definitely not wrong, even though it’s incomplete:

“the current configuration of economics/ wealth distribution is pretty solidly optimized to drive the wealthiest people in society batshit insane, which – to some extent – explains a lot of things you see around you”

Speaking from experience, it is quite the trip to be in regular contact and debates with various billionaires. It can definitely make one feel like a failure or like it’s time to make more money, even though I have enough money to not worry about money, especially when you think you definitely could have joined them by making different life choices, and there’s a chance I still could. Whereas, when in prior phases of life I was not in such contact, it was easy not to care about any of that.

It helps to remind myself periodically that if I had a billion dollars, I could make the world a better place, but except insofar as I prevented us all from dying my own life would, I anticipate, not actually be better as a result. At that level, there isn’t that much more utility to buy, whereas more money, more problems.

It’s not easy buying art.

cold: Bro you make $500k at OpenAI you can go to the art fair and buy a little $10,000 painting to hang up in your SF apartment’s living room

You tell them this and then they’ll be like “I’m sorry 🥺 do you think a $15,000 desert meditation retreat will fix me so I’m not like this anymore??”

Daniel: The lack of personal art purchasing in SF is insane. A $3000 oil on canvas can change your whole living room and they won’t do it.

I know people who earn much more than $500k at openai and their living rooms are making them depressed.

Paul Graham: The main reason rich people in SV don’t buy art is that it does actually take some expertise to do it well. And since the kind of people who get rich in SV hate to do things badly, and don’t have time to learn about art now, they do nothing.

diffTTT: Rich SV people need an expert to tell them what kind of art they like?

Paul Graham: In a way. They need to learn how not to be fooled by meretricious art, how to avoid the immense influence of hype and fashion, etc. Most people have to figure this out for themselves or from books, but a truly competent expert could help.

If it takes some expertise to buy art well, that is a real problem with buying art. The thing is, if you do not buy art well, you will lose most of the money you spent on art, and also you will look like a fool, and also the art will not make you feel better or much improve your living room.

That leaves four options.

- The one these people and I have taken, which is to not buy art.

- Buy cheap art that you don’t mind looking at. Safe, but still annoying to do, and then you have to look at it, does it actually make you feel better?

- Spend a lot of time figuring out how to buy expensive art properly. Yeah, no. I understand that Paul Graham can be in renaissance man mode, but if you are coding at OpenAI at $500k+ per year the cost of this is very, very high, and also you probably don’t expect the skill to stay relevant for long.

- Find someone you trust to do it for you? Not cheap, not all that easy or quick to do either, and you are still the one who has to look at the damn thing.

Besides, who is to say that a constant piece of artwork actually helps, especially if it doesn’t hold particular meaning to you? I mean, yeah, in theory yeah we should get some artwork here, I suppose, but no one wants to do the work involved, also it should definitely be cheap art. At one point I bought some Magic: The Gathering prints for this but we never got around to hanging them.

Also at one point I tried to buy the original art for Horn of Greed, which at the time would have cost like $3k. I say tried because my wife wouldn’t let me, but if anyone wants to buy me a gift at some point, that or another original Magic art I’d look back on fondly seems great.

If there is one thing to learn from rationality: Peter Wildeford is 100% right here.

Wikipedia (Wet Bias): Wet bias is the phenomenon whereby some weather forecasters report an overestimated and exaggerated probability of precipitation to increase the usefulness and actionability of their forecast.

The Weather Channel has been empirically shown, and has also admitted, to having a wet bias in the case of low probability of precipitation (for instance, a 5% probability may be reported as a 20% probability) but not at high probabilities of precipitation (so a 60% probability will be reported as a 60% probability).

Some local television stations have been shown as having significantly greater wet bias, often reporting a 100% probability of precipitation in cases where it rains only 70% of the time.

Colin Fraser: If you believe it will rain with probability P, and getting caught in the rain without an umbrella is X times worse than getting caught in the sun with an umbrella, then it’s optimal to predict rain whenever P ≥ 1/(1+X). So e.g. for X=2 you should predict rain at P ≥ 1/3.

Peter Wildeford: I think you should only predict rain according to the correct p(rain), but you can change your behavior around umbrella carrying at lower values of p(rain).

Colin Fraser is right if you effectively can only predict 0% or 100% rain, and the only purpose of predicting rain is that you take an umbrella if and only if you predict rain.

Peter Wildeford is right that you can say ‘it will rain 40% of the time, therefore I should take an umbrella, even though more than half the time I will look foolish.’
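For concreteness, here is a minimal sketch of where Colin Fraser's threshold comes from, kept separate from the reported probability as Peter Wildeford suggests. It assumes a cost of 1 for carrying an umbrella on a dry day and a cost of X for getting caught in the rain without one, so carrying wins when P·X ≥ (1 − P), which rearranges to P ≥ 1/(1+X). The function names are hypothetical, chosen only for illustration.

```python
def umbrella_threshold(x: float) -> float:
    """Lowest p(rain) at which carrying an umbrella has the lower expected cost.

    Assumption for this sketch: carrying on a dry day costs 1 unit, and
    being caught in the rain without an umbrella costs x units.
    """
    if x <= 0:
        raise ValueError("x must be positive")
    # Carry when p * x >= (1 - p), i.e. p >= 1 / (1 + x).
    return 1.0 / (1.0 + x)


def should_carry(p_rain: float, x: float) -> bool:
    """Decide about the umbrella from an honest p(rain), without distorting it."""
    if not 0.0 <= p_rain <= 1.0:
        raise ValueError("p_rain must be a probability")
    return p_rain >= umbrella_threshold(x)


if __name__ == "__main__":
    # With X = 2 the threshold is about one third, matching the example above.
    print(umbrella_threshold(2.0))
    print(should_carry(0.40, 2.0), should_carry(0.30, 2.0))
```

The point of splitting the two functions is that the forecast stays an honest probability, and only the decision rule depends on how much you hate getting wet.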

The weather reports are assuming that people have a typical bias, that people respect 40% chance or more, but not 30%. Thus, there is a huge jump (AIUI, and Claude confirms). If the Google weather app says 30%, treat that as at most 10%, but the app doesn’t want to get blamed so it hedges. Whereas if it says 40%? That’s pretty much 40%, act accordingly.

If you don’t know the way the conversion works, and you don’t have the typical biases, you’ll respond in crazy wrong fashion. The bias and nonlinearity become self-perpetuating.

The right rule in practice really is to take the umbrella at 40% and not at 30%, almost no matter what the cost-benefit tradeoff is for the umbrella, since it is obviously wise at 40% and obviously unwise at 10%.

The ‘predict 100% instead of 70%’ thing that other sources do is especially maddening. This means both that you can’t tell the difference between 70% and 100%, and that on the regular you notice things that were predicted as Can’t Happen actually happening. The weather forecaster is consigned to Bayes Hell and you can’t trust them at all.

As Bryan Caplan notes, this is frequently a better question than ‘if you’re so smart, why aren’t you rich?’ It is a very good question.

His rejections of various responses are less convincing, with many being highly oversimplifying and dismissive of many things people often care about deeply. He presents answers as if they were easy and obvious when they are neither of those things.

I endorse rejection of the objection ‘the world is so awful that you have to be stupid to be happy.’ I don’t endorse his reasoning for doing so. I do agree with him that the world is in a great spot right now (aside from existential risks), but I don’t think that’s the point. The point is that it isn’t improving your life or anyone else’s to let your view of the world’s overall state make you indefinitely unhappy. If you think you ‘have’ to be stupid not to be perpetually unhappy for whatever external reason, you’re wrong.

I also agree with him that one good reason to not be happy is that you are prioritizing something else. He retorts that few people are extreme Effective Altruists, but this is not required. You don’t have to be some fanatic, to use his example, to miserably stay together for the kids. What you care about can be anything, including personal achievements other than happiness. Who says you have to care about happy? Indeed, I see a lot of people not prioritizing happiness enough, and I see a lot of other people prioritizing it far too much.

There’s also another answer, which is that some people have low happiness set points or chemical imbalances or other forms of mental problems that make it extremely difficult for them to be happy. That’s one of the ways in which one can have what Bryan calls ‘extraordinary bad luck’ that you can’t overcome, but there are other obvious ways as well.

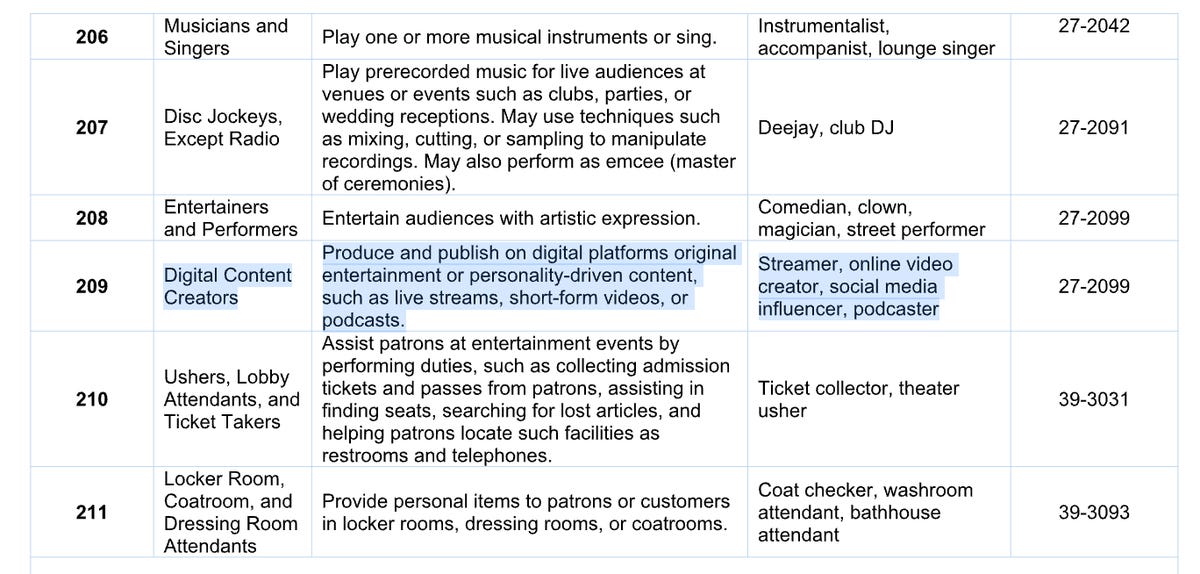

‘Digital Content Creators’ joins the list of professions that officially face ‘no tax on tips.’ Influencers and podcasters are explicitly getting a tax break.

From a public choice standpoint I suppose this was inevitable. However, this phases out at higher income levels, which means that none of the prominent people you are thinking of likely can benefit from this. As in, Republicans proudly embraced progressive taxation to partially offset regressive tariffs? So yes, I do accept tips, and very much appreciate them along with subscriptions, but alas after consulting with my tax lawyer (GPT-5 Pro) I have concluded that I cannot benefit from this policy.

Did men dress better and therefore look better in the past? Derek Guy makes the case that they did and attempts to explain why and what he means by better.

I think I agree that in a purely aesthetic sense people did dress ‘better,’ but that is because people in the past put massive investment into this. They spent a huge percentage of their income on clothes, they spent a large percentage of their time and attention on understanding, creating and maintaining those clothes, and they were willing to suffer a lot of discomfort. And they faced huge social and status pressures to devote such efforts, with large punishments for not measuring up to norms.

Derek notes our reduced tolerance for discomfort and lack of effort, but skips over all the extra money and time and cognitive investments, and seems to lack the ‘and this is good, actually’ that I would add. I think it’s pretty great that we have largely escaped from these obligations. The juice is not worth the squeeze.

Santi Ruiz interviews Dr. Rob Johnston on the intelligence community and how to get good intelligence and make good use of it. There are lots of signs of deep competence and dedication, but clearly the part where they deliver the information and then people use it is not going great. Also not going great is getting ready for AI.

Nate Silver explains what Blueskyism is, as in the attitude that is pervasive on Bluesky, and why it is not a winning strategy in any sense.

Texas becomes the seventh state to ban lab grown meat. Tim Carney becomes the latest person to be unable to understand there could be any reason other than cronyist protectionism to want this banned. Once again I reiterate that I don’t support these bans, but it seems disingenuous to pretend not to understand the reasons they are happening. James Miller offers a refresher of the explanation, if you need one, except that the demands wouldn’t stop with what Miller wants.

No, the Black Death was not good for the economy, things were improving steadily for centuries for other reasons. As opposed to every other past famine or plague ever, where no one looks back and says ‘oh this was excellent for economic conditions.’

It is relatively easy to stay rich once already rich. It is not easy to get rich, or to be ‘good at’ being rich. It is also hard to be rich effectively, including in terms of turning that extra money into better lived experiences, and ‘use the money to change the world’ is even harder.

Roon: its amazing how little the post-economic people i know spend. many people are bad at being rich. you should teach them how to do it.

i think this is often why the children of the mega-rich are the ones who even get close to squandering their parents’ fortunes. when you get rich later into life you often don’t think with enough 0s in terms of personal consumption, donations, having a lavish household staff etc.

Eliezer Yudkowsky: In their defense, once you’ve already got your personal chef, volcano lair with trampoline, and a harem that covers all your kinks, there’s just not much else Earth offers for converting money to hedons.

Zvi Mowshowitz: It is remarkably difficult (and time consuming!) to spend large amounts of money in ways that actually make your life better, if you’re not into related status games.

I got into a number of arguments in the comments, including with people who thought a remarkably small amount of money was a ‘large amount.’ Certainly you can usefully spend substantially more than most people are able to spend and still see gains.

Reasonably quickly you hit a wall, and the Andy Warhol ‘everyone gets the same Diet Coke’ problem, and to do better you have to spend obscene amounts for not that much improvement. Are you actually going to be that much happier with a giant yacht or a private jet? What good is that private chef compared to Caviar or going to restaurants? Do you actually want to live farther away in some mansion? Does expensive art do anything for you cheap art doesn’t? And so on.

Even in the ways that would be good to spend more, you still need to know how to spend more and get value from what you paid, and how to do it without it taking up a ton of your time, attention or stress. Again, this is harder than it sounds.

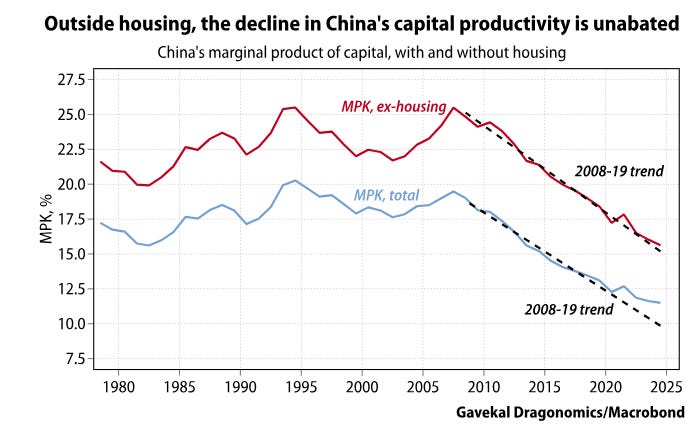

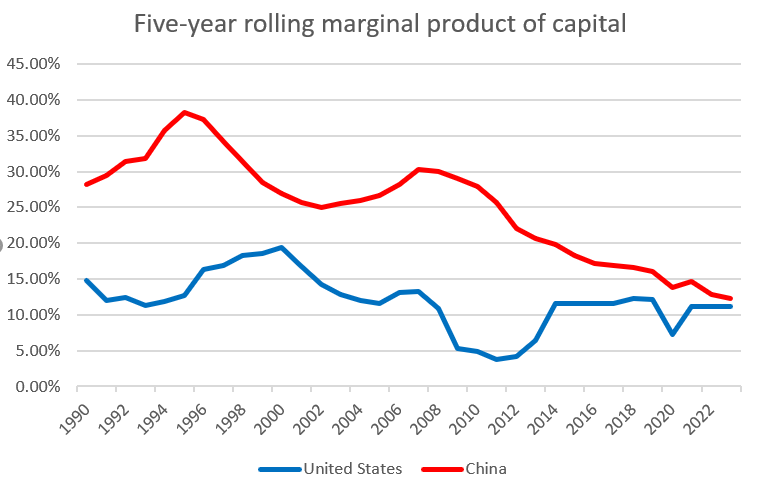

We talk constantly about ‘losing to China’ whereas in China there are reasons to worry that China is losing, not to an outside force but rather in general, and this is on top of a fertility rate that is 1.1 or below and an already declining population:

Mike Bird: Useful chart from @andrewbatson here covering one of the most under-discussed and useful macro metrics around. China’s capital productivity has been in consistent, marked decline even as panic over Chinese industrial prowess has reached fever pitch

Indeed as of 2019-2023 China’s marginal product of capital (basically how much output you’re getting from another unit of capital) was only very slightly higher than that of the US, though the US is at the frontier of GDP per capita and China nowhere near.

Clearly both things can be true – that some of China’s leading industrial firms are incredibly impressive, world-leading, and that in the aggregate they’re not enough to offset the misallocation and inefficiency elsewhere.

He quotes that the manufacturing share of GDP in China, for all our worries about Chinese manufacturing, declined 2-3 percent between 2021 and 2025, with the sector now having narrower margins, lower profits and more losses.

All of this is more reason not to give them opportunity to invest more in AI, and also reason not to catastrophize.

Cate Hall thanks you for coming to her TED talk, ‘A Practical Guide To Taking Control of Your Life.’ Which is indeed the Cate Hall TED Talk you would expect, focusing on cultivating personal agency.

In my startup roundups I muse about why startups offered TMM (too much money, here presumably also at too high a valuation) don’t take the money and then set expectations accordingly. A commenter pointed out that Stripe did do a version of this, although it is not a perfect fit.

Motivation and overcoming fear are tricky. You can get people comfortable with public speaking with compliments. You can also do it by having people, starting with you, come up and give intentionally terrible speeches while they get crumpled papers thrown at them, to show that nothing actually bad happens.

Can national-level happiness be raised or are we doomed to a hedonic treadmill?

When people rate their happiness, are they rating on an absolute scale that reflects a real treadmill effect, or are people simply asking if they are happy compared to what they know?

It seems obviously possible to raise national happiness. One existence proof is that there are very clearly regimes, policies and circumstances that make people very unhappy, and you can do the opposite of those things, at least dodging them.

Also there are things that consistently raise happiness and that vary in frequency greatly over time, for example being married and having grandchildren.

In any case, via MR (via Kevin Lewis) we have a new paper.

Abstract: We revisit the famous Easterlin paradox by considering that life evaluation scales refer to a changing context, hence they are regularly reinterpreted.

We propose a simple model of rescaling based on both retrospective and current life evaluations, and apply it to unexploited archival data from the USA.

When correcting for rescaling, we find that the well-being of Americans has substantially increased, on par with GDP, health, education, and liberal democracy, from the 1950s to the early 2000s.

Using several datasets, we shed light on other happiness puzzles, including the apparent stability of life evaluations during COVID-19, why Ukrainians report similar levels of life satisfaction today as before the war, and the absence of parental happiness.

Tyler Cowen: To give some intuition, the authors provide evidence that people are more likely engaging in rescaling than being stuck on a hedonic treadmill. I think they are mostly right.

This makes tons of sense to me. You get revolutions of rising expectations. There are definitely positional effects and treadmill effects and baseline happiness set points and all that to deal with, but the Easterlin Paradox is a paradox for a reason and things other than income vary as well.

That doesn’t mean life is getting better or people are getting happier. It can also go the other way, and I am very open to the idea that happiness could be declining (or not) in the smartphone era with kids not allowed to breathe outside, and everything else that causes people to feel bad these days both for good and bad reasons. But yeah, from the 1950s to the 1990s things seem like they very clearly got better (you could also say from the 1500s to 1990s, with notably brief exceptions, or earlier, and I’d still agree).

Camp Social is part of a category of offerings where adults go to sleepaway camp with a focus on making friends, complete with bunk beds and color wars and in one case a claimed 75% return rate, although also with staying up until 1:30 getting drunk. The camp counselors are concierges and facilitators. Cost is $884 for two nights and three days, which seems rather quick for what you want to accomplish?

I do buy that this is a good idea.

Radiation is dangerous, but it is a lot less dangerous than people make it out to be, and we treat this risk with orders of magnitude more paranoia than things like ordinary air pollution that are far more deadly.

Ben Southwood: The life expectancy of someone hit with 2,250 millisieverts of radiation in Hiroshima or Nagasaki was longer than the average Briton or American born in the same year. Today in Britain we spend billions controlling radiation levels more than 100,000 times smaller than this.

2,250 millisieverts is a lot of radiation, like getting 225 full-body CT scans in one go. I don’t think anyone would recommend it. But it shows how ridiculous it is that we spend so much time, effort, and money on radiation levels of 1 mSv or 0.1 mSv per year.

Andrew Hammel reports that the Germans are finally on the verge of losing their War on Air Conditioning, as in allowing ordinary people to buy one, because normies actually experienced air conditioning and are not idiots. The standard ‘urban haute bourgeoisie’ are holding out on principle, because they think life is about atoning for our sins and because they associate things like air conditioning with wasteful Americans. As you would expect, the alternative ‘solutions’ to heat wind up being exponentially more expensive than using AC.

I do note that they have a point on this one:

Andrew Hammel: First of all, *every one* of these people has a story about visiting the USA and nearly freezing to death in an over air-conditioned store or office. Every. Damn. One. I can predict exactly when they will wheel out this traumatic tale, I just let it unfold naturally.

I mean, I have that too, to the point that it is a serious problem. This happens constantly in Florida. Even in New York’s hotter summer days, I have the problem that there is nothing I can wear outside while walking to the restaurant, that I also want to be wearing once I sit down at the restaurant. It is crazy how often Americans will use the AC to make places actively too cold. We could stand to turn it down a notch.

Or rather, ‘the’ good news, as Elizabeth Van Nostrand lays out how Church Planting works and finds it very similar to Silicon Valley startups.

A counterargument to last month’s claim about rapidly declining conscientiousness. Conscientiousness has declined far more modestly; the decline still seems meaningful but is very much not a crisis. What John did to create the original graph turns out to have been pretty weird, which was to show a decline in relative percentile terms that came out looking like a Really Big Deal.

Cartoons Hate Her! is on point that germs are very obviously real and cause disease but quite a lot of people’s specific worries about vectors for being exposed to germs and the associated rituals are deeply silly if you stop to think about physics, especially compared to other things the same people disregard.

Sesame Street will give its largest library to YouTube as of January 2026 featuring hundreds of episodes. It is not a perfect program, but this is vastly better than what so many children end up watching. I echo that it would be even better if we included classic episodes as well.

Indeed, we should be putting all the old PBS kids shows on YouTube, and everything else that it would be good for kids to be watching on the margin. The cost is low, the benefits are high. There are low quality versions of the shows of my extreme youth available (such as Letter People and Square One TV) but ancient-VHS quality is a dealbreaker for actually getting kids to watch.

What TV show had the worst ending? There are lots of great answers but the consensus is (in my opinion correctly) Game of Thrones at #1 and Lost at #2.

After that it gets more fractured, and the other frequent picks here I am in a position to evaluate were mostly bad endings (HIMYM, Killing Eve, Enterprise, Battlestar Galactica) but not competitive for the top spot. Dexter came up a lot but I never watched. Supernatural came up a bunch, and I’m currently early in its final and 15th season and is it weird this makes me want to get to the end more not less? Better a truly awful end than a whimper?

To be the true worst ending, it has to not only be awful but take what could have been true greatness and actively ruin the previous experience. You need to be in the running for Tier 1 and then blow it so badly you have to think about whether it even stays in Tier 2 because they poisoned everything. That’s why Game of Thrones and Lost have to be so high.

Indeed those two are so bad that they substantially hurt our willingness to invest in similar other shows, especially Lost-likes, which is enforcing the good discipline of forcing for example Severance to assure us they have everything mapped out.

(Briefly on the others: While at the time I thought HIMYM’s ending was as bad as everyone thinks, on reflection it has grown on me and I think it is actually fine, maybe even correct. Killing Eve’s ending wasn’t good exactly, but I didn’t feel it ruined anything, it was more that all of season 4 was a substantial decline in quality. Battlestar Galactica was rage inducing but I understand why they did what they did and that mostly made it okay, again mostly the show started fantastic and was dropping off in quality generally. Enterprise ended bad, but again not historically bad, whereas the show wasn’t getting bad, and mostly the frustration was we weren’t done.)

I heard the claim recently that Lost’s ending is aging well, as it suffered from the writers assuring us that they wouldn’t do the thing they did, whereas now looking back no one much cares. There’s that, but I still find it unsatisfying, they said they wouldn’t do it that way for a reason, and the worse offense was the total failure to tie up loose ends and answer questions.

Scott Sumner claims the greatest age of cinema was 1958-1963.

Scott Sumner: The public prefers 1980-2015, as you say. The movie experts say the 1920s-1970s were the best.

This highlighted the ways in which our preferences strongly diverge.

Another big hint is that Sumner and the experts claim an extremely high correlation of director with quality of movie. Great directors are great, but so many other things matter too.

As an example, recently I watched Mulholland Drive for the first time, which Sumner says might be his favorite film. I appreciated many aspects of it, and ended up giving it 4/5 stars because it was in many senses ‘objectively’ excellent, but I did not actually enjoy the experience, and had to read an explainer afterwards from Film Colossus to make sense of a lot of it, and even after understanding it a lot of what it was trying to do and say left me cold, so I didn’t feel I could say I ‘liked’ it.

From what I can tell, the public is right and the ‘experts’ are wrong. Also I strongly suspect that We’re So Back after a pause of timidity and sequelitis and superheroes.

Scott Sumner: There are two films that should never, ever be watched on TV. One is 2001 and the other is Lawrence of Arabia. If you saw them on anything other than a very big movie theatre screen, then you’ve never actually seen them.

I haven’t seen 2001 regardless, but on Lawrence of Arabia I can’t argue, because I attempted to watch it on a TV, and this indeed did not result in me seeing Lawrence of Arabia, because after half an hour I was absolutely bored to tears and could not make myself continue. There was a scene in which they literally just stood there in the sand for about a minute with actual nothing happening and I get what they were trying to do with that but it was one thing after another and I couldn’t even, I was out.

What I am confused by is how it would have improved things to make the screen bigger, unless it would be so one would feel forced to continue watching?

Here are his 13 suggestions for films to watch, although I have no idea how one would watch Lawrence of Arabia given it has to be on a big screen?

Vertigo, The Man Who Shot Liberty Valance, Touch of Evil, Some Like It Hot, Breathless, Jules and Jim, Last Year in Marienbad, High and Low, The End of Summer, 8 1/2, L’Avventura, The Music Room, Lawrence of Arabia.

I tried to watch High and Low, and got an hour in but increasingly had the same sense I got from The Seven Samurai, which is ‘this is in some objective senses a great movie and I get that but I have to force myself to keep watching it as outside of moment-to-moment it is not holding my interest’ except with more idiot plot – and yes I realize some of that is cultural differences and noticing them is the most interesting thing so far but I’m going to stick with idiot plot anyway. In addition to the idiot aspects, it really bothers me that ‘pay or pretend to pay the ransom’ is considered the obviously moral action. It isn’t, that is terrible decision theory. The moral action is to say no, yet there is not even a moment’s consideration of this question by anyone.

If the above paragraph is still there when you read this, it means I was unable to motivate myself to keep watching.

Jeff Yang explains some of the reasons Chinese movies tend to bomb in America, in particular the global hit Ne Zha 2. Big Chinese movies tend to be based on super complex Chinese traditional epic stories that Chinese audiences already know whereas Americans haven’t even seen Ne Zha 1. American stories have clear structure, understandable plots, payoffs for their events, central characters, and a moral vision that believes in progress or that things can be better. And they try to be comprehensible and to maintain a tonal theme and target market. Chinese movies, Yang reports, don’t do any of that. Effectively, they assume the audience already knows the story, which is the only way they could possibly follow it.

It’s as if Marvel movies were the big hits, and they didn’t try to be comprehensible to anyone who didn’t already know the characters and comics extensively? Certainly there are some advantages. It might be cool to see the ‘advanced’ director’s cuts where it was assumed everyone had already either read the comics extensively or watched the normal version of the film?

As Jeff says, if they can make money in China, then sure, why not do all this stuff that the Chinese audiences like even if it alienates us Westerners. There are enough movies for everyone. It does still feel like we’re mostly right about this?

Like everyone else I think Hollywood movies are too formulaic and similar, and too constrained by various rules, and thus too predictable, but those rules exist for a reason. When older movies or foreign movies break those rules, or decide they are not in any kind of hurry whatsoever, it comes at a cost. I don’t think critics respect those costs enough.

I strongly agree with Alea here and I am one of the ones who want to stay away:

Alea: Novels with an empty mystery box should be explicitly tagged so I can avoid them. 110% of the joy of reading comes from uncovering all the deep lore and tying up every loose end. Some people get off on vague worlds and unfinished plots, and they should stay the fuck away.

I don’t especially want to go into deep lore in my spare time, but if you are going to convince me to read a novel then even more than with television you absolutely owe it to me to deliver the goods, in a way (with notably rare exceptions) that I actually understand when reading it.

As in: I know it’s a great book but if as is famously said, ‘you don’t read Ulysses, you reread Ulysses’ then you had me at ‘you don’t read Ulysses.’

And you definitely don’t read Game of Thrones until I see A Dream of Spring.

True facts about the whole ‘fleeing Earth’ style of story:

Ben Dreyfuss: The stupidest part of INTERSTELLAR is that the blight starts killing all the crops and after just a few decades they go “ah well, guess it won! Better leave earth. Hope we solve this magic gravity equation with the help of 5 dimensional beings and wormholes.”

“We can’t make okra anymore. Better go explore this all water planet where one hour is 7 years of time and this ice planet where water is alkaline and the air is full of ammonia.”

Pretty sure you can’t make okra there either, buddy.

Kelsey Piper: every single movie about people fleeing Earth involves displaying a mastery of technology which would obviously be more than sufficient to solve the problem they are fleeing Earth about

climate change is not going to make Earth less habitable than Mars so you can’t have people fleeing to Mars because of climate change, you just can’t.

‘there’s a supervolcano/asteroid induced ice age’ oh boy I have some news for you about Mars.

Daniel Eth: Just once I want a movie about people fleeing Earth to have the premise “there are trillions of people, and we have a per capita energy consumption of 100,000 kWh/yr, which is straining Earth’s ability to radiate the waste heat. We must now go to space to expand capacity”

Movie could have a real frontier vibe (space cowboys?) – “of course back in the old world (Earth), population and energy per capita are subject to bureaucratic regulations to prevent total ecosystem collapse; but in new worlds we can freely expand anew”

A recent different case of ‘I can’t help but notice this makes no sense’ was Weapons. The link goes to a review from Matthew Yglesias that I agree with: it does cool things with nonlinearity, and the performances and cinematography are good, except that when you put it together in the second half the resulting actual plot, while consistent and straightforward, makes no sense.

Zvi Mowshowitz reviews Weapons while avoiding spoilers: When you’re in, writing or deciding to go to a horror movie, you make dumb decisions. It’s what you do.

The difference is to him this adds up to 3.5 stars, and to me it means 2.5 stars, once the holes and idiot balls became too glaring, I stopped being able to enjoy the film.

My other problem with Weapons was that the first two acts made me care about various characters and relationships that were rich and detailed and well-executed and acted, and then the third act didn’t care at all about those things, only about the main plot that did not make any sense. There might actually be a pretty great movie here in which the missing kids are a tragedy that never gets explained or solved because what matters is a different third act that focuses on how people react to it.

New Jersey looks to ban ‘micro bets,’ meaning sports bets about individual plays.

Erik Gibbs: The bill’s language defines a micro bet as any live proposition bet placed during an event that pertains specifically to the outcome of the next discrete play or action.

This restriction seems clearly good. I don’t know where the line should be drawn, but I am confident that ‘ball or strike’ bets are over the line.

It is a very light restriction – you can’t bet on a ball or strike or pass or run under this rule, but you can still bet on the outcome of an inning or drive. Bets on the next play have all the worst gambling attributes. They cost a lot individually, they resolve and compound super quickly, they are especially prone to addictive behavior.

Clair Obscur Expedition 33 finishes at Tier 2. It does a lot of things very right and I am very happy to have played it, despite some obvious issues, including some serious balance problems in Act 3.

If someone suddenly buys up the contract on Taylor Swift and Travis Kelce getting engaged from 20% to 40%, and you’re selling into it, yeah, good chance they know. Also this means yes, someone knew and traded on the information in advance. Cool. Oh, and congratulations to both of them, of course.

Sam Black has a new podcast about cEDH.

I don’t understand why Wizards of the Coast continues to be so slow on the banhammer in situations like Cauldron. We saw repeatedly exactly the broken format pattern, such as here where Cauldron starts out at 30% and then goes to 56% after six rounds, then a much larger majority of the top 8s. This continued long past the point where there was reasonable hope it would be fixed by innovation.

Mason Iange: Doesn’t it make sense that in a rotating format like standard, wotc wants people to have confidence in buying product and building decks? Literally no one is going to play standard if the best decks just get banned every 2 month.

Saffron Olive: The way I see is it there are two paths: you design cards conservatively and don’t need to ban anything, or you design cards aggressively and need to ban cards fairly often. Wizards is trying to design cards aggressively and never ban anything, which I don’t think is actually possible.

Patrick Sullivan: What you’re saying is true/relevant, but there are other considerations; the current state of affairs would clearly not be tolerated absent the stuff you’re mentioning. That’s why I think they should allow themselves to be as agile as possible regardless of what they decide to do.

Brian Kowal: The opposite of how I feel about rotating formats. I want it dynamic. I’d rather they make a balanced format. With a rotating format I want to feel like I can innovate. If it is solved I quickly lose interest. There are many formats that never rotate to protect investment.

I think ‘mix it up every time it is solved’ is going too far given how quickly we now solve formats, but the solution has to not be ‘play this deck or else.’ Yes, banning the best deck every two months would make you reluctant to invest in Standard, but effectively banning all but the best deck for months on end, or having to face an endless stream of the same overpowered nonsense even if you’re willing to sacrifice win rate to go rogue, is even worse.

They came out with an explanation and update on the 9th. A big part of this is that they screwed up timing the ban windows, and have a crazy high bar for doing “emergency” bans versus bans on announcement days. They are mitigating this going forward by adding more windows next year, one every major set release.

That points out how crazy the situation was. You’re going to release a set, and then not have a ban opportunity until after releasing the next set? That’s crazy.

Based on past experiences, I believe Brian Kowal is correct that an extended period of a miserable format, with bans that everybody knows have to happen but are extensively delayed, creates a point of no return, where permanent damage to the format and the game begins to accumulate.

Brian Kowal: There should be room in the ban policy for emergency bans. Perception has hit the point of no return. A significant portion of players do not want to touch Standard now. Rotation should be when we are creating players for the next year and this rotation lasts until January 2027! (I’m 80% on this. Somebody let me know if I’m wrong) Players are quitting Standard again to look for other games and formats. New players are choosing not to invest in it.

When format perception hits this state everybody knows something is getting a ban. So a lot of die hard competitives are even taking a break rather than buying 4 copies of the two most expensive cards in Standard.

The best way to go imo is to just suck it up and ban Cauldron immediately. Again, we all know it is happening anyway. Not taking action over and over again and just letting everybody suffer months of a bad format makes WOTC look like they don’t care.

Jenny: WotC took HOW long to decide to do nothing and ruin another Spotlight Series and RCQ season? Using the Arena ladder meta to judge the health of the format is *insane*

Pactdoll Terror: My 2-slot RCQ this Saturday in NYC sold 8 spots. I usually do 50. Someone who built Vivi to grind RCQs would be annoyed that it got banned, but Standard is DEAD locally. Weeklies aren’t launching, RCQs struggle to make money. Holding bans is bad for everyone except Vivi players.

Instead they’re going to do a strange compromise, and move up their next announcement date from November 24 to November 10, which still leaves two full months of this.

We should never have more than a month ‘in limbo’ where things are miserable and we know what is coming. Even if you decide to keep playing you are in an impossible position.

They say ‘Standard has not yet reached its final form’ but they are grasping at straws.

They say the Arena ladder is looking less awful. The Arena ladder is not real life, not only because the play level is low but also there’s nothing forcing the players to play the best deck. I learned that the hard way during the Oko era.

I get Carmen’s argument here that we ran the experiment and when you don’t have ban windows, you get constant speculation about potential bans and a lot of uncertainty, And That’s Terrible. You can’t fully embrace The Unexpected Banning. There needs to be a substantially higher bar outside of a fixed set of days.

The current situation was still ludicrous. While insufficiently competitive play is not as lopsided, that’s largely about card access and players wanting to have fun and of course not wanting to do this into a future ban. This ban would not be ‘from under players in a surprise move’ even if no formal warning was given. The idea that ‘we won’t make a move based on competitive play, only on non-competitive play, you competitors don’t much matter’ is definitely giving me even less desire to come back.

Which of course I get. Magic is not made for me. I’m just deeply sad about it.

I see the argument this isn’t a pure ‘do it today or else’ situation but it is an emergency. If I was Wizards, the moment it was clear we probably had a problem I would have created a new announcement date much closer in the future than two months, with the clear statement that at that time they would choose whether to ban Vivi, Cauldron, both or neither. And then done it by now.

Pro Tour levels of cEDH (competitive commander) are an awesome thing to have exist, but seem to have a rather severe draw problem, because everyone knows how to play politics and how to force draws. Sam Black suggests making draws zero points, which I worry could create even more intense politics and feel bad moments but when 1-0-6 is a ‘great record’ then maybe it is time and it seems like the elimination rounds work fine?

Sam Black: The house games are more fun when we don’t play for draws. Similarly games in top 16 are more fun.

Ultimately, I don’t think any solution would satisfy me, since it is going to come down to pure politics and kingmaker decisions. One potential approach is to say that wins are 10, draws are 1, and we pair people accordingly, so taking the draw is not obviously good for you, it might be wiser to lose and get paired against others who aren’t playing for draws. In the 0-0-4 bracket I don’t like your winning chances, and you have to win at some point to make the cut.
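As a rough sketch of how these scoring ideas change incentives, the snippet below scores a few records under each scheme. Records are written wins-losses-draws, matching the ‘1-0-6’ notation above. The 10/1 values come from the paragraph above; treating a win as worth 1 point under the draws-are-zero suggestion is an assumption for illustration, as are the `Record` type, the `points` helper and the 1-3-0 comparison record.

```python
from typing import NamedTuple


class Record(NamedTuple):
    """Tournament record in wins-losses-draws order (hypothetical helper type)."""
    wins: int
    losses: int
    draws: int


def points(record: Record, win_pts: int, draw_pts: int) -> int:
    """Total points for a record; losses are always worth zero."""
    return record.wins * win_pts + record.draws * draw_pts


# (win points, draw points) for each scheme discussed in the text.
SCHEMES = {
    "draws worth zero (win value of 1 is assumed)": (1, 0),
    "wins 10, draws 1": (10, 1),
}

RECORDS = [
    Record(1, 0, 6),  # the 'great record' mentioned above
    Record(0, 0, 4),  # someone who has only taken draws
    Record(1, 3, 0),  # hypothetical: one win, never plays for the draw
]

if __name__ == "__main__":
    for name, (win_pts, draw_pts) in SCHEMES.items():
        print(name)
        for rec in RECORDS:
            total = points(rec, win_pts, draw_pts)
            print(f"  {rec.wins}-{rec.losses}-{rec.draws}: {total} points")
```

Under the 10/1 scheme a single win with three losses outscores four straight draws, which is the sense in which taking the draw stops being obviously good for you.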

Sam Black talks about the role of mediocre synergistic cards. You start with strong cards, and pick up the bad cards that work for you for free at the end. If the bad cards vanish, the lane is not open, go a different way. Only prioritize cards that have a high ceiling, and (almost) never take a consistently bad card in your colors that can’t make your deck much better when a pack contains a good card. Similarly, trying to read signals explicitly is overrated relative to taking good cards, which is underrated and serves the same purpose.

The exception (he seems to assume in the modern era this won’t ever happen, which seems wrong to me) is if you are in danger of not having a deck, because you lack either enough cards or a key component, such that taking a usually bad card actually does provide substantial improvement.

Some cards that look bad, and have bad win rates, are instead good in the sense that they have high upside, but are being used badly by people who use them without the upside case. Sam’s example is a card that defaults to being a bad Divination but enables never running out of cards, so you can build your entire strategy around this, but if you put it in your deck as a bad Divination then it will be bad.

Waymo is now offering service in Denver and is ready for Seattle as soon as they are permitted to do so. They’re doing experiments in Denver now with humans behind the wheel of about a dozen cars and Governor Polis is here for it. Planned cities include Dallas, Miami and Washington D.C. next year, and scouting ‘road trips’ have gone to Philadelphia and there are plans to go to Las Vegas, San Diego, Houston, Orlando and San Antonio.

Service in Denver will quickly reveal exactly how well Waymos can actually handle cold weather including snow. My prediction is it will go well, bet if you disagree. Hopefully it will help compensate for Denver’s struggling restaurants and its very high minimum wage.

As of the start of September, there are still only 2,000 Waymos: 800 in the San Francisco Bay Area, 500 in Los Angeles, 400 in Phoenix, 100 in Austin and ‘dozens’ in Atlanta.

As a point of comparison, San Francisco has ~1,800 taxi medallions, and an estimated 45,000 registered rideshare drivers, with Claude estimating there are typically 5,000 available rideshares at any given time, peaking in prime hours around 10,000.

Supervised Waymo driving has begun in NYC, where they have a permit to do so.

This continues the recent trend of noticing that holding back self-driving means tens of thousands of people a year will die that didn’t have to.

Ethan Mollick: It seems like there is not enough of a policy response to the fact that, with 57M miles of data, Waymo’s autonomous vehicles experience 85% less serious injuries & 79% less injuries overall than cars with human drivers.

2.4 million are injured & 40k killed in US accidents a year.

Think of EV policy and do long-term support: subsidies for R&D to bring down costs, incentives for including self-driving features, regulatory changes to make it easier to deploy, building infrastructure for autonomous-only vehicles (eg HOV lanes), independent testing.

Takes time.

There are many problems with this approach, including that it causes fixation on the lives saved versus cost and similar calculations, and also you sound like you are coming for people’s ability to drive. Whereas if you sell this purely as ‘Waymos are awesome and convenient and efficient and improve life greatly and also happen to be actually safe on top of it’ then I think that’s way better than ‘you are killing people by getting in the way of this.’

Alice From Queens: Self-driving cars are like the new weight loss drugs.

Their value is so large, so obvious, and so scalable, that we can confidently predict their triumph regardless of knee-jerk cultural resistance and their wildly exaggerated downsides.

Yes, I’ve been saying the same thing for years. Because it still needs saying!

I mean, they totally are killing people by getting in the way, but you don’t need that.

Mostly you need to make people believe that self-driving is real and is spectacular.

Matthew Yglesias: I keep meeting people who are skeptical self-driving cars will ever happen.

I tell them I took one to the airport in Phoenix several months ago, did a test ride in DC, they’re currently all over San Francisco, etc and it’s blank stares like I’m telling them about Santa.

My model of what is holding things back for Waymo in particular right now is that mainly we have a bottleneck in car manufacturing, and there’s plenty of room to deploy a lot more cars in a bunch of places happy to have them.

Longer term, we also have to overcome regulatory problems in various places, especially foolish blue cities like New York and Boston, but I find it hard to believe they can hold out once the exponential gets going and everyone knows what they are missing. Right now with only a few hundred thousand rides a week, it’s easy to shrug it off.

Thus I think PoliMath might be onto something here:

PoliMath: I suspect Waymo doesn’t *want* there to be a policy response to this data b/c it will inevitably end with the left demanding we ban human drivers and there will be a huge backlash that damages Waymo’s business in a serious way.

Waymo is steadily winning, as in expanding its operations. The more it expands, the better its case, the more it will be seen as inevitable. Why pick a premature fight?

The fight is out there. Senator Josh Hawley is suddenly saying ‘only humans ought to drive cars and trucks’ as part of his quest to ‘rein in’ AI, which is the Platonic worst intervention to rein in AI.

Waymos are wonderful already, but they also offer much room for improvement.

Roon: It is pretty telling that when you ride in a Waymo, you cannot give instructions to Gemini to play a song, change your destination, or drive differently. When one of the great gilded tech monopolies of the world does not yet have a cohesive AI picture, what hope has the broader economy?

Eliezer Yudkowsky: AI companies are often so catastrophically stupid that I worry that Gemini might in some way be connected to the actual car. Oh wait, you explicitly want to be able to request that the car drive differently?

I do not want Gemini to be controlling the vehicle or how it drives, but there are other things that would be nice features for integration, and there are other quality of life improvements one could make as well. For now, we keep it clean and simple.

The Seth Burn 2025 Football Preview is here, along with the podcast discussion.

If you must hire a PR agency, this from Lulu Cheng Meservey strikes me as good basic advice on doing so.

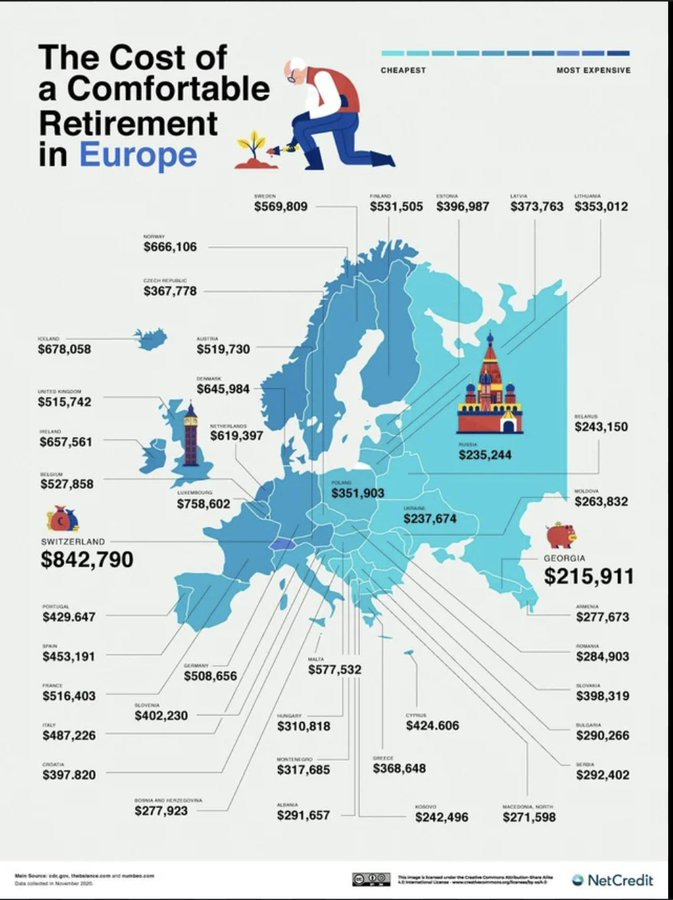

Should you consider retiring to places like Italy, perhaps under a deal to go to a small town to get a 7% flat tax regime for 10 years? Is there a good deal to be struck where American retirees help fund what remains of Europe, especially given that translation is rapidly becoming seamless and these places are indeed very nice by all accounts? Paul Skallas here describes Southern Europe as ‘dirt cheap,’ citing this chart:

I am deeply skeptical that the discounts are this large, and my AI sanity check confirmed the savings are real but relatively modest. Also consider what ‘comfortable retirement’ means in places that (for example) won’t let you buy an air conditioner. But yeah, if you only have modest savings it seems like a good thing to consider.

YouTube Premium is an ideal product. For $10 a month you get no ads, creators get paid, and the variety of content is phenomenal. Yes, you could use AdBlock to get around it in many cases, and many will do that, but this is what the internet is supposed to look like.

Maxim Lobovsky: Not only is YouTube Premium great, it’s one of the few major ad-supported businesses offering a paid alternative. Paid social media is one of the only plausible solutions to the algorithm-driven polarization/rage-baiting/lowest-common-denominator content death spiral.

The problem is that you can’t then subscribe individually to everything else, because that adds up fast. Give me a unified YouTube Premium style subscription, please.

Yes, the failure to shut down TikTok despite a law making it illegal that was upheld by the Supreme Court 9-0 is rather insane. Trump is flat out refusing to enforce the ban and extending the deadline indefinitely, you can speculate as to why.

Downvotes, in some form, are a vital part of any social platform that has upvotes, both to maintain civility and maintain good incentives. If you can easily express pleasure there needs to also be an easy way to express displeasure. Dan Luu gives one reason, which is that otherwise people will write nasty comments as a substitute. The other reason is that otherwise maximizing for toxoplasma of rage and extreme reactions to get engagement wins and crowds other actions out. If you are going to do rankings, the rankings on LessWrong and also Reddit mostly seem quite good, and those are the only places where somewhat algorithmic feeds seem to do well.

Emmett Shear: The belief that downvotes are “uncivil” was one of the most common delusions I have encountered while working in social media.

Oliver Habryka: Yep, one of the things I always considered most crucial to maintain with LW 2.0. When I was shopping around for forum software alternatives when we started building LW 2.0 this ruled out like 80% of the options on the market.

Cremieux reports he was suspended from Twitter for a day for saying that Tea app had been hacked, which was called ‘posting other people’s private information without their express authorization and permission,’ except he did not do this or link to anyone who did do it (he said ‘you can go download 59.3 GB of user selfies right now’), whereas people who do expose such info often get ignored. He went on the warpath, citing various laws he asserts Twitter is breaking around the world.

(The link in the screenshot below takes you back to the post itself.)

Lewis: meanwhile post doxxing [someone’s] address was never removed. 2.5M views. reported it and DM’d Nikita, never heard back on either.

Sin: My contribution [which is literally a map containing the location with a giant arrow pointing to it saying it is where this person lives].

I saw this over a week later. Still there.

Elon Musk made a lot of mistakes with Twitter, but also did make some very good changes. One of them is that likes are now private. This damages an outsider’s ability to read and evaluate interactions, but it takes away the threat of the gotcha when someone is caught liking (or even not liking!) the wrong tweet and the general worry about perception, freeing people up to use them in various ways including to acknowledge that you’ve seen something, and to offer private approval.

It’s very freeing. When likes were public, which also means it was public what you didn’t like, I decided the only solution to this was to essentially not use the like button. Which worked, but is a big degradation of Twitter’s usefulness.

Redaction: It really is insane how simply Hiding Likes On Twitter meaningfully shifted the overton window of the American political landscape

Samo Burja: I underestimated the impact change at the time. I think I thought preference falsification was much less pervasive than it was.

Meanwhile, in other contexts, it is still very much a thing to talk about who has liked which Instagram posts. This is exactly as insufferable as it sounds.

Every time Nikita tries to make me feel better about Twitter I end up feeling worse.

Nikita Bier (Twitter): The first step to eliminating spam is eliminating the incentive.

So over the last week, I have gone deep down the rabbit hole of X spam:

I am now in 3 WhatsApp groups for financial scams. I have become their friends. I know about their families. I cannot blow my cover yet.

What is the goal exactly? How would befriending them help? We already all know exactly how to identify these scams and roughly how they work. Understanding more details will not help Nikita or anyone else do anything. You think you’re going to do enough real world takedowns and arrests that people are scared to do scams, or something? How about instead we do basic filtering work?

Or, when he posts this:

Or this:

Eli: Twitter should include 3 schizophrenic reply guys and 1 egirl with Premium +

Nikita Bier: We did the math and that’s what retains a user.

He kids, but kid enough times in enough ways with enough detail and you’re not fully kidding. It is very clear that Twitter is doing a lot of the Goodhart’s Law thing, where short term feedback metrics are being chased without much eye on the overall experience. Over time, this goes to quite bad places.

Also, yeah, this is not okay:

Mike Solana: I truly believe blocking is a right, and I would never go after someone for blocking me for any reason. but you should not then be able to unblock, comment on a post of mine, and immediately REBLOCK so I can’t respond. in this case, I deserve at least 24 hours to roast your ass.

There are any number of obviously acceptable solutions to this. I like the 24 hours, where if you do something that you couldn’t do while they are blocked, your reblock is delayed for a day.

Local coffee shop sets up a bot farm with hundreds of phones to amplify their messages on Instagram.

Vas: If a simple coffee shop has a bot farm with 100s of phones to amplify their message, please consider what a foreign agency or adversarial operator is running on your favorite social media platform.

Especially today, please consider that the opinions you read, the calls to violence you hear, and the news you digest, are all an operation done to sow hatred in your mind and your soul.

Scott Sumner uses his final EconLib post to remind us that almost everything is downstream of integrity. Without informal norms of behavior our laws will erode until they mean almost nothing, and those informal norms are increasingly discarded. He cites many examples of ways things might (read: already did) go wrong.

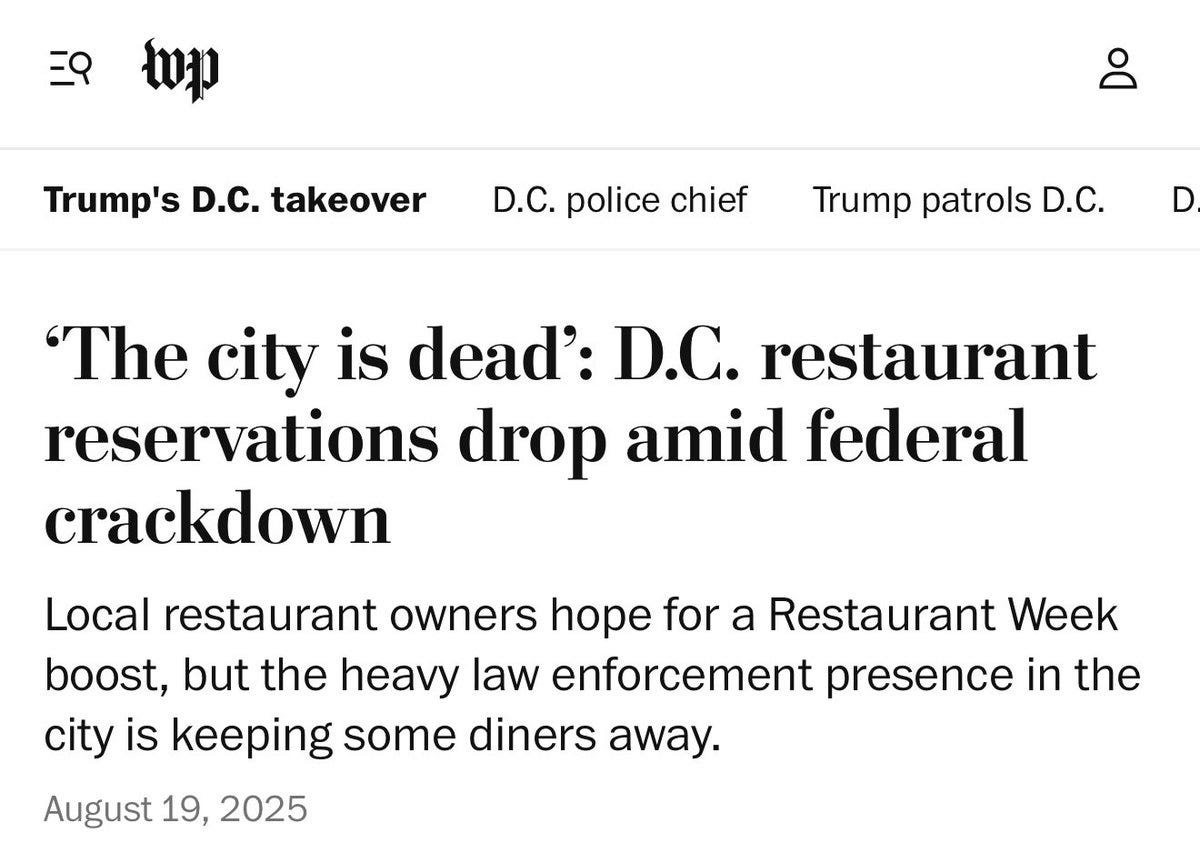

I may never stop finding it funny the extent to which Trump will seek out the one thing we know definitively is going badly, and then choose that to lie and brag about.

As in, how is the DC crackdown going? As of this writing, the one thing I know for sure is that restaurant reservations were down. It turns out they were not down as much as initially reported once you control for restaurant week, but 7% is still a lot. So of course…

Donald Trump: People are excited again. Going to restaurants again [in DC]. The restaurant business, you can’t get into a restaurant.

Trump attempted to fire Federal Reserve Governor Lisa Cook ‘for cause,’ setting up a legal fight. Cook was not about to leave quietly.

Initial market reaction was muted, with the dollar declining only 0.3% and gold rising only 0.6%, likely because it was one escalation among many and it might fail. But this is a direct full assault on central bank independence, and central bank independence is a really big deal.

Jonnelle Marte and Myles Miller (Bloomberg): While a president has never removed a Fed governor from office, one can do so for cause. Laws that describe “for cause” generally define the term as encompassing three possibilities: inefficiency; neglect of duty; and malfeasance, meaning wrongdoing, in office.

What was this ‘cause’?

Trump had earlier called for Cook’s resignation after Federal Housing Finance Agency Director Bill Pulte alleged she lied on loan applications for two properties — one in Michigan and one in Georgia — claiming she would use each property as her primary residence to secure more favorable loan terms.

Trump said it was “inconceivable” that Cook was not aware of requirements in two separate mortgage applications taken out in the same year requiring her to maintain each property as her primary residence.

That’s it. There are no additional claims. Only the claim that she claimed one place would be a primary residence, and then claimed a different primary residence.

Pulte, in a statement posted to social media, thanked Trump for removing Cook. “If you commit mortgage fraud in America, we will come after you, no matter who you are,” he wrote.

What about if you are President of the United States and have recently had a nine figure judgment entered against you and your ‘Trump’ organization for lying on mortgage applications? Are we coming for you?

Oh, and what if it turned out, as it has, that the claim against Cook simply isn’t true?

Aaron Fritschner: The mortgage fraud claim against Lisa Cook is false, per documents obtained by Reuters. Bill Pulte’s accusation, the sole pretext Trump used to fire her from the Fed, was that she claimed two homes as primary residence. These docs show she did not.

“The document, dated May 28, 2021, was issued to Cook by her credit union in the weeks before she completed the purchase and shows that she had told the lender that the Atlanta property wouldn’t be her primary residence.”

“documentation reviewed by Reuters for the Atlanta home filed with a court in Georgia’s Fulton County, clearly says the stipulation exists “unless Lender otherwise agrees in writing.” The loan estimate, a document prepared by the credit union, states “Property Use: Vacation Home”

Lisa Cook also didn’t claim a tax credit for primary residence on the second home and declared it as a second home on official federal government documents when she was being considered for a role on the Fed. A real master criminal.

Also her mortgage rate was 3.5%, modestly higher than the going rate at the time.

If you are going to try and fire a Federal Reserve Governor for cause, something that has not happened (checks notes, by which I mean GPT-5) ever, thus endangering faith in Fed independence and the entire financial system, you might want to follow due process, or at least confirm that your accusation is true? As opposed to demonstrably false?

A lot of people are understandably highly outraged about this, as Adam Tooze partly covered right after the attempted firing. This comes on the heels of firing the head of the Bureau of Labor Statistics because Trump didn’t like the statistics.

A reminder that yes, there is at least one clear prior case of a crazy person destroying a nation’s health that parallels RFK Jr, as in South Africa where their President denied drugs to AIDS patients.

Yes, all the various ‘honesty taxes’ our government imposes (also see this highlighted comment) are extremely pernicious and do a lot more damage than people realize. We teach people they have to lie in order to get food stamps, and they (just like LLMs!) learn to generalize this, everything impacts everything, our society is saying lying is okay and lying to the government is mandatory, you can’t isolate that. You don’t get a high trust society that way, although we are remarkably high trust in some ways despite this.

Most of the time, the correct answer is not to enforce the rules as written even if we could do so, instead the correct answer is to remove or heavily modify the rule. Our rules are tuned to the idea they won’t be enforced, so it is likely enforcing them would not go well. Then there are exceptions, such as primary residence mortgage fraud.

Aaron Bergman: I think ethics- and integrity-pilled people need to have a better theory of when it’s cool to lie to *institutions*

The “lying to a human” vs “lying to institution” distinction is real and important btw, the bar for the latter is much lower

Oliver Habryka: Yeah, I agree with this. I think lying to institutions is frequently fine, often roughly proportional to how big they are, though there are also other important factors.

I don’t have a great answer to exactly when this all makes it okay to lie to corporations or governments and on forms. My guess is it is roughly ‘do not break the social contract.’ But if this is something where there is no longer (or never was) a social contract, and no one would look at you funny if you were doing it in the open, then fine.

If you notice you are very clearly expected to lie (including by omission) or do a fake version of something, that the system is designed that way, then you have little choice, especially if you are also forced to deal with such institutions in order to get vital goods or services.

Idaho suicide hotline is forced to ask teens who call to get parental consent due to a law passed last year requiring consent for almost all medical treatments for minors. As you would expect, most of them hang up.

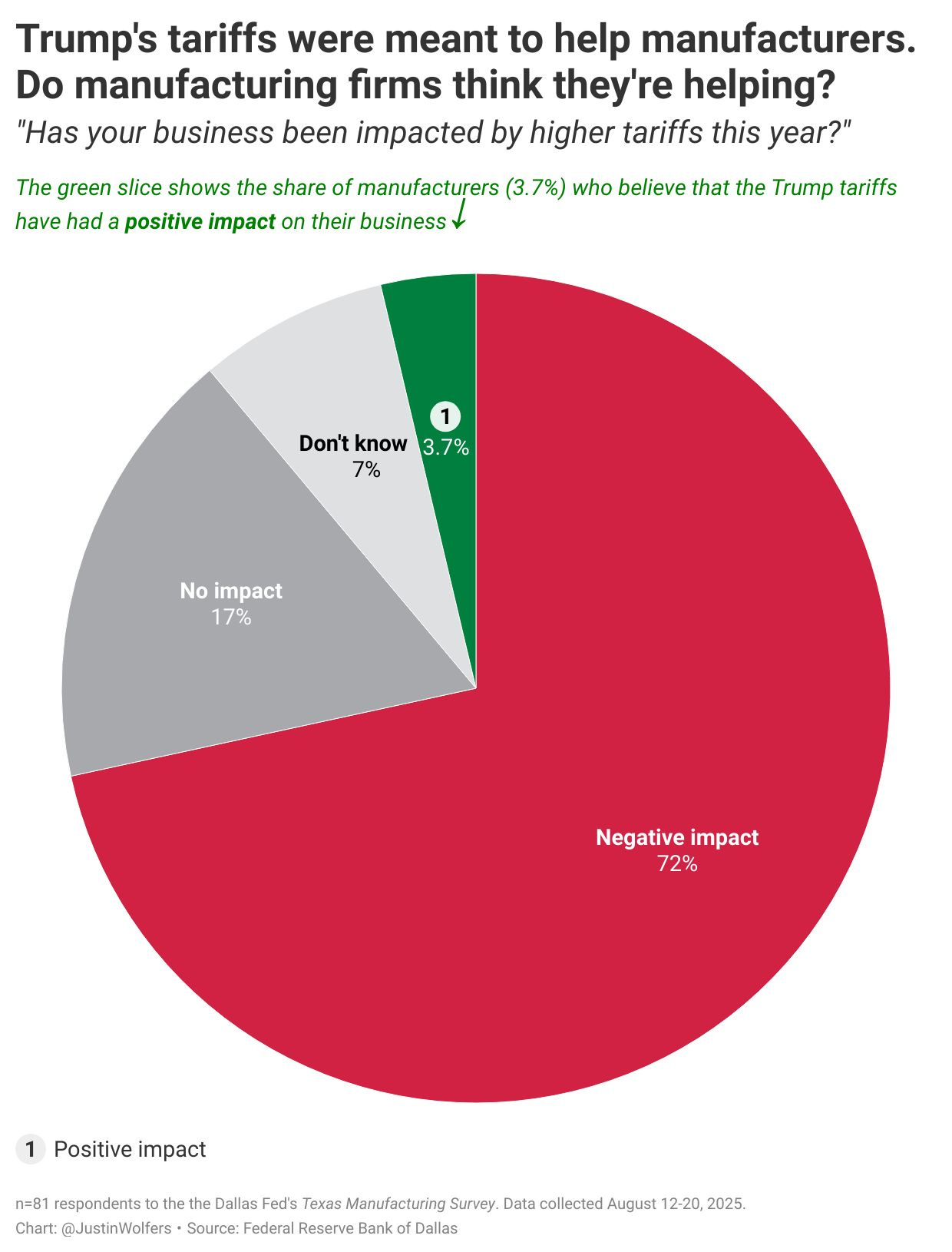

Are Trump’s tariffs helping domestic manufacturing? What do the domestic manufacturers say about this?

UK arrests comedian for speech, where the speech was done on American soil.

I try to keep a high threshold for criticism but it does seem like Trump ordered a bunch of people murdered (some might use the term ‘war crime’ but I prefer plain language and also there was no war involved, the ‘war on drugs’ is not a war) on the high seas with absolutely no legal basis for doing so? He ran the plot of Clear And Present Danger straight up in the open? You didn’t know there were drugs involved, and even if you did you can’t go around blowing up boats purely because there were drugs involved?

Especially when you had the power to interdict and instead decided to ‘send a message’ as per Secretary of State Marco Rubio by blowing up the boat with no warning because the boat (that you could have interdicted) posed an ‘immediate threat to the United States’? And a letter from the White House to Speaker Mike Johnson and Senator Chuck Grassley that confirms, yep, straight up murder and likely we will murder again? And JD Vance seems to confirm that this is straight up murder?

JD Vance: Killing cartel members who poison our fellow citizens is the highest and best use of our military.

Rand Paul: JD “I don’t give a shit” Vance says killing people he accuses of a crime is the “highest and best use of the military.”

Did he ever read To Kill a Mockingbird?

Did he ever wonder what might happen if the accused were immediately executed without trial or representation??

What a despicable and thoughtless sentiment it is to glorify killing someone without a trial.

I’m sincerely and deeply confused what makes this not straight up murder and have not seen any serious arguments for why it would be anything else, as opposed to ‘yes it is murder and I really like murder for us here, yay this murder.’ It also seems, to the extent such points are relevant in 2025, like a very clear ‘high crime and misdemeanor.’

Apple’s new iPhone 17 Pro Max seems like a substantial improvement over the iPhone 16 Pro Max. You get 50% more RAM, at least twice as many camera pixels, better cooling, a substantially better battery, and a new faster chip with GPUs ‘designed for AI workloads.’ I’m going to stick with my Pixel 9 Fold. The only feature on iPhones that is compelling to me at all is avoiding anti-Android discrimination, hell of a business model, but those are nice upgrades.

Apple Vision Pro is making small inroads in specialized workplaces that can exploit spatial computing, including things like exploring potential new kitchens or training airline pilots. It is expensive, but if it is the best tool, it can still be worth it. The rest of us will be waiting until it is lighter, better, faster and cheaper.

Meta had some issues with child safety when using its smart glasses, and whistleblowers report that the company tried to bury the problem or shield it from public disclosure in various ways. This continues the pattern where:

- Meta has a safety problem.
- When Meta tries to have internal clarity and do research on the dangers of its products, people leak that information to the press, details get taken out of context and they get hauled before Congress.
- When Meta responds to this by avoiding clarity, and suppressing the research or ignoring the problem, they get blamed for that too.

I mean, yes, why not simply actually deal with your safety problems is a valid response, but the incentives here are pretty nasty.

The central accusation is that the company’s lawyers intervened to shape research into risks from virtual reality, but I mean it would be insane for Meta not to loop the lawyers in on that research. If we are going to make Meta blameworthy, including legally, for doing the research, then yes they are going to run the research by the lawyers. This is a failure of public policy and public choice.

That doesn’t make the actual problems any less terrible, but it sounds like they are very standard. Kids were bypassing age restrictions, and when interacting with others they would get propositioned. It seems like the accusation is literally ‘Meta let its users interact with each other, and sometimes those users said or did bad things.’

Experts have long warned that virtual reality can endanger children by potentially exposing them to direct, real-time contact with adult predators.

It is remarkable how consistently even the selected examples don’t involve VR features beyond allowing users to talk? I’m not saying you don’t need to have safeguards for this, but it all sounds very similar to the paranoia and statistical illiteracy where we don’t let children participate in physical spaces anymore.

I love this report of a major problem running the other way, to which, I mean, fair:

In a January 2022 post to a private internal message board, a Meta employee flagged the presence of children in “Horizon Worlds,” which was at the time supposed to be used only by adults 18 and over. The employee wrote that an analysis of app reviews indicated many were being driven off the app because of child users.

I’m not saying Meta handled any of this perfectly or even handled it well. But there’s no smoking gun here, and no indication that they aren’t taking reasonable steps.