After Ukrainian testing, drone-detection radar doubles range with simple software patch

As part of its unprovoked invasion, Russia has been firing massed waves of drones and missiles into Ukraine for years, and the tempo has risen sharply in recent months. Barrages of more than 700 drones now regularly strike Ukraine during overnight raids. Russia also appears to have escalated further by sending at least 19 drones into Poland last night, some of which were shot down by NATO forces.

Many of these drones are Shahed/Geran types built with technology imported from Iran, and they have recently gained the ability to fly higher, making shootdowns more difficult. Given the low cost of the drones (estimates suggest they cost a few tens of thousands of dollars apiece, and many are simply decoys without warheads), hitting them with multimillion-dollar missiles from traditional air-defense batteries makes little sense and would quickly exhaust missile stocks.

So Ukraine has turned to widespread electronic warfare to disrupt the drones' control and navigation systems. Drones that aren't forced off course are engaged by mobile anti-aircraft guns, aircraft, and interceptor drones, many of them deployed by mobile fire teams that patrol Ukraine at night.

For teams like these, early detection of attack drones is crucial: even seconds matter when relocating a vehicle and launching an interceptor drone or aiming a gun. Take too long to get into position, and the attack drone overhead has already passed on its way to its target.

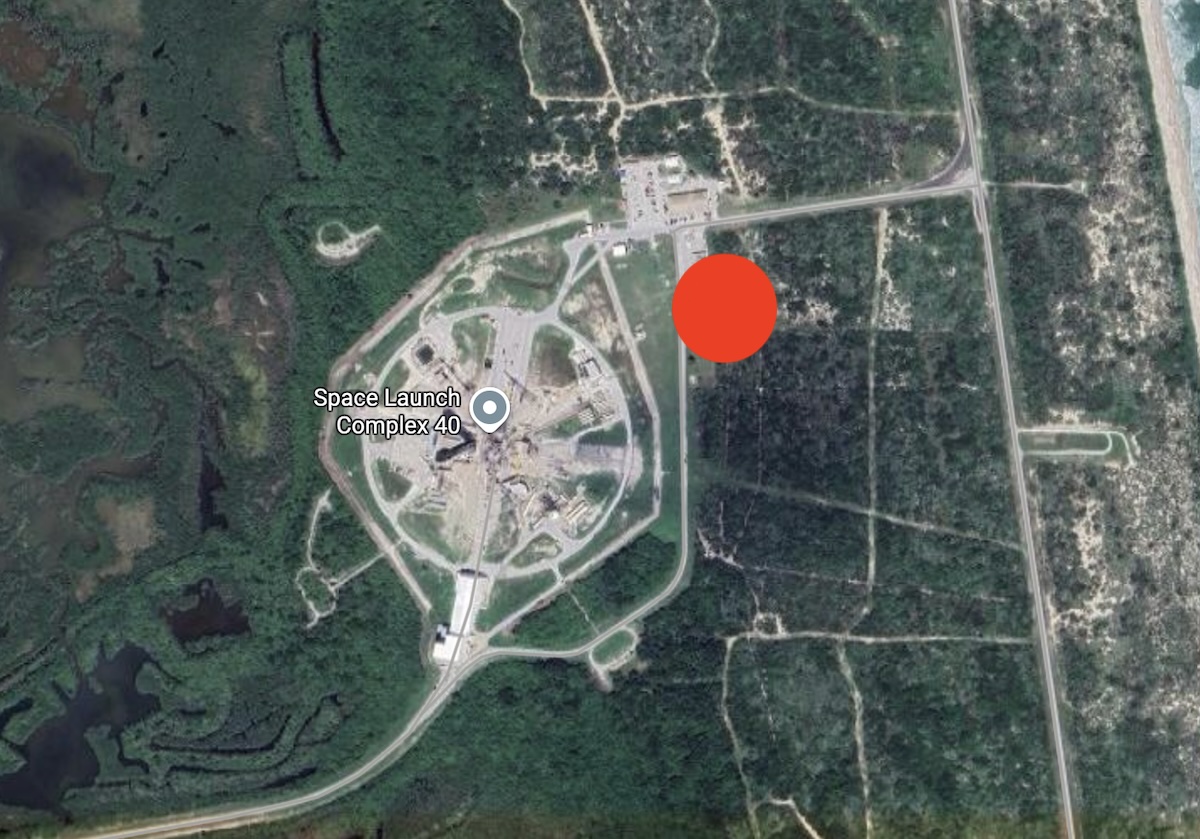

Which brings us to Robin Radar Systems, a Dutch company that initially used radar to detect birds. (Indeed, the name “Robin” is an acronym derived from “Radar OBservation of Bird INtensity.”) This radar technology, good at detecting small flying objects and differentiating them from fauna, has proven useful in Ukraine’s drone war. Last year, the Dutch Ministry of Defence bought 51 mobile Robin Radar IRIS units that could be mounted on vehicles and used by drone defense teams.
