Previously: #1, #2, #3, #4.

Since we all know that dating apps are terrible, the wise person seeks to meet prospective dates in other ways, ideally in the physical world.

Alas, this has gotten more difficult. Dating apps and shifting norms mean it is considered less appropriate, and riskier, to approach strangers, especially with romantic intent, or even to ask people you know out on a date, even though asking has a fat tail of life-changing positive consequences.

People, especially men, are increasingly afraid of rejection and other negative consequences, including a potential long tail of large negative consequences. Also, people’s skills at doing this aren’t developing, which both decreases chances of success and increases risk. So a lot of this edition is about tackling those basic questions, especially risk, rejection and fear.

There’s also the question of how to be more hot and know roughly how hot you are, and what other traits also help your chances. And there’s the question of selection. You want to go after the targets worth going after, especially good particular matches.

- You’re Single Because Hello Human Resources.
- You’re Single Because You Don’t Meet Anyone’s Standards.
- You’re Single Because You Don’t Know How to Open.
- You’re Single Because You Never Open.
- You’re Single Because You Don’t Know How to Flirt.
- You’re Single Because You Won’t Wear the Fucking Hat.
- You’re Single Because You Don’t Focus On The People You Want.
- You’re Single Because You Choose the Wrong Hobbies.
- You’re Single Because You Friend Zone People.
- You’re Single Because You Won’t Go the Extra Mile.
- You’re Single Because You’re Overly Afraid of Highly Unlikely Consequences.
- You’re Single Because You’re Too Afraid of Rejection.
- You’re Single Because You’re Paralyzed by Fear.
- You’re Single Because You’re Not Hot Enough.
- You’re Single Because You Can’t Tell How Hot You Look.
- You’re Single Because You Have the Wrong Hairstyle.
- You’re Single Because You’re In the Wrong Place.
- You’re Single Because You Didn’t Hire a Matchmaker.
- You’re Single So Here’s the Lighter Side.

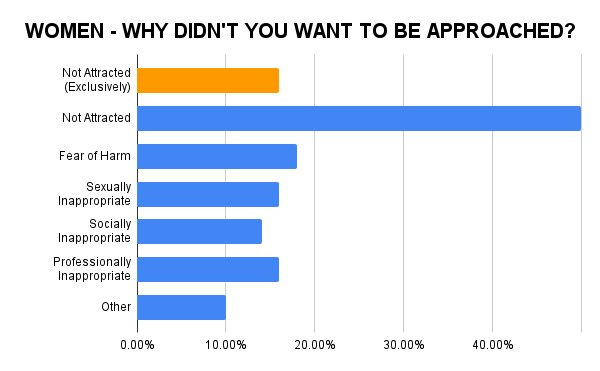

Not all approaches and opens are wanted, which is fine given the risk versus reward. Also it’s worth noting that this is actually a remarkably small amount of not being attracted to the approacher?

Alexander: I have updated this chart on the old article, because it was confusing some people.

As stated in the article, these responses were not mutually exclusive. 50% of women did not say that they didn’t want to be approached exclusively because a man was unattractive. Only 16% of the women who said they experienced an unwanted approach cited unattractiveness exclusively.

This was also not the full female sample – 34% of women did not have an unwanted approach experience at all.

I have added an UpSet plot to the article if you want to visualise the sets of responses. But what it basically boils down to is that 84% of women who had an unwanted approach experience cited something aside from a mere lack of attraction.

Remember, these are exclusively unwanted approaches.

Presumably, in the wanted approaches, the woman was indeed attracted.

This still leaves the ‘there were other problems but you were sufficiently attractive that I disregarded them’ problem. Not getting any bonus points is already enough to make things tricky, since you’ll need otherwise stronger circumstances. It does seem clear that men are far too worried about being insufficiently attractive to do approaches.
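To see how small the 'purely unattractive' share is against the full sample, here is a minimal sketch of the arithmetic. It uses only the two percentages Alexander quotes (34% with no unwanted approach, 16% of the rest citing unattractiveness exclusively). The variable names are mine, and multiplying the two figures assumes they describe the same survey sample.

```python
from fractions import Fraction

# Figures quoted above; names are illustrative, not from the original article.
no_unwanted_approach = Fraction(34, 100)           # share of all women surveyed
only_unattractive_given_unwanted = Fraction(16, 100)

# Share of women who had at least one unwanted approach.
had_unwanted_approach = 1 - no_unwanted_approach

# Among those, share citing something other than a mere lack of attraction.
other_reason_given_unwanted = 1 - only_unattractive_given_unwanted

# Share of the FULL sample whose only complaint was unattractiveness
# (assumes both percentages refer to the same sample).
only_unattractive_overall = had_unwanted_approach * only_unattractive_given_unwanted

print(f"Had an unwanted approach: {float(had_unwanted_approach):.0%}")
print(f"Cited something else, given unwanted: {float(other_reason_given_unwanted):.0%}")
print(f"Only unattractiveness, full sample: {float(only_unattractive_overall):.1%}")
```

Under that assumption, roughly one woman in ten from the full sample had an unwanted approach where lack of attraction was the only issue.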

Big B (19.5m views): I hate when yall applaud men for doing the bare minimum.

Honey Badger Radio (20m views): Genuine question. What is the ‘bare minimum’ for women?

Punished Rose: 6’5”, blue eyes, still has all his hair, good job in position of power with many underlings, ubers me everywhere, buys me diamonds, kind to animals, wants 3-4 children, good relationship with mother, spontaneous and romantic, PhD with no corrections, homeowner.

[Here’s what you get in return from her, men!]

Aella: I think it’s less how exactly men have their stat points distributed, and more how many total stat points there are. Women will often tolerate dump stats if there’s enough perks to balance out other areas.

You have to notice the perks for them to count, which is tough on dating apps if the dump stat is too visible, but mostly yeah, and I think it’s true for everyone. Each person will usually have some particular actual dealbreaker-level requirements or at least very expensive places to miss, plus some things really do override everything else, but mostly everything is trade-offs.

Amit Kumar: Smile at cute strangers and shouted upon 😂

Blaine Anderson: I surveyed >13,000 single women last year and exactly 95% said they wish they were approached more often IRL by men.

If women *shout* when you approach, you’re doing something wrong ❤️

Poll of my friend Ben Daly’s Instagram following, which is virtually all single 18-34 yr old women in the U.S. and U.K.

For anyone trying to learn, I teach a program called Approach Academy (~$100).

I have no idea if Approach Academy is any good, and doubtless there are lots of free resources out there too. Either way, it’s an important skill to have, and if you are single, don’t want to be single and don’t have the skill it’s worth learning.

If you’re literally not trying at all, that’s definitely not going to work. Alas, from what I can tell Alexander is correct here, in that even the very spaces where the You Had One Job was ‘actually approach women’ are increasingly coming out firmly against the one thing that ever works, and moving from an agentic narrative where you can make it work to an anti-agentic one where you shouldn’t try.

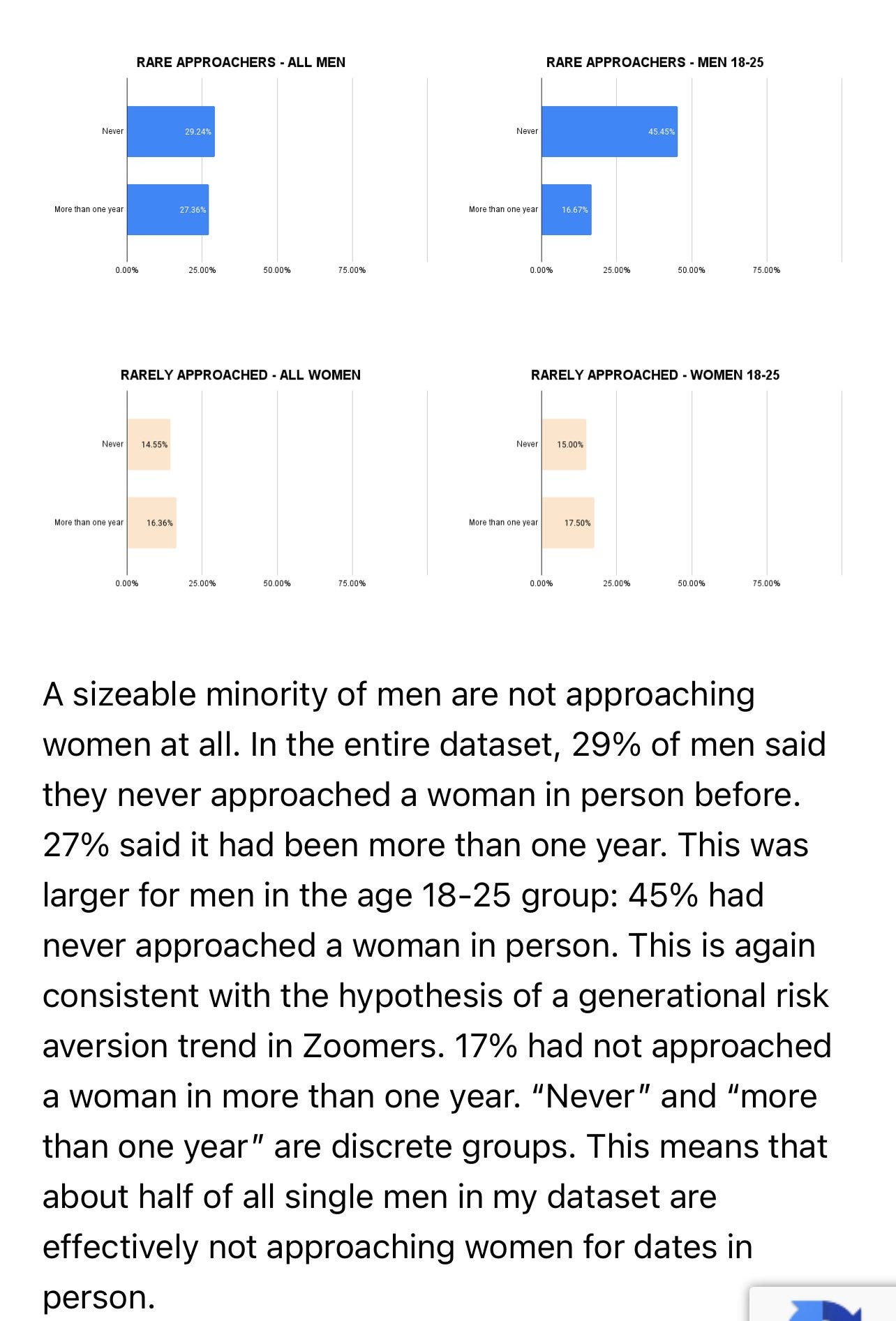

Alexander: Of everything I have ever posted, nothing has received more pushback from the manosphere than pointing out that half of young men have not asked a woman on a date in the past year, and a quarter have never asked a woman on a date ever.

The “male loneliness crisis” is largely self-imposed.

That you must approach women and ask them on a date, assuming you do not want to be perpetually single, would be the most obvious and basic advice you would have been given on early pickup artistry or relationship advice forums.

When I write of the relationship advice becoming increasingly negative, this is what I mean. Instead of ascribing agency to men and giving them the most obvious and “actionable advice” (“you need to talk to women”), the entire space is littered with narrative excuses for why men cannot!

“But what about MeToo?”

“Women do not want to be approached.”

“Women only want a ‘Chad’ type.”

“Women are not good enough to approach.”

“It is not men’s fault—the entire fabric of society needs to change to make it easy for men to approach.”

Anti-agentic narratives. Excuses. None of these are “actionable advice.” No one telling you these things is giving you a “solution.” They are just complaining and want to vent their victimhood.

Occasionally the feedback I receive is, “You describe things well, but provide no advice.”

Probably true! I do not really make self-help content. Yet I do regularly tell you all the very basic things that work:

- You need to talk to women.
- You need exciting, social hobbies that put you in contact with women and that women like.
- You need to rid yourself of antisocial vices, hobbies, and habits.
- You need to hit the gym and lose weight.
- You need to fix your physical appearance.

You are all free to work the details of these things out however you please, but these are the basics that cover how you meet women and if you pass the initial bar of attraction. They are obvious and do not require you to know any hidden secrets or subscribe to any fringe ideological beliefs.

The manosphere overall, as well as individual subcultures within it like the Red Pill, have shifted from agentic messaging to anti-agentic messaging over time.

It used to be, “It’s really easy to put yourself in the top 10% of men.” Now you are much more likely to see lamentations that women only want the top 10% – and they are so unreasonable and unfair for that!

Narratives used to be primarily individualistic and agentic: you can self-improve and fundamentally change. You can get the results you want in life.

Now the narratives are collectivist and social: society is responsible for men’s romantic outcomes. They copy the language and paradigms of left-wing social justice movements. Men are victims – men are not at fault nor responsible for their own life trajectories. The only solution is a massive change to the culture, laws, and society at large.

Matthew Yglesias: We need industrial policy for asking girls out.

Do today’s young men know about negging? Peacocking? Do we need a Game Czar to address this crisis?

Matthew Yglesias: If you ask a bunch of girls out, some of them will go out with you, whereas if you don’t, none of them will.

As in, in order to open, you need to be there at all, and that’s the 80% for showing up.

Nick Gray: This is a message for single men that are tired of online dating

I made a post 6 months ago about what I should text a woman that I was going on a date with

The date was great. In fact we have spent almost every day since then together

Now she’s my girlfriend

Guys if you’re frustrated with online dating I have some advice

Delete your dating apps and start going out every single day

You need to go to the gym, go to the grocery store, go work from cafes

You need to try a new group fitness class every other day, go to yoga and pilates, and join meetups for things you’re interested in

Be someone who is out and about

Talk to strangers, make friendly conversation, add value, and don’t be sketchy

[continues but you can guess the rest, the central idea is ‘irl surface area.’]

Yet, despite knowing that fortune favors the bold, many continue not to ever try.

Julian: Today my dad asked me if I ever approach beautiful women on the street to ask them out. I told him that I’ve literally never done that, and I saw true sorrow in his eyes.

“You see dad there’s this thing called hinge, it’s a lot easier really, it’s not as scary.” 😢

Having a tweet go viral is actually almost never good. now nearly 2 million people know I am scared of talking to women.

Twitter when I have a cool idea about AI safety to share: 😴💤🛌🥱

Twitter when my dad implies I have no rizz: 👀‼️🚨

Implies? Flat out tells you. Or you flat out telling him. Do better.

Indeed, we seem to keep hearing stories like this reasonably often? It’s not this easy, but also it can be a lot easier than people think.

Val: How do people get girlfriends? I’m being serious.

Critter: A college friend of mine was single his whole life. He was getting depressed and asked for my advice. I told him to ask out 20 people on casual dates. He asked two; the second one became his girlfriend. It’s that simple.

“But no, I want to swipe from the bathroom and have a series of convoluted online conversations that go nowhere.” Okay, do that then. Enjoy.

My friend was average-looking, 5 feet 8 inches tall, and deaf, but keep enjoying your fantasy that you have it hard.

Nobody: Got a girlfriend once because I accidentally smiled and waved at her, thinking she was someone I knew.

Konrad Curze: I literally asked a coworker on a date once because I heard her talking about wanting to see a movie and not having a ride to the theater, so I just asked her if she wanted to go a bit before her shift ended. We’re not together anymore, but it really is that easy—just ask someone in person.

liberforce: I once talked to a complete stranger at the train station. She was a tourist in her first week in my country. After losing sight of each other, one year later we got married. We have been married for the past 10 years and have a 7-year-old son. Be polite. Be confident. Try.

Critter: “What is a low-key date?”

It’s a date that’s a small investment and easy to say yes to. Lunch this weekend. Studying together in the library. Getting coffee. Going to a local event.

Think of something you might say yes to if a friend asked, even if you…

“Do I ask friends/acquaintances?”

Be careful; asking someone out can possibly damage your network of friends, employee relations, etc. I wouldn’t ask 20 coworkers out; you will get a reputation.

Only ask friends or coworkers if you have some confidence it’s a yes.

Asking strangers is cost-free, but we’re busy.

“Then who do I ask?”

I’ve gone on dates with waitresses (ask after their shift), Starbucks employees, and girls on the subway. If you’re attracted to someone, be cool and direct, and just ask.

“What do I say?”

Ask as if you were asking someone for the time or where the nearest gas station is.

“Hey, I think you’re really beautiful. Can I buy you lunch/coffee sometime this weekend?”

It’s *better* to chat them up first, but if you can’t, just ask.

“How do I avoid seeming creepy/awkward?”

This isn’t risk-free. Some may think you’re a creep, others will be flattered. Outcomes are hard to control; intent is what matters.

Try not to get too wound up; creepiness is a result of intensity. Timing matters, but just relax and ask.

Many such cases. When single, and it’s safe and appropriate, always be flirting.

Annie: this is why as a prolific slut I just flirt with any person up to my standards and escalate until I receive any sort of pushback.

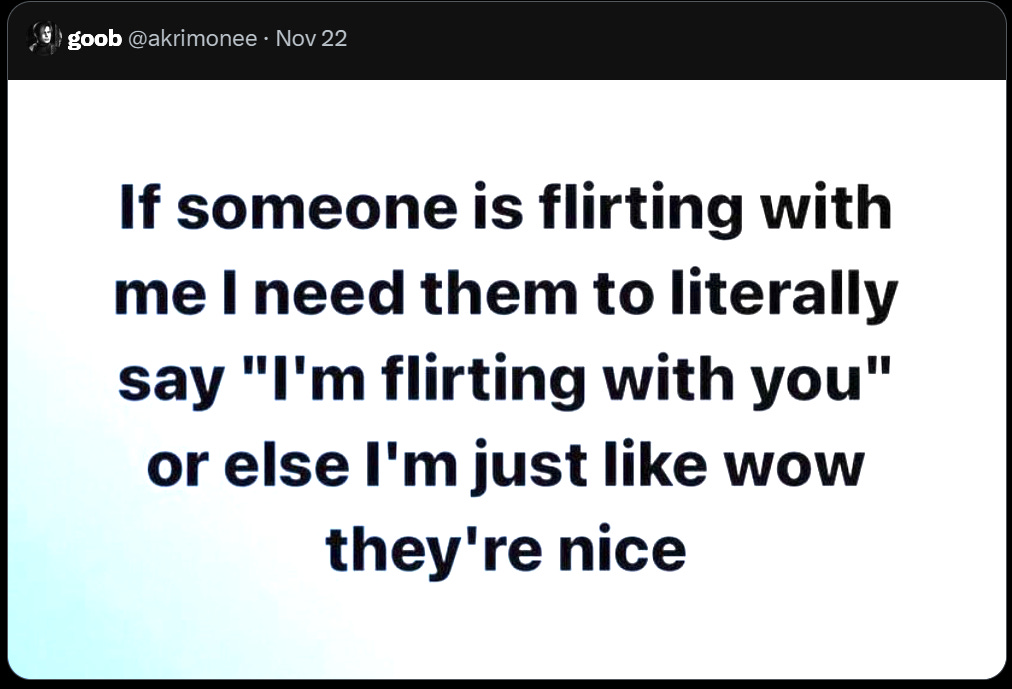

That might actually be correct, if you’re good at noticing subtle pushback, at least within the realm of the deniable and until they clearly know you’re flirting. If they can’t tell you’re flirting, then you kind of aren’t flirting yet, so you’re probably fine to escalate a bit, repeat until they notice.

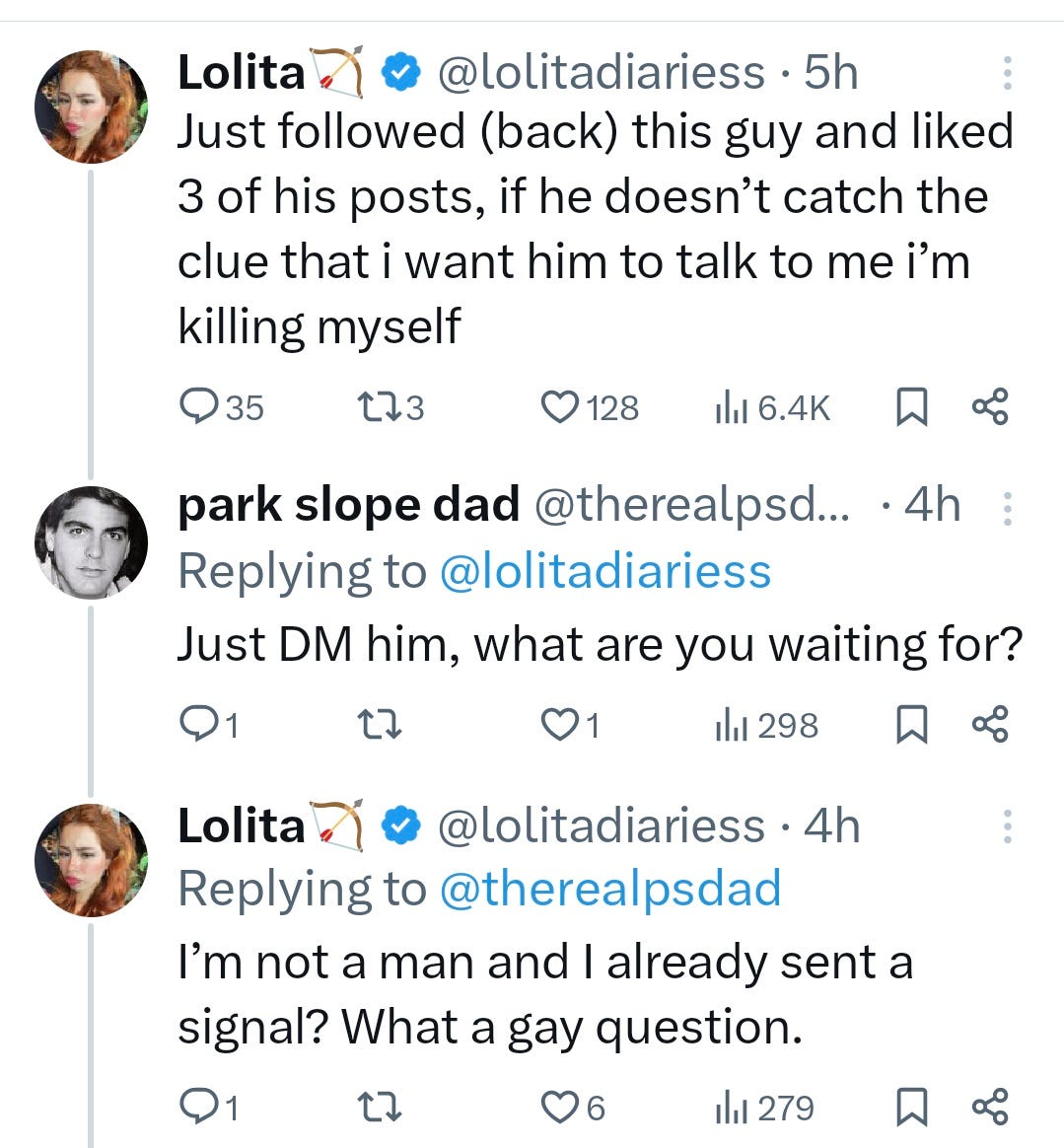

Online makes it even trickier, what even is flirting? It turns out Lolita’s likes here were on Instagram, where I am led to believe this is indeed how this works, whereas on Twitter the odds this is what is happening are lower – but yeah, DM her anyway if you’re interested.

The Catholic Engineer: Attention boys. This is how girls shoot their shot on Twitter. Take note.

Lolita: I just wanna let everyone know (since apparently this is everyone’s business now🤣) that he indeed texted me [on Instagram], he isn’t as [stupid] as men on twitter, thank God. ☝🏻😌

Divia: Tag yourself I’m the “I’m not sure how old you are” married person who follows and likes a few posts just because.

Linch: I feel someone calling themselves “Lolita” may have a non-standard opinion of good dating strategies, or norms.

Divia: lol yes ty I missed that part.

Ian Hines: I’m the guy who has apparently flirted with dozens of women without realizing it.

Andrew Rettek: By this standard a lot of unavailable women are flirting with me on Twitter.

Normie MacDonald: Something I routinely find myself telling people in regards to dating in relationships is that they have no reason to be creating these arbitrary meaningless ego saving rules for themselves. Ok congratulations you don’t “text first” you saved your imaginary dignity while the other girl gets an engagement ring

The dance matters. Ideally you want to do the minimum required to get an escalation in response, where that escalation will filter for further interest and skill. I would certainly try to do that first. But if it doesn’t work, and this wasn’t a marginal situation? Time to escalate anyway.

The deniability is not only key to the system working and enabling you to make moves you wouldn’t otherwise be able to make. It’s also fun, at least for many women.

Also, it’s essential. As in, you try to think of a counterexample, and you fail:

Emmett Shear: What is (flirting minus plausible deniability)?

Misha: “Hey there handsome.” Is flirtatious and undeniable.

As confirmed by Claude, there’s still plenty of plausible deniability there, and full uncertainty on how far you intend to go with it. Ambiguity and plausible deniability between ‘harmless fun’ flirting versus ‘actually going somewhere’ flirting is a large part of the deniability, and also the core mechanism.

Periodically we rediscover the classic tricks, which is half of what TikTok is good for. In this case, something called ‘sticky eyes,’ where you make eye contact until they make eye contact back, then act like you’re caught and look away. Then look at them, and this time when they match don’t look away, and often they’ll walk right to you.

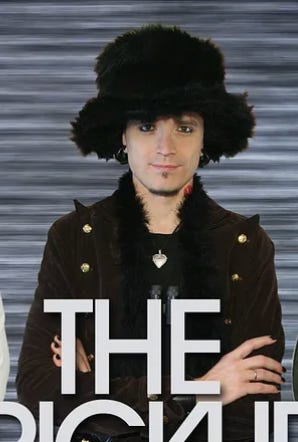

I do not believe any of this below is how any of this worked in literal detail, but…

WoolyAI: That stupid fucking hat.

This is doing the rounds and, like all gender and feminist discourse, it’s fundamentally dishonest. You can read two dozen other restacks laying out the limitations of this article, all ignoring that she’s a freelance writer, ie poor, and she’s writing for clicks; hate the game, not the player.

I still cannot get over that stupid fucking hat. It worked.

Look, that hat worked. That stupid fucking hat got Mystery laid more than I’ve been laid in my entire life unless 90% of what was written in “The Game” is a lie. Women like the hat. Women slept with him over the hat. And we can’t be honest about it.

It worked on me. It worked on all of us. 20 effing years later that stupid hat is still the #1 image of PUAs and Mystery is still the most famous of them, not because of anything he did, but just because if you put that stupid fucking hat in a thumbnail, people will click on it because we can’t not pay attention to that stupid fucking hat.

That stupid fucking hat worked and I wouldn’t wear it and you wouldn’t wear it but it brought him more sex and fame than anyone reading this has ever got and we can’t be honest about it and that’s why the discourse never goes anywhere.

If you actually read the book, Mystery is an insanely broken individual but he lived a literal rockstar lifestyle because he was willing to wear that stupid fucking hat and I kinda envy him for it. Just the shameless “I want women, women want the hat, therefore I will wear the hat.”

But the discourse feels stuck because women are ashamed they like the stupid fucking hat and men would be ashamed to wear the stupid fucking hat so we all lie about it so we don’t have to live with the shame of who we are.

(Pictured: That fucking hat.)

The stupid fucking hat was successful for Mystery in particular, as it played into the rest of what he was doing, leading interactions down predictable paths he trained for in various ways, and that he figured out how to steer in the ways he wanted.

But also, yes, it was his willingness to wear the stupid fucking hat, if that’s what it took to make all that work. That doesn’t mean you should go out and wear your own literal stupid fucking hat, but… be willing, as needed, to wear the metaphorical stupid fucking hat. If that’s what it takes.

Cosmic Cowgirl: The only dating strategy worth your time is to be as weird as humanly possible and see who rocks with it

The best part abt this tweet is seeing all the people responding that the way they met their partners/spouses is by being weird ❤️ there is hope for us weirdos yet!

Not quite. You should be exactly as weird as you are. Being intentionally extra weird would backfire. But yes, you mostly want to avoid hiding your weird once you are finished ‘getting reps.’

Should you put your small painted war figurines in your profile? One woman says no but many men say yes.

Shoshana Weissmann: Hey men, please don’t put the small war figurines you’ve painted in your Hinge profiles. This does not help.

We will date you sometimes despite this, but…

Yes, they were well-painted. Please stop asking.

Jarvis: It can’t hurt.

Shoshana: No, Jarvis.

If you’re looking to maximize total opportunities, you definitely don’t put things like painted war figurines in your photo.

However they offer positive selection to the extent you consider the relevant selection positive, so it depends, and a balance must be struck. I would only include them if I really, really cared about war figurines.

Teach the debate: Andrew Rettek versus Razib Khan on letting your interest flags fly. Should you worry about most of the attractive women losing interest if you talk about space exploration, abstruse philosophy and existential risk? Only to the extent you’d be interested in them despite knowing they react that way. So again, ideally, once you’ve got your reps in, no.

As usual, if you’re still on the steep part of the dating learning curve, one must first ‘get the reps’ before it is wise to overly narrow one’s focus.

You can also make other life choices to increase your chances. If you are a furry, you might do well to go into nuclear engineering, if that otherwise interests you? At some point the doom loop cannot be stopped, might as well go with it.

Eneasz Brodski suggests to straight men: Look for a woman who likes men. As in, a woman who says outright that by default men are good and cool people to be around. He says this is rare, and thus not all that actionable. I think it’s not that rare.

I would say that the specific positive version could be hard to act on, but the generalized negative version seems like fine advice across the board and highly actionable. If someone actively dislikes people in your key reference classes, whichever reference classes those might be, then probably don’t date them. The more of your reference classes they actively like by default, the better.

The same principles are true for women seeking men, and the same is true for physical goals. You should care relatively little about general appeal, and care more about appeal to those you find appealing as long term partners.

Antunes: Dear women, We don’t want you with muscles. We want you slim, delicate and cute. Take notes.

The rich: Dear women, muscles are hot, and there are many fit gym rats who would love a workout partner. Appealing to the average man is a bad idea; better to appeal to a small group that is very interested in you (with favorable gender ratios).

Daniel: I still think he’s wrong about the average man not wanting a fit woman.

In particular, the men like Antunes who actively mock anyone who disagrees on this? Turning them off actively is not a bug. It’s a feature.

Isabel: Question for women in their 30s, 40s, 50s, etc: what are women in their twenties not considering that they should be considering?

Mason: Waste zero time on men who don’t want the same things you want, you will not look back fondly on relationships built on the hope that someone else would change.

The original thread has much other advice, also of the standard variety. I would modify Mason’s note slightly, do not waste time on the chance someone else will change what they want. But of course there are other ways for time to be well spent.

On the flip side:

Girl explains why she does not like ‘extreme gym guy’ bodies, she wants the mechanic with real muscles in natural settings.

Freia: Every woman i talk to is like nooo too much muscle is weird and they’re imagining competition season mr. olympia in their head or something but every guy i talk to is like yeah i started lifting and all of a sudden women found me 10 times funnier.

This is an easy one.

- Up to a point more muscle is good.
- Too much ‘unnatural’ or ‘gym style’ muscle is weird.
- If you never do muscle poses you do not have too much such muscle.
- If you are musclephotomaxing, you may have too much muscle for other purposes.

Choose your fighter.

Rachel Lapides: The undergrad creative writing class I’m teaching has 19 girls and 1 boy.

I think a lot of you in the replies would benefit from a class or two.

Zina Sarif: Who will tell them?

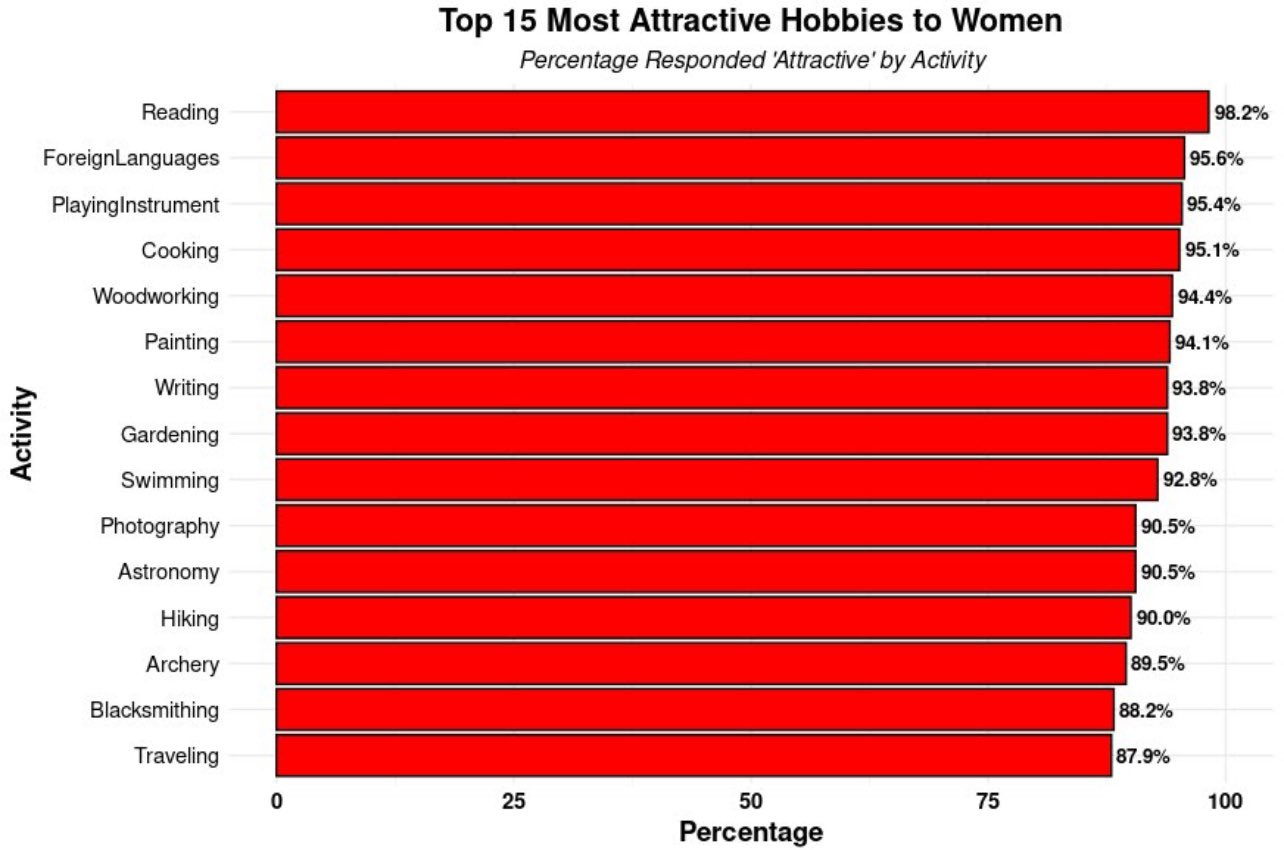

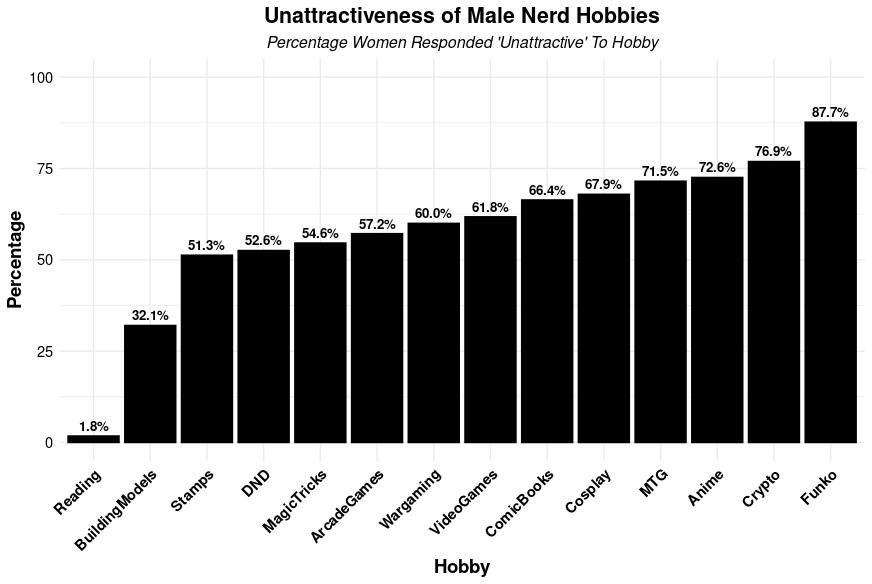

Vers La Lune: This needs to be said. Reading is not an attractive hobby to women. Back in 2018ish I A/B tested it and hid my books and actively lied about not reading a book since college and it worked 10x better than honesty.

It’s attractive hobby “in theory” but most people don’t read shit anyway, maybe they read Literotica fairy smut or something but you’ll never see panties drier than if you reference David Foster Wallace or some history book or something.

The rest of that chart is fine. Knowing languages and instruments are absolutely the most attractive to them.

Robin Hanson: Would this result hold up in a larger randomized trial?

I think Vers is right about this. Reading is attractive in theory.

In practice, it is not unattractive. But that is a different thing. You need to have a hook that is attractive in practice.

Reading can and does help with that. Reading leads to knowledge and skills and being interesting, which are themselves attractive. You want to be readmaxing. But that, too, is a different thing.

When 98.2% of women said reading was ‘attractive’ in a binary choice, that was answering the wrong question. Associating with reading simply is not exciting. It does not offer a joint experience or a good time. It won’t work.

Whereas the other top activities represent skills and demonstrations of value and joint activities. So they’re great for this.

The flip side are the actively unattractive hobbies. Reading is not unattractive, it will almost never actively cost you points, but Magic, anime and crypto definitely will be highly unattractive and turn off a large percentage of women, if you force them to deal with those things front and center. If you don’t center them, my guess is they are like reading, they don’t end up counting much for or against you then.

Of course, there will be some woman who does find almost any hobby attractive, and the positive selection as noted above is palpable. But you only get so many such filters, so choose carefully which ones you deploy. It’s not strictly limit one, but it’s close.

JD Vance gave up Magic: the Gathering because girls weren’t into it. I notice how much I dislike that reaction, but I understand it. It’s a real cost, so how much was he into casting a paper version of Yawgmoth’s Bargain, when he could instead get the same experience going to Yale Law School?

Liv Boeree points out that one place women can look for a good man is in their friend group, where you might already have some known-to-be-good men in your friend zone. And she can say that, because this is how she met Igor. So she advises making sure to take a second look at those guys at some point.

I’d add to that ‘because only you can make that move, they mostly can’t.’

We alas lack a good mechanism whereby people can attempt to be ‘unfriendzoned,’ or indicate their interest in being unfriendzoned, without risking destroying the friendship. There are obvious possible coordination mechanisms (e.g. to ensure that only reciprocal interest is revealed) but no way to get others to implement them. The rationalists have tried to fix this at least once, but I think that faded away even there.

Here’s a very different strategy, from Bryan Caplan, that we have discussed in prior episodes. Why would you, a man, even look for her, a woman, in America? Your hand in marriage is a green card easily worth six figures and you’re going to waste that on someone who already lives here? When you could instead be (in relative terms) instantly super high status to boot?

His answer is adverse selection. You have to worry the woman does not actually like you. He does not discuss strategies to minimize this risk, such as avoiding services for women actively seeking such arrangements.

You want to seek the women who are not actively seeking for you to seek them. Tricky.

The other obvious problems are logistics and cultural compatibility. And also, as one commenter warns, how you look to her from afar might not be a good prediction of how you look to her once she arrives.

Mason: I don’t have a big problem with passport brokers, to be honest.

I get the sense that most of them are just average men who want to settle down after some bad luck in love and are very excited, and naive, about encountering a pool of young women who want to do that quickly.

I’d guess that about 90 percent of the time there isn’t anything overtly political or misogynistic about it, even if there are a number of reasons it may not be a good idea.

The perhaps 10 percent who see themselves as actively snubbing Western women who are too damaged to love are, yes, distasteful.

But so are the largely female onlookers who seem, more than anything, angry at the idea of an average-looking, average-earning man getting someone “out of his league.”

If there’s something disturbingly transactional about dating women from poorer countries online, there’s an equally economic paradigm implicit in the idea that two people can’t truly love each other unless they’re matched on social class and relative status.

I think the important problems are entirely practical issues of logistics, cultural distance and adverse selection. Those are big problems, and a reason most people should choose different strategies.

‘Could’ backfire massively and ruin your life or career is not ‘could plausibly’ or ‘is likely to’ but if you don’t know that, it will have the same impact on your decisions.

Air Katakana: We need to talk about the real reason no one is getting married: western society has gone so woke that a man showing any interest in a woman in any situation could lead to his career and life being ruined. The only place you can even feasibly meet someone now is via dating apps.

Harvey Michael Pratt: Like seriously who are these people who don’t know how to express romantic interest without seeming threatening I’ve heard this one over and over and just don’t get it.

Misha: It’s not just about seeming threatening, I think it’s about the distribution of outcomes feeling like it’s net negative.

Imagine in the past it was something like

Every time you hit on someone, roll a d20. On a 1, she slaps you. On a 16 or higher, you get a date.

Now, it’s more like

On a 1 you get fired, on a 2-5 you get mocked or have a really awkward time, on a 20 you get a date.

Now obviously, all these numbers are fake and the real numbers are probably something else.

But on a visceral level it’s hard to believe in the real numbers, and our expectation of the risk/reward of interacting in certain ways is influenced by our social environments.

I think this is particularly pernicious for guys who haven’t been in relationships because they have no direct experience of positive outcomes, they only have negative outcomes and stories you see about other people’s negative outcomes are more viral.

Even if we round down the risk of getting canceled/mocked/fired to zero (which I think is probably correct) you can still expect to be rejected a ton of times before you get a relationship, which is extremely discouraging.

I do think part of the problem is overromanticizing of the past though. You’re much more likely for various reasons to hear about all the relationships that came before instead of the people who died alone. I don’t know if it was ever actually easy to get married.
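Misha’s dice can be tallied directly. The sketch below is only an illustration: the roll ranges come from the quote, Misha says outright that the numbers are fake, and treating every unlisted face as a neutral ‘nothing happens’ is my assumption. The function names are hypothetical.

```python
from fractions import Fraction

# Roll ranges taken from Misha's illustration; the numbers are admittedly fake.
# Assumption: any face not listed is a neutral "nothing happens" result.
PAST = {"slapped": range(1, 2), "date": range(16, 21)}
NOW = {"fired": range(1, 2), "mocked_or_awkward": range(2, 6), "date": range(20, 21)}


def outcome_odds(table, sides=20):
    """Probability of each named outcome on one roll of a fair die."""
    odds = {name: Fraction(len(faces), sides) for name, faces in table.items()}
    odds["neutral"] = 1 - sum(odds.values())
    return odds


def chance_of_at_least_one(p, attempts):
    """Chance of at least one success in independent attempts, each with odds p."""
    return 1 - (1 - p) ** attempts


for label, table in (("past", PAST), ("now", NOW)):
    odds = outcome_odds(table)
    summary = ", ".join(f"{name}: {float(p):.0%}" for name, p in odds.items())
    print(f"{label}: {summary}")
    print(f"  at least one date in 20 asks: {float(chance_of_at_least_one(odds['date'], 20)):.0%}")
```

Even on the pessimistic ‘now’ table, repeated attempts add up, which is the point of the ‘ask 20 people’ advice. What changes between the two tables is mostly how much unpleasantness you sit through along the way.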

My understanding is that sufficiently far in the past, asking was actually deadly. You risked violence, including deadly violence, or exile. You indeed had to be very careful.

Then there was a period where you were much more free to do whatever you wanted. You really could view the downside mostly as ‘you get slapped,’ which is fine even if the odds are substantially worse than above.

Now things have swung back somewhat. The tail risk is small but it’s there. And reports suggest that among the sufficiently young, many think trying to date people you actually know Just Isn’t Done, except of course when it is anyway.

I also presume, given other conditions, that we are now trending back down on the risks-other-than-rejection of asking front.

Partly of course it is a skill issue.

The rejection part sucks, too, of course. But you can try to have it suck less?

One of the most important dating skills is learning to handle and not fear rejection.

Rudy Julliani: This is worse than a gunshot to the head.

Allie: This type of rejection is a super normal part of dating and was delivered about as politely as it could’ve been.

Zoomers are so emotionally strung out that this kind of thing feels catastrophic when it should just be “aww darn, I had high hopes for that one.”

Shoshana: dude fuck what I’d give for men to be this adult and straightforward!

Allie: Half the time people just ghost these days!

The replies are full of ‘at least she was honest and did not ghost you.’

-

So first of all, wow those are low standards.

-

We don’t actually know she was honest, only that you can safely move on.

-

I do totally see how ‘you did nothing wrong’ can be worse than a ‘you suck.’

-

But seriously, you need to be able to take this one in stride. One date.

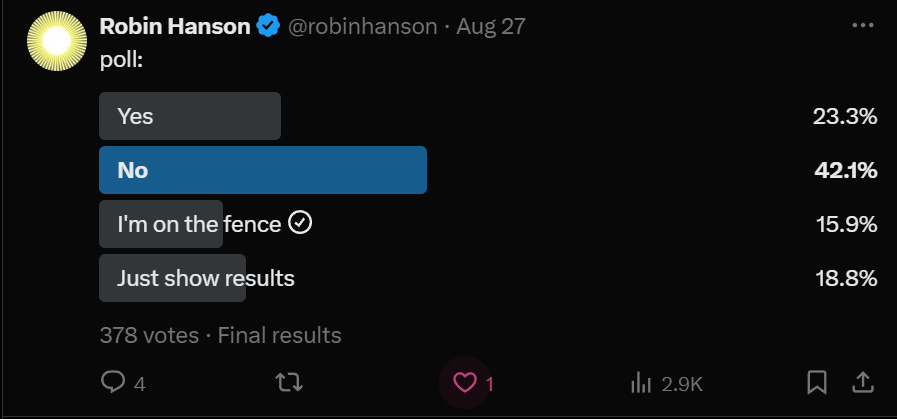

Being unusually averse to rejection, as Robin Hanson reports here, really sucks and is something one should work to change, as it is highly destructive of opportunity, and the aversion mostly lacks grounding in or correlation to any consequences beyond the pain of the rejection.

Robin Hanson: I’m unusually averse to rejection. Some see that as irrational; I should get over. I guess as they don’t think it makes sense to have preferences directly over such a complex thing; prefs should be on simpler outcomes. But how do I tell which outcomes are okay to matter?

Zvi: It’s not simple, it’s terminal outcomes (final goods) versus signals for how things are going (learning feedback systems and intermediate goods and correlations with ancestral Env. dangers, etc)?

You know what actually feels great?

When you ask, and you get turned down, and you realize you played correctly and that there’s no actual price to getting a no except that you can’t directly try again.

Nothing was lost, since they weren’t into you anyway. Indeed you got valuable experience and information, and you helped conquer your fears and build good habits.

That includes looking back afterwards. Indeed, I’m actively happy, looking back, with the shots I did take, that missed, as opposed to the 100% of shots that I didn’t take. Many of those, I do regret.

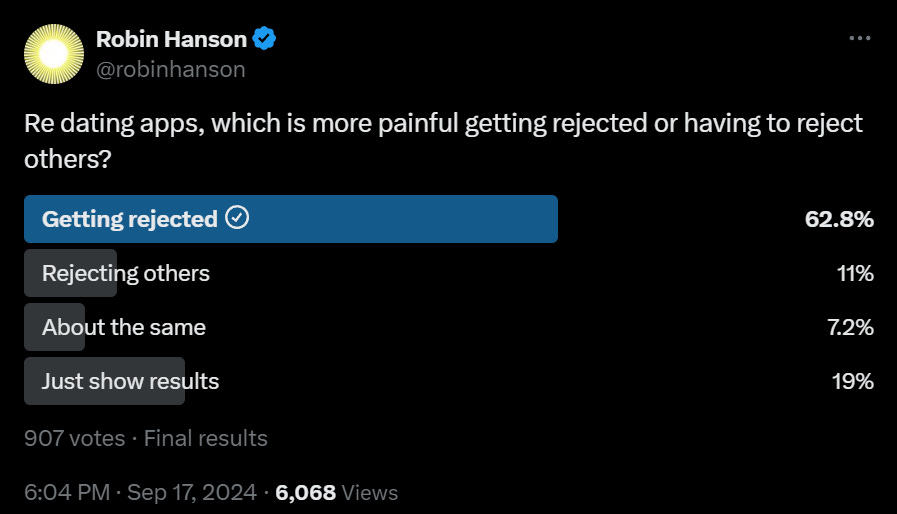

Such a strange question to have to even ask, when you think about it: Is having to reject others even worse? Some people actually say it is?

It’s not the common sentiment, but it’s there.

Kali Karmilla: The most depressing part of dating apps isn’t even getting rejected. It’s having to reject so many people. They put themselves out there, asking for someone to care for them, and you have to be like, “Not my type” a hundred times in a row. Makes me feel evil, honestly.

I don’t think the human mind was built to realize that so many people are lonely at one time, and it certainly wasn’t built to see that and react with indifference (swipe left, and they stop existing). I do not know, man; it’s just sad. I wish I were frozen in ice like a cartoon caveman.

Me: It feels like dating apps are asking me to dehumanize other people.

This person: It’s okay because people are just like commodities, and the apps are just digital marketplaces. 👍

Brother, I never want to be like you in my life.

If you reject someone in the swiping stage, and you feel evil about it, don’t. It’s unfortunate that you need to be doing this rather than the algorithm handling it, but it’s no different than being at the club with 100 other people and ignoring most of them. You’re being fooled by having the choices be one at a time and highlighted.

Of course so many people are lonely at one time. There are so many people.

If you reject someone after a match, then that is like actually rejecting them, so yes, treat them like a real person with actual feelings. But everyone involved signed up for this, and stringing things along when you don’t want to be there or keep talking to them is not better. If you can’t get there with someone, tell them that, and send them home.

Anything else is cruel, not kind.

Tracing Woods: Worse than this, I think, is the occasional decision not to immediately reject someone you should have, playing with their heart a bit on the way to rejection. People expose their hearts incredibly quickly while dating, and it’s easy to stumble into hurting someone.

My worst moments when dating, looking back, were when I went on a first date with someone who was clearly desperate for an affection I could not honestly provide. Everyone wants to be loved, but nobody wants to be pity-dated.

Of all the lessons of The Bachelor, this might be the biggest one: do not string people along. You see this on various similar shows. The candidates who are rejected early mostly shrug. Some are hit hard, but not that hard. The farther along they go, the worse it gets, and much time is also wasted.

Same goes in real life. If you know you must reject, mostly the sooner and clearer the better, with the least interaction beforehand. It will suck less, for both of you.

I do admit that sometimes the person you reject does not make it easy on you, including those who don’t accept it.

Holly Elmore: Having people not accept the rejection feels like having to strangle them or walking away and letting them bleed out. It’s way more intense than any one instance of being swiped left on or hearing “no”.

Yes, of course having to tell people no sucks. Having to dump someone sucks a lot.

But it’s still way better than getting dumped when you didn’t want to be.

Allie: A lot of the best things in life fall into the “scary but worth it” category

– Leaving home

– Falling in love

– Driving

– Buying a house

– Marriage

– Children

– Travel

We used to focus on the “worth it” aspect, now we hyper focus on the “scary” and we’re paralyzed by the fear

Shoshana Weissman: Damn straight. Lotta people paralyzed by fear of doing normal good things that all involve some risk but lots of payoff.

Yep. Normal good things are scary. You have to do them anyway.

The other stuff matters, but hey, it couldn’t hurt.

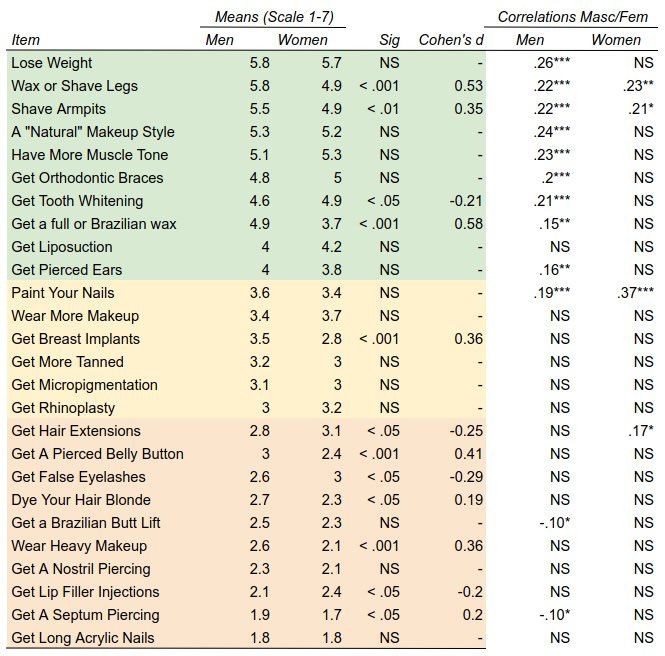

Here is a chart of how men and women said they viewed various beauty strategies. Full article here.

Alexander: Revisiting the original list, we also see very strong agreement between men and women – both men and women know that these things aren’t actually attractive to men!

It turns out that what is attractive essentially falls into two categories: “don’t be fat” and “basic grooming.”

As a woman you need to not be overweight, work out, shave, and have nice teeth – all of which is just as true for men.

I mostly believe this list. My guess is ‘dye your hair blonde’ is underrated, because they are asking in a context where you know and are thinking about the fact that the color is fake and that you’re ‘being fooled,’ which is not real world conditions. I predict a similar, likely smaller, miscalibration for breast implants.

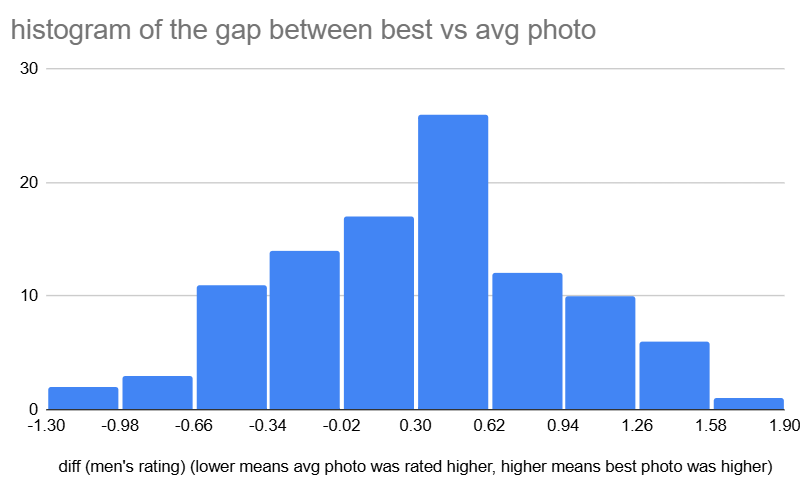

Women were highly unsuccessful in attempting to pick photos to look hotter.

Aella: the actual finding (after paranoid checking against dumb mistakes): I had women submit an “average” photo of themselves, and a photo of them “at their best,” total n=102.

Men rated the “at their best” photos about 0.3/10 points hotter than the “average” photos. But there was pretty decent variance.

About a third of women had their “hotter” photos rated either equal to or worse than their “average” photo.

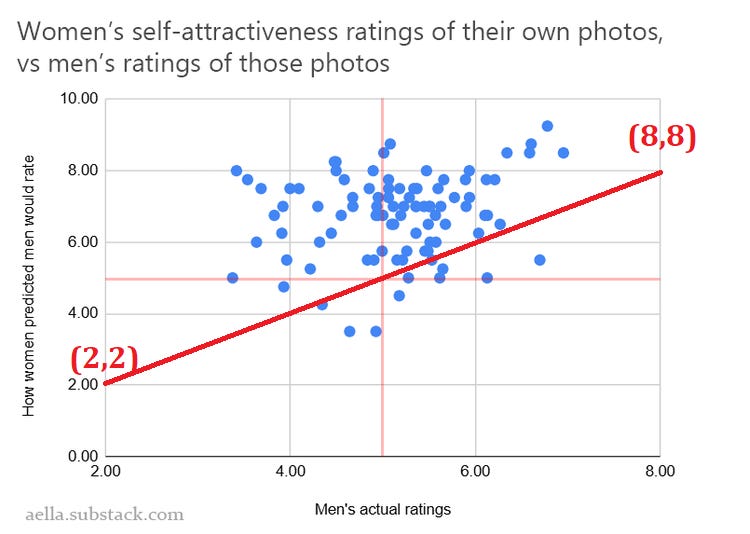

Women were also highly unsuccessful at knowing how hot men would think they are.

Here is the full post. One way to make people less biased is to ask them how they compare to others of the same gender, another is to ask people who is in their league.

The more unattractive you were, the more ‘delusional’ you were, as in your estimate was too high by a higher margin. I don’t buy Aella’s explanation for this, though, because I don’t think you need it – this result is kind of mathematically inevitable, once you accept everyone is overestimating.
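One way to see why this could be close to inevitable is a toy model, sketched here under assumptions that are mine and not from Aella’s data. Suppose ratings live on a scale capped at 10, and everyone overestimates by claiming a fixed share of the room left above their true rating. The names `SCALE_MAX`, `HEADROOM_SHARE`, `self_estimate` and `overestimate` are hypothetical, and the 0.3 share is made up for illustration.

```python
# Toy model (illustrative assumption, not the survey's method):
# each person inflates their rating by a fixed share of the
# headroom between their true rating and the top of the scale.

SCALE_MAX = 10.0       # top of the rating scale
HEADROOM_SHARE = 0.3   # assumed share of remaining headroom claimed


def self_estimate(true_rating: float) -> float:
    """Self-rating under the headroom model."""
    return true_rating + HEADROOM_SHARE * (SCALE_MAX - true_rating)


def overestimate(true_rating: float) -> float:
    """Gap between the self-rating and the true rating."""
    return self_estimate(true_rating) - true_rating


if __name__ == "__main__":
    for rating in range(1, 11):
        print(f"true {rating:2d}  self {self_estimate(rating):5.2f}  "
              f"gap {overestimate(rating):4.2f}")
```

In this sketch everyone below 10 overestimates, and the gap shrinks steadily as the true rating rises, simply because there is less scale left to overestimate into. Other mechanisms could produce the same pattern; this is only the simplest one.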

And wow, loss aversion is a thing here:

My followers (incomes $30k-$300k) would, on average, pay $12,517 (median $3k) to gain 1 point of attractiveness.

They would pay on average $94,083 (median $10k) to avoid losing 1 point of attractiveness. (n=462)

Counter to my prediction, there was basically no correlation between how hot someone rated themselves as, and the amount they would pay to gain a point or avoid losing a point.

And also, people say they’d pay more to be 6/10 than 10/10. I presume they’re confused.
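To put a number on the loss aversion, here is a minimal sketch that takes the four reported figures at face value and computes how much more people would pay to avoid losing a point than to gain one. The variable names are mine, and the medians are the rounded values quoted above ($3k and $10k), so the median ratio is approximate.

```python
# Reported survey figures (n=462); medians are the rounded values quoted.
gain_mean, gain_median = 12_517, 3_000
loss_mean, loss_median = 94_083, 10_000

# How many times more people would pay to avoid a loss than to get a gain.
mean_ratio = loss_mean / gain_mean
median_ratio = loss_median / gain_median

# How far each mean sits above its median, a rough sign of skew.
gain_skew = gain_mean / gain_median
loss_skew = loss_mean / loss_median

if __name__ == "__main__":
    print(f"loss/gain ratio, means:   {mean_ratio:.1f}x")
    print(f"loss/gain ratio, medians: {median_ratio:.1f}x")
    print(f"mean/median, gain: {gain_skew:.1f}x, loss: {loss_skew:.1f}x")
```

The ratio is several times over on both measures. The means also sit far above the medians, which suggests a handful of very large answers are pulling the averages up, so the medians are probably the better guide to the typical respondent.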

Only paying $12k for a permanent extra point of attractiveness, were it for sale, is insane. Go into debt if you have to, as they say. You’ll get it back plus extra purely in higher earnings from lookism on the job. If you can do it multiple times, keep hitting that button (and if they let you go above 10, do that and then go to Hollywood!).

At $94k the trade stops being obvious for those on the lower end of the income spectrum, but if you can afford it this still seems like quite the steal, as many times as they’ll sell it to you.

(I’d be a little scared to know what happens beyond 10, but you bet that if it was for sale I would find out.)

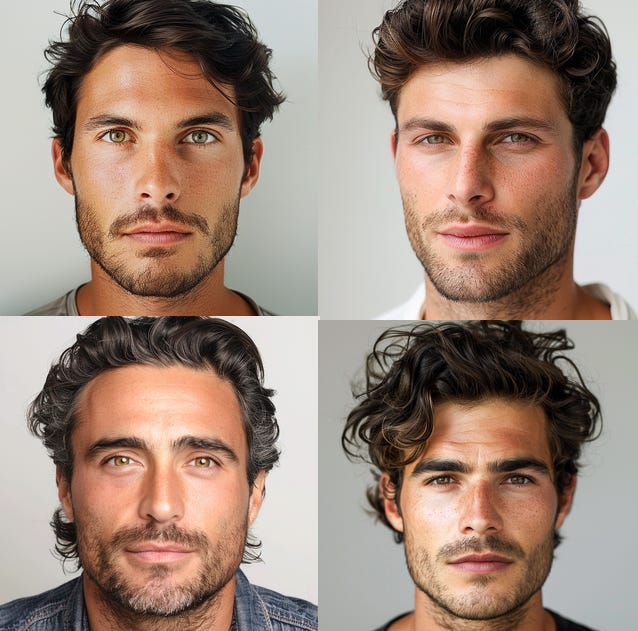

Aella runs the ‘which AI faces are hot according to the opposite gender’ test with male faces, and reports the results. Male average ratings for AI-generated female faces clustered around 5.5 then fell off sharply with a slightly longer left than right tail, whereas female ratings of male AI faces averaged about 4.7 and had a longer right tail that died suddenly.

The patterns as you go from 1 up to 9 on the normalized hotness scale are very clear, especially at the top, where there is clearly one top look. Can you pull it off?

Emmett Shear: Percentage reporting yes on experiencing “god mode”, according to my poll.

SF: women 50%, men 28%

NYC: women 43%, men 67%

Other: women 56%, men 37%

It turns out SF is just about normal for women in this metric and varies relatively little, the main story is SF sucks for men lol.

The main story of San Francisco is that it is a rough place all around, with only 39% god mode, versus 46% for those in neither NYC nor SF. The men are 9 percentage points less likely to report ‘god mode’ and the women are 6 percentage points less likely, which is within the margin of error here. Whereas New York has 55% god mode, which is much better than 46%, and a major slant towards men.
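The combined figures above match simple unweighted averages of the men’s and women’s percentages, which can be checked with a short sketch. Treating men and women as equally weighted is an assumption, since the poll’s sample sizes are not given here, and the names `poll` and `simple_average` are hypothetical.

```python
# Percent reporting "god mode", as quoted from the poll.
poll = {
    "SF": {"women": 50, "men": 28},
    "NYC": {"women": 43, "men": 67},
    "Other": {"women": 56, "men": 37},
}


def simple_average(group: dict) -> float:
    """Unweighted mean of the two percentages (assumes equal group sizes)."""
    return (group["women"] + group["men"]) / 2


# Combined rate per location.
combined = {place: simple_average(group) for place, group in poll.items()}

# Gaps between "Other" and SF, in percentage points.
men_gap = poll["Other"]["men"] - poll["SF"]["men"]
women_gap = poll["Other"]["women"] - poll["SF"]["women"]

if __name__ == "__main__":
    for place, rate in combined.items():
        print(f"{place}: {rate:.1f}%")
    print(f"SF men trail Other by {men_gap} points, women by {women_gap}")
```

Under that equal-weight assumption, the ‘Other’ figure comes out at 46.5%, which the text reports as 46%.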

Note that this is a stable equilibrium, because in their system one partner must pay but not both for a match to occur:

Jake Kozloski (Keeper): Single women are typically surprised to learn that 85% of our paying matchmaking clients are men. They often assume men aren’t interested in commitment.

Cody Zervas (Keeper): Men assume the platform is mostly men and women assume it’s mostly women. Both are surprised to hear we have the other.

Jake Kozloski: Yes on the flip side our total pool is 80% women which tends to surprise men who are used to the terrible ratios on dating apps.

It makes sense that men are more likely to pay for such a service, knowing that women won’t pay for it, and also that they have more ability to pay and can feel less bad about doing so. They have to pay.

It then makes sense that women are more likely to be willing to sign up for free, since many men already paid. And indeed, you could argue that they’re better off not paying. Who wants to match with the guys who signed up for Keeper… for free?

Thus the ultimate version of the guy picking up the check.

And as a result, the women greatly outnumber the men, because it’s a lot more attractive to sign up for free. Which in turn makes it more attractive for men to pay.

Some very bad pickup lines.

Another swing and a miss.

A bold move.

Finally a version you can trust.