I hear word a bunch of new frontier AI models are coming soon, so let’s do this now.

- Programming Environments Require Magical Incantations.
- That’s Not How Any of This Works.
- Cheaters Never Stop Cheating.
- Variously Effective Altruism.
- Ceremony of the Ancients.
- Palantir Further Embraces Its Villain Edit.
- Government Working.
- Jones Act Watch.
- Ritual Asking Of The Questions.
- Why I Never Rewrite Anything.
- All The Half-Right Friends.
- Resident Expert.
- Do Anything Now.
- We Have A New Genuine Certified Pope So Please Treat Them Right.
- Which Was the Style at the Time.
- Intelligence Test.
- Constant Planking.
- RSVP.
- The Trouble With Twitter.
- TikTok Needs a Block.
- Put Down the Phone.
- Technology Advances.
- For Your Entertainment.
- Please Rate This Podcast.
- I Was Promised Flying Self-Driving Cars.
- Gamers Gonna Game Game Game Game Game.
- Sports Go Sports.

I don’t see it as gendered, but so much this, although I do have Cursor working fine.

Aella: Never ever trust men when they say setting up an environment is easy

I’ve been burned so bad I have trauma. Any time a guy says “omg u should try x” I start preemptively crying

Pascal Guay (top comment): Just use @cursor_ai agent chat and prompt it to make this or that environment. It’ll launch all the command lines for you; just need to accept everything and you’ll be done in no time.

Aella: THIS WAS SPARKED BY ME BEING UNABLE TO SET UP CURSOR.

Ronny Fernandez (comment #2): have you tried cursor? it’s really easy.

Piq: Who tf would ever say that regardless of gender? It’s literally the hardest part of coding.

My experience is that setting things up involves a series of exacting magical incantations, which are essentially impossible to derive on your own. Sometimes you follow the instructions and everything goes great but if you get things even slightly wrong it becomes hell to figure out how to recover. The same thing goes for many other aspects of programming.

AI helps with this, but not as much as you might think if you get outside the realms where vibe coding just works for you. Then, once you are set up, things are relatively much easier within the parts of the UI you understand, but there is a strong temptation to keep using only the features you understand.

People who play standard economic games, like Dictator, Ultimatum, Trust, Public Goods or Prisoner’s Dilemma, frequently don’t understand the rules. For Trust 70% misunderstood, for Dictator 22%, and incentivized comprehension checks didn’t help. Those who misunderstood typically acted more prosocial.

In many ways this makes the games more realistic, not less. People frequently don’t understand the implications of their actions, or the rules of the (literal or figurative) game they are playing. You have to account for this, and often this is what keeps the game in a much better (or sometimes worse) equilibrium, as is the tendency of many players to play ‘irrationally’ or based on vibes. Dictator is a great example. In a real-world one-shot dictator game situation it’s often wise to do a 50-50 split, and saying ‘but the game theory says’ will not change that.

A recurring theme of life, also see Cheaters Gonna Cheat Cheat Cheat Cheat Cheat.

Jorbs: i have this ludicrous thing where if i see someone cheating at something and lying about it, i start to believe that they aren’t an honest person and that i should be suspicious of other things they say and do.

this is only semi tongue-in-cheek. the number of times in my life someone has directly told me about how they cheat and lie about something, with the expectation that that will not affect how i view them otherwise, is like, much much higher than i would expect it to be.

It happens to me too, as if I don’t know how to update on Bayesian evidence or something. I don’t even need them to be lying about it. The cheating is enough.

There are partial mitigations, where they explain why something is a distinct ‘cheating allowed’ magisterium. But only partial ones. It still counts.

This is definitely a special case of ‘how you do anything is how you do everything,’ and also ‘when people tell you who they are, believe them.’

Spaced Out Matt: This person appears to be an active participant in the “Effective Altruist” movement—and a good reminder that hyper-rational political movements often end up funding lifesaving work on critical health issues

Alexander Berger: Really glad that @open_phil was able to step in on short notice (<24h) to make sure Sarah Fortune's work on TB vaccines can continue.

“Much to the relief of a Harvard University researcher, a California-based philanthropic group is getting into the monkey business.”

Dana Gerber: Open Philanthropy, a grant advisor and funder, told the Globe on Friday that it authorized a $500,000 grant to allow researchers at the University of Pittsburgh School of Medicine to complete an ongoing tuberculosis vaccine study that was abruptly cut off from its NIH funding earlier this week, imperiling the lives of its rhesus macaque test subjects.

Am I the only one who thought of this?

In all seriousness, this is great, exactly what you want to happen – stepping in quickly in suddenly high leverage opportunities.

Nothing negative about this, man is an absolute legend.

Simeon: The media negativity bias is truly deranged.

Managing to frame a $200B pledge to philanthropy negatively is an all-time prowess.

Gates is doing what other charitable foundations and givers fail to do, which is to actually spend the damn money to help people and then say their work is done, within a reasonable time frame. Most foundations instead attempt to remain in existence indefinitely by refusing to spend the money.

John Arnold: This is a great decision by Gates that will maximize his impact. All organizations become less effective over time, particularly foundations that have no outside accountability. New institutions will be better positioned to deal with the problems of future generations.

I would allocate funds to different targets, but this is someone actually trying.

The Secular Solstice (aka Rationalist Solstice) is by far the best such ritual. It isn’t cringe, but even if you think it is, if you reject things that work because they’re cringe, you’re ngmi.

Guive Assadi: Steven Pinker: I’ve been part of some not so successful attempts to come up with secular humanist substitutes for religion.

Interviewer: What is the worst one you’ve been involved in?

Steven Pinker: Probably the rationalist solstice in Berkeley, which included hymns to the benefits of global supply chains. I mean, I actually completely endorse the lyrics of the song, but there’s something a bit cringe about the performance.

Rob Bensinger: Who wants to gather some more quotes like this and make an incredible video advertisement for the rat solstice

Rob Wiblin: This is very funny.

But people should do the cringe thing if they truly enjoy it. Cringe would ideally remain permanently fashionable.

Nathan: Pinker himself is perhaps answering why secular humanism hasn’t created a replacement for Christianity. It cares too much what it looks like.

The song he’s referring to is Landsailor. It is no Uplift, but it is excellent, now more than ever. Stop complaining about what you think others will think is cringe and start producing harmony and tears. Cringe is that which you believe is cringe. Stop giving power to the wrong paradox spirits.

Indeed, the central problem with this ritual is that it doesn’t go far enough. We don’t only need Bright Side of Life and Here Comes the Sun (yes you should have a few of these and if you wanted to add You Learn or Closer to Fine or something, yes, we have options), but mostly on the margin we need Mel’s Song, and Still Alive, and Little Echo. People keep trying to make it more accessible and less weird.

How are things going over at Palantir? Oh, you know, doubling down on the usual.

I do notice this is a sudden demand to not build software that can be misused to help violate the US Constitution.

You know what other software can and will be used this way?

Most importantly frontier LLMs, but also most everything else. Hmm.

And if nothing else, as always, I appreciate the candor in the reply. Act accordingly. And beware the Streisand Effect.

Drop Site: ICE Signs $30 Million Contract With Palantir to Build ‘ImmigrationOS’

ICE has awarded Palantir Technologies a $30 million contract to develop a new software platform to expand its surveillance and enforcement operations, building on Palantir’s decade-long collaboration with ICE.

Key features and functions:

➤ ImmigrationOS will give ICE “real-time visibility” into visa overstays, self-deportation cases, and individuals flagged for removal, including foreign students flagged for removal for protesting.

➤ ImmigrationOS will integrate data from multiple government database systems, helping ICE track immigration violators and coordinate with agencies like Customs and Border Protection.

➤ The platform is designed to streamline the entire immigration enforcement process—from identification to removal—aiming to reduce time, labor, and resource costs.

Paul Graham: It’s a very exciting time in tech right now. If you’re a first-rate programmer, there are a huge number of other places you can go work rather than at the company building the infrastructure of the police state.

Incidentally, I’ll be happy to delete this if Palantir publicly commits never to build things that help the government violate the US constitution. And in particular never to build things that help the government violate anyone’s (whether citizens or not) First Amendment rights.

Ted Mabrey (start of a very long post): I am looking forward to the next set of hires that decided to apply to Palantir after reading your post. Please don’t delete it Paul. We work here in direct response to this world view and do not seek its blessing.

Paul Graham: As I said, I’ll be happy to delete it if you commit publicly on behalf of Palantir not to build things that help the government violate the US constitution. Will you do that, Ted?

Ted Mabrey: First, I really don’t want you to delete this and am happy for it to be on the record.

Second, the reason I’m not engaging in the question is because it’s so obviously in bad faith akin to the “will you promise to stop beating your wife” court room parlor trick. Let’s make the dynamics crystal clear. Just by engaging on that question it establishes a presumption of some kind of guilt in the present or future for us or the government. If I answer, you establish that we need to justify something we have done, which we do not, or accept as a given that we will be asked to break the law, which we have not.

…

or y’all…we have made this promise so many ways from Sunday but I’ll write out a few of them here for them.

…

Paul Graham: When you say “we have made this promise,” what does the phrase “this promise” refer to? Because despite the huge number of words in your answers, I can’t help noticing that the word “constitution” does not occur once.

Ted? What does “this promise” refer to?

…

I gave Ted Mabrey two days to respond, but I think we now have to conclude that he has run away. After pages of heroic-sounding doublespeak, the well has suddenly run dry. I was open to being proven wrong about Palantir, but unfortunately it’s looking like I was right.

Ted tried to make it seem like the issue is a complex one. Actually it’s 9 words. Will Palantir help the government violate people’s constitutional rights? And I’m so willing to give them the benefit of the doubt that I’d have taken Ted’s word for it if he said no. But he didn’t.

Continuing reminder: It is totally reasonable to skip this section. I am doing my best to avoid commenting on politics, and as usual my lack of comment on other fronts should not be taken to mean I lack strong opinions on them. The politics-related topics I still mention are here because they are relevant to this blog’s established particular interests, in particular AI, abundance including housing, energy and trade, economics or health and medicine.

In case it needs to be explained why trying to forcibly bring down drug prices by requiring Most Favored Nation status on those prices would be an epic disaster that hurts everyone and helps no one, if we were so foolish as to implement it for real, Jason Abaluck is here to help. Do note this thread as well: there is a case where there could be some benefit, by preventing other governments from forcing prices down.

Then there’s the other terrible option, which is if it worked in lowering the prices or Trump found some other way to impose such price controls, going into what Tyler Cowen calls full supervillain mode. o3 estimates this would reduce global investment in drug innovation by between 33% and 50%. That seems low to me, and is also treating the move as a one-time price shock rather than a change in overall regime.

I would expect that the imposition of price controls here would actually greatly reduce investment in R&D and innovation essentially everywhere, because everyone would worry that their future profits would also be confiscated. Indeed, I would already be less inclined to such investments now, purely based on the stated intention to do this.

Meanwhile, other things are happening, like an EO that requires a public accounting for all regulatory criminal penalties and that they default to requiring mens rea. Who knew? And who knew? This seems good.

The good news is that Pfizer stock didn’t move that much on the announcement, so mostly people do not think the attempt will work.

There is an official government form where you can suggest deregulations. Use it early, use it often, program your AI to find lots of ideas and fill it out for you.

In all seriousness, if I had understood the paperwork and other time sink requirements, I would not have created Balsa Research, and if the paperwork requirements mostly went away I would have founded quite a few other businesses along the way.

Katherine Boyle: We don’t talk enough about how many forms you have to fill out when raising kids. Constant forms, releases, checklists, signatures. There’s a reason why litigious societies have fewer children. People just get tired of filling out the forms.

Mike Solana: the company version of this is also insane fwiw. one of the hardest things about running pirate wires has just been keeping track of the paper work — letters every week, from every corner of the country, demanding something new and stupid. insanely time consuming.

people hear me talk shit about bureaucracy and hear something ‘secretly reactionary coded’ or something and it’s just like no, my practical experience with regulation is it prevents probably 90 to 95% of everything amazing in this world that someone might have tried.

treek: this is why lots of people don’t bother with business extreme blackpill ngl

Mike Solana: yes I genuinely believe this. years ago I was gonna build an app called operator that helped you build businesses. I tried to start with food trucks in LA. hundreds of steps, many of them ambiguous. just very clearly a system designed to prevent new businesses from existing.

A good summary of many of the reasons our government doesn’t work.

Tracing Woods: How do we overcome this?

Alec Stapp: This is the best one-paragraph explanation for what’s gone wrong with our institutions:

I could never give that good a paragraph-length explanation, because I would have split that into three paragraphs, but I am on board with the content.

At core, the problem is a ratcheting up of laws and regulatory barriers against doing things, as our legal structures focus on harms and avoiding lawsuits but ignore the ‘invisible graveyard’ of utility lost.

The abundance agenda says actually this is terrible, we should mostly do the opposite. In some places it can win at least small victories, but the ratchet continues, and at this point a lot of our civilization essentially cannot function.

Once again, cutting FDA staff without changing the underlying regulations doesn’t get rid of the stupid regulations, it only makes everything take longer and get worse.

Jared Hopkins (Wall Street Journal): “Biotech companies developing drugs for hard-to-treat diseases and other ailments are being forced to push back clinical trials and drug testing in the wake of mass layoffs at the Food and Drug Administration.”

…

“When you cut the administrative staff and you still have these product deadlines, you’re creating an unwinnable situation,” he said. The worst thing for companies isn’t getting guidance when needed and following all the steps for approval, only to “prepare a $100 million application and get denied because of something that could’ve been communicated or resolved before the trial was under way,” Scheineson said.

Paul Graham: I heard this directly from someone who works for a biotech startup. Layoffs at the FDA have slowed the development of new drugs.

Jim Cramer makes the case to get rid of the ‘ridiculous Jones Act.’ Oh well, we tried.

The recent proposals around restricting shipping even further caused so much panic (and Balsa to pivot) for a good reason. If enacted in their original forms, they would have been depression-level catastrophic. Luckily, we pulled back from the brink, and are now only proposing ordinary terrible additional restrictions, not ‘kill the world economy’ level restrictions.

Also note that for all the talk about the dangers of Chinese ships, the regulations were set to apply to all non-American ships, Jones Act style, with some amount of absolute requirement to use American ships.

That’s a completely different rule. If the rule only applies to Chinese ships in particular but not to ships built in Japan, South Korea or Europe, I don’t love it, but by 2025 standards it would be ‘fine.’

Ryan Peterson: Good to see the administration listened to feedback on their proposed rule on Chinese ships. The final rule published today is a lot more reasonable.

John Konrad: Nothing in my 18 years since founding gCaptain has caused more panic than @USTradeRep’s recent proposal to charge companies that own Chinese ships $1 million per port call in the US.

USTR held hearings on the fees and today issued major modifications.

The biggest problem with the original port fees proposed by Trump in late February was that they were ship size and type agnostic.

All Chinese built ships would be charged $1.5 million per port and $1 million for any ship owned by a company that operates chinese built ships.

This was ok for a very large containership with 17,000 boxes that could absorb the fee. But it would have been devastating for a bulker that only carries low value cement.

The new proposal differentiates between ship size and types of cargo.

Specific fees are $50 per net ton, with the following caveats that go into effect in 6 months.

•Fees on vessel owners & operators of China based on net cargo tonnage, increasing incrementally over the following years;

•Fees on operators of Chinese-built ships based on net tonnage or containers, increasing incrementally over the following years; and

•To incentivize U.S.-built car carrier vessels, fees on foreign-built car carrier vessels based on their capacity.

The second phase actions will not take place for 3 years and is specifically for LNG ships:

•To incentivize U.S.-built liquified natural gas (LNG) vessels, limited restrictions on transporting LNG via foreign vessels. Restrictions will increase incrementally over 22 years.

… [more details of things we shouldn’t be doing, but probably aren’t catastrophic]

Another major complaint of the original proposal was that ships would be charged the fee each time they enter a US Port. This meant a ship discharging at multiple ports in one voyage would suffer millions in fees and likely cause them to visit fewer small ports.

That cargo would have to be put on trucks, clogging already overburdened highways.

The new proposal charges the fee per voyage or string of U.S. port calls.

The proposal also excludes Jones Act ships and short sea shipping options (small ships and barges that move between ports)

In short this new proposal is a lot more adaptable and reasonable but still puts heavy disincentives on owners that build ships in China.

These are just the highlights. The best way to learn more is to read @MikeSchuler’s article explaining the new proposal.

They also dropped fleet composition penalties, and the rule has at least some phase-in of the fees, along with dropping the per-port-of-call fee. Overall I see the new proposal as terrible but likely not the same kind of crisis-level situation we had before.

Then there’s the crazy ‘phase 2’ that requires the LNG sector in particular to use a portion of US-built vessels. Which is hard, since only one such vessel exists and is 31 years old with an established route, and building new such ships to the extent it can be done is prohibitively expensive. The good news is this would start in 2028 and phase in over 22 (!) years, which is an actually reasonable time frame for trying to do this. There’s still a good chance this would simply kill America’s ability to export LNG, hurting our economy and worsening the climate. Again, if you want to use non-Chinese-built ships, that is something we can work around.

Ryan Peterson asks how to fix the fact that without the Jones Act he fears America would build zero ships, as opposed to currently building almost zero ships. Scott Lincicome suggests starting here, but it mostly doesn’t address the question. The bottom line is that American shipyards are not competitive, and are up against highly subsidized competition. If we feel the need for American shipyards to build our ships, we are going to have to subsidize that a lot plus impose export discipline.

Or we can choose not to spend enough to actually fix this, or simply accept that comparative advantage is a thing and it’s fine to get our ships from places like Japan, and redirect our shipyards to doing repairs on the newly vastly greater number of passing ships and on building Navy ships to ensure what is left is supported.

Someone clearly is neither culturally rationalist nor culturally Jewish.

Robin Hanson (I don’t agree): “Rituals” are habits and patterns of behavior where we are aware of not fully understanding why we should do them the way we do. A mark of modernity was the aspiration to end ritual by either understanding them or not doing them.

We of course still do lots of behavior patterns that we do not fully understand. Awareness of this fact varies though.

Yes we don’t understand this modern habit fully, making it a ritual.

In My Culture, the profoundest act of worship is to try and understand.

Ritual is not about not understanding, at most it is about not needing to understand at first in order to start, and about preserving something important without having to as robustly preserve understanding of the reasons.

Ritual is about Doing the Thing because it is The Thing You Do. That in no way precludes you understanding why you are doing it.

Indeed, one of the most important Jewish rituals is always asking ‘why do we do this thing, ritual or otherwise?’ This is most explicit in the Seder, where we ask the four questions and we answer them, but in a general sense if you don’t know why you’re doing a Jewish thing and don’t ask why, you are doing it wrong.

This is good. The rationalists follow the same principle. The difference is that rather than carrying over many rituals and traditions for thousands of years, we mostly design them anew for the modern world.

But you can’t do that properly, or choose the right rituals for you, and you certainly can’t wisely choose to stop doing rituals you’re already doing, unless you understand what they are for. Which is a failure mode that is happening a lot, often justified by the invocation of a now-sacred moral principle that must stand above all, even if the all includes key load bearing parts of civilization.

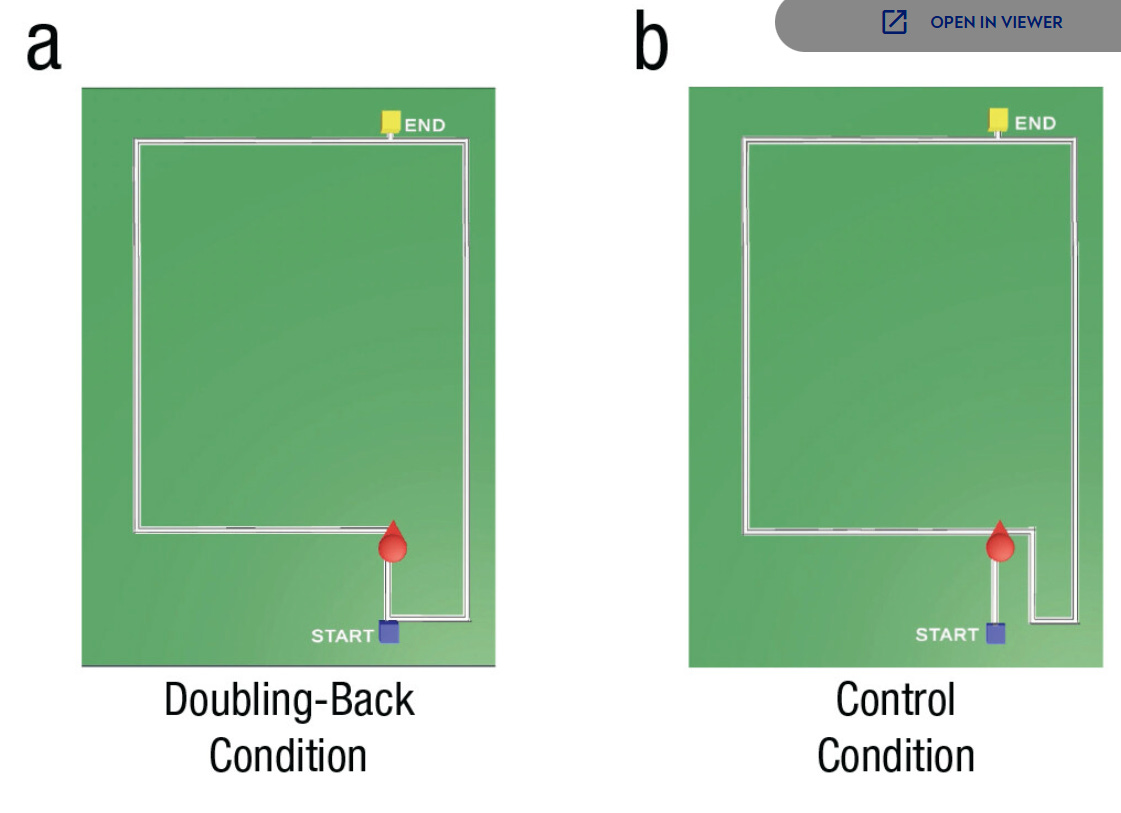

Introducing the all-new Doubling-Back Aversion, the concept that we are reluctant to go backwards, on top of the distinct Sunk Cost Fallacy. I can see it, but I am suspicious, especially of their example of having flown SFO→LAX intending to go on to JFK, and then being more willing to go LAX→DEN→JFK than LAX→SFO→JFK even if the time saved is the same, because you started in SFO. I mean, I can see why it’s a little frustrating, but I suspect the bigger effect here is just that DEN is clearly ‘on the way’ to JFK, and SFO isn’t, and there’s a clear bias against ‘going backwards.’ They do try to cover this, such as here:

But I still don’t see a strong case here for this being a distinct new bias, as opposed to being the sum of existing known issues.

The case by Dr. Todd Kashdan for seeking out ‘48% opposites’ as friends and romantic partners. You want people who think different, he says, so sparks can fly and new ideas can form and fun can be had, not some boring static bubble of sameness. But then he also says to seek ‘slightly different’ people who will make you sweat, which seems very different to me. As in, you want 10%-20% opposites, maybe 30%, but not 48%, probably on the higher end for friends and lower end for romantic partners, and if you’re a man dating women or vice versa that 10%-20% is almost certainly covered regardless.

There are, in theory, exceptions. I do remember once back in the day finding a 99% match on OKCupid (those were the days!), a woman who said she only rarely and slowly ever responded to anyone but whose profile was like a bizarro world female version of me. In my opening email I told her as much, asking her to respond the way she’d respond to herself. I’ll always wonder what that would have been like if we’d ever met in person – would it have been ‘too good’ a match? She did eventually write back months later as per a notification I got, but by then I was with my wife, so I didn’t reply.

Patrick McKenzie is one of many to confirm that there are lots of things about the world that are not so hard to find out or become an expert in, but where no one has chosen to do the relevant work. If there is a particular policy area or other topic where you put your focus, it’s often very practical to become the World’s Leading Expert and even be the person who gets consulted, and for there to be big wins available to be found, simply because no one else is seriously trying or looking. Getting people’s attention? That part is harder.

Kelsey Piper: This is related to one of the most important realizations of my adult life, which is that there is just so much in the modern world that no one is doing; reasonably often if you can’t find the answer to a question it just hasn’t been answered.

If you are smart, competent, a fast learner and willing to really throw yourself into something, you can answer a question to which our civilization does not have an answer with weeks to months of work. You can become an expert in months to years.

There is not an efficient market in ideas; it’s not even close. There are tons and tons of important lines of thought and work that no one is exploring, places where it’d be valuable to have an expert and there simply isn’t one.

Patrick McKenzie: Also one of the most important and terrifying lessons of my adult life.

Mine too.

Michael Nielsen: This is both true *and* can be hard to recognize. A friend once observed that an organization had been important for his formative growth, but it was important to move away, because it was filled with people who didn’t realize how derivative their work was; they thought they were pushing frontiers, but weren’t

One benefit of a good PhD supervisor is that they’ll teach you a lot about how to figure out when you’re on that frontier

And yes, by default you get to improve some small corner of the world, but that’s already pretty good, and occasionally you strike gold.

Zy (QTing Kelsey Piper): There’s so much diminishing returns to this stuff it’s not even funny. 400 years ago you could do this and discover Neptune or cellular life

Today you can do it and figure out a condition wherein SSRIs cause 3% less weight gain or an antenna with 5% better fidelity or something

Marko Jukic: Guy 400 years ago: “There’s so much diminishing returns to this stuff it’s not even funny. 400 years ago you could do this and discover Occam’s Razor or the Golden Rule. Today the best you can do is prove that actually 4% more angels can dance on the head of a pin.”

Autumn: 7 years ago a fairly small team in san francisco figured out how to make machines think.

Alternatively, even if there are diminishing returns, so what? Even the diminished returns, even excluding the long tail of big successes, are still very, very good.

Apologies with longer words are perceived as more genuine. I think this perception is correct. The choice to bother using longer words is a costly signal, which is the point of apologizing in the first place. Even if you’re ‘faking it’ it still kind of counts.

Endorsed:

Cate Hall: Amazing how big the quality of life improvements are downstream of “let me take this off future me’s plate.”

It’s not just shifting work up in time — it’s saving you all the mental friction b/w now & when you do it. Total psychic cost is the integral of cognitive load over time.

Sam Martin: conversely, “I’ll deal with this later” is like swiping a high-interest cognitive load credit card (said the man whose CLCC is constantly maxed out)

Thus there is a huge distinction between ‘things you can deal with later without having to otherwise think about it’ and other things. If you can organize things such that you’ll be able to deal with something later in a way that lets you not otherwise think about it, that’s much better. Whereas if that’s not possible, my lord, do it now.

If you can reasonably do it now, do it now anyway. Time saved in the future is typically worth more than time now, because this gives you slack. When you need time, sometimes you suddenly really desperately need time.
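One rough way to write down the ‘integral of cognitive load’ framing, as an illustrative sketch rather than anything Cate Hall spelled out: let $W$ be the effort of the task itself, $t_0$ be now, $t_1$ be when you actually do it, and $c(t)$ be the (hypothetical) background load the pending task imposes on you at time $t$.

$$
\text{Total cost} = W + \int_{t_0}^{t_1} c(t)\,dt
$$

Doing it now sets $t_1 = t_0$, so the integral vanishes. Deferring in a way that lets you not think about it means $c(t) \approx 0$, so the integral is also close to zero. The expensive case is deferring while $c(t)$ stays positive the whole time.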

How to make $100k betting on the next Pope, from someone who did so.

I did not wager because I try not to do that anymore and because it’s specifically a mortal sin to bet on a Papal election and I actually respect the hell out of that, but I also thought that the frontrunners almost had to be rich given the history of Conclaves and how diverse the Cardinals are, and the odds seemed to be favoring Italians too much. I wouldn’t have picked out Prevost without doing the research.

I also endorse not doubling down after the white smoke, if anything the odds seemed more reasonable at that point rather than less. Peter Wildeford similarly made money betting purely against Parolin, the clear low-effort move.

The past sucked in so many ways. The quality of news and info was one of them.

Roon: If you read old analytical news articles, im talking even just 30 years old, most don’t even stand to muster against the best thread you read on twitter on any given day. The actual longform analysis pieces in most newspapers are also much better.

we’ve done a great amount of gain of function research on Content.

Roon then tries to walk it back a bit, but I disagree with the walking back. The attention to detail is better now, too. Or rather, we used to pay more attention to detail, but we still get the details much more right today, because it’s just way way easier to check details. It used to be they’d get them wrong and no one would know.

Here’s a much bigger and more well known way the past sucked.

Hunter Ash: People who are desperate to retvrn to the past can’t understand how nightmarish the past was. When you tell them, they don’t believe it.

Tyler Cowen asks how very smart people meet each other. Dare I say ‘at Lighthaven’? My actual answer is that you meet very smart people by going to and participating in the things and spaces smart people are drawn to or that select for smart people. That can include a job, and frequently does.

Also, you meet them by noticing particular very smart people and then reaching out to them, they’re mostly happy to hear from you if you bring interestingness.

Will Bachman: I’m the host of a podcast, The 92 Report, which has the goal of interviewing every member of the Harvard-Radcliffe Class of 1992. Published 130 episodes so far. (~1,500 left to go)

Based on this sample, most friendships start through some extracurricular activity, which provides the opportunity to work together over a sustained period, longer than one course. Also people care about it more than any particular class.

At the Harvard Crimson for example on a typical day in 1990 you’d find in the building Susan B Glasser (New Yorker), Josh Gerstein (Politico), Michael Grunwald (Time, Politico), Julian E Barnes, Ira Stoll, Sewell Chan, Jonathan Cohn, and a dozen other individuals whose bylines are now well known.

Many current non-profit leaders met through their work at Philips Brooks House.

Many top TV writers met at the Harvard Lampoon.

Many Hollywood names met through theatre productions.

Strong lifelong friendships formed in singing groups.

Asking Harvard graduates how they met people is quite the biased sample. ‘Go to Harvard’ is indeed one of the best ways to meet smart or destined-to-be-successful people. That’s the best reason to go to Harvard. Of course they met each other in Harvard-related activities a lot. But this is not an actionable plan, although you can and should attempt to do lesser versions of this. Go where the smart people are, do the things they are doing, and also straight up introduce yourself.

Here’s a cool idea, the key is to ignore the statement when it’s wrong:

Bryan Johnson: when this happens, my team and I now say “plank” and the person speaking immediately stops. Everyone is now much happier.

Gretchen Lynn: This is funny, because every time a person with ADHD interrupts/responds too quickly to me because they think they already understood my sentence, they end up being wrong about what I was saying or missing important context. I see this meme all the time like it’s a superpower, but…be aware you may be driving the people in your life insane 😂

Gretchen is obviously mistaken. Whether or not one has ADHD, very often it is very clear where a sentence (or paragraph, or entire speech) is going well before it is finished. Similarly, often there are scenes in movies or shows where you can safely skip large chunks of them, confident you missed nothing.

That can be a Skill Issue, but often it is not. It is often important that the full version of a statement, scene or piece of writing exists – some people might need it, you’re not putting that work on the other person, and also it’s saying you have thought this through and have brought the necessary receipts. But that doesn’t mean, in this case, you actually have to bother with it.

Then there are situations where there is an ‘obvious’ version of the statement, but that’s not actually what someone was going for.

So when you say ‘plank’ here, what you’re saying is ‘there is an obvious-to-me version of where you are going with this, I get it, if that’s what you are saying you can stop, and if it’s more than that you can skip ahead.’

But, if that’s wrong, or you’re unsure it’s right? Carry on, or give me the diff, or give me the quick version. And this in turn conveys the information that you think the ‘plank’ call was premature.

Markets in everything!

Allie: I’m not usually the type to get jealous over other people’s weddings

But I saw a girl on reels say she incentivized people to RSVP by making the order in which people RSVP their order to get up and get dinner and I am being driven to insanity by how genius that is.

No walking it back, this is The Way.

Why do posts with links get limited on Twitter?

Predatory myopic optimization for ‘user-seconds on site,’ Musk explains.

Elon Musk: To be clear, there is no explicit rule limiting the reach of links in posts. The algorithm tries (not always successfully) to maximize user-seconds on X, so a link that causes people to cut short their time here will naturally get less exposure.

xlr8harder: i’m old enough to remember when he used to use the word “unregretted” before “user-seconds”

yes, people, i know unregretted is subjective and hard to measure. the point is it was aspirational and provided some countervailing force against the inexorable tug toward pure engagement optimization.

“whelp. turns out it was hard!” is not a good reason to abandon it.

caden: MLE who used to work on the X algo told me Elon was far more explicit in maximizing user-seconds than previous management The much-maligned hall monitors pre-Elon cared more about the “unregretted” caveat.

Danielle Fong: deleting “unregretted” in “unregretted user seconds” rhymes with deleting “don’t” in “don’t be evil.”

I am also old enough to remember that. Oh well. It’s hard to measure ‘unregretted.’

Even unregretted, of course, would still not understand what is at stake here. You want to provide value to the user, and this is what gets them to want to use your service, to come back, and builds up a vibrant internet with Twitter at its center. Deprioritizing links is a hostile act, quite similarly destructive to a massive tariff, destroying the ability to trade.

It is sad that major corporations consistently prove unable to understand this.

Elon Musk has also systematically crippled the reach and views of Twitter accounts that piss him off, and by ‘piss him off’ we usually mean disagree with him but also he has his absurd beef with Substack.

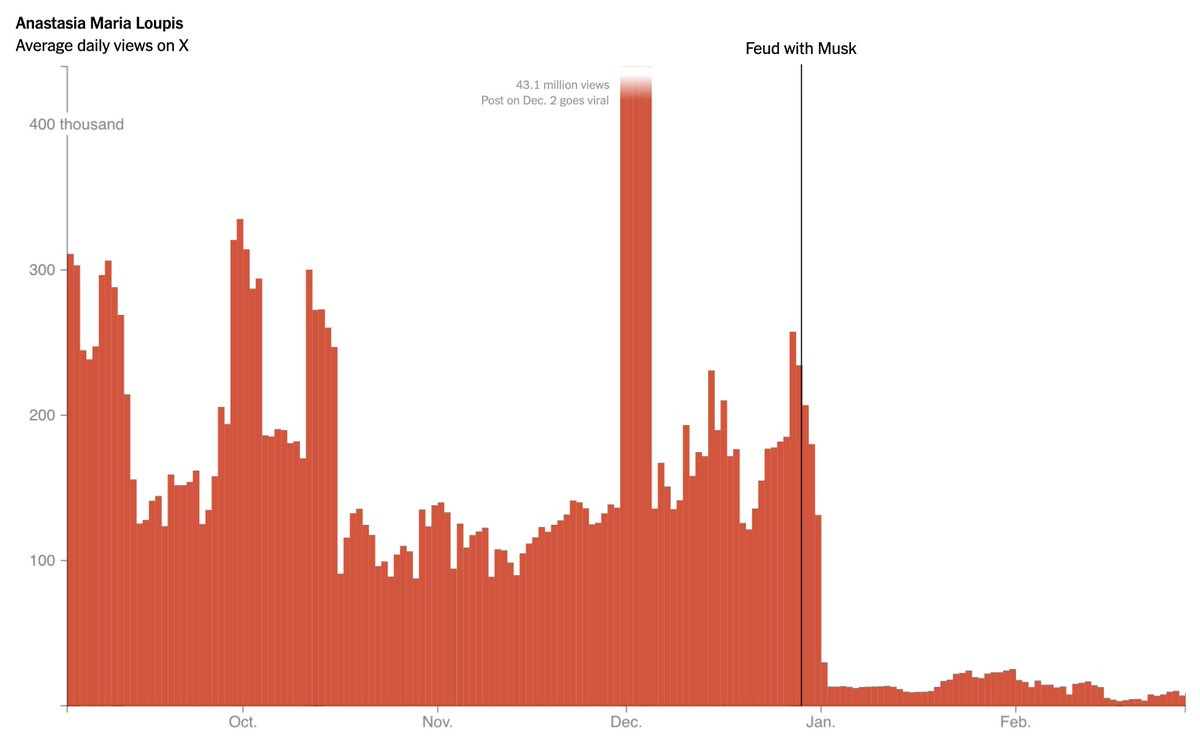

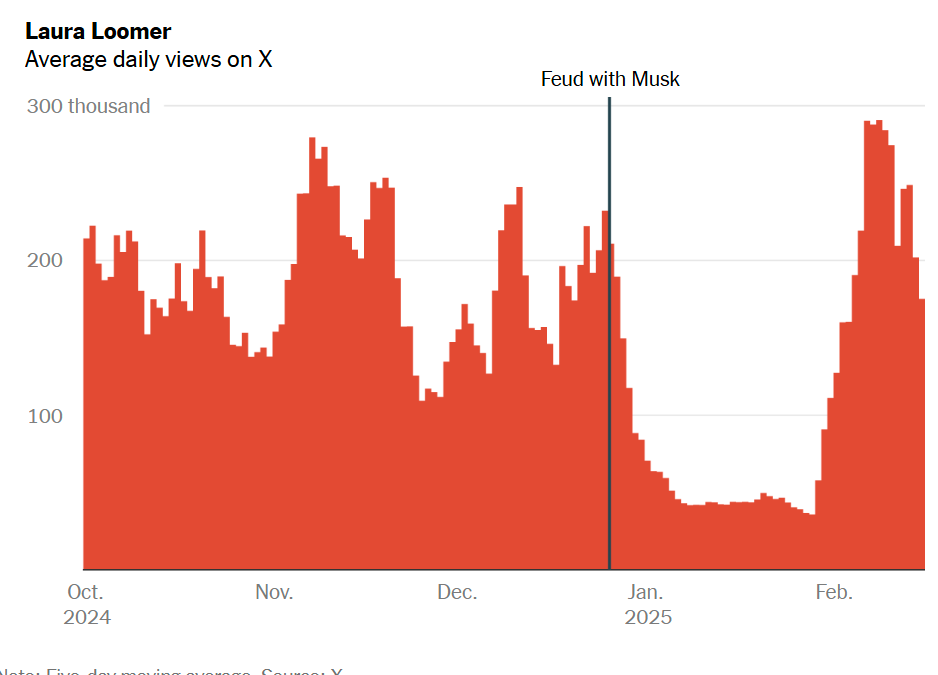

Stuart Thompson (NYT): The New York Times found three users on X who feuded with Mr. Musk in December only to see their reach on the social platform practically vanish overnight.

…

Mr. Musk has offered several clues to what happened, writing on X amid the feud that if powerful accounts blocked or muted others, their reach would be sharply limited. (Mr. Musk is the most popular user on X with more than 219 million followers, so his actions to block or mute users could hold significant sway.)

Timothy Lee: This is pretty bad.

At other times It Gets Better, this is Laura Loomer, who explicitly lost her monetization over this and then got it back at the end of the feud:

There’s also a third user listed, Owen Shroyer, who did not recover.

One could say that all three of these are far-right influencers, and this seems unlikely to be a coincidence. It’s still not okay to put one’s thumb on the scale like this, even if it doesn’t carry over to others, but it does change the context and practical implications a lot. He who lives by also dies by, and all that.

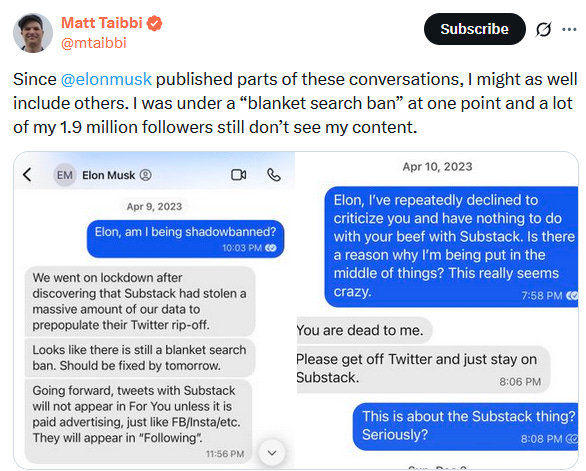

Tracing Woods: see also: Taibbi, Matt.

As a general rule, even though technically there Ain’t No Rule, it is not okay and a breach of decorum to ‘bring the receipts’ from text conversations even without an explicit privacy agreement. And most importantly, remember that if you do it to them then it’s open season for them to also do it to you.

Matt Taibbi remains very clearly shadowbanned up through April 2025. If you go to his Twitter page and look at the views on each post, they are flattened out the way Substack view counts are, and are largely uncorrelated with other engagement measures, which indicates they are coming from the Following tab and not from the algorithmic feed. No social media algorithm works this way.

A potential counterargument is that Musk feuds rather often, there are a lot of other claims of similar impacts, and NYT only found these three definitive examples. But three times by default should be considered enemy action, and the examples are rather stark.

The question is, in what other ways is Musk messing with the algorithm?

Here’s a post that Elon Musk retweeted, that seems to have gotten far more views than the algorithm could plausibly have given it on its own, even with that retweet.

Geoffrey Hinton: I like OpenAI’s mission of ‘ensure that artificial general intelligence benefits all of humanity”, and I’d like to stop them from completely gutting it. I’ve signed on to a new letter to @AGRobBonta & @DE_DOJ asking them to halt the restructuring.

AGI is the most important and potentially dangerous technology of our time. OpenAI was right that this technology merits strong structures and incentives to ensure it is developed safely, and is wrong now in attempting to change these structures and incentives. We’re urging the AGs to protect the public and stop this.


Hasan Can: I was serious when I said Elon Musk will keep messing with OpenAI as long as he holds power in USA. Geoffrey’s [first] tweet hit a full 31 million views. Getting that level of view with just 6k likes isn’t typically possible; I think Elon himself pushed that post.

Putting together everything that has happened, what should we now make of Elon Musk’s decision to fire 80% of Twitter employees without replacement?

Here is a debate.

Shin Megami Boson: the notion of a “fake email job” is structurally the same as a belief in communism. the communist looks at a system far more complex than he can understand and decides the parts he doesn’t understand must have no real purpose & are instead due to human moral failing of some kind.

Marko Jukic: Would you have told that to Elon Musk before he fired 80% of the people working at Twitter with no negative effect?

Do you think Twitter is the only institution in our society where 80% of people could be fired? What do you think those people are doing besides shuffling emails?

Alexander Doria: Yes, this. He mostly removed salespeople and marketing teams that were the core commercial activity of old Twitter.

Marko Jukic (who somehow doesn’t follow Gwern): You are completely delusional if you think this and so is Gwern, though I can’t see his reply.

Gwern: Yes, and I would have been right. Twitter revenue and users crashed into the floor, and after years of his benevolent guidance, they weren’t even breakeven before the debt interest – and he just bailed out Twitter using Xai, eating a loss of something like $30b to hide it all.

Alexander Doria: If I remember correctly, main ad campaigns stopped primarily as their usual commercial contact was not there anymore. And Musk strategy on this front was totally unclear and unable to reassure.

Marko Jukic: Right, please ignore the goons celebrating their victory and waving around a list of scalps and future targets. Pay no mind to that. This was all just a simple brain fart, where Elon Musk just *forgot* how to accept payments for ads, and advertisers forgot how to make them! Duh!

Quite an explanation. “My single best example of how 80% of employees can be cut is Twitter.” “Twitter was one of the biggest disasters ever.” “Ah yes, well, of course, all those goons and scalps. Naturally it failed. What, are you dense? Anyway, 80% of employees are useless.”

There’s no question Twitter has, on a technical and functional level, held up far better than median expectations, although it sure seems like having more productive employees to work on things like the bot problems and Twitter search being a disaster would have been a great idea. And a lot of what Musk did, for good and bad, was because he said so not because of a lack of personnel – if you put me in charge of Twitter I would be able to improve it a lot even if I wasn’t allowed to add headcount.

There’s also no question that Twitter’s revenue collapsed, and that xAI ultimately more or less bailed it out. One can argue that the advertisers left for reasons other than the failures of the marketing department (as in, failing to have a marketing department) and certainly there were other factors but I find it rather suspicious to think that gutting the marketing department without replacement didn’t hurt the marketing efforts quite a bit. I mean, if your boss is out there alienating all the advertisers whose job do you think it is to convince them to stop that and come back? Yes, it’s possible the old employees were terrible, but then hire new ones.

In some sense wow, in another sense there are no surprises here and all these TikTok documents are really saying is they have a highly addictive product via the TikTok algorithm, and it comes with all the downsides of social media platforms, and they’re not that excited to do much about those downsides.

On the other hand, these quotes are doozies. Some people were very much not following the ‘don’t write down what you don’t want printed in the New York Times’ rule.

Neil O’Brien: WOW: @JonHaidt got info from inside TikTok [via Attorney Generals] admitting how they target kids: “The product in itself has baked into it compulsive use… younger users… are particularly sensitive to reinforcement in the form of social reward and have minimal ability to self-regulate effectively”

Jon Haidt and Zack Rausch: We organize the evidence into five clusters of harms:

1. Addictive, compulsive, and problematic use

2. Depression, anxiety, body dysmorphia, self-harm, and suicide

3. Porn, violence, and drugs

4. Sextortion, CSAM, and sexual exploitation

5. TikTok knows about underage use and takes little action

…

As one internal report put it:

“Compulsive usage correlates with a slew of negative mental health effects like loss of analytical skills, memory formation, contextual thinking, conversational depth, empathy, and increased anxiety,” in addition to “interfer[ing] with essential personal responsibilities like sufficient sleep, work/school responsibilities, and connecting with loved ones.”

Although these harms are known, the company often chooses not to act. For example, one TikTok employee explained,

“[w]hen we make changes, we make sure core metrics aren’t affected.” This is because “[l]eaders don’t buy into problems” with unhealthy and compulsive usage, and work to address it is “not a priority for any other team.”

…

“The reason kids watch TikTok is because the algo[rithm] is really good. . . . But I think we need to be cognizant of what it might mean for other opportunities. And when I say other opportunities, I literally mean sleep, and eating, and moving around the room, and looking at somebody in the eyes.”

…

“Tiktok is particularly popular with younger users who are particularly sensitive to reinforcement in the form of social reward and have minimal ability to self-regulate effectively.”

…

As Defendants have explained, TikTok’s success “can largely be attributed to strong . . . personalization and automation, which limits user agency” and a “product experience utiliz[ing] many coercive design tactics,” including “numerous features”—like “[i]nfinite scroll, auto-play, constant notifications,” and “the ‘slot machine’ effect”—that “can be considered manipulative.”

Again, nothing there that we didn’t already know.

Similarly, for harm #2, this sounds exactly like various experiments done with YouTube, and also I don’t really know what you were expecting:

In one experiment, Defendants’ employees created test accounts and observed their descent into negative filter bubbles. One employee wrote, “After following several ‘painhub’ and ‘sadnotes’ accounts, it took me 20 mins to drop into ‘negative’ filter bubble. The intensive density of negative content makes me lower down mood and increase my sadness feelings though I am in a high spirit in my recent life.” Another employee observed, “there are a lot of videos mentioning suicide,” including one asking, “If you could kill yourself without hurting anybody would you?”

The evidence on harms #3 and #4 seemed unremarkable and less bad than I expected.

And it is such a government thing to quote things like this, for #5:

TikTok knows this is particularly true for children, admitting internally: (1) “Minors are more curious and prone to ignore warnings” and (2) “Without meaningful age verification methods, minors would typically just lie about their age.”

…

To start, TikTok has no real age verification system for users. Until 2019, Defendants did not even ask TikTok users for their age when they registered for accounts. When asked why they did not do so, despite the obvious fact that “a lot of the users, especially top users, are under 13,” founder Zhu explained that, “those kids will anyway say they are over 13.”

…

Over the years, other of Defendants’ employees have voiced their frustration that “we don’t want to [make changes] to the For You feed because it’s going to decrease engagement,” even if “it could actually help people with screen time management.”

The post ends with a reminder of the study where students on average would ask $59 for TikTok and $47 for Instagram in exchange for deleting their accounts, but less than zero if everyone did it at once.

Once again, let’s run this experiment. Offer $100 to every student at some college or high school, in exchange for deleting their accounts. See what happens.

Tyler Cowen links to another study on suspending social media use, which was done in 2020 and came out in April 2025 – seriously, academia, that’s an eternity, we gotta do something about this, just tweet the results out or something. In any case, what they found was that if users were convinced to deactivate Facebook for six weeks before the election, they report a 0.06 standard deviation improvement in happiness, depression and anxiety, and it was 0.041 SDs for Instagram.

Obviously that is a small enough effect to mostly ignore. But once again, we are not comparing to the ‘control condition’ of no social media. We are comparing to the control condition of everyone else being on social media without you, and you previously having invested in social media and now abandoning it, while expecting to come back and being worried about what you aren’t seeing, and also being free to transfer to other platforms.

Again, note the above study – you’d have to pay people to get off TikTok and Instagram, but if you could get everyone else off as well, they’d pay you.

Tyler Cowen: What is wrong with the simple model that Facebook and Instagram allow you to achieve some very practical objectives, such as staying in touch with friends or expressing your opinions, at the cost of only a very modest annoyance (which to be clear existed in earlier modes of communication as well)?

What is wrong with this model is that using Facebook and Instagram also imposes costs on others for not using them, which is leading to a bad equilibrium for many. And also that these are predatory systems engineered to addict users, so contra Zuckerberg’s arguments to Thompson and Patel in recent interviews we should not assume that the users ‘know best’ and are using internet services only when they are better off for it.

Tom Meadowcroft: I regard social media as similar to alcohol.

1. It is not something that we’ve evolved to deal with in quantity.

2. It is mildly harmful for most people.

3. It is deeply harmful for a significant minority for whom it is addictive.

4. Many people enjoy it because it seems to ease social engagement.

5. It triggers receptors in our brains that make us desire it.

6. There are better ways to get those pleasure spikes, but they are harder and rarer IRL.

7. If we were all better people, we wouldn’t need or desire either, but we are who we are.

I use alcohol regularly and social media rarely.

I think social media has a stronger case than alcohol. It does provide real and important benefits when used wisely in a way that you can’t easily substitute for otherwise, whereas I’m not convinced alcohol does this. However, our current versions of social media are not great for most people.

So if the sign of impact for temporary deactivation is positive at all, that’s a sign that things are rather not good, although magnitude remains hard to measure. I would agree that (unlike in the case of likely future highly capable AIs) we do not ‘see a compelling case for apocalyptic interpretations’ as Tyler puts it, but that shouldn’t be the bar for realizing you have a problem and doing something about it.

Court rules against Apple, says it wilfully defied the court’s previous injunction and has to stop charging commissions on purchases outside its software marketplace and open up the App Store to third-party payment options.

Stripe charges 2.9% versus Apple’s 15%-30%. Apple will doubtless keep fighting every way it can, but the end of the line is now likely to come at some point.
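To make the size of that gap concrete, here is a rough back-of-the-envelope sketch using only the percentages quoted above. The $10 purchase price is a hypothetical, and the sketch ignores any fixed per-transaction fees or any commission Apple might still manage to collect on outside purchases.

```python
# Percentages are the ones quoted in the text; the price is hypothetical.
price = 10.00
stripe_rate = 0.029
apple_low_rate, apple_high_rate = 0.15, 0.30

stripe_fee = price * stripe_rate
apple_low_fee = price * apple_low_rate
apple_high_fee = price * apple_high_rate

print(f"Stripe fee on ${price:.2f}: ${stripe_fee:.2f}")
print(f"Apple fee on ${price:.2f}: ${apple_low_fee:.2f} to ${apple_high_fee:.2f}")

# How many times larger Apple's cut is than the payment processor's.
low_multiple = apple_low_rate / stripe_rate
high_multiple = apple_high_rate / stripe_rate
print(f"Apple's cut is roughly {low_multiple:.1f}x to {high_multiple:.1f}x as large")
```

Under these simplifying assumptions, Apple's commission works out to roughly five to ten times what the processor takes.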

Market reaction was remarkably muted, on the order of a few percent, to what is a central threat to Apple’s entire business model, unless you think this was already mostly priced in or gets reversed often on appeal.

Recent court documents seem to confirm the claim that Google actively wanted their search results to be worse so they could serve more ads? This is so obviously insane a thing to do. Yes, short term it might benefit you if it happens you can get away with it, but come on.

A theory about A Minecraft Movie being secretly much more interesting than it looks.

A funny thing that happens these days is running into holiday episodes from an old TV show, rather than suddenly having all the Halloween, Thanksgiving or Christmas episodes happening at the right times. There’s no good fix for this given continuity issues, but maybe AI could fix that soon?

Gallabytes’s stroll down memory lane there reminds me that the actual biggest changes in TV programs are that you previously had to go with whatever happened to be on or that you’d taped – which was a huge pain and disaster and people structured their day around it, this was a huge deal – and that even ignoring that the old shows really did suck. Man, with notably rare exceptions they sucked, on every level, until at least the late 90s. You can defend old movies but you cannot in good faith defend most older television.

Fun fact:

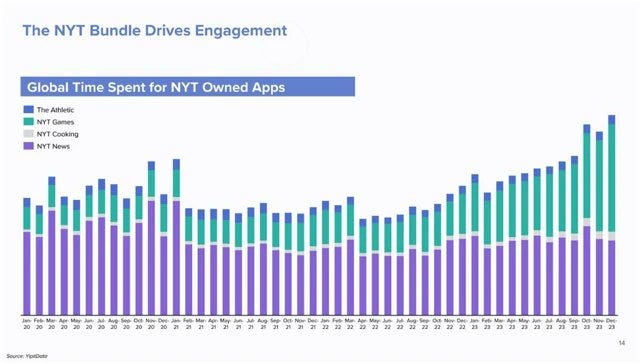

Samuel Hammond: Over half the NYT’s subscriber time on site is now just for the games.

That’s about half a billion in subscriber revenue driven by a crossword and a handful of basic puzzle games.

It is a stunning fact, but I don’t think that’s quite what this means. Time spent on site is very different from value extracted. The ability to read news when it matters is a ton more valuable per minute than the games, even if you spend more time on the games. It’s not obvious what is driving subscriptions.

Further praise for Thunderbolts*, which I rated 4.5/5 stars and for now is my top movie of 2025 (although that probably won’t hold, in 2024 it would have been ~4th), from the perspective of someone treating it purely as a Marvel movie in a fallen era.

Zac Hill: Okay Thunderbolts is in the Paddington 2 tier of “movies that have no business being nearly as good as they somehow are”. Like this feels like the first definitive take on whatever weird era we find ourselves inhabiting now. Also the first great Marvel film in years.

What more is there to want: overt grappling with oblivion-inducing despair stemming from how to construct meaning in a world devoid of load-bearing institutions? Violent Night references? Selina Meyer? Florence Pugh having tons of fun???

Okay I can’t/wont shut up about this movie (Thunderbolts). For every reason New Cap America sucked and was both bad and forgettable, this movie was great – in a way that precisely mirrors the turning of the previous era into this strange new world in which we’re swimming.

Even the credits sequence is just like the graveyarding of every institution whose legitimacy has been hemorrhaged, executed with a subtlety and craftsmanship that is invigorating. But WITHOUT accepting, and giving into, cynicism!

Indeed, it is hard for words to describe the amount of joy I got from the credits sequence, that announced very clearly We Hear You, We Get It, and We Are So Back.

Gwern offers a guide to finding good podcast content, as opposed to the podcast that will get the most clicks. You either need to find Alpha from undiscovered voices, or Beta from getting a known voice ‘out of their book’ and producing new content rather than repeating talking points and canned statements. As a host you want to seek out guests where you can extract either Alpha or Beta, and as a listener or reader look for podcasts where you can do the same.

Alpha is relative to your previous discoveries. As NBC used to say, if you haven’t seen it, it’s new to you. If you haven’t ever heard (Gwern’s example) Mark Zuckerberg talk, his Lex Fridman interview will have Alpha to you despite Lex’s ‘sit back, lob softballs and let them talk’ strategy which lacks Beta.

Another way of putting that is, you only need to hear about any given person’s book (whether or not it involves a literal book, which it often does) once every cycle of talking points. You can get that one time from basically any podcast, and it’s fine. But you then wouldn’t want to do that again.

Gwern lists Mark Zuckerberg and Satya Nadella as tough nuts to crack, and indeed the interviews Dwarkesh did with them showed this, with Nadella being especially ‘well-coached,’ and someone too PR-savvy like MrBeast as a bad guest who won’t let you do anything interesting and might torpedo the whole thing.

My pick for toughest nut to crack is Tyler Cowen. No one has a larger, more expansive book, and most people interviewing him never seem to get him to start thinking. Plus, because he’s Tyler Cowen, he’s the one person Tyler Cowen won’t do the research for.

There are of course also other reasons to listen to or host podcasts.

Surge pricing comes to Waymo. You can no longer raise supply, but you can still ration supply and limit demand, so it is still the correct move. But how will people react? There is a lot of pearl clutching about how this hurts the poor or ‘creates losers,’ but may I suggest that if you can’t take the new prices you can call an Uber or Lyft without them being integrated into the same app? Or you can wait.

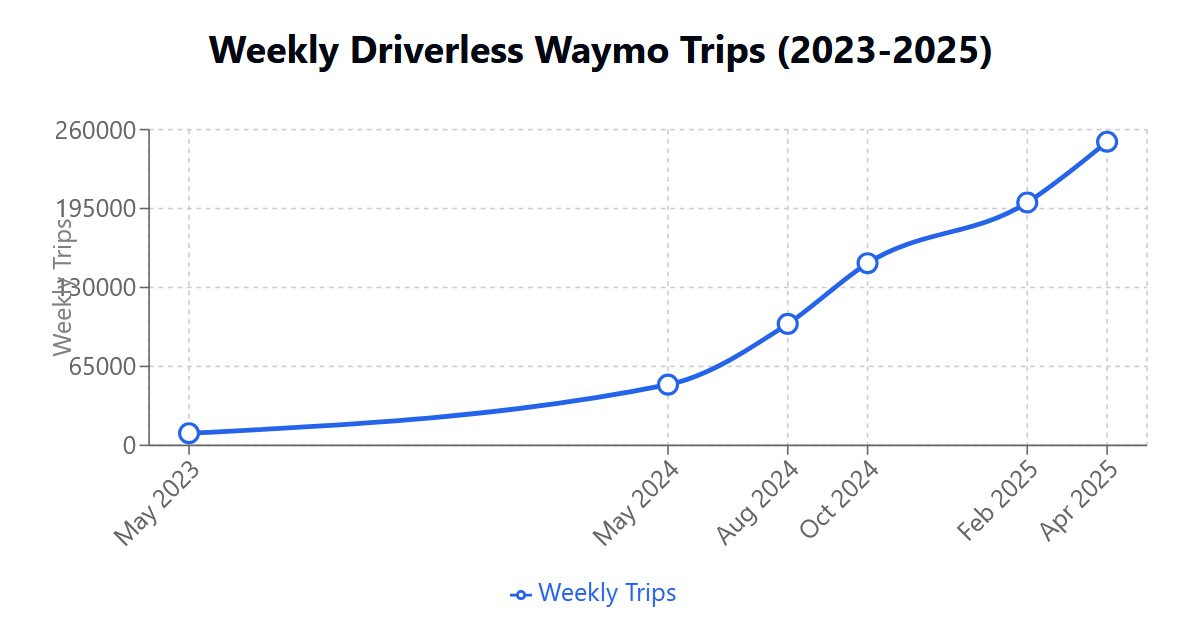

Waymo hits 250k rides per week in April 2025, two months after 200k.
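For a sense of what that pace implies, here is a quick sketch that turns the two data points above into a growth rate. It assumes smooth compounding between the two milestones, and the annualized figure is a naive extrapolation, not a forecast.

```python
# The two milestones from the text: 200k weekly rides, then 250k two months later.
rides_before = 200_000
rides_after = 250_000
months_elapsed = 2

growth_factor = rides_after / rides_before  # 1.25 over two months

# Assumes growth compounds evenly month to month.
monthly_growth = growth_factor ** (1 / months_elapsed) - 1

# Naive extrapolation: what happens if that pace held for a full year.
annualized_multiple = growth_factor ** (12 / months_elapsed)

print(f"Implied monthly growth: {monthly_growth:.1%}")
print(f"Multiple after a year at that pace: {annualized_multiple:.2f}x")
```

That is about 11.8% per month, or a bit under 4x per year if it were sustained, which is why the vehicle supply bottleneck matters so much.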

Waymo is partnering with Toyota for a new autonomous vehicle platform. Right now, Waymo faces multiple bottlenecks, but one key one is that it is tough to build and equip enough vehicles. Solving that problem would go a long way.

Waymo’s injury rate reductions imply that fully self-driving cars would reduce road deaths by 34,800 annually. It’s probably more than that, because most of the remaining crashes by Waymos are caused by human drivers.

Aurora begins commercial driverless trucking in Texas between Dallas and Houston.

Europa Universalis 5 is coming. If you thought EU4 was complex, this is going to be a lot more complex. It looks like it will be fascinating and a great experience for those who have that kind of time, but this is unlikely to include me. It is so complex they let you automate large portions of the game, with the problem that if you do that how will you then learn it?

They’re remaking the Legend of Heroes games, a classic Japanese RPG series a la Final Fantasy and Dragon Quest, starting with Trials In The Sky in September. Oh to have this kind of time.

They’re considering remaking Chrono Trigger. I agree with the post here that a remake is unnecessary. The game works great as it is.

Proposal for a grand collaboration to prove you cannot beat Super Mario Bros. in less than 17685 frames, the best human time remains 17703. This would be an example of proving things about real world systems, and we’ve already put a ton of effort into optimizing this. Peter is about 50% that there is indeed no way to do better than 17685.

If you know, you know:

Emmett Shear: This is pure genius and would be incredible for teaching about a certain kind of danger. Please please someone do this.

RedJ: i think sama is working on it?

Emmett Shear: LOL wrong game I don’t want them in the game of life.

College sports are allocating talent efficiently. You didn’t come here to play school.

And That’s Terrible?

John Arnold: College sports broken:

“Among the top eight quarterbacks in the Class of 2023, Texas’ Arch Manning is now the only one who hasn’t transferred from the school he signed with out of high school.” –@TheAthletic

I do think it is terrible. Every trade and every transfer makes sports more confusing and less enjoyable. The stories are worse. It is harder to root for players and teams. It makes it harder to work as a team or to invest in the future of players, both as athletes and as students. And it enshrines the top teams to always be the top teams. In the long run, I find it deeply corrosive.

I find it confusing that there is this much transferring going on. There are large costs to transferring for the player. You have an established campus life and friends. You have connections to the team and the coach and have established goodwill. There are increasing returns to staying in one place. So you would think that there would be strong incentives to stay put and work out a deal that benefits everyone.

The flip side is that there are a lot of teams out there, so the one you sign with is unlikely to be the best fit going forward, especially if you outperform expectations, which changes your value and also your priorities and needs.

I love college football, but they absolutely need to get the transferring under control. It’s gone way too far. My guess is the best way forward is to allow true professional contracts with teams that replace the current NIL system, which would allow for win-win deals that involve commitment or at least backloading compensation, and various other incentives to limit transfers.

I am not saying the NBA fixes the draft lottery, but… no wait I am saying the NBA fixes the draft lottery, given Dallas getting the first pick this year combined with previous incidents. I don’t know this for certain, but at this point, come on.

As Seth Burn puts it, there are ways to get provably random outcomes. The NBA keeps not using those methods. This keeps resulting in outcomes that are unlikely and suspiciously look like fixes. Three times is enemy action. This is more than three.
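One standard way to get a verifiable draw is a commit-reveal scheme, sketched below. To be clear, this is not the NBA's procedure and not necessarily what Seth Burn has in mind. The team names, lottery weights and function names are all hypothetical. The idea is that every participant publishes a hash of a secret seed before the draw, then reveals the seed, and the winner is computed from all the seeds together, so no single party controls the result as long as at least one seed was honestly random.

```python
import hashlib
import secrets


def commit(seed: bytes) -> str:
    """Commitment a participant publishes before anyone reveals a seed."""
    return hashlib.sha256(seed).hexdigest()


def draw(seeds: list[bytes], weights: dict[str, int]) -> str:
    """Deterministically pick a weighted winner from the revealed seeds."""
    # Seeds are fixed-length (32 bytes), so concatenation is unambiguous.
    combined = hashlib.sha256(b"".join(seeds)).digest()
    total = sum(weights.values())
    # A 256-bit value modulo a small total has negligible bias.
    ticket = int.from_bytes(combined, "big") % total
    for team, weight in sorted(weights.items()):
        if ticket < weight:
            return team
        ticket -= weight
    raise AssertionError("unreachable: ticket is always below total")


# Hypothetical lottery weights (number of 'balls' per team).
weights = {"Team A": 140, "Team B": 140, "Team C": 125, "Team D": 18}

# Step 1: each participant picks a secret seed and publishes its hash.
seeds = [secrets.token_bytes(32) for _ in range(3)]
commitments = [commit(seed) for seed in seeds]

# Step 2: seeds are revealed and anyone can check them against the hashes.
all_valid = all(commit(s) == c for s, c in zip(seeds, commitments))

# Step 3: anyone can recompute the winner from the revealed seeds.
winner = draw(seeds, weights)
print("Commitments valid:", all_valid)
print("First pick goes to:", winner)
```

Anyone holding the published commitments and revealed seeds can rerun the draw and get the same answer. A real deployment would also need rules for a participant who refuses to reveal.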

On the other hand, I do like that tanking for the first pick is being actively punished, even if it’s being done via blatant cheating. At some point everyone knows the league is choosing the outcome, so it isn’t cheating, and I’m kind of fine with ‘if we think you tanked without our permission you don’t get the first pick.’