A Chinese-born crypto tycoon—of all people—changed the way I think of space

“Are we the first generation of digital nomad in space?”

Chun Wang orbits the Earth inside the cupola of SpaceX’s Dragon spacecraft. Credit: Chun Wang via X

For a quarter-century, dating back to my time as a budding space enthusiast, I’ve watched with a keen eye each time people have ventured into space.

That’s 162 human spaceflight missions since the beginning of 2000, ranging from Space Shuttle flights to Russian Soyuz missions, Chinese astronauts’ first forays into orbit, and commercial expeditions on SpaceX’s Dragon capsule. Yes, I’m also counting privately funded suborbital hops launched by Blue Origin and Virgin Galactic.

Last week, Jeff Bezos’ Blue Origin captured headlines—though not purely positive—with the launch of six women, including pop star Katy Perry, to an altitude of 66 miles (106 kilometers). The capsule returned to the ground 10 minutes and 21 seconds later. It was the first all-female flight to space since Russian cosmonaut Valentina Tereshkova’s solo mission in 1963.

Many commentators criticized the flight as a tone-deaf stunt or a rich person’s flex. I won’t make any judgments, except to say two of the passengers aboard Blue Origin’s capsule—Aisha Bowe and Amanda Nguyen—have compelling stories worth telling.

Immerse yourself

Here’s another story worth sharing. Earlier this month, an international crew of four private astronauts took their own journey into space aboard a Dragon spacecraft owned and operated by Elon Musk’s SpaceX. Like Blue Origin’s all-female flight, this mission was largely bankrolled by a billionaire.

Actually, it was a couple of billionaires. Musk used his fortune to fund a large portion of the Dragon spacecraft’s development costs alongside a multibillion-dollar contribution from US taxpayers. Chun Wang, a Chinese-born cryptocurrency billionaire, paid SpaceX an undisclosed sum to fly one of SpaceX’s ships into orbit with three of his friends.

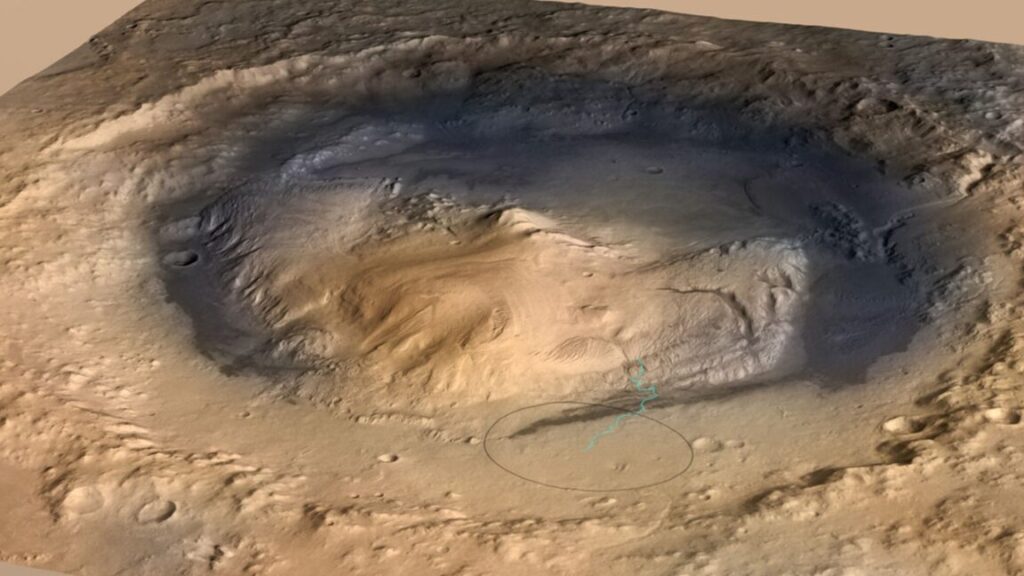

So far, this seems like another story about a rich guy going to space. This is indeed a major part of the story, but there’s more to it. Chun, now a citizen of Malta, named the mission Fram2 after the Norwegian exploration ship Fram used for polar expeditions at the turn of the 20th century. Following in the footsteps of Fram, which means “forward” in Norwegian, Chun asked SpaceX if he could launch into an orbit over Earth’s poles to gain a perspective on our planet no human eyes had seen before.

Joining Chun on the three-and-a-half-day Fram2 mission were Jannicke Mikkelsen, a Norwegian filmmaker and cinematographer who took the role of vehicle commander. Rabea Rogge, a robotics researcher from Germany, took the pilot’s seat and assisted Mikkelsen in monitoring the spacecraft’s condition in flight. Wang and Eric Philips, an Australian polar explorer and guide, flew as “mission specialists” on the mission.

Chun’s X account reads like a travelogue, with details of each jet-setting jaunt around the world. His propensity for sharing travel experiences extended into space, and I’m grateful for it.

The Florida peninsula, including Kennedy Space Center and Cape Canaveral, through the lens of Chun’s iPhone. Credit: Chun Wang via X

Usually, astronauts might share their reflections from space by writing posts on social media, or occasionally sharing pictures and video vignettes from the International Space Station (ISS). This, in itself, is a remarkable change from the way astronauts communicated with the public from space just 15 years ago.

Most of these social media posts involve astronauts showcasing an experiment they’re working on or executing a high-flying tutorial in physics. Often, these videos include acrobatic backflips or show the novelty of eating and drinking in microgravity. Some astronauts, like Don Pettit, who recently came home from the ISS, have a knack for gorgeous orbital photography.

Chun’s videos offer something different. They provide an unfiltered look into how four people live inside a spacecraft with an internal volume comparable to an SUV, and the awe of seeing something beautiful for the first time. His shares have an intimacy, authenticity, and most importantly, an immediacy I’ve never seen before in a video from space.

One of the videos Chun recorded and posted to X shows the Fram2 crew members inside Dragon the day after their launch. The astronauts seem to be enjoying themselves. Their LunchBot meal kits float nearby, and the capsule’s makeshift trash bin contains Huggies baby wipes and empty water bottles, giving the environment a vibe akin to a camping trip, except for the constant hum of air fans.

Later, Chun shared a video of the crew opening the hatch leading to Dragon’s cupola window, a plexiglass extension with panoramic views. Mikkelsen and Chun try to make sense of what they’re seeing.

“Oh, Novaya Zemlya, do you see it?” Mikkelsen asks. “Yeah. Yeah. It’s right here,” Chun replies. “Oh, damn. Oh, it is,” Mikkelsen says.

Chun then drops a bit of Cold War trivia. “The largest atomic bomb was tested here,” he says. “And all this ice. Further north, the Arctic Ocean. The North Pole.”

Flight Day 3 pic.twitter.com/vLlbAKIOvl

— Chun (@satofishi) April 3, 2025

On the third day of the mission, the Dragon spacecraft soared over Florida, heading south to north on its pole-to-pole loop around the Earth. “I can see our launch pad from here,” Mikkelsen says, pointing out NASA’s Kennedy Space Center several hundred miles away.

Flying over our launch site. pic.twitter.com/eHatUsOJ20

— Chun (@satofishi) April 3, 2025

Finally, Chun capped his voyage into space with a 30-second clip from his seat inside Dragon as the spacecraft fires thrusters for a deorbit burn. The capsule’s small rocket jets pulsed repeatedly to slow Dragon’s velocity enough to drop out of orbit and head for reentry and splashdown off the coast of California.

Lasers in LEO

It wasn’t only Chun’s proclivity for posting to social media that made this possible. It was also SpaceX’s own Starlink Internet network, which the Dragon spacecraft connected to with a “Plug and Plaser” terminal mounted in the capsule’s trunk. This device allowed Dragon and its crew to transmit and receive Internet signals through a laser link with Starlink satellites orbiting nearby.

Astronauts have shared videos similar to those from Fram2 in the past, but almost always after they are back on Earth, and often edited and packaged into a longer video. What’s unique about Chun’s videos is that he was able to immediately post his clips, some of which are quite long, to social media via the Starlink Internet network.

“With a Starlink laser terminal in the trunk, we can theoretically achieve speeds up to 100 or more gigabits per second,” said Jon Edwards, SpaceX’s vice president for Falcon launch vehicles, before the Fram2 mission’s launch. “For Fram2, we’re expecting around 1 gigabit per second.”

Compare this with the connectivity available to astronauts on the International Space Station, where crews have access to the Internet with uplink speeds of about 4 to 6 megabits per second and 500 kilobits to 1 megabit per second of downlink, according to Sandra Jones, a NASA spokesperson. The space station communications system provides about 1 megabit per second of additional throughput for email, an Internet telephone, and video conferencing. There’s another layer of capacity for transmitting scientific and telemetry data between the space station and Mission Control.

So, Starlink’s laser connection with the Dragon spacecraft offers roughly 200 to 2,000 times the throughput of the Internet connection available on the ISS. The space station sends and receives communication signals, including the Internet, through NASA’s fleet of Tracking and Data Relay Satellites.

The laser link is also cheaper to use. NASA’s TDRS relay stations are dedicated to providing communication support for the ISS and numerous other science missions, including the Hubble Space Telescope, while Dragon plugs into the commercial Starlink network serving millions of other users.

SpaceX tested the Plug and Plaser device for the first time in space last year on the Polaris Dawn mission, which was most notable for the first fully commercial spacewalk in history. The results of the test were “phenomenal,” said Kevin Coggins, NASA’s deputy associate administrator for Space Communications and Navigation.

“They have pushed a lot of data through in these tests to demonstrate their ability to do data rates just as high as TDRS, if not higher,” Coggins said in a recent presentation to a committee of the National Academies.

Artist’s illustration of a laser optical link between a Dragon spacecraft and a Starlink satellite. Credit: SpaceX

Edwards said SpaceX wants to make the laser communication capability available for future Dragon missions and commercial space stations that may replace the ISS. Meanwhile, NASA is phasing out the government-owned TDRS network. Coggins said NASA’s relay satellites in geosynchronous orbit will remain active through the remaining life of the International Space Station, and then will be retired.

“Many of these spacecraft are far beyond their intended service life,” Coggins said. “In fact, we’ve retired one recently. We’re getting ready to retire another one. In this period of time, we’re going to retire TDRSs pretty often, and we’re going to get down to just a couple left that will last us into the 2030s.

“We have to preserve capacity as the constellation gets smaller, and we have to manage risks,” Coggins said. “So, we made a decision on November 8, 2024, that no new users could come to TDRS. We took it out of the service catalog.”

NASA’s future satellites in Earth orbit will send their data to the ground through a commercial network like Starlink. The agency has agreements worth more than $278 million with five companies—SpaceX, Amazon, Viasat, SES, and Telesat—to demonstrate how they can replace and improve on the services currently provided by TDRS (pronounced “tee-dress”).

These companies are already operating or will soon deploy satellites that could provide radio or laser optical communication links with future space stations, science probes, and climate and weather monitoring satellites. “We’re not paying anyone to put up a constellation,” Coggins said.

After these five companies complete their demonstration phase, NASA will become a subscriber to some or all of their networks.

“Now, instead of a 30-year-old [TDRS] constellation and trying to replenish something that we had before, we’ve got all these new capabilities, all these new things that weren’t possible before, especially optical,” Coggins said. “That’s going to that’s going to mean so much with the volume and quality of data that you’re going to be able to bring down.”

Digital nomads

Chun and his crewmates didn’t use the Starlink connection to send down any prize-winning discoveries about the Universe, or data for a comprehensive global mapping survey. Instead, the Fram2 crew used the connection for video calls and text messages with their families through tablets and smartphones linked to a Wi-Fi router inside the Dragon spacecraft.

“Are we the first generation of digital nomad in space?” Chun asked his followers in one X post.

“It was not 100 percent available, but when it was, it was really fast,” Chun wrote of the Internet connection in an email to Ars. He told us he used an iPhone 16 Pro Max for his 4K videos. From some 200 miles (300 kilometers) up, the phone’s 48-megapixel camera, with a simulated optical zoom, brought out the finer textures of ice sheets, clouds, water, and land formations.

While the flight was fully automated, SpaceX trained the Fram2 crew how to live and work inside the Dragon spacecraft and take over manual control if necessary. None of Fram2 crew members had a background in spaceflight or in any part of the space industry before they started preparing for their mission. Notably, it was the first human spaceflight mission to low-Earth orbit without a trained airplane pilot onboard.

Chun Wang, far right, extends his arm to take an iPhone selfie moments after splashdown in the Pacific Ocean. Credit: SpaceX

Their nearly four days in orbit was largely a sightseeing expedition. Alongside Chun, Mikkelsen put her filmmaking expertise to use by shooting video from Dragon’s cupola. Before the flight, Mikkelsen said she wanted to create an immersive 3D account of her time in space. In some of Wang’s videos, Mikkelsen is seen working with a V-RAPTOR 8K VV camera from Red Digital Cinema, a device that sells for approximately $25,000, according to the manufacturer’s website.

The crew spent some of their time performing experiments, including the first X-ray of a human in space. Scientists gathered some useful data on the effects of radiation on humans in space because Fram2 flew in a polar orbit, where the astronauts were exposed to higher doses of ionizing radiation than a person might see on the International Space Station.

After they splashed down in the Pacific Ocean at the end of the mission, the Fram2 astronauts disembarked from the Dragon capsule without the assistance of SpaceX ground teams, which typically offer a helping hand for balance as crews readjust to gravity. This demonstrated how people might exit their spaceships on the Moon or Mars, where no one will be there to greet them.

Going into the flight, Chun wanted to see Antarctica and Svalbard, the Norwegian archipelago where he lives north of the Arctic Circle. In more than 400 human spaceflight missions from 1961 until this year, nobody ever flew in an orbit directly over the poles. Sophisticated satellites routinely fly over the polar regions to take high-resolution imagery and measure things like sea ice.

The Fram2 astronauts’ observations of the Arctic and Antarctic may not match what satellites can see, but their experience has some lasting catchet, standing alone among all who have flown to space before.

“People often refer to Earth as a blue marble planet, but from our point of view, it’s more of a frozen planet,” Chun told Ars.

A Chinese-born crypto tycoon—of all people—changed the way I think of space Read More »