Apple kicked Musi out of the App Store based on YouTube lie, lawsuit says

“Will Musi ever come back?”

Popular music app says YouTube never justified its App Store takedown request.

Musi, a free music-streaming app only available on iPhone, sued Apple last week, arguing that Apple breached Musi’s developer agreement by abruptly removing the app from its App Store for no good reason.

According to Musi, Apple decided to remove Musi from the App Store based on allegedly “unsubstantiated” claims from YouTube that Musi was infringing on YouTube’s intellectual property. The removal came, Musi alleged, based on a five-word complaint from YouTube that simply said Musi was “violating YouTube terms of service”—without ever explaining how. And YouTube also lied to Apple, Musi’s complaint said, by claiming that Musi neglected to respond to YouTube’s efforts to settle the dispute outside the App Store when Musi allegedly showed evidence that the opposite was true.

For years, Musi users have wondered if the service was legal, Wired reported in a May deep dive into the controversial app. Musi launched in 2016, providing a free, stripped-down, Spotify-like service by displaying YouTube and other publicly available content while running Musi’s own ads.

Musi’s curious ad model has led some users to question if artists were being paid for Musi streams. Reassuring 66 million users who downloaded the app before its removal from the App Store, Musi has long maintained that artists get paid for Musi streams and that the app is committed to complying with YouTube’s terms of service, Wired reported.

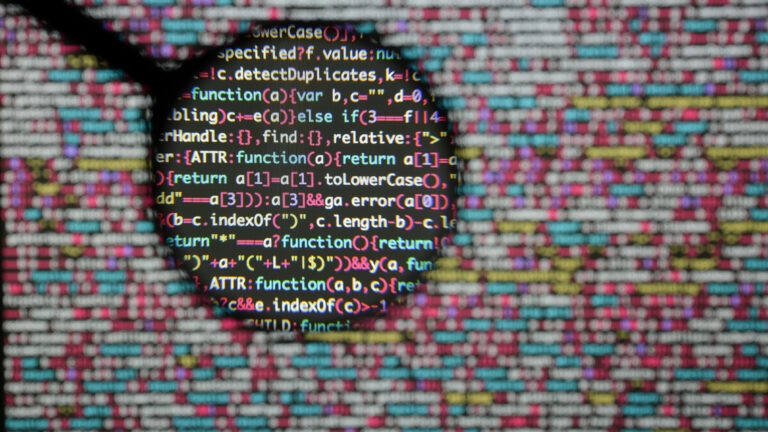

In its complaint, Musi fully admits that its app’s streams come from “publicly available content on YouTube’s website.” But rather than relying on YouTube’s Application Programming Interface (API) to make the content available to Musi users—which potentially could violate YouTube’s terms of service—Musi claims that it designed its own “augmentative interface.” That interface, Musi said, does not “store, process, or transmit YouTube videos” and instead “plays or displays content based on the user’s own interactions with YouTube and enhances the user experience via Musi’s proprietary technology.”

YouTube is apparently not buying Musi’s explanations that its service doesn’t violate YouTube’s terms. But Musi claimed that it has been “engaged in sporadic dialog” with YouTube “since at least 2015,” allegedly always responding to YouTube’s questions by either adjusting how the Musi app works or providing “details about how the Musi app works” and reiterating “why it is fully compliant with YouTube’s Terms of Service.”

How might Musi have violated YouTube’s TOS?

In 2021, Musi claimed to have engaged directly with YouTube’s outside counsel in hopes of settling this matter.

At that point, YouTube’s counsel allegedly “claimed that the Musi app violated YouTube’s Terms of Service” in three ways. First, Musi was accused of accessing and using YouTube’s non-public interfaces. Next, the Musi app was allegedly a commercial use of YouTube’s service, and third, relatedly, “the Musi app violated YouTube’s prohibition on the sale of advertising ‘on any page of any website or application that only contains Content from the Service or where Content from the Service is the primary basis for such sales.'”

Musi supposedly immediately “addressed these concerns” by reassuring YouTube that the Musi app never accesses its non-public interfaces and “merely allows users to access YouTube’s publicly available website through a functional interface and, thus, does not use YouTube in a commercial way.” Further, Musi told YouTube in 2021 that the app “does not sell advertising on any page that only contains content from YouTube or where such content is the primary basis for such sales.”

Apple suddenly becomes mediator

YouTube clearly was not persuaded by Musi’s reassurances but dropped its complaints until 2023. That’s when YouTube once again complained directly to Musi, only to allegedly stop responding to Musi entirely and instead raise its complaint through the App Store in August 2024.

That pivot put Apple in the middle of the dispute, and Musi alleged that Apple improperly sided with YouTube.

Once Apple got involved, Apple allegedly directed Musi to resolve the dispute with YouTube or else risk removal from the App Store. Musi claimed that it showed evidence of repeatedly reaching out to YouTube and receiving no response. Yet when YouTube told Apple that Musi was the one that went silent, Apple accepted YouTube’s claim and promptly removed Musi from the App Store.

“Apple’s decision to abruptly and arbitrarily remove the Musi app from the App Store without any indication whatsoever from the Complainant as to how Musi’s app infringed Complainant’s intellectual property or violated its Terms of Service,” Musi’s complaint alleged, “was unreasonable, lacked good cause, and violated Apple’s Development Agreement’s terms.”

Those terms state that removal is only on the table if Apple “reasonably believes” an app infringes on another’s intellectual property rights, and Musi argued Apple had no basis to “reasonably” believe YouTube’s claims.

Musi users heartbroken by App Store removal

This is perhaps the grandest stand that Musi has made yet to defend its app against claims that its service isn’t legal. According to Wired, one of Musi’s earliest investors backed out of the project, expressing fears that the app could be sued. But Musi has survived without legal challenge for years, even beating out some of Spotify’s top rivals while thriving in this seemingly gray territory that it’s now trying to make more black and white.

Musi says it’s suing to defend its reputation, which it says has been greatly harmed by the app’s removal.

Musi is hoping a jury will agree that Apple breached its developer agreement and the covenant of good faith and fair dealing by removing Musi from the App Store. The music-streaming app has asked for a permanent injunction immediately reinstating Musi in the App Store and stopping Apple from responding to third-party complaints by removing apps without any evidence of infringement.

An injunction is urgently needed, Musi claimed, since the app only exists in Apple’s App Store, and Musi and its users face “irreparable damage” if the app is not restored. Additionally, Musi is seeking damages to be determined at trial to make up for “lost profits and other consequential damages.”

“The Musi app did not and does not infringe any intellectual property rights held by Complainant, and a reasonable inquiry into the matter would have led Apple to conclude the same,” Musi’s complaint said.

On Reddit, Musi has continued to support users reporting issues with the app since its removal from the App Store. One longtime user lamented, “my heart is broken,” after buying a new iPhone and losing access to the app.

It’s unclear if YouTube intends to take Musi down forever with this tactic. In May, Wired noted that Musi isn’t the only music-streaming app taking advantage of publicly available content, predicting that if “Musi were to shut down, a bevy of replacements would likely sprout up.” Meanwhile, some users on Reddit reported that fake Musi apps keep popping up in its absence.

For Musi, getting back online is as much about retaining old users as it is about attracting new downloads. In its complaint, Musi said that “Apple’s decision has caused immediate and ongoing financial and reputational harm to Musi.” On Reddit, one Musi user asked what many fans are likely wondering: “Will Musi ever come back,” or is it time to “just move to a different app”?

Ars could not immediately reach Musi’s lawyers, Apple, or YouTube for comment.
