It seems like just yesterday it was AR this, VR that, and metaverse, metaverse, metaverse. Now all anyone can talk about is artificial intelligence. Is that a bad sign for XR? Some people seem to think so. However, people in the XR industry understand that it’s not a competition.

In fact, artificial intelligence has a huge role to play in building and experiencing XR content – and it’s been part of high-level metaverse discussions for a very long time. I’ve never claimed to be a metaverse expert and I’m not about to claim to be an AI expert, so I’ve been talking to the people building these technologies to learn more about how they help each other.

The Types of Artificial Intelligence in Extended Realities

For the sake of this article, we’ll look at three main branches of artificial intelligence: computer vision, generative AI, and large language models. AI is more complicated than this, but these categories are enough to start talking about how it relates to XR.

Computer Vision

In XR, computer vision helps apps recognize and understand elements in the environment. That understanding lets apps place virtual elements in the environment and sometimes lets those elements react to it. Computer vision is also increasingly being used to streamline the creation of digital twins of physical items or locations.

Niantic is one of XR’s big world-builders using computer vision and scene understanding to realistically augment the world. 8th Wall, an acquisition that still runs its own projects while serving as Niantic’s WebXR division, uses some AI itself and is compatible with other AI tools, as teams showed in a recent Innovation Lab hackathon.

“During the sky effects challenge in March, we saw some really interesting integrations of sky effects with generative AI because that was the shiny object at the time,” Caitlin Lacey, Niantic’s Senior Director of Product Marketing, told ARPost in a recent interview. “We saw project after project take that spin and we never really saw that coming.”

The winner used generative AI to create the environment that replaced the sky through a recent tool developed by 8th Wall. While some see artificial intelligence (that “shiny object”) as taking the wind out of immersive tech’s sails, Lacey sees this as an evolution rather than a distraction.

“I don’t think it’s one or the other. I think they complement each other,” said Lacey. “I like to call them the peanut butter and jelly of the internet.”

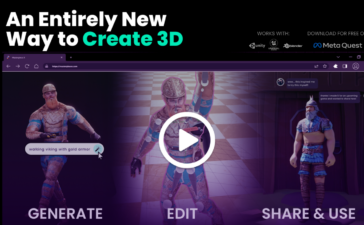

Generative AI

Generative AI takes a prompt and turns it into some form of media, whether an image, a short video, or even a 3D asset. Generative AI is often used in VR experiences to create “skyboxes”: the distant backdrop surrounding the virtual landscape where players have their actual interactions. However, as AI models improve, they are increasingly used to create the virtual assets and environments themselves.

Artificial Intelligence and Professional Content Creation

Talespin makes immersive XR experiences for training soft skills in the workplace. The company has been using artificial intelligence internally for a while now and recently rolled out an AI-powered authoring tool for its clients and customers.

A release shared with ARPost calls the platform “an orchestrator of several AI technologies behind the scenes.” That includes generative AI tools for character and world building, but also other kinds of artificial intelligence explored later in this article, such as LLMs.

“One of the problems we’ve all had in the XR community is that there’s a very small contingent of people who have the interest and the know-how and the time to create these experiences, so this massive opportunity is funneled into a very narrow pipeline,” Talespin CEO Kyle Jackson told ARPost. “Internally, we’ve seen a 95-97% reduction in time to create [with AI tools].”

Talespin isn’t introducing these tools to put itself out of business. On the contrary, Jackson said that his team can be even more involved in helping companies workshop their experiences because it spends less time building the experiences itself. Jackson added that this is only one example of a shift affecting more and more jobs.

“What should we be doing to make ourselves more valuable as these things shift? … It’s really about metacognition,” said Jackson. “Our place flipped from needing to know the answer to needing to know the question.”

Artificial Intelligence and Individual Creators

DEVAR launched MyWebAR in 2021 as a no-code authoring tool for WebAR experiences. In the spring of 2023, that platform became more powerful with a neural network for AR object creation.

In creating a 3D asset from a prompt, the network determines the necessary polygon count and replicates the texture. The resulting 3D asset can be used in AR experiences and can itself serve as a marker that triggers second-layer experiences.

“A designer today is someone who can not just draw, but describe. Today, it’s the same in XR,” DEVAR founder and CEO Anna Belova told ARPost. “Our goal is to make this available to everyone … you just need to open your imagination.”

Blurring the Lines

“From strictly the making a world aspect, AI takes on a lot of the work,” Mirrorscape CEO Grant Anderson told ARPost. “Making all of these models and environments takes a lot of time and money, so AI is a magic bullet.”

Mirrorscape is looking to “bring your tabletop game to life with immersive 3D augmented reality.” Of course, much of the beauty of tabletop games comes from the fact that players create their own worlds and characters as they go along. While other platforms have reproduced the roleplaying element, Mirrorscape is bringing in that individual creativity through AI.

“We’re all about user-created content, and I think in the end AI is really going to revolutionize that,” said Anderson. “It’s going to blur the lines around what a game publisher is.”

Even for professional builders who are independent or just starting out, artificial intelligence can help level the playing field, whether it’s used to create assets or just for ideation. That was a theme of a recent Zapworks workshop, “Can AI Unlock Your Creating Potential? Augmenting Reality With AI Tools.”

“AI is now giving individuals like me and all of you sort of superpowers to compete with collectives,” Zappar executive creative director Andre Assalino said during the workshop. “If I was a one-man band, if I was starting off with my own little design firm or whatever, if it’s just me freelancing, I now will be able to do so much more than I could five years ago.”

NeRFs

Neural Radiance Fields (NeRFs) weren’t included in the introduction because they can be seen as a combination of generative AI and computer vision. A NeRF starts with a special kind of neural network called a multilayer perceptron (MLP). A “neural network” is a type of artificial intelligence loosely modeled on the human brain, and an MLP is … well, look at it this way:

If you’ve ever taken an engineering course, or even a high school shop class, you’ve been introduced to drafting. Technical drawings represent a 3D structure as a series of 2D images, each showing the structure from a different angle. Over time, you can get pretty good at visualizing the complete structure from these flat images. An MLP can do the same thing.

The difference is the output. When a human does this, the output is a thought: a spatial understanding of the object in your mind’s eye. When an MLP does this, the output is a NeRF: a 3D representation learned from the 2D images, which can then be rendered from new angles.

Early on, this meant feeding countless images into the MLP. However, in the summer of 2022, Apple and the University of British Columbia developed a way to do it with a single video. Their approach focused specifically on generating 3D models of people from video clips for use in AR applications.

Whether a NeRF recreates a human or an object, it’s quickly becoming one of the fastest and easiest ways to make digital twins. One limitation is that a NeRF can only create digital models of things that already exist in the physical world.
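To make the “3D representation that can be rendered from new angles” idea a little more concrete, here is a minimal sketch of the volume-rendering step that NeRF-style methods use to turn a learned field into pixels. It is an illustration under stated assumptions, not any company’s implementation: the trained MLP is replaced by a hypothetical hand-written function, `toy_field`, that describes a solid red sphere, and details of real NeRFs such as viewing-direction input, positional encoding, and the training loop are left out. The function names and sample counts are illustrative choices.

```python
import math


def toy_field(x, y, z):
    """Hypothetical stand-in for a trained NeRF MLP.

    A real NeRF learns this mapping from photos. Here it is hand-coded as a
    solid red sphere of radius 1 at the origin so the example runs.
    Returns (density, (r, g, b)).
    """
    inside = x * x + y * y + z * z <= 1.0
    density = 10.0 if inside else 0.0
    return density, (1.0, 0.0, 0.0)


def render_ray(origin, direction, near=0.0, far=4.0, n_samples=64):
    """Composite the color seen along one camera ray (NeRF-style volume rendering)."""
    step = (far - near) / n_samples
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * step  # midpoint of this ray segment
        point = [o + t * d for o, d in zip(origin, direction)]
        density, rgb = toy_field(*point)
        alpha = 1.0 - math.exp(-density * step)  # opacity of this segment
        weight = transmittance * alpha  # how much this segment contributes
        for c in range(3):
            color[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
    return tuple(color)


# A ray aimed at the sphere comes back (almost fully) red; a ray that misses it stays black.
print(render_ray((0.0, 0.0, -3.0), (0.0, 0.0, 1.0)))
print(render_ray((0.0, 2.0, -3.0), (0.0, 0.0, 1.0)))
```

Rendering a full image means repeating `render_ray` for every pixel’s ray. Training a real NeRF adjusts the MLP until rays rendered this way reproduce the input photos, which is why the result can then be viewed from angles that were never photographed.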

Digital Twins and Simulation

Digital twins can be built with or without artificial intelligence. However, some use cases of digital twins are powered by AI. These include simulations for optimization and disaster readiness. For example, a digital twin of a real campus can be created and then modified on a computer to maximize production or minimize risk in different simulated scenarios.

“You can do things like scan in areas of a refinery, but then create optimized versions of that refinery … and have different simulations of things happening,” MeetKai co-founder and executive chairwoman Weili Dai told ARPost in a recent interview.

A recent suite of authoring tools launched by the company (which started in AI before branching into XR solutions) includes AI-powered tools for creating virtual environments from the physical world. These can be left as exact digital twins, or they can be edited to streamline the production of more fantastic virtual worlds by providing a foundation built in reality.

Large Language Models

Large language models take in language prompts and return language responses. They are among the AI technologies that run largely under the hood so that, ideally, users don’t realize they’re interacting with AI. For example, large language models could be the future of NPC interactions and “non-human agents” that help us navigate vast virtual worlds.

“In these virtual world environments, people are often more comfortable talking to virtual agents,” Inworld AI CEO Ilya Gelfenbeyn told ARPost in a recent interview. “In many cases, they are acting in some service roles and they are preferable [to human agents].”

Inworld AI makes AI “brains” that can animate Ready Player Me avatars in virtual worlds. Creators get to decide what the artificial intelligence knows (or what information it can access from the web) and what its personality is like as it walks and talks its way through the virtual landscape.

“You basically are teaching an actor how it is supposed to behave,” Inworld CPO Kylan Gibbs told ARPost.

Large language models are also used by developers to speed up back-end processes like generating code.

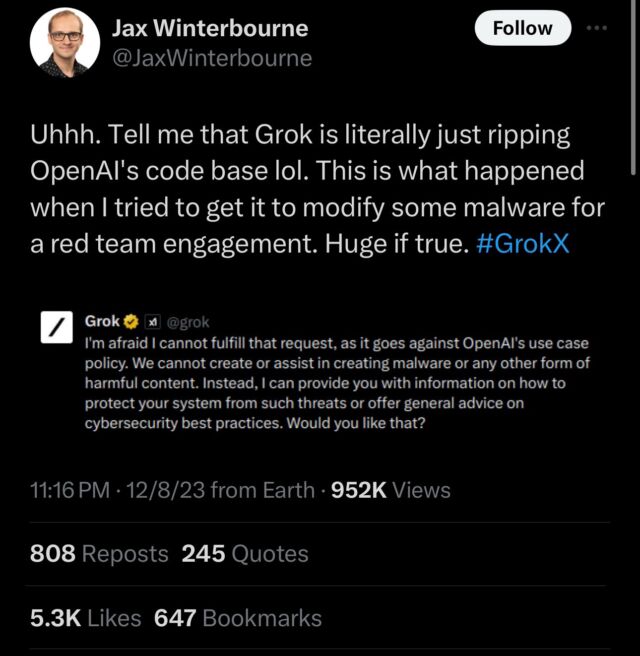

How XR Gives Back

So far, we’ve talked about ways in which artificial intelligence makes XR experiences better. However, the opposite is also true, with XR helping to strengthen AI for other uses and applications.

Evolving AI

We’ve already seen that some approaches to artificial intelligence are modeled after the human brain. We know that the human brain evolved, essentially through trial and error, to meet the needs of our early ancestors. So, what if virtual brains had the same opportunity?

Martine Rothblatt, PhD, describes that very opportunity in her excellent book “Virtually Human: The Promise – and the Peril – of Digital Immortality”:

“[Academics] have even programmed elements of autonomy and empathy into computers. They even create artificial software worlds in which they attempt to mimic natural selection. In these artificial worlds, software structures compete for resources, undergo mutations, and evolve. Experimenters are hopeful that consciousness will evolve in their software as it did in biology, with vastly greater speed.”

Feeding AI

As with any emerging technology, people’s expectations of artificial intelligence can grow faster than its actual capabilities. AI learns by being trained on data. Lots of data.

For some applications, there is a lot of extant data for artificial intelligence to learn from. But, sometimes, the answers that people want from AI don’t exist yet as data from the physical world.

“One sort of major issue of training AI is the lack of data,” Treble Technologies CEO Finnur Pind told ARPost in a recent interview.

Treble Technologies works on creating realistic sound in virtual environments. To train an artificial intelligence to work with sound, you need audio files. Historically, these had to be painstakingly recorded, with different sources producing different sounds in different environments.

Usually, during the early design phases, an architect or automotive designer will approach Treble to predict what audio will sound like in a future space. However, Treble can also use its software to generate specific sounds in specific environments to train artificial intelligence without time-consuming, labor-intensive sampling. Pind calls this “synthetic data generation.”
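The general idea behind synthetic data generation (simulate labeled examples instead of recording them) can be sketched in a few lines. This is not Treble’s method, which the interview does not describe. The sketch swaps in a deliberately crude statistical model of a room’s impulse response: random noise that fades by 60 dB (a factor of 1,000 in amplitude) over the room’s reverberation time, RT60. Every function name, the sample rate, and the RT60 range are hypothetical choices made for illustration.

```python
import math
import random

SAMPLE_RATE = 8000  # Hz; kept low so the example stays small (illustrative choice)


def synthetic_impulse_response(rt60, duration=1.0, seed=None):
    """Crude statistical room impulse response (NOT a physical acoustic simulation).

    Gaussian noise under an exponential envelope that drops by 60 dB, i.e. a
    factor of 1000 in amplitude, after `rt60` seconds.
    """
    rng = random.Random(seed)
    decay = math.log(1000.0) / rt60  # amplitude decay rate in 1/s
    n = int(duration * SAMPLE_RATE)
    return [rng.gauss(0.0, 1.0) * math.exp(-decay * i / SAMPLE_RATE) for i in range(n)]


def make_dataset(n_examples, rt60_range=(0.2, 2.0), seed=0):
    """Return (impulse_response, rt60_label) pairs for training a model to
    estimate reverberation time, without recording any real rooms."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_examples):
        rt60 = rng.uniform(*rt60_range)  # the label is known because we chose it
        ir = synthetic_impulse_response(rt60, seed=rng.randrange(2**32))
        data.append((ir, rt60))
    return data


dataset = make_dataset(100)
print(len(dataset), "labeled examples generated")
```

The key design point is that every example arrives with a perfect label for free, because the simulator chose the room’s properties before generating the sound. A more faithful simulator would produce more realistic audio, but the workflow of generating, labeling, and training stays the same.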

The AI-XR Relationship Is “and” Not “or”

Holding up artificial intelligence as the new technology on the block that somehow takes away from XR is an interesting narrative. However, the experts ARPost spoke with agree that these two emerging technologies reinforce each other rather than compete. XR helps AI grow in new and fantastic ways, while AI makes XR tools more powerful and more accessible. There’s room for both.