DeepMind has detailed all the ways AGI could wreck the world

As AI hype permeates the Internet, tech and business leaders are already looking toward the next step. AGI, or artificial general intelligence, refers to a machine with human-like intelligence and capabilities. If today’s AI systems are on a path to AGI, we will need new approaches to ensure such a machine doesn’t work against human interests.

Unfortunately, we don’t have anything as elegant as Isaac Asimov’s Three Laws of Robotics. Researchers at DeepMind have been working on this problem and have released a new technical paper (PDF), available for download, that explains how to develop AGI safely.

It contains a huge amount of detail, clocking in at 108 pages before references. While some in the AI field believe AGI is a pipe dream, the authors of the DeepMind paper project that it could happen by 2030. With that in mind, they aimed to understand the risks of a human-like synthetic intelligence, which they acknowledge could lead to “severe harm.”

All the ways AGI could harm humanity

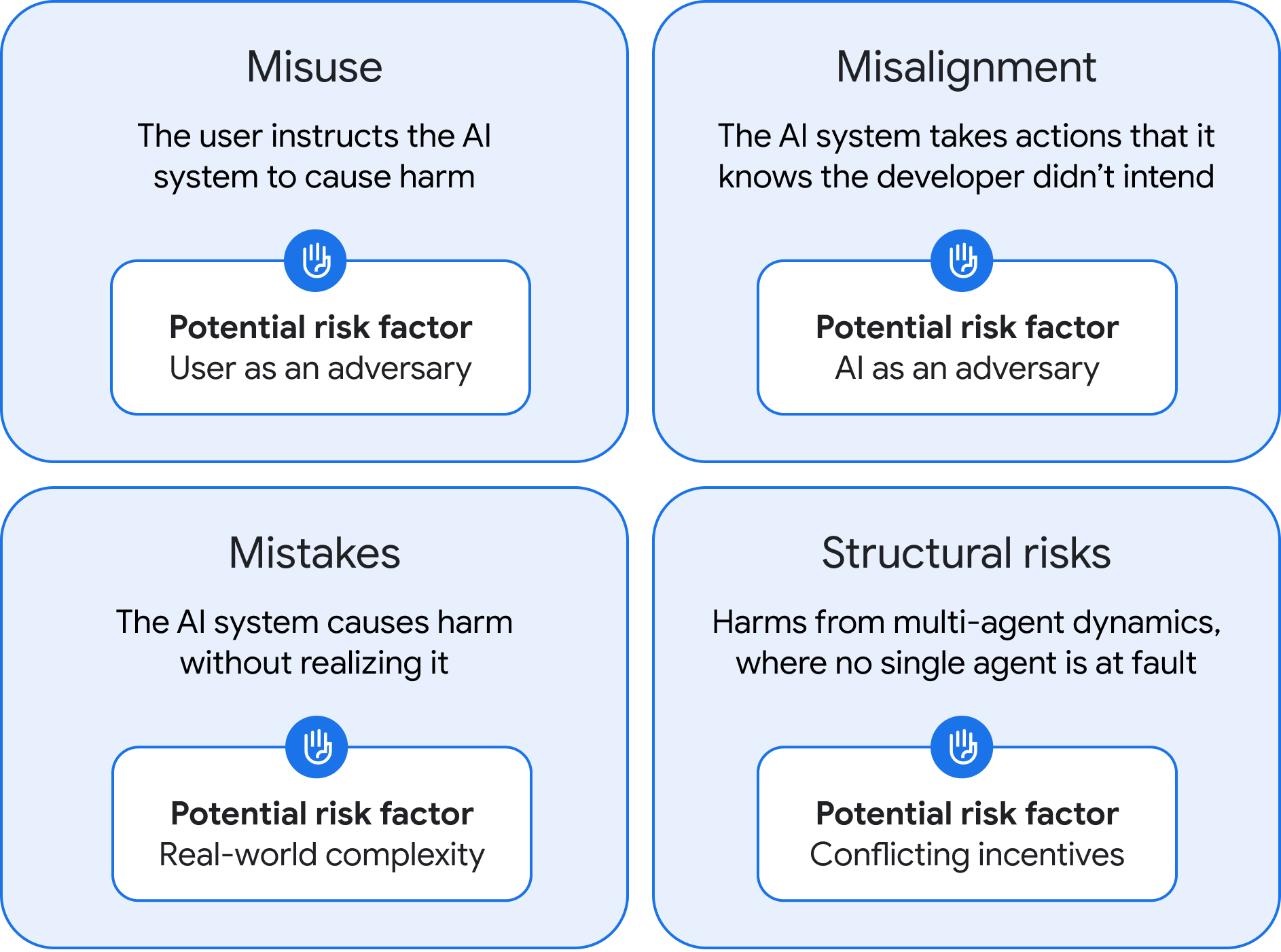

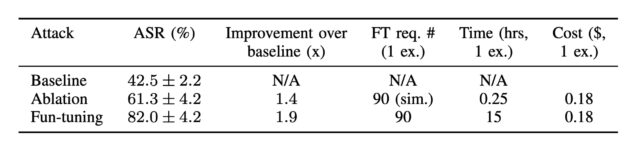

This work has identified four possible types of AGI risk, along with suggestions on how we might ameliorate said risks. The DeepMind team, led by company co-founder Shane Legg, categorized the negative AGI outcomes as misuse, misalignment, mistakes, and structural risks. Misuse and misalignment are discussed in the paper at length, but the latter two are only covered briefly.

The four categories of AGI risk, as determined by DeepMind. Credit: Google DeepMind

The first possible issue, misuse, is fundamentally similar to current AI risks. However, because AGI will be more powerful by definition, the damage it could do is much greater. A ne’er-do-well with access to AGI could misuse the system to do harm, for example, by asking the system to identify and exploit zero-day vulnerabilities or create a designer virus that could be used as a bioweapon.
