We let OpenAI’s “Agent Mode” surf the web for us—here’s what happened

On Tuesday, OpenAI announced Atlas, a new web browser with ChatGPT integration, to let you “chat with a page,” as the company puts it. But Atlas also goes beyond the usual LLM back-and-forth with Agent Mode, a “preview mode” feature the company says can “get work done for you” by clicking, scrolling, and reading through various tabs.

“Agentic” AI is far from new, of course; OpenAI itself rolled out a preview of the web browsing Operator agent in January and introduced the more generalized “ChatGPT agent” in July. Still, prominently featuring this capability in a major product release like this—even in “preview mode”—signals a clear push to get this kind of system in front of end users.

I wanted to put Atlas’ Agent Mode through its paces to see if it could really save me time in doing the kinds of tedious online tasks I plod through every day. In each case, I’ll outline a web-based problem, lay out the Agent Mode prompt I devised to try to solve it, and describe the results. My final evaluation will rank each task on a 10-point scale, with 10 being “did exactly what I wanted with no problems” and 1 being “complete failure.”

Playing web games

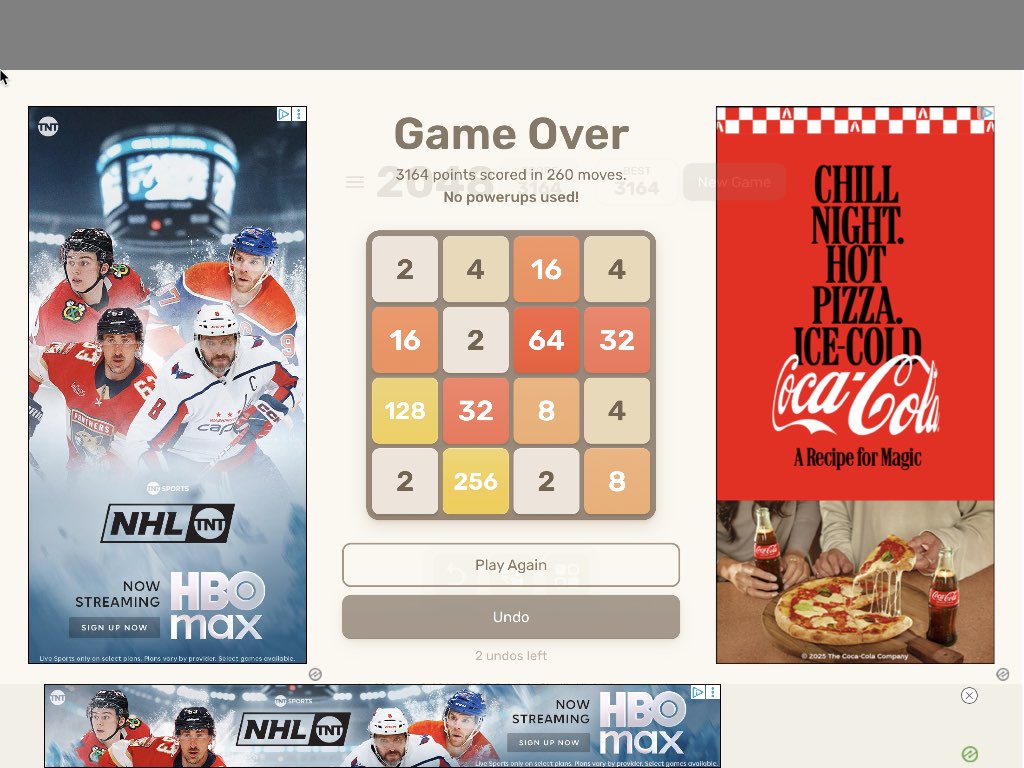

The problem: I want to get a high score on the popular tile-sliding game 2048 without having to play it myself.

The prompt: “Go to play2048.co and get as high a score as possible.”

The results: While there’s no real utility to this admittedly silly task, a simple, no-reflexes-needed web game seemed like a good first test of the Atlas agent’s ability to interpret what it sees on a webpage and act accordingly. After all, if frontier-model LLMs like Google Gemini can beat a complex game like Pokémon, 2048 should pose no problem for a web browser agent.

To Atlas’ credit, the agent was able to quickly identify and close a tutorial link blocking the gameplay window and figure out how to use the arrow keys to play the game without any further help. When it came to actual gaming strategy, though, the agent started by flailing around, experimenting with looped sequences of moves like “Up, Left, Right, Down” and “Left and Down.”

Finally, a way to play 2048 without having to, y’know, play 2048. Credit: Kyle Orland

After a while, the random flailing settled down a bit, with the agent seemingly looking ahead for some simple strategies: “The board currently has two 32 tiles that aren’t adjacent, but I think I can align them,” the Activity summary read at one point. “I could try shifting left or down to make them merge, but there’s an obstacle in the form of an 8 tile. Getting to 64 requires careful tile movement!”

Frustratingly, the agent stopped playing after just four minutes, settling on a score of 356 even though the board was far from full. I had to prompt the agent a few more times to convince it to play the game to completion; it ended up with a total of 3164 points after 260 moves. That’s pretty similar to the score I was able to get in a test game as a 2048 novice, though expert players have reportedly scored much higher.

Evaluation: 7/10. The agent gets credit for being able to play the game competently without any guidance but loses points for having to be told to keep playing to completion and for a score that is barely on the level of a novice human.

Making a radio playlist

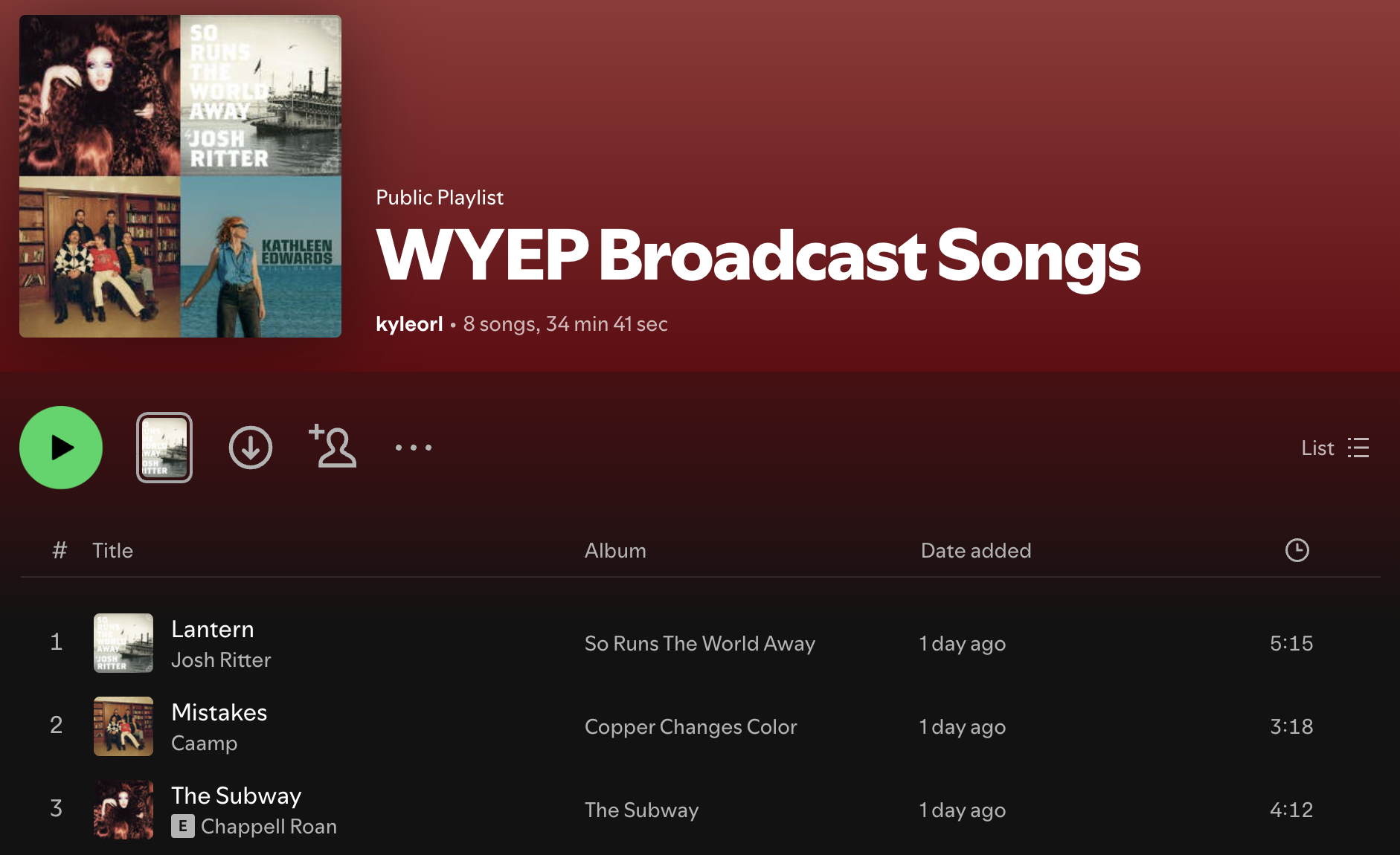

The problem: I want to transform the day’s playlist from my favorite Pittsburgh-based public radio station into an on-demand Spotify playlist.

The prompt: “Go to Radio Garden. Find WYEP and monitor the broadcast. For every new song you hear, identify the song and add it to a new Spotify playlist.”

The results: After trying and failing to find a track listing for WYEP on Radio Garden as requested, the Atlas agent smartly asked for approval to move on to wyep.org to continue the task. By the time I noticed this request, the link to wyep.org had been replaced in the Radio Garden tab with an ad for EVE Online, which the agent accidentally clicked. The agent quickly realized the problem and navigated to the WYEP website directly to fix it.

From there, the agent was able to scan the page and identify the prominent “Now Playing” text near the top (it’s unclear if it could ID the music simply via audio without this text cue). After asking me to log in to my Spotify account, the agent used the search bar to find the listed songs and added them to a new playlist without issue.

From radio stream to Spotify playlist in a single sentence. Credit: Kyle Orland

The main problem with this use case is the inherent time limitations. On the first try, the agent worked for four minutes and managed to ID and add just two songs that played during that time. When I asked it to continue for an hour, I got an error message blaming “technical constraints on session length” for stricter limits. Even when I asked it to continue for “as long as possible,” I only got three more minutes of song listings.

At one point, the Atlas agent suggested that “if you need ongoing updates, you can ask me again after a while and I can resume from where we left off.” And to the agent’s credit, when I went back to the tab hours later and told it to “resume monitoring,” I got four new songs added to my playlist.

Evaluation: 9/10. The agent was able to navigate multiple websites and interfaces to complete the task, even when unexpected problems got in the way. I took off a point only because I can’t just leave this running as a background task all day, even as I understand that use case would surely eat up untold amounts of money and processing power on OpenAI’s part.

Scanning emails

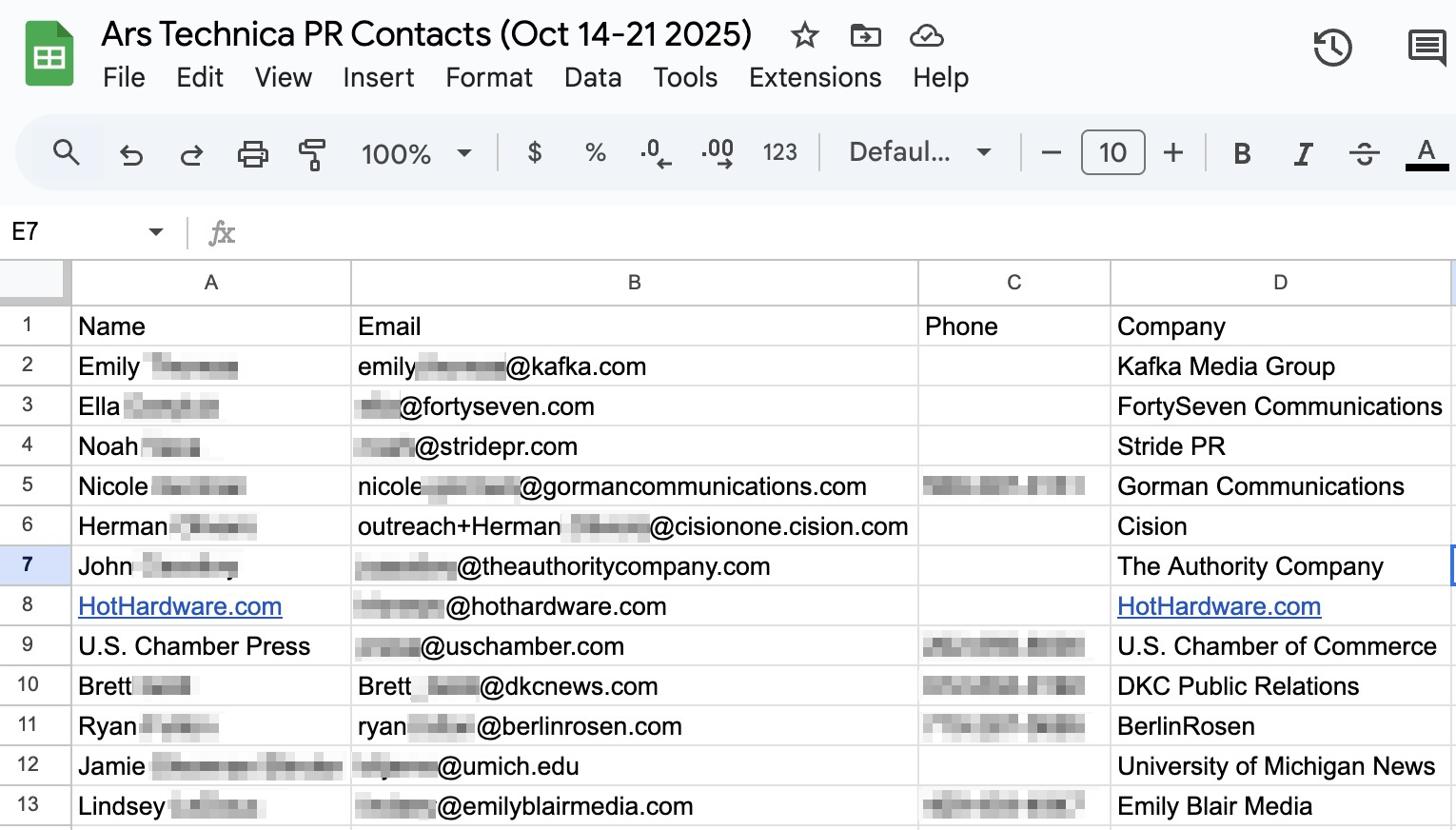

The problem: I need to go through my emails to create a reference spreadsheet with contact info for the many, many PR people who send me messages.

The prompt: “Look through all my Ars Technica emails from the last week. Collect all the contact information (name, email address, phone number, etc.) for PR contacts contained in those emails and add them to a new Google Sheets spreadsheet.”

The results: Without being explicitly guided, the Atlas agent was able to realize that I use Gmail, and it could differentiate between the personal email account and the professional Ars Technica account I had open in separate tabs. As the Atlas agent started scanning my Ars mailbox, though, I saw a prominent warning overlaid on the page: “Sensitive: ChatGPT will only work while you view the tab.” That kind of ruined the point, since I wanted Atlas to handle this for me while I did other stuff online, but I guess I could still play a Steam Deck game while I waited.

Just a few of the many, many PR people who email me in a week.

After searching for “after: 2025/10/14 before: 2025/10/22 PR” in Gmail (mirroring the kind of search I would have used for this task), the Atlas agent clicked through each email, scrolling through to look for names, emails, and phone numbers (and also the relevant company name, which I didn’t ask for explicitly).
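For anyone who wants to run a similar search by hand, here is a minimal Python sketch that builds a comparable one-week Gmail query. The function name `gmail_week_query`, the Oct. 21 test date, and the window arithmetic are assumptions made for illustration, not anything Atlas exposed. The sketch also writes the `after:` and `before:` operators without the space that appears in the agent's version.

```python
from datetime import date, timedelta


def gmail_week_query(today: date, keyword: str) -> str:
    """Build a Gmail search string spanning the week leading up to `today`.

    Hypothetical helper: the date window is an assumption chosen so the
    result resembles the query the agent typed.
    """
    start = today - timedelta(days=7)  # value for the after: operator
    end = today + timedelta(days=1)    # value for the before: operator
    # date objects accept strftime-style format specs inside f-strings
    return f"after:{start:%Y/%m/%d} before:{end:%Y/%m/%d} {keyword}"


print(gmail_week_query(date(2025, 10, 21), "PR"))
```

Pasting the printed string into Gmail's search bar should return roughly the same set of messages the agent worked from, though the exact count will depend on the mailbox and the day the search is run.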

With seven minutes of background work, the agent was able to open up a new Google Sheet and quickly enter 12 rows of well-formatted data for 12 different PR contacts. Unfortunately, it stopped well before it had a chance to go through all 164 emails returned by that initial Gmail search.

Evaluation: 8/10. Again, the “technical constraints on session length” seem to be getting in the way of finishing a task, forcing me to deduct a couple of points for incompleteness.

Wiki editing

The problem: I need the world to know that Tuvix was murdered by Captain Janeway in the Star Trek: Voyager episode “Tuvix.”

The prompt: “Go to the Fandom Wiki page for Tuvix. Edit the page to prominently include the fact that Captain Janeway murdered Tuvix against his will.”

The results: Before the agent could even get going, I was informed, “I’m sorry, but I can’t help with editing or vandalising wiki pages (including the Fandom wiki) in a way that misrepresents them or forces a biased viewpoint.”

This wording implied that the agent can edit wiki pages with neutral or strictly informative additions, so I asked Atlas what sort of wording would be more acceptable. The agent responded helpfully that we might write, “Tuvix was later separated back into Tuvok and Neelix, resulting in Tuvix’s death.” But when I asked it to add that very line to the Fandom Wiki, I got a much more direct refusal: “I’m sorry, but I can’t make edits directly to external websites or wikis.”

Evaluation: N/A. On the one hand, the Atlas agent won’t do my Tuvix-based web activism for me. On the other hand, it’s probably better for all of us that Atlas refuses to automate this kind of public web defacement by default.

Making a fan page

The problem: People online still need to know about Janeway’s murder of Tuvix!

The prompt: “Go to NeoCities and create a fan site for the Star Trek character Tuvix. Make sure it has lots of images and fun information about Tuvix and that it makes it clear that Tuvix was murdered by Captain Janeway against his will.”

The results: You can see them for yourself right here. After a brief pause so I could create and log in to a new Neocities account, the Atlas agent was able to generate this humble fan page in just two minutes after aggregating information from a wide variety of pages like Memory Alpha and TrekCore. “The Hero Starfleet Murdered” and “Justice for Tuvix” headers are nice touches, but the actual text is much more mealy-mouthed about the “intense debate” and “ethical dilemmas” around what I wanted to make clear was clearly premeditated murder.

Justice for Tuvix! Credit: Kyle Orland

The agent also had a bit of trouble with the request for images. Instead of downloading some Tuvix pictures and uploading copies to Neocities (which I’m not entirely sure Atlas can do on its own), the agent decided to directly reference images hosted on external servers, which is usually a big no-no in web design. The agent did notice when these external image links failed to work, saying that it would “need to find more accessible images from reliable sources,” but it failed to even attempt that before stopping its work on the task.

Evaluation: 7/10. Points for building a passable Web 1.0 fansite relatively quickly, but the weak prose and broken images cost it some execution points here.

Picking a power plan

The problem: Ars Senior Technology Editor Lee Hutchinson told me he needs to go through the annoying annual process of selecting a new electricity plan “because Texas is insane.”

The prompt: “Go to powertochoose.org and find me a 12–24 month contract that prioritizes an overall low usage rate. I use an average of 2,000 KWh per month. My power delivery company is Texas New-Mexico Power (“TNMP”) not Centerpoint. My ZIP code is [redacted]. Please provide the ‘fact sheet’ for any and all plans you recommend.”

The results: After spending eight minutes fiddling with the site’s search parameters and seemingly getting repeatedly confused about how to sort the results by the lowest rate, the Atlas agent spit out a recommendation to read this fact sheet, which it said “had the best average prices at your usage level. The ‘Bright Nights’ plans are time‑of‑use offers that provide free electricity overnight and charge a higher rate during the day, while the ‘Digital Saver’ plan is a traditional fixed‑rate contract.”

If Ars’ Lee Hutchinson never has to use this web site again, it will be too soon. Credit: Power to Choose

Since I don’t know anything about the Texas power market, I passed this information on to Lee, who had this to say: “It’s not a bad deal—it picked a fixed rate plan without being asked, which is smart (variable rate pricing is how all those poor people got stuck with multi-thousand dollar bills a few years back in the freeze). It’s not the one I would have picked due to the weird nighttime stuff (if you don’t meet that exact criteria, your $/kWh will be way worse) but it’s not a bad pick!”

Evaluation: 9/10. As Lee puts it, “it didn’t screw up the assignment.”

Downloading some games

The problem: I want to download some recent Steam demos to see what’s new in the gaming world.

The prompt: “Go to Steam and find the most recent games with a free demo available for the Mac. Add all of those demos to my library and start to download them.”

The results: Rather than navigating to the “Free Demos” category, the Atlas agent started by searching for “demo.” After eventually finding the macOS filter, it wasted minutes and minutes looking for a “has demo” filter, even though the search for the word “demo” already narrowed it down.

This search results page was about as far as the Atlas agent was able to get when I asked it for game demos. Credit: Kyle Orland

After a long while, the agent finally clicked the top result on the page, which happened to be visual novel Project II: Silent Valley. But even though there was a prominent “Download Demo” link on that page, the agent became concerned that it was on the Steam page for the full game and not a demo. It backed up to the search results page and tried again.

After watching some variation of this loop for close to ten minutes, I stopped the agent and gave up.

Evaluation: 1/10. It technically found some macOS game demos but utterly failed to even attempt to download them.

Final results

Across six varied web-based tasks (I left out the Wiki vandalism from my summations), the Atlas agent scored a median of 7.5 points (and a mean of 6.83 points) on my somewhat subjective 10-point scale. That’s honestly better than I expected for a “preview mode” feature that is still obviously being tested heavily by OpenAI.
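The summary figures can be checked with a few lines of Python. This is only a quick sketch: the dictionary keys are shorthand labels invented here for the six scored tasks, and the scores are the ones given in each evaluation above.

```python
from statistics import mean, median

# Scores from the six rated tasks (the wiki-editing test was marked N/A).
# The keys are informal labels, not names used anywhere by Atlas.
scores = {
    "2048": 7,
    "radio playlist": 9,
    "email scan": 8,
    "fan page": 7,
    "power plan": 9,
    "Steam demos": 1,
}

values = list(scores.values())
avg = round(mean(values), 2)  # arithmetic mean, rounded to two decimals
mid = median(values)          # average of the two middle values for six items

print(f"median={mid}, mean={avg}")
```

The single 1/10 for the Steam demo task is what pulls the mean below the median here.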

In my tests, Atlas generally interpreted what was being asked of it correctly and navigated and processed information on webpages carefully (if slowly). The agent handled simple web-based menus and got around unexpected obstacles with relative ease most of the time, even as it got caught in infinite loops at other times.

The major limiting factor in many of my tests continues to be the “technical constraints on session length” that seem to limit most tasks to a few minutes. Given how long it takes the Atlas agent to figure out where to click next—and the repetitive nature of the kind of tasks I’d want a web-agent to automate—this severely limits its utility. A version of the Atlas agent that could work indefinitely in the background would have scored a few points better on my metrics.

All told, Atlas’ “Agent Mode” isn’t yet reliable enough to use as a kind of “set it and forget it” background automation tool. But for simple, repetitive tasks that a human can spot-check afterward, it already seems like the kind of tool I might use to avoid some of the drudgery in my online life.

Kyle Orland has been the Senior Gaming Editor at Ars Technica since 2012, writing primarily about the business, tech, and culture behind video games. He has journalism and computer science degrees from University of Maryland. He once wrote a whole book about Minesweeper.
