Space Invaders Celebrates 45th Anniversary With a New AR Game

Space Invaders is a shooting video game created by Tomohiro Nishikado in 1978 and manufactured and sold by TAITO. It was the first fixed shooter video game and is considered one of the most iconic arcade games ever. As Space Invaders turns 45, TAITO teams up with Google and UNIT9 to give its players an elevated AR gaming experience with Google’s ARCore Geospatial API.

TAITO and Google partnered with global production and innovation studio UNIT9 to transform Space Invaders into an immersive AR game in honor of its 45th anniversary. Players can defend their real-world neighborhoods from 3D invaders emerging from nearby buildings and landmarks.

Meet “SPACE INVADERS: World Defense” AR Game

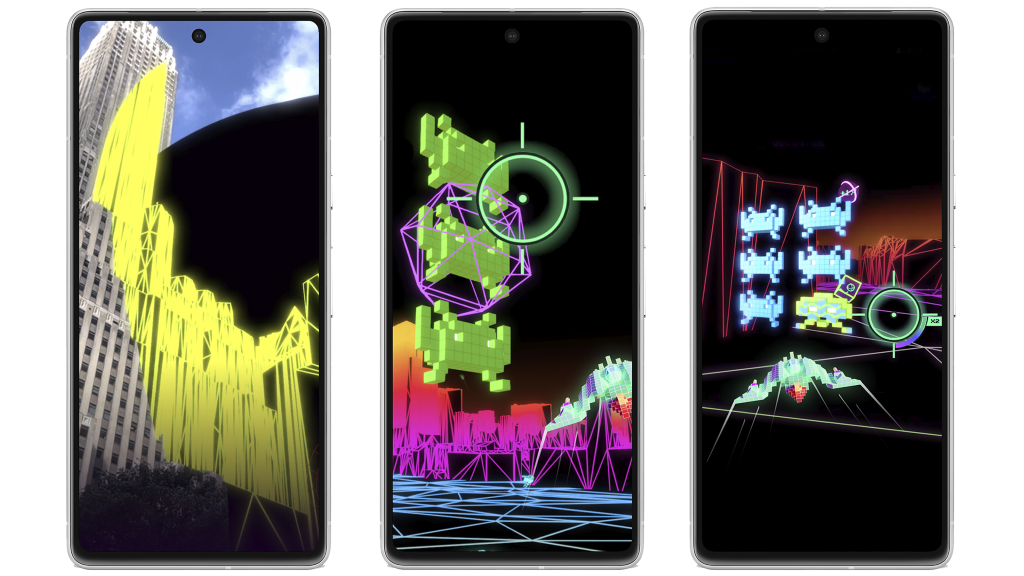

The reimagined iconic video game is SPACE INVADERS: World Defense, a sequel to the original game. It gives players access to enhanced weapons so they can defend their neighborhoods more effectively. New music and sound effects were also added for a more exhilarating and immersive experience.

The most remarkable update, however, is the real-time response to location-specific patterns and nearby buildings. This means the AR game adapts to the player’s real-life surroundings. For example, if it’s raining, the virtual environment may also show rain, and if there’s a tall building at the player’s location, a matching tall building appears in the AR realm, from which an Invader may emerge.

SPACE INVADERS: World Defense Gameplay

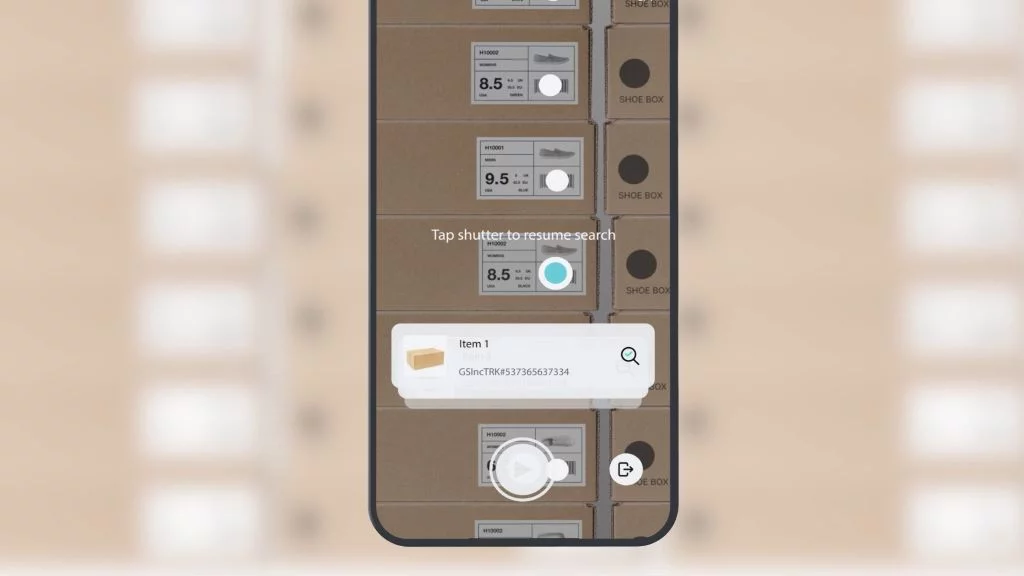

The original game’s classic characters and high-score mechanics are preserved in the AR game SPACE INVADERS: World Defense. The difference is that players must explore their virtual neighborhoods to find Space Invaders and defeat them. They can unlock special power-ups, compete with friends in their area, and take an AR selfie to post on social media.

Players can easily switch between the World Dimension and Invaders Dimension via a portal. The virtual, 3D Invader world changes in sync with the natural environment, allowing players to complete missions in both the virtual world and the natural world’s AR view.

Harnessing the Power of Google’s ARCore and Geospatial API

UNIT9 harnessed the power of Google’s ARCore and Geospatial API to develop the next-level AR gaming experience of SPACE INVADERS: World Defense. ARCore is a software development kit (SDK) developers use to create AR applications across multiple platforms, including iOS, Android, Unity, and the Web. It seamlessly merges the digital and physical worlds, allowing users to interact with virtual objects in the AR adaptation of their natural surroundings.

ARCore is one of the top AR SDKs. Its other prominent capabilities include tracking the orientation and position of the user’s device, matching the lighting of virtual objects with their surroundings, detecting the location and size of various surface types, and integrating with existing tools like Unreal and Unity.

Combined with the Geospatial API, which lets developers remotely attach content to any area covered by Google Street View, ARCore brings geometric data from Google Maps Street View into SPACE INVADERS: World Defense, displaying accurate terrain and building information within a 100-meter radius of the player’s location.
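To make the 100-meter radius concrete, here is a minimal sketch of how an app might decide which nearby landmarks fall within range of a player. It is not the ARCore or Geospatial API and not UNIT9's implementation; the function names, landmark names, and coordinates are hypothetical, and the distance is computed with the standard haversine formula on plain latitude/longitude pairs.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def landmarks_in_range(player, landmarks, radius_m=100.0):
    """Return names of landmarks within radius_m of the player.

    player is a (lat, lon) tuple; landmarks is a list of (name, lat, lon).
    The 100 m default mirrors the radius mentioned in the article.
    """
    lat, lon = player
    return [
        name
        for name, l_lat, l_lon in landmarks
        if haversine_m(lat, lon, l_lat, l_lon) <= radius_m
    ]


# Hypothetical player position and landmarks, for illustration only.
player = (35.6595, 139.7005)
landmarks = [
    ("near_tower", 35.6600, 139.7005),   # roughly 56 m north of the player
    ("far_station", 35.6615, 139.7005),  # roughly 222 m north of the player
]
nearby = landmarks_in_range(player, landmarks)
```

With these made-up coordinates, only `near_tower` would be kept; a real app would get the device pose and building geometry from the SDK rather than from a hard-coded list.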

The Beginning of an Exciting New Era

According to UNIT9’s Head of Digital, Media Ridha, the launch of Google’s Geospatial API marks the beginning of an exciting new era for digital experiences tied to real-world locations, one that is not limited to games but extends to any brand experience linked to a specific place. “It was an honor to work with Google and TAITO to translate one of the most famous IPs out there into the next wave of AR gaming and create an experience that fans of all ages around the world can enjoy,” Ridha said in a press release shared with ARPost.

Matthieu Lorrain, global head of creative innovation at Google Labs Partnerships, is excited to see more developers leverage the platform to push the boundaries of geolocalized experiences. “[Google’s Geospatial API] allowed us to celebrate the iconic Space Invaders game by turning the world into a global playground,” said Lorrain.

SPACE INVADERS: World Defense officially launched on July 17, 2023, and is available on iOS and Android. Players in key markets, including Europe, Japan, and the USA, can download the AR game on their mobile devices and defeat Invaders in the real world, made more immersive with augmented reality.
