A new open-weights AI coding model is closing in on proprietary options

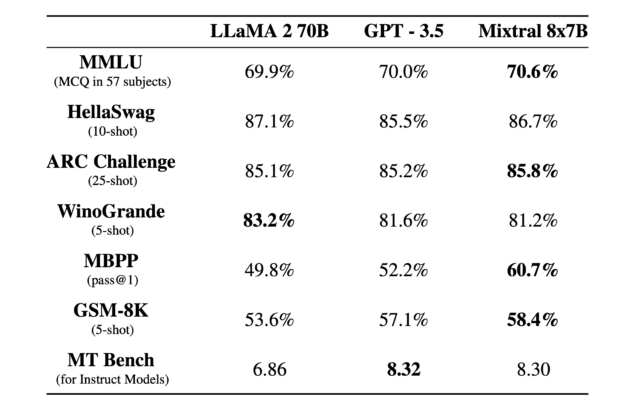

On Tuesday, French AI startup Mistral AI released Devstral 2, a 123 billion parameter open-weights coding model designed to work as part of an autonomous software engineering agent. The model achieves a 72.2 percent score on SWE-bench Verified, a benchmark that attempts to test whether AI systems can solve real GitHub issues, putting it among the top-performing open-weights models.

Perhaps more notably, Mistral didn’t just release an AI model; it also released a new development app called Mistral Vibe. It’s a command line interface (CLI) similar to Claude Code, OpenAI Codex, and Gemini CLI that lets developers interact with the Devstral models directly in their terminal. The tool can scan file structures and Git status to maintain context across an entire project, make changes across multiple files, and execute shell commands autonomously. Mistral released the CLI under the Apache 2.0 license.

It’s always wise to take AI benchmarks with a large grain of salt, but we’ve heard from employees of the big AI companies that they pay very close attention to how well models do on SWE-bench Verified, which presents AI models with 500 real software engineering problems pulled from GitHub issues in popular Python repositories. The AI must read the issue description, navigate the codebase, and generate a working patch that passes unit tests. While some AI researchers have noted that around 90 percent of the tasks in the benchmark test relatively simple bug fixes that experienced engineers could complete in under an hour, it’s one of the few standardized ways to compare coding models.
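To make those percentages concrete, here is a minimal Python sketch of how a pass rate over the benchmark's 500 tasks translates into raw task counts. It is not the actual SWE-bench harness: the `TaskResult` type and the function names are hypothetical, and the only figures taken from the article are the 500-task total and the reported scores.

```python
from dataclasses import dataclass

TOTAL_TASKS = 500  # size of SWE-bench Verified, per the article


@dataclass
class TaskResult:
    """Hypothetical record of one benchmark attempt (not the real harness format)."""
    task_id: str
    patch_applied: bool
    tests_passed: bool


def resolved_rate(results):
    """Percentage of tasks whose patch applied and passed the unit tests."""
    if not results:
        return 0.0
    resolved = sum(1 for r in results if r.patch_applied and r.tests_passed)
    return 100.0 * resolved / len(results)


def tasks_resolved(score_percent, total_tasks=TOTAL_TASKS):
    """Convert a reported percentage back into an approximate task count."""
    return round(score_percent / 100 * total_tasks)


if __name__ == "__main__":
    print(tasks_resolved(72.2))  # Devstral 2's reported score
    print(tasks_resolved(68))    # Devstral Small 2's reported score
```

By this arithmetic, a 72.2 percent score corresponds to 361 of the 500 tasks, and a 68 percent score to 340.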

Alongside the larger coding model, Mistral also released Devstral Small 2, a 24 billion parameter version that scores 68 percent on the same benchmark and can run locally on consumer hardware such as a laptop, with no Internet connection required. Both models support a 256,000 token context window, allowing them to process moderately large codebases (although whether that counts as large or small depends on overall project complexity). The company released Devstral 2 under a modified MIT license and Devstral Small 2 under the more permissive Apache 2.0 license.
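For a rough sense of what a 256,000 token window holds, the Python sketch below estimates whether a project's source files would fit. It assumes about four characters per token, a common rule of thumb rather than a property of Mistral's tokenizer, so the result is only a ballpark. The function names are hypothetical and not part of any Mistral tool.

```python
import os

CONTEXT_WINDOW_TOKENS = 256_000  # context window reported in the article
CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not Mistral's actual tokenizer


def estimate_tokens(text, chars_per_token=CHARS_PER_TOKEN):
    """Approximate the token count of a string, rounding up."""
    return -(-len(text) // chars_per_token)  # ceiling division


def estimate_repo_tokens(root, extensions=(".py",)):
    """Sum the estimated tokens of all files under root with the given extensions."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(extensions):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as f:
                    total += estimate_tokens(f.read())
    return total


def fits_in_context(token_count, window=CONTEXT_WINDOW_TOKENS):
    """Return True if the estimated token count fits within the window."""
    return token_count <= window


if __name__ == "__main__":
    tokens = estimate_repo_tokens(".")
    print(f"~{tokens} tokens; fits in window: {fits_in_context(tokens)}")
```

Under that assumption, the window holds roughly one megabyte of source text, before accounting for the prompt, tool output, and the model's own responses.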
