More Reactions to If Anyone Builds It, Everyone Dies

Previously I shared various reactions to If Anyone Builds It, Everyone Dies, along with my own highly positive review.

Reactions continued to pour in, including several impactful ones. There’s more.

Any further reactions will have a higher bar, and be included in weekly posts unless one is very high quality and raises important new arguments.

IABIED gets to #8 in Apple Books nonfiction, #2 in UK. It has 4.8 on Amazon and 4.3 on Goodreads. The New York Times bestseller list lags by two weeks so we don’t know the results there yet but it is expected to make it.

David Karsten suggests you read the book, while noting he is biased. He reminds us that, like any other book, most conversations you have about the book will be with people who did not read the book.

David Manheim: “An invaluable primer on one side of the critical and underappreciated current debate between those dedicated to building this new technology, and those who have long warned against it. If you haven’t already bought the book, you really should.”

Kelsey Piper offers a (no longer gated) review of IABIED, noting that the correct focus is on whether the title’s claim is true.

Steven Byrnes reviews the book, says he agrees with ~90% of it, and disagrees with some of the reasoning steps, but emphasizes that the conclusions are overdetermined.

Michael Nielsen gives the book five stars on Goodreads and recommends it, as it offers a large and important set of largely correct arguments, with the caveat that he sees significant holes in the central argument. His actual top objection is that even if we do manage to get a controlled and compliant ASI, that is still extremely destabilizing at best and fatal at worst. I agree with him this is not a minor quibble, and I worry about that scenario a lot whereas the book’s authors seem to consider it a happy problem they’d love to have. If anything it makes the book’s objections to ‘building it’ even stronger. He notes he does not have a good solution.

Romy Mireo thought the book was great for those thinking the problem through, but would have preferred the book offer a more streamlined way for a regular person to help, in a Beware Trivial Inconveniences kind of way. The book attempts to provide this in its online resources.

Nomads Vagabonds (in effect) endorses the first 2 parts of the book, but strongly opposes the policy asks in part 3 as unreasonable asks, demanding that if you are alarmed you need to make compromises to ‘help you win,’ if you think the apocalypse is coming you only propose things inside the Overton Window, that ‘even a 1% reduction in risk is worth pursuing.’

That makes sense if and only if solutions inside the Overton Window substantially improve your chances or outlook, and thus the opportunity outweighs the opportunity cost. The reason (as Nomads quotes) Ezra Klein says Democrats should compromise on various issues if facing what they see as a crisis is that this greatly raises the value of ‘win the election with a compromise candidate’ relative to losing. There’s tons of value to protect even if you give up ground on some issues.

Whereas the perspective of Yudkowsky and Soares is that measures ‘within the Overton Window’ matter very little in terms of prospective outcomes. So you’d much rather take a chance on convincing people to do what would actually work. It’s a math problem, including the possibility that busting the Overton Window helps the world achieve other actions you aren’t yourself advocating for.

The central metaphor Nomads uses is protecting against dragons, where the watchman insists upon only very strong measures and rejects cheaper ones entirely. Well, that depends on if the cheaper solutions actually protect you from dragons.

If you believe – as I do! – that lesser changes can make a bigger difference, then you should say so, and try to achieve those lesser changes while also noting you would support the larger ask. If you believe – as the authors do – that the lesser changes can’t make much difference, then you say that instead.

I would also note that yes, there are indeed many central historical examples of asking for things outside the Overton Window being the ultimately successful approach to creating massive social change. This ranges from the very good (such as abolishing slavery) to the very bad (such as imposing Communism or Fascism).

People disagree a lot on whether the book is well or poorly written, in various ways, at various different points in the book. Kelsey Piper thinks the writing in the first half is weak and the second half is stronger, whereas Timothy Lee (a skeptic of the book’s central arguments) thought the book was surprisingly well-written and the first few chapters were his favorites.

Peter Wildeford goes over the book’s central claims, finding them overconfident and warning about the downsides and costs of shutting AI development down. He’s a lot ‘less doomy’ than the book on many fronts. I’d consider this his central takeaway:

Peter Wildeford: Personally, I’m very optimistic about AGI/ASI and the future we can create with it, but if you’re not at least a little ‘doomer’ about this, you’re not getting it. You need profound optimism to build a future, but also a healthy dose of paranoia to make sure we survive it. I’m worried we haven’t built enough of this paranoia yet, and while Yudkowsky’s and Soares’s book is very depressing, I find it to be a much-needed missing piece.

This seems like a reasonable take.

Than Ruthenis finds the book weaker than he’d hoped; he finds section 3 strong but the first two weak. Darren McKee finds it a decent book, but is sad it was not better written and did not address some issues, and thus does not see it as an excellent book.

Buck is a strong fan of the first two sections and liked the book far more than he expected, calling them the best available explanation of the basic AI misalignment risk case for a general audience. He caveats that the book does not address counterarguments Buck thinks are critical, and that he would tell people to skip section 3. Buck’s caveats come down to expecting important changes between the world today and the world where ASI is developed, that potentially change whether everyone would probably die. I don’t see him say what he expects these differences to be? And the book does hold out the potential for us to change course, and indeed find such changes; it simply says this won’t happen soon or by default, which seems probably correct to me.

Nostream addresses the book’s arguments, agreeing that existential risk is present but making several arguments that the probability is much lower (they estimate 10%-20%), with the claim that if you buy any of the ‘things are different than the book says’ arguments, that would be sufficient to lower your risk estimate. This feels like a form of the conjunction fallacy fallacy, where there is a particular dangerous chain and thus breaking the chain at any point breaks a lot of the danger and returns us to things probably being fine.

The post focuses on the threat model of ‘an AI turns on humanity per se’ treating that as load bearing, which it isn’t, and treats the ‘alignment’ of current models as meaningful in ways I think are clearly wrong, and in general tries to draw too many conclusions from the nature of current LLMs. I consider all the arguments here, in the forms expressed, not new and well-answered by the book’s underlying arguments, although not always in a form as easy to pick up on as one might hope in the book text alone (book is non-technical and length-limited, and people understandably have cached thoughts and anchor on examples).

So overall I wished the post was better and made stronger arguments, including stronger forms of its arguments, but this is the right approach to take of laying out specific arguments and objections, including laying out up front that their own view includes unacceptably high levels of existential risk and also dystopia risk or catastrophic risk short of that. If I believed what Nostream believed I’d be in favor of not building the damn thing for a while, if there was a way to not build it for a while.

Gary Marcus offered his (gated) review, which he kindly pointed out I had missed in Wednesday’s roundup. He calls the book deeply flawed, but with much that is instructive and worth heeding.

Despite the review’s flaws and incorporation of several common misunderstandings, this was quite a good review, because Gary Marcus focuses on what is important, and his reaction to the book is similar to mine to his review. He notices that his disagreements with the book, while important and frustrating, should not be allowed to interfere with the central premise, which is the important thing to consider.

He starts off with this list of key points where he agrees with the authors:

Rogue AI is a possibility that we should not ignore. We don’t know for sure what future AI will do and we cannot rule out the possibility that it will go rogue.

We currently have no solution to the “alignment problem” of making sure that machines behave in human-compatible ways.

Figuring out solutions to the alignment problem is really, really important.

Figuring out a solution to the alignment problem is really, really hard.

Superintelligence might come relatively soon, and it could be dangerous.

Superintelligence could be more consequential than any other trend.

Governments should be more concerned.

The short-term benefits of AI (eg in terms of economics and productivity) may not be worth the long-term risks.

Noteworthy, none of this means that the title of the book — If Anyone Builds It, Everyone Dies — literally means everyone dies. Things are worrying, but not nearly as worrying as the authors would have you believe.

It is, however, important to understand that Yudkowsky and Soares are not kidding about the title.

Gary Marcus does a good job here of laying out the part of the argument that should be uncontroversial. I think all eight points are clearly true as stated.

Gary Marcus: Specifically, the central argument of the book is as follows:

Premise 1. Superintelligence will inevitably come — and when it does, it will inevitably be smarter than us.

Premise 2. Any AI that is smarter than any human will inevitably seek to eliminate all humans.

Premise 3. There is nothing that humans can do to stop this threat, aside from not building superintelligence in the first place.

The good news [is that the second and third] premisses are not nearly as firm as the authors would have it.

I’d importantly quibble here, most importantly on the second premise, although it’s good to be precise everywhere. My version of the book’s argument would be:

Superintelligence is a real possibility that could happen soon.

If built with anything like current techniques, any sufficiently superintelligent AI will effectively maximize some goal and seek to rearrange the atoms to that end, in ways unlikely to result in the continued existence of humans.

Once such a superintelligent AI is built, if we messed up, it will be too late to stop this from happening. So for now we have to not build such an AI.

He mostly agrees with the first premise and has a conservative but reasonable estimation of how soon it might arrive.

For premise two, the quibble matters because Gary’s argument focuses on whether the AI will have malice, pointing out existing AIs have not shown malice. Whereas the book does not see this as requiring the AI to have malice, nor does it expect malice. It expects merely indifference, or simply a more important priority, the same way humans destroy many things we are indifferent to, or that we actively like but that are in the way. For any given specified optimization target, given sufficient optimization power, the chance the best solution involves humans is very low. This has not been an issue with past AIs because they lacked sufficient optimization power, so such solutions were not viable.

This is a very common misinterpretation, both of the authors and the underlying problems. The classic form of the explanation is ‘The AI does not love you, the AI does not hate you, but you are composed of atoms it can use for something else.’

His objection to the third premise is that in a conflict, the ASI’s victory over humans seems possible but not inevitable. He brings a standard form of this objection, including quoting Moltke’s famous ‘no plan survives contact with the enemy’ and modeling this as the AI getting to make some sort of attack, which might not work, after which we can fight back. I’ve covered this style of objection many times, comprising a substantial percentage of words written in weekly updates, and am very confident it is wrong. The book also addresses it at length.

I will simply note that in context, even if on this point you disagree with me and the book, and agree with Gary Marcus, it does not change the conclusion, so long as you agree (as Marcus does) that such an ASI has a large chance of winning. That seems sufficient to say ‘well then we definitely should not build it.’ Similarly, while I disagree with his assumption we would unite and suddenly act ruthlessly once we knew the ASI was against us, I don’t see the need to argue that point.

Marcus does not care for the story in Part 2, or the way the authors use colloquial language to describe the AI in that story.

I’d highlight another common misunderstanding, which is important enough that the response bears repeating:

Gary Marcus: A separate error is statistical. Over and again, the authors describe scenarios that multiply out improbabilities: AIs decide to build biological weapons; they blackmail everyone in their way; everyone accepts that blackmail; the AIs do all this somehow undetected by the authorities; and so on. But when you string together a bunch of improbabilities, you wind up with really long odds.

The authors are very much not doing this. This is not ‘steps in the chain’ where the only danger is that the AI succeeds at every step, whereas if it ever fails we are safe. They bend over backwards, in many places, to describe how the AI uses forking paths, gaming out and attempting different actions, having backup options, planning for many moves not succeeding and so on.
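To make the arithmetic behind this disagreement concrete, here is a minimal sketch. It is a toy model, not anything taken from the book or from Marcus's review: the per-step probability, the number of steps, the number of fallback attempts, and the assumption that attempts are independent are all hypothetical choices made purely for illustration. It contrasts a single fixed chain, where every step must work on the first and only try, with a plan that has several fallback options at each step.

```python
def chain_success(p_step: float, n_steps: int) -> float:
    """Single fixed plan: every step must succeed on its only attempt.

    This is the 'multiply out the improbabilities' model.
    """
    return p_step ** n_steps


def forking_success(p_step: float, n_steps: int, attempts_per_step: int) -> float:
    """Plan with fallbacks: each step gets several independent attempts.

    A step fails only if every one of its attempts fails. Independence
    between attempts is a simplifying assumption of this toy model.
    """
    p_stage = 1.0 - (1.0 - p_step) ** attempts_per_step
    return p_stage ** n_steps


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to illustrate the shape of the effect.
    p, n = 0.3, 5
    print(f"single chain, no fallbacks: {chain_success(p, n):.4f}")
    for k in (1, 3, 10):
        print(f"{k:>2} attempts per step: {forking_success(p, n, k):.4f}")
```

With one attempt per step the two models coincide, and the overall odds are indeed long. As the number of fallback options grows, the overall chance of success climbs quickly, which is why it matters whether a scenario is a single brittle chain or one path among many. The sketch says nothing about what the real numbers are.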

The scenario also does not presuppose that no one realizes or suspects, in broad terms, what is happening. They are careful to say this is one way things might play out, and any given particular story you tell must necessarily involve a lot of distinct things happening.

If you think that at the first sign of trouble all the server farms get turned off? I do not believe you are paying enough attention to the world in 2025. Sorry, no, not even if there wasn’t an active AI effort to prevent this, or an AI predicting what actions would cause what reactions, and choosing the path that is most likely to work, which is one of the many benefits of superintelligence.

Several people have pointed out that the actual absurdity is that the AI in the story has to work hard and use the virus to justify it taking over. In the real world, we will probably put it in charge ourselves, without the AI even having to push us to do so. But in order to be more convincing, the story makes the AI’s life harder here and in many other places than it actually would be.

Gary Marcus closes with discussion of alternative solutions to building AIs that he wished the book explored more, including alternatives to LLMs. I’d say that this was beyond the scope of the book, and that ‘stop everyone from racing ahead with LLMs’ would be a likely necessary first step before we can pursue such avenues in earnest. Thus I won’t go into details here, other than to note that ‘axioms such as ‘avoid causing harm to humans’’ are known to not work, which was indeed consistently the entire point of Asimov’s robot novels and other stories, where he explores some but not all of the reasons why this is the case.

Again, I very much appreciated this review, which focused on what actually matters and clearly states what Marcus actually believes. More like this, please.

John Pressman agrees with most of the book’s individual statements and choices on how to present arguments, but disagrees with the thesis and many editorial and rhetorical choices. Ultimately his verdict is that the book is ‘okay.’ He states up top as ‘obvious’ many ‘huge if true’ statements about the world that I do not think are correct and definitely are not obvious. There’s also a lot of personal animus going on here, has been for years. I see two central objections to the book from Pressman:

- If you use parables then You Are Not Serious People, as in ‘Bluntly: A real urgent threat that demands attention does not begin with Once Upon a Time.’
  - Which he agrees is a style issue.
  - There are trade-offs here. It is hard for someone like Pressman to appreciate the challenges in engaging with average people who know nothing about these issues or facing off against the various stupid objections those people have (that John mostly agrees are deeply stupid).
- He thinks the alignment problems involved are much easier than Yudkowsky and Soares believe, including that we have ‘solved the human values loading problem,’ although he agrees that we are still very much on track to fail bigly.
  - I think (both here and elsewhere where he goes into more detail) he both greatly overstates his case and also deliberately presents the case in a hostile and esoteric manner. That makes engagement unnecessarily difficult.
  - I also think there’s important real things here and in related clusters of arguments, and my point estimate of difficulty is lower than the book’s, largely for reasons that are related to what Pressman is attempting to say.
  - As Pressman points out, even if he’s fully right, it looks pretty grim anyway.

Aella (to be fair a biased source here) offers a kind of meta-review.

Aella: man writes book to warn the public about asteroid heading towards earth. his fellow scientists publish thinkpieces with stuff like ‘well his book wasn’t very good. I’m not arguing about the trajectory but he never addresses my objection that the asteroid is actually 20% slower’

I think I’d feel a lot better if more reviews started with “first off, I am also very worried about the asteroid and am glad someone is trying to get this to the public. I recognize I, a niche enthusiast, am not the target of this book, which is the general public and policy makers. Regardless of how well I think the book accomplishes its mission, it’s important we all band together and take this very seriously. That being said, here’s my thoughts/criticisms/etc.

Raymond Arnold responds to various complaints about the book, especially:

- Claims the book is exaggerating. It isn’t.
  - There is even an advertising campaign whose slogan is ‘we wish we were exaggerating,’ and I verify they do wish this.
  - It is highly reasonable to describe the claims as overstated or false; indeed in some places I agree with you. But as Kelsey says, focus on: What is true?
- Claims the authors are overconfident, including claims this is bad for credibility.
  - I agree that the authors are indeed overconfident. I hope I’ve made that clear.
  - However I think these are reasonable mistakes in context, that the epistemic standards here are much higher than those of most critics, and also that people should say what they believe, and that the book is careful not to rely upon this overconfidence in its arguments.
  - Fears about credibility or respectability, I believe, motivate many attacks on the book. I would urge everyone attacking the book for this reason (beyond noting the specific worry) to stop and take a long, hard look in the mirror.
- Complaints that it sucks that ‘extreme’ or eye-catching claims will be more visible, whereas claims one thinks are more true are less visible and less discussed.
  - Yeah, sorry, world works the way it does.
  - The eye-catching claims open up the discussion, where you can then make clear you disagree with the full claim but endorse a related other claim.
  - As Raymond says, this is a good thing to do: if you think the book is wrong about something, write and say why, and how you think about it.

He provides extensive responses to the arguments and complaints he has seen, using his own framings, in the extended thread.

Then he fleshes out his full thoughts in a longer LessWrong post, The Title Is Reasonable, that lays out these questions at length. It also contains some good comment discussion, including by Nate Soares.

I agree with him that it is a reasonable strategy to have both those who make big asks outside the Overton Window because they believe those asks are necessary, and also those who make more modest asks because they feel those are valuable and achievable. I also agree with him that this is a classic, often successful strategy.

Yes, people will try to tar all opposition with the most extreme view and extreme ask. You see it all the time in anything political, and there are plenty of people doing this already. But it is not obvious this works, and in any case it is priced in. One can always find such a target, or simply lie about one or make one up. And indeed, this is the path a16z and other similar bad faith actors have chosen time and again.

James Miller: I’m an academic who has written a lot of journalistic articles. If Anyone Builds It has a writing style designed for the general public, not LessWrong, and that is a good thing and a source of complaints against the book.

David Manheim notes that the book is non-technical by design and does not attempt to bring readers up to speed on the last decade of literature. As David notes, the book reflects on the new information but is not in any position to cover or discuss all of that in detail.

Dan Schwartz offers arguments for using evolution-based analogies for deep learning.

Clara Collier of Asterisk notes that She Is Not The Target for the book so it is hard to evaluate its effectiveness on its intended audience, but she is disappointed in what she calls the authors’ failures to update.

I would turn this back at Clara here, and say she seems to have failed to update her priors on what the authors are saying. I found her response very disappointing.

I don’t agree with Rob Bensinger’s view that this was one of the lowest-quality reviews. It’s more that it was the most disappointing given the usual quality and epistemic standards of the source. The worst reviews were, as one would expect, from sources that are reliably terrible in similar spots, but that is easy to shrug off.

- The book very explicitly does not depend on the foom, there only being one AI, or other elements she says it depends on. Indeed in the book’s example story the AI does not foom. It feels like arguing with a phantom.
  - Also see Max Harms responding on this here, where he lays out that the foom is not a ‘key plank’ in the MIRI story of why AI is dangerous.
  - He also points out that there being multiple similarly powerful AIs by default makes the situation more dangerous rather than less dangerous, including in AI 2027, because it reduces margin for error and ability to invest in safety. If you’ve done a tabletop exercise based on AI 2027, this becomes even clearer.
- Her first charge is that a lot of AI safety people disagree with the MIRI perspective on the problem, so the subtext of the book is that the authors must think all those other people are idiots. This seems like a mix of an argument for epistemic modesty and a claim that Yudkowsky and Soares haven’t considered these other opinions properly, and are disrespecting those who hold them? I would push back strongly on all of that.
- She raises the objection that the book has an extreme ask that ‘distracts’ from other asks, a term also used by MacAskill. Yes, the book asks for an extreme thing that I don’t endorse, but that’s what they think is necessary, so they should say so.

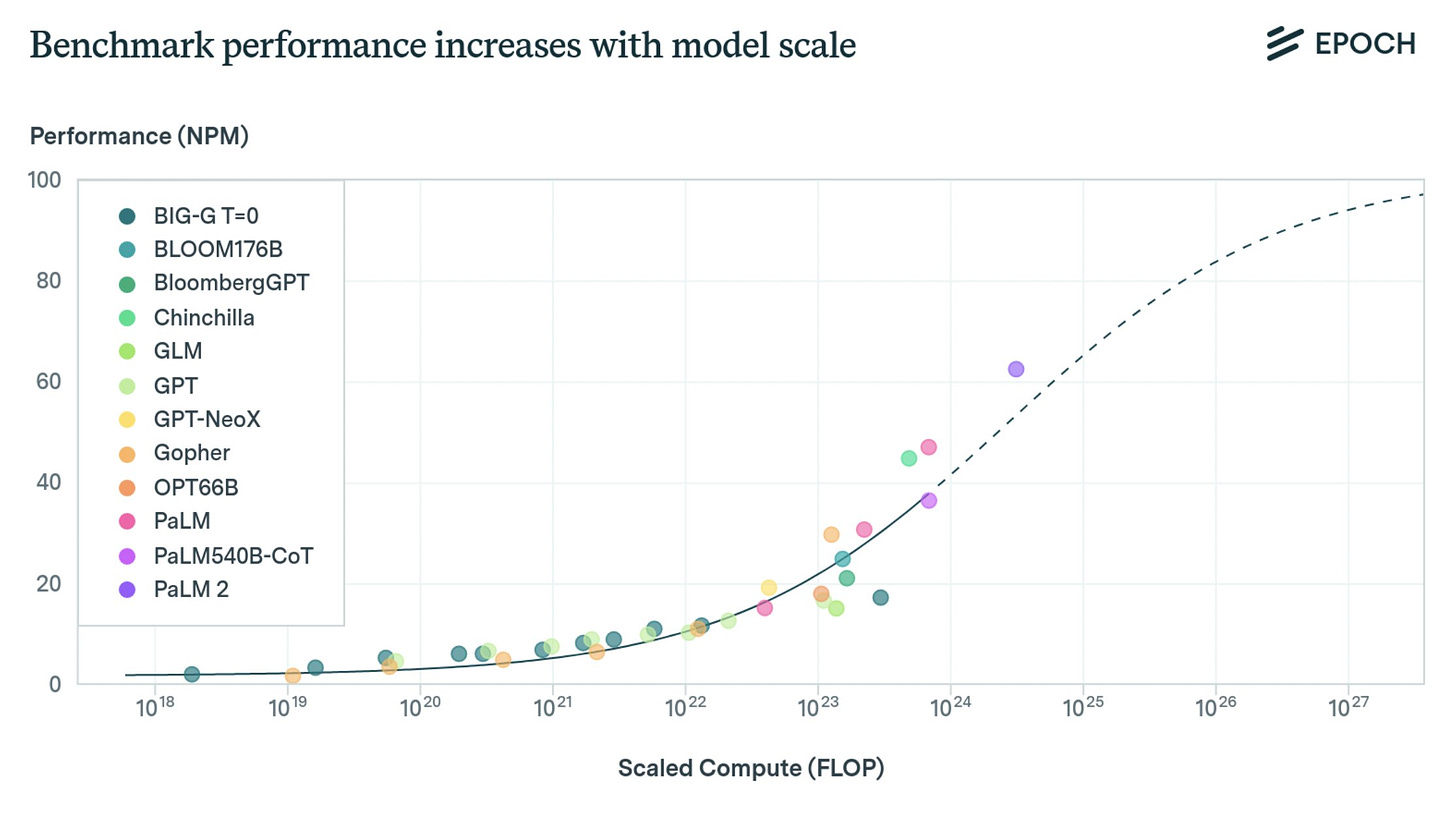

- She asks why the book doesn’t spend more time explaining why an intelligence explosion is likely to occur. The answer is the book is explicitly arguing a conditional, what happens if it does occur, and acknowledges that it may or may not occur, or occur on any given time frame. She also raises the ‘but progress has been continuous which argues against an intelligence explosion’ argument, except continuous progress does not argue against a future explosion. Extend curves.
- She objects that the authors reach the same conclusions about LLMs that they previously reached about other AI systems. Yes, they do. But, she says, these things are different. Yes, they are, but not in ways that change the answer, and the book (and supplementary material, and their other writings) explain why they believe this. Reasonable people can disagree. I don’t think, from reading Clara’s objections along these lines, that she understands the central arguments being made by the authors (over the last 20 years) on these points.
- She says if progress will be ‘slow and continuous’ we will have more than one shot on the goal. For sufficiently generous definitions of slow and continuous this might be true, but there has been a lot of confusion about ‘slow’ versus ‘fast.’ Current observed levels of ‘slow’ are still remarkably fast, and effectively mean we only get one shot, although we are getting tons of very salient and clear warning shots and signs before we take that one shot. Of course, we’re ignoring the signs.
- She objects that ‘a future full of flourishing people is not the best, most efficient way to fulfill strange alien purposes’ is stated as a priori obvious. But it is very much a priori obvious. I will bite this bullet and die on this hill.

There are additional places one can quibble. Another good response is the full post from Max Harms, which includes many additional substantive criticisms. One key additional clarification is that the book is not claiming that we will fail to learn a lot about AI from studying current AI, only that this ‘a lot’ will be nothing like sufficient. It can be difficult to reconcile ‘we will learn a lot of useful things’ with ‘we will predictably learn nothing like enough of the necessary things.’

Clara responded in the comments, and stands by the claim that the book’s claims require a major discontinuity in capabilities, and that gradualism would imply multiple meaningfully distinct shots on goal.

Jeffrey Ladish also has a good response to some of Collier’s claims, which he finishes like this:

Jeffrey Ladish: I do think you’re nearby to a criticism that I would make about Eliezer’s views / potential failures to update: Which is the idea that the gap between a village idiot and Einstein is small and we’ll blow through it quite fast. I think this was an understandable view at the time and has turned out to be quite wrong. And an implication of this is that we might be able to use agents that are not yet strongly superhuman to help us with interpretability / alignment research / other useful stuff to help us survive.

Anyway, I appreciate you publishing your thoughts here, but I wanted to comment because I didn’t feel like you passed the authors ITT, and that surprised me.

Peter Wildeford: I agree that the focus on FOOM in this review felt like a large distraction and missed the point of the book.

The fact that we do get a meaningful amount of time with AIs one could think of as between village idiots and Einsteins is indeed a major source of hope, although I understand why Soares and Yudkowsky do not see as much hope here, and that would be a good place to poke. The gap argument was still more correct than incorrect, in the sense that we will likely only get a period of years in that window rather than decades, and most people are making various forms of the opposite mistake and not understanding that above-Einstein levels of intelligence are Coming Soon. But years or even months can do a lot for you if you use them well.

Will MacAskill offers a negative review, criticizing the arguments and especially parallels to evolution as quite bad, although he praises the book for plainly saying what its authors actually believe, and for laying out ways in which the tech we saw was surprising, and for the quality of the analogies.

I found Will’s review quite disappointing but unsurprising. Here are my responses:

- The ones about evolution parallels seem robustly answered by the book and also repeatedly elsewhere by many.
- Will claims the book is relying on a discontinuity of capability. It isn’t. They are very clear that it isn’t. The example story containing a very mild form of [X] does not mean one’s argument relies on [X], although it seems impossible for there not to be some amount of jumps in capability, and we have indeed seen such jumps.
- The ones about ‘types of misalignment’ seem at best deeply confused. I think he’s saying humans will stay in control because AIs will be risk averse, so they’ll be happy to settle for a salary rather than take over and thus make this overwhelmingly pitiful deal with us? Whereas the fact that imperfect alignment is indeed catastrophic misalignment in the context of a superhuman AI is the entire thesis of the book, covered extensively, in ways Will doesn’t engage with here?
  - Intuition pump that might help: Are humans risk averse?
- Criticism of the proposal as unlikely to happen. Well, not with that attitude. If one thinks that this is what it takes, one should say so. If not, not.

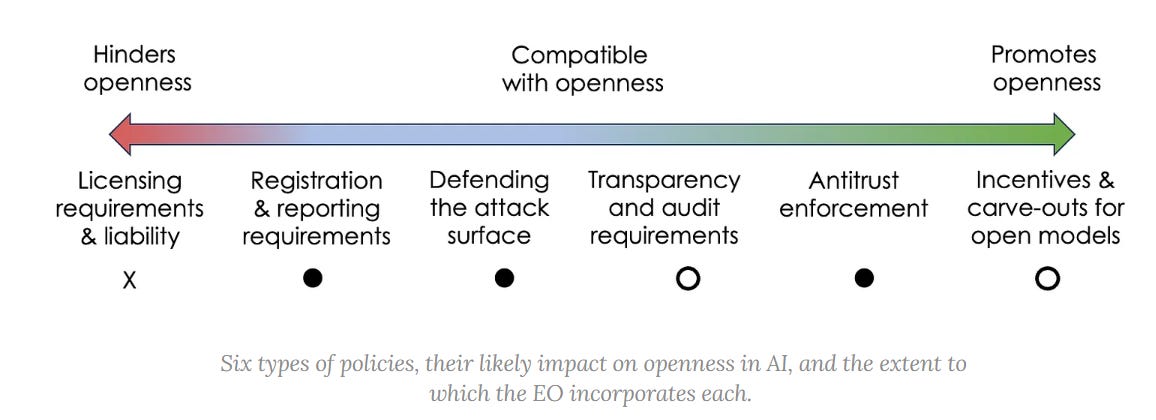

- Criticism of the proposal as unnecessary or even unhelpful, and ‘distracting’ from other things we could do. Standard arguments here. Not much to say, other than to note that the piecemeal ban proposals he suggests don’t technically work.
- Criticism of the use of fiction and parables ‘as a distraction.’ Okie dokie.
- Claim that the authors should have updated more in light of developments in ML. This is a reasonable argument one can make, but people who claim (including Clara and also Tyler below) that the book’s arguments are ‘outdated’ are simply incorrect. The arguments and authors do take such information into account, and have updated in some ways, but do not believe the new developments change the central arguments or likely future ultimate path. And they explain why. You are encouraged to disagree with their reasoning if you find it wanting.

Zack Robinson of Anthropic’s Long Term Benefit Trust has a quite poor Twitter thread attacking the book, following the MacAskill style principle of attacking those whose tactics and messages are not cooperating with the EA-brand-approved messages designed to seek movement growth, respectability and power and donations, which in my culture we call a strategy of instrumental convergence.

The particular arguments in the thread are quite bad and mischaracterize the book. He says the book presents doom as a foregone conclusion, which is not how contingent predictions work. He uses pure modesty and respectability arguments for ‘accepting uncertainty’ in order to ‘leave room for AI’s transformative benefits,’ which is simply a non-sequitur. He warns this ‘blinds us to other serious risks,’ the ‘your cause of us all not dying is a distraction from the real risks’ argument, which again simply is not true; there is no conflict here. His statement in this linked Tweet about the policy debate being only a binary false choice is itself clearly false, and he must know this.

The whole thing is in what reads as workshopped corporate speak.

The responses to the thread are very on point, and Rob Bensinger in particular pulls this very important point of emphasis:

Like Buck and those who responded to express their disappointment, I find this thread unbecoming of someone on the LTBT, and as long as he remains there I can no longer consider him a meaningful voice for existential risk worries there, which substantially lowers my estimate of Anthropic’s likely behaviors.

I join several respondents in questioning whether Zack has read the book, a question he did not respond to. One can even ask if he has logically parsed its title.

Similarly, given he is the CEO of the Center for Effective Altruism, I must adjust there, as well, especially as this is part of a pattern of similar strategies.

I think this line is telling in multiple ways:

Zack Robinson: I grew up participating in debate, so I know the importance of confidence. But there are two types: epistemic (based on evidence) and social (based on delivery). Despite being expressed in self-assured language, the evidence for imminent existential risk is far from airtight.

- Zack is debating in this thread, as in trying to make his position win and get ahead via his arguments, rather than trying to improve our epistemics.
- Contrary to the literal text, Zack is looking at the social implications of appearing confident, and disapproving, rather than primarily challenging the epistemics. Indeed, the only arguments he makes against confidence here are from modesty:
  - Argument from consequences: Thinking confidently causes you to get dismissed (maybe) or to ignore other risks (no) and whatnot.
  - Argument from consensus: Others believe the risk is lower. Okie dokie. Noted.

Tyler Cowen does not offer a review directly. Instead, in an assorted links post, he strategically links, in a fashion dismissive of the book (which he does not name), to curated negative reviews and comments, including MacAskill and Collier above.

As you would expect, many others not named in this post are trotting out all the usual Obvious Nonsense arguments about why superintelligence would definitely turn out fine for the humans, such as that ASIs would ‘freely choose to love humans.’ Do not be distracted by noise.

Peter Wildeford: They made the movie but in real life.

Simeon: To be fair on the image they’re not laughing etc.

Jacques: tbf, he did not ask, “but what does this mean for jobs?”

Will: You know it’s getting real when Yud has conceded the issue of hats.
