Nation-state hackers deliver malware from “bulletproof” blockchains

Hacking groups, at least one of which works on behalf of the North Korean government, have found a new and inexpensive way to distribute malware from “bulletproof” hosts: stashing it on public cryptocurrency blockchains.

In a Thursday post, members of the Google Threat Intelligence Group said the technique provides the hackers with their own “bulletproof” host, a term that describes cloud platforms that are largely immune from takedowns by law enforcement and pressure from security researchers. More traditionally, these hosts are located in countries without treaties agreeing to enforce criminal laws from the US and other nations. These services often charge hefty sums and cater to criminals spreading malware or peddling child sexual abuse material and wares sold in crime-based flea markets.

Next-gen, DIY hosting that can’t be tampered with

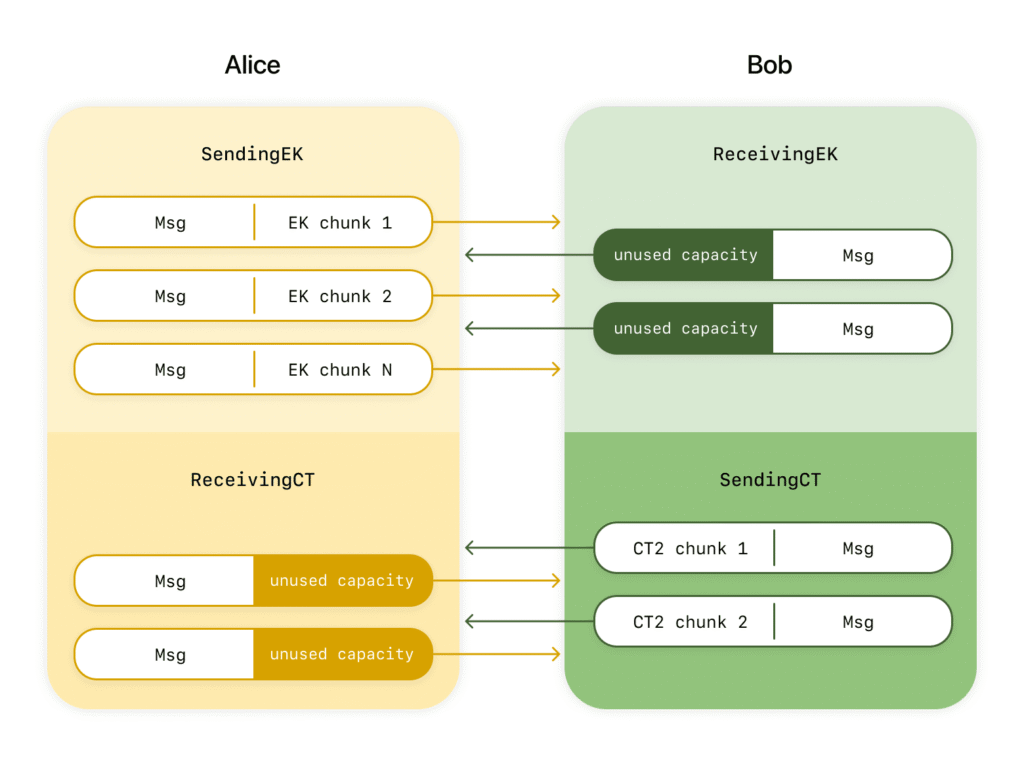

Since February, Google researchers have observed two groups turning to a newer technique to infect targets with credential stealers and other forms of malware. The method, known as EtherHiding, embeds the malware in smart contracts, which are essentially apps that reside on blockchains for Ethereum and other cryptocurrencies. Two or more parties then enter into an agreement spelled out in the contract. When certain conditions are met, the apps enforce the contract terms in a way that, at least theoretically, is immutable and independent of any central authority.

“In essence, EtherHiding represents a shift toward next-generation bulletproof hosting, where the inherent features of blockchain technology are repurposed for malicious ends,” Google researchers Blas Kojusner, Robert Wallace, and Joseph Dobson wrote. “This technique underscores the continuous evolution of cyber threats as attackers adapt and leverage new technologies to their advantage.”

EtherHiding offers a wide array of advantages over more traditional means of delivering malware, which include bulletproof hosting and compromised servers:

- The decentralization prevents takedowns of the malicious smart contracts because the mechanisms in the blockchains bar the removal of all such contracts.

- Similarly, the immutability of the contracts prevents anyone from removing or tampering with the malware.

- Transactions on Ethereum and several other blockchains are effectively anonymous, protecting the hackers’ identities.

- Retrieval of malware from the contracts leaves no trace of the access in event logs, providing stealth.

- The attackers can update malicious payloads at any time.
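
The stealth point in the list above is easier to see at the protocol level. The article does not say which method the attackers use to fetch payloads, so the following is only a sketch under one assumption: that retrieval goes through a read-only request such as `eth_call`, a standard Ethereum JSON-RPC method that a node executes locally without creating a transaction. The helper names, the placeholder address, and the empty call data are hypothetical and chosen purely for illustration; nothing is sent over the network.

```python
import json

# Standard Ethereum JSON-RPC methods that submit a transaction. Once mined,
# such a transaction becomes part of the public chain history.
STATE_CHANGING_METHODS = {"eth_sendTransaction", "eth_sendRawTransaction"}


def build_read_only_call(contract_address: str, call_data: str, request_id: int = 1) -> dict:
    """Build (but do not send) an eth_call request body.

    eth_call asks a node to execute contract code and return the result
    without creating a transaction, so nothing is written to the chain.
    """
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_call",
        "params": [{"to": contract_address, "data": call_data}, "latest"],
    }


def leaves_on_chain_record(request: dict) -> bool:
    """Return True if the request would submit a transaction to the network."""
    return request.get("method") in STATE_CHANGING_METHODS


# Hypothetical placeholder values, not a real contract or function selector.
PLACEHOLDER_ADDRESS = "0x" + "00" * 20
PLACEHOLDER_DATA = "0x"

read_request = build_read_only_call(PLACEHOLDER_ADDRESS, PLACEHOLDER_DATA)
print(json.dumps(read_request, indent=2))
print("Leaves on-chain record:", leaves_on_chain_record(read_request))
```

Under that assumption, defenders cannot expect the blockchain itself to show who read a contract. The more promising place to look is on the network side, for example in proxy logs that capture outbound JSON-RPC requests to public blockchain endpoints.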
