Bogus research is undermining good science, slowing lifesaving research

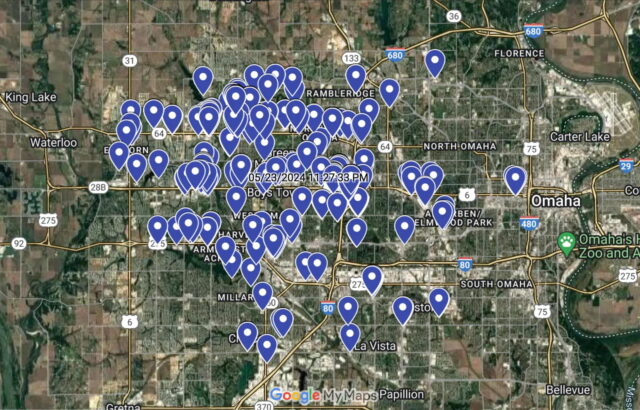

In 2022, Byrne and colleagues, including two of us, found that suspect genetics research, despite not immediately affecting patient care, informs scientists’ work, including clinical trials. But publishers are often slow to retract tainted papers, even when alerted to obvious fraud. We found that 97 percent of the 712 problematic genetics research articles we identified remained uncorrected.

Potential solutions

The Cochrane Collaboration has a policy excluding suspect studies from its analyses of medical evidence and is developing a tool to spot problematic medical trials. And publishers have begun to share data and technologies among themselves to combat fraud, including image fraud.

Technology startups are also offering help. The website Argos, launched in September 2024 by Scitility, an alert service based in Sparks, Nevada, allows authors to check collaborators for retractions or misconduct. Morressier, a scientific conference and communications company in Berlin, offers research integrity tools. Paper-checking tools include Signals, by London-based Research Signals, and Clear Skies’ Papermill Alarm.

But Alam acknowledges that the fight against paper mills won’t be won as long as the booming demand for papers remains.

Today’s commercial publishing is part of the problem, Byrne said. Cleaning up the literature is a vast and expensive undertaking. “Either we have to monetize corrections such that publishers are paid for their work, or forget the publishers and do it ourselves,” she said.

There’s a fundamental bias in for-profit publishing: “We pay them for accepting papers,” said Bodo Stern, a former editor of the journal Cell and chief of Strategic Initiatives at Howard Hughes Medical Institute, a nonprofit research organization and funder in Chevy Chase, Maryland. With more than 50,000 journals on the market, bad papers shopped around long enough eventually find a home, Stern said.

To prevent this, we could stop paying journals for accepting papers and instead treat journals as public utilities that serve a greater good. "We should pay for transparent and rigorous quality-control mechanisms," he said.

Peer review, meanwhile, “should be recognized as a true scholarly product, just like the original article,” Stern said. And journals should make all peer-review reports publicly available, even for manuscripts they turn down.

This article is republished from The Conversation under a Creative Commons license. This is a condensed version. To learn more about how fraudsters around the globe use paper mills to enrich themselves and harm scientific research, read the full version.

Frederik Joelving is a contributing editor at Retraction Watch; Cyril Labbé is a professor of computer science at the Université Grenoble Alpes (UGA); and Guillaume Cabanac is a professor of computer science at Institut de Recherche en Informatique de Toulouse.
