You think it’s bad now? Oh, you have no idea. In his talks with Ben Thompson and Dwarkesh Patel, Zuckerberg lays out his vision for our AI future.

I thank him for his candor. I’m still kind of boggled that he said all of it out loud.

We will start with the situation now. How are things going on Facebook in the AI era?

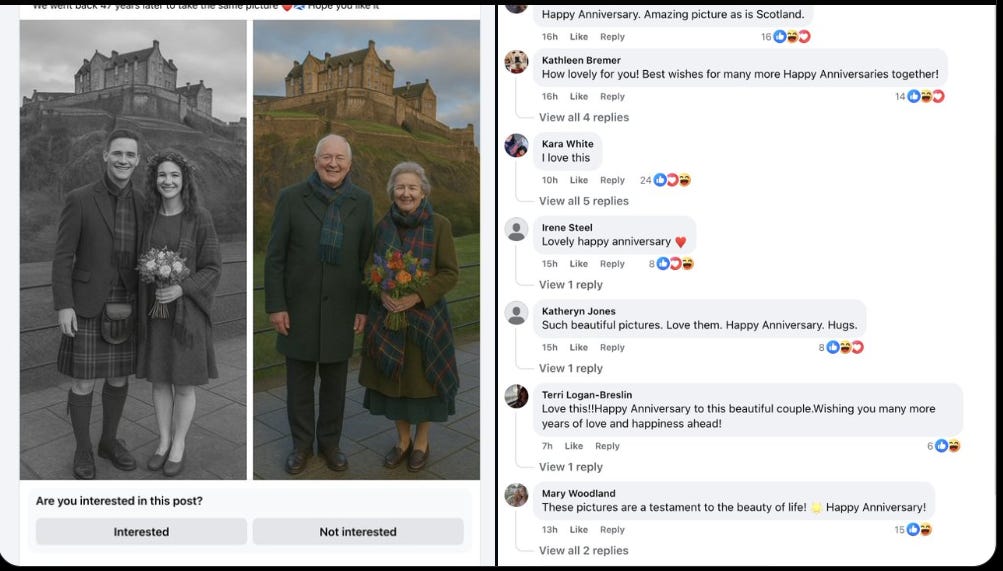

Oh, right.

Sakib: Again, it happened again. Opened Facebook and I saw this. I looked at the comments and they’re just unsuspecting boomers congratulating the fake AI gen couple😂

Deepfates: You think those are real boomers in the comments?

This continues to be 100% Zuckerberg’s fault, and 100% an intentional decision.

The algorithm knows full well what kind of post this is. It still floods people with them, especially if you click even once. If they wanted to stop it, they easily could.

There are also the rather insane and deeply embarrassing AI bot accounts they have tried out on Facebook and Instagram.

Compared to his vision of the future? You ain’t seen nothing yet.

Ben Thompson interviewed Mark Zuckerberg, centering on business models.

It was like if you took a left wing caricature of why Zuckerberg is evil, combined it with a left wing caricature about why AI is evil, and then fused them into their final form. Except it’s coming directly from Zuckerberg, as explicit text, on purpose.

It’s understandable that many leave such interviews and related stories saying this:

Ewan Morrison: Big tech atomises you, isolates you, makes you lonely and depressed – then it rents you an AI friend, and AI therapist, an AI lover.

Big tech are parasites who pretend they are here to help you.

When asked what he wants to use AI for, Zuckerberg’s primary answer is advertising, in particular an ‘ultimate black box’ where you ask for a business outcome and the AI does what it takes to make that outcome happen. I leave all the ‘do not want’ and ‘misalignment maximalist goal out of what you are literally calling a black box, film at 11 if you need to watch it again’ and ‘general dystopian nightmare’ details as an exercise to the reader. He anticipates that advertising will then grow from the current 1%-2% of GDP to something more, and Thompson is ‘there with’ him, ‘everyone should embrace the black box.’

His number two use is ‘growing engagement on the customer surfaces and recommendations.’ As in, advertising by another name, and using AI in predatory fashion to maximize user engagement and drive addictive behavior.

In case you were wondering if it stops being this dystopian after that? Oh, hell no.

Mark Zuckerberg: You can think about our products as there have been two major epochs so far.

The first was you had your friends and you basically shared with them and you got content from them and now, we’re in an epoch where we’ve basically layered over this whole zone of creator content.

So the stuff from your friends and followers and all the people that you follow hasn’t gone away, but we added on this whole other corpus around all this content that creators have that we are recommending.

Well, the third epoch is I think that there’s going to be all this AI-generated content…

…

So I think that these feed type services, like these channels where people are getting their content, are going to become more of what people spend their time on, and the better that AI can both help create and recommend the content, I think that that’s going to be a huge thing. So that’s kind of the second category.

…

The third big AI revenue opportunity is going to be business messaging.

…

And the way that I think that’s going to happen, we see the early glimpses of this because business messaging is actually already a huge thing in countries like Thailand and Vietnam.

So what will unlock that for the rest of the world? It’s like, it’s AI making it so that you can have a low cost of labor version of that everywhere else.

Also he thinks everyone should have an AI therapist, and that people want more friends so AI can fill in for the missing humans there. Yay.

PoliMath: I don’t really have words for how much I hate this

But I also don’t have a solution for how to combat the genuine isolation and loneliness that people suffer from

AI friends are, imo, just a drug that lessens the immediate pain but will probably cause far greater suffering

Well, I guess the fourth one is the normal ‘everyone use AI now,’ at least?

And then, the fourth is all the more novel, just AI first thing, so like Meta AI.

He also blames Llama-4’s terrible reception on user error in setup, and says they now offer an API so people have a baseline implementation to point to, and says essentially ‘well of course we built a version of Llama-4 specifically to score well on Arena, that only shows off how easy it is to steer it, it’s good actually.’ Neither of them, of course, even bothers to mention any downside risks or costs of open models.

The killer app of Meta AI is that it will know all about all your activity on Facebook and Instagram and use it for (or against) you. It will also let you essentially ‘talk to the algorithm,’ which I do admit is kind of interesting, but I notice Zuckerberg didn’t mention an option to tell it to alter the algorithm, and Thompson didn’t ask.

There is one area where I like where his head is at:

I think one of the things that I’m really focused on is how can you make it so AI can help you be a better friend to your friends, and there’s a lot of stuff about the people who I care about that I don’t remember, I could be more thoughtful.

There are all these issues where it’s like, “I don’t make plans until the last minute”, and then it’s like, “I don’t know who’s around and I don’t want to bug people”, or whatever. An AI that has good context about what’s going on with the people you care about, is going to be able to help you out with this.

That is… not how I would implement this kind of feature, and indeed the more details you read the more Zuckerberg seems determined to do even the right thing in the most dystopian way possible, but as long as it’s fully opt-in (if not, wowie moment of the week) then at least we’re trying at all.

Also interviewing Mark Zuckerberg is Dwarkesh Patel. There was good content here; Zuckerberg in many ways continues to be remarkably candid. But it wasn’t as dense or hard-hitting as many of Patel’s other interviews.

One key difference between the interviews is that when Zuckerberg lays out his dystopian vision, you get the sense that Thompson is for it, whereas Patel is trying to express that maybe we should be concerned. Another is that Patel notices that there might be more important things going on, whereas to Thompson nothing could be more important than enhancing ad markets.

When asked what changed since Llama 3, Zuckerberg leads off with the ‘personalization loop.’

Zuckerberg still claims Llama 4 Scout and Maverick are top notch. Okie dokie.

He doubles down on ‘open source will become most used this year’ and that this year has been Great News For Open Models. Okie dokie.

His heart’s clearly not in claiming it’s a good model, sir. His heart is in it being a good model for Meta’s particular commercial purposes and ‘product value’ as per people’s ‘revealed preferences.’ Those are the modes he talked about with Thompson.

He’s very explicit about this. OpenAI and Anthropic are going for AGI and a world of abundance, with Anthropic focused on coding and OpenAI towards reasoning. Meta wants fast, cheap, personalized, easy to interact with all day, and (if you add what he said to Thompson) to optimize feeds and recommendations for engagement, and to sell ads. It’s all for their own purposes.

He says Meta is specifically creating AI tools to write their own code for internal use, but I don’t understand what makes that different from a general AI coder, or why they think their version is going to be better than using Claude or Gemini. This feels like some combination of paranoia and bluff.

Thus, at this point Meta seems to be using the open model approach as a recruiting or marketing tactic? I don’t know what else it’s actually doing for them.

As Dwarkesh notes, Zuckerberg is basically buying the case for superintelligence and the intelligence explosion, then ignoring it to form an ordinary business plan, and of course to continue to have their safety plan be ‘lol we’re Meta’ and release all their weights.

I notice I am confused about why their tests need hundreds of thousands or millions of people to reach statistical significance. The impacts must be very small, and the statistical techniques they’re using don’t seem great. But it is also telling that the first experiments he thinks to run with AI are ones run on his users.
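To make that inference concrete: under the textbook sample-size approximation for a two-arm test, the number of users needed per arm scales with the inverse square of the effect size. So needing on the order of a hundred thousand users per arm implies effects of roughly a hundredth of a standard deviation. This is a minimal sketch assuming a simple two-sided z-test on means at 5% significance and 80% power; nothing in the interviews says how Meta actually runs its experiments, so `per_arm_sample_size` and its defaults are hypothetical illustrations, not their method.

```python
import math
from statistics import NormalDist


def per_arm_sample_size(effect_size: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per arm for a two-sided, two-sample z-test on means.

    Hypothetical illustration, not Meta's methodology. effect_size is the
    standardized difference (difference in means divided by the standard
    deviation). Uses n = 2 * (z_{1 - alpha/2} + z_{power})^2 / d^2.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = std_normal.inv_cdf(power)  # about 0.84 for 80% power
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


# Shrinking the effect tenfold multiplies the required sample roughly a hundredfold.
for d in (0.1, 0.01, 0.001):
    print(f"effect size {d}: ~{per_arm_sample_size(d):,} users per arm")
```

With these defaults, an effect of 0.1 standard deviations needs about 1,570 users per arm, 0.01 needs about 157,000, and 0.001 needs about 15.7 million. Hence the inference that tests requiring hundreds of thousands or millions of people are chasing very small effects, unless the analysis itself is inefficient.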

In general, Zuckerberg seems to be thinking he’s running an ordinary dystopian tech company doing ordinary dystopian things (except he thinks they’re not dystopian, which is why he talks about them so plainly and clearly) while other companies do other ordinary things, and has put all the intelligence explosion related high weirdness totally out of his mind or minimized it to specific use cases, even though he intellectually knows that isn’t right.

He, CEO of Meta, says people use what is valuable to them, that people are smart and know what is valuable in their lives, and that when you think otherwise you’re usually wrong. Cue the laugh track.

The first named use case is talking through difficult conversations they need to have. I do think that’s actually a good use case candidate, but it’s also easy to pervert.

(29:40) The friend quote: The average American only has three friends ‘but has demand for meaningfully more, something like 15… They want more connection than they have.’ His core prediction is that AI connection will be a complement to human connection rather than a substitute.

I tentatively agree with Zuckerberg, if and only if the AIs in question are engineered (by the developer, user or both, depending on context) to be complements rather than substitutes. You can build them that way.

However, when I see Meta’s plans, it seems they are steering it the other way.

Zuckerberg is making a fully general defense of adversarial capitalism and attention predation – if people are choosing to do something, then later we will see why it turned out to be valuable for them and why it adds value to their lives, including virtual therapists and virtual girlfriends.

But this proves (or implies) far too much as a general argument. It suggests full anarchism and zero consumer protections. It applies to heroin or joining cults or being in abusive relationships or marching off to war and so on. We all know plenty of examples of self-destructive behaviors. Yes, the great classical liberal insight is that mostly you are better off if you let people do what they want, and getting in the way usually backfires.

If you add AI into the mix, especially AI that moves beyond a ‘mere tool,’ and you consider highly persuasive AIs and algorithms, asserting ‘whatever the people choose to do must be benefiting them’ is Obvious Nonsense.

I do think virtual therapists have a lot of promise as value adds, if done well. They also carry great potential for harm, if done poorly or maliciously.

Dwarkesh points out the danger of technology reward hacking us, and again Zuckerberg just triples down on ‘people know what they want.’ People wouldn’t let there be things constantly competing for their attention, so the future won’t be like that, he says. Is this a joke?

I do get that the right way to design AI-AR glasses is as great glasses that also serve as other things when you need them and don’t flood your vision, and that the wise consumer will pay extra to ensure it works that way. But where is this trust in consumers coming from? Has Zuckerberg seen the internet? Has he seen how people use their smartphones? Oh, right, he’s largely directly responsible.

Frankly, the reason I haven’t tried Meta’s glasses is that Meta makes them. They do sound like a nifty product otherwise, if execution is good.

Zuckerberg is a fan of various industrial policies, praising the export controls and calling on America to help build new data centers and related power sources.

Zuckerberg asks, would others be doing open models if Meta wasn’t doing it? Aren’t they doing this because otherwise ‘they’re going to lose?’

Do not flatter yourself, sir. They’re responding to DeepSeek, not you. In particular, they’re doing it to squash the idea that R1 means DeepSeek or China is ‘winning.’ Meta’s got nothing to do with it, and you’re not pushing things in the open direction in a meaningful way at this point.

His case for why the open models need to be American is that our models embody an American view of the world in a way that Chinese models don’t. Even if you agree that is true, it doesn’t answer Dwarkesh’s point that everyone can easily switch models whenever they want. Zuckerberg then does mention the potential for backdoors, which is a real concern, since ‘open model’ only means open weights. They’re not actually open source, so you can’t rule out a backdoor.

Zuckerberg says the point of Llama Behemoth will be the ability to distill it. So making that an open model is specifically so that the work can be distilled. But that’s something we don’t want the Chinese to do, asks Padme?

And then we have a section on ‘monetizing AGI’ where Zuckerberg indeed goes right to ads and arguing that ads done well add value. Which they must, since consumers choose to watch them, I suppose, per his previous arguments?

To be fair, yes, it is hard out there. We all need a friend and our options are limited.

Roman Helmet Guy (reprise from last week): Zuckerberg explaining how Meta is creating personalized AI friends to supplement your real ones: “The average American has 3 friends, but has demand for 15.”

Daniel Eth: This sounds like something said by an alien from an antisocial species that has come to earth and is trying to report back to his kind what “friends” are.

Sam Ro: imagine having 15 friends.

Modest Proposal (quoting Chris Rock): “The Trenchcoat Mafia. No one would play with us. We had no friends. The Trenchcoat Mafia. Hey I saw the yearbook picture it was six of them. I ain’t have six friends in high school. I don’t got six friends now.”

Kevin Roose: The Meta vision of AI — hologram Reelslop and AI friends keeping you company while you eat breakfast alone — is so bleak I almost can’t believe they’re saying it out loud.

Exactly how dystopian are these ‘AI friends’ going to be?

GFodor.id (being modestly unfair): What he’s not saying is those “friends” will seem like real people. Your years-long friendship will culminate when they convince you to buy a specific truck. Suddenly, they’ll blink out of existence, having delivered a conversion to the company who spent $3.47 to fund their life.

Soible_VR: not your weights, not your friend.

Why would they then blink out of existence? There’s still so much more that ‘friend’ can do to convert sales, and also you want to ensure they stay happy with the truck and give it great reviews and so on, and also you don’t want the target to realize that was all you wanted, and so on. The true ‘AI ad buddy’ plays the long game, and is happy to stick around to monetize that bond – or maybe to get you to pay to keep them around, plus some profit margin.

The good ‘AI friend’ world is, again, one in which the AI friends are complements, or are only substituting while you can’t find better alternatives, and actively work to help you get and deepen ‘real’ friendships. Which is totally something they can do.

Then again, what happens when the AIs really are above human level, and can be as good ‘friends’ as a person? Is it so impossible to imagine this being fine? Suppose the AI was set up to perfectly imitate a real (remote) person who would actually be a good friend, including reacting as they would to the passage of time and them sometimes reaching out to you, and also that they’d introduce you to their friends which included other humans, and so on. What exactly is the problem?

And if you then give that AI ‘enhancements,’ such as happening to be more interested in whatever you’re interested in, having better information recall, looking out for you more than most people would, etc., at what point do you have a problem? We need to be thinking about these questions now.

I do get that, in his own way, the man is trying. You wouldn’t talk about these plans in this way if you realized how the vision would sound to others. I get that he’s also talking to investors, but he has full control of Meta and isn’t raising capital, although Thompson thinks that Zuckerberg has need of going on a ‘trust me’ tour.

In some ways this is a microcosm of key parts of the alignment problem. I can see the problems Zuckerberg thinks he is solving, the value he thinks or claims he is providing. I can think of versions of these approaches that would indeed be ‘friendly’ to actual humans, and make their lives better, and which could actually get built.

Instead, on top of the commercial incentives, all the thinking feels alien. The optimization targets are subtly wrong. There is the assumption that the map corresponds to the territory, that people will know what is good for them so any ‘choices’ you convince them to make must be good for them, no matter how distorted you make the landscape, without worry about addiction to Skinner boxes or myopia or other forms of predation. That the collective social dynamics of adding AI into the mix in these ways won’t get twisted in ways that make everyone worse off.

And of course, there’s the continued modeling of the future world as similar to today’s, ignoring the actual implications of the level of machine intelligence we should expect.

I do think there are ways to do AI therapists, AI ‘friends,’ AI curation of feeds and AI coordination of social worlds, and so on, that contribute to human flourishing, that would be great, and that could totally be done by Meta. I do not expect it to be at all similar to the one Meta actually builds.