Google reveals Nano Banana 2 AI image model, coming to Gemini today

With Nano Banana 2, Google promises consistency for up to five characters at a time, along with accurate rendering of as many as 14 different objects per workflow. Google says these improvements, along with richer textures and “vibrant” lighting, will aid in visual storytelling. The company is also expanding the range of available aspect ratios and resolutions, from 512px square up to 4K widescreen.

So what can you do with Nano Banana 2? Google has provided some example images with associated prompts. These are, of course, handpicked images, but Nano Banana has been a popular image model for good reason. This degree of improvement seems believable based on past iterations of Nano Banana.

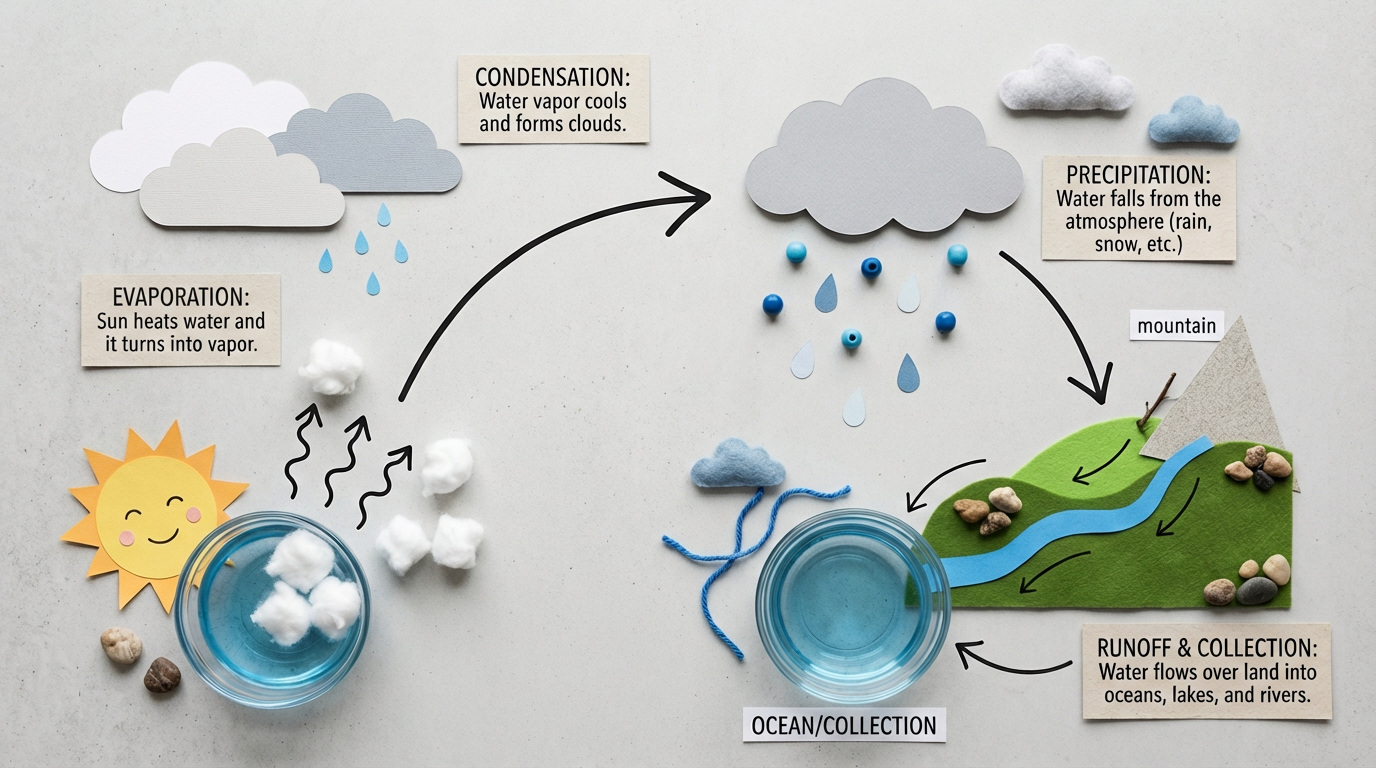

Prompt: High-quality flat lay photography creating a DIY infographic that simply explains how the water cycle works, arranged on a clean, light gray textured background. The visual story flows from left to right in clear steps. Simple, clean black arrows are hand-drawn onto the background to guide the viewer’s eye. The overall mood is educational, modern, and easy to understand. The image is shot from a top-down, bird’s-eye view with soft, even lighting that minimizes shadows and keeps the focus on the process. Credit: Google

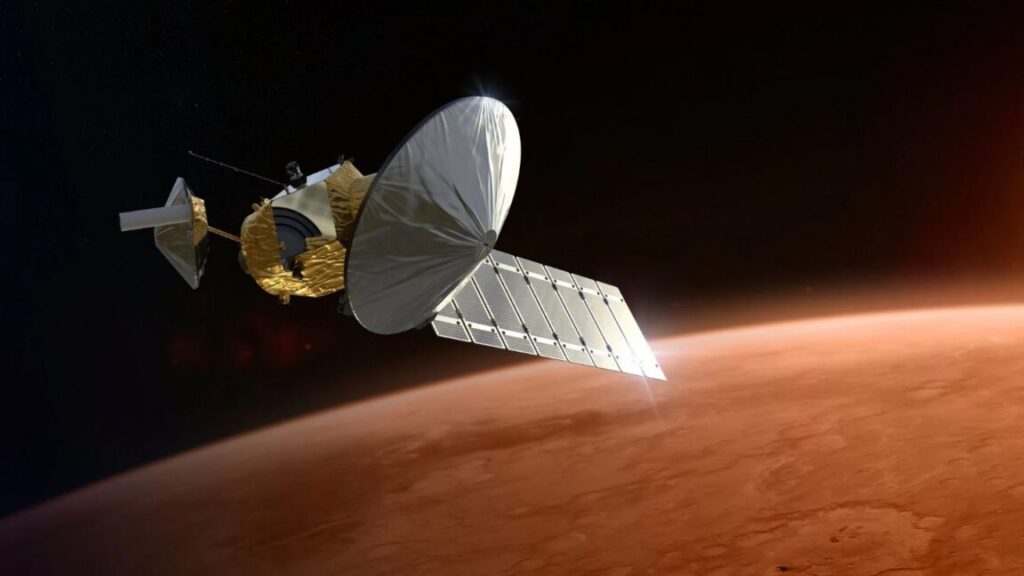

Prompt: Create an image of Museum Clos Lucé. In the style of bright colored Synthetic Cubism. No text. Your plan is to first search for visual references, and generate after. Aspect ratio 16:9. Credit: Google

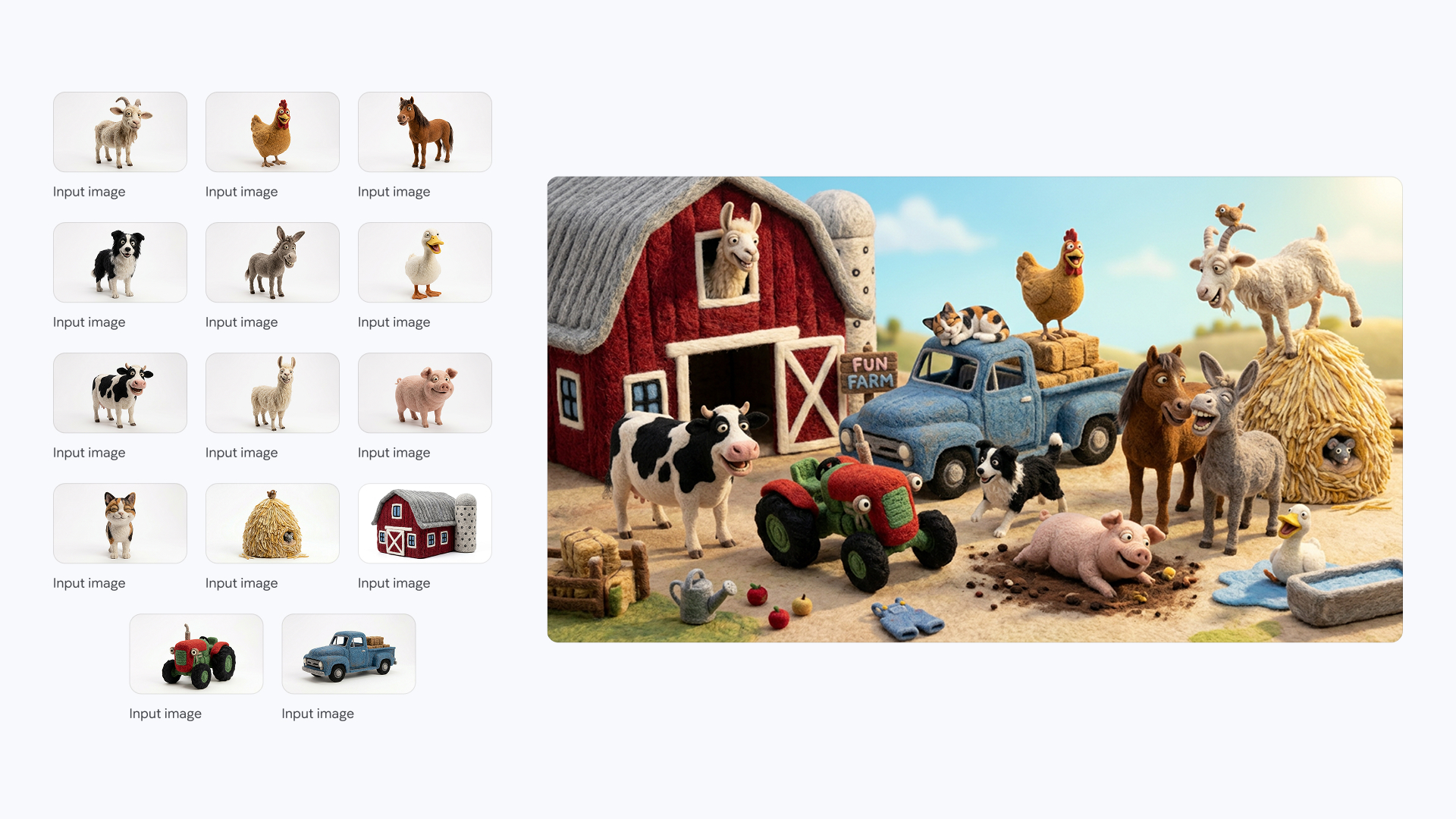

Prompt: Create an image of these 14 characters and items having fun at the farm. The overall atmosphere is fun, silly and joyful. It is strictly important to keep identity consistent of all the 14 characters and items. Credit: Google

Google must be pretty confident in this model’s capabilities because it will be the only one available going forward. Starting now, Nano Banana 2 will replace both the standard and Pro variants of Nano Banana across the Gemini app, search, AI Studio, Vertex AI, and Flow.

In the Gemini app and on the website, Nano Banana 2 will be the image generator for the Fast, Thinking, and Pro settings. It’s possible there will eventually be a Nano Banana 2 Pro—Google tends to release elements of new model families one at a time. For now, it’s all “Flash” Image.
