Why does OpenAI need six giant data centers?

Training next-generation AI models compounds the problem. On top of running existing AI models like those that power ChatGPT, OpenAI is constantly working on new technology in the background. Training a new model requires thousands of specialized chips running continuously for months.

The circular investment question

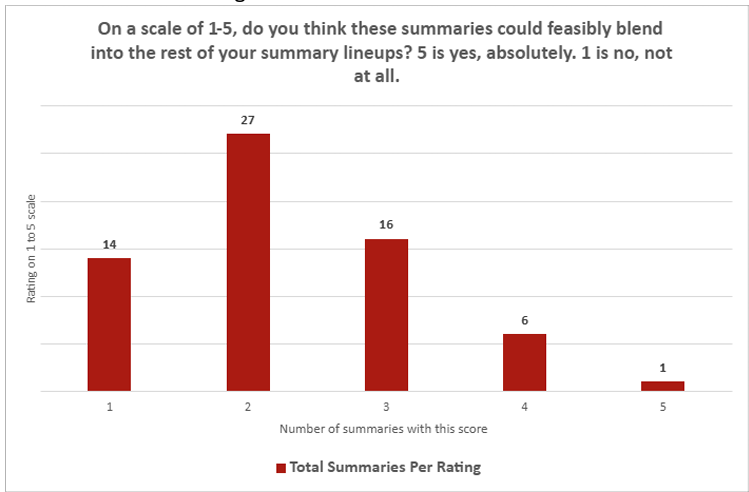

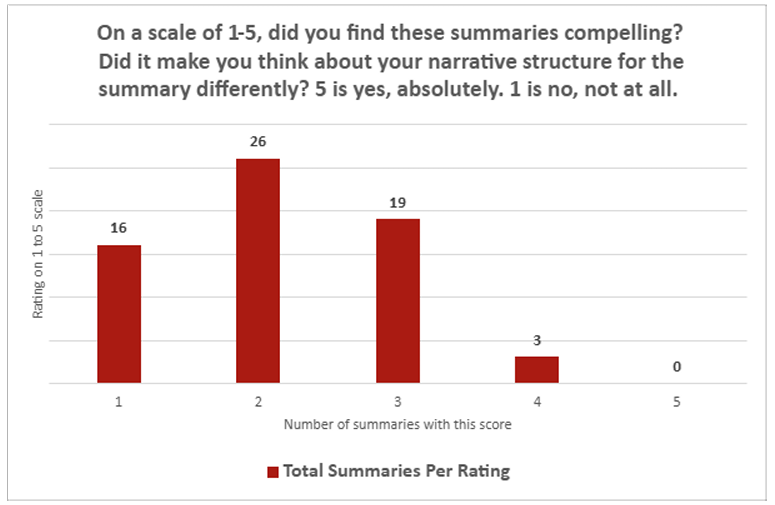

The financial structure of these deals between OpenAI, Oracle, and Nvidia has drawn scrutiny from industry observers. Earlier this week, Nvidia announced it would invest up to $100 billion as OpenAI deploys Nvidia systems. As Bryn Talkington of Requisite Capital Management told CNBC: “Nvidia invests $100 billion in OpenAI, which then OpenAI turns back and gives it back to Nvidia.”

Oracle’s arrangement follows a similar pattern, with a reported $30 billion-per-year deal in which Oracle builds facilities that OpenAI pays to use. This circular flow, in which infrastructure providers invest in AI companies that then become their biggest customers, has raised questions about whether these deals represent genuine economic investments or elaborate accounting maneuvers.

The arrangements are becoming even more convoluted. The Information reported this week that Nvidia is discussing leasing its chips to OpenAI rather than selling them outright. Under this structure, Nvidia would create a separate entity to purchase its own GPUs, then lease them to OpenAI, which adds yet another layer of circular financial engineering to this complicated relationship.

“NVIDIA seeds companies and gives them the guaranteed contracts necessary to raise debt to buy GPUs from NVIDIA, even though these companies are horribly unprofitable and will eventually die from a lack of any real demand,” wrote tech critic Ed Zitron on Bluesky last week about the unusual flow of AI infrastructure investments. Zitron was referring to companies like CoreWeave and Lambda Labs, which have raised billions in debt to buy Nvidia GPUs based partly on contracts from Nvidia itself. It’s a pattern that mirrors OpenAI’s arrangements with Oracle and Nvidia.

So what happens if the bubble pops? Even Altman himself warned last month that “someone will lose a phenomenal amount of money” in what he called an AI bubble. If AI demand fails to meet these astronomical projections, the massive data centers built on physical soil won’t simply vanish. When the dot-com bubble burst in 2001, fiber optic cable laid during the boom years eventually found use as Internet demand caught up. Similarly, these facilities could potentially pivot to cloud services, scientific computing, or other workloads, but at what might be massive losses for investors who paid AI-boom prices.
