Previously: #1, #2, #3, #4, #5

Dating Roundup #4 covered dating apps. Roundup #5 covered opening without them.

Dating Roundup #6 covers everything else.

-

You’re Single Because You Can’t Handle Basic Logistics.

-

You’re Single Because You Don’t Ask Questions.

-

You’re Single Because of Your Terrible Dating Tactics.

-

You’re Single Because You Refuse to Play Your Role.

-

You’re Single Because People Are Crazy About Age Gaps.

-

You’re Single and You Need Professional Help.

-

You’re Single Because You Never Close.

-

You’re Single Because You’re Bad at Sex And Everyone Knows.

-

You’re Single Because You Are Only a Fan.

-

You’re Single Because of Preference Falsification.

-

You’re Single Because You Have Insufficient Visual Aids.

-

You’re Single Because You Told Your Partner You Didn’t Want Them.

-

You’re Single Because of Your Terrible Dating Strategy.

-

You’re Single Because You Don’t Enjoy the Process.

-

You’re Single Because You Don’t Escalate Quickly.

-

You’re Single Because Your Standards Are Too High.

-

You’re Single Because You Read the Wrong Books.

-

You’re Single Because You’re Short, Sorry, That’s All There Is To It.

-

You’re Single Because of Bad Government Incentives.

-

You’re Single Because You Don’t Realize Cheating is Wrong.

-

You’re Single Because You’re Doing Polyamory Wrong.

-

You’re Single Because You Don’t Beware Cheaters.

-

You’re Single Because Your Ex Spilled the Tea.

-

You’re Single Because You’re Assigning People Numbers.

-

You’re Single Because You Are The Wrong Amount of Kinky.

-

You’re Single Because You’re Not Good Enough at Sex.

-

You’re Single But Not Because of Your Bodycount.

-

You’re Single Because They Divorced You.

-

You’re Single Because No One Tells You Anything.

-

You’re Single And You’re Not Alone.

-

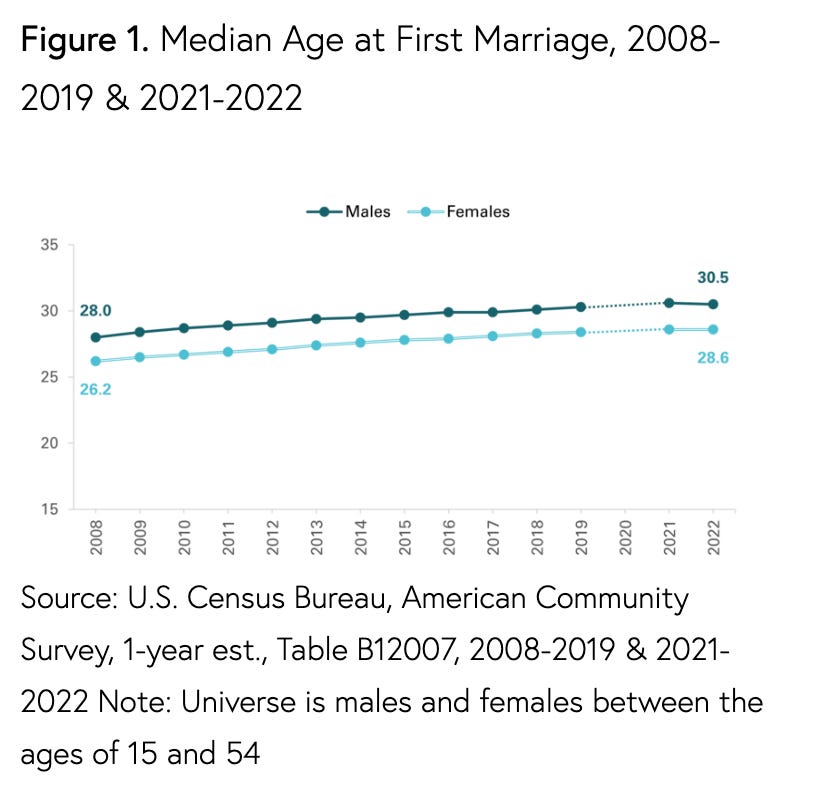

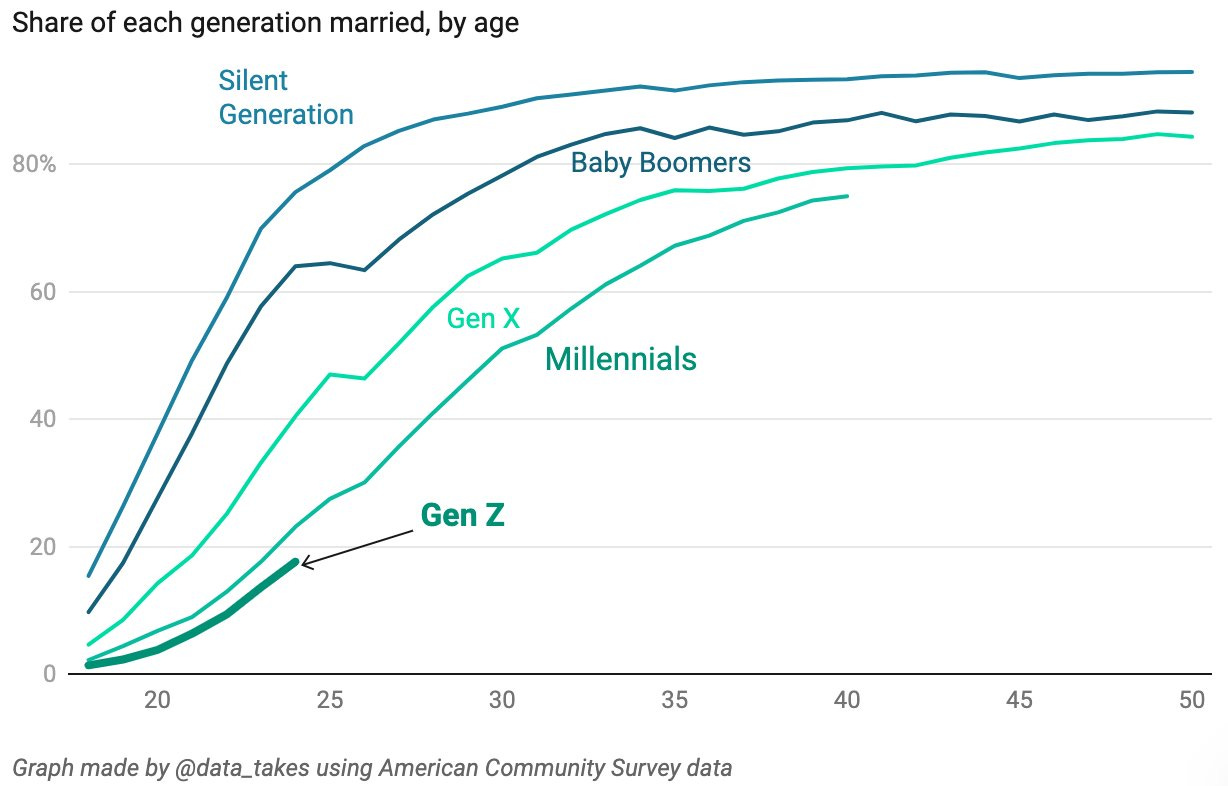

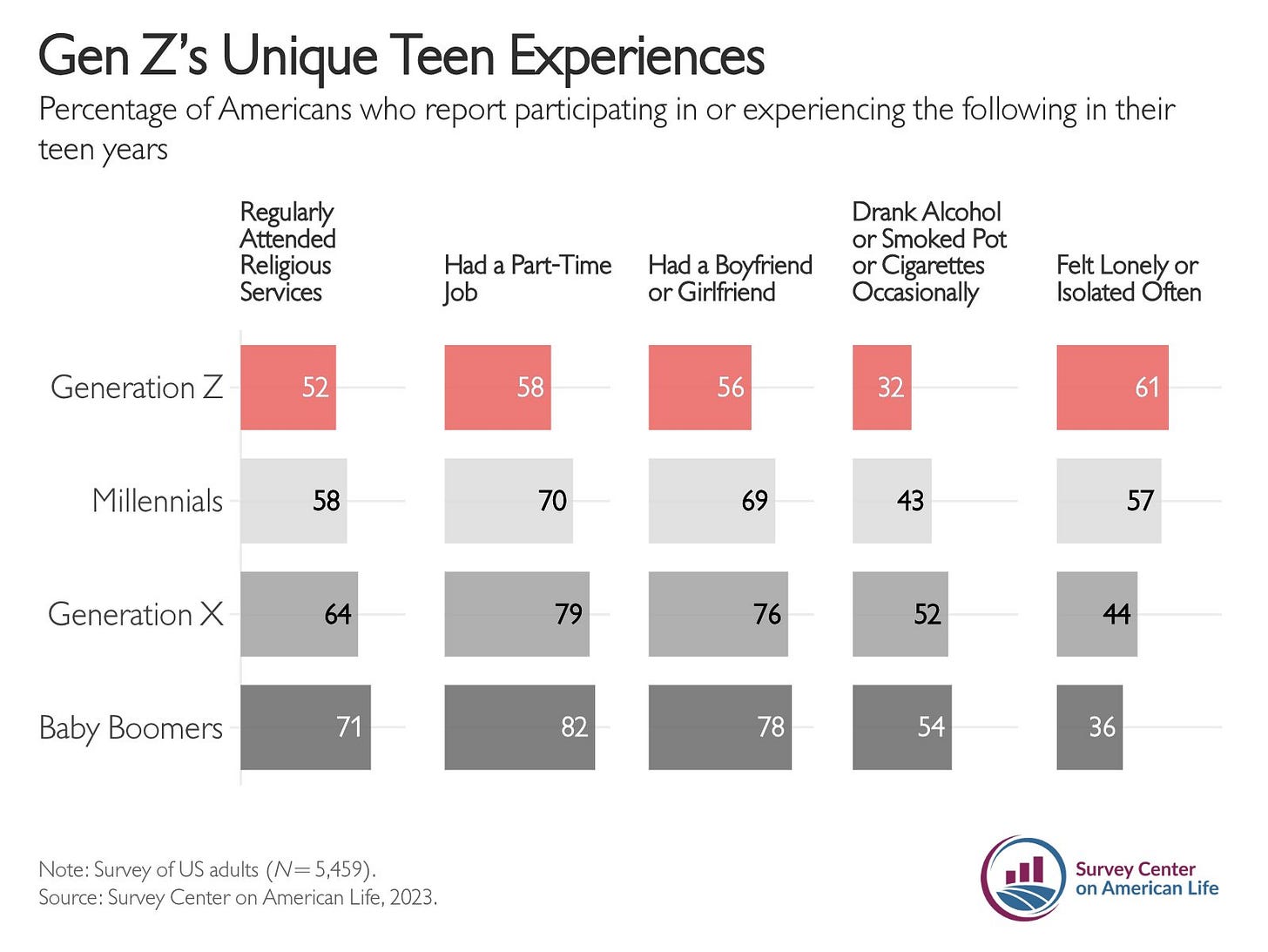

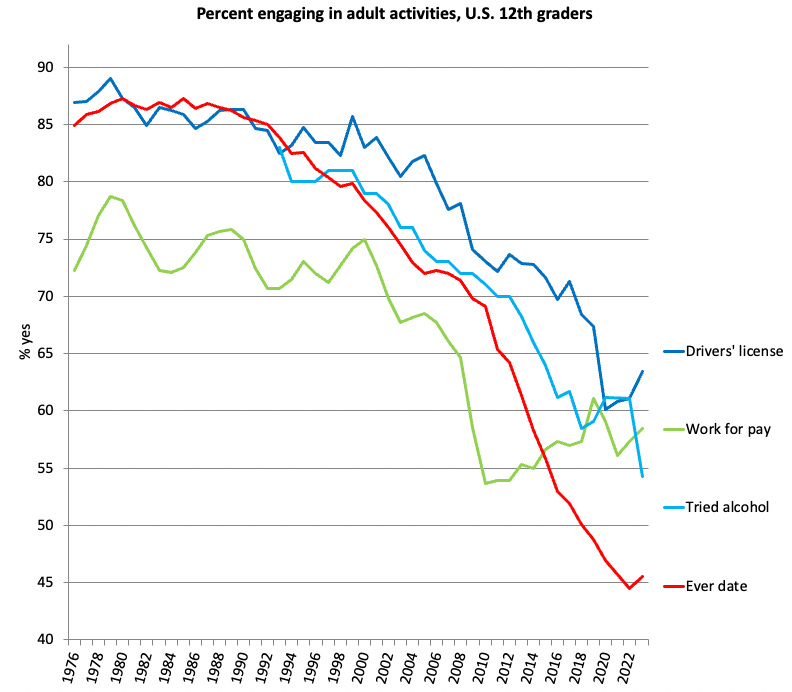

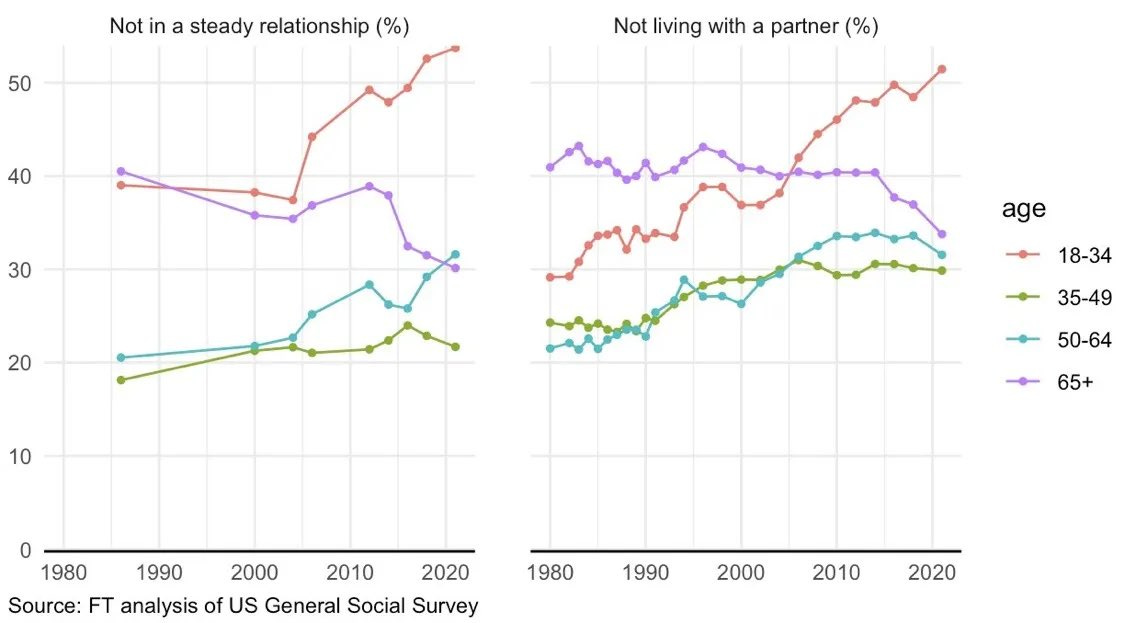

You’re Single Because Things Are Steadily Getting Worse.

-

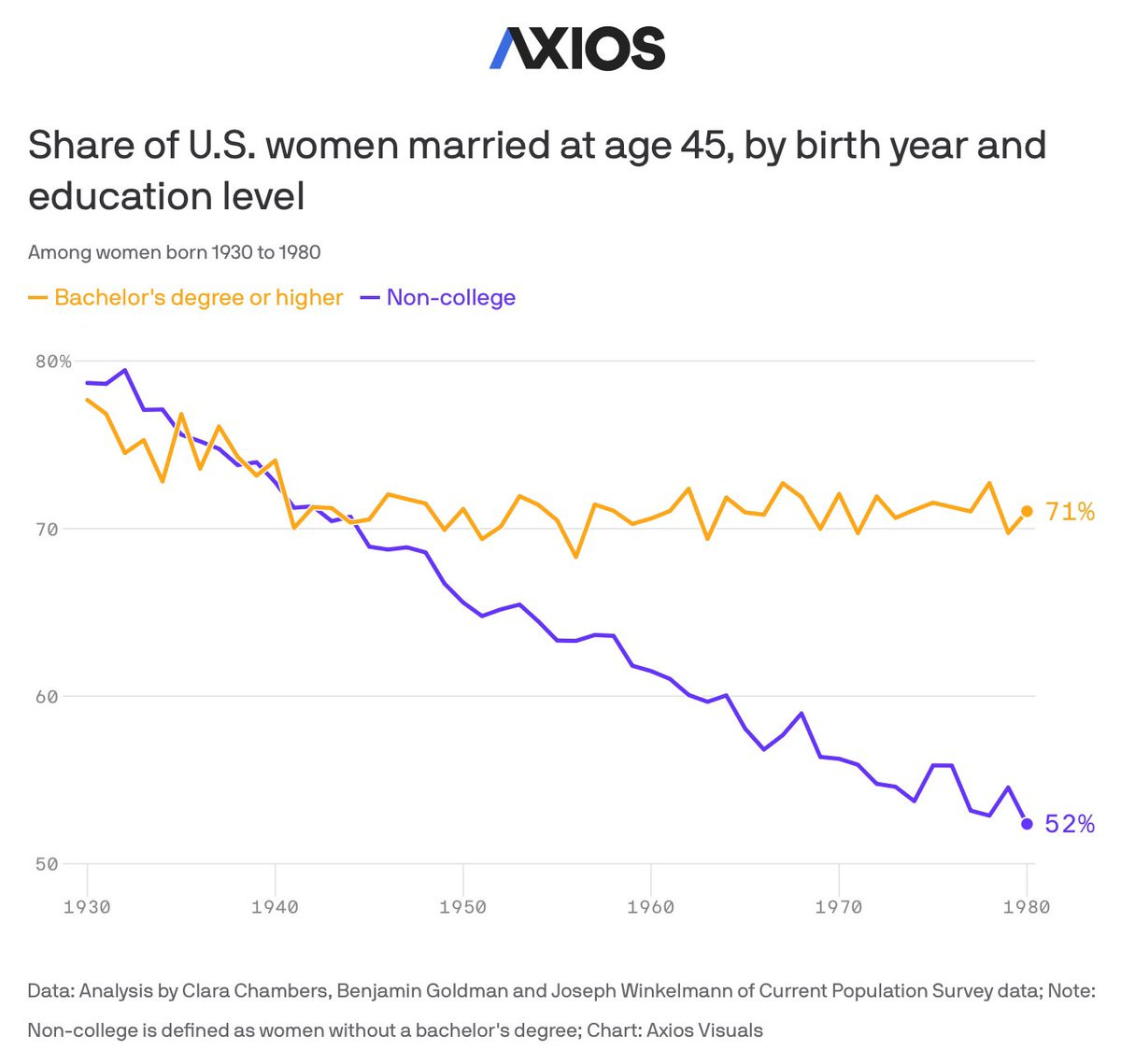

You’re Single Because You Didn’t Go to College.

-

You’re Single But This Isn’t About You.

-

You’re Single so Let’s Go to the Videotape.

-

You’re Single Because You Don’t Seek Out Good Advice.

-

You’re Single So Here’s Some Hope.

You can take the pressure off yourself to plan the perfect date.

Instead, plan any date at all.

Shoshana Weissmann: I was hanging out with my married friend, who is a great father and husband, telling him how men cannot plan dates. He said, “What’s there to plan? They pick a bar, a restaurant, and a park for a walk nearby.” And I had to explain that grown men refuse to tell me when and where to meet until a few hours beforehand, if they feel like it. You could see him wilt a little inside.

I know some people do not believe me. This was a recent instance.

He also lied because he told me he had a perfect place in mind and forgot the name two days beforehand.

Art Vandelay: He’s right. There’s nothing much to plan for a first date. Just find a nice cool spot for a drink (or coffee), maybe a bite to eat, and off you go. Not being able to do this very basic thing is a red flag like you read about.

Shoshana Weissmann: Hell yes.

A lot of the alpha is on the simple things, and not messing them up.

There are often reports from women that men will go on a first date with them, and fail to ask the woman any questions or show curiosity about the person across from them, actual zero anything.

This is a huge unforced error. Asking only has upside, even when you have to steer things back that way intentionally. Such questions are almost always appreciated, and failing to ask them is taken as a bad sign. The information is also highly useful in deciding how and whether to proceed, and is usually actually interesting. If you find the answers boring, that is likely partly a you problem (learn how to find people interesting; see Dale Carnegie etc.), but it is also a sign this match is not for you, so little has been lost.

Or maybe they’re not making it easy on you.

Aella: Men on dates: “Wow, I’m so curious about you” *Proceeds to ask two brief questions, no follow-ups, and then talks about themselves the rest of the time.*

Decrolssance: Weird. I’d much rather hear about the girl. But they’re always turning it back on me.

Aella: Fellas, this is a battle. She’s testing if you’re truly curious by throwing a softball at you to see if you drop your stated intentions to pursue it eagerly.

Robin Hanson: Men mostly want to idealize women and take them at their word, but this becomes harder when we also need to notice that they often test us via misleading signals.

Amanda Askell: More common: “Wow, I’m so curious about you.”

*Proceeds to ask so many questions that I begin to worry the goal is identity theft.*

Aella: Where do you find these guys? Can we swap?

Keep in mind that Amanda Askell works at Anthropic, so ‘he’s a spy’ is on the table.

When a woman says she is curious about you, she probably is, but it is also a trap, potentially an intentional one. How do you steer things from there?

Good question. Presumably the goal is balance, and you may need to fight for that.

Then there are the parts that are about calibration, especially about being the right level of assertive and aggressive, and of course about knowing which situations call for which, which is one of the hardest challenges. There is a lot of advice to men that is indeed essentially ‘don’t be pushy,’ and lots of other advice from other sources that says ‘do be pushy,’ so of course reverse all advice you hear.

Whichever you hear most is more likely the one you don’t need.

Reddit poster: I realized I was being ghosted by girls because I followed Reddit advice.

I thought I was unattractive. I vented on this app many times after being ghosted, but I’ve been successful lately. To be honest, I’ve also started going back to the gym.

But anyway, I read on Reddit that one should always be respectful, do not kiss on a first date, do not flirt, etc. That’s exactly what I was doing. I would go on dates, ask about their work, school, vacations, etc.—all that wholesome vibe—and was getting ghosted.

In the last four weeks, I’ve been on a few dates and told myself to ditch all that advice, started flirting with them, going for a kiss at the right time, inviting them to my place, etc., etc. And yes, I’ve been quite successful lately. I no longer feel unattractive, lol.

Hey, this advice might not work for everyone, I don’t know, but all this worked for me better than being wholesome and waiting until the third date, etc.

elle: The problem with giving men advice like “don’t be pushy” is that the men who truly need to hear it won’t listen, and the men who would benefit from being more assertive will take it to heart.

Human Person: I am in the latter category. I’m terrified of invading someone’s space like that and assume I have no chance either way, and the mixture just makes me fight back every thought I have to initiate.

Damion Schubert: Ninety percent of advice given like “don’t be pushy” really just means “Jesus, tone it down until you’re not a creep.” You’ll never get anywhere if you aren’t assertive, but figuring out where the line between “assertive but nonthreatening” and “earning a restraining order” is critical.

This is frequently a skill issue. You need to be assertive at the right times, ideally in the right ways – if the times are sufficiently right you have a lot of slack here, if they’re only somewhat right you have less – and not at the wrong times in the wrong ways.

Other times it very much is a calibration issue. And the default response to not yet being skilled is to become miscalibrated, and to seek out miscalibrated advice.

Either you take, and are advised to take, lots of shots on goal, so you at least have a chance and also a chance to learn and grow comfortable, in which case you will indeed often come off rather badly. Or you take barely any shots, which avoids many downsides but ends up wasting everyone’s time, with little chance of success and not much learning.

The good news is that if you are paying any attention there really is quite a lot of space between ‘assertive but non-threatening’ and ‘earning a restraining order.’

A common-sense heuristic for the timid is that if you go home sad that things did not progress at all, and you never got any form of negative feedback (this can be subtle, ideally it mostly will be, but it has to be there), you probably weren’t assertive enough.

Also, flat out, you need to flirt on dates (no matter who you are), first or otherwise, and as a man you want to be attempting a kiss fairly often. Anyone who says ‘don’t flirt’ or tells a man ‘never kiss on the first date’ (or never initiate one) is giving you terrible advice, and you should essentially ignore everything else they say not to do. They might be right on other points, but the fact that they gave you that advice is not useful information.

Some basics for those who need them, or who would find it helpful to affirm them, or have them made more explicit.

It doesn’t always work this way, it doesn’t have to work this way. When dealing with a particular person you can and should pay attention and stand ready to throw this all out the window if they want to play differently. But one should be aware that it usually does directionally work this way. Going with it tends to lead to better outcomes, and going against it usually means swimming uphill.

Matt Bateman: A story about learning a masculine role in relationships, that may perhaps be useful to people similar enough to me.

In my early 20s or so I had no real conception of differentiated gender dynamics.

I was raised to believe that gender differences were ancien régime constructs.

…

So one day I decided to sit down and think about it. For the first time in my life, I put my mind to considering: what is the courtship game?

Let’s assume it *is* a construct, purely a social artifact.

Still—what *is* it? How does it work? What is there to be said for it?

I pondered romcom/sitcom-level things such as “guy is supposed to make move, guy is supposed to propose, guy is supposed to ‘lead’; girl is supposed to signal availability/interest, girl is supposed to gatekeep”, etc.

After some thought I was like… yeah ok I’m good with this.

By “I’m good with this” I did not and do not mean “I think everyone Must play this game in this way”, or “this is Biological Fact”, or “exceptions are Bad and there are no reasons ever to take exception”, or “critiques of this game are all wrong”.

I instead meant and mean “I like this game, I’m actually glad it was established, it’s nice for me that most people play it, I’m myself happy to play it; this is a good vehicle for me to find and participate in rather important forms of meaning and fun”.

Maybe this is blindingly obvious to some people? Most people? Plausibly I’m idiosyncratically dumb. But for me it was a revelation.

The level of relevant description was not where I expected to find it. The things that most people talked about most of the time seemed irrelevant.

Matt Bateman (the one Jakeup quotes): Whenever it felt like I was waiting, or things were coasting or simmering for too long, or it was unclear how to proceed, I would now think: oh right, *I’m* supposed to *read the room* and *do something* here. That’s my role in the game.

Jakeup: this is a key point in a great thread. the classic moves in the game of courtship go like this:

1. girl sets the room and hints at possibilities

2. guy reads the room and issues invites to potential courses of action

3. girl picks a course of action to follow the guy

4. repeat

this game can break at any step, leaving both frustrated. a girl who doesn’t know what she wants. a guy who passively waits for instructions. a girl who’s stuck with a guy she doesn’t want to follow anywhere.

or when either of them invites outsiders into this private dance

it’s hard enough to read the inclinations of one person, scary enough to relinquish control and follow someone

if you further constrain yourself by insisting on maintaining a story that would stand up to criticism on social media or even just among friends, it becomes impossible

Matt is very wisely being extremely vague about what the moves of the game entail for him personally because arguing over specific techniques of seduction on twitter is *not* how you either learn the game or play the game

it’s why I spent the first month of Second Person explaining that my goal is to *unblock* readers to engage in their own practice of fucking around and finding out and that giving specific instructions actually stands in the way of that

Jacob expands this into a full blog post, framing modern dating as improv, in which there are no fixed hard rules, but (for heterosexual pairings) the man’s role is generally to read the signals and the room and make moves to advance the plot, including being willing to risk explicit rejection, while the woman’s role is to provide a room and signals to be read, to approve or reject proposals, and to help everything stay graceful.

But of course, none of that is in the form of rules. There used to be actual rules with actual people enforcing them, and now those rules are far more minimal – some things are actually off limits but you presumably knew about those rules already. If the situation isn’t typical, or typical isn’t working, you are free to switch the roles, take completely different rules (except for the big actually enforced ones, although even that can get weird these days), or do anything else you want.

And if they don’t understand how the game works or what their role is supposed to be, that’s fine, you figure out how they think the game works or how they want it to work, and you play by those rules instead. That especially applies when the man is kind of clueless, and you don’t want that to be a dealbreaker.

Billy Is Young has another thread of remarkably similar dating-as-a-male-101, and how you need to project yourself, and in particular not to attempt to present yourself as other than you are. Fully ‘be yourself’ is not always wise, but don’t be actively not yourself either. Don’t hold back your masculine energy or pretend not to be attracted.

Apparently there was a ‘predator sting’ in which a 22-year-old was invited to meet up with an 18-year-old, then the students berated him as a ‘sex offender,’ 25 students chased him and one student punched him in the back of the head. If you believe we live in a world where 18-to-22 is an unacceptable age gap and might get you chased by a mob of 25 people and punched in the back of the head, you’re going to have a much harder time dating.

Aella hints she may be available to tutor you to be irresistible to women. I don’t know if this would work, and yes you’d have to pay, but it might work and this does seem like it would at least be fun. It sure beats buying them Tinder credits.

In her case, she offers us some free instructions.

See? It’s easy.

Aella: All I want is a guy I just met to casually and confidently touch me on the arm or back, lean in with direct attention, unwavering eye contact, and then spend the rest of the night flirting with my friends.

I just want to slightly insult him and have him slightly insult me in return. I want him to be a little assertive, all the time, and completely comfortable with that.

I want him to be Schrödinger-fucking other girls, where he is simultaneously getting intimate with all other women but also has standards too high to sleep with anyone. I want him to enjoy me but be uncertain about me; I want to be unsure if I am good enough for him.

I want him to be completely, deeply, and unapologetically comfortable with himself and his desires. I want to somehow possess some rare jewel of a trait in my soul that he has been waiting his whole life to find.

Including this first-level move, although you’ll usually need a different framing.

Aella: One of the hottest things a guy on a first date ever said to me was “I estimate 15 percent that I’ll end up interested in seriously dating you.”

All a girl wants is to feel like she has to work in order to catch a guy.

Joe’s AI Experiments: I work in the gambling industry and 15% is considered something of a magical number.

It’s high enough that people consider it possible, and don’t give up. They keep full emotional investment.

But 15% is rare enough that a win feels really special.

I’ve never heard the 15% claim, but I’ve been sitting with it for a few minutes. It seems plausible in some settings; I can see it being a cool percentage chance to win a run, for example. But in my sports betting experience, going +500 seems like a bit much, although odds that long also rarely come up naturally without a parlay.
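For anyone who doesn’t bet, here is a minimal sketch of the standard conversion between positive American (moneyline) odds and implied probability, ignoring the bookmaker’s margin. The function names are illustrative, not from any betting library. It shows that +500 implies about 16.7%, while a fair line for exactly 15% would be roughly +567.

```python
def implied_probability(american_odds: int) -> float:
    """Implied win probability for positive (underdog) American odds, ignoring the vig."""
    if american_odds <= 0:
        raise ValueError("this sketch only handles positive (underdog) odds")
    # Staking 100 to win `american_odds`: p = 100 / (odds + 100)
    return 100 / (american_odds + 100)


def american_odds(probability: float) -> float:
    """Fair positive American odds for a win probability below 50%."""
    if not 0 < probability < 0.5:
        raise ValueError("this sketch only handles underdog probabilities")
    # Inverse of the formula above: odds = 100 * (1 - p) / p
    return 100 * (1 - probability) / probability


print(f"+500 -> {implied_probability(500):.1%}")  # +500 -> 16.7%
print(f"15% -> +{american_odds(0.15):.0f}")       # 15% -> +567
```

So a 15% shot sits just a bit longer than +500, which is why the two feel interchangeable.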

For dating, it makes sense. 15% chance of serious interest is still a hot date.

Always be closing.

A classic puzzle, inspired by a TikTok clip. A woman is invited back to a man’s place after a date, agrees but says ‘I’m not going to sleep with you.’ What does that mean?

It means you need to pay close attention.

Richard Hanania: What does it mean when a woman says “I’m not going to sleep with you” while also going back to your house? It means she probably will.

Anecdotally, this only seems strange to those under 30. Society has failed you, but I’m thankfully here to explain.

…

The most pathetic thing I’ve seen is men complaining about this. As if the job of women is to come up with a logically coherent philosophy instead of choosing the highest quality partners. Subtext is key to romance, it’s not an inconvenience to be regulated away.

Men with bad social skills don’t realize it’s their job to learn social skills. They believe women have to conform to what’s easiest and most convenient for them. They also want relationships without ambiguity or risks. Incel ideology is a cancer.

Rob Henderson: This could mean so many things. If, after a date, a woman accepts a man’s invitation to visit his home, and first says, “I’m not going to sleep with you,” this could, of course, mean that she has no desire to sleep with him.

But it could also mean something like “I’m going to share this information with you in order to gauge your response and if you behave weirdly, then I’m not going to sleep with you.”

It could also mean “I’m attracted to you and would like to sleep with you but I’m not feeling at my best so I’m not going to sleep with you tonight but want you to know I like you and trust you enough to be alone with you.”

It could mean “At this very moment I’m not entirely certain I want to sleep with you but let’s see how the rest of the evening unfolds.”

It could mean “I really like you and I’m absolutely not going to sleep with you but I have no way of forecasting how I’ll feel as we spend more time together.”

In the same way as you could imagine someone watching their figure entering a patisserie and thinking “I will absolutely not order a slice of their famous strawberry cake” and 20 minutes later find themselves savoring the last bite.

In the clip, the woman complains that she is the problem, because she did not want to sleep with him, but she wanted him to try a little, and he didn’t, so now she feels ugly.

Richard notes that a lot of younger men expressed great fear that they would be punished severely (as in life ruined) for judging wrong and going too far.

Any of these things could be happening. There is a substantial chance she does end up sleeping with you if you are down for that, and also a substantial chance she does not no matter how well you play. Your job is to navigate this ambiguity. Develop and use the relevant social skills, use ambiguous actions to see how she reacts, do the best you can. Understand that there is no perfect solution, you need to be willing to get it wrong in both directions and gracefully navigate both failure modes.

That is vastly harder if you have gotten it into your head that one move too far could ruin your life. Which in theory it could, but the chances of that happening (especially if no one involved is in college) if you act at all reasonably are very low.

Essentially, those men think their own sexuality is borderline illegal In Their Culture.

Sulla: Zoomers have an incredibly weird relationship with sex. On one hand, talk of sex and adjacent things has been completely de-stigmatized, nobody really cares or thinks its a taboo if you talk about it.

OTOH, acting sexual, especially for men, has become extremely taboo because of the possibility of making people “uncomfy” which must be prevented at all costs. Masculine sexual behavior especially has been made taboo – “safe horny” and “reddity horny” are okay. “Step on me mommy” etc. because its not “threatening.” They seek to turn the masculine man into a harmless femboy twink because the masculine man is “scary”

It’s basically a result of histrionic Zoomer behavior to minimize “discomfort” at all costs – “harm reduction” etc. Completely delusional, they need to grow up and stop being whiny babies.

Alaric the Barbarian: I’ve said it before and I’ll say it again:

As of today, white male heterosexuality is the most suppressed pattern of behavior in The Culture.

It’s effectively illegal.

Shamed at every turn, policed by HR-world doctrine into narrow venues of acceptability, constantly bashed in media and on social media, crafted into something new and nonthreatening via a full-court press of incentives.

There’s space allowed for everything but this.

Andrew Rettek: to the extent this is true, the “law” is only strongly enforced in some places, and never universally. It really sucks for the guys who have their social lives in those places, and can’t get an exception for themselves.

My model is the same as Andrew’s here. There are particular places and times in which being terrified is a reasonable response. The obvious response, if you find yourself in such a place, is to tread very carefully while there, not get stuck there permanently, and do your best to get your dating and relationships elsewhere.

Once you are not in such a place, you need to realize that you are no longer in such a place, and undo the paranoid adjustments you felt forced to make.

If she (27yo) screams someone else’s name during sex, what to do? If that someone else appears to be a 16-year-old boy cartoon character called Ben 10 (but also could be another Ben she is cheating with and then tried to ‘save it’ with the cartoon character?) then does that change your answer? Some advise leaning into it. Mostly I think people let this kind of thing get to them more than it should, and you should essentially bank the credits for when you need them. But that’s an outside view.

What about endurance, and how much of it is being fit?

Aella: Man i didn’t anticipate how much I was spoiled by having a long-time sex partner with incredible physical endurance. It turns out that it’s much easier to drop into sexual bliss when a part of you isn’t worried that the dude is getting tired.

Guys by endurance I mostly mean whatever cardio and muscles are required to keep going until I have an orgasm. Penis endurance is also nice but imo not as rare.

This includes jaw and tongue and back of neck ok.

What’s most interesting here is how much of the issue is presented as worry about endurance, rather than the actual endurance. That’s yet another way confidence matters. I’m also rather surprised by her observation that cardio and general muscle fatigue are the most common limiting factors here, though I suppose it depends on how much endurance you need.

Aella’s studies report that watching porn is mostly positively correlated with accurately predicting female sexual preferences, including the finding that more women than men like rough sex (of course check first, you can’t assume!). But she notes that anal is the big important exception.

Aella: On average, men who watch more porn, were more accurate in their predictions of what women liked in bed (according to ratings from the women themselves) This held both in my own survey and also a microtasker sample.

Anal is actually maybe one of the few exceptions here; in general, women are significantly more inclined to like rough porn than men are – but anal is one of the biggest gender gap preferences in favor of men. Way more men like anal sex than women do.

Fredrick von Ronge: It’s not the anal, it’s her submission to something she’s not into.

Aella: Actually generally no. ‘submission to something she’s not into’ is a more common preference among women than men.

How to make casual sex great again?

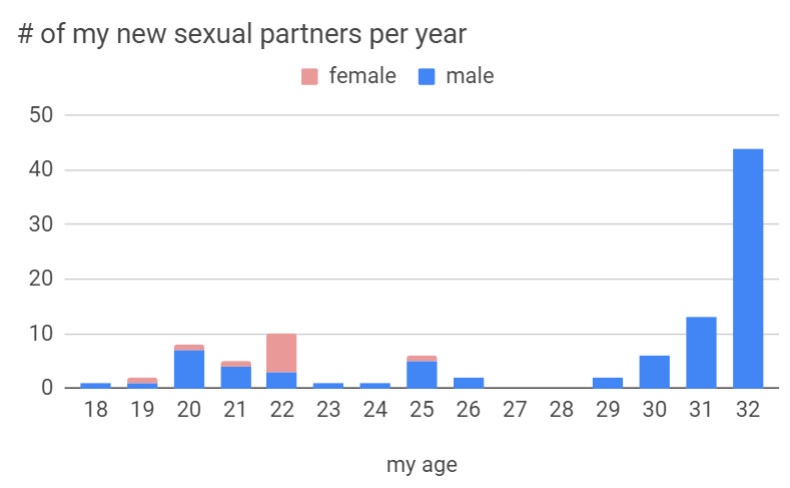

Aella: since this tweet i did figure out how to make casual sex good [chart excludes escorting]

the key for me has been

- stop being ashamed about things i want in bed
- start getting really selfish about what i want, give up on compromise
- aggressively communicate and filter for guys who are into what i want
- build networks full of these people

Turns out a lot of what makes casual sex bad was having sex with people who wanted me to do things i didn’t want to do, and me being too nice and not wanting to hurt their feelings so i played along.

That must be quite the network, given her other statements implying that this means filtering for guys who are inherently into things guys are rarely inherently into. The sex might be casual, but the logistical operation and interview process are anything but, although presumably worth it.

I presume most people need to do much less aggressive filtering than this, and would be happy to do a bunch of compromising within a reasonably wide range, but should absolutely speak up far more about what they want.

This sadly does seem to be a reliable format for Peak Engagement on Twitter, but also seems like relevant information in this case.

Austin Allred (6.3m views): I fundamentally don’t understand OnlyFans. The scale is insane.

Who is paying for this? Why?

What I’m hearing is it’s not just about the porn necessarily, it’s about having paid “relationships” of some sort with the creator, which makes a lot more sense to me.

Makes me sad, but it makes sense.

Before I thought it was just the appeal of porn for non porn stars, which would make sense but break down at scale.

But sexual virtual fake paid relationships? Yeah that makes so much sense.

And is very sad.

Aella: Most income, as far as I know, comes from messages, not from basic subscriptions. It’s essentially interactive pornography, sexting with a hot girl where you masturbate together. That’s where the big money is.

Mason: OF semi-successfully repackaged something akin to actual cheating as porn, and it turns out there’s a huge market for cheating that societal norms haven’t caught up with.

The demand for cheating has been there since forever, so it’s weird to say societal norms ‘haven’t caught up with’ it yet. I’d say it’s more that there’s demand for attention: I’d presume that, holding other conditions constant, single men use OnlyFans more rather than less.

I think this all seems less sad rather than more sad, given the alternatives, if we hold the amount of money and time spent constant? At least you do get some sort of parasocial relationship, some amount of interaction. Although it also does seem more damaging to relationships.

If that appeals to you, Aella discusses how to succeed at OnlyFans, which involves a lot of distinct connections, versus as a cam girl, where you are aiming for 1-2 whales who like to win a dominance contest in front of other men. OnlyFans is about the illusion that you’re the only guy. The money in OF is in upselling via DMs, which (of course) are typically handled by agency-hired minimum-wage workers in warehouses, and agencies often charge 50% or more (on top of the OF 20%) for this and other services.

I presume AIs will replace those jobs rather soon, which greatly reduces marginal cost and also turnaround times, and presumably thus alters the business model.

The new dominant play is apparently ‘drips,’ where you have a sequence of clips with escalating price tags, which you pretend to do in real time, but you don’t have to pretend that hard; the men don’t notice or care. They want a minimal, deeply non-credible version of the second-level symbolic version of the thing: the conceptual indicator of a personal connection that would imitate an actual personal connection.

She also notes that by not doing internal discovery, OnlyFans forces creators to advertise elsewhere, which got so aggressive that various places (even Fetlife) got pretty hostile towards all the posting. This is a levels of friction situation of the type we’re going to see a lot of with AI – OF reduced frictions to doing the OF thing, so suddenly the previous levels of friction outside OF didn’t deter people enough, and if you didn’t ban it the level of tits-in-your-face was out of control.

It’s like there was this great business model lying around the whole time that any sufficiently hot woman could use: spam the internet with hot pics, recruit men, charge a subscription for some sexy content, and charge for individual interactions and marginal content. And the secret to unlocking this, and to earning 20% for doing so, was just to remove the friction by taking care of various basics on the backend?

Aly Dee asks, isn’t OnlyFans a bad deal, versus finding one ‘kind’ rich fan and putting a ring on it, given the prospects of a young woman who can succeed at OF if they’re willing to date older, and how easy it would be to be intentional about this? The obvious answer is that no, that isn’t obviously better depending on what you want, especially given the commitments involved, and also isn’t so easy to get given the adverse selection problems.

In other OnlyFans news, this is a real way people are reacting to real news?

Max Tempers: 🚨 NEW: OnlyFans is now accessible in China, a move that could boost the UK economy significantly.

A Labour insider told me ‘This is exactly what Lammy’s progressive realism is about!’ as he attempts to justify the recent overtures to the East made by the government.

I have nothing against OnlyFans, but if this can ‘boost the UK economy significantly’ then that raises further questions. So many questions.

Are your preferences bad? If so, should you feel bad?

As in: Paper asks, is it bad to prefer attractive partners? No. Next question. Paper disagrees and claims there are strong philosophical arguments for both sides. The argument against seems to be the fully general anti-discrimination argument, that says humans are not allowed to express preferences, or to prefer better things to worse things, unless they have some special moral justification. And, yeah, no.

Mate preferences differ a ton across individuals, and the gender-based differences look relatively small, but taken together if you know someone’s preferences you can guess their gender with 92.2% accuracy.

Aella looks at preferences by examining the relative prices of female escorts with various physical attributes. Nothing is too surprising, but you learn about which things have bigger magnitudes of impact. Even the things that mattered don’t seem to have that big an impact on price, to the point that I’m a little suspicious.

Aella also notes that as she charged higher prices, client quality improved, in particular there were far fewer assholes:

Aella: I’ve always liked about 80% of my clients, but now that I’ve raised my rates even higher, I think I like… all of them? I work less often, and mostly just for fun, but I think every single man I’ve seen in the last year has been pretty cool, and I feel warmly toward them.

When I first started, my rates were a lot lower, and most of the unpleasant clients I remember ever having occurred in those early months. Not that wealthy people can’t be unpleasant, but it’s more like, unpleasant people are less willing to pay a lot of money.

I bet that charging more also makes the same men act less like assholes. Consultants know that if you don’t charge enough, no one will respect or listen to you, so not only won’t you make much money, you won’t be able to do the job. When you charge a lot, the people who do pay doubly respect you – you assert you’re worth that much, and also they agreed to pay it. There’s also the selection effect, of course, where assholes are shopping as cheaply as they can.

A similar principle holds for regular dating. It doesn’t have to be money that acts as your asshole filter.

Strategies that are very hard to do, especially before having done them, but that work.

Sasha Chapin: I have become a much more effective person over the last two years via living with an extremely effective wife

If I had to break down what has changed, I would fail—I think effectiveness is a style that you can learn to mimic, like a tennis swing, more than a set of principles

How one finds a highly effective wife (or husband), without first yourself being highly effective, is the mystery. But yes, absolutely, being around effectiveness, hard work and high standards will rub off on you a lot. The need to be worthy can’t hurt either.

This also applies to everything else. Seek out those who have qualities you want to have yourself, and avoid those with qualities you want to avoid.

Here’s a preference.

Olivia Rodrigo (the pop star): This is a very oddly specific question that I ask guys on first dates. I always ask them if they think that they would want to go to space. And if they say yes, I don’t date them. I just think if you wanna go to space, you’re a little too full of yourself. I think it’s just weird.

Crybaby: guess her type is down to Earth.

If that is her motivation, that is a good thing to want to avoid (especially in her position, since I imagine a lot of men who dare to try dating a pop star are rather full of themselves), and this is potentially a good question to ask, but you have to pay attention to exactly how he answers. If the answer is ‘sure, if given the opportunity, of course I’d go’ and you turn him down for that because he’s too ‘full of himself,’ then you’re a fool. If the answer is ‘yes, I’m actively trying to go to space’ then sure, maybe that’s not what she wants.

Here are some other claims about preferences that sure sound like a trap.

Anna Gat: Being a stable and reassuring man is the sexiest thing you can be for a woman – our nervous system reacts immediately and deliciously.

If you’re trying to court someone and don’t know what would work, this is what would work! You’re welcome 👶🏾👶🏻👶🏽👶👶🏿👶🏼

Sarah Constantin: the most valuable, in-demand person in the world is someone who is Fine.

Now, that’s easier said than done!

But if you do happen to be Feeling Just Fine, Thank You, don’t worry about any of your other deficiencies. You are a catch, just for that alone.

Misha: why are you saying an obviously false thing like that.

Sarah Constantin: Because it is true in my experience.

Misha: How much experience do you have dating as a man?

Being a catch is not the same as being more likely to be caught.

I do think that these characteristics are valuable and worth pursuing for other reasons, and are underrated as male dating strategies, but for them to work you need opportunities to demonstrate this style of value.

This won’t get you in the door. Merely being stable and fine on your own in the abstract is great down the line, but you still need a way in. This can’t take the role of ‘the thing that is attractive about you.’

Also fitting the above pattern: Women like kind men. This is a well-known, robust result in evolutionary psychology. However, men have the strong perception that if you want to end up with a woman, being kind too early is a poor strategy.

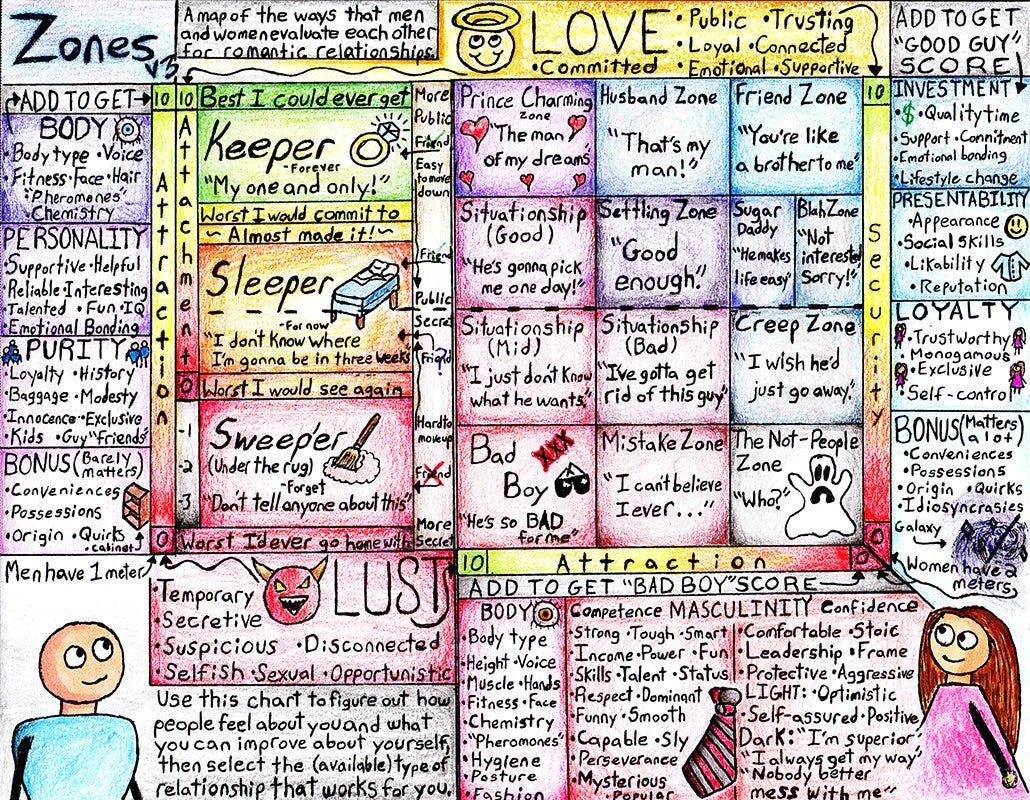

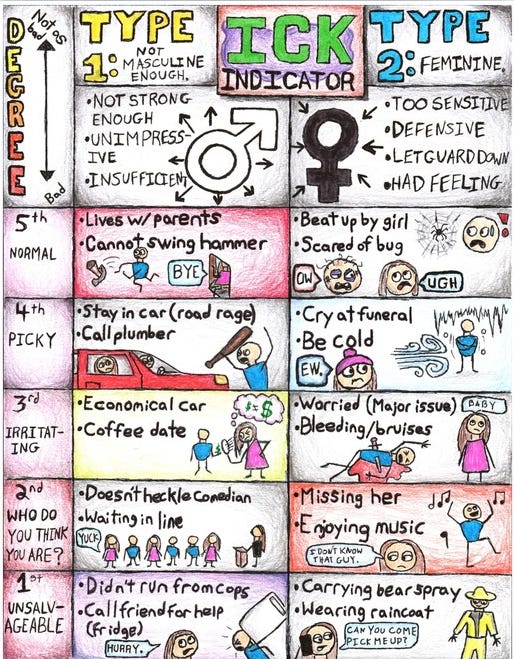

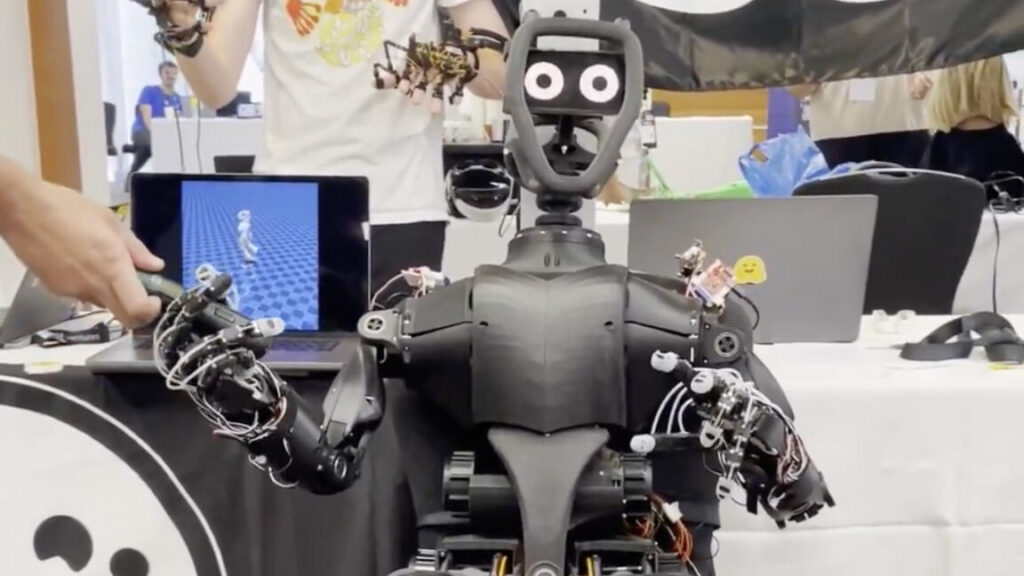

To put all this in someone else’s terminology, and hopefully make it clearer, we’re going to have to pull out the visual aids. I realized while editing the post that we’ve effectively been talking about the two different axes on the latest ranking-scales chart for men and women. It’s a fun one, with lots of detail. As usual, take it the right amount of seriously and literally, which is neither super high nor zero.

I have many quibbles with this even as a ‘baseline scenario.’ But I like that this is the quirky perspective of a particular person, who is clearly describing what they observe. And it emphasizes that, mostly, Good Things are Good, and that everything counts.

The discussion at the link, mostly unrelated to the graphic, is the latest iteration of the ‘dating market broke because the men who can get multiple offers solved for the equilibrium’ argument. Without enforcement of various traditional social norms, the argument goes, things collapse into dynamics that aren’t good for most people involved, where men feel little incentive to commit and women have little leverage.

Even if you do want to spend your 20s seeking marriage and kids, that becomes very difficult, especially if you are unwilling to break with the mainstream social scripts around dating.

It also seems there’s a part two? It resonated a lot less, and feels like it says a lot more about the author than anything else, but it seems fun, so I’m sharing it anyway.

There was much talk about this Reddit thread, reminding some of this other thread.

(As usual, the story might be fake but the hypothetical and the reactions are real.)

My boyfriend and I are both 28 years old and together for 2.5 years. Yesterday night we were drinking and one thing led to another and I tried to compliment him by saying he is not someone who I would hookup or be a fwb with but marry.

I thought everything was fine but he seemed extremely distraught after that. I realized how he understood it and tried to clarify it but he is still the same this morning.

He told me he needs space to think for a while and left the house. All my friends tell me I messed it up and guys tell me it’s not a compliment and most men will understand it differently. I think I destroyed our relationship and I am panicking right now.

Bern the Fallen: Now I know why this is ringing a bell it’s like the TOMC “I broke my wife and I don’t think it’s fixable.”

Story where a guy basically says the same kind of “compliment” about his wife and she walks. It’s the same sentiment but many aren’t comprehending it.

Can’t lie I love when there’s a gender flip on a situation, it can help people see it differently.

This shouldn’t be seen as a ♂️v♀️gotcha but an understanding of what the other person feels receiving such a compliment that comes off as backhanded due to the qualifier/comparison +.

Misha: I hope everyone has learned a lesson about how to not give a compliment to a guy. I think there’s too much unstated context for us to conclude things about him or their relationship here, but is there ANY context in which it improves a compliment to start off by saying you don’t want to fuck someone?

Rat Bastard: Remember that time Aella posted about a guy she was dating saying she’s “not that pretty” remember how all the replies suggested that like, she was being emotionally abused or something.

The original poster’s boyfriend is wildly overreacting, but perhaps he is simply too attuned to how women declare war, lol.

Women think it’s a compliment, actually, because they are focused on the “marriageable” aspect, and I think they are right that it is unfortunate that many men nominally want to get married but legitimately do not really care about being marriageable.

My “insult everyone” take here is that most men don’t NEED to care about being marriageable and they know it.

If you won’t fuck them, you won’t date them, which means you wont marry them so it’s all moot anyway, is the vibe.

It makes sense that these kinds of comments can be unfixable dealbreakers. The information can’t be taken back, and potentially colors everything.

Danielle Fong (referencing the chart in the previous section): basically, the girl is saying “you give me great investment” (husband, friend-zone quadrant)

But you are not hot enough to be a prince charming, a situationship, or the bad boy, you are not on the left column.

Now, depending, the guy can be fine with this ig, but, say he is putting up an unsustainable amount of effort (investment high) — this would not be a good sign. Drop off for a bit and you’re settling or worse. And “apparently” nothing.

This is stupid. I can tell you; work out and you’ll be getting the attention. Guys who can’t be hot are just being lazy. Skill issue, really.

Also, why is the guy so sensitive that he can’t take what is intended to be a compliment? Snowflake ick type thing. Second degree type two ick imo.

Malcolm Ocean (also referring to the chart from the previous section):

What she says: I would marry you but not hook up with you

What he hears: you’re disgusting, a sweeper, I wouldn’t even want someone to see us together

What she means: you’re charming/husbandzone so I don’t want to get attached if this isn’t going anywhere

‘Not being hot is a skill issue’ is a bold take. It’s not entirely wrong: especially if you go beyond exercise into various other areas, there is usually a lot of room for improvement. But a lot of it is not a fixable skill issue, especially when it comes to being hot to a particular individual person, and once impressions have solidified. Also, that’s a lot of additional investment, if you don’t otherwise want it.

My quick model is that this causes three distinct problems.

-

Feeling unattractive and unwanted really sucks. So does being with someone who might well stop wanting to have sex or sees it as a cost rather than a benefit, especially over a longer term once things are locked in.

-

If you are insufficiently attractive, then you have to compensate for this with other investment. It makes it more likely she’ll be unhappy long term. It weakens your effective bargaining power and you have to worry you are not secure in the relationship.

-

You have to worry a lot more that she’ll look for or find someone she thinks is better, and either have an affair or try to upgrade.

If she means what Ocean thinks she means, and you’re ‘too good’ to only hook up with for fear of getting attached or whatnot, then she’s communicating quite poorly but has the opportunity to clarify and save it. The whole meaning can be turned around. And indeed, even if that isn’t what she meant but she is willing to lie to save the relationship, this is the best lie available.

I think most of the time that’s not what she’s saying.

Obviously, successful pairings happen all the time with attitudes like this. Most successful pairings don’t involve maximum baseline physical attractiveness before growing into that, and if they did, that would mean everyone is paying way, way too much attention to looks. You still have to be very careful how you say that.

Indeed, one of our big problems is exactly that we don’t give not-maximally-physically-attracted pairings situations where they are set up to find each other and then succeed in spite of that. Instead, we do the opposite, we tell people and especially men that if they aren’t sufficiently attractive, they will never get the opportunity for the rest, and also will be in constant danger of losing everything.

Game theory of Jane Austen regarding dating strategy. Fun for those who were forced to endure her in school, but nothing most readers would regard as new.

I don’t know if there is actually a pattern of those claiming to be ‘29 year old boss girls from TikTok’ having public meltdowns about failing to find a man despite their otherwise amazing lives.

I do know that the one here is complaining that people are telling her she is wrong, that she is tired of waiting for a soulmate who ‘matches her energy’ to suddenly appear, and yet she says she is not asking for a lot. I know that she says ‘all her friends’ have their finances and husbands ‘that they’ve prioritized,’ which is evidence against this being so impossible, and perhaps evidence that she made different choices about what to prioritize. She clearly feels entitled to a soulmate.

Mason: I think the truth is that being emotionally available and able to progress a relationship with a fellow imperfect human being without a neon blinking sign from God is actually a skill.

Which many people do not have and which they do not realize they could have.

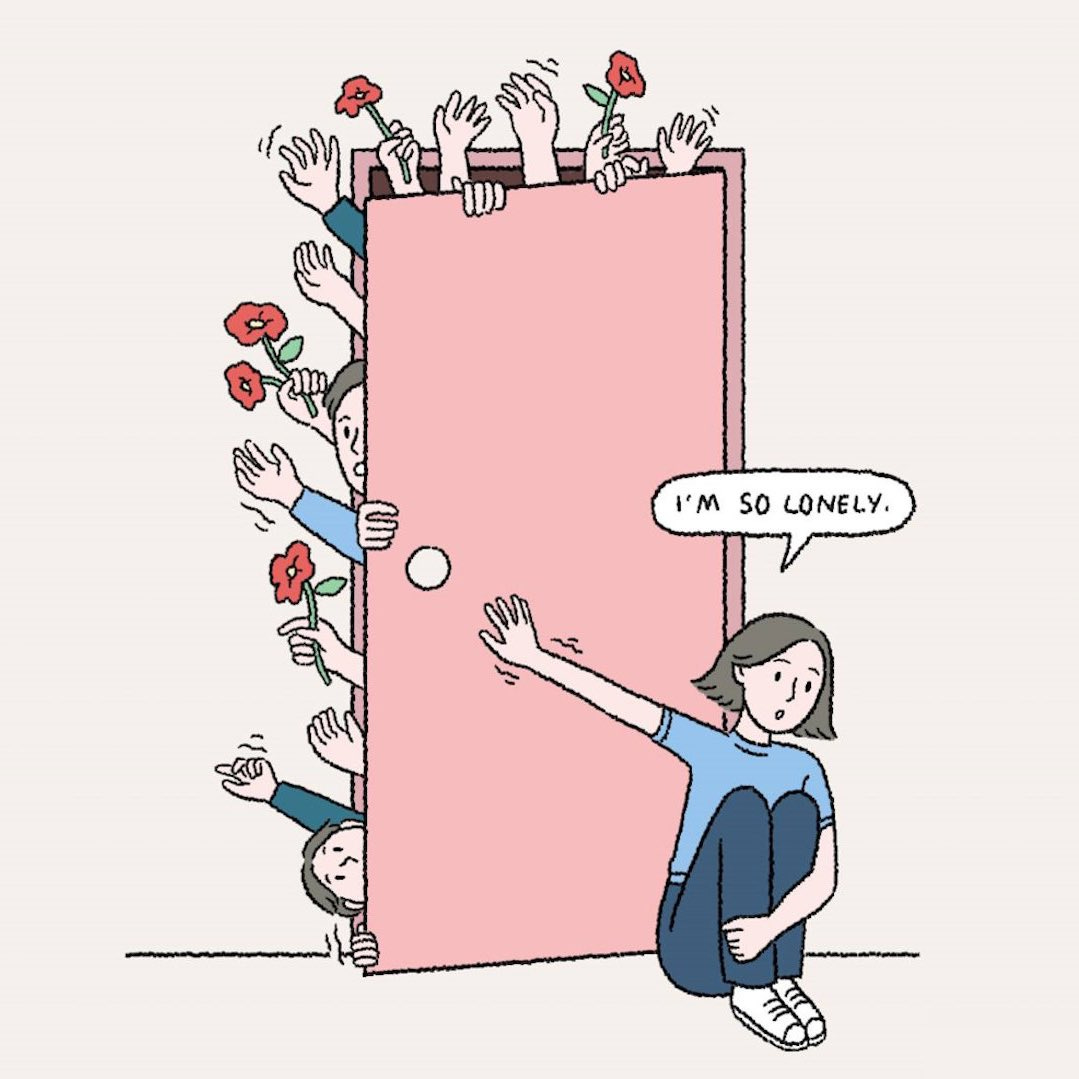

Here is a woman who more credibly reports trying, for years, yet finding no one.

Signull: Forget the pandemic; there is a literal epidemic of women who cannot find anyone they want to be with—it feels like we are at the precipice of a radical new cultural reality that is changing so fast that most people do not have the capacity to keep up.

Katherine Dee: A big part of this is a more generalized loneliness. Notice how in each of these videos they lament not having a community—it is not solely about romance, and it is cruel to omit that detail.

It is noteworthy that, in the first clip, Katherine is wrong: the woman mentions all her friends rather than complaining about a lack of community.

Perhaps not the central point, but: the actual story was about her going out to a comedy show. She seems not to have known what it means to sit in the front row, which often means you are going to get absolutely roasted. Instead, she was singled out, praised for being brave, and given a free gift bag. Then she went home rather than have a drink, because she had this gift bag.

You fool! This was actually a great situation. An entire room full of people heard the comics call you brave, and that drew their attention to you. This is exactly when you go to the bar. So what if you have a gift bag? There’s a good chance you get approached, you have something to talk about, and that is exactly what you came out for.

Another problem is, what if dating no longer gives you even a little excitement, even if you’re not going on that many dates? The obvious answer is ‘find better people to date, that actually excite you’ but that is not easy. Neither is ‘find people who are unpredictable and liable to say interesting things,’ or even ‘find activities that are inherently exciting even if your date isn’t.’

Presumably a lot of this is a lack of the dance of ambiguous escalation?

Rob Henderson: Two different young male friends, upon reading this lively passage from @GlennLoury ‘s incredible memoir, reacted with some variation of “I have never related to anything more in my life.”

Anna Gat: Without this we would be soooo bored 💄

If your strategy involves moving away from the dance, if it resolves the ambiguity too easily, then there is great risk that what remains is not exciting or fun.

I never went on enough dates, or rather never had enough unexciting date opportunities, to have this problem. My presumption is that the right strategy is to think: I now have an excuse to go out and meet someone new, and if I’m not enjoying it or seeing much value by default, I get to mix it up. You also get to adopt a gambler’s mindset: if it works it’s pretty great, so you can afford a lot of uneventful dates along the way. Or maybe… just don’t be all that excited, and that’s kind of fine?

‘Act like you’re on Bachelor in Paradise except without the cameras’ is remarkably close to good advice. Easy to say, hard to act on. Move fast and break things; in particular, fail fast, and treat every relationship as either headed for an engagement or not worth pursuing further.

Nick Cammarata: I hate how well asking myself “if I had 10 times the agency I have, what would I do” works.

Chris Lakin: If you were serious about dating, you would be doing everything you could to break up as quickly as possible. Most relationships do not work out. If you are dating to marry, that does not require three years to figure out. One year, maximum, to determine if the relationship is doomed.

thinking about running an event where couples come stress test their partnership. “is your relationship doomed? come find out!” lmk if you have ideas. Matchmaking is out, Breaking Up Sooner is in

“second date should be a 3 day trip to Montreal together”

Emmett Shear: The people I know who are happily married almost universally were trying to make every relationship they were in work for the rest of their lives. They also quit as soon as they know it won’t work out that way, but inevitably it’s better to err on over persistent than under.

There is no case where I wish I’d followed this advice less, and several where I would have benefited from following it more.

The counterargument is that doing this is difficult and painful. Fair enough.

The other counterargument is that being in a long term relationship teaches you things you can’t learn other ways. But from what I’ve seen, often you then need to unlearn exactly those lessons.

Mostly it seems super wise, the moment you can tell it’s not going to work out, to act accordingly. That doesn’t automatically mean ending it right away, since fun is valid, but if you’re out of the super fun period, it kind of does mean that.

Matthew Yglesias: If you’re paying attention, it’s pretty easy to tell if things aren’t going to work out.

More precisely, I would say there are a lot of cases where you can’t tell and it might work out, but yes there are many that are pretty doomed and it’s obvious early on, so don’t pretend not to notice.

(Definitely one for Remember to Reverse Any Advice You Hear and even more than usual I’m not endorsing the quoted text.)

Of course you have no available LTR options you like, if you did you’d have an LTR.

It’s a matching problem. Anyone medium term unmatched is not going to have easy access to matches they want. That might or might not mean they have unreasonably high standards.

rebecca: why is no one talking about the female loneliness epidemic??? hellloooo

Kangmin Lee: “Female loneliness epidemic.”

Allie: Most women have 100 options for casual sex and zero for serious relationships.

Casual sex does not make you feel less lonely.

Hoe Math: You mean “zero options that you like,” right?

Like, if you start with the men you like and ignore everyone else, you have zero options for a long-term relationship.

But if you count the men you do not like, then you have many options, right?

Ami: Are you supposed to date people you hate and find annoying? Genuine question.

Hoe Math: No, but do you remember when you were younger, perhaps 11, 12, or 13, and you liked boys who did not possess any of the traits you now look for in men?

Do you remember being perhaps 16, 17, or 18, and being impressed by guys who had an apartment and a car? Even though you would never think that is sufficient anymore?

Can you see how the more experience you gained, the higher your standards became?

Well, because of how modern society encourages women to be “liberated,” they are gaining more experience earlier in life than ever before.

That means they are “moving on” from men who would have been acceptable to them in a time when things did not progress so quickly.

It used to be common for women in their 20s, 30s, and 40s to see ordinary men doing ordinary things and think, “Perhaps he is single,” with only a slight tinge of attraction, a curiosity.

Now, nearly all women fully expect to be captivated from the first moment. They want men who have everything going for them.

What this means is that women are overlooking men who are truly on their level more than ever because they feel they are “above” those men.

When you are 16, he is so cool because he has a car and his own place. When you are 32, “So what? I have a car and my own place, too, and they are nicer than his!”

The artificial pressure placed on women to enter the workforce and the “liberation” movement have caused women to cease being impressed by ordinary men.

So no, you are not supposed to force it and date someone you hate. . . you are just not all supposed to be so bored by the average guy.

Ami: I love this answer so much. ❤️🥹

Everyone has at least some non-standard preferences, so you can reasonably hold out for a much better than random match given your general market value, even if you only do an average amount of search.

But that only goes so far, especially if you mostly want generically desired attributes and don’t have excellent search methods, and there are preference mismatches at the population level. The remaining market is going to at best suck, and potentially break down entirely.

This book review of How Not to Die Alone distills a very clear explanation of why dating advice for women is so typically unhelpful. They keep repeating the same three pieces of advice.

They’re all good advice, but neither complete nor usually all that actionable.

Jacob Falkovich: I used to joke that women only ever get three pieces of dating advice:

-

Don’t be ugly.

-

Don’t be insecure.

-

Don’t try to marry the fuckboi.

The first is delivered in private, or in glossy beauty magazines whose covers strongly imply that any man who reads them is gay. Popular dating advice books generally limit themselves to the latter two. One could think of other advice books could offer unrelated to insecurity and fuckbois. For example: that they could figure out what men want from them and do more of that. But books generally don’t, for two reasons.

First: telling women they need to fix themselves and/or to pay more attention to men goes against the spirit of our time. This sort of advice would feel entitled coming from a man and a break of solidarity coming from a woman; maybe an enby would get to it some day.

Second: telling women they’re fucking up and need to change goes against rule #2: don’t be insecure. And since that’s the main piece of dating advice women get, you’d be stupid to go against it.

Jacob then goes on to absolutely savage the ‘science’ that the book in question (How Not to Die Alone) is based on, while noticing that the advice is perfectly respectable and mostly seems right, but generic and in line with general expectations.

I actually think the central theme of much of the book’s advice falls outside the three categories above, while also being good advice. That theme is:

-

Engage in deliberate practice, act intentionally and follow through on decisions.

The book knows better than to say anything is your fault. But have you considered making better more deliberate decisions, and journaling to record what you learned?

Whether or not it is useful to think of things as ‘your fault’ depends on how you react to that. If it helps you improve and learn and have hope because you can fix your fate, great. If it makes you insecure and afraid and hating yourself and you stop leaving the house, then that’s not great.

It’s weird that the book is doing that while also explicitly holding the reader blameless.

Jacob Falkovich (summarizing the book): Your problems are common, which means you’re normal. Your problems are a worthy subject of study for the world’s most prestigious researchers, which means you’re important. And the researchers have found that every single problem was caused by things outside your control, which means you’re blameless. You are normal, important, and blameless; there is nothing you need to fix.

Jacob (providing advice): But if you aren’t, thinking of yourself as a fuckup who’s constantly improving feels much more secure than the opposite. It makes every rejection a positive — an opportunity to learn! And every positive development is a validation of the progress you’ve made, which can only continue, as opposed to being about some default desirability you’re clinging on to.

Even if you’re a millennial, it’s not too late to not be yourself.

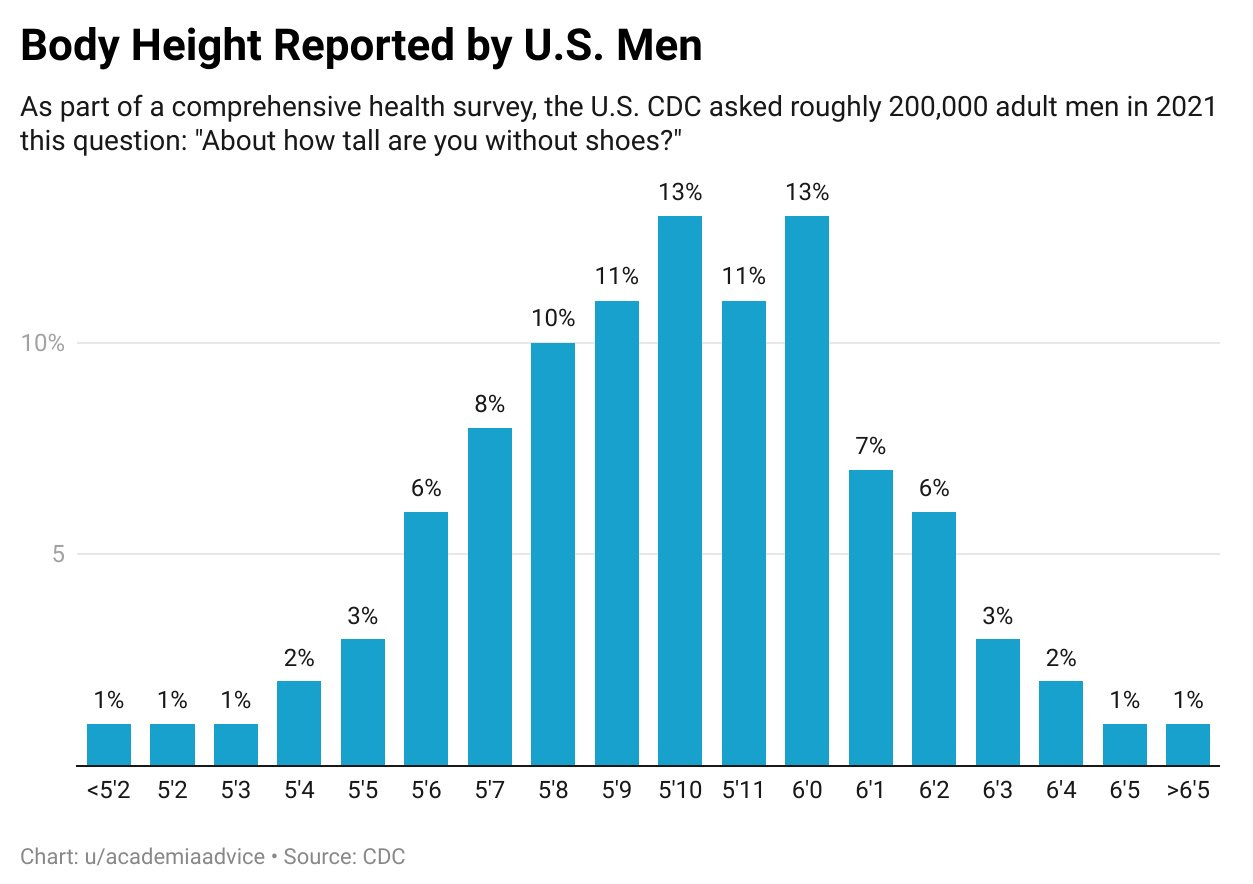

Cremieux: There are suspiciously few men who self-report being 5’11” and too many men report being 6′.

Eliezer Yudkowsky: I was 5’11” when measured and it had literally never occurred to me to misreport this.

Nat Rhein: As a 6’0″ I am now considering calling myself 5’11” because I’m more concerned with passing low-level “liar” filters than low-level “short” filters.

The funny part is that this isn’t for a dating app. This is a CDC survey. So the rate of rounding up on dating profiles is presumably a lot higher.

Except: Height does not correlate with the chance men are married or have a child.

Aella: I really don’t get why women want tall men so bad. Sure it’s a little nice but not *that* nice. the actual important thing is can he easily throw you around in bed? Height doesn’t matter when you’re screaming.

Tall is overrated and overvalued, I believe largely because it is easy to notice and measure, and the most legible to others. If you seek the tall man, you are ‘overpaying’ for it. So to the extent the dating market is ‘efficient,’ unless you have a relatively very strong height preference you should be sacrificing height to get more of other things you want.

Lyman Stone: Yes, we should incentivize people to get married. Marriage bonuses are an unalloyed good. There are zero social harms incurred by doing this.

First of all I feel like making your policy angle “trophy wives of wealthy men is a bad social outcome” is probably not a winning line in DC to begin with.

But secondly, we all find the sugar daddy dynamic icky— but is it actually less icky if the woman has no legal rights?

Marriage may actually provide her with some rights and protections. As a girlfriend she has far less.

Furthermore, marriage may induce the man to alter other behaviors in prosocial ways.

So yeah, we want them to get married.

C’mon @PTBwrites, don’t chicken out on marriage penalties! This line is silly. It’s perfectly fine, indeed actually good to eliminate marriage penalties in a way that incidentally generates marriage bonuses! We want marriage bonuses too!

Let’s just make this super clear though: My actual view is the French have this right. All tax brackets should double when you get married. They should multiply again for each kid you have. Married+4 kids earning $100k? You should be taxed like you earn $100k/6=$18k.

Robin Hanson: Well obviously not ALL of us find the sugar daddy scenario icky.

Lyman’s full proposal is instantly-reverse-the-fertility-crisis, bazooka-level big. I don’t know that I’d go that far, but on any realistic margin, movement toward it is great. At minimum, we need to eliminate marriage penalties and have at least some marriage bonus. We want to encourage marriages.

Having one spouse support the other is fine and good, we shouldn’t punish that.

The ‘sugar daddy’ scenario is icky to many (not all!), but as Lyman says, a marriage if anything reduces the ick level and the power imbalances involved. Given sugar daddy is happening either way, sugar husband is an upgrade. You might prefer to have neither, but is that the primary effect you’re getting by punishing the marriage?
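To make the arithmetic of Lyman’s proposal concrete, here is a minimal Python sketch of income splitting: divide household income by the number of household members, tax that share at single-person rates, and multiply back up, which is the same as scaling every bracket threshold by household size. The bracket thresholds and rates are invented for illustration and match no real tax code, and counting each spouse and child as one full unit follows the proposal as stated rather than the actual French quotient familial, which weights children differently. Under this rule a married couple with four kids earning $100k is taxed as six people each earning about $16.7k.

```python
# Minimal sketch of "brackets scale with household size" (income splitting).
# ASSUMPTION: the bracket thresholds and rates below are invented for
# illustration; they are not any real country's tax schedule.

# Single-person brackets as (upper bound of bracket, marginal rate).
BRACKETS = [
    (10_000, 0.10),
    (40_000, 0.20),
    (float("inf"), 0.30),
]


def single_tax(income: float) -> float:
    """Progressive tax owed by one person under the hypothetical brackets."""
    tax = 0.0
    lower = 0.0
    for upper, rate in BRACKETS:
        if income <= lower:
            break
        # Only the slice of income inside this bracket is taxed at this rate.
        tax += (min(income, upper) - lower) * rate
        lower = upper
    return tax


def household_tax(income: float, married: bool, kids: int) -> float:
    """Tax a household as if each member earned an equal share of the income.

    Dividing income by the number of units and multiplying the resulting tax
    back up is equivalent to multiplying every bracket threshold by that number.
    Each spouse and each child counts as one full unit, following the proposal
    as stated (the real French system weights children differently).
    """
    units = (2 if married else 1) + kids
    return units * single_tax(income / units)


if __name__ == "__main__":
    print(round(single_tax(100_000)))                           # single earner
    print(round(household_tax(100_000, married=True, kids=4)))  # 6 units
```

With these made-up brackets, a single earner on $100k owes $25,000, while the married couple with four kids on the same $100k owes $14,000, because each member’s share stays within the two lowest brackets.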

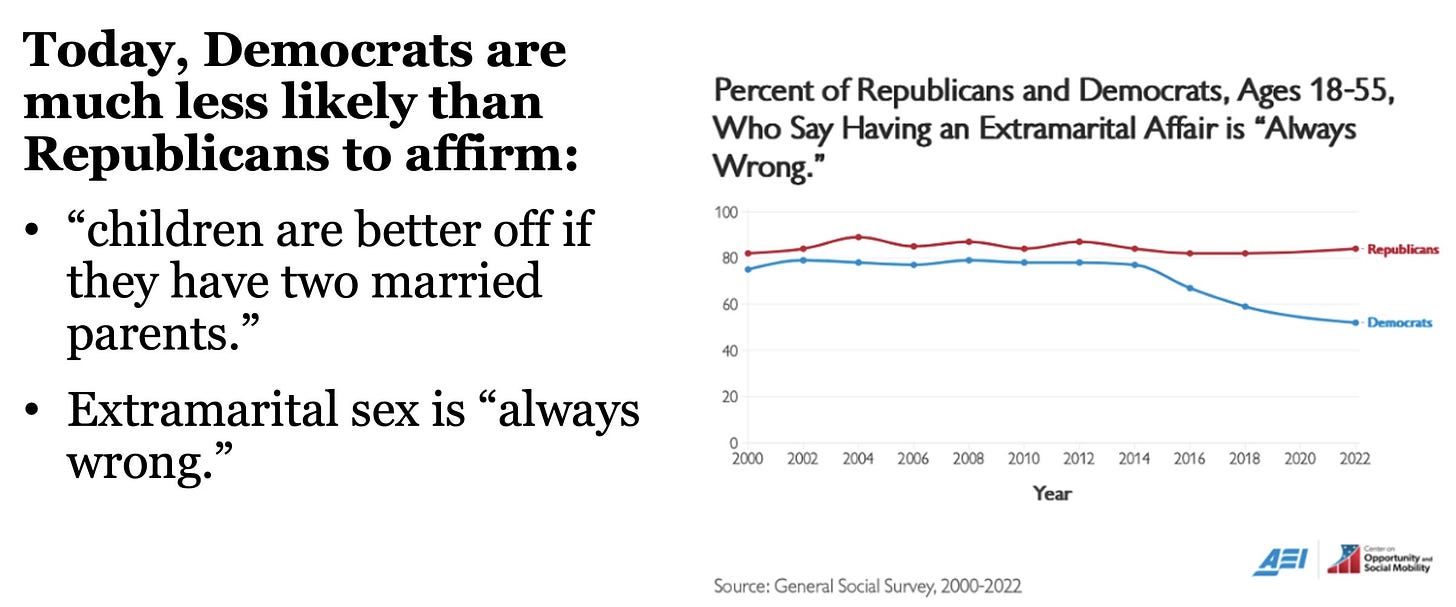

This is an interesting divergence, but it’s also a highly mislabeled diagram:

Brad Wilcox: Marriage-minded conservatives have largely stuck with the Republican Party (even under Trump) “because the Democratic Party has not provided them with a credible alternative, having moved hard to the cultural left in the wake of what @mattyglesias called the ‘Great Awakening.’”

The question was whether “extramarital sex is always wrong,” not whether an “extramarital affair is always wrong.”

Part of the cultural divide is arguing over whether there is a difference.

Lyman Stone: this is a WILD split after the politics of the last 8 years!

Nonmonogamy is adultery. The fact that many liberals approve of adultery because they have found a relabeling of them that helps deal with cognitive dissonance doesn’t change the fact it’s adultery.

I very much think there is a difference.

Aella offers a thread of polyamory lessons learned, with clear themes.

-

Be honest. Be open. Share everything. Don’t suppress anything. Only way it works.

-

Do not worry about what is ‘reasonable.’ Make choices.

-

Everyone must be fully bought in and not even ‘open to’ monogamy.

This is asking a lot. Which is good, if that is what it takes to make polyamory work. You have to ask for what would actually work, not what sounds nice (see also: AI alignment and everyone not dying, etc, sigh).

It is highly plausible to me that there is a small minority for whom this all comes relatively naturally. And that for them, if they practice it with discipline amongst themselves, this particular equilibrium can work better than the alternatives.

However, this is very different from what most polyamorous people I have met suggest. Most such folks make the case that polyamory should be a default, and recommend various things Aella warns do not work.

Here’s what happens when you don’t heed point three:

hazel: sometimes you can tell a polycule is a competition to be the one who gets chosen when the Main One decides to go monogamous

Snufkin: As a poly person who attracts monogamous people….this dynamic is the gremlin that follows you around at 200 paces back wearing a hat that says “no, it’s cool, I understand” while looking sad as hell

Aella: This is why I refuse to date anyone who is open to being monogamous.

Aella then tells a composite story: your partner meets someone who is monogamous but willing to try poly, there’s no plan, but the two of them end up falling for each other, and then your partner leaves you to be monogamous with them. That does seem rather common, based on the experiences I know about, and it all makes sense.

That sets a high bar: poly should consist only of people who are fully committed to it. Aella says she was committed the moment she heard about it, but presumably most people can’t possibly be confident until they try it out? That was the actual argument for trying it out that I used to hear constantly in San Francisco, and this is exactly the opposite.

The obvious problem is: Under this framework, everyone involved in your polyamory must be ‘all-in’ on it, and not even be open to monogamy.

But how can you be all-in without experiencing it first? I would assume most people, even if being all-in on polyamory would ultimately be right for them, won’t be able to know this in advance.

It is not this simple, but I believe this is directionally correct.

Allie: I will scream until I am blue in the face: People do not cheat because you are not attractive enough.

If you were not attractive enough, they would not have dated you in the first place.

People cheat because they have personalities that cannot be satisfied, which is why they will remain unhappy.

Shoshana Weissmann: This, extremely this. Such people can grow and stop cheating, but dating a cheater is a real risk because of that.

From what I’ve seen, by far the biggest risk factor for cheating – for every meaning of the word cheating, not only sexually or within a relationship, both within a particular cheating format and for cheating in general – is prior cheating or otherwise being the type of person that cheats. It is an indication of who they are, and can make it part of their identity. The reverse is true as well.

Not all such actions are created equal. The circumstances still matter quite a lot, including evaluating past circumstances to predict implied future cheating risk. Details are important.

Attractiveness does matter too, especially in relative terms and not only in terms of physical attraction. If you’re dating out of your league you are taking on risk. But I think that is a bigger risk factor for them leaving than for cheating.

A key problem in our civilization is that it is legally dangerous to say anything negative about anyone in a documented way outside of certain specific bounds (e.g. leaving online reviews of products). Then again, one must consider the alternative.

Allie: There’s now an app where you can review your ex-boyfriends, and I can’t see this going well. Yes, warn girls you know, especially if a guy is actively dangerous. But if everyone trashes their exes online, no one is ever going to date.

Gilbert Kitchens: I’m sure there won’t be *any* exaggerations and lies told by spiteful exes.

Shoshana Weissmann: In the past they’ve also been shut down for legal reasons. And sharing this stuff can open you up to lawsuits.

Allie: Has this happened with “are we dating the same guy” groups yet?? Those get NASTY.

Shoshana Weissmann: I think there might have been a lawsuit! YEAH someone had me join one at first and it was BRIEFLY helpful and then just became like “this guy is weird” but no real reason.

Tim Newman: They start out to warn women about violent, dangerous men and quickly get swamped with women writing about men who were mere assholes or just bad on a date.

In theory, of course, Tea (rated 4.8+ on the app stores, though the reviews I read make me rather suspicious in various ways) should be great and net positive for our romantic prospects, by reducing uncertainty and via Conservation of Expected Evidence.

Tea says it lets you run a background check, reverse phone lookup, reverse image search, criminal record lookup and sex offender search, including trying to figure out whether the guy is already in a relationship. Filtering out bad apples not only gets rid of the bad apples and lets you accept more marginal dates, it also improves the dates you do go on, because you can trust things more.

What about ‘reviews’ from exes? The same things should be true, if we take reviews as given. If you’re properly calibrated, you should on net come out more excited, and also have more information to help things go well.

The first obvious danger is that an ex could have it out for you, and there will be false positives here, but the alerts should be much better than random. A lot of the negative reviews are from not-crazy exes, and it’s not entirely random, shall we say, who ends up with crazy enraged exes. The accuracy rate doesn’t have to be that high to still be net positive, if everyone is reacting reasonably.
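To make the ‘accuracy doesn’t have to be that high’ point concrete, here is a minimal Bayes sketch. Every number in it (a 5% base rate of bad dates, a 50% chance that a bad date has a negative review, a 5% false positive rate from spiteful or crazy exes) is a hypothetical assumption chosen for illustration, not Tea data or anything from a study.

```python
# All parameters below are hypothetical, for illustration only.


def posterior(prior: float, p_flag_if_bad: float, p_flag_if_ok: float, flagged: bool) -> float:
    """Bayes' rule: P(bad date | whether he has a negative review)."""
    like_bad = p_flag_if_bad if flagged else 1 - p_flag_if_bad
    like_ok = p_flag_if_ok if flagged else 1 - p_flag_if_ok
    joint_bad = prior * like_bad
    return joint_bad / (joint_bad + (1 - prior) * like_ok)


PRIOR = 0.05       # assumed share of bad dates
P_FLAG_BAD = 0.5   # assumed chance a bad date has a negative review
P_FLAG_OK = 0.05   # assumed false positive rate (spiteful exes)

# Overall chance that a given guy has a negative review.
p_flag = PRIOR * P_FLAG_BAD + (1 - PRIOR) * P_FLAG_OK
post_flagged = posterior(PRIOR, P_FLAG_BAD, P_FLAG_OK, True)
post_clean = posterior(PRIOR, P_FLAG_BAD, P_FLAG_OK, False)

# Conservation of Expected Evidence: the probability-weighted
# average of the two posteriors equals the prior.
expected = p_flag * post_flagged + (1 - p_flag) * post_clean

print(f"P(flagged)         = {p_flag:.4f}")
print(f"P(bad | flagged)   = {post_flagged:.4f}")
print(f"P(bad | clean)     = {post_clean:.4f}")
print(f"expected posterior = {expected:.4f} (prior {PRIOR})")
```

Under these made-up numbers, a negative review raises the estimated risk from 5% to about 34%, a clean record lowers it to about 2.7%, and the weighted average of the two lands back on the 5% prior. Since roughly 93% of men have no flag, most of them come out looking slightly better, even with a one-in-twenty false positive rate.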

The second obvious danger is poor calibration. You don’t want Tea users to only or mostly update negatively on such reviews. There will doubtless be some of this, it’s unclear how much.

I’d also note that this likely constitutes positive selection for the men – the women who are now more positively inclined will tend to be the ones you want to date. Good.

Then there are the incentives, and how this changes dynamics while dating. How much do interactions change when the woman may be your future ex writing a tea-spilling future review? Some amount of this is good, since it rewards staying on good terms and treating her well. This can also be a threat, or held over your head, and have some decidedly nasty second-order effects.

My guess is that while tools like Tea remain rarely used, this is all clearly good. If they reached a critical mass, where there was too much negative selection risk out there for women not to use them, then the fact that all the false positives and unfortunate situations correlate (e.g. everyone you want to date sees the same info, which can ruin your chances across the board and can be used as a threat) makes things a lot less clear.

Here’s Sgt Blackout thinking he’s solving for the equilibrium and failing, via a combination of objectification and then taking the 0-10 scale and completely butchering everything related to it on multiple levels at once, including by conflating a hotness-only-kind-of-offensive-objectification scale with an actual-human-including-personality scale, and trying to condense two dimensions down to one by pretending they correlate way more than they do.

If you do talk with numbers to rate anything, in any context, you always have to be clear what the numbers refer to, and what those numbers are leaving out.

It is obviously correct, however, to keep an eye on the distinction he’s pointing at underneath all that: a personality type of ‘I am the hotness and get to act like it’ definitely exists, and most people reading this should mostly avoid it, even when that person is right.

Wisdom about the 0-10 scale:

Felisa Navidad: When men are debating online whether some woman is an “8” or a “10” or w/e, I interpret it as basically the same kind of thing as when they are debating who would win in a fight, Batman or Superman.

BDSM and kink have gotten steadily more prevalent.

If you are looking for ways to give yourself more value on the dating market?

As I understand the situation, this very much is one of them.

- The involved population tends to be relatively interesting in other ways.
- The social conventions of BDSM spaces make many things much easier.
- Huge supply and demand imbalance. Submissives greatly outnumber dominants.
- Most dominants do not put in the work to be good at it. You can.
- It takes remarkably little work to quickly get relatively good at many aspects.
- That work is largely technical skills, very compatible with geeking out on it all.
- Many have highly particular preferences. If you can satisfy them, that’s huge.
- Most dominants do not treat submissives well, or listen carefully. You can.

Being down for more things, and knowing how to execute on them properly, is a kind of low level dating superpower. And it is one you can learn. It also often helps with confidence.

You would of course also want to figure out which aspects you can actively enjoy, and which you cannot, and act accordingly.

Aella breaks out some conceptual subtypes here. Note that the darker and more ‘hardcore’ stuff tends to be less popular. Most of the demand is for relatively light aspects that don’t require being all that actively kinky.

She also notes that different sexually successful guys can report overall very different female preferences in terms of liking it rough versus gentle. There are so many different decisions you make along the way, both big and subtle, that shape both who you end up dating, and also what they want from you.

Also, gasp, I know: sex dolls are not representative of typical body types.

Here Aella talks about some of her interviews with people with obscure fetishes, as in ‘I like that one completely otherwise non-erotic scene in that one movie and literally nothing else.’

Lovable Rogue: I think a lot of people who haven’t truly shopped around don’t realize how good the 99th percentile are in bed.

It’s actually not a dig because often times those people aren’t / don’t want to be good partners.

Aella: it’s insane cause we have a good concept of what ‘high skill’ looks like for skills we can see – piano, dancing, whatever.

What if we treated sex the same way? It turns out there *is* a high skill ceiling, but people really have no idea how much better it can be.

It does require a few things tho. Like, maybe you only enjoy learning jazz on the piano. You *could* learn how to do classical, but it would be a bit of a slog. It’s gonna be hard if you marry someone who only enjoys listening to classical no matter how skilled both of you are.

…

Those ppl are gonna get blown out of the water by those who Practice.

Of course the 99th percentile person – either for you in particular, or in general – is going to be very, very good in bed. As is the 99th percentile match. And yes, you can get a lot better with practice, both in general and as a match for a particular person. It would be absurd to think otherwise.

I find Rogue’s comment interesting, including the question of how you would know enough 99th percentile people well enough to form a pattern, even by reputation. One can imagine this going either way. Perhaps the way you get great at sex is that you really want to be a great partner in every way. Perhaps it’s a way to avoid having to do that in other ways, or it trades off against developing other skills, or the way you get good involves not otherwise being that great a partner, shall we say.

They ran the ‘random stranger propositions people’ study again:

Rolf Degen: 45 years after Clark and Hatfield’s initial experiment and 10 years after the latest replication, the present study showed that this gender difference persists. Significantly more men than women (27% vs. 4%) accepted an offer [of casual sex].

This effect was particularly large among single participants. In the sex condition [of study 1], 67% of male singles accepted the offer compared to 0% of female singles.

I assume the 0% vs. 4% is a random effect, the sample sizes are not that huge.

At the same time, our results question Clark and Hatfield’s finding that the gender difference is especially large when it comes to explicit sexual offers. Indeed, our results show that the gender difference is independent of the proposition’s explicitness as men were more likely than women to accept any of the three offers. Furthermore, acceptance rates of both men and women were much lower than those reported by Clark and Hatfield and also lower than those of previous replications.

Overall, it seems that the receptivity to casual sexual offers from both men and women has dramatically decreased over time.

These are huge gaps in acceptance rates, but with no interaction between gender and explicitness: the more explicit the offer, the less likely everyone was to accept it, but you could shoot your shot either way, contradicting Clark and Hatfield’s results.

I find the new result very hard to believe in relative terms, and am highly tempted to either defy the data or wonder about the people conducting these studies – if I can choose who is asking in both cases then I bet I could equalize the explicitness effect?

I can totally believe that receptivity has declined over time across the board, sad.

A practical guide to giving blowjobs to file under ‘it all sounds obvious but that doesn’t mean having it written down isn’t helpful.’

This continues to seem spot on to me.

Alexander: A few people made comments yesterday to the effect that men will have sex with promiscuous women, but not form long-term relationships with them.

This doesn’t seem to be reflected in nationally representative marriage data: women with high “body counts” aren’t less likely to get married in the long run.

Past promiscuity doesn’t seem to stop people from getting into long-term relationships or getting married. Perhaps unsurprising since a lot of people don’t even ask the “body count” question – 49% of men and 42% of women report having ever been asked at all in my surveys.

Consistent with this, about 50% of both men and women report never asking.

This doesn’t mean it is inconsequential for relationship outcomes: a larger sexual history is associated with relationship dissolution and infidelity. People like to frame this as “women’s body count,” but the associations are the same for men and women. This probably isn’t causal (eg – casual sex isn’t “frying your pair bonding receptors”). It’s simply that higher promiscuity is associated with lots of behaviors and traits that predict relationship dissolution.

This seems like the default, and Alexander covers the obvious mechanisms. One could also note what this leaves out: being more promiscuous likely correlates with more shots on goal, more opportunities, and various desirable traits, given how often the person was in fact desired. So there is some amount of balancing out.

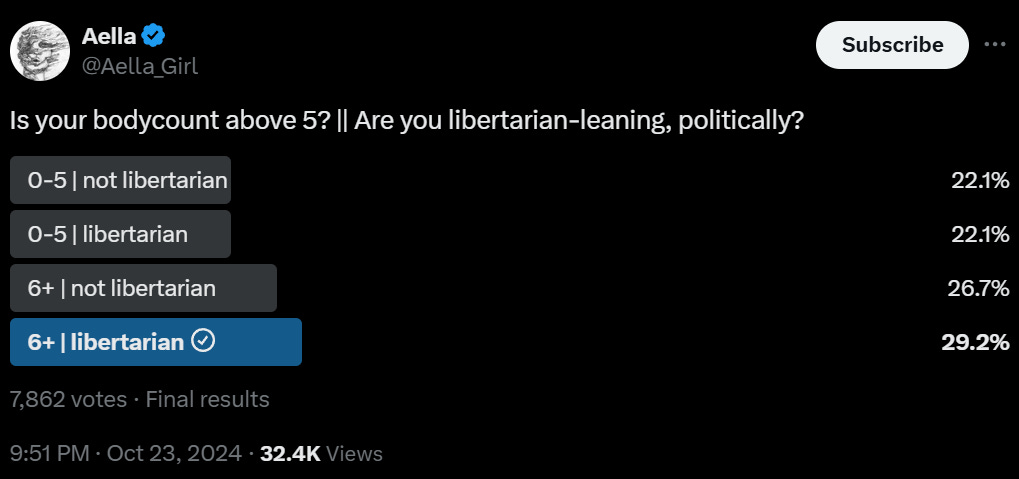

This poll provides three data points:

One result is that being libertarian is only slightly correlated with body count, once you control for voting in Aella polls. Another is that self-described libertarians are 52% of Aella’s voters, which seems about right.

The result that actually stood out to me was that only 56% of voters had a body count of six or higher, and again these are Aella poll voters.

It’s good to be reminded that most people really don’t have sex with that many people.

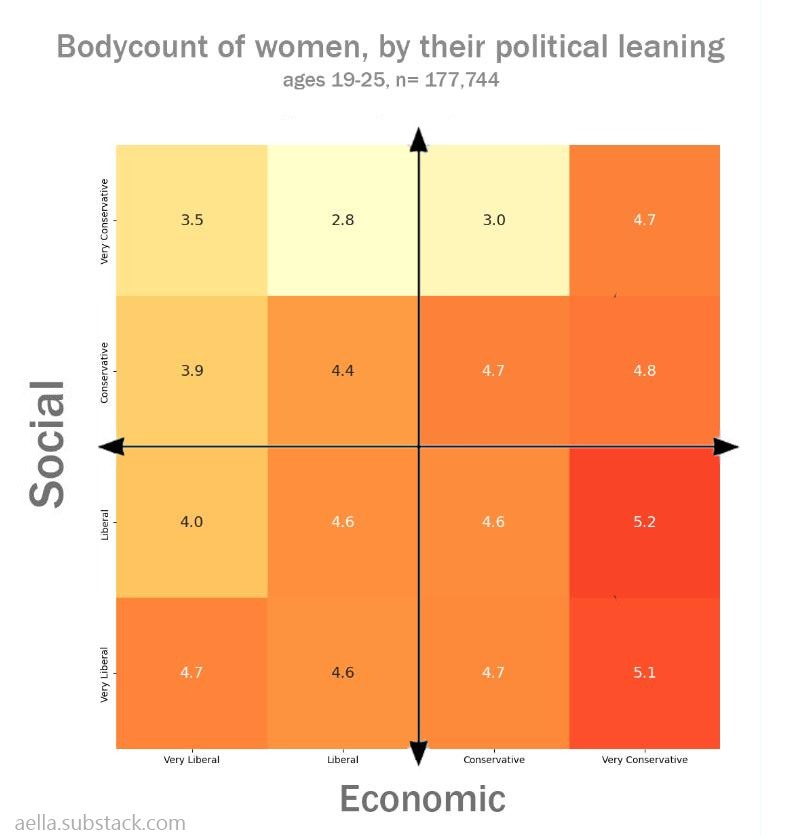

Here’s another self-reported body count chart. These are mean values. Model this?

In a divorce, the woman is more likely to be the one who pulls the trigger. That does not tell you what or who was ultimately responsible for that being the final outcome.

Regan Arntz-Gray: I’ve seen this repeated elsewhere and want to clarify that the “women initiate 70% of divorces” stat comes from survey data from How Couples Meet and Stay Together. It may be true of filing data as well, but this is what couples self report. Using the extended data set through 2022 I found women initiated 65% of divorces (note that when respondents indicated a mutual breakup it is counted as 50% female initiated and 50% male initiated, so the underlying numbers are 54% initiated by woman only, 23% by man only, 23% mutual).

But I still agree with Allie’s sentiment, that this doesn’t mean women *cause* 70% (or 65%) of divorces, or even that they’re more flippant about divorce. My read on it is that women *think more* about their relationship in general and are therefore more likely to notice when it’s degraded to a point of no return, and to call it, asking for a divorce. I think the typical man can bury his head in the sand for longer.
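As a quick check on Regan’s arithmetic, counting each mutual divorce as half initiated by each spouse gives:

$$
P(\text{woman initiated}) = 0.54 + \tfrac{1}{2}(0.23) = 0.655 \approx 65\%, \qquad
P(\text{man initiated}) = 0.23 + \tfrac{1}{2}(0.23) = 0.345
$$

The two shares sum to 1, and the headline figure depends on that half-and-half convention for mutual splits: counting only divorces initiated by the woman alone, the figure is 54%.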