Utah leaders hinder efforts to develop solar energy supply

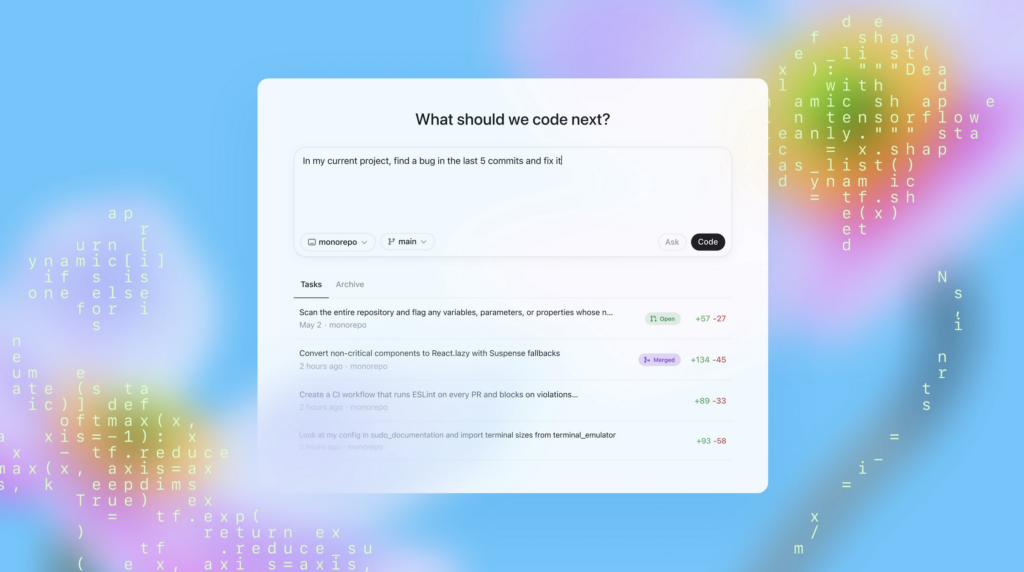

Solar power accounts for two-thirds of the new projects waiting to connect to the state’s power grid.

Utah Gov. Spencer Cox believes his state needs more power: a lot more. By some estimates, Utah will require as much electricity in the next five years as it generated all last century, both to meet the demands of a growing population and to attract the data centers and AI developers that would fuel its economy.

To that end, Cox announced Operation Gigawatt last year, declaring the state would double energy production in the next decade. Although the announcement was short on details, Cox, a Republican, promised his administration would take an “any of the above” approach, which aims to expand all sources of energy production.

Despite that goal, the Utah Legislature’s Republican supermajority, with Cox’s acquiescence, has taken a hard turn against solar power—which has been coming online faster than any other source in Utah and accounts for two-thirds of the new projects waiting to connect to the state’s power grid.

Cox signed a pair of bills passed this year that will make it more difficult and expensive to develop and produce solar energy in Utah by ending solar development tax credits and imposing a hefty new tax on solar generation. A third bill aimed at limiting solar development on farmland narrowly missed the deadline for passage but is expected to return next year.

While Operation Gigawatt emphasizes nuclear and geothermal as Cox’s preferred sources, the legislative broadside, and Cox’s willingness to go along with it, caught many in the solar industry off guard. The three bills, in their original form, could have brought solar development to a halt if not for solar industry lobbyists negotiating a lower tax rate and protecting existing projects as well as those under construction from the brunt of the impact.

“It took every dollar of political capital from all the major solar developers just to get to something tolerable, so that anything they have under development will get built and they can move on to greener pastures,” said one industry insider, indicating that solar developers will likely pursue projects in more politically friendly states. ProPublica spoke with three industry insiders—energy developers and lobbyists—all of whom asked to remain anonymous for fear of antagonizing lawmakers who, next month, will again consider legislation affecting the industry.

The Utah Legislature’s pivot away from solar mirrors President Donald Trump’s turn toward a more hostile approach to the industry than his predecessor took. Trump has ordered the phaseout of lucrative federal tax incentives for solar and other renewable energy, which expanded under the Biden administration. The loss of federal incentives is a bigger hit to solar companies than the reductions to Utah’s tax incentives, industry insiders acknowledged. The administration has also canceled large wind and solar projects, which Trump has lamented as “the scam of the century.” He described solar as “farmer killing.”

Yet Cox criticized the Trump administration’s decision to kill a massive solar project in neighboring Nevada. Known as a governor who advocates for a return to more civil political discourse, Cox doesn’t often pick fights. But he didn’t pull punches over the decision to halt the Esmeralda 7 project planned on 62,300 acres of federal land. The central Nevada project was expected to produce 6.2 gigawatts of power, enough to supply nearly eight times as many households as there are in Las Vegas. (Although the Trump administration canceled the environmental review of the joint project proposed by multiple developers, it has the potential to move forward as individual projects.)

“This is how we lose the AI/energy arms race with China,” Cox wrote on X when news surfaced of the project’s cancellation. “Our country needs an all-of-the-above approach to energy (like Utah).”

But he didn’t take on his own Legislature, at least publicly.

Many of Utah’s Republican legislators have been skeptical of solar for years, criticizing its footprint on the landscape and viewing it as an unreliable energy source, while lamenting the retirement of coal-fired power plants. The economies of several rural counties rely on mining coal. But lawmakers’ skepticism hadn’t coalesced into successful anti-solar legislation until this year. When Utah lawmakers convened at the start of 2025, they took advantage of the political moment to go after solar.

“This is a sentiment sweeping through red states, and it’s very disconcerting and very disturbing,” said Steve Handy, Utah director of The Western Way, which describes itself as a conservative organization advocating for an all-of-the-above approach to energy development.

The shift in sentiment against solar energy has created a difficult climate for an all-of-the-above approach. Solar projects can be built quickly on Utah’s vast, sun-drenched land, while nuclear is a long game with projects expected to take a decade or more to come online under optimistic scenarios.

Cox generally supports solar, “in the right places,” especially when the captured energy can be stored in large batteries for distribution on cloudy days and after the sun goes down.

Cox said that instead of vetoing the anti-solar bills, he spent his political capital to moderate the legislation’s impact. “I think you’ll see where our fingerprints were,” he told ProPublica. He didn’t detail specific changes for which he advocated but said the bills’ earlier iterations would have “been a lot worse.”

“We will continue to see solar in Utah.”

Cox’s any-of-the-above approach to energy generation draws from a decades-old Republican push similarly titled “all of the above.” The GOP policy’s aim was as much about preserving and expanding reliance on fossil fuels (indeed, the phrase may have been coined by petroleum lobbyists) as it was about turning to cleaner energy sources such as solar, wind, and geothermal.

As governor of a coal-producing state, Cox hasn’t shown interest in reducing reliance on such legacy fuels. But as he slowly rolls out Operation Gigawatt, his focus has been on geothermal and nuclear power. Last month, he announced plans for a manufacturing hub for small modular reactors in the northern Utah community of Brigham City, which he hopes will become a nuclear supply chain for Utah and beyond. And on a recent trade mission to New Zealand, he signed an agreement to collaborate with the country on geothermal energy development.

Meanwhile, the bills Cox signed into law already appear to be slowing solar development in Utah. Since May, when the laws took effect, 51 planned solar projects withdrew their applications to connect to the state’s grid—representing more than a quarter of all projects in Utah’s transmission connection queue. Although projects drop out for many reasons, some industry insiders theorize the anti-solar legislation could be at play.

Caught in the political squeeze over power are Utah customers, who are footing higher electricity bills. Earlier this year, the state’s largest utility, Rocky Mountain Power, asked regulators to approve a 30 percent hike to fund increased fuel and wholesale energy costs, as well as upgrades to the grid. In response to outrage from lawmakers, the utility knocked the request down to 18 percent. Regulators eventually awarded the utility a 4.7 percent increase, a decision the utility promptly appealed to the state Supreme Court.

Juliet Carlisle, a University of Utah political science professor focusing on environmental policy, said the new solar tax could signal to large solar developers that Utah energy policy is “becoming more unpredictable,” prompting them to build elsewhere. This, in turn, could undermine Cox’s efforts to quickly double Utah’s electricity supply.

Operation Gigawatt “relies on rapid deployment across multiple energy sources, including renewables,” she said. “If renewable growth slows—especially utility-scale solar, which is currently the fastest-deploying resource—the state may face challenges meeting demand growth timelines.”

Rep. Kay Christofferson, R-Lehi, had sponsored legislation to end the solar industry’s state tax credits for several legislative sessions, but this was the first time the proposal succeeded.

Christofferson agrees Utah is facing unprecedented demand for power, and he supports Cox’s any-of-the-above approach. But he doesn’t think solar deserves the advantages of tax credits. Despite improving battery technology, he still considers it an intermittent source and thinks overreliance on it would work against Utah’s energy goals.

In testimony on his bill, Christofferson said he believed the tax incentives had served their purpose of getting a new industry off the ground: 16 percent of Utah’s power generation now comes from solar, and the state ranks 16th in the nation for solar capacity.

Christofferson’s bill was the least concerning to the industry, largely because it included a lengthy, negotiated wind-down of the subsidies. Initially it would have ended the tax credit after Jan. 1, 2032, but after negotiations with the solar industry, he extended the deadline to 2035.

The bill passed the House, but when it reached the Senate floor, Sen. Brady Brammer, R-Pleasant Grove, moved the end of the incentives to 2028. He told ProPublica he believes solar is already established and no longer needs the subsidy. Christofferson tried to defend his compromise but ultimately voted with the legislative majority.

Unlike Christofferson, whose bill wasn’t born of antipathy toward renewable energy, Rep. Casey Snider, R-Paradise, made it clear in public statements and behind closed doors to industry lobbyists that the goal of his bill was to make solar pay.

The bill imposes a tax on all solar production. The proceeds will substantially increase the state’s endangered species fund, which Utah paradoxically uses to fight federal efforts to list threatened animals for protection. Snider cast his bill as pro-environment, arguing the money could also go to habitat protection.

As initially written, the bill would have taxed not only future projects, but also those already producing power and, more worrisome for the industry, projects under construction or in development with financing in place. The margins on such projects are thin, and the unanticipated tax could kill projects already in the works, one solar industry executive testified.

“Companies like ours are being effectively punished for investing in the state,” testified another.

The pushback drew attacks from Snider, who accused solar companies of hypocrisy on the environment.

Industry lobbyists who spoke to ProPublica said Snider wasn’t as willing to negotiate as Christofferson. However, they succeeded in reducing the tax rate on future developments and negotiated a smaller, flat fee for existing projects.

“Everyone sort of decided collectively to save the existing projects and let it go for future projects,” said one lobbyist.

Snider told ProPublica, “My goal was never to run anybody out of business. If we wanted to make it more heavy-handed, we could have. Utah is a conservative state, and I would have had all the support.”

Snider said, like the governor, he favors an any-of-the-above approach to energy generation and doesn’t “want to take down any particular industry or source.” But he believes utility-scale solar farms need to pay to mitigate their impact on the environment. He likened his bill to federal law that requires royalties from oil and gas companies to be used for conservation. He hopes federal lawmakers will use his bill as a model for federal legislation that would apply to solar projects nationwide.

“This industry needs to give back to the environment that they claim very heavily they are going to protect,” he said. “I do believe there’s a tinge of hypocrisy to this whole movement. You can’t say you’re good for the environment and not offset your impacts.”

One of the more emotional debates over solar is set to return next year, after a bill that would end tax incentives for solar development on agricultural land failed to get a vote in the final minutes of this year’s session. Sponsored by Rep. Colin Jack, R-St. George, the bill has been fast-tracked for the next session, which begins in January.

Jack said he was driven to act by ranchers who were concerned that solar companies were outbidding them for land they had been leasing to graze cows. Solar companies pay substantially higher rates than ranchers can. His bill initially had a slew of land use restrictions (such as required setbacks between projects and residential property and creeks, minimum lot sizes, and 4-mile “green zones” between projects) that solar lobbyists said would have strangled their industry. After negotiating with solar developers, Jack eliminated the land use restrictions while preserving provisions to prohibit tax incentives for solar farms on private agricultural land and to create standards for decommissioning projects.

Many in rural Utah recoil at rows of black panels disrupting the landscape and fear solar farms will displace the ranching and farming way of life. Indeed, some wondered whether Cox, who grew up on a farm in central Utah, would have been as critical of Trump scuttling a 62,300-acre solar farm in his own state as he was of the Nevada project’s cancellation.

Peter Greathouse, a rancher in western Utah’s Millard County, said he is worried about solar farms taking up grazing land in his county. “Twelve and a half percent [of the county] is privately owned, and a lot of that is not farmable. So if you bring in these solar places that start to eat up the farmland, it can’t be replaced,” he said.

Utah is losing about 500,000 acres of agricultural land every 10 years, most of it to housing. A report by The Western Way estimated solar farms use 0.1 percent of the United States’ total land mass. That number is expected to grow to 0.46 percent by 2050, a tiny fraction of what is used by agriculture. Of the land managed by the Utah Trust Lands Administration, fewer than 3,000 of the 2.9 million acres devoted to grazing have been converted to solar farms.

Other ranchers told ProPublica they’ve been able to stay on their land and preserve their way of life by leasing to solar. Landon Kesler’s family, which raises cattle for team roping competitions, has leased land to solar for more than a decade. The revenue has allowed the family to almost double its land holdings, providing more room to ranch, Kesler said.

“I’m going to be quite honest, it’s absurd,” Kesler said of efforts to limit solar on agricultural land. “Solar very directly helped us tie up other property to be used for cattle and ranching. It didn’t run us out; it actually helped our agricultural business thrive.”

Solar lobbyists and executives have been working to bolster the industry’s image with lawmakers ahead of the next legislative session. They’re arguing solar is a good neighbor.

“We don’t use water, we don’t need sidewalks, we don’t create noise, and we don’t create light,” said Amanda Smith, vice president of external affairs for AES, which has one solar project operating in Utah and a second in development. “So we just sort of sit out there and produce energy.”

Solar pays private landowners in Utah $17 million a year to lease their land. And, more important, solar developers argue, it’s critical to powering data centers the state is working to attract.

“We are eager to be part of a diversified electricity portfolio, and we think we bring a lot of values that will benefit communities, keep rates low and stable, and help keep the lights on,” Rikki Seguin, executive director of Interwest Energy Alliance, a western trade organization that advocates for utility-scale renewable energy projects, told an interim committee of lawmakers this summer.

The message didn’t get a positive reception from some lawmakers on the committee. Rep. Carl Albrecht, R-Richfield, who represents three rural Utah counties and was among solar’s critics last session, said the biggest complaint he hears from constituents is about “that ugly solar facility” in his district.

“Why, Rep. Albrecht, did you allow that solar field to be built? It’s black. It looks like the Dead Sea when you drive by it,” Albrecht said.

This story was originally published by ProPublica.
