So long, data limits

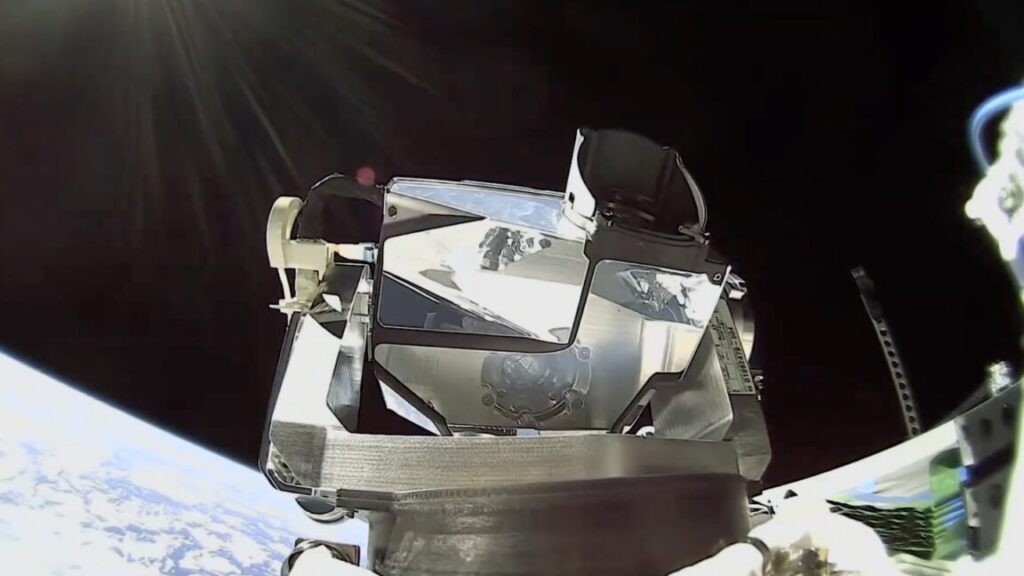

Laser links also have advantages over relaying data through ground stations. Optical links offer significantly more throughput than traditional radio communication systems, and they’re not constrained by regulations on radio spectrum usage.

“What it does for our customers and for the company is we are able to get more than 10x, maybe even 50x, the amount of data that they’re able to bring down, and we’re able to offer them that on a latency of nearly instant,” Stang said in an interview with Ars.

SpaceX’s mini-lasers are designed to achieve link speeds of 25Gbps at distances up to 2,500 miles (4,000 kilometers). These speeds will “open new business models” for satellite operators who can now rely on the same “Internet speed and responsiveness as cloud providers and telecom networks on the ground,” Muon said in a statement.

Muon’s platform, called Halo, comes in different sizes, with satellites weighing up to half a ton. “With persistent optical broadband, Muon Halo satellites will move from being isolated vehicles to becoming active, real‑time nodes on Starlink’s global network,” Stang said in a press release. “That shift transforms how missions are designed and how fast insights flow to decision‑makers on Earth.”

Muon said the first laser-equipped satellite will launch in early 2027 for an undisclosed customer.

“We like to believe part of why SpaceX trusts us to be the ones to be able to lead on this is because our system is designed to really deal with very different levels of requirements,” Smirin said. “As far as we’re aware, this is the first integration into a satellite. We have a ton of interest from commercial customers for our capabilities in general, and we expect this should just boost that quite significantly.”

FireSat is one mission where Starlink connectivity could make a difference by rapidly alerting first responders to a wildfire, Smirin said. According to Muon, using satellite laser links would cut FireSat’s data latency from an average of 20 minutes to near real-time.

“It’s not just for the initial detection,” Smirin said. “It’s also once a fire is ongoing, [cutting] the time and the latency for seeing the intensity and direction of the fire, and being able to update that in near real-time. It has incredible value to incident commanders on the ground, because they’re trying to figure out a way to position their equipment and their people.”

Thinking big

Ubiquitous connectivity in space could eventually lead to new types of missions. “Now, you’ve got a data center in space,” Smirin said. “You can do AI there. You can connect with data centers on the ground.”

While this first agreement between Muon and SpaceX covers commercial data relay, it’s easy to imagine other applications, such as continuous, high-resolution live video streamed from space, much like a drone feed, for surveillance or weather monitoring. Live video from space has historically been limited to human spaceflight missions or rocket-mounted cameras that operate for a short time.

One example of that is the dazzling live video beamed back to Earth, through Starlink, from SpaceX’s Starship rockets. The laser terminals on Starship operate through the extreme heat of reentry, returning streaming video as plasma envelops the vehicle. This environment routinely causes radio blackouts for other spacecraft as they reenter the atmosphere. With optical links, that’s no longer a problem.

“This starts to enable a whole new category of capabilities, much the same way as when terrestrial computers went from dial-up to broadband,” Smirin said. “You knew what it could do, but we blew through bulletin boards very quickly to many different applications.”