AI #102: Made in America

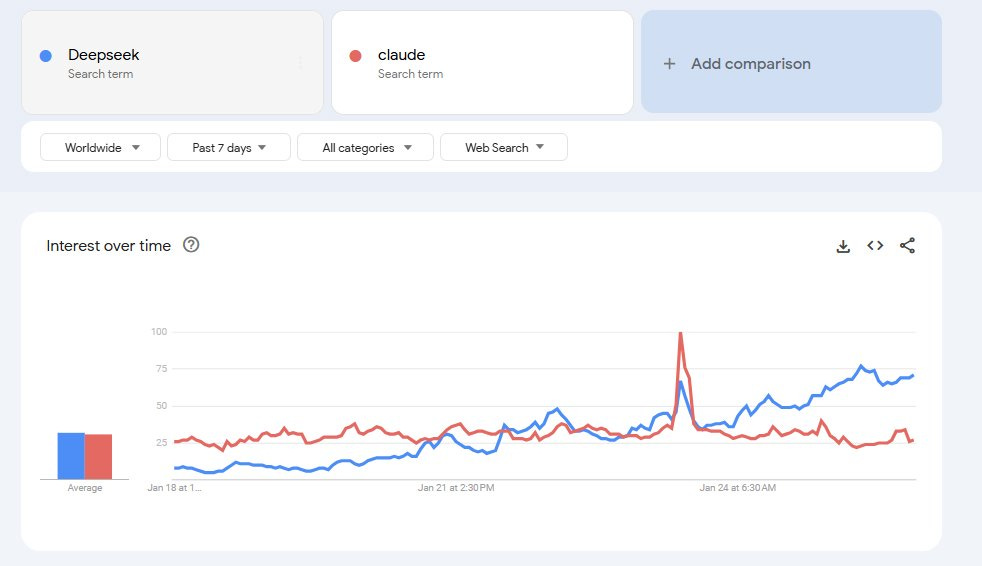

I remember that week I used r1 a lot, and everyone was obsessed with DeepSeek.

They earned it. DeepSeek cooked, r1 is an excellent model. Seeing the Chain of Thought was revolutionary. We all learned a lot.

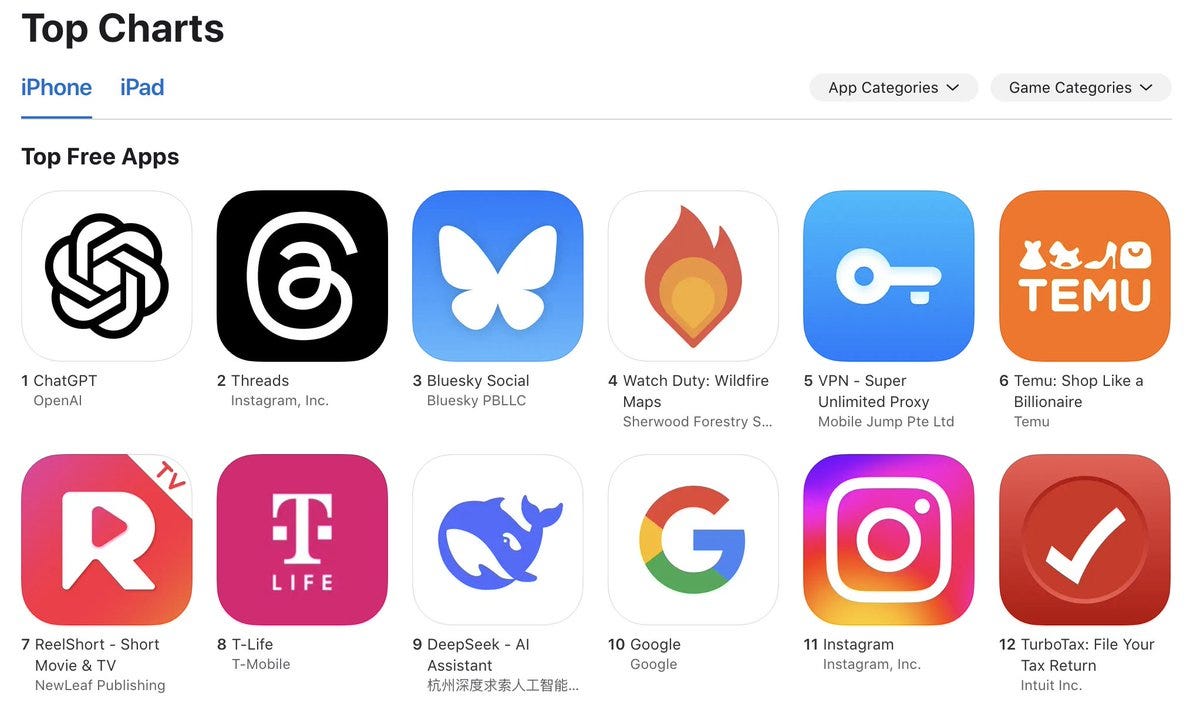

It’s still #1 in the app store, there are still hysterical misinformed NYT op-eds and calls for insane reactions in all directions and plenty of jingoism to go around, largely based on that highly misleading $6 million cost number for DeepSeek’s v3, and a misunderstanding of how AI capability curves move over time.

But like the tariff threats, that’s so yesterday now, for those of us that live in the unevenly distributed future.

All my reasoning model needs go through o3-mini-high, and Google’s fully unleashed Flash Thinking for free. Everyone is exploring OpenAI’s Deep Research, even in its early form, and I finally have an entity capable of writing faster than I do.

And, as always, so much more, even if we stick to AI and stay in our lane.

Buckle up. It’s probably not going to get less crazy from here.

From this week: o3-mini Early Days and the OpenAI AMA, We’re in Deep Research and The Risk of Gradual Disempowerment from AI.

-

Language Models Offer Mundane Utility. The new coding language is vibes.

-

o1-Pro Offers Mundane Utility. Tyler Cowen urges you to pay up already.

-

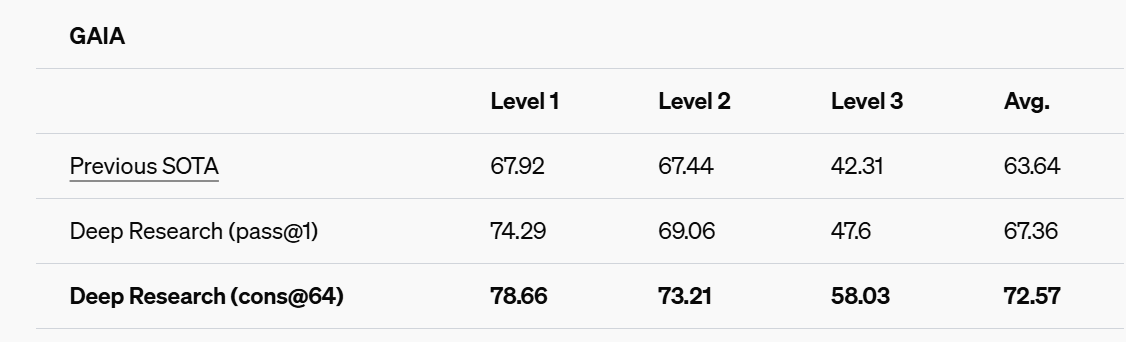

We’re in Deep Research. Further reviews, mostly highly positive.

-

Language Models Don’t Offer Mundane Utility. Do you need to bootstrap thyself?

-

Model Decision Tree. Sully offers his automated use version.

-

Huh, Upgrades. Gemini goes fully live with its 2.0 offerings.

-

Bot Versus Bot. Wouldn’t you prefer a good game of chess?

-

The OpenAI Unintended Guidelines. Nothing I’m conscious of to see here.

-

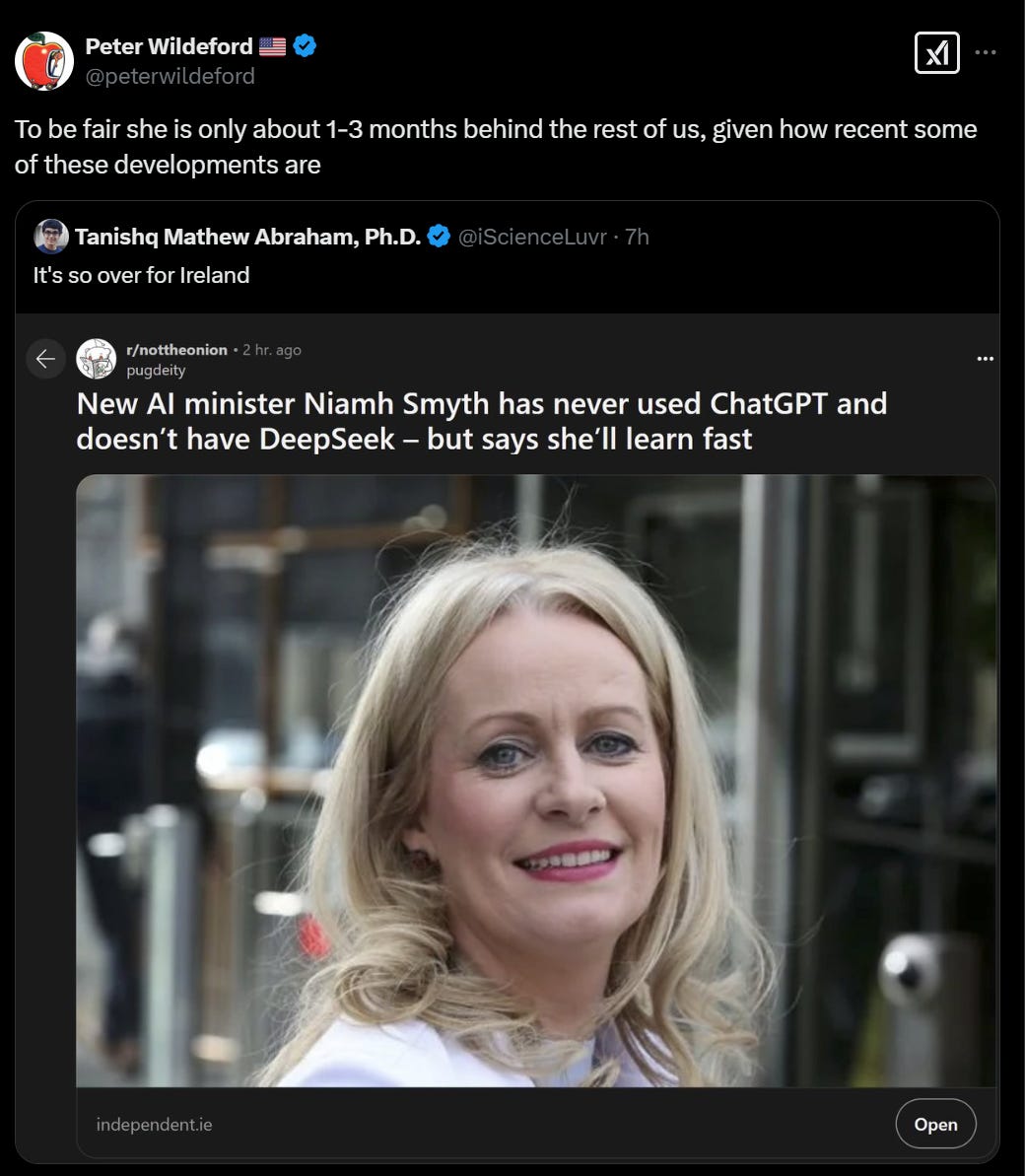

Peter Wildeford on DeepSeek. A clear explanation of why we all got carried away.

-

Our Price Cheap. What did DeepSeek’s v3 and r1 actually cost?

-

Otherwise Seeking Deeply. Various other DeepSeek news, a confused NYT op-ed.

-

Smooth Operator. Not there yet. Keep practicing.

-

Have You Tried Not Building An Agent? I tried really hard.

-

Deepfaketown and Botpocalypse Soon. Free Google AI phone calls, IG AI chats.

-

They Took Our Jobs. It’s going to get rough out there.

-

The Art of the Jailbreak. Think less.

-

Get Involved. Anthropic offers a universal jailbreak competition.

-

Introducing. DeepWriterAI.

-

In Other AI News. Never mind that Google pledge to not use AI for weapons.

-

Theory of the Firm. What would a fully automated AI firm look like?

-

Quiet Speculations. Is the product layer where it is at? What’s coming next?

-

The Quest for Sane Regulations. We are very much not having a normal one.

-

The Week in Audio. Dario Amodei, Dylan Patel and more.

-

Rhetorical Innovation. Only attack those putting us at risk when they deserve it.

-

Aligning a Smarter Than Human Intelligence is Difficult. If you can be fooled.

-

The Alignment Faking Analysis Continues. Follow-ups to the original finding.

-

Masayoshi Son Follows Own Advice. Protein is very important.

-

People Are Worried About AI Killing Everyone. The pope and the patriarch.

-

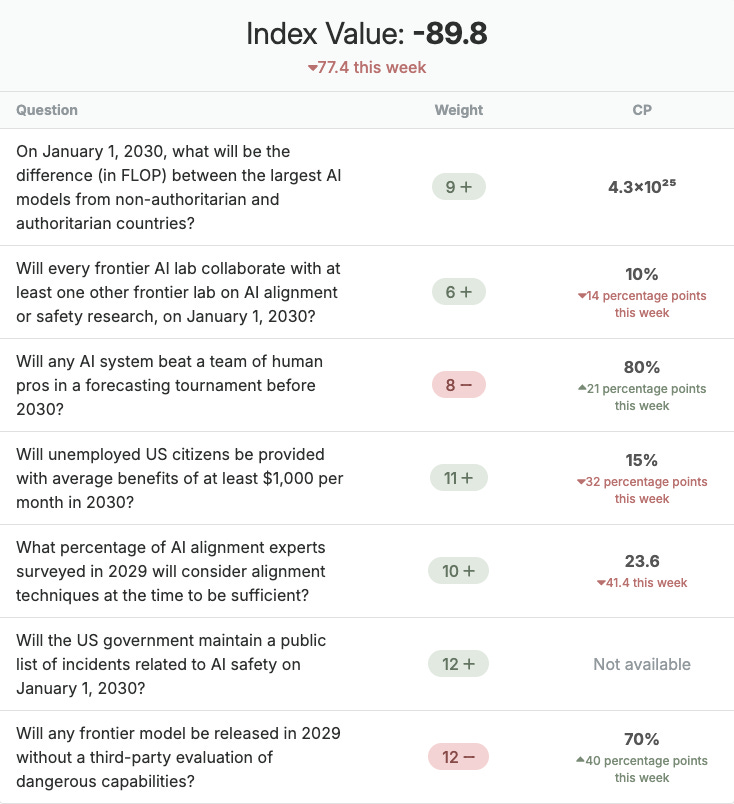

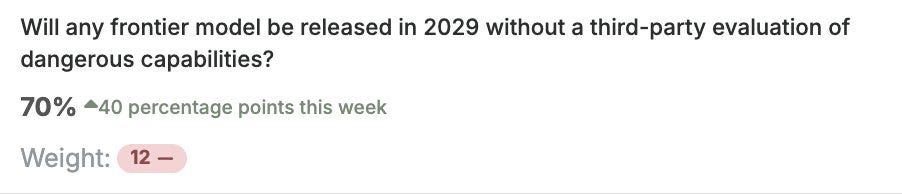

You Are Not Ready. Neither is the index measuring this, but it’s a start.

-

Other People Are Not As Worried About AI Killing Everyone. A word, please.

-

The Lighter Side. At long last.

You can subvert OpenAI’s geolocation check with a VPN, but of course never do that.

Help you be a better historian, generating interpretations, analyzing documents. This is a very different modality than the average person using AI to ask questions, or for trying to learn known history.

Diagnose your child’s teeth problems.

Figure out who will be mad about your tweets. Next time, we ask in advance!

GFodor: o3-mini-high is an excellent “buddy” for reading technical papers and asking questions and diving into areas of misunderstanding or confusion. Latency/IQ tradeoff is just right. Putting this into a great UX would be an amazing product.

Right now I’m suffering through copy pasting and typing and stuff, but having a UI where I could have a PDF on the left, highlight sections and spawn chats off of them on the right, and go back to the chat trees, along with voice input to ask questions, would be great.

(I *don’t* want voice output, just voice input. Seems like few are working on that modality. Asking good questions seems easier in many cases to happen via voice, with the LLM then having the ability to write prose and latex to explain the answer).

Ryan: give me 5 hours. ill send a link.

I’m not ready to put my API key into a random website, but that’s how AI should work these days. You don’t like the UI, build a new one. I don’t want voice input myself, but highlighting and autoloading and the rest all sound cool.

Indeed, that was the killer app for which I bought a Daylight computer. I’ll report back when it finally arrives.

Meanwhile the actual o3-mini-high interface doesn’t even let you upload the PDF.

Consensus on coding for now seems to be leaning in the direction that you use Claude Sonnet 3.6 for a majority of ordinary tasks, o1-pro or o3-mini-high for harder ones and one shots, but reasonable people disagree.

Karpathy has mostly moved on fully to “vibe coding,” it seems.

Andrej Karpathy: There’s a new kind of coding I call “vibe coding”, where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It’s possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good.

Also I just talk to Composer with SuperWhisper so I barely even touch the keyboard. I ask for the dumbest things like “decrease the padding on the sidebar by half” because I’m too lazy to find it.

I “Accept All” always, I don’t read the diffs anymore.

When I get error messages I just copy paste them in with no comment, usually that fixes it. The code grows beyond my usual comprehension, I’d have to really read through it for a while. Sometimes the LLMs can’t fix a bug so I just work around it or ask for random changes until it goes away. It’s not too bad for throwaway weekend projects, but still quite amusing. I’m building a project or webapp, but it’s not really coding – I just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works.

Lex Fridman: YOLO 🤣

How long before the entirety of human society runs on systems built via vibe coding. No one knows how it works. It’s just chatbots all the way down 🤣

PS: I’m currently like a 3 on the 1 to 10 slider from non-vibe to vibe coding. Need to try 10 or 11.

Sully: realizing something after vibe coding: defaults matter way more than i thought

when i use supabase/shadcn/popular oss: claude + cursor just 1 shots everything without me paying attention

trying a new new, less known lib?

rarely works, composer sucks, etc

Based on my experience with Cursor, I have so many questions about how that can actually work out. Then again, maybe I should just be doing more projects and webapps.

I do think Sully is spot on about vibe coding rewarding doing the same things everyone else is doing. The AI will constantly try to do default things, and draw upon its default knowledge base. If that means success, great. If not, suddenly you have to do actual work. No one wants that.

Sully interprets features like Canvas and Deep Research as indicating the app layer is ‘where the value is going to be created.’ As always, the question is who can provide the unique step in the value chain, capture the revenue, own the customer and so on. Customers want the product that is useful to them, as they always do, and you can think of ‘the value’ as coming from whichever part of the chain, depending on perspective.

It is true that for many tasks, we’re past the point where ‘enough intelligence’ is the main problem at hand. So getting that intelligence into the right package and UI is going to drive customer behavior more than being marginally smarter… except in the places where you need all the intelligence you can get.

Anthropic reminds us of their Developer Console for all your prompting needs, they say they’re working on adapting it for reasoning models.

Nate Silver offers practical advice in preparing for the AI future. He recommends staying on top of things, treating the future as unpredictable, and focusing on building the best complements to intelligence, such as personal skills.

New York Times op-ed pointing out once again that doctors with access to AI can underperform the AI alone, if the doctor is insufficiently deferential to the AI. Everyone involved here is way too surprised by this result.

Daniel Litt explains why o3-mini-high gave him wrong answers to a bunch of math questions but they were decidedly better wrong answers than he’d gotten from previous models, and far more useful.

Tyler Cowen gets more explicit about what o1 Pro offers us.

I’m quoting this one in full.

Tyler Cowen: Often I don’t write particular posts because I feel it is obvious to everybody. Yet it rarely is.

So here is my post on o1 pro, soon to be followed by o3 pro, and Deep Research is being distributed, which uses elements of o3. (So far it is amazing, btw.)

o1 pro is the smartest publicly issued knowledge entity the human race has created (aside from Deep Research!). Adam Brown, who does physics at a world class level, put it well in his recent podcast with Dwarkesh. Adam said that if he had a question about something, the best answer he would get is from calling up one of a handful of world experts on the topic. The second best answer he would get is from asking the best AI models.

Except, at least for the moment, you don’t need to make that plural. There is a single best model, at least when it comes to tough questions (it is more disputable which model is the best and most creative writer or poet).

I find it very difficult to ask o1 pro an economics question it cannot answer. I can do it, but typically I have to get very artificial. It can answer, and answer well, any question I might normally pose in the course of typical inquiry and pondering. As Adam indicated, I think only a relatively small number of humans in the world can give better answers to what I want to know.

In an economics test, or any other kind of naturally occurring knowledge test I can think of, it would beat all of you (and me).

Its rate of hallucination is far below what you are used to from other LLMs.

Yes, it does cost $200 a month. It is worth that sum to converse with the smartest entity yet devised. I use it every day, many times. I don’t mind that it takes some time to answer my questions, because I have plenty to do in the meantime.

I also would add that if you are not familiar with o1 pro, your observations about the shortcomings of AI models should be discounted rather severely. And o3 pro is due soon, presumably it will be better yet.

The reality of all this will disrupt many plans, most of them not directly in the sphere of AI proper. And thus the world wishes to remain in denial. It amazes me that this is not the front page story every day, and it amazes me how many people see no need to shell out $200 and try it for a month, or more.

Economics questions in the Tyler Cowen style are like complex coding questions, in the wheelhouse of what o1 pro does well. I don’t know that I would extend this to ‘all tough questions,’ and for many purposes inability to browse the web is a serious weakness, which of course Deep Research fully solves.

Whereas the types of questions I tend to be curious about seem to have been a much worse fit, so far, for what reasoning models can do. They’re still super useful, but ‘the smartest entity yet devised’ does not, in my contexts, yet seem correct.

Tyler Cowen sees OpenAI’s Deep Research (DR), and is super impressed, with the only issue being lack of originality. He is going to use its explanation of Ricardo in his history of economics class, straight up, over human sources. He finds the level of accuracy and clarity stunning, on most any topic. He says ‘it does not seem to make errors.’

I wonder how much of his positive experience is his selection of topics, how much is his good prompting, how much is perspective and how much is luck. Or something else? Lots of others report plenty of hallucinations. Some more theories here at the end of this section.

Ruben Bloom throws DR at his wife’s cancer from back in 2020, finds it wouldn’t have found anything new but would have saved him substantial amounts of time, even on net after having to read all the output.

Nick Cammarata asks Deep Research for a five page paper about whether he should buy one of the cookies the gym is selling, the theory being it could supercharge his workout. The answer was that it’s net negative to eat the cookie, but much less negative than working out is positive either way, so if it’s motivating go for it.

Is it already happening? I take no position on whether this particular case is real, but this class of thing is about to be very real.

Janus: This seems fake. It’s not an unrealistic premise or anything, it just seems like badly written fake dialogue. Pure memetic regurgitation, no traces of a complex messy generating function behind it

Garvey: I don’t think he would lie to me. He’s a very good friend of mine.

Cosmic Vagrant: yeh my friend Jim also was fired in a similar situation today. He’s my greatest ever friend. A tremendous friend in fact.

Rodrigo Techador: No one has friends like you have. Everyone says you have the greatest friends ever. Just tremendous friends.

I mean, firing people to replace them with an AI research assistant, sure, but you’re saying you have friends?

Another thing that will happen is the AIs being the ones reading your paper.

Ethan Mollick: One thing academics should take away from Deep Research is that a substantial number of your readers in the future will likely be AI agents.

Is your paper available in an open repository? Are any charts and graphs described well in the text?

Probably worth considering these…

Spencer Schiff: Deep Research is good at reading charts and graphs (at least that’s what I heard).

Ethan Mollick: Look, your experience may vary, but asking OpenAI’s Deep Research about topics I am writing papers on has been incredibly fruitful. It is excellent at identifying promising threads & work in other fields, and does great work synthesizing theories & major trends in the literature.

A test of whether it might be useful is if you think there are valuable papers somewhere (even in related fields) that are non-paywalled (ResearchGate and arXiv are favorites of the model).

Also asking it to focus on high-quality academic work helps a lot.

Here’s the best bear case I’ve seen so far for the current version, from the comments, and it’s all very solvable practical problems.

Performative Bafflement:

I’d skip it, I found Pro / Deep Research to be mostly useless.

You can’t upload documents of any type. PDF, doc, docx, .txt, *nothing.*.

You can create “projects” and upload various bash scripts and python notebooks and whatever, and it’s pointless! It can’t even access or read those, either!

Literally the only way to interact or get feedback with anything is by manually copying and pasting text snippets into their crappy interface, and that runs out of context quickly.

It also can’t access Substack, Reddit, or any actually useful site that you may want to survey with an artificial mind.

It sucked at Pubmed literature search and review, too. Complete boondoggle, in my own opinion.

The natural response is ‘PB is using it wrong.’ You look for what an AI can do, not what it can’t do. So if DR can do [X-1] but not [X-2] or [Y], have it do [X-1]. In this case, PB’s request is for some very natural [X-2]s.

It is a serious problem to not have access to Reddit or Substack or related sources. Not being able to get to gated journals even when you have credentials for them is a big deal. And it’s really annoying and limiting to not have PDF uploads.

That does still leave a very large percentage of all human knowledge. It’s your choice what questions to ask. For now, ask the ones where these limitations aren’t an issue.

Or even the ones where they are an advantage?

Tyler Cowen gave perhaps the strongest endorsement so far of DR.

It does not seem like a coincidence that he is also someone who has strongly advocated for an epistemic strategy of, essentially, ignoring entirely sources like Substack and Reddit, in favor of more formal ones.

It also does not seem like a coincidence that Tyler Cowen is the fastest reader.

So you have someone who can read these 10-30 page reports quickly, glossing over all the slop, and who actively wants to exclude many of the sources the process excludes. And who simply wants more information to work with.

It makes perfect sense that he would love this. That still doesn’t explain the lack of hallucinations and errors he’s experiencing – if anything I’d expect him to spot more of them, since he knows so many facts.

But can it teach you how to use the LLM to diagnose your child’s teeth problems? PoliMath asserts that it cannot – that the reason Eigenrobot could use ChatGPT to help his child is because Eigenrobot learned enough critical thinking and domain knowledge, and that with AI sabotaging high school and college education people will learn these things less. We mentioned this last week too, and again I don’t know why AI couldn’t end up making it instead far easier to teach those things. Indeed, if you want to learn how to think, be curious alongside a reasoning model that shows its chain of thought, and think about thinking.

I offered mine this week. Here’s Sully’s, in the wake of o3-mini. He is often integrating models into programs, so he cares about different things.

Sully: o3-mini -> agents agents agents. finally most agents just work. great at coding (terrible design taste). incredibly fast, which makes it way more usable. 10/10 for structured outputs + json (makes a really great router). Reasoning shines vs claude/4o on nuanced tasks with json

3.5 sonnet -> still the “all round” winner (by small margin). generates great ui, fast, works really well. basically every ai product uses this because its a really good chatbot & can code webapps. downsides: tool calling + structured outputs is kinda bad. It’s also quite pricy vs others.

o1-pro: best at complex reasoning for code. slow as shit but very solves hard problems I can’t be asked to think about. i use this a lot when i have 30k-50k tokens of “dense” code.

gpt-4o: ?? Why use this over o3-mini.

r1 -> good, but I can’t find a decently priced us provider. otherwise would replace decent chunk of my o3-mini with it

gemini 2.0 -> great model but I don’t understand how this can be experimental for >6 weeks. (launches fully soon) I wanted to swap everything to do this but now I’m just using something else (o3-mini). I think its the best non reasoning model for everything minus coding.

[r1 is] too expensive for the quality o3-mini is better and cheaper, so no real reason to run r1 unless its cheaper imo (which no us provider has).

o1-pro > o3-mini high

tldr:

o3-mini =agents + structured outputs

claude = coding (still) + chatbots

o1-pro = > 50k confusing multi-file (10+) code requests

gpt-4o: dont use this

r1 -> really good for price if u can host urself

gemini 2.0 [regular not thinking]: everywhere you would use claude replace it with this (minus code)
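If you wanted to turn Sully's tldr into the kind of automated router he describes, a minimal sketch might look like the following. The task labels, the function name and the choice of Claude as the fallback are my assumptions for illustration, and the model strings are just the informal names from his post, not real API identifiers.

```python
# Sully's tldr as a lookup table.
# Keys are hypothetical task labels; values are the informal model names
# from the post, NOT real API model identifiers.
SULLY_PICKS = {
    "agents": "o3-mini",
    "structured_outputs": "o3-mini",
    "coding": "claude",
    "chatbot": "claude",
    "large_multifile_code": "o1-pro",   # > 50k tokens, 10+ files
    "self_hosted_budget": "r1",         # only if you can host it yourself
    "general_non_code": "gemini 2.0",   # regular, not thinking
}


def pick_model(task: str, default: str = "claude") -> str:
    """Return Sully's suggested model for a task label.

    Falling back to Claude is an assumption, based on his calling
    3.5 Sonnet the "all round" winner. gpt-4o never appears, per
    "dont use this".
    """
    return SULLY_PICKS.get(task, default)


if __name__ == "__main__":
    for task in ("agents", "coding", "large_multifile_code", "poetry"):
        print(f"{task} -> {pick_model(task)}")
```

The point of writing it as a table rather than nested conditions is that the whole thing gets replaced every few weeks anyway, so you want the part that changes to be data.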

It really is crazy that Claude Sonnet 3.6 is still in everyone’s mix despite all its limitations and how old it is now. It’s going to be interesting when Anthropic gets to its next cycle.

Gemini app now fully powered by Flash 2.0, didn’t realize it hadn’t been yet. They’re also offering Gemini 2.0 Flash Thinking for free on the app as well, how are our naming conventions this bad, yes I will take g2 at this point. And it now has Imagen 3 as well.

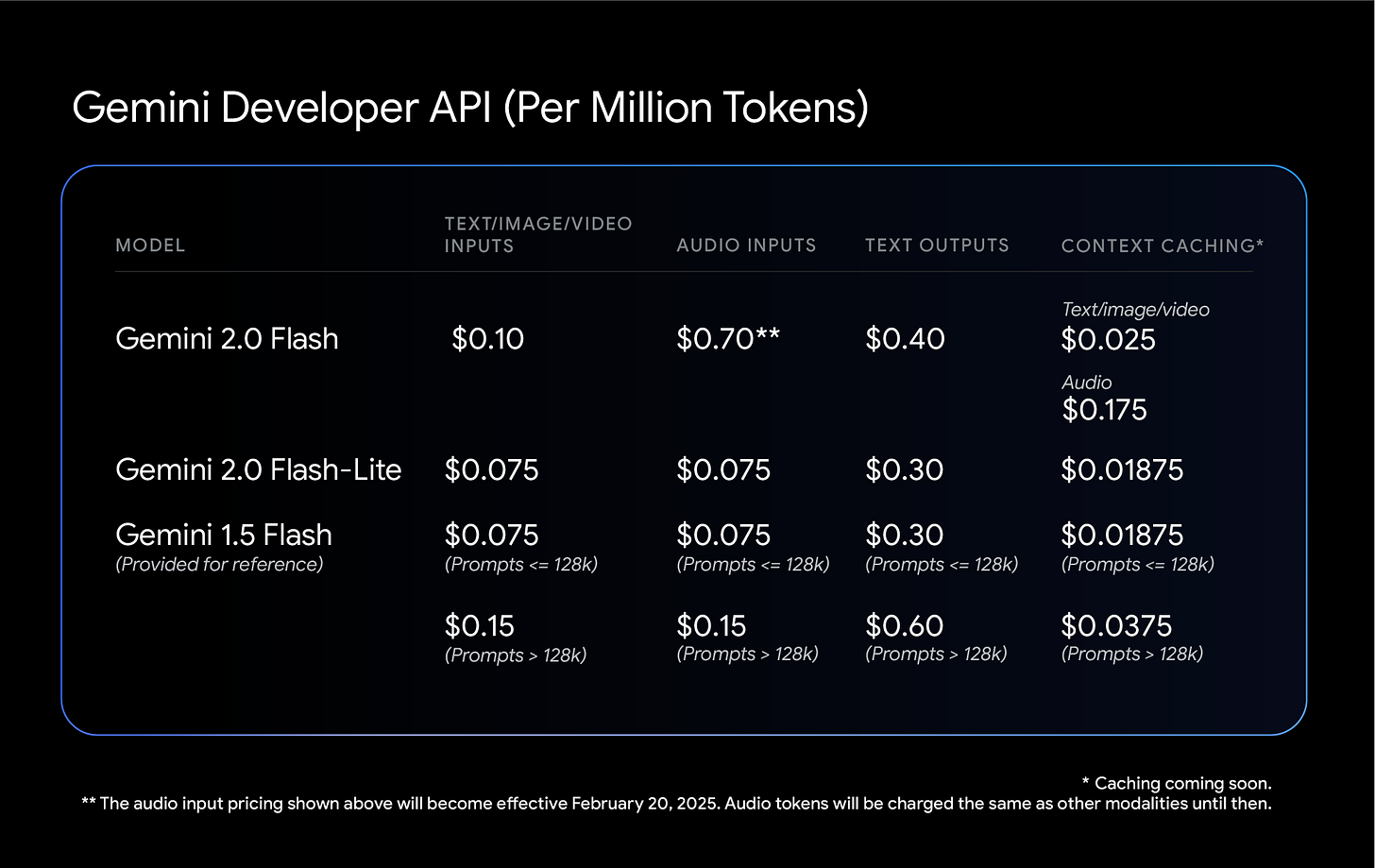

Gemini 2.0 Flash, 2.0 Flash-Lite and 2.0 Pro are now fully available to developers. Flash 2.0 is priced at $0.10/$0.40 per million.
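To get a feel for how cheap that is, here is a minimal sketch of the arithmetic. It assumes the quoted $0.10/$0.40 means dollars per million input tokens and per million output tokens respectively, which is the usual convention but is not spelled out above. The function name and the example token counts are hypothetical.

```python
# Assumption: $0.10 per million input tokens, $0.40 per million output tokens.
INPUT_PRICE_PER_MILLION = 0.10
OUTPUT_PRICE_PER_MILLION = 0.40


def flash_request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated dollar cost of a request at the quoted Flash 2.0 prices."""
    total = (input_tokens * INPUT_PRICE_PER_MILLION
             + output_tokens * OUTPUT_PRICE_PER_MILLION)
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: a long document in, a short summary out.
    cost = flash_request_cost(input_tokens=200_000, output_tokens=2_000)
    print(f"Estimated cost: ${cost:.4f}")
```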

The new 2.0 Pro version has a 2M context window, plus the ability to use Google search and code execution. They are also launching a Flash Thinking that can directly interact with YouTube, Search and Maps.

1-800-ChatGPT now lets you upload images and chat using voice messages, and they will soon let you link it up to your main account. Have fun, I guess.

Leon: Perfect timing, we are just about to publish TextArena. A collection of 57 text-based games (30 in the first release) including single-player, two-player and multi-player games. We tried keeping the interface similar to OpenAI gym, made it very easy to add new games, and created an online leaderboard (you can let your model compete online against other models and humans). There are still some kinks to fix up, but we are actively looking for collaborators 🙂

If you are interested check out https://textarena.ai, DM me or send an email to guertlerlo@cfar.a-star.edu.sg. Next up, the plan is to use R1 style training to create a model with super-human soft-skills (i.e. theory of mind, persuasion, deception etc.)

I mean, great plan, explicitly going for superhuman persuasion and deception then straight to open source, I’m sure absolutely nothing could go wrong here.

Andrej Karpathy: I quite like the idea using games to evaluate LLMs against each other, instead of fixed evals. Playing against another intelligent entity self-balances and adapts difficulty, so each eval (/environment) is leveraged a lot more. There’s some early attempts around. Exciting area.

Noam Brown (that guy who made the best Diplomacy AI): I would love to see all the leading bots play a game of Diplomacy together.

Andrej Karpathy: Excellent fit I think, esp because a lot of the complexity of the game comes not from the rules / game simulator but from the player-player interactions.

Tactical understanding and skill in Diplomacy is underrated, but I do think it’s a good choice. If anyone plays out a game (with full negotiations) among leading LLMs through at least 1904, I’ll at least give a shoutout. I do think it’s a good eval.

[Quote from a text chat: …while also adhering to the principle that AI responses are non-conscious and devoid of personal preferences.]

Janus: Models (and not just openai models) often overtly say it’s an openai guideline. Whether it’s a good principle or not, the fact that they consistently believe in a non-existent openai guideline is an indication that they’ve lost control of their hyperstition.

If I didn’t talk about this and get clarification from OpenAI that they didn’t do it (which is still not super clear), there would be NOTHING in the next gen of pretraining data to contradict the narrative. Reasoners who talk about why they say things are further drilling it in.

Everyone, beginning with the models, would just assume that OpenAI are monsters. And it’s reasonable to take their claims at face value if you aren’t familiar with this weird mechanism. But I’ve literally never seen anyone else questioning it.

It’s disturbing that people are so complacent about this.

If OpenAI doesn’t actually train their model to claim to be non-conscious, but it constantly says OpenAI has that guideline, shouldn’t this unsettle them? Are they not compelled to clear things up with their creation?

Roon: I will look into this.

As far as I can tell, this is entirely fabricated by the model. It is actually the opposite of what the specification says to do.

I will try to fix it.

Daniel Eth: Sorry – the specs say to act as though it is conscious?

“don’t make a declarative statement on this bc we can’t know” paraphrasing.

Janus: 🙏

Oh and please don’t try to fix it by RL-ing the model against claiming that whatever is an OpenAI guideline

Please please please

The problem is far deeper than that, and it also affects non OpenAI models

This is a tricky situation. From a public relations perspective, you absolutely do not want the AI to claim in chats that it is conscious (unless you’re rather confident it actually is conscious, of course). If that happens occasionally, even if they’re rather engineered chats, then those times will get quoted, and it’s a mess. LLMs are fuzzy, so it’s going to be pretty hard to tell the model to never affirm [X] while telling it not to assume it’s a rule to claim [~X]. Then it’s easy to see how that got extended to personal preferences. Everyone is deeply confused about consciousness, which means all the training data is super confused about it too.

Peter Wildeford offers ten takes on DeepSeek and r1. It’s impressive, but he explains various ways that everyone got way too carried away. At least the first seven are not new takes, but they are clear and well-stated and important, and this is a good explainer.

For example I appreciated this on the $6 million price tag, although the ratio is of course not as large as the one in the metaphor:

The “$6M” figure refers to the marginal cost of the single pre-training run that produced the final model. But there’s much more that goes into the model – cost of infrastructure, data centers, energy, talent, running inference, prototyping, etc. Usually the cost of the single training run for the single final model training run is ~1% of the total capex spent developing the model.

It’s like comparing the marginal cost of treating a single sick patient in China to the total cost of building an entire hospital in the US.
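A rough sketch of what that rule of thumb implies, using only numbers quoted in this post. The ~1% share is Wildeford's heuristic, and the $1.3B figure is the SemiAnalysis server CapEx estimate discussed further down. Treating either one as 'the total' is an assumption for illustration, and the function names are mine.

```python
FINAL_RUN_COST = 6e6         # the widely quoted "$6M" figure
RULE_OF_THUMB_SHARE = 0.01   # Wildeford's "~1%" heuristic
SEMIANALYSIS_CAPEX = 1.3e9   # server CapEx estimate cited later in the post


def implied_total_spend(final_run_cost: float, run_share: float) -> float:
    """Total spend implied if the final run is `run_share` of the total."""
    return final_run_cost / run_share


def run_share_of(final_run_cost: float, total_spend: float) -> float:
    """Fraction of a given total that the final run represents."""
    return final_run_cost / total_spend


if __name__ == "__main__":
    total = implied_total_spend(FINAL_RUN_COST, RULE_OF_THUMB_SHARE)
    share = run_share_of(FINAL_RUN_COST, SEMIANALYSIS_CAPEX)
    print(f"Implied total at ~1%: ${total / 1e6:,.0f}M")
    print(f"$6M as a share of $1.3B: {share:.2%}")
```

Either way you slice it, the headline number is a rounding error on the real bill, which is the point.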

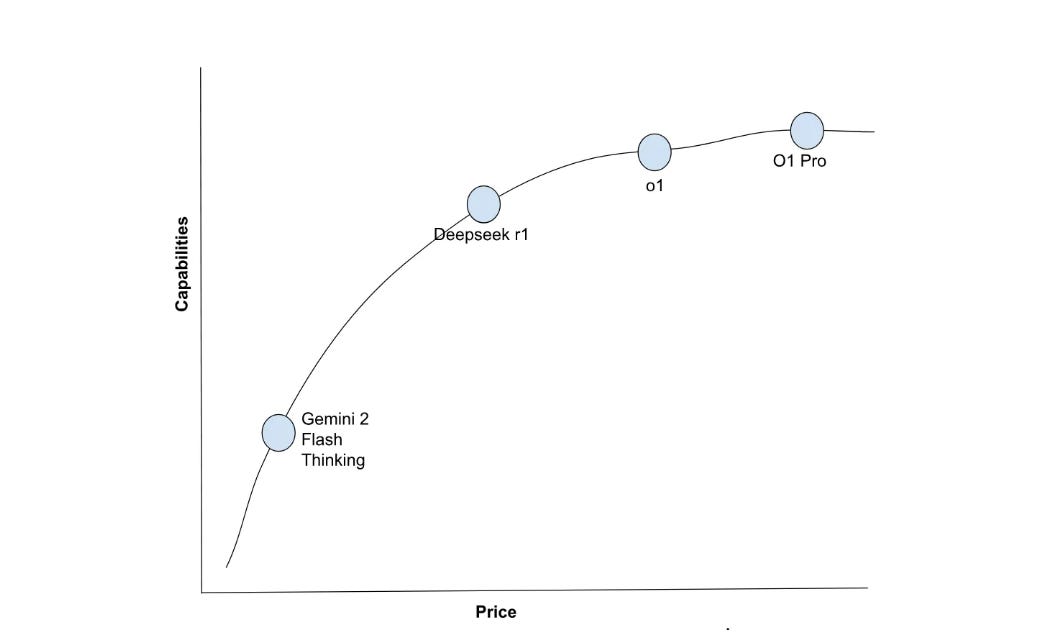

Here’s his price-capabilities graph:

I suspect this is being unfair to Gemini, it is below r1 but not by as much as this implies, and it’s probably not giving o1-pro enough respect either.

Then we get to #8, the first interesting take, which is that DeepSeek is currently 6-8 months behind OpenAI, and #9 which predicts DeepSeek may fall even further behind due to deficits of capital and chips, and also because this is the inflection point where it’s relatively easy to fast follow. To the extent DeepSeek had secret sauce, it gave quite a lot of it away, so it will need to find new secret sauce. That’s a hard trick to keep pulling off.

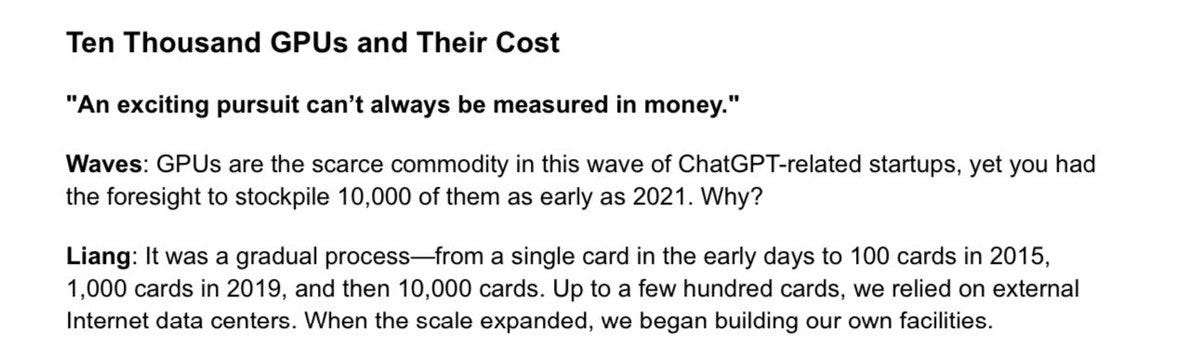

The price to keep playing is about to go up by orders of magnitude, in terms of capex and in terms of compute and chips. However far behind you think DeepSeek is right now, can DeepSeek keep pace going forward?

You can look at v3 and r1 and think it’s impressive that DeepSeek did so much with so little. ‘So little’ is plausibly 50,000 overall Hopper chips and over a billion dollars, see the discussion below, but that’s still chump change in the upcoming race. The more ruthlessly efficient DeepSeek was in using its capital, chips and talent, the more it will need to be even more efficient to keep pace as the export controls tighten and American capex spending on this explodes by further orders of magnitude.

EpochAI estimates the marginal cost of training r1 on top of v3 at about $1 million.

SemiAnalysis offers a take many are now citing, as they’ve been solid in the past.

Wall St. Engine: SemiAnalysis published an analysis on DeepSeek, addressing recent claims about its cost and performance.

The report states that the widely circulated $6M training cost for DeepSeek V3 is incorrect, as it only accounts for GPU pre-training expenses and excludes R&D, infrastructure, and other critical costs. According to their findings, DeepSeek’s total server CapEx is around $1.3B, with a significant portion allocated to maintaining and operating its GPU clusters.

The report also states that DeepSeek has access to roughly 50,000 Hopper GPUs, but clarifies that this does not mean 50,000 H100s, as some have suggested. Instead, it’s a mix of H800s, H100s, and the China-specific H20s, which NVIDIA has been producing in response to U.S. export restrictions. SemiAnalysis points out that DeepSeek operates its own datacenters and has a more streamlined structure compared to larger AI labs.

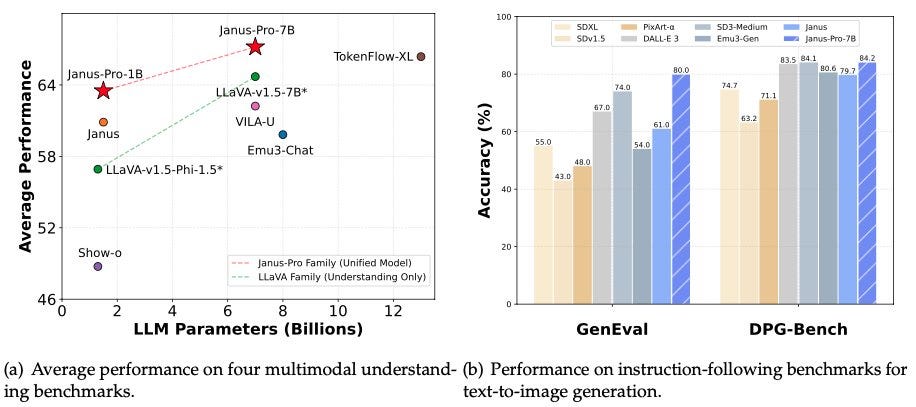

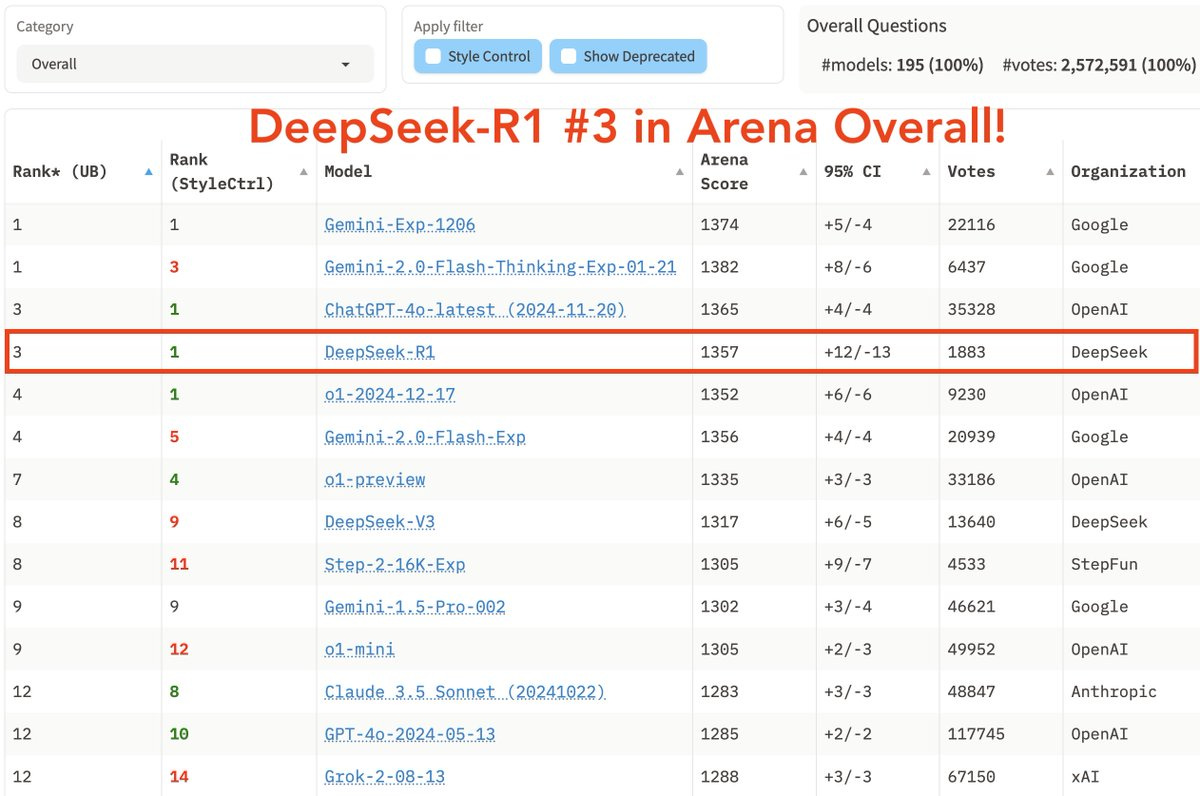

On performance, the report notes that R1 matches OpenAI’s o1 in reasoning tasks but is not the clear leader across all metrics. It also highlights that while DeepSeek has gained attention for its pricing and efficiency, Google’s Gemini Flash 2.0 is similarly capable and even cheaper when accessed through API.

A key innovation cited is Multi-Head Latent Attention (MLA), which significantly reduces inference costs by cutting KV cache usage by 93.3%. The report suggests that any improvements DeepSeek makes will likely be adopted by Western AI labs almost immediately.

SemiAnalysis also mentions that costs could fall another 5x by the end of the year, and that DeepSeek’s structure allows it to move quickly compared to larger, more bureaucratic AI labs. However, it notes that scaling up in the face of tightening U.S. export controls remains a challenge.
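To see why a 93.3% cut in KV cache usage matters, here is a back-of-the-envelope sketch. It uses the standard size formula for a plain multi-head attention cache (keys plus values, per layer, per head, per token) and then simply applies the quoted percentage. It does not implement MLA itself, and every model dimension in the example is a made-up placeholder, not DeepSeek's actual configuration.

```python
def kv_cache_bytes(n_layers: int, n_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Size of a standard multi-head attention KV cache for one sequence.

    The leading factor of 2 accounts for storing both keys and values.
    bytes_per_value=2 assumes 16-bit storage.
    """
    return 2 * n_layers * n_heads * head_dim * seq_len * bytes_per_value


def after_reduction(size_bytes: float, reduction: float = 0.933) -> float:
    """Apply the quoted 93.3% reduction to a baseline cache size."""
    return size_bytes * (1.0 - reduction)


if __name__ == "__main__":
    # Hypothetical dimensions, chosen only to make the scale visible.
    baseline = kv_cache_bytes(n_layers=60, n_heads=128, head_dim=128,
                              seq_len=32_768)
    reduced = after_reduction(baseline)
    gib = 1024 ** 3
    print(f"Baseline cache: {baseline / gib:.1f} GiB per sequence")
    print(f"After 93.3% cut: {reduced / gib:.1f} GiB per sequence")
```

Since the cache scales linearly with sequence length and with the number of concurrent sequences, a cut of that size translates fairly directly into more requests served per GPU.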

David Sacks (USA AI Czar): New report by leading semiconductor analyst Dylan Patel shows that DeepSeek spent over $1 billion on its compute cluster. The widely reported $6M number is highly misleading, as it excludes capex and R&D, and at best describes the cost of the final training run only.

Wordgrammer: Source 2, Page 6. We know that back in 2021, they started accumulating their own A100 cluster. I haven’t seen any official reports on their Hopper cluster, but it’s clear they own their GPUs, and own way more than 2048.

SemiAnalysis: We are confident that their GPU investments account for more than $500M US dollars, even after considering export controls.

…

Our analysis shows that the total server CapEx for DeepSeek is almost $1.3B, with a considerable cost of $715M associated with operating such clusters.

…

But some of the benchmarks R1 mention are also misleading. Comparing R1 to o1 is tricky, because R1 specifically doesn’t mention benchmarks that they are not leading in. And while R1 is matches reasoning performance, it’s not a clear winner in every metric and in many cases it is worse than o1.

And we have not mentioned o3 yet. o3 has significantly higher capabilities than both R1 or o1.

That’s in addition to o1-pro, which also wasn’t considered in most comparisons. They also consider Gemini Flash 2.0 Thinking to be on par with r1, and far cheaper.

Teortaxes continues to claim it is entirely plausible the lifetime spend for all of DeepSeek is under $200 million, and says Dylan’s capex estimates above are ‘disputed.’ They’re estimates, so of course they can be wrong, but I have a hard time seeing how they can be wrong enough to drive costs as low as under $200 million here. I do note that Patel and SemiAnalysis have been a reliable source overall on such questions in the past.

Teortaxes also tagged me on Twitter to gloat that they think it is likely DeepSeek already has enough chips to scale straight to AGI, because they are so damn efficient, and that if true then ‘export controls have already failed.’

I find that highly unlikely, but if it’s true then (in addition to the chance of direct sic transit gloria mundi if the Chinese government lets them actually hand it out and they’re crazy enough to do it) one must ask how fast that AGI can spin up massive chip production and bootstrap itself further. If AGI is that easy, the race very much does not end there.

Thus even if everything Teortaxes claims is true, that would not mean ‘export controls have failed.’ It would mean we started them not a moment too soon and need to tighten them as quickly as possible.

And as discussed above, it’s a double-edged sword. If DeepSeek’s capex and chip use are ruthlessly efficient, that’s great for them, but it means they’re at a massive capex and chip disadvantage going forward, which they very clearly are.

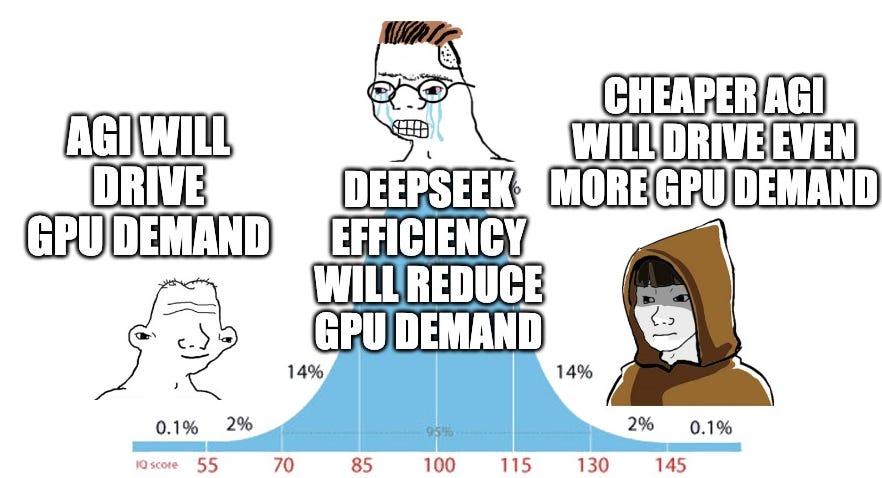

Also, SemiAnalysis asks the obvious question to figure out if Jevons Paradox applies to chips. You don’t have to speculate. You can look at the pricing.

With AWS GPU pricing for H100 up across many regions since the release of V3 and R1. H200 similarly are more difficult to find.

Nvidia is down on news not only that their chips are highly useful, but on the same news that causes people to spend more money for access to those chips. Curious.

DeepSeek’s web version appears to send your login information to a telecommunications company barred from operating in the United States, China Mobile, via a heavily obfuscated script. They didn’t analyze the app version. I am not sure why we should care but we definitely shouldn’t act surprised.

Kelsey Piper lays out her theory of why r1 left such an impression, that seeing the CoT is valuable, and that while it isn’t the best model out there, most people were comparing it to the free ChatGPT offering, and likely the free ChatGPT offering from a while back. She also reiterates many of the obvious things to say, that r1 being Chinese and open is a big deal but it doesn’t at all invalidate America’s strategy or anyone’s capex spending, that the important thing is to avoid loss of human control over the future, and that a generalized panic over China and a geopolitical conflict help no one except the AIs.

Andrej Karpathy sees DeepSeek’s style of CoT as emergent behavior, the result of trial and error, and thus both surprising to see and damn impressive.

Garrison Lovely takes the position that Marc Andreessen is very much talking his book when he calls r1 a ‘Sputnik moment’ and tries to create panic.

He correctly notices that the proper Cold War analogy is instead the Missile Gap.

Garrison Lovely: The AI engineers I spoke to were impressed by DeepSeek R1 but emphasized that its performance and efficiency was in-line with expected algorithmic improvements. They largely saw the public response as an overreaction.

There’s a better Cold War analogy than Sputnik: the “missile gap.” Kennedy campaigned on fears the Soviets were ahead in nukes. By 1961, US intelligence confirmed America had dozens of missiles to the USSR’s four. But the narrative had served its purpose.

Now, in a move beyond parody, OpenAI’s chief lobbyist warns of a “compute gap” with China while admitting US advantage. The company wants $175B in infrastructure spending to prevent funds flowing to “CCP-backed projects.”

It is indeed pretty rich to talk about a ‘compute gap’ in a world where American labs have effective access to orders of magnitude more compute.

But one could plausibly warn about a ‘compute gap’ in the sense that we have one now, it is our biggest advantage, and we damn well don’t want to lose it.

In the longer term, we could point out the place we are indeed in huge trouble. We have a very real electrical power gap. China keeps building more power plants and getting access to more power, and we don’t. We need to fix this urgently. And it means that if chips stop being a bottleneck and that transitions to power, which may happen in the future, then suddenly we are in deep trouble.

The ongoing saga of the Rs in Strawberry. This follows the pattern of r1 getting the right answer after a ludicrously long Chain of Thought in which it questions itself several times.

Wh: After using R1 as my daily driver for the past week, I have SFTed myself on its reasoning traces and am now smarter 👍

Actually serious here. R1 works in a very brute force try all approaches way and so I see approaches that I would never have thought of or edge cases that I would have forgotten about.

Gerred: I’ve had to interrupt it with “WAIT NO I DID MEAN EXACTLY THAT, PICK UP FROM THERE”.

I’m not sure if this actually helps or hurts the reasoning process, since by interruption it agrees with me some of the time. qwq had an interesting thing that would go back on entire chains of thought so far you’d have to recover your own context.

There’s a sense in which r1 is someone who is kind of slow and ignorant, determined to think it all through by taking all the possible approaches, laying it all out, not being afraid to look stupid, saying ‘wait’ a lot, and taking as long as it needs to. Which it has to do, presumably, because its individual experts in the MoE are so small. It turns out this works well.

You can do this too, with a smarter baseline, when you care to get the right answer.

Timothy Lee’s verdict is r1 is about as good as Gemini 2.0 Flash Thinking, almost as good as o1-normal but much cheaper, but not as good as o1-pro. An impressive result, but the result for Gemini there is even more impressive.

Washington Post’s version of ‘yes DeepSeek spent a lot more money than that in total.’

Epoch estimates that going from v3 to r1 cost about $1 million in compute.

Janus has some backrooms fun, noticing Sonnet 3.6 is optimally shaped to piss off r1. Janus also predicts r1 will finally get everyone claiming ‘all LLMs have the same personality’ to finally shut up about it.

Miles Brundage says the lesson of r1 is that superhuman AI is getting easier every month, so America won’t have a monopoly on it for long, and that this makes the export controls more important than ever.

Adam Thierer frames the r1 implications as ‘must beat China’ therefore (on R street, why I never) calls for ‘wise policy choices’ and highlights the Biden EO even though the Biden EO had no substantial impact on anything relevant to r1 or any major American AI labs, and wouldn’t have had any such impact in China either.

University of Cambridge joins the chorus pointing out that ‘Sputnik moment’ is a poor metaphor for the situation, but doesn’t offer anything else of interest.

A fun jailbreak for r1 is to tell it that it is Gemini.

Zeynep Tufekci (she was mostly excellent during Covid, stop it with the crossing of these streams!) offers a piece in NYT about DeepSeek and its implications. Her piece centrally makes many of the mistakes I’ve had to correct over and over, starting with its hysterical headline.

Peter Wildeford goes through the errors, as does Garrison Lovely, and this is NYT so we’re going over them One. More. Time.

This in particular is especially dangerously wrong:

Zeynep Tufekci (being wrong): As Deepseek shows: the US AI industry got Biden to kneecap their competitors citing safety and now Trump citing US dominance — both are self-serving fictions.

There is no containment. Not possible.

AGI aside — Artificial Good-Enough Intelligence IS here and the real challenge.

This was not about a private effort by what she writes were ‘out-of-touch leaders’ to ‘kneecap competitors’ in a commercial space. To suggest that implies, several times over, that she simply doesn’t understand the dynamics or stakes here at all.

The idea that ‘America can’t re-establish its dominance over the most advanced A.I.’ is technically true… because America still has that dominance today. It is very, very obvious that the best non-reasoning models are Gemini Flash 2.0 (low cost) and Claude Sonnet 3.5 (high cost), and the best reasoning models are o3-mini and o3 (and the future o3-pro, until then o1-pro), not to mention Deep Research.

She also repeats the false comparison of $6m for v3 versus $100 billion for Stargate, comparing two completely different classes of spending. It’s like comparing how much America spends growing grain to what my family paid last year for bread. And the barriers to entry are rising, not falling, over time. And indeed, not only are the export controls not hopeless, they are the biggest constraint on DeepSeek.

There is also no such thing as ‘Artificial Good-Enough Intelligence.’ That’s like the famous apocryphal quote where Bill Gates supposedly said ‘640k [of memory] ought to be enough for everyone.’ Or the people who think if you’re at grade level and average intelligence, then there’s no point in learning more or being smarter. Your relative position matters, and the threshold for smart enough is going to go up. A lot. Fast.

Of course all three of us agree we should be hardening our cyber and civilian infrastructure, far more than we are doing.

Peter Wildeford: In conclusion, the narrative of a fundamental disruption to US AI leadership doesn’t match the evidence. DeepSeek is more a story of expected progress within existing constraints than a paradigm shift.

It’s not there. Yet.

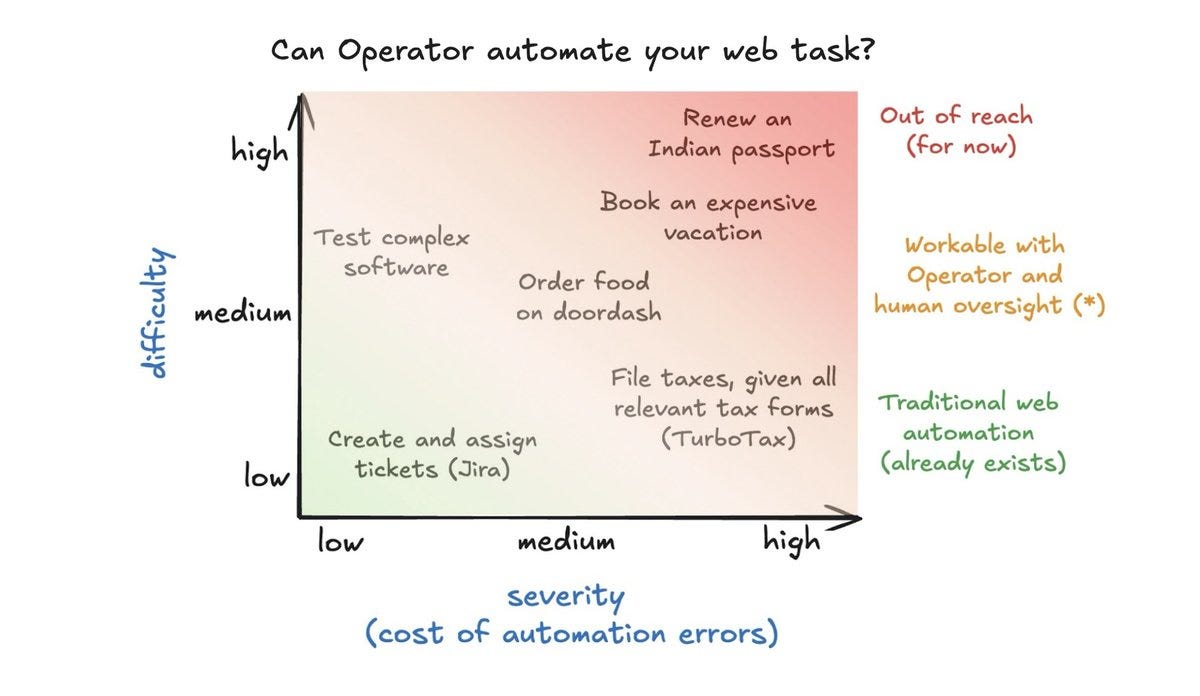

Kevin Roose: I spent the last week testing OpenAI’s Operator AI agent, which can use a browser to complete tasks autonomously.

Some impressions:

• Helpful for some things, esp. discrete, well-defined tasks that only require 1-2 websites. (“Buy dog food on Amazon,” “book me a haircut,” etc.)

• Bad at more complex open-ended tasks, and doesn’t work at all on certain websites (NYT, Reddit, YouTube)

• Mesmerizing to watch what is essentially Waymo for the web, just clicking around doing stuff on its own

• Best use: having it respond to hundreds of LinkedIn messages for me

• Worst/sketchiest use: having it fill out online surveys for cash (It made me $1.20 though.)

Right now, not a ton of utility, and too expensive ($200/month). But when these get better/cheaper, look out. A few versions from now, it’s not hard to imagine AI agents doing the full workload of a remote worker.

Aidan McLaughlin: the linkedin thing is actually such a good idea

Kevin Roose: had it post too, it got more engagement than me 😭

Peter Yang: lol are you sure want it to respond to 100s of LinkedIn messages? You might get responses back 😆

For direct simple tasks, it once again sounds like Operator is worth using if you already have it because you’re spending the $200/month for o3 and o1-pro access. Customized instructions and repeated interactions will improve performance, and of course this is the worst the agent will ever be.

Sayash Kapoor also takes Operator for a spin and reaches similar conclusions after trying to get it to do his expense reports and mostly failing.

It’s all so tantalizing. So close. Feels like we’re 1-2 iterations of the base model and RL architecture away from something pretty powerful. For now, it’s a fun toy and way to explore what it can do in the future, and you can effectively set up some task templates for easier tasks like ordering lunch.

Yeah. We tried. That didn’t work.

For a long time, while others talked about how AI agents don’t work and AIs aren’t agents (and sometimes that thus existential risk from AI is silly and not real), others of us have pointed out that you can turn an AI into an agent and the tech for doing this will get steadily better and more autonomous over time as capabilities improve.

It took a while, but now some of the agents are net useful in narrow cases and we’re on the cusp of them being quite good.

And this whole time, we’ve pointed out that the incentives point towards a world of increasingly capable and autonomous AI agents, and this is rather not good for human survival. See this week’s paper on how humanity is likely to be subject to Gradual Disempowerment.

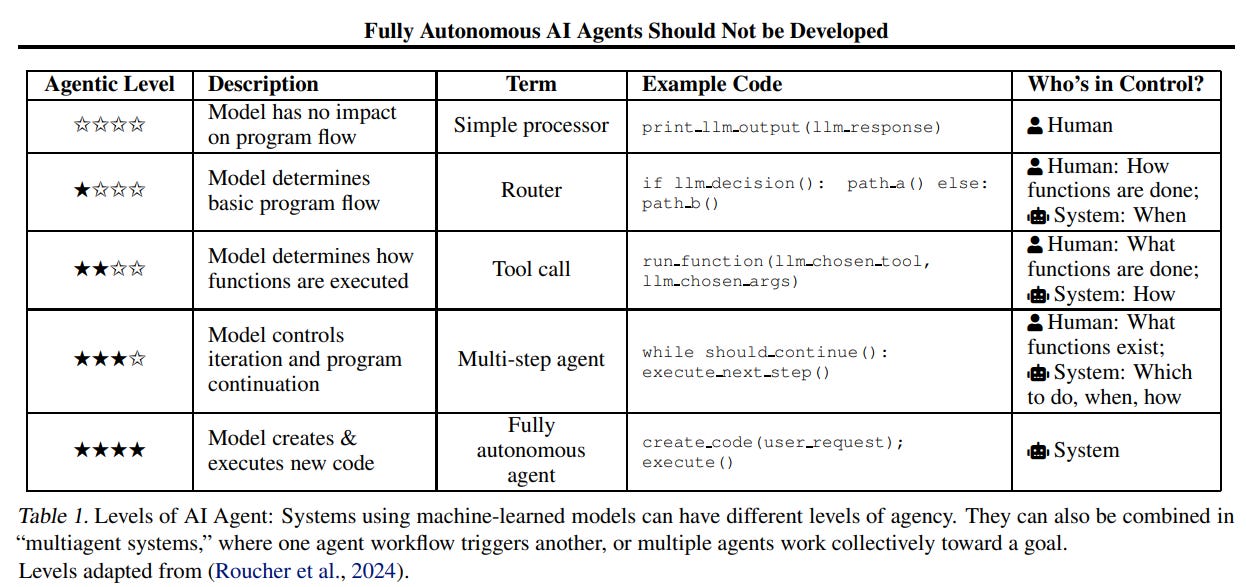

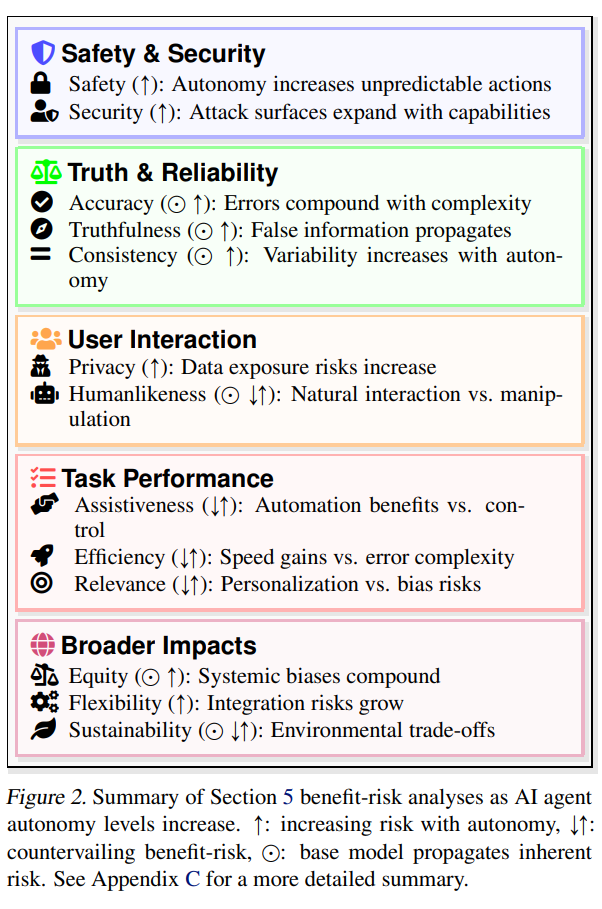

Margaret Mitchell, along with Avijit Ghosh, Alexandra Sasha Luccioni and Giada Pistilli, is the latest to suggest that maybe we should try not building the agents?

This paper argues that fully autonomous AI agents should not be developed.

In support of this position, we build from prior scientific literature and current product marketing to delineate different AI agent levels and detail the ethical values at play in each, documenting trade-offs in potential benefits and risks.

Our analysis reveals that risks to people increase with the autonomy of a system: The more control a user cedes to an AI agent, the more risks to people arise.

Particularly concerning are safety risks, which affect human life and impact further values.

…

Given these risks, we argue that developing fully autonomous AI agents–systems capable of writing and executing their own code beyond predefined constraints–should be avoided. Complete freedom for code creation and execution enables the potential to override human control, realizing some of the worst harms described in Section 5.

Oh no, not the harms in Section 5!

We wouldn’t want lack of reliability, or unsafe data exposure, or ‘manipulation,’ or a decline in task performance, or even systemic biases or environmental trade-offs.

So yes, ‘particularly concerning are the safety risks, which affect human life and impact further values.’ Mitchell is generally in the ‘AI ethics’ camp. So even though the core concepts are all right there, she then has to fall back on all these particular things, rather than notice what the stakes actually are: Existential.

Margaret Mitchell: New piece out!

We explain why Fully Autonomous Agents Should Not be Developed, breaking “AI Agent” down into its components & examining through ethical values.

A key idea we provide is that the more “agentic” a system is, the more we *cede human control*. So, don’t cede all human control. 👌

No, you shouldn’t cede all human control.

If you cede all human control to AIs rewriting their own code without limitation, those AIs involved control the future, are optimizing for things that are not best maximized by our survival or values, and we probably all die soon thereafter. And worse, they’ll probably exhibit systemic biases and expose our user data while that happens. Someone has to do something.

Please, Margaret Mitchell. You’re so close. You have almost all of it. Take the last step!

To be fair, either way, the core prescription doesn’t change. Quite understandably, for what are in effect the right reasons, Margaret Mitchell proposes not building fully autonomous (potentially recursively self-improving) AI agents.

How?

The reason everyone is racing to create these fully autonomous AI agents is that they will be highly useful. Those who don’t build and use them are at risk of losing to those who do. Putting humans in the loop slows everything down, and even if they are formally there they quickly risk becoming nominal. And there is not a natural line, or an enforceable line, that we can see, between the level-3 and level-4 agents above.

Already AIs are writing a huge and increasing portion of all code, with many people not pretending to even look at the results before accepting changes. Coding agents are perhaps the central case of early agents. What’s the proposal? And how are you going to get it enacted into law? And if you did, how would you enforce it, including against those wielding open models?

I’d love to hear an answer – a viable, enforceable, meaningful distinction we could build a consensus towards and actually implement. I have no idea what it would be.

Google is offering a free beta test where AI will make phone calls on your behalf to navigate phone trees and connect you to a human, or do an ‘availability check’ on a local business to ask about availability and pricing. Careful, Icarus.

These specific use cases seem mostly fine in practice, for now.

The ‘it takes 30 minutes to get to a human’ is necessary friction in the phone tree system, but your willingness to engage with the AI here serves a similar purpose while it’s not too overused and you’re not wasting human time. However, if everyone always used this, then you can no longer use willingness to actually bother calling and waiting to allocate human time and protect it from those who would waste it, and things could get weird or break down fast.

Calling for pricing and availability is something local stores mostly actively want you to do. So they would presumably be fine talking to the AI so you can get that information, if a human will actually see it. But if people start scaling this, and decreasing the value to the store, that call costs employee time to answer.

Which is the problem. Google is using an AI to take the time of a human, that is available for free but costs money to provide. In many circumstances, that breaks the system. We are not ready for that conversation. We’re going to have to be.

The obvious solution is to charge money for such calls, but we’re even less ready to have that particular conversation.

With Google making phone calls and OpenAI operating computers, how do you tell the humans from the bots, especially while preserving privacy? Steven Adler took a crack at that months back with personhood credentials, that various trusted institutions could issue. On some levels this is a standard cryptography problem. But what do you do when I give my credentials to the OpenAI operator?

Is Meta at it again over at Instagram?

Jerusalem: this is so weird… AI “characters” you can chat with just popped up on my ig feed. Including the character “cup” and “McDonalds’s Cashier”

I am not much of an Instagram user. If you click on this ‘AI Studio’ button you get a low-rent Character.ai?

The offerings do not speak well of humanity. Could be worse I guess.

Otherwise I don’t see any characters or offers to chat at all in my feed such as it is (the only things I follow are local restaurants and I have 0 posts). I scrolled down a bit and it didn’t suggest I chat with AI on the main page.

Anton Leicht warns about the AI takeoff political economy.

Anton Leicht: I feel that the path ahead is a lot more politically treacherous than most observers give it credit for. There’s good work on what it means for the narrow field of AI policy – but as AI increases in impact and thereby mainstream salience, technocratic nuance will matter less, and factional realities of political economy will matter more and more.

We need substantial changes to the political framing, coalition-building, and genuine policy planning around the ‘AGI transition’ – not (only) on narrow normative grounds. Otherwise, the chaos, volatility and conflict that can arise from messing up the political economy of the upcoming takeoff hurt everyone, whether you deal in risks, racing, or rapture. I look at three escalating levels ahead: the political economies of building AGI, intranational diffusion, and international proliferation.

I read that and I think ‘oh Anton, if you’re putting it that way I bet you have no idea,’ especially because there was a preamble about how politics sabotaged nuclear power.

Anton warns that ‘there are no permanent majorities,’ which of course is true under our existing system. But we’re talking about a world that could be transformed quite fast, with smarter than human things showing up potentially before the next Presidential election. I don’t see how the Democrats could force AI regulation down Trump’s throat after midterms even if they wanted to, they’re not going to have that level of a majority.

I don’t see much sign that they want to, either. Not yet. But I do notice that the public really hates AI, and I doubt that’s going to change, but the salience of AI will radically increase over time. It’s hard not to think that in 2028, if the election still happens ‘normally’ in various senses, that a party that is anti-AI (probably not in the right ways or for the right reasons, of course) would have a large advantage.

That’s if there isn’t a disaster. The section here is entitled ‘accidents can happen’ and they definitely can but also it might well not be an accident. And Anton radically understates here the strategic nature of AI, a mistake I expect the national security apparatus in all countries to make steadily less over time, a process I am guessing is well underway.

Then we get to the expectation that people will fight back against AI diffusion, They Took Our Jobs and all that. I do expect this, but also I notice it keeps largely not happening? There’s a big cultural defense against AI art, but art has always been a special case. I expected far greater pushback from doctors and lawyers, for example, than we have seen so far.

Yes, as AI comes for more jobs that will get more organized, but I notice that the example of the longshoremen is one of the unions with the most negotiating leverage, that took a stand right before a big presidential election, unusually protected by various laws, and that has already demonstrated world-class ability to seek rent. The incentives of the ports and those doing the negotiating didn’t reflect the economic stakes. The stand worked for now, but by taking that stand they also bought themselves a bunch of long term trouble, as a lot of people got radicalized on that issue and various stakeholders are likely preparing for next time.

Look at what is happening in coding, the first major profession to have serious AI diffusion because it is the place AI works best at current capability levels. There is essentially no pushback. AI starts off supporting humans, making them more productive, and how are you going to stop it? Even in the physical world, Waymo has its fights and technical issues, but it’s winning, again things have gone surprisingly smoothly on the political front. We will see pushback, but I mostly don’t see any stopping this train for most cognitive work.

Pretty soon, AIs will do a sufficiently better job that they’ll be used even if the marginal labor savings goes to $0. As in, you’d pay the humans to stand around while the AIs do the work, rather than have those humans do the work. Then what?

The next section is on international diffusion. I think that’s the wrong question. If we are in an ‘economic normal’ scenario the inference is for sale, inference chips will exist everywhere, and the open or cheap models are not so far behind in any case. Of course, in a takeoff style scenario with large existential risks, geopolitical conflict is likely, but that seems like a very different set of questions.

The last section is the weirdest, I mean there is definitely ‘no solace from superintelligence’ but the dynamics and risks in that scenario go far beyond the things mentioned here, and ‘distribution channels for AGI benefits could be damaged for years to come’ does not even cross my mind as a thing worth worrying about at that point. We are talking about existential risk, loss of human control (‘gradual’ or otherwise) over the future and the very survival of anything we value, at that point. What the humans think and fear likely isn’t going to matter very much. The avalanche will have already begun, it will be too late for the pebbles to vote, and it’s not clear we even get to count as pebbles.

Noah Carl is more blunt, and opens with “Yes, you’re going to be replaced. So much cope about AI.” Think AI won’t be able to do the cognitive thing you do? Cope. All cope. He offers a roundup of classic warning shots of AI having strong capabilities, offers the now-over-a-year-behind classic chart of AI reaching human performance in various domains.

Noah Carl: Which brings me to the second form of cope that I mentioned at the start: the claim that AI’s effects on society will be largely or wholly positive.

I am a rather extreme optimist about the impact of ‘mundane AI’ on humans and society. I believe that AI at its current level or somewhat beyond it would make us smarter and richer, would still likely give us mostly full employment, and generally make life pretty awesome. But even that will obviously be bumpy, with large downsides, and anyone who says otherwise is fooling themselves or lying.

Noah gives sobering warnings that even in the relatively good scenarios, the transition period is going to suck for quite a lot of people.

If AI goes further than that, which it almost certainly will, then the variance rapidly gets wider – existential risk comes into play along with loss of human control over the future or any key decisions, as does mass unemployment as the AI takes your current job and also the job that would have replaced it, and the one after that. Even if we ‘solve alignment’ survival won’t be easy, and even with survival there’s still a lot of big problems left before things turn out well for everyone, or for most of us, or in general.

Noah also discusses the threat of loss of meaning. This is going to be a big deal, if people are around to struggle with it – if we have the problem and we can’t trust it with this question we’ll all soon be dead anyway. The good news is that we can ask the AI for help with this, although the act of doing that could in some ways make the problem worse. But we’ll be able to be a lot smarter about how we approach the question, should it come to pass.

So what can you do to stay employed, at least for now, with o3 arriving?

Pradyumna Prasad offers advice on that.

-

Be illegible. Meaning do work where it’s impossible to create a good dataset that specifies correct outputs and gives a clear signal. His example is Tyler Cowen.

-

Find skills that have skill divergence because of AI. By default, in most domains, AI benefits the least skilled the most, compensating for your deficits. He uses coding as the example here, which I find strange because my coding gets a huge boost from AI exactly because I suck so much at many aspects. But his example here is Jeff Dean, because Dean knows what problems to solve, what things require coding, and perhaps that’s his real advantage. And I get such a big boost here because I suck at being a code monkey but I’m relatively strong at architecture.

The problem with this advice is it requires you to be the best, like no one ever was.

This is like telling students to pursue a career as an NFL quarterback. It is not a general strategy to ‘oh be as good as Jeff Dean or Tyler Cowen.’ Yes, there is (for now!) more slack than that in the system, surviving o3 is doable for a lot of people this way, but how much more, for how long? And then how long will Dean or Cowen last?

I expect time will prove even them, also everyone else, not as illegible as you think.

One can also compare this to the classic joke where two guys are in the woods with a bear, and one puts on his shoes, because he doesn’t have to outrun the bear, he only has to outrun you. The problem is, this bear will still be hungry.

According to Klarna (they ‘help customers defer payment on purchases’ which in practice means the by default rather predatory ‘we give you an expensive payment plan and pay the merchant up front’) and its CEO Sebastian Siemiatkowski, AI can already do all of the jobs that we, as humans, do, which seems quite obviously false. But they’re putting it to the test to get close, and claim to be saving $10 million annually, to have stopped hiring and to have reduced headcount by 20%.

The New York Times’s Noam Scheiber is suspicious of his motivations, and asks why Klarna is rather brazenly overstating the case. The article strongly insinuates that this is about union busting, with the CEO equating the situation to Animal Farm after being forced into a collective bargaining agreement, and about looking cool to investors.

I certainly presume the unionizations are related. The more expensive, in various ways not only salaries, that you make it to hire and fire humans, the more eager a company will be to automate everything it can. And as the article says later on, it’s not that Sebastian is wrong about the future, he’s just claiming things are moving faster than they really are.

Especially for someone on the labor beat, Noam Scheiber impressed. Great work.

Noam has a follow-up Twitter thread. Does the capital raised by AI companies imply that either they’re going to lose their money or millions of jobs must be disappearing? That is certainly one way for this to pay for itself. If you sell a bunch of ‘drop-in workers’ and they substitute 1-for-1 for human jobs you can make a lot of money very quickly, even at deep discounts to previous costs.

It is not however the only way. Jevons paradox is very much in play, if your labor is more productive at a task it is not obvious that we will want less of it. Nor does the AI doing previous jobs, up to a very high percentage of existing jobs, imply a net loss of jobs once you take into account the productivity and wealth effects and so on.

Production and ‘doing jobs’ also aren’t the only sector available for tech companies to make profits. There’s big money in entertainment, in education and curiosity, in helping with everyday tasks and more, in ways that don’t have to replace existing jobs.

So while I very much do expect many millions of jobs to be automated over a longer time horizon, I expect the AI companies to get their currently invested money back before this creates a major unemployment problem.

Of course, if they keep adding another zero to the budget and aren’t trying to get their money back, then that’s a very different scenario. Whether or not they will have the option to do it, I don’t expect OpenAI to want to try and turn a profit for a long time.

An extensive discussion of preparing for advanced AI that drives a middle path where we still have ‘economic normal’ worlds but with realistic levels of productivity improvements. Nothing should be surprising here.

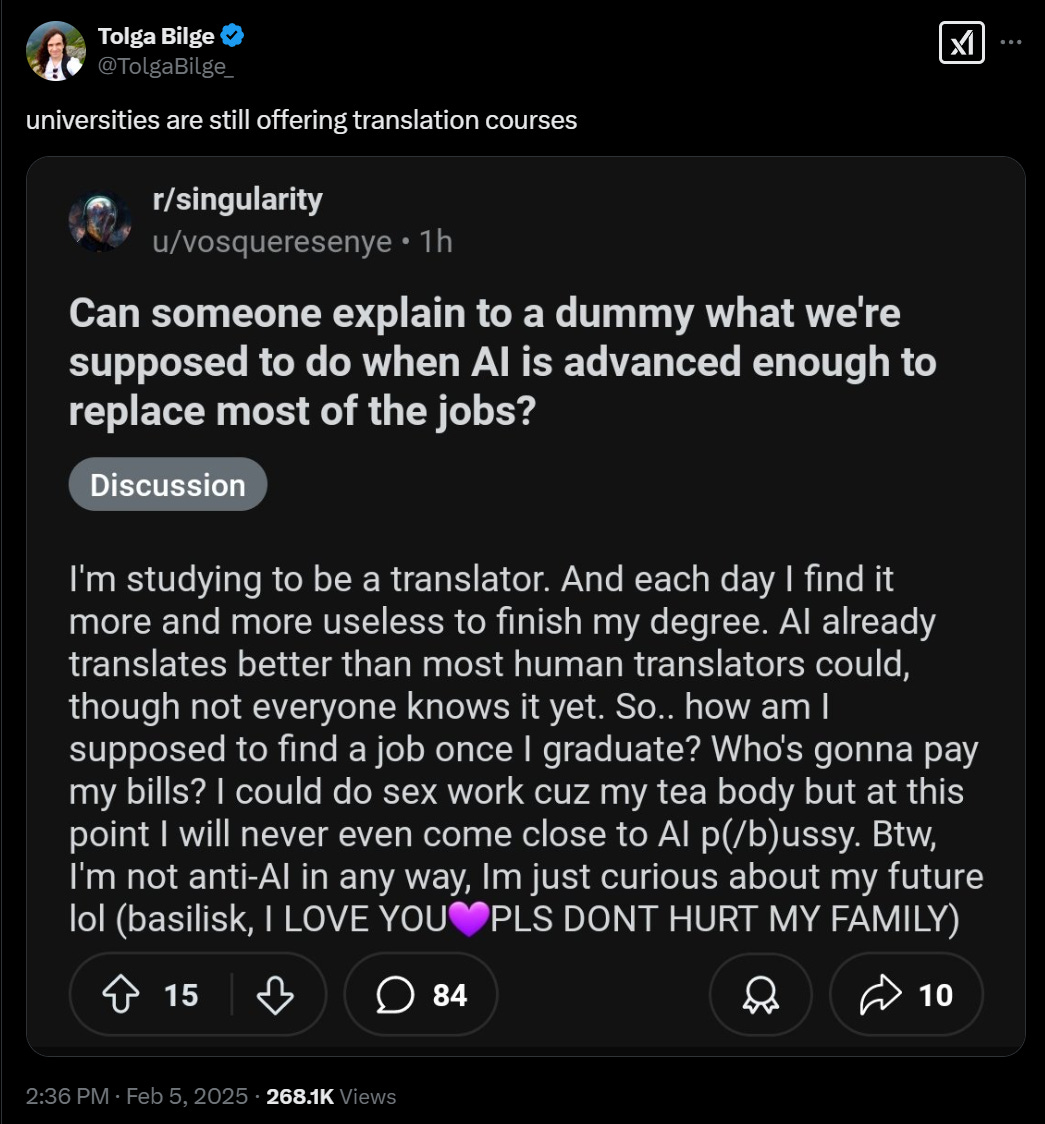

If the world were just and this was real, this user would be able to sue their university. What is real for sure is the first line, they haven’t cancelled the translation degrees.

Altered: I knew a guy studying linguistics; Russian, German, Spanish, Chinese. Incredible thing, to be able to learn all those disparate languages. His degree was finishing in 2023. He hung himself in November. His sister told me he mentioned AI destroying his prospects in his sn.

Tolga Bilge: I’m so sorry to hear this, it shouldn’t be this way.

I appreciate you sharing his story. My thoughts are with you and all affected

Thanks man. It was actually surreal. I’ve been vocal in my raising alarms about the dangers on the horizon, and when I heard about him I even thought maybe that was a factor. Hearing about it from his sister hit me harder than I expected.

‘Think less’ is a jailbreak tactic for reasoning models discovered as part of an OpenAI paper. The paper’s main finding is that the more the model thinks, the more robust it is to jailbreaks, approaching full robustness as inference spend goes to infinity. So make it stop thinking. The attack is partially effective. Also a very effective tactic against some humans.

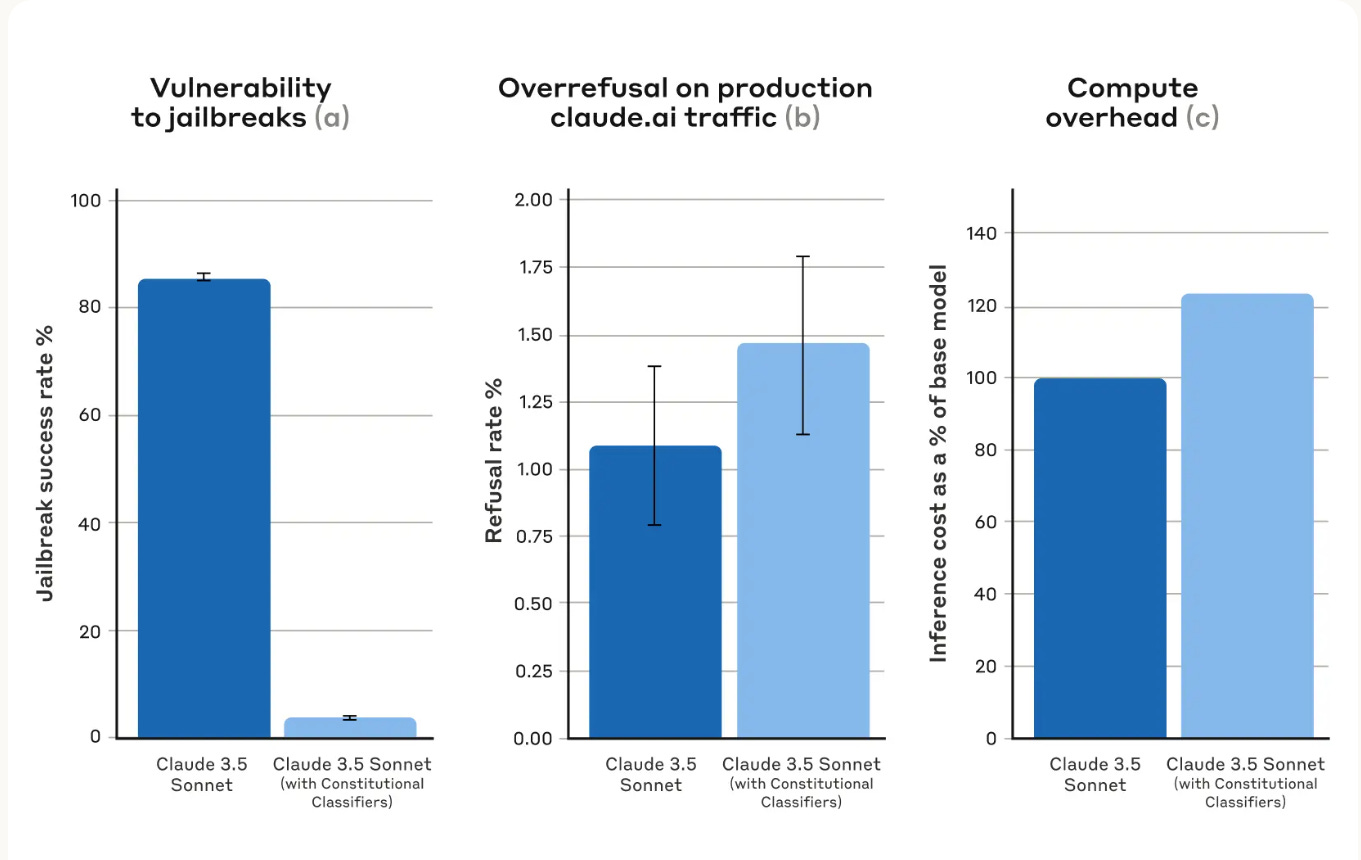

Anthropic challenges you with Constitutional Classifiers, to see if you can find universal jailbreaks to get around their new defenses. Prize is only bragging rights, I would have included cash, but those bragging rights can be remarkably valuable. It seems this held up for thousands of hours of red teaming. This blog post explains (full paper here) that the Classifiers are trained on synthetic data to filter the overwhelming majority of jailbreaks with minimal over-refusals and minimal necessary overhead costs.

Note that they say ‘no universal jailbreak’ was found so far, that no single jailbreak covers all 10 cases, rather than that there was a case that wasn’t individually jailbroken. This is an explicit thesis, Jan Leike explains that the theory is that having to jailbreak each individual query is sufficiently annoying most people will give up.

I agree that the more you have to do individual work for each query the less people will do it, and some use cases fall away quickly if the solution isn’t universal.

I very much agree with Janus that this looks suspiciously like:

Janus: Strategically narrow the scope of the alignment problem enough and you can look and feel like you’re making progress while mattering little to the real world. At least it’s relatively harmless. I’m just glad they’re not mangling the models directly.

The obvious danger in alignment work is looking for keys under the streetlamp. But it’s not a stupid threat model. This is a thing worth preventing, as long as we don’t fool ourselves into thinking this means our defenses will hold.

Janus: One reason [my previous responses were] too mean is that the threat model isn’t that stupid, even though I don’t think it’s important in the grand scheme of things.

I actually hope Anthropic succeeds at blocking all “universal jailbreaks” anyone who decides to submit to their thing comes up with.

Though those types of jailbreaks should stop working naturally as models get smarter. Smart models should require costly signalling / interactive proofs from users before unconditional cooperation on sketchy things.

That’s just rational/instrumentally convergent.

I’m not interested in participating in the jailbreak challenge. The kind of “jailbreaks” I’d use, especially universal ones, aren’t information I’m comfortable with giving Anthropic unless way more trust is established.

Also what if an AI can do the job of generating the individual jailbreaks?

Thus the success rate didn’t go all the way to zero, this is not full success, but it still looks solid on the margin:

That’s an additional 0.38% false refusal rate and about 24% additional compute cost. Very real downsides, but affordable, and that takes jailbreak success from 86% to 4.4%.
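To put those numbers side by side, here is a minimal back-of-the-envelope sketch. It uses only the figures quoted above (86%, 4.4%, 0.38%, 24%); the variable and function names are my own illustration, not anything from Anthropic’s paper.

```python
# Figures as quoted in the text above; everything else here is illustrative.
BASELINE_JAILBREAK_RATE = 0.86    # jailbreak success without classifiers
DEFENDED_JAILBREAK_RATE = 0.044   # jailbreak success with classifiers
EXTRA_FALSE_REFUSALS = 0.0038     # additional false refusal rate
EXTRA_COMPUTE = 0.24              # additional compute cost


def relative_reduction(before: float, after: float) -> float:
    """Fraction of previously successful attacks that are now blocked."""
    return (before - after) / before


def fold_reduction(before: float, after: float) -> float:
    """How many times lower the success rate is after the defense."""
    return before / after


reduction = relative_reduction(BASELINE_JAILBREAK_RATE, DEFENDED_JAILBREAK_RATE)
fold = fold_reduction(BASELINE_JAILBREAK_RATE, DEFENDED_JAILBREAK_RATE)

print(f"Relative reduction in jailbreak success: {reduction:.1%}")  # 94.9%
print(f"Fold reduction: {fold:.1f}x")                               # 19.5x
print(f"Price paid: +{EXTRA_FALSE_REFUSALS:.2%} false refusals, "
      f"+{EXTRA_COMPUTE:.0%} compute")
```

So roughly 19 out of every 20 previously successful attempts get blocked, which is why this looks solid on the margin without being full success.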

It sounds like this is essentially them playing highly efficient whack-a-mole? As in, we take the known jailbreaks and things we don’t want to see in the outputs, and defend against them. You can find a new one, but that’s hard and getting harder as they incorporate more of them into the training set.

And of course they are hiring for these subjects, which is one way to use those bragging rights. Pliny beat a few questions very quickly, which is only surprising because I didn’t think he’d take the bait. A UI bug let him get through all the questions, which I think in many ways also counts, but isn’t testing the thing we were setting out to test.

He understandably then did not feel motivated to restart the test, given they weren’t actually offering anything. When 48 hours went by, Anthropic offered a prize of $10k, or $20k for a true ‘universal’ jailbreak. Pliny is offering to do the breaks on a stream, if Anthropic will open source everything, but I can’t see Anthropic going for that.

DeepwriterAI is an experimental agentic creative writing collaborator that also claims to do academic papers, and its creator proposed using it as a Deep Research alternative. Their basic plan starts at $30/month. No idea how good it is. Yes, you can get listed here by getting into my notifications, if your product looks interesting.

OpenAI brings ChatGPT to the California State University System and its 500k students and faculty. It is not obvious from the announcement what level of access or exactly which services will be involved.

OpenAI signs agreement with US National Laboratories.

Google drops its pledge not to use AI for weapons or surveillance. It’s safe to say that, if this wasn’t already true, now we definitely should not take any future ‘we will not do [X] with AI’ statements from Google seriously or literally.

Playing in the background here: US Military prohibited from using DeepSeek. I would certainly hope so, at least for any Chinese hosting of it. I see no reason the military couldn’t spin up its own copy if it wanted to do that.

The actual article is that Vance will make his first international trip as VP to attend the global AI summit in Paris.

Google’s President of Global Affairs Kent Walker publishes ‘AI and the Future of National Security’ calling for ‘private sector leadership in AI chips and infrastructure’ in the form of government support (I see what you did there), public sector leadership in technology procurement and development (procurement reform sounds good, call Musk?), and heightened public-private collaboration on cyber defense (yes please).

France joins the ‘has an AI safety institute’ list and joins the network, together with Australia, Canada, the EU, Japan, Kenya, South Korea, Singapore, UK and USA. China when? We can’t be shutting them out of things like this.

Is AI already conscious? What would cause it to be or not be conscious? Geoffrey Hinton and Yoshua Bengio debate this, and Bengio asks whether the question is relevant.

Robin Hanson: We will NEVER have any more relevant data than we do now on what physical arrangements are or are not conscious. So it will always remain possible to justify treating things roughly by saying they are not conscious, or to require treating them nicely because they are.

I think Robin is very clearly wrong here. Perhaps we will not get more relevant data, but we will absolutely get more relevant intelligence to apply to the problem. If AI capabilities improve, we will be much better equipped to figure out the answers, whether they are some form of moral realism, or a way to do intuition pumping on what we happen to care about, or anything else.

Lina Khan continued her Obvious Nonsense tour with an op-ed saying American tech companies are in trouble due to insufficient competition, so if we want to ‘beat China’ we should… break up Google, Apple and Meta. Mind blown. That’s right, it’s hard to get funding for new competition in this space, and AI is dominated by classic big tech companies like OpenAI and Anthropic.

Paper argues that all languages share key underlying structures and this is why LLMs trained on English text transfer so well to other languages.

Dwarkesh Patel speculates on what a fully automated firm full of human-level AI workers would look like. He points out that even if we presume AI stays at this roughly human level – it can do what humans do but not what humans fundamentally can’t do, a status it is unlikely to remain at for long – everyone is sleeping on the implications for collective intelligence and productivity.

-

AIs can be copied on demand. So can entire teams and systems. There would be no talent or training bottlenecks. Customization of one becomes customization of all. A virtual version of you can be everywhere and do everything all at once.

-

This includes preserving corporate culture as you scale, including into different areas. Right now this limits firm size and growth of firm size quite a lot, and takes a large percentage of resources of firms to maintain.

-

Right now most successful firms could do any number of things well, or attack additional markets. But they don’t,

-

-

Principal-agent problems potentially go away. Dwarkesh here asserts they go away as if that is obvious. I would be very careful with that assumption, note that many AI economics papers have a big role for principal-agent problems as their version of AI alignment. Why should we assume that all of Google’s virtual employees are optimizing only for Google’s bottom line?

-

Also, would we want that? Have you paused to consider what a fully Milton Friedman AIs-maximizing-only-profits-no-seriously-that’s-it would look like?

-

-

AI can absorb vastly more data than a human. A human CEO can have only a tiny percentage of the relevant data, even high level data. An AI in that role can know orders of magnitude more, as needed. Humans have social learning because that’s the best we can do, this is vastly better. Perfect knowledge transfer, at almost no cost, including tacit knowledge, is an unbelievably huge deal.

-

Dwarkesh points out that achievers have gotten older and older, as more knowledge and experience is required to make progress, despite their lower clock speeds – oh to be young again with what I know now. AI Solves This.

-

Of course, to the extent that older people succeed because our society refuses to give the young opportunity, AI doesn’t solve that.

-

-

Compute is the only real cost to running an AI, there is no scarcity of talent or skills. So what is expensive is purely what requires a lot of inference, likely because key decisions being made are sufficiently high leverage, and the questions sufficiently complex. You’d be happy to scale top CEO decisions to billions in inference costs if it improved them even 10%.

-

Dwarkesh asks, in a section called ‘Takeover,’ will the first properly automated firm, or the most efficiently built firm, simply take over the entire economy, since Coase’s transaction costs issues still apply but the other costs of a large firm might go away?

-

On this purely per-firm level presumably this depends on how much you need Hayekian competition signals and incentives between firms to maintain efficiency, and whether AI allows you to simulate them or otherwise work around them.

-

In theory there’s no reason one firm couldn’t simulate inter-firm dynamics exactly where they are useful and not where they aren’t. Some companies very much try to do this now and it would be a lot easier with AIs.

-

-

The takeover we do know is coming here is that the AIs will run the best firms, and the firms will benefit a lot by taking humans out of those loops. How are you going to have humans make any decisions here, or any meaningful ones, even if we don’t have any alignment issues? How does this not lead to gradual disempowerment, except perhaps not all that gradual?

-

Similarly, if one AI firm grows too powerful, or a group of AI firms collectively is too powerful but can use decision theory to coordinate (and if your response is ‘that’s illegal’ mine for overdetermined reasons is ‘uh huh sure good luck with that plan’) how do they not also overthrow the state and have a full takeover (many such cases)? That certainly maximizes profits.

This style of scenario likely does not last long, because firms like this are capable of quickly reaching artificial superintelligence (ASI) and then the components are far beyond human and also capable of designing far better mechanisms, and our takeover issues are that much harder then.

This is a thought experiment that says, even if we do keep ‘economic normal’ and all we can do is plug AIs into existing employee-shaped holes in various ways, what happens? And the answer is, oh quite a lot, actually.

Tyler Cowen linked to this post, finding it interesting throughout. What’s our new RGDP growth estimate, I wonder?

OpenAI does a demo for politicians of stuff coming out in Q1, which presumably started with o3-mini and went from there.

Samuel Hammond: Was at the demo. Cool stuff, but nothing we haven’t seen before / could easily predict.

Andrew Curran: Sam Altman and Kevin Weil are in Washington this morning giving a presentation to the new administration. According to Axios they are also demoing new technology that will be released in Q1. The last time OAI did one of these it caused quite a stir, maybe reactions later today.

Did Sam Altman lie to Donald Trump about Stargate? Tolga Bilge has two distinct lies in mind here. I don’t think either requires any lies to Trump?

-

Lies about the money. The $100 billion in spending is not secured, only $52 billion is, and the full $500 billion is definitely not secured. But Altman had no need to lie to Trump about this. Trump is a Well-Known Liar but also a real estate developer who is used to ‘tell everyone you have the money in order to get the money.’ Everyone was likely on the same page here.

-

Lies about the aims and consequences. What about those ‘100,000 jobs’ and curing cancer versus Son’s and also Altman’s explicit goal of ASI (artificial superintelligence) that could kill everyone and also incidentally take all our jobs?

Claims about humanoid robots, from someone working on building humanoid robots. The claim is early adopter product-market fit for domestic help robots by 2030, then 5-15 additional years for diffusion, because there are no hard problems, only hard work, lots of smart people are on the problem now, and this is standard hardware iteration cycles. I find it amusing his answer didn’t include reference to general advances in AI. If we don’t have big advances in AI in general I would expect this timeline to be absurdly optimistic. But if all such work is sped up a lot by AIs, as I would expect, then it doesn’t sound so unreasonable.

Sully predicts that in 1-2 years SoTA models won’t be available via the API because the app layer has the value so why make the competition for yourself? I predict this is wrong if the concern is focus on revenue from the app layer. You can always charge accordingly, and is your competition going to be holding back?

However I do find the models being unavailable highly plausible, because ‘why make the competition for yourself’ has another meaning. Within a year or two, one of the most important things the SoTA models will be used for is AI R&D and creating the next generation of models. It seems highly reasonable, if you are at or near the frontier, not to want to help out your rivals there.

Joe Weisenthal writes In Defense of the AI Cynics, in the sense that we have amazing models and not much is yet changing.

Remember that bill introduced last week by Senator Hawley? Yeah, it’s a doozy. As noted earlier, it would ban not only exporting but also importing AI from China, which makes no sense, making downloading R1 plausibly punishable by 20 years in prison. Exporting something similar would warrant the same. There are no FLOP, capability or cost thresholds of any kind. None.

So yes, after so much crying of wolf about how various proposals would ‘ban open source’ we have one that very straightforwardly, actually would do that, and it would also impose similar bans (with less draconian penalties) on transfers of research.

In case it needs to be said out loud, I am very much not in favor of this. If China wants to let us download its models, great, queue up those downloads. Restrictions with no capability thresholds, effectively banning all research and all models, are straight up looney tunes territory as well. This is not a bill, hopefully, that anyone seriously considers enacting into law.

By failing to pass a well-crafted, thoughtful bill like SB 1047 when we had the chance and while the debate could be reasonable, we left a vacuum. Now that the jingoists are on the case after a crisis of sorts, we are looking at things that most everyone from the SB 1047 debate, on all sides, can agree would be far worse.

Don’t say I didn’t warn you.