Now that I am tracking all the movies I watch via Letterboxd, it seems worthwhile to go over the results at the end of the year, and look for lessons, patterns and highlights.

- The Rating Scale.
- The Numbers.
- Very Briefly on the Top Picks and Whether You Should See Them.
- Movies Have Decreasing Marginal Returns in Practice.
- Theaters are Awesome.
- I Hate Spoilers With the Fire of a Thousand Suns.
- Scott Sumner Picks Great American Movies Then Dislikes Them.
- I Knew Before the Cards Were Even Turned Over.
- Other Notes to Self to Remember.
- Strong Opinions, Strongly Held: I Didn’t Like It.
- Strong Opinions, Strongly Held: I Did Like It.
- Megalopolis.
- The Brutalist.
- The Death of Award Shows.
- On to 2025.

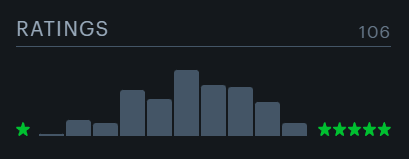

Letterboxd ratings go from 0.5-5. Here is how I interpret the rating scale.

You can find all my ratings and reviews on Letterboxd. I do revise from time to time. I encourage you to follow me there.

5: All-Time Great. I plan to happily rewatch this multiple times. If you are an adult and haven’t seen this, we need to fix that, potentially together, right away, no excuses.

4.5: Excellent. Would happily rewatch. Most people who watch movies frequently should see this movie without asking questions.

4: Great. Very glad I saw it. Would not mind a rewatch. If the concept here appeals to you, then you should definitely see it.

3.5: Very Good. Glad I saw it once. This added value to my life.

3: Good. It was fine, happy I saw it I guess, but missing it would also have been fine.

2.5: Okay. It was watchable, but actually watching it was a small mistake.

2: Bad. Disappointing. I immediately regret this decision. Kind of a waste.

1.5: Very Bad. If you caused this to exist, you should feel bad. But something’s here.

1: Atrocious. Total failure. Morbid curiosity is the only reason to finish this.

0.5: Crime Against Cinema. You didn’t even try to do the not-even-trying thing.

The key thresholds are: Happy I saw it equals 3+, and Rewatchable equals 4+.

Here is the overall slope of my ratings, across all films so far, which slants to the right because of rewatches, and is overall a standard bell curve after selection:

So here’s everything I watched in 2024 (plus the first week of 2025) that Letterboxd classified as released in 2024.

The rankings are in order, including within a tier.

The correlation of my ratings with Metacritic is 0.54, with Letterboxd it is 0.53, and the two correlate with each other at 0.9.

See The Fall Guy if you haven’t. It’s not in my top 10, and you could argue it doesn’t have the kind of depth and ambition that people with excellent taste in cinema like the excellent Film Colossus are looking for.

I say so what. Because it’s awesome. Thumbs up.

You should almost certainly also see Anora, Megalopolis and Challengers.

Deadpool & Wolverine is the best version of itself, so see it if you’d see that.

A Complete Unknown is worthwhile if you like Bob Dylan. If you don’t, why not?

Dune: Part 2 is about as good as Dune: Part 1, so your decision here should be easy.

Conclave is great drama, as long as you wouldn’t let a little left-wing sermon ruin it.

The Substance is bizarre and unique enough that I’d recommend that too.

After that, you can go from there, and I don’t think anything is a slam dunk.

This is because we have very powerful selection tools to find great movies, and great movies are much better than merely good movies.

That includes both great in absolute terms, and great for your particular preferences.

If you used reasonable algorithms to see only 1 movie a year, you would be able to reliably watch really awesome movies.

If you want to watch a movie or two per week, you’re not going to do as well. The marginal product you’re watching is now very different. And if you’re watching ‘everything’ for some broad definition of new releases, there’s a lot of dreck.

There are also decreasing returns from movies repeating similar formulas. As you gain taste in and experience with movies, some things that are cool the first time become predictable and generic. You want to mitigate this rather than lean into it, if you can.

There are increasing returns from improved context and watching skills, but they don’t make up for the adverse selection and repetition problems.

Seeing movies in theaters is much better than seeing them at home. As I’ve gotten bigger and better televisions I have expected this effect to mostly go away. It hasn’t. It has shrunk somewhat, but the focusing effects and overall experience matter a lot, and the picture and sound really are still much better.

It seems I should be more selective about watching marginal movies at home versus other activities, but I should be less selective on going to the theater, and I’ve joined AMC A-List to help encourage that, as I have an AMC very close to my apartment.

The correlation of my rating with my seeing it in a theater was 0.52, almost as strong as the correlation with others’ movie ratings.

Obviously a lot of this was selection. Perhaps all of it? My impression was that this was the result of me failing to debias the results, as my experiences in a movie theater seem much better than those outside of one.

But when I ran the correlation between [Zvi Review – Letterboxd] versus Theater, I got -0.015, essentially no correlation at all. So it seems like I did adjust properly for this, or others did similar things to what I did, perhaps. It could also be the two AI horror movies accidentally balancing the scales.
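If you want to run the same kind of check on your own log, here is a minimal sketch in Python. The rows are made-up placeholders, not my actual ratings, and the names `pearson` and `theater_correlations` are just ones picked for illustration. It computes the raw correlation between rating and theater viewing, then the correlation between the rating gap (mine minus Letterboxd) and theater viewing.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one input is constant")
    return cov / math.sqrt(var_x * var_y)

def theater_correlations(rows):
    """rows: (my_rating, letterboxd_average, seen_in_theater) tuples.

    Returns (raw, residual): rating vs. theater, and
    (my_rating - letterboxd_average) vs. theater.
    """
    mine = [r[0] for r in rows]
    theater = [1.0 if r[2] else 0.0 for r in rows]
    gap = [r[0] - r[1] for r in rows]
    return pearson(mine, theater), pearson(gap, theater)

# Placeholder data for illustration only (not real ratings).
example_rows = [
    (4.5, 3.9, True),
    (4.0, 3.6, True),
    (3.5, 3.8, False),
    (3.0, 3.1, False),
    (2.0, 2.4, False),
    (1.5, 2.9, True),
]

raw, residual = theater_correlations(example_rows)
print(f"rating vs. theater: {raw:.2f}")
print(f"(mine - Letterboxd) vs. theater: {residual:.2f}")
```

The second number is the one that speaks to the debiasing question, since it asks whether theater viewing predicts how far I land from the crowd rather than how high I rate.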

I also noticed that old versus new on average did not make a difference, once you included rewatches. I have a total of 106 reviews, 34 of which are movies from 2024. The average of all reviews for movies not released in 2024, which involved a much lower ratio of seeing them in theaters, is 3.11, versus 3.10 for 2024.

The caveat is that this included rewatches where I already knew my opinion, so newly watched older movies at home did substantially worse than this.

I hate spoilers so much that I consider the Metacritic or Letterboxd rating a spoiler.

That’s a problem. I would like to filter with it, and otherwise filter on my preferences, but I don’t actually want to know the relevant information. I want exactly one bit of output, either a Yes or a No.

It occurs to me that I should either find a way to make an LLM do that, or a way to make a program (perhaps plus an LLM) do that.

I’ve had brief attempts at similar things in the past with sports and they didn’t work, but that’s a place where trying and failing to get info is super dangerous. So this might be a better place to start.
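Here is a rough sketch of the program half of that idea, in Python. Everything in it is an assumption for illustration: the `FilmSignals` fields, mapping Metacritic onto the Letterboxd scale by dividing by 20, averaging the two, and the optional `expected_delta` for personal taste. The default threshold of 3.0 is borrowed from my ‘Happy I saw it equals 3+’ line. The point is only that the scores go in and a single Yes or No comes out, with nothing else printed.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FilmSignals:
    metacritic: float            # critic score on a 0-100 scale
    letterboxd: float            # average rating on a 0.5-5 scale
    expected_delta: float = 0.0  # hypothetical personal adjustment, 0.5-5 scale

def should_watch(signals: FilmSignals, threshold: float = 3.0) -> str:
    """Return exactly one bit of output: "Yes" or "No".

    The blended score is computed internally and never returned,
    so the caller does not get spoiled by the underlying ratings.
    """
    metacritic_on_lb_scale = signals.metacritic / 20.0  # assumed mapping
    blended = (metacritic_on_lb_scale + signals.letterboxd) / 2.0
    blended += signals.expected_delta
    return "Yes" if blended >= threshold else "No"

# Usage: in practice the signals would be fetched without being displayed.
print(should_watch(FilmSignals(metacritic=91, letterboxd=4.1)))
```

Fetching the ratings without seeing them is the part this leaves out, and that is where an LLM or a small scraper would have to come in.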

I also don’t know what to do about the problem that when you have ‘too much taste’ or knowledge of media, this often constitutes a spoiler – there’s logically many ways a story could go in reality, but in fiction you realize the choice has been made. Or you’re watching a reality TV show, where the editors know the outcome, so based on their decisions you do too. Whoops. Damn it. One of the low-key things I loved about UnReal was that they broadcast their show-within-a-show Everlasting as it happens, so the editors of each episode do not know what comes next. We need more of that.

I saw five movies Scott Sumner also watched. They were five of the eight movies I rated 4 or higher. All platinum hits. Super impressive selection process.

He does a much better job here than Metacritic or Letterboxd.

But on his scale, 3 means a movie is barely worth watching, and his average is ~3.

My interpretation is that Scott really dislikes the traditional Hollywood movie. His reviews, especially of Challengers and Anora, make this clear. Scott is always right, in an important sense, but a lot of what he values is different from what I value, and he prizes a movie being different from what he expects.

My conclusion is that if Scott Sumner sees a Hollywood movie, I should make an effort to see it, even if he then decides he doesn’t like it, and I should also apply that to the past.

I did previously make a decision to try and follow Sumner’s reviews for other movies. Unfortunately, I started with Chimes at Midnight, and I ended up giving up on it. I couldn’t bring myself to care despite Scott giving it 4.0 and saying ‘Masterpiece on every level, especially personally for Wells.’ I suspect it’s better if one already knows the Shakespeare? I do want to keep trying, but I’ll need to use better judgment.

I considered recording my predictions for films before I went to see them.

I did not do this, because I didn’t want to anchor myself. But when I look back and ask what I was expecting, I notice my predictions were not only good, but scary good.

I’ve learned what I like, and what signals to look for. In particular, I’ve learned how to adjust the Metacritic and Letterboxd rankings based on the expected delta.

When I walked into The Fall Guy, I was super excited – the moment I saw the poster I instantly thought to myself ‘I’m in.’ I knew Megalopolis was a risk, but I expected to like that too.

The movies that I hated? If I expected to not like them, why did I watch them anyway? In many cases, I wanted to watch a generic movie and relax, and then missed low a bit, even if I was sort of fine with it. In other cases, it was morbid curiosity and perhaps hoping for So Bad It’s Good, which combined with ‘it’s playing four blocks away’ got me to go to Madame Web.

The worry is that the reason this happens is I am indeed anchoring, and liking what I already decided to like. There certainly isn’t zero of this – if you go into a movie super pumped thinking it’s going to be great that helps, and vice versa. I think this is only a small net effect, but I could be wrong.

- If the movie stinks, just don’t go. You know if the movie stinks.
- Trust your instincts and your gut feelings more than you think you should.
- Maybe gut feelings are self-fulfilling prophecies? Doesn’t matter. They still count.
- You love fun, meta, self-aware movies of all kinds, trust this instinct.
- You do not actually like action movies that play it straight, stop watching them.
- If the movie sounds like work or pain, it probably is, act accordingly.
- If the movie sounds very indie, the critics will overrate it.
- A movie being considered for awards is not a positive signal once you control for the Metacritic and Letterboxd ratings. If anything it is a negative.
- Letterboxd ratings adjusted for context are more accurate than Metacritic.
- Opinions of individuals very much have Alpha if you have enough context.

What are the places I most strongly disagreed with the critical consensus?

I disliked three movies in green on Metacritic: Gladiator 2, Monkey Man and Juror #2.

I think I might be wrong about Monkey Man, in that I buy that it’s actually doing a good job at the job it set out to do, but simply wasn’t for me, see the note that I need to stop watching (non-exceptional) action movies that play it straight.

I strongly endorse disliking Gladiator 2 on reflection. Denzel Washington was great but the rest of the movie failed to deliver on pretty much every level.

I’m torn on Juror #2. I do appreciate the moral dilemmas it set up. I agree they’re clever and well-executed. I worry this was a case where I have seen so many courtroom dramas, especially Law & Order episodes, that there was too much of a Get On With It impatience – that this was a place where I had too much taste of the wrong kind to enjoy the movie, especially when not at a theater.

The moments this is building towards? Those hit hard. They work.

The rest of the time, though? Bored. So bored, so often. I know what this movie thinks those moments are for. But there’s no need. This should have been closer to 42 minutes.

I do appreciate how this illustrates the process where the system convicts an innocent man. Potentially more than one. And I do appreciate the dilemmas the situation puts everyone in. And what this says about what, ultimately, often gets one caught, and what is justice. There’s something here.

But man, you got to respect my time more than this.

One could also include Civil War. I decided that this was the second clear case (the first was Don’t Look Up) of ‘I don’t want to see this and you can’t make me,’ so I didn’t see it, and I’m happy with at least waiting until after the election to do that.

I actively liked four movies that the critics thought were awful: Subservience, Joker: Folie à Deux, Unfrosted and of course Megalopolis.

For Subservience, and also for Afraid, I get that on a standard cinema level, these are not good films. They pattern match to C-movie horror. But if you actually are paying attention, they do a remarkably good job with the actual AI-related details, and at being logically consistent. I value that highly. So I don’t think either of us is wrong.

There’s a reason Subservience got a remarkably long review from me:

The sixth law of human stupidity says that if anyone says ‘no one would be so stupid as to,’ then you know a lot of people would do so at the first opportunity.

People like to complain about the idiot ball and the idiot plot. Except, no, this is exactly the level of idiot that everyone involved would be, especially the SIM company.

If you want to know how I feel when I look at what is happening in the real world, and what is on track to happen to us? Then watch this movie. You will understand both how I feel, and also exactly how stupid I expect us to be.

No, I do not think that if we find the AI scheming against us then we will even shut down that particular AI. Maybe, if we’re super lucky?

The world they built has a number of obvious contradictions in it, and should be long dead many times over before the movie starts or at least utterly transformed, but in context I am fine with it, because it is in service of the story that needs to be told here.

The alignment failure here actually makes sense, and the capabilities developments at least sort of make sense as well if you accept certain background assumptions that make the world look like it does. And yes, people have made proposals exactly this stupid, that fail in pretty much exactly this way, exactly this predictably.

Also, in case you’re wondering why ‘protect the primary user’ won’t work, in its various forms and details? Now you know, as they say.

And yeah, people are this bad at explaining themselves.

In some sense, the suggested alignment solution here is myopia. If you ensure your AIs don’t do instrumental convergence beyond the next two minutes, maybe you can recover from your mistakes? It also of course causes all the problems, the AI shouldn’t be this stupid in the ways it is stupid, but hey.

Of course, actual LLMs would never end up doing any of this, not in these ways, unless perplexity suggested to them that they were an AI horror movie villain or you otherwise got them into the wrong context.

Also there’s the other movie here, which is about technological unemployment and cultural reactions to it, which is sadly underdeveloped. They could have done so much more with that.

Anyway, I’m sad this wasn’t better – not enough people will see it or pay attention to what it is trying to tell them, and that’s a shame. Still, we have the spiritual sequel to M3GAN, and it works.

Finally (minor spoilers here), it seems important that the people describing the movie have no idea what happened in the movie? As in, if you look at the Metacritic summary… it is simply wrong. Alice’s objective never changes. Alice never ‘wants’ anything for herself, in any sense. If anything, once you understand that, it makes it scarier.

Unfrosted is a dumb Jerry Seinfeld comedy. I get that. I’m not saying the critics are wrong, not exactly? But the jokes are good. I laughed quite a lot, and a lot more than at most comedies or than I laughed when I saw Seinfeld in person and gave him 3.5 GPTs – Unfrosted gets at least 5.0 GPTs. Live a little, everyone.

I needed something to watch with the kids, and this overperformed. There are good jokes and references throughout. This is Seinfeld having his kind of fun and everyone having fun helping him have it. Didn’t blow me away, wasn’t trying to do so. Mission accomplished.

Joker: Folie à Deux was definitely not what anyone expected, and it’s not for everyone, but I stand by my review here, and yes I have it slightly above Wicked:

You have to commit to the bit. The fantasy is the only thing that’s real.

Beware audience capture. You are who you choose to be.

Do not call up that which you cannot put down.

A lot of people disliked the ending. I disagree in the strongest terms. The ending makes both Joker movies work. Without it, they’d both be bad.

With it, I’m actively glad I saw this.

I liked what Film Colossus said about it, that they didn’t like the movie but they really loved what it was trying to do. I both loved what it was trying to do and kinda liked the movie itself, also I brought a good attitude.

For Megalopolis, yes it’s a mess, sure, but it is an amazingly great mess with a lot of the right ideas and messages, even if it’s all jumbled and confused.

If you don’t find a way to appreciate it, that is on you. Perhaps you are letting your sense of taste get in the way. Or perhaps you have terrible taste in ideas and values? Everyone I know of who said they actively liked this is one of my favorite people.

This movie is amazing. It is endlessly inventive and fascinating. Its heart, and its mind, are exactly where they need to be. I loved it.

Don’t get me wrong. The movie is a mess. You could make a better cut of it. There are unforced errors aplenty. I have so, so many notes.

The whole megalopolis design is insufficiently dense and should have been shoved out into the Bronx or maybe Queens.

But none of that matters compared to what you get. I loved it all.

And it should terrify you that we live in a country that doesn’t get that.

Then there’s The Brutalist, which the critics think is Amazingly Great (including Tyler Cowen here). Whereas ultimately I thought it was medium, on the border between 3 and 3.5, and I’m not entirely convinced my life is better because I saw it.

So the thing is… the building is ugly? Everything he builds is ugly?

That’s actually part of why I saw the film – I’d written a few weeks ago about how brutalist/modern architecture appears to be a literal socialist conspiracy to make people suffer, so I was curious to see things from their point of view. We get one answer about ‘why architecture’ and several defenses of ‘beauty’ against commercial concerns, and talk about standing the test of time. And it’s clear he pays attention to detail and cares about the quality of his work – and that technically he’s very good.

But. The. Buildings. Are. All. Ugly. AF.

He defends concrete as both cheap and strong. True enough. But it feels like there’s a commercial versus artistic tension going the other way, and I wish they’d explored that a bit? Alas.

Instead they focus on what the film actually cares about, the Jewish immigrant experience. Which here is far more brutalist than the buildings. It’s interesting to see a clear Oscar-bound film make such a robust defense of Israel, and portray America as a sick, twisted, hostile place for God’s chosen people, even when you have the unbearable weight of massive talent.

Then there’s the ending. I mouthed ‘WTF?’ more than once, and I still have no idea WTF. In theory I get the artistic choice, but really? That plus the epilogue and the way that was shot, and some other detail choices, made me think this was about a real person. But no, it’s just a movie that decided to be 3.5 hours long with an intermission and do slice-of-hard-knock-life things that didn’t have to go anywhere.

Ultimately, I respect a lot of what they’re doing here, and that they tried to do it at all, and yes Pearce and Brody are great (although I don’t think I’d be handing out Best Actor here or anything). But also I feel like I came back from an assignment.

Since I wrote that, I’ve read multiple things and had time to consider WTF, and I understand the decision, but that new understanding of the movie makes me otherwise like the movie less and makes it seem even more like an assignment. Contra Tyler I definitely did feel like this was 3.5 hours long.

I do agree with many of Tyler’s other points (including ‘recommended, for some’!) although the Casablanca angle seems like quite a stretch.

One detail I keep coming back to, that I very much appreciate and haven’t seen anyone else mention, is the scene where he is made to dance, why it happens and how that leads directly to other events. I can also see the ‘less interesting’ point they might have been going for instead, and wonder if they knew what they were doing there.

My new ultimately here is that I have a fundamentally different view than the movie does of most of its key themes, and that made it very difficult for me to enjoy it. When he puts that terrible chair and table in the front of the furniture store, I don’t think ‘oh he’s a genius’ I think ‘oh what a pretentious arse, that’s technically an achievement but in practice it’s ugly and non-functional, no one will want it, it can’t be good for business.’

It’s tough to enjoy watching a (highly brutal in many senses, as Tyler notes!) movie largely about someone being jealous of and wanting the main character’s talent when you agree he’s technically skilled but centrally think his talents suck, and when you so strongly disagree with its vision, judgment and measure of America. Consider that the antagonist is very clearly German. The upside-down Statue of Liberty tells you a lot.

We’ve moved beyond award shows, I think, now that we have Metacritic and Letterboxd, if your goal is to find the best movies.

In terms of the Oscars and award shows, I’ll be rooting for Anora, but wow the awards process is dumb when you actually look at it. Knowing what is nominated, or what won, no longer provides much alpha on movie quality.

Giving the Golden Globe for Best Musical or Comedy to Emilia Perez (2.8 on Letterboxd, 71 on Metacritic) over Anora (4.1 on Letterboxd, 91 on Metacritic) or Challengers tells you that they cared about something very different from movie quality.

There were and have been many similar other such cases, as well, but that’s the one that drove it home this year – it’s my own view, plus the view of the public, plus the view of the critics when they actually review the movies, and they all got thrown out the window.

Your goal is not, however, purely to find the best movies.

Robin Hanson: The Brutalist is better than all these other 2024 movies I’ve seen: Anora, Emilia Perez, Wicked, Conclave, Dune 2, Complete Unknown, Piano Lesson, Twisters, Challengers, Juror #2, Megalopolis, Civil War. Engaging, well-made, but not satisfying or inspiring.

Tyler Cowen: A simple question, but if this is how it stands why go see all these movies?

Robin Hanson: For the 40 years we’ve been together, my wife & I have had a tradition of seeing most of the Oscar nominated movies every year. Has bonded us, & entertained us.

I like that tradition, and have tried at times a similar version of it. I think this made great sense back in the 1990s, or even 2000s, purely for the selection effects.

Today, you could still say do it to be part of the general conversation, or as tradition. And I’m definitely doing some amount of ‘see what everyone is likely to talk about’ since that is a substantial bonus.

But I think we’d do a lot better if the selection process was simply some aggregate of Metacritic, Letterboxd and (projected followed by actual) box office. You need box office, because you want to avoid niche movies that get high ratings from those that choose to watch them, but would do much less well with a general audience.
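As a sketch of what such an aggregate could look like, here is a short Python example. The weights, the log scaling of box office, and the one-billion-dollar cap are all assumptions made up for illustration, not a tuned formula, and the two films are hypothetical.

```python
import math

def aggregate_score(metacritic, letterboxd, box_office_usd,
                    weights=(0.4, 0.4, 0.2), box_office_cap=1e9):
    """Blend critic score, audience rating and box office into a 0-1 score.

    metacritic is 0-100, letterboxd is 0.5-5, box_office_usd is a dollar
    figure (projected or actual). Weights and cap are assumed values.
    """
    mc = metacritic / 100.0
    lb = letterboxd / 5.0
    # Log scale so a blockbuster does not swamp the ratings; clamp to [0, 1].
    bo = math.log10(max(box_office_usd, 1.0)) / math.log10(box_office_cap)
    bo = min(max(bo, 0.0), 1.0)
    w_mc, w_lb, w_bo = weights
    return w_mc * mc + w_lb * lb + w_bo * bo

def rank_films(films):
    """films: dict of title -> (metacritic, letterboxd, box_office_usd)."""
    return sorted(films, key=lambda title: aggregate_score(*films[title]),
                  reverse=True)

# Hypothetical films: a niche favorite versus a broadly seen crowd-pleaser.
example_films = {
    "Niche Darling (hypothetical)": (85, 4.1, 5e5),
    "Crowd Pleaser (hypothetical)": (82, 4.0, 3e8),
}

for title in rank_films(example_films):
    print(title, round(aggregate_score(*example_films[title]), 3))
```

With these made-up numbers the box office term is enough to move the widely seen film ahead of the niche one despite slightly lower ratings, which is the effect the box office component is there to produce.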

I definitely plan on continuing to log and review all the movies I see going forward. If you’re reading this and think I or others should consider following you there, let me know in the comments, you have permission to pitch that, or to pitch as a more general movie critic. You are also welcome to make recommendations, if they are specifically for me based on the information here – no simply saying ‘I thought [X] was super neat.’

Tracking and reviewing everything has been a very useful exercise. You learn a lot by looking back. And I expect that feeding the data to LLMs will allow me to make better movie selections not too long from now. I highly recommend it to others.