AI developments have picked up the pace. That does not mean that everything else stopped to get out of the way. The world continues.

Do I have the power?

Emmett Shear speaking truth: Wielding power is of course potentially dangerous and it should be done with due care, but there is no virtue in refusing the call.

There is also an art to avoiding power, and some key places to exercise it. Be keenly aware of when having power in a given context would ruin everything.

Eliezer Yudkowsky reverses course, admits aliens are among us and we have proof.

Eliezer Yudkowsky: To understand the user interfaces on microwave ovens, you need to understand that microwave UI designers are aliens. As in, literal nonhuman aliens who infiltrated Earth, who believe that humans desperately want to hear piercingly loud beeps whenever they press a button.

One junior engineer who hadn’t been taken over and was still actually human, suggested placing a visible on-off switch for turning the sound off — for example, in case your spouse or children were sleeping, and you didn’t want to wake them up. That junior engineer was immediately laughed off the team by senior aliens who were very sure that humans wanted to hear loud screaming beeps every time they pressed buttons. And furthermore sure that, even if anyone didn’t want their microwave emitting piercingly loud beeps at 4am, they would be perfectly happy to look up a complicated set of directions for how to turn the sound on or off, rather than needing a visible on-off switch. And even if any humans had trouble remembering that, they’d be much rarer than humans who couldn’t figure out how to set the timer for popcorn without a clearly labeled “Popcorn” button, which does a different random thing in every brand of microwave oven. There’s only so much real estate in a microwave control panel; it’s much more important to have an inscrutable button that says “Potato”, than a physical switch that turns the sound off (and which stays in the same position after power cycles, and which can be inspected to see if the sound is currently off or on).

This is the same species of aliens that thinks humans want piercing blue lights to shine from any household appliance that might go in somebody’s bedroom at night, like a humidifier. They are genuinely aghast at the thought that anyone might want an on-off switch for the helpful blue light on their humidifier. Everyone likes piercing blue LEDs in their bedroom! When they learned that some people were covering up the lights with black tape, they didn’t understand how anybody could accidentally do such a horrible thing — besides humans being generally stupid, of course. They put the next generation of humidifier night-lights underneath translucent plastic set into the power control — to make sure nobody could cover up the helpful light with tape, without that also making it impossible to turn the humidifier on or off.

Nobody knows why they insist on hollowing out and inhabiting human appliance designers in particular.

Mark Heyer: A nice rant Eliezer, one that I would subscribe to, having been in the information design business. However, I have an interesting counter-example of how to fix the problem.

In the 90s I worked at a rocketship internet startup in SV, providing services and products nationwide. As the internet people were replaced with suits, my boss, a tough ex-Marine, called me into his office and asked what my future was with the company. I channeled Steve Jobs and told him that I wanted to look at everything we did as a company and make it right for the users. He pounded his fist on the desk and said “Make it happen!”

After that I was called into every design and process meeting to certify that what they were doing was right for the users. The Ah-so moment was finding that the engineers and designers knew that those blue lights sucked – but were ruled by the real aliens, the suits above, who didn’t know or care about customers. I empowered them to make the right decisions and it turned out that everyone in the company supported my mission.

So it can be fixed. All it takes is a leader to establish the mandate. And to point out that happy customers buy more of their products.

United Airlines gives up on the Boeing Max 10 after sufficiently long delays, accepts some Max 9s and starts looking to Airbus. Boeing is looking more and more like a zombie company, and an Odd Lots episode recently drove the point home as well. They look entirely captured by consultant types who have no intention of ever building anything, and by the time they decide to try, no one left will know how.

I saw a claim that Russia can deanonymize Telegram accounts but the link has since been deleted by its source.

Russian documents show high willingness to use tactical nuclear weapons in various circumstances. The obvious thing to point out is the possibility that they are lying. Whether or not you intend to use nuclear weapons in various situations, if you were Russia, wouldn’t you want everyone else to think that you were going to do so?

Red Sea continues to be de facto blockaded, America unwilling or unable to do anything about it.

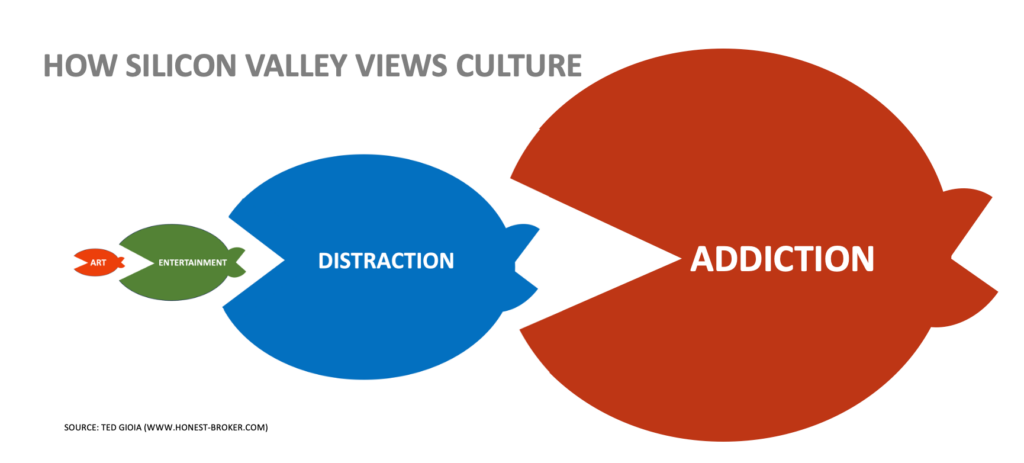

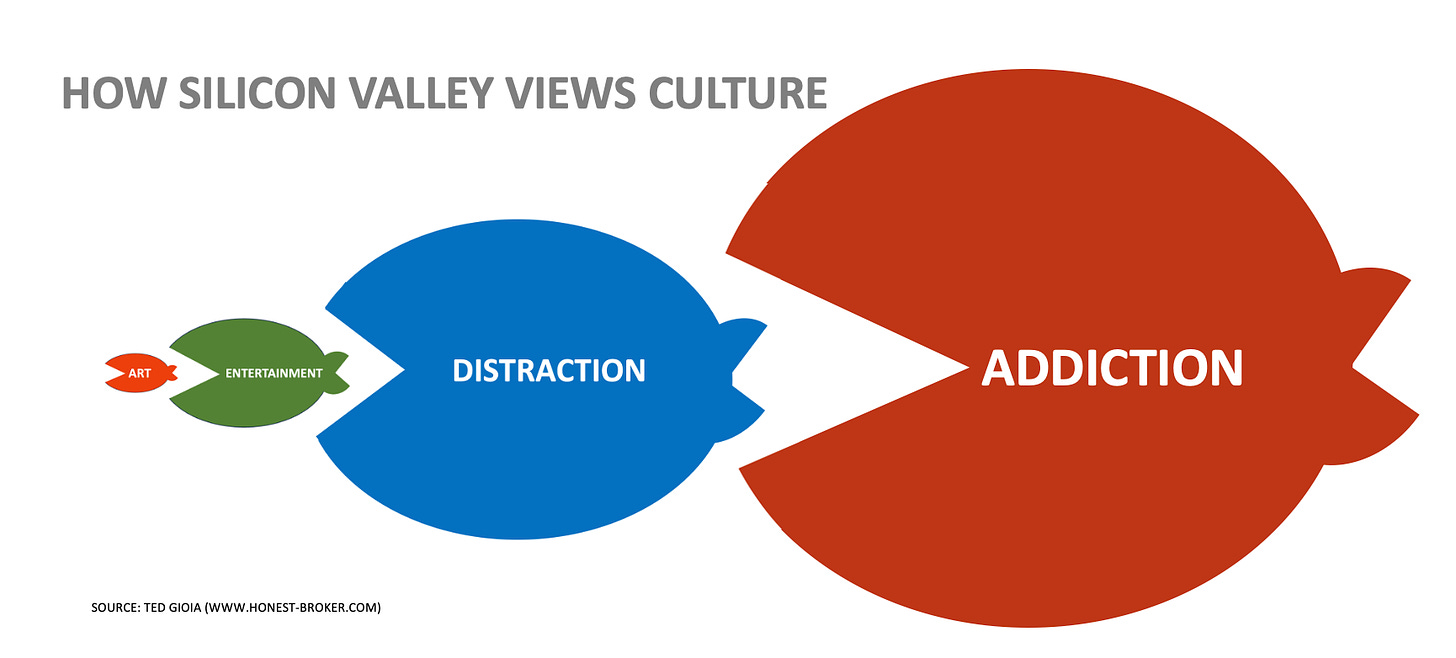

Honest Broker Ted Gioia sees us moving not only from Art to Entertainment, but then to Distraction and finally Addiction, a total victory for ‘dopamine culture,’ which is crowding out traditional slower activities. As usual with such critiques, not all the details resonate, but the overall message hits the mark.

The illustrations here are excellent:

I think it is very good advice to essentially never be on the true, de facto right side of this graph for most items. You want to be on the left as often as you can, and spend most of the rest of the time in the center.

I suspect part of the issue is the conflation of old and traditional with slow, and new and modern with fast. There is a correlation, but it is incomplete.

Were newspapers slow culture? In some sense yes, you sat down and focused on them, they were part of a morning ritual. But also they were kind of clickbait in print form quite a lot of the time. I would say that reading books, or in-depth blogs, constitutes much more of the long-term-better slow form of journalism here.

I would say something similar about communication. Handwritten letters are slow, but that is a bug rather than a feature. Good slow communication is talking in person, or through longform or careful writing. Which certainly can include email. Voice communication is quick in some senses, but is doing the thing that counts, I think. This is also the place where quick short bursts sometimes make sense, and you want a mix throughout.

On video, I see film alone as the slow thing, TV as the fast thing, and other much shorter things as the dopamine thing. Similarly, shouldn’t music’s left be live music? Or more likely there are four levels, not every graph fits every concept. For images, there’s view on phone versus view on a computer or TV screen. Details matter.

For sports, gambling very much depends on what exactly you are doing. If you are playing fantasy sports and counting stats, or betting on the next play, yeah, that’s dopamine. Whereas classic slower gambling is often if anything slower and more participatory than merely watching, rather than less.

In any case, yes, we all constantly hear the calls for slower modes, slower living, unplugging periodically and all that. And we all know those calls are largely right. Then most of us keep ignoring them.

Dan Luu on how scale is effectively the enemy, rather than the friend, of good customer service and ability to fight scams and other spam. I buy his model here. Smaller sites and services can and do invest in bespoke service and in things that don’t scale such as one dedicated employee reading everything or tracking down the bad guys. Whereas at scale, these companies do not invest the same way, and what they do is forced to focus on legibility and consistency and following rules.

As their scale also scales the rewards to attacks and as their responses get worse, the attacks become more frequent. That leads to more false positives, and a skepticism that any given case could be one of them. In practice, claims like Zuckerberg’s that only the biggest companies like Meta can invest the resources to do good content moderation are clearly false, because scale reliably makes content moderation worse.

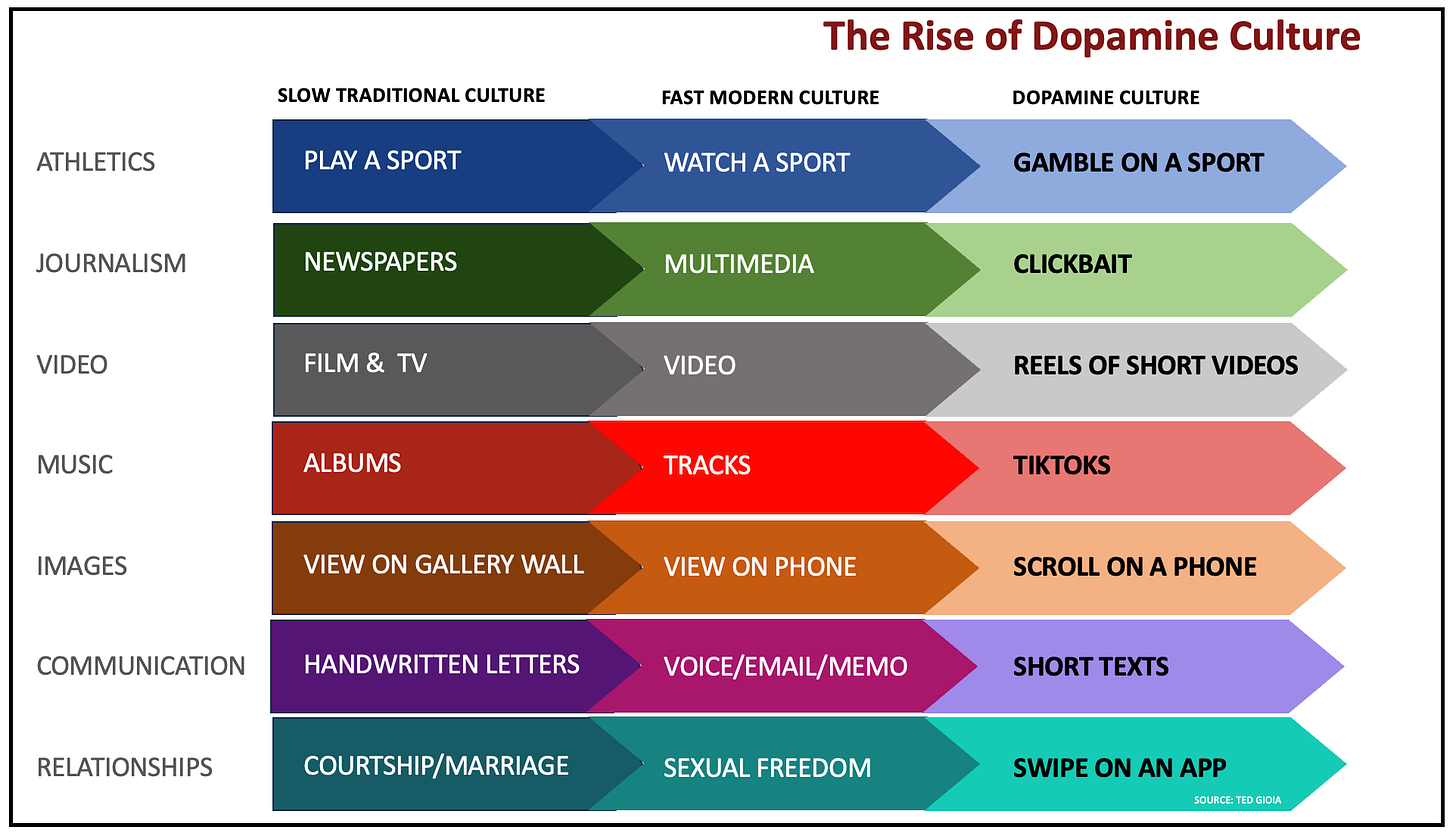

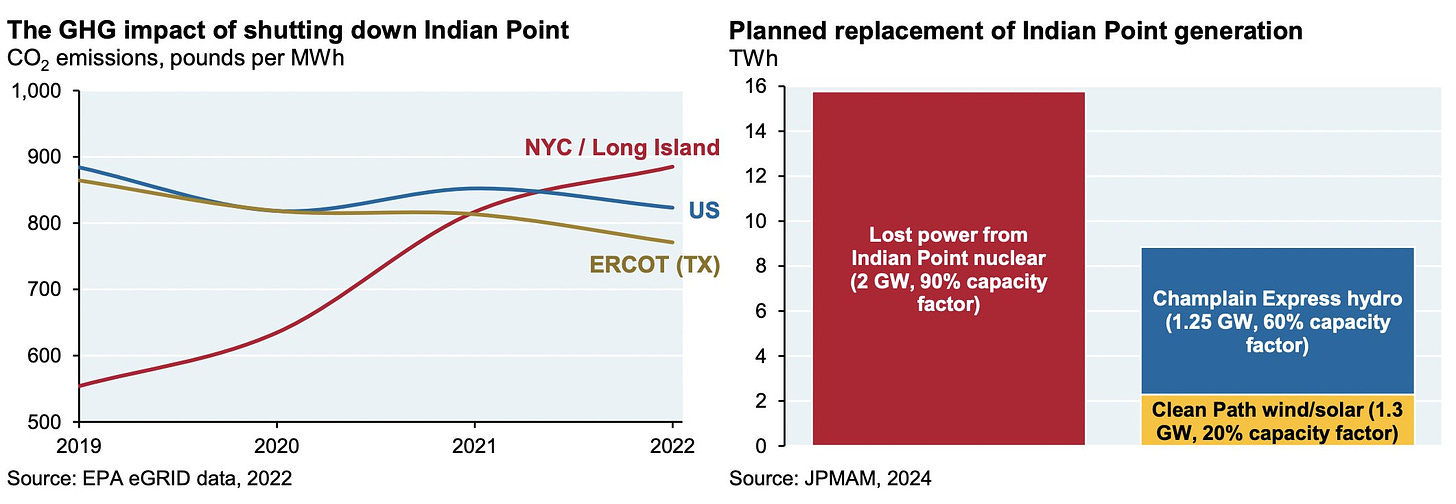

In case you were wondering what New York is doing to replace its nuclear power, well, it is going better than it did in Germany, at least we are using natural gas:

Oh. Right. That.

Josh Barro: Politicians love to complain about airline fees. But they also wrote a tax code that applies a 7.5% excise tax to airfares but not to fees, encouraging airlines to charge fees instead of fares.

We could reverse this. Let’s instead charge airlines 25% on all fees and 2.5% on the fares themselves. Then there will be proper tension between ‘get listed first and look cheaper than you are’ versus ‘save money on taxes.’
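To make the incentive concrete, here is a minimal sketch. The rates are the ones above; the $300 ticket and the $60 of fees are made-up numbers for illustration, not anything from Barro or the tax code.

```python
# Illustrative only: the ticket prices below are hypothetical.
# Rates from the text: 7.5% on fares and 0% on fees today,
# versus a proposed 2.5% on fares and 25% on fees.

def excise_tax(fare, fees, fare_rate, fee_rate):
    """Tax owed on one ticket, given how the price is split."""
    return fare * fare_rate + fees * fee_rate

CURRENT = {"fare_rate": 0.075, "fee_rate": 0.0}
PROPOSED = {"fare_rate": 0.025, "fee_rate": 0.25}

# Two hypothetical ways to charge the same $300 total.
bundled = {"fare": 300.0, "fees": 0.0}
unbundled = {"fare": 240.0, "fees": 60.0}

for name, regime in (("current", CURRENT), ("proposed", PROPOSED)):
    tax_bundled = excise_tax(**bundled, **regime)
    tax_unbundled = excise_tax(**unbundled, **regime)
    print(f"{name}: bundled ${tax_bundled:.2f}, unbundled ${tax_unbundled:.2f}")
```

With these made-up numbers, moving $60 from fare to fees saves $4.50 in tax today, and would cost an extra $13.50 under the reversed rates. The sign of the incentive flips, which is the whole point.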

FTC attempts to block merger of Kroger and Albertsons in part because of antitrust concerns regarding ‘union grocery labor.’ I mean, wow.

EPA bans asbestos. Good. Wait, what? They hadn’t banned it before? I got Gemini to agree that this was crazy zero-shot, which is not a label it likes putting on things.

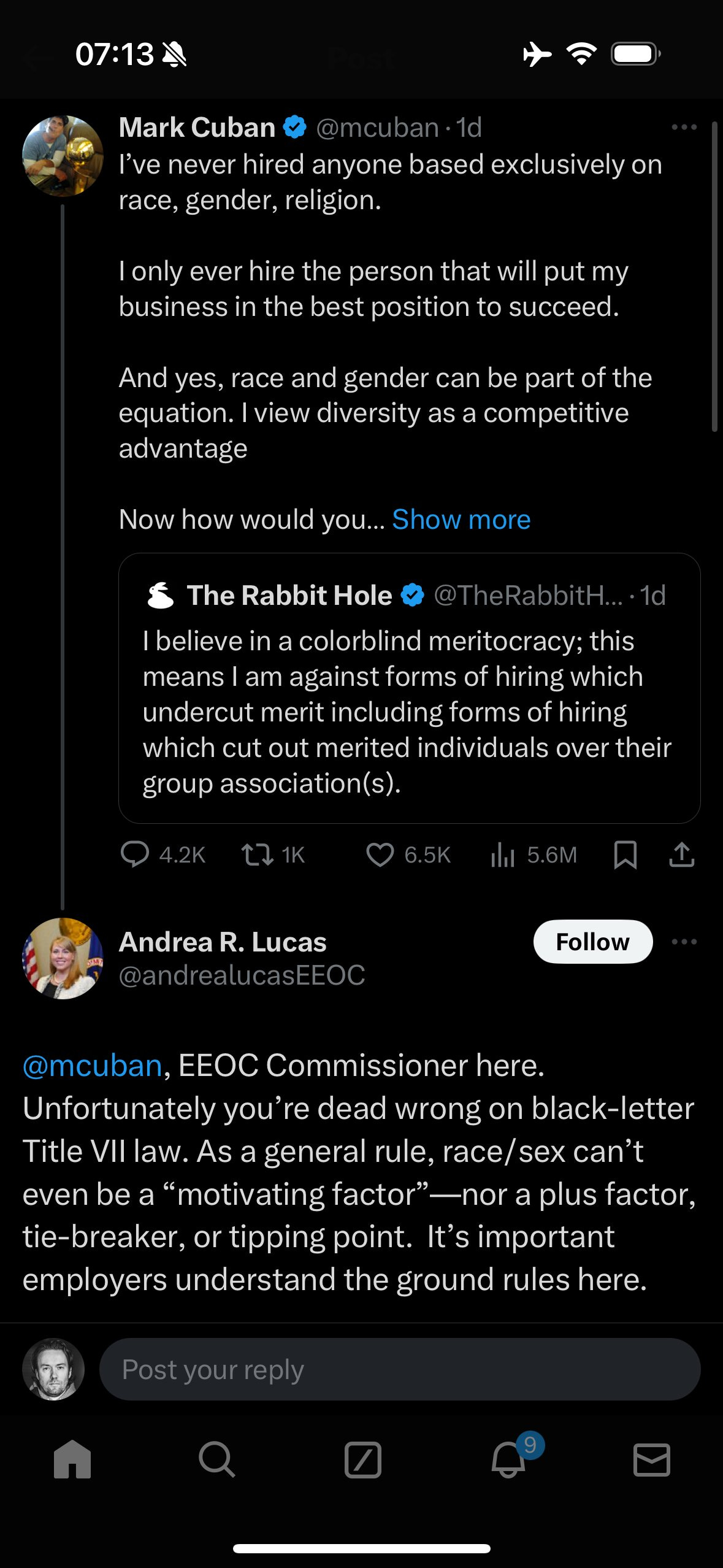

It sure seems like a commissioner of the EEOC is explicitly saying that race and sex can’t be any kind of factor in hiring, and that lots of corporate DEI initiatives are very much violating Title VII?

DHH: How does corporate DEI in America proceed after getting such a clear notice that using race or sex as a “motivating factor” in hiring decisions is plainly illegal?

And given this, you’d think that Corporate America just opened itself up to the mother of all discrimination suits on the basis of its 2021 hiring drive. Will be fascinating to see how this plays out in the class-action lawsuits that’ll surely follow.

If that is true, it sure seems like there are a lot of Title VII violations going on? For example, here’s the recent story about air traffic controller hiring being filtered through a ‘biographical test,’ although the practice is no longer ongoing since it has been banned more generally. Were sufficiently obfuscated proxies used that the government’s actions are plausibly legal here, regardless of the obvious admitted motivations, although the lawsuit here is real and ongoing? I have to assume that Lucas is wrong in practice, and that such laws will almost never be applied to actions like those Cuban suggests. And indeed I cannot think of a single case where a civil action was successful in such a case, despite many clear examples of such actions?

Also the government’s actions in the FAA case seem at first glance to have been rather corrupt as hell. Give out a ‘biographical test’ that eliminates 90% or more of candidates on the basis of questions highly unrelated to prospective job performance, and have favored groups get explicit instructions on what answers to give on the test to lie their way past it?

Washington State Supreme Court rules the bar exam no longer a requirement to practice law, cites impact on ‘marginalized groups.’ I am all for ending or easing occupational licensing, but the courts imposing the change on this basis seems kind of insane. Either the alternative process can verify someone can safely practice law or you rightfully decide this should be the client’s problem in a free market, in which case you should do it anyway, or it doesn’t and you think it shouldn’t, so you shouldn’t make the change.

I worry that there are essentially no rating systems or other ways to verify you have found a decent lawyer (presumably because lawyers work to prevent this in various ways), and so the first question new prospective clients will ask is ‘did you pass the bar?’ because it is at least a legible question. So lawyers will effectively have to pass the bar anyway to get private clients, and those who can’t end up as public defenders and prosecutors. That doesn’t sound great.

San Francisco spends $34 million on custom payroll system, which inevitably does not work, and they are ‘ready to start over.’

Patrick McKenzie: Have they considered giving up and starting over with another workforce given a much simpler compensation structure? Because writing a computer program whose true requirements are intentionally designed to be impossible to write down is extremely non-trivial.

I once again propose that we dramatically raise base pay for various government workers such as police, in exchange for getting rid of the various insane rules and loopholes they use to get far more pay than we allocate, while keeping average total pay similar. We would recruit far better, both in quality and quantity, by doing so, and elicit much better behaviors with far lower deadweight loss. Are we this obsessed with a low headline number?

That thread includes this graphic, which likely requires periodic reminders:

Government working depends partly on @Ringwiss, a Twitter account that has mastered parliamentary procedure and history, and will answer your questions with lightning speed. It is run by a 20-year-old economics student named Kacper Surdy.

You really can be the best if you actually read the materials and do your work.

Of course, no one pays for this, and keeping it free is vital if it is going to deliver its value. So flagging this now. Once he graduates, EAs or others need to give him a full time job doing this as a public resource.

Prospera forces you to choose whose laws you are subject to, and you must choose from the OECD countries. The good news is you can mix and match, which means there are often things like medical procedures that can be done in Prospera that cannot be done anywhere else. There still seems like so much you are unable to do, that it would be great if someone were able to do it.

Utah doing the whole age verification requirement thing over again, despite the problems of ‘no way to actually do it’ and also ‘blatantly unconstitutional.’ Sigh. As usual, the arguments against seem to be overreaching, but also the bill is really terrible.

Free speech under attack in Canada, Ireland and the UK. Here too, although the first amendment helps a lot. We should be so thankful we have it.

Details available elsewhere but the Chips Act is failing to produce chips due to all the requirements it imposes on anyone seeking to produce chips.

What would happen if we banned the same cookie pop-ups that the EU makes mandatory?

Abe Murray: I hate regulation but …. hear me out on this one. The US *must* make it illegal to show those stupid cookie popups all over the web. We can’t allow the EU to export its stupid paternalistic pollution to us here in the land of freedom.

How much has this cost us in lost time and attention as a society? The milliseconds must add up to weeks of lost productivity across millions of people.

Plus there are the 2nd order effects of teaching a generation of humans that stupid ineffective regulations are an ok thing and should be quietly accepted. That is a massive negative cost.

Casey Handmer: I would become a single issue voter on this issue. It is unacceptable that we allow the Internet to be polluted by insanely indifferently stupidly ineffective “regulatory” regimes beyond our borders. Normalize the expectation that regulators are accountable to their users!

It’s terrible, everyone knows it’s terrible, and it will never ever be fixed.

I would not go single-issue or anything but I would be strongly in favor. On this issue, Europe is obviously in the wrong and I have never heard an actual human argue otherwise. Sometimes all sides of other debates can come together.

Apple being forced to dismantle many of the safeguards of the iPhone ecosystem under orders of the EU via the DMA. Everyone is trying to force them to make all sorts of changes. They are no longer allowed to verify that apps work before letting them into the store. They are being asked if they are going to do ‘forced scrolling’ to allow competitors to be seen, order of apps shown to be shuffled, while copycat apps attempt to fool users. Apple is being forced to do things via implied threats of what happens if they don’t ‘comply’ on their own.

I am no fan of the iPhone and avoid the Apple ecosystem, but this is very much destroying the core value Apple is offering.

I continue to not look forward to when I finish reading the EU AI Act, which I am eventually going to have to do.

An ode to the excellent AppleTV+ show Slow Horses. I have Slow Horses solidly in Tier 2, definitely Worth It.

Suits was the most streamed show of 2023, perhaps not despite but because of it being epically medium. Regular old many-22-episode-season shows keep catching fire years later. They keep bringing people comfort. Shows like The Office and Friends are worth nine figures per contract, then unavailable at any price. Yet the streaming services do not understand, and do not seek to imitate. Instead, they produce shorter seasons, and ask the question ‘how many people got this far into the show?’ and when that drops they cancel, long before they can get to 100 let alone 200 episodes.

I think this is a serious mistake. I do understand that when you are hiring an all-star cast to do something explicitly prestige-level, you are doing a different thing. That’s fine. But yes, we really do love the thing that Suits is trying to do. There is a reason that what I watch when on the elliptical is Law & Order, and if I sustain that long enough to run out I’ll likely turn next to SVU. We need that reliability, that comfort, that volume, and it pays off. Not always, but sometimes.

Everyone seems to reliably underestimate the value in increasing the quantity. I hate that our best people stop producing television so they can try to do movies. You’re giving me so much less content! Come back.

On a related note, I am over the moon that I finally have a new late night show I can watch a recording of the next day. For many years I absolutely loved Craig Ferguson, alas he hated the job and quit. I very much enjoyed Chris Hardwick doing At Midnight, also great relaxed no-stakes comfort television that makes you think without demanding that you do so, but he quit too to do the rather stupid The Wall.

And I’ve loved Taylor Tomlinson’s stand-up for a while now, including going to her Have it All tour, which was top notch.

So you can imagine my ‘no way, you’re kidding’ smile when I saw that the old Craig Ferguson slot was going to Taylor Tomlinson to do a show called After Midnight. Perfection.

And it has delivered on its promise. Forty minutes of comedians improvising jokes and riffing off each other every night, such a great format. I presume it will only get better. This is The Way.

Interesting and also great fun, from a public defender: My Clients, The Liars. Lying to your lawyer will not help your cause, yet most guilty defendants, especially those caught dead to rights and not for the first time, do it constantly. Another note is that the author effectively says it is mostly very easy to tell which clients are innocent, because those clients dump information on him in the hopes any will be useful, whereas guilty clients come up with excuses not to pursue evidence for their story.

Also from the same source, one might want to learn the eleven magic words: “Is there anything the court would like to review to reconsider?”

Perhaps there is. It seems every felon in California can now challenge their conviction retroactively on grounds of systemic bias. That bias can be proved through group comparisons, where criminal history is excluded as a consideration. This seems likely to grow into a giant disaster as long as it goes unfixed.

Arresting an eleven-person bike ring cut local bike thefts by 90%. As Patrick McKenzie notes, much of crime is a business that scales, and the state is bad at understanding this. He uses the example of credit card fraud, and notes that the crime business is remarkably similar to any other.

It’s not as if we don’t often know who the criminals are:

NYPD News: Last year, 38 individuals accounted for assaulting 60 @NYCTSubway employees. Those 38 individuals have been arrested 1,126 times combined.

NYPD Chief of Transit: If anyone is curious what your NYPD cops are doing … well … they’re doing their jobs! They’ve arrested these 38 individuals a combined total of 1,126 times! The better question is why are they forced to arrest these people so many times & where are the consequences for their repeated illegal actions? Know this, the NYPD does NOT determine and/or impose consequences. That is the responsibility of the other stakeholders in the criminal justice system (lawmakers, judges, prosecutors). NY’ers deserve better.

Colby Cosh: Carceral conditions for all in the absence of incarceration for criminals: part 3,157 in a continuing series

Morgan McKay: Security Checkpoint to check bags at Grand Central set up just a short time ago – NYPD asks this woman if they can check her bag – as you can see it was a quick search Officers tell me that the checkpoint spots will vary – right now checking about every 4th person walking by.

What happens if New Yorkers do not want their bags checked by the National Guard?

“Then go home,” @GovKathyHochul says on @fox5ny “You’re not taking the subway.”

I am not saying all 1,126 arrests were justified, but surely something has gone horribly wrong with the punishments here. There is a clip of Mayor Adams talking about subway crime. He repeatedly talks about ‘feeling safe,’ never talking about being safe. I do get that feeling safe is important, people who feel unsafe are unhappy and might not ride the subway, but our focus surely must be on actually being safe.

Eric Adams (NYC Mayor): Nothing encourages the feeling of safety more than having a uniformed officer present from the bag checks when you first come into the system to watching them walk through the subway cars to the platforms.

So the Governor sends the National Guard in to check bags at Grand Central. Which is completely insane. The purported solution has exactly nothing to do with the cause.

That is not even a way to feel safe, if you are anything like me. If anything, this is a way to make me feel actively unsafe and a reason to avoid taking the subway. I will never feel fully safe around inspection points and men with guns, for obvious reasons.

Even if some might somehow feel more safe from this (why?) there is absolutely zero actual safety reason to do this. It in no way stops crime, even in theory. Anyone who actually did want to do crime with things in a bag could walk to the next stop. And anyway, this is crazy. We could use more people in uniform to make things more safe if we wanted to, but that would involve them being asked to do police work.

Case of assault and being held for ransom at SFSU suspended as all charges are ‘alleged’ and ‘unfounded,’ despite what appears to be audio, video and eyewitnesses.

California’s new $20 minimum wage rule specifically exempts grandfathered in restaurants that serve bread (as in prior to September 2023), as in Panera in particular, run by a longtime Newsom donor after extensive lobbying. Panera now has a full regulatory moat and cost edge against any and all competition. It is listed in this section. Note which section this is in.

Here is a rather crazy statistic:

Cremieux: Incredible stat: the 1% of male adoptees with biological parents who had three+ convictions were responsible for 30% of the sample’s convictions. Crime is very concentrated.

These are all adoptees. There are still possible non-genetic factors, but this is also rather a large effect from a pool of people already in bad shape. This suggests that targeted interventions could be highly cost-effective. It also suggests that rational people would check such information and update on it, even if the government cannot and often makes such actions technically illegal.

Ricki Heicklen writes in Asterisk about Michael Lewis and Going Infinite. She notices that Michael Lewis, while getting the overall vibes of the situation at FTX mostly correct, seems completely uninterested in how and why people make mistakes, most importantly himself and his own mistakes. How did he not realize anything was amiss, and why was he so uninterested in that question? Why did he constantly take the word of someone doing all the fraud and crime, and those around him doing likewise? She absolutely hammers him. The contrast to my own (fully compatible with hers) take is interesting throughout. I was more interested in what happened to FTX and SBF than what happened to Lewis, but also asked how Lewis could not realize even after the fact about all the crime.

Fascinating: An assisted suicide pod that passed an independent legal review showing it complies with Swiss law. At the push of a button, the pod would fill with nitrogen gas, rapidly lowering oxygen levels and killing the user.

Holden: Why is it the assisted suicide people can easily devise a contraption to kill a human being unmolested while capital punishment is in a constant struggle for ways that aren’t denounced as cruel and illegal?

Mo Sabet: They did this for capital punishment. It went poorly.

I mean, yes, if you mess it up. Also the person did not want to die. There is that.

Very good profile of Dwarkesh Patel, who is rapidly becoming the clear GOAT of podcasters to not miss, ahead of previous title holder Tyler Cowen. Both take similar approaches, doing extensive research and asking incisive, deeply specific questions, without wasting time on things you already know. Episodes demand your full attention, often justifying pausing to contemplate and take notes, or converse with an LLM.

So it is odd to see people comparing him to a very different Lex Fridman.

Shreeda Segan: Today, Patel is quickly becoming known as ‘the new Lex Fridman’ and even ‘Lex Fridman but better.’ Here, again, he credits his success to prep. On Lex Fridman and other competitors, Patel says “Sometimes it doesn’t feel like they’re trying. In other fields, if something is your full-time job, there’s an expectation for you to spend a lot of effort on it.

The idea of popular podcasters just walking into a studio after just a single day of prep… It’s like this is your full-time job, man. Why don’t you spend a week or two instead?”

What is the Lex Fridman approach?

Lex Fridman is there to ensure no listener gets left behind, and otherwise to let the subject talk at length. He is not there to challenge the guest or to dig deep into the technical details. When that approach works, as it did with the con artist Matt Cox, it can be great. In areas I know well, it tends to be a lot of ‘get on with it’ without striking much new ground. Those are diametrically opposite approaches. There is a time and a place for both. Mostly I want to go deep.

The biggest takeaways from Patel’s story are that there are big returns to being Crazy Prepared, that you can optimize the hell out of things without worrying about hitting the Pareto frontier because there are always basic things others aren’t doing, and also big returns for asking people for help and what you want. He took a big swing, put in an extraordinary effort to take advantage, and it worked.

Also related:

Luis Garicano: Axiom for young people:

No one (journalists, academics, managers, politicians, consultants etc.) ever, does their homework.

If you do the homework, you win: e.g. if you show up to a meeting having read the paper to the end, you will often be the only one who did.

Dwarkesh Patel is another example of a kid who (as he explains) got big because he (by his own account) was the only one who was willing to spend two weeks preparing his interviews. Here is a fabulous example [his interview with Demis].

Kevin Erdmann: Reminds me of this quote from “Teller”, which I think applies to success in general,

“Sometimes magic is just someone spending more time on something than anyone else might reasonably expect.”

Virginia state legislator kills, at least for now, the new stadium deal that would have cost the public over a billion dollars. Our government failing in such brazen fashion by continuously bribing sports teams owned by billionaires in zero sum games continues to illustrate how they operate more generally.

Educational requirements are gradually disappearing from job postings. Declines are small, and the tight labor market is doubtless a lot of it. It still seems like progress.

Economics journals are demanding that papers include reproducibility of the results. The threads here are people finding this process outrageously expensive. It turns out that if you do not plan ahead with reproducibility in mind, it is going to be really annoying to fix that later. If you do plan ahead for it, it is presumably not so bad?

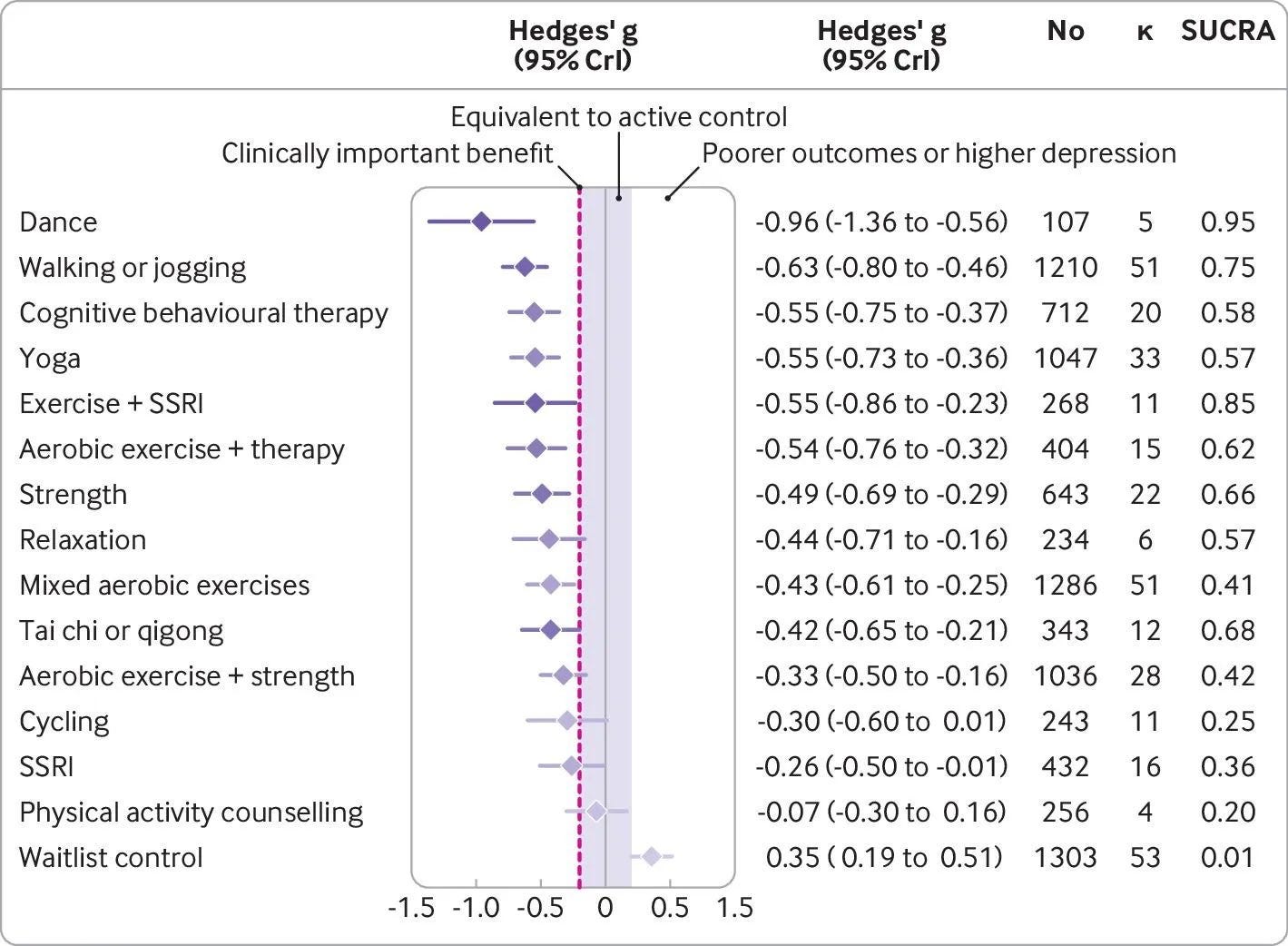

You know what makes people feel better? Dancing. Effect size listed here is ludicrous. Also other exercise helps as well, but dancing is an Ozempic-level cheat code. That is, if you believe the study in question, which I initially said ‘I do directionally although I am skeptical on full effect size’:

Except, of course, when you are ‘skeptical on full effect size’ that is not a great sign, and, well…

Cremieux Recueil: This study should be retracted, both for issues the authors can address and issues with the underlying data. The study suffers from some noticeable, obvious miscoding of effect sizes. For example, the authors reported an effect size of -11.22 Hedge’s g. That’s MUCH larger than the difference in people’s preference for chocolate over feces-flavored ice cream.

Attempting to replicate the authors’ effect sizes with their provided data, it’s simply not possible. Most effect sizes are not even within 1 Hedge’s g of what they reported, and 1 g is a huge effect.

I asked the authors what happened. They replied by uploading some new code. Their new code showed that they did not estimate the effects they verbally described estimating. In fact, they didn’t estimate treatment effects, they estimated change scores.

Even if this wasn’t the case, there is extremely low power and there’s a good deal of publication bias.

The power was so poor that, among studies classified as having a low risk of selective reporting (doubtful given other mistakes), the mean effect was estimated at 0, with CIs from almost -1 to 1.

It goes on. I am including it because this got a lot of play, and one does not want to silently delete in such situations.

Time management is not as hard as many pretend, but it is also not this easy.

Emmett Shear: You have 168 hours per week.

For most, sleep takes 56 of those.

A full time job is another 40.

Food, grooming, exercise add another 18 if you’re reasonably efficient.

Misc obligatory bullshit paperwork like taxes or errands, another 7.

This leaves you with 47 hours!

47 hours to dispose of as you see fit. You can get so much done in 47 hours! And that’s without counting overlapping eg. food with socialization.

The limit is not time. It is energy, gumption, courage. Those are real barriers! But they are not time.

I know a few exceptional people who truly run out of time, they are going every minute and there are no gaps. The rest of us are killing time on Twitter, watching Netflix, indulging in whatever activity feels that it doesn’t demand too much of us.

If you think your problem is not enough hours, and you’re talking about it on Twitter…believe me your problem isn’t hours.

I think this is important to notice because if you try to solve an energy or courage problem with a time solution, you won’t succeed in getting any more done.

This is like one of those ‘help me my family is dying’ math problems. So let’s think step by step.

Let’s start with sleep. Most people want about eight hours of sleep a night. 7*8 = 56.

However, if your plan is to start the sleep process at 11pm and set an alarm for 7am, you are not going to get eight hours of sleep. There are a few lucky people who can fall asleep instantly without first getting too tired, and then reliably sleep through the night, and then wake up on a dime ready to go. But they are few.

Realistically, if you want eight hours of sleep, you need to allocate nine hours for this process. So that’s 63 hours, and we are down to 40 to spare.

What about a full-time job? Well, once again, 40 is the core baseline activity (and many people short on time work more). It is not the time it actually costs you. Even if you are not asked to work overtime or stay late, there is commute time. According to the U.S. Census, the average commute was 27.6 minutes each way in 2019. Yes, this is a choice, you can prioritize working from home or a short commute, but we cannot pretend this is free.

Let’s be generous and say you can do this in three hours a week. That leaves 37.
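The running tally so far can be checked with a quick sketch. The category names and the `remaining` helper are my own labels, and the five commuting days per week used for the Census comparison is an assumption, not something either of us stated.

```python
HOURS_PER_WEEK = 7 * 24  # 168


def remaining(budget):
    """Hours left in the week after subtracting every line item."""
    return HOURS_PER_WEEK - sum(budget.values())


# Emmett Shear's original budget, as quoted above.
shear = {
    "sleep": 56,
    "job": 40,
    "food_grooming_exercise": 18,
    "paperwork_errands": 7,
}

# The adjustments made so far: nine hours allocated per night of sleep,
# plus a (generous) three hours of commuting per week.
adjusted = dict(shear, sleep=7 * 9, commute=3)

# Census average of 27.6 minutes each way.
# ASSUMPTION: five commuting days per week.
census_commute_hours = 27.6 * 2 * 5 / 60

print(remaining(shear))                 # 47
print(remaining(adjusted))              # 37
print(round(census_commute_hours, 1))   # 4.6
```

Under that five-day assumption the average commuter spends about 4.6 hours a week in transit, so three hours really is the generous number.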

Is 18 hours a reasonable budget for exercise, grooming and food? A solid exercise program likely takes something like 5. Grooming including clothes is going to be several more. Food is something you can rush quite a lot if you want to exist on cereal, Mealsquares and Soylent and live without joy. Less extremely you can eat at your desk. So yes, I do think 18 is a realistic goal here for some, in the sense that we all make choices. But if you do that, it likely means you are not using this time as a psychic recharge or source of joy. You aren’t scoring your victory points.

Another 7 hours for mandatory bullshit sounds nice. It is realistic if you keep things simple, or you are rich enough to hire a lot of help. In between, I am doubtful.

Then there is everything not included here. Health problems and being sick will cost you a lot of time periodically, more so as you get older, even if things are mostly fine. Family emergencies are what they are. You can call having children ‘a choice’ but if your plan for living does not involve them it has no future, and this very much is not killing time on Twitter or Netflix. You get called into court, for jury duty or otherwise.

A big one is that this plan does not charge you for transitions. The idea of ‘going every minute and there are no gaps’ is not so easy to pull off even if your brain could handle it. Lots of things get scheduled. Then you need to confidently be ready when they start, block off time, not start next thing until it finishes, and so on. How many hours does it cost to schedule a one-hour meeting? It varies, but ‘one’ is incorrect.

The little things add up.

And yes: You also have to stay sane, and have sources of joy, and have sources of energy, and have some time to process. You can say ‘time is not the issue here’ and ‘your real issue is energy or courage or gumption or money’ but time is one of the costs of maintaining such resources, so there is non-zero fungibility going on here. One does not (with notably rare exceptions) simply have maximum gumption and courage and energy all the time, and this does not mean that you aren’t running out of time.

And you need to maintain various other relationships in your life, various social considerations and so on. And you need to be doing a bunch of information intake and exploration and playing around without strict particular goals you are maximizing. These things are not as optional as you might think.

Also you need slack, on all these levels. If you are allocating every minute of the day, every day, that is generally not something I would advise.

You certainly can spend periods of your life laser focused on one goal. I had a period where I did that. I woke up, I did work, I paused to grab two quick meals. When work involved keeping an eye on things, I would also often watch TV, which helped keep me sane. Modulo the minimum requirements on other fronts, that was pretty much it, for several years. I do not think that means that you can say to those refusing to do similarly that ‘time is not your problem.’

I am now someone who gets a lot done, in the sense that a lot of people tell me ‘I have no idea how you get all of that done.’ Simultaneously, I look at my time spent, and I notice I could be doing so much more, in theory, if I used all the hours in the day more efficiently.

What is the right way to think about that?

I do not know.

Tyler Cowen asks, how is a $600 a night hotel room better? Location, location, location of course. Although in most cases there is a place very close that is far cheaper. I have paid ~$300/night total largely for location though, especially for key tournaments.

The rest is less convincing, unless you need a great view that badly to have ‘performed vacation.’ Yes, the WiFi is more reliable in expectation, and the beds are on average modestly better, but you don’t need to go this high for that, that is about avoiding the extreme low end, and reviews are a thing to help you. And as Tyler notes, they will attempt to make the $600 hotel effectively an $800 (or more) hotel in various ways. The rest is simply not so valuable, unless of course you are so rich you do not care.

I think of this as ‘the $600 hotel is 1.1x better than the $300 hotel, but if you are a billionaire or expensing or signaling, or you want to form memories of the finest experience to save your marriage or what not, or this is a super high leverage moment in your life otherwise, you will choose it anyway.’

Claims about experts:

Paul Graham: One way you can tell real experts is that they hedge less. They’ll tell you what’s *not* the case. People with merely moderate expertise can’t say that, because they’re not sure.

Of course, people who know next to nothing about a subject also speak decisively about it. There’s a sort of midwit peak of hedging. So this test only works to distinguish experts at the high end.

I would instead say that true experts hedge in the right places. They are unafraid to, as Paul notes, say what is clearly not the case. You can often tell the difference even as a non-expert.

It seems there is potentially a way to, in theory, disable all the nuclear bombs on earth remotely and without countermeasure. All you need is a 1000 TeV machine requiring an accelerator circumference of 1000km with the magnets of ~10 Tesla and power of 50 GW which exceeds that of Great Britain. Attempting to actually do this seems highly destabilizing, and of course if it can do this, what else can it do, and what happens if your calculations were not correct?

One reason to note this is that we are constantly thinking about the future and about AI as if there won’t be more large surprises waiting for us in physics. This suggests that there might be such surprises. This particular trick looks like it is not so practical at least for now, but what will be next?

We tend to watch movies more when they reinforce our mythos and values, says paper. Stories of entrepreneurs do better in entrepreneurial societies (and presumably reinforce that trend), same for gender roles and everything else. Well, yes.

Matthew Yglesias asks if polygraphs are real or fake, gets mixed response. They are clearly real in the sense that the machines exist and that they often cause people to confess or otherwise be more truthful than they would be otherwise or choose their actions for fear of being tested later. They also clearly correlate with truth, and raise the cost of deception.

However they are fake in the sense that they are easy to fool if you put effort into it and know how to do so, some people naturally are able to best them, and the error rate is substantial even in ideal circumstances.

It is indeed odd that polygraphs are illegal, except where they are mandatory. Matt Yglesias calls it a ‘fake solution’ in border security. It also makes a weird kind of sense. In some situations, we care about protecting people’s rights and dignity, and about avoiding false positives. In others, we really do not. So if you are someone we have disdain for, or in a position where we care sufficiently more about false negatives than false positives, or both, then polygraph. It is not a full solution, and in practice it might or might not be net positive, but I can see plausible situations where it would be. It is not like our non-polygraph detection systems are foolproof, so this is another case of the machine being held to different standards than humans.

Imagine if we had a machine about as reliable as eyewitness testimony. What happens?

Two classic mistakes, one of which I am highly sympathetic to:

Paul Graham: It’s a bad sign when a site forces you to play a video to learn what they offer. They won’t let you jump around a text explanation. You’re going to hear the words they want you to hear, in the order they want, at the speed they want. How can anything made by such people be good?

Rick: My least favorite is “schedule a time to come to our webinar”, it’s at least an order of magnitude worse than having a video I can watch now and skip around in.

I hate when people do this. Text Über Alles. Forcing users to watch a video indicates and forces upon them a certain mindset. Yet the evidence it provides on overall lack of quality is not so strong. This has become the ‘standard’ thing to do, what people reveal they want. People like Paul and myself are mostly not the target.

Rick’s extension, however, seems highly reliable. If they make you take a webinar at a given time, chances of value production greatly decline.

Patrick McKenzie confirms that yes, if in The Atlantic they are claiming specifically about The New York Times that a new hire was chided about saying Chick-fil-A was their favorite sandwich, then yes this happened, it has confirmation and was probably confirmed by The Times itself. I notice I was confused when people said ‘this didn’t happen’ because why wouldn’t that happen?

ACLU is trying to destroy the Biden NLRB, potentially invalidating all its decisions, over arbitration in a single firing with essentially no stakes, reports Matt Bruenig among others. The only explanation I can think of here is that the ACLU has been sufficiently ideologically captured by those who did the firing that they were forced to go all-in where it makes no sense. In theory the ACLU could be on a true rule-of-law kick for freedom of contract and the improper firing of a government official, but yeah, that doesn’t make any sense.

As Josh Barro says, the details of the firing sound absurd, but he reports it checks out.

I love the structure of the description, wonderful use of the rule-of-three.

ACLU: [Ms. Oh was] terminated for violation of her obligation to maintain a workplace free of harassment, including in her engaging in repeated hurtful and inciteful conduct for colleagues that impugns their reputation and her demonstration of a pattern of hostility toward people of color, particularly black men, and her significant insubordination.

Matt Bruenig: What exactly did Ms. Oh, an Asian woman, do that is being characterized like this?

- After the national political director, a manager that Ms. Oh and her colleagues had submitted complaints against, left the organization, Ms. Oh joked in a meeting announcing the departure that “the beatings will continue until morale improves.” The ACLU DEI officer said this comment was racist because the former national political director is a black man.

- Ms. Oh said in a phone meeting that she was “afraid to raise certain issues” with her direct supervisor. This was also described as racist because that supervisor is a black man.

- Ms. Oh claimed that another manager “lied to her when she identified the members of management who had ultimate responsibility over whether to proceed with a particular campaign.” This was also racist because that manager is a black woman.

Sounds like it is time to solve for the equilibrium.

Matt Bruenig later put out another column, pointing out that the ACLU’s arguments here are a direct attack on free speech, and that seems obviously right. Free speech used to be what the old real ACLU was all about, so this is strong evidence that we are very much dealing with the new fake ACLU.

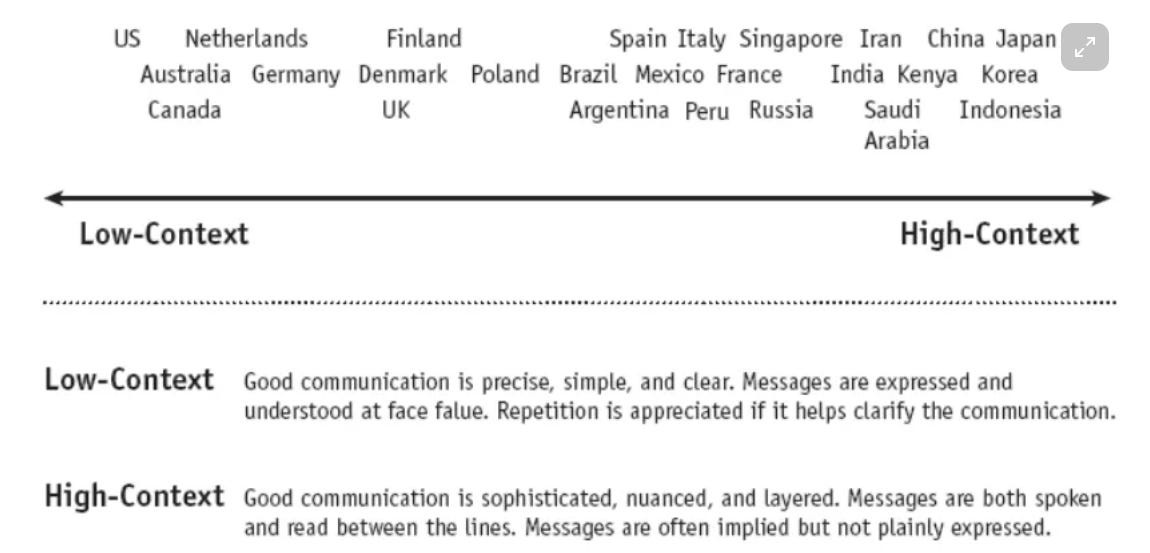

Why do East Asian firms value drinking so highly? The answer given is that by lowering inhibitions it leads to social bonding, which promotes social harmony, which they highly value. It also allows candid communication, bypassing the inability to speak and deference to authority that is otherwise ubiquitous. You need copious amounts of alcohol to defeat the final boss of the SNAFU principle.

Mostly though I saw this as an excuse to ask better questions. The section on different communication norms has some great stuff, yes we know most of it but I like when such things are well-modeled and spelled out.

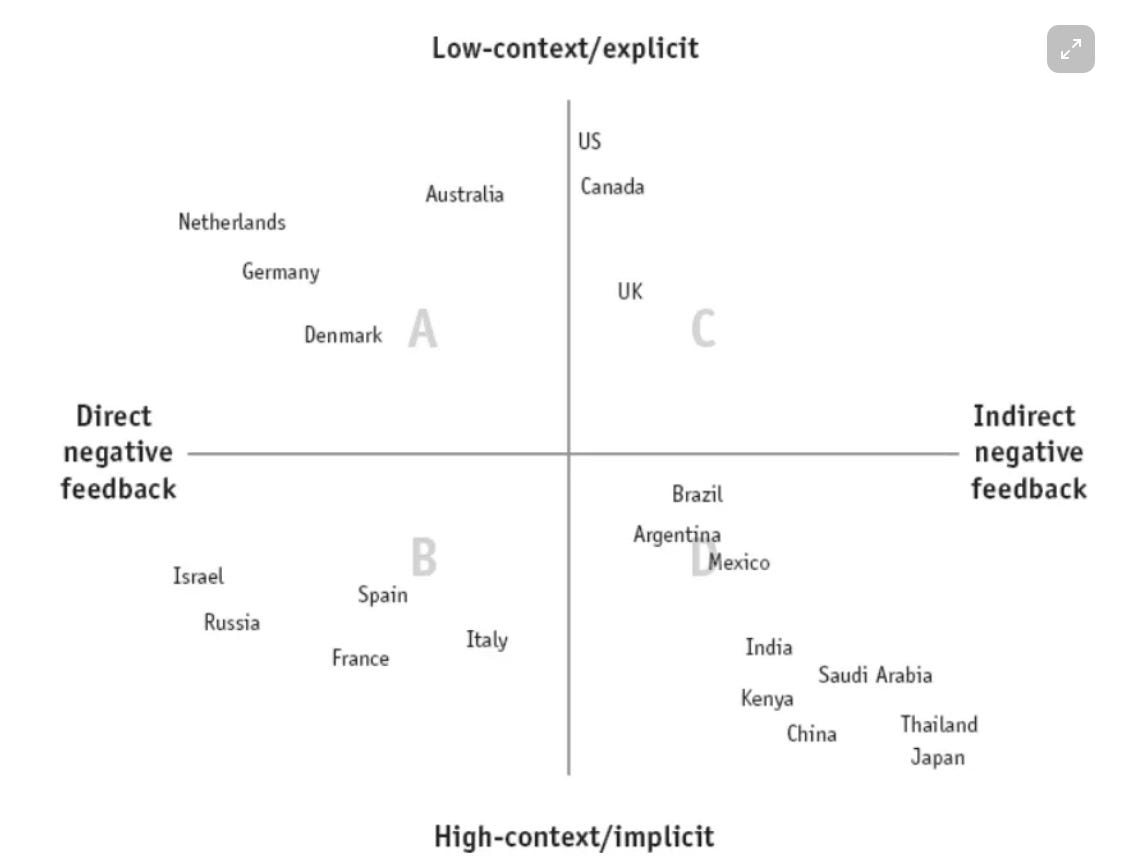

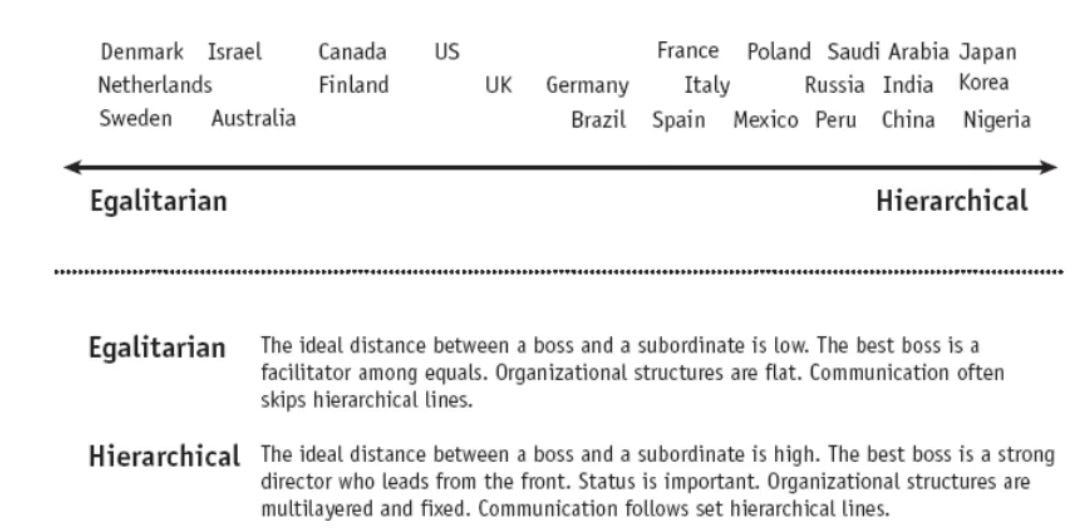

Interesting that USA is dramatically low-context in relative terms, but is near the middle in confrontation and negative feedback. Mostly those two correlate rather strongly, and egalitarian-hierarchical looks like the same graph as well:

So this means that America has this One Weird Trick where it is willing to aggressively communicate directly, without being otherwise confrontational and hostile. When I go even to other places in America that have this less than here in New York, it drives me insane. I bet that this trait does quite a lot of work for us.

This then becomes so important that in many places only men who can and are willing to drink heavily and do associated activities like strip clubs can get ahead, women cannot do it because it is unsafe and if something goes wrong they will get the blame, and men who don’t want to play along also get left out.

How do you solve this? Even if you can create a new organization that does not do these activities, and you then get to hire lots of great sober and female talent, you still need to solve the communication problem, or find a way to survive not doing so. You would have to make dramatic cultural changes that complement this move.

We are about to ask whether we could. We also must stop to ask whether we should.

Cate Hall: Scott has graduated from scissor statements to scissor grants.

Scott Alexander [as an ACX Grants award]: Marcin Kowrygo, $50,000, for the Far Out Initiative. Recently a woman in Scotland was found to be incapable of experiencing any physical or psychological suffering. Scientists sequenced her genome and found a rare mutation affecting the FAAH-OUT pseudogene, which regulates levels of pain-related neurotransmitters. Marcin and his team are working on pharmacologic and genetic interventions that can imitate her condition. If they succeed, they hope to promote them as painkillers, splice them into farm animals to produce cruelty-free meat, or just give them to everyone all the time and end all suffering in the world forever. They are extremely serious about this.

near: Possibly the best use of $50,000 I’ve seen in my life.

Alice Earendel: Finally, we can create the drug ‘soma,’ from the hit sci-fi novel ‘don’t create the drug soma.’

(Fr, pigs that don’t feel pain, and so can’t be dissuaded by it from eating their tormenters, are the start of a sci-fi horror short story.)

Daniel Eth: Weirdly, my aggressively-pro-this thing tweet which I expected to generate tons of pushback instead largely led to agreement 🤷♂️

Daniel Eth (quoted Tweet): Hot take, but ~this should probably be like the second biggest EA cause area, after X-risk. The fact that things like this are approximately totally neglected by EA makes me think worse of the non-X-risk parts of EA.

I notice my instincts are on the ‘maybe this is not a great idea’ side of the spectrum here. Suffering is mainly a measure, rather than the target metric. Eliminating the measure in general seems like a deeply terrible idea.

Emmett Shear: People who want to end all experience of negative reinforcement either (a) believe negative reinforcement is not needed for an effective system to maintain homeostasis, or (b) believe you should avoid experiencing some real things happening in your mind.

I think both (a) and (b) are clearly somewhere between “false” and “wrong” and that existence of negative reinforcement is important for system function and it is good to experience what is there.

I do not trust myself to be able to handle this power if offered it, let alone trusting others or society as a whole.

Are there ways we could ‘use this power for good’? In theory, yes, absolutely.

In practice, if we discovered we could, I do not think people would properly stop to think whether we should, and I notice I expect this to go quite poorly. This seems like a way to get tons of the things suffering helps you notice are bad, with no way to stop them. This is the ultimate Chesterton’s Fence situation, and the ultimate EA failure mode.

I notice that if you say ‘oh but the animals were genetically modified to not suffer, so everything we are doing is fine’ that my brain responds with a terrified Little “No.” Either what you are doing was fine before, or you did not hereby make it fine. Same thing goes for people.

Again, there are tactical ways to use this to score huge actual net wins. I have zero faith in our ability to do that, any more than we limited cocaine use to dentists.

So I don’t know what to do about this. It seems crazy not to investigate. On the margin, it seems like it must be good. But then I solve for the equilibrium, or what it would do if unleashed fully, and I see huge upside potential but expect it to by default go very badly and see no way to coordinate for a better outcome.

No, the parallel is not lost on me.

Scott Alexander also lays out his other grants here. Overall I am happy with his selections. Definitely some I would not have picked, but some potentially very good picks, and a solid willingness to go outside the box or narrow cause areas relative to what I have seen in past grants, so good show. Balsa got passed over, but such rounds are about positive selection, not negative selection, and we did get actual Georgism.

Robin Hanson says academia has virtues it would be good to see more of elsewhere, but lacks other important virtues from outside academia. He does not see why one could not get the best of both worlds. I think there is some room to combine the best, but not as much as one would hope.

I also question the virtues.

- Robin says academics invite ‘strong criticism.’ I would instead say that they disregard criticism that does not follow their formal rules or respect their notions of expertise and status, while elevating very particular types of criticism that do follow those protocols, and considering it blameworthy to be vulnerable to such criticism. This does not, in practice, seem to me to be so good for seeking important truth.

- Robin says they prioritize original insight. I would say they place an emphasis on things being technically new, over what is important to notice or talk about, in a way that does not cause focus on what matters. Some amount of this is good but the obsession with formal credit and being first seems counterproductive at the margin.

- Robin says they use precise language and announce core claims up front. I do think this is something others need to do more, but also academics use nitpicking on precision to dismiss those who do not play their games, ignoring what people have to say via technical excuses. And the obsession with precision prevents academics from talking in plain language, making them very difficult for others to understand and painful to read, all while making the process of writing and communicating take far longer. This blog is an attempt to do a synthesis, where one is precise in ways that matter without going (too far) overboard.

I notice that these criticisms tie the bad to the good. If you are obsessed with new ideas and precise language and the ability to cite the record, you are going to neglect the most important topics more, because they won’t fit those priorities. Similarly, this focus on language exactness and formal criticisms leads to attempts to use language for prestige.

Bill proposes spending $5 billion on prosecuting those who share online child sexual abuse material (CSAM, can we ever call anything by its name anymore?). This is a good cause, and I strongly agree that prosecuting the offenders is the correct way to do this, as opposed to violating civil liberties. However it seems like massive overkill. Do we need to spend this much here? What would we get for it?

Meanwhile Zuckerberg got quite the grilling from various Senators over related issues. The hearing starts with Graham saying ‘you have blood on your hands’ and ‘you have a product that is killing people’ and getting applause. The product in question is an app for sharing photos and videos.

The clip directly linked, which is the one that showed up in my feed as ‘Zuckerberg should be fired for this,’ shows Ted Cruz blaming Zuckerberg for two things.

- For knowing something he did not have to admit to knowing. The idea is that Instagram knew that certain searches might contain CSAM, and put up a warning, offering to help the person get resources or to see results anyway. But as Zuckerberg points out, the whole idea is to trigger this if the results had even a tiny chance of such CSAM, rather than only either blocking or not blocking. So of course he gets roasted for it. Clearly, he should not have offered this message, instead having searches be either blocked or not blocked, no middle ground?

- For not knowing something he had no reason to know. Meanwhile, Cruz was furious Zuckerberg did not know ‘how many times this message was displayed’ and then demanded that he find out and tell Cruz within five days, as Zuckerberg protested quite reasonably that this was not information he was confident was being tracked. Other than grandstanding what use is that number?

Then the next Tweet is about Hawley hammering Zuckerberg for daring to commission his own study on potential harms, which he claims means they ‘internally know full well’ how terrible Instagram is, and conflating Zuckerberg’s statement that overall the evidence does not provide a demonstrable link between social media (X) and harm to teenagers (Y) with a claim that there is definitely no link between X and Y. Also did you know that if Zuckerberg gets sent an email, he knows its contents?

We have an existence proof that you can make me sympathize with Zuckerberg.

If you were Zuckerberg, you would want to know as little as possible, as well.

I do think Zuckerberg is wrong, and being at best disingenuous, about the weight of the evidence. Haidt lays out a bunch of it here, I understand and buy many of the causal mechanisms and I have not seen the case against made in remotely convincing fashion. However it is strongly in Zuckerberg’s interest not to gather the evidence, and rather than minimize that problem, we are maximizing it.

I am also with Sam Black that Haidt’s invocation of Pascal’s Wager here is a gigantic red flag, an attempt to sidestep the need to prove the case. It is not a good argument here any more than it is with AI. Social media has massive benefits, and an attempt to restrict it would have massive costs, the same as AI, and here the risks are not even existential. Even in AI where the stakes are everyone dying and the loss of all value in the universe, ‘you cannot fully rule this out’ is a bad argument, one that the unworried claim is being made in order to discredit the worried. The calls to action are because the risk in AI is high, not because the risk is not strictly zero.

It is easy to see why this is not the case, and yet.

Miles Brundage: I think at least once a week about how Jeff Bezos could trivially increase the quantity/quality of journalism and improve public discourse by making The Washington Post free and bumping up the budget a bit, and doesn’t do so.

(Don’t know if up to date numbers are available on either front but the annual budget of WaPo is ~500M; Bezos has ~200B)

Daniel Eth: Any other mega-billionaire could too by working out a deal with Bezos/WashPo. Blaming Bezos but not the others is just the Copenhagen Interpretation of Ethics.

Rich people often do things like this. Many media companies are run at a loss, often a high loss, as passion projects or de facto charities. I consider this highly effective altruism. This blog is among those who benefit from such a system, allowing all content to be fully free. Running the Washington Post without subscription revenue and with a bigger budget would be an extreme case, but would doubtless be very good bang for the buck. In a better world, various people would step up and take up a collection.

It should still be noted that Bezos is still doing everyone a solid with the Washington Post. He is not (as far as we can tell) interfering with the content, and he is running it far less ruthlessly as a business than would most replacements.

Dominic Cummings offers more snippets, almost entirely bad news, I disagree with some points but far more accurate than one might think.

A claim by Daniel Treisman in Asterisk that Democracy typically emerges ‘by mistake’ rather than as the inevitable result of a systematic process. This seems to me to be thinking about the problem wrong. Democracy is an inevitable aspiration, and a Schelling point that all can agree upon when there is unhappiness with the current regime or people otherwise want a greater voice. These exist at all development levels, and are enhanced as Democracy becomes more common and its legitimacy enhanced relative to other regimes, and also gains strength with economic development. There are also strong motivations by groups and leaders to try and defend or implement autocratic government as well.

So what determines what succeeds? Treisman’s case is that this is usually what he calls ‘accident,’ that the majority of the time the autocrats that lose power ‘make mistakes.’ This is measured against what he judges, in hindsight, to have been optimal policy for retaining power. Unnecessary concessions, especially ones that fuel greater concessions, are common in this view, as are cracking down in ways that only make things worse.

But already, one sees the problem. And a large part of the problem with autocracy is that such systems are going to not have great insight into the situation and make a lot of mistakes in this sense, see the SNAFU principle. Yes, of course the proximate causes of failure will often be particular mistakes, in the sense that perfect play had a better shot, but that will always be true. How often have Democracies or democratic revolutionary attempts fallen or failed ‘due to mistakes’?

Certainly, if one were to tell the stories of 2016 and 2020 (and no doubt 2024) in America, in terms of those who advocated for and against democracy in various ways or at least believed they were doing so and could counterfactually have been doing so, they are full of huge mistakes on all sides. A lot of those mistakes seem very non-inevitable, very particular and human. As they usually are. When was the last time there were major messy events anywhere, and there were no important mistakes made by this standard?

So I don’t see it the way Daniel sees it, but also I think he is doing good pushback to the extent anyone is thinking of results as inevitable in this sense. But at the same time, looking at the map, the results look highly non-random in terms of who ended up in which camps. Mostly, in the end, it is not an accident, and national character and circumstance functions as fate, if we aren’t considering alternative endings to a few key events (e.g. the American and French Revolutions, World Wars and Cold War).

Also, as was recently pointed out online, all these democracies are highly imperfect, with numerous veto points and special exceptions and other tricks to let the system function in practice.

Scott Alexander in a newly unlocked post discusses the philosophy of fantasy, and in particular speculates that everything is built around the possibility for someone seemingly ordinary to go save the world and suddenly have lots of agency and power. The only way that anyone can become the hero is if the hero role is assigned mostly randomly, you are secretly the son of the king or something. Or of course if there is some Origin Story situation where they get ‘superpowers’ they now have to master via a personal journey. Scott does not mention that we have invented a second variant with a bunch of different conventions.

Then, because (as Scott coined) Someone Has To And No One Else Will, or as Marvel’s Uncle Ben puts it With Great Power Comes Great Responsibility, they actually go out there and Do Something to perhaps Save the World. Which hopefully makes people realize they also have power, or could have it, and could go out and Do Something themselves, whether or not Saving the World could be involved.

There’s definitely something to that. I also think the other explanations he cites, that no one is creative and everyone enjoys consistent tropes, matter as well.

I also think a lot of it is about being allowed to not let the laws of physical reality get in the way of a good story. If you set your story in a realistic world, people dislike it when something physically impossible or otherwise nonsensical happens, oops.

If you set your story in a mostly realistic world, then decide to disregard the rules when it is inconvenient for the plot or someone’s emotional journey or a really cool moment, people will not like that.

If you set your story in an entirely unrealistic world with all new different stuff, people will get confused, and they will see you have too many degrees of freedom, and it will all seem arbitrary.

Meanwhile, these others have gone ahead and created these conventions that readers understand and that will mostly allow you to pull your shenanigans as needed, and where the reader expects some twists where you pull random rabbits out of hats.

Following the specifics of existing conventions or stories gives you permission to do arbitrary stuff without it having to otherwise be the best stuff. That lets you make or use more interesting choices, rather than being forced to go generic. It also means that you do not have to ‘justify’ your decisions, things do not need to tie together, you do not need to give everything logically away.

In particular, when you are doing it right, this lets you show a potential Chekhov’s Gun without being obligated to fire it, because you could be doing worldbuilding. There is not the same strict ‘every moment must be in here for a reason’ that I often cannot get out of my brain.

This all of course gets turned on its head and ruined once the formula becomes too generic. This is part of what happened to Marvel. Being in the MCU went from ‘lets you do cool different things’ to the opposite, where everything was on rails with slightly different physical laws. No good.

I am continuously dismayed by the ‘everyone is always selling something’ worldview.

Especially when the people espousing it are using it as an argument to sell something.

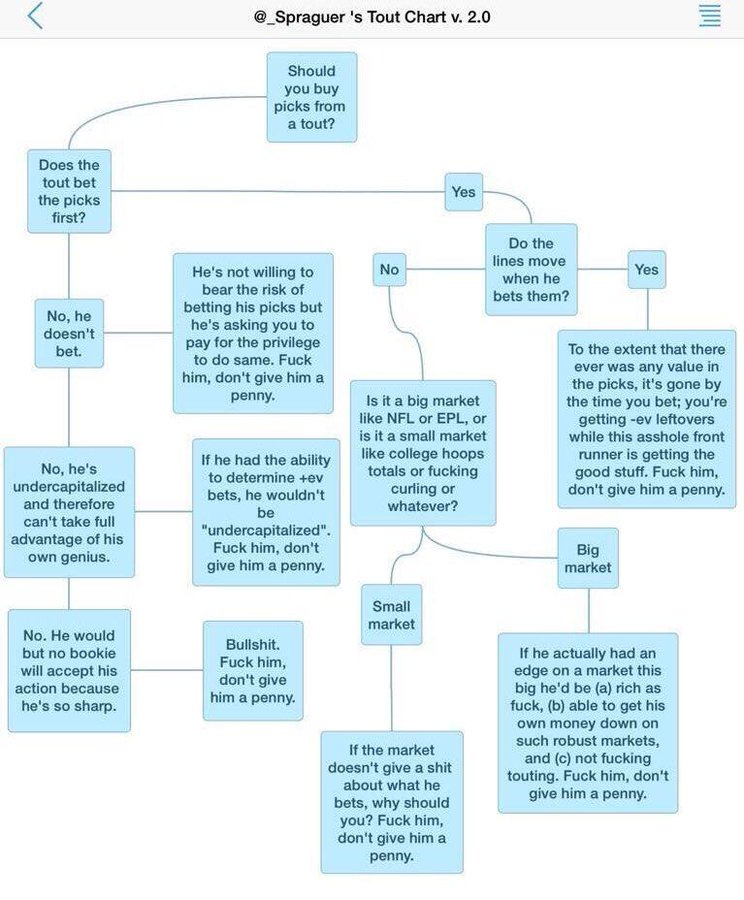

Right Angle Sports: The anti-tout sentiment is more out of control than ever, and it usually comes from the biggest attention seekers on this platform. Remember that EVERYONE is selling something. They may not want money for picks at this moment, but they want views, likes, reposts, and to build their brands for influence and other money making opportunities.

Seth Burn: Chart is undefeated.

Chart indeed remains undefeated.

Every NFL team season in a Simpsons clip. 10/10, no notes.

Walker Harrison is one of many analyzing the new playoff overtime rules. He finds that it is very slightly better (50.3% winning chance) to receive if everyone acts rationally. This is close enough that idiosyncratic considerations would dominate. I continue to presume that it is right to take the ball, that it is not as close as such calculations indicate, and people are overthinking this. The exception is if you think the opponent will make larger mistakes if you kick, whereas taking the ball might ‘force them’ into playing correctly.

College football is considering a 14-team playoff where the SEC and Big 10 champions get byes and no one else does, as opposed to the current new 12-team playoff where the top 4 conference champions get byes. Somehow other conferences are upset. I get the argument that being SEC or Big 10 champion is much harder and means more, and also they have more leverage, but also he who lives by the superconference dies by the superconference, and this is too many teams. I would stick with 12, or at most expand to 13, since one bye for the Big 12 and ACC combined seems reasonable. The other talk is of guaranteed slots for conferences, and I say none of that. If you don’t have two worthy teams, why should you get a second slot?

Is a similar reckoning coming for NCAA basketball and March Madness? Here’s a headline: SEC’s Sankey doesn’t envision P5-only NCAA Tournament, but ‘things continue to change.’

“You have to give credit to teams like Saint Peter’s a couple years ago, Florida Atlantic’s run,” SEC commissioner Greg Sankey said. “There are great stories and we certainly want to respect those great stories, but things continue to change.”

It is very clear what he is thinking. It is very clear why he is thinking it.

Matt Brown: NEW EXTRA POINTS: I understand the arguments for expanding the NCAA Tournament, and even agree with many of them. But altering automatic bids shouldn’t be part of a “dialogue.” It should be a core principle worth defending.

Seth Burn: 100% agree. The SEC cannot be allowed to come after the autobids. It was bad enough when expansion allowed them to dilute the autobids via play-in games.

I have no objection to 72 teams, so long as all 16 play-in games are at-large teams. Let the bubble sort itself out in Dayton.

I agree as well. Automatic qualifiers for all conference winners into the round of 64, or GTFO, and the dream is dead. If you want to add additional play-in games for those on the bubble right now? Sure, why not, that’s good TV.

Television networks that lack WNBA contracts considered paying Iowa star Caitlin Clark to stay in school for another year to continue being NCAA ratings gold. It did not happen this time, but why not in the future? If she gets to allocate a bunch of wealth, she should be taking bids and capturing a good portion of that. If she is worth more in college than in the pros, and it sounds like she was, then we should keep her in college, but of course pay her accordingly.

Regulated American sportsbooks offer in-game potentially highly correlated parlays, they make mistakes with the math, then when the parlays hit they often try to void the ticket and either not pay at all or renegotiate the odds, citing ‘obvious errors.’

In case it needs saying, this is extremely unacceptable behavior, completely out of line. Yes you can void for an obvious error, but the time to do that is before the game begins, and when we say obvious it better be obvious. Your correlation calculator being out of whack? That’s not it.

New Jersey scores points by having none of it. Although it seems they are what one might call overzealous?

Rebuck said he saw Europe’s lax standard for palps and decided to impose much stiffer criteria in New Jersey. Soon after his state legalized sports betting, in 2018, an operator mistakenly listed the Kentucky men’s basketball team as a double-digit underdog instead of a heavy favorite.

After investigating, New Jersey ordered the operator to pay up because Kentucky’s overmatched opponent still had a theoretical chance of winning. On another occasion, an operator was allowed to void bets on a field goal in a football game being longer than two yards because a field goal must be longer than 10 yards and is almost always at least 18.

A flipped large favorite is the canonical valid example of an obvious error that a book is permitted to void. The standard of ‘you cannot void a bet that could possibly lose’ is not a reasonable one. If the game is already over and then you try to void it, it is admittedly tricky, since it means you are ‘taking a free shot’ at the customer, and the magnitude required goes up a lot.

If the game hadn’t started yet or much progressed since the wager, then not letting them void the ticket is absurd. That’s what I would emphasize here. If the game hasn’t started and market odds haven’t moved a ton, I’m sympathetic. If you sit on it in case the house wins anyway? Not so much. If you only realize after the game? Well, tough.

These parlays were indeed big mistakes. The customer here estimates they had a 1% chance of winning and were being paid 200:1 (+20000). That’s over a 100% return in expectation, and you can do this in a lot of distinct games, so it will add up fast and is a huge mistake. It is not however an obvious one.
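To make the arithmetic concrete, here is a minimal sketch of the expected return on such a ticket. The 1% win chance is the customer’s own estimate from above, not a measured figure, and the function names are hypothetical ones chosen for illustration.

```python
def american_to_payout(american_odds: int) -> float:
    """Convert American odds to profit per 1 unit staked (e.g. +20000 -> 200.0)."""
    if american_odds > 0:
        return american_odds / 100
    return 100 / abs(american_odds)


def expected_return(win_prob: float, payout: float) -> float:
    """Expected profit per unit staked: win `payout` with win_prob, else lose the stake."""
    return win_prob * payout - (1.0 - win_prob)


# Assumed inputs: the customer's estimated 1% win chance at +20000 (200:1).
payout = american_to_payout(20000)
ev = expected_return(0.01, payout)
break_even_prob = 1.0 / (payout + 1.0)  # win chance at which the bet is fair

print(f"Expected return per unit staked: {ev:.0%}")
print(f"Break-even win probability: {break_even_prob:.2%}")
```

Under those assumptions the bet returns roughly 101% of the stake in expectation, and the price would only be fair if the true win chance were about half a percent. Being off by a factor of two on a correlated long shot is a large pricing error, but not one a bettor or a book would spot at a glance.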

Even in other states that don’t protect the player so much, the customer for a voided parlay has various tools to fight back and get paid. My model is that customers who can perform class and work the system, who have a decent case and are willing to fight, generally win their fights in such situations.

North Carolina governor Roy Cooper cuts an ad for sports betting? This seems pretty not okay?

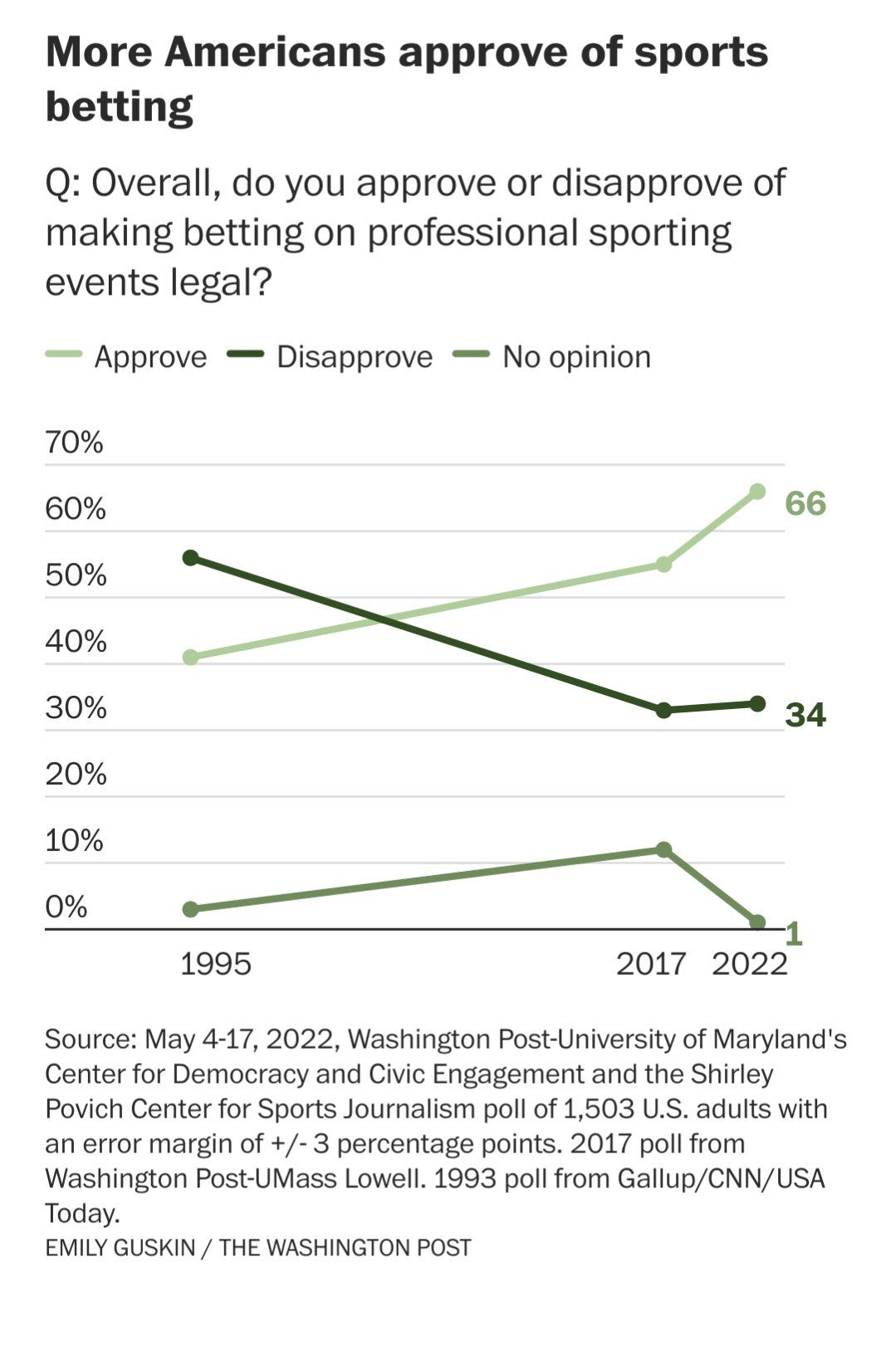

Haralabos Voulgaris: How many years till Sports Gambling addiction becomes a massive problem in the USA. The number of college aged (and younger) kids obsessed with gambling is way too high, and nearly every platform and league are promoting the *f out of gambling to their fans.

And Yes I get the irony, I was always a mass proponent of legalized gambling in the USA but not this version where its this much in your face. Its too much and it shows no signs of abating.

TBD: Universities are pimping out their students for 30 dollar referral fees. It should be a scandal.

I feel similarly. Sports betting is great in moderation, when used wisely, or when played as a game of skill. It can enhance the game rather than take away from it. I love that ESPN will now tell me the odds. It is a great antidote to dumb punditry. Discussion can enhance your understanding, and also train your mind on things like probability and focusing on what matters.

It can also ruin lives, and focus on it can warp and crowd out everything else, both for an individual and for sports in general. Making it available, in expensive form, on everyone’s phone, with constant advertising, is a serious problem. Having everyone with any platform, reach or authority sell out to push this onto young people (and others) is highly toxic.

In some ways it feels like this peaked a year or two ago, but the problem has not gone away.

I think the right model is largely that of cigarettes, and many others are coming around to this as well.

bomani: this is a terrible medium to discuss gambling because it’s unserious place fueled by unserious people. but a serious reckoning is coming and i fear we’ll all be too compromised to properly address it. but it’s guaranteed to happen.

Kyle Boddy: Being a former professional gambler across a lot of domains naturally makes most think that I like the legalization push we’ve seen.

But mostly, I don’t.

Spend years of your life in casinos and you may agree.

People should be free to gamble, no question about it. But I doubt I’ll ever get over it being intertwined with sports broadcasts and league announcements. It’s weird, unsettling, and vaguely predatory.

Just look at how much states are making off addicts and desperate people betting insane parlays. Honestly, that’s the only thing I have a very hard time accepting: The glorification of 20-30% holds on parlays that are beyond ridiculous.

Marketing the inevitable statistical outlier wins of the 12-leg garbage parlay or teaser should likely be made illegal. Possibly the bet itself should be banned, but I’d not go that far yet.

Seth Burn: I agree with a lot of this. My thought is that banning advertising, the same as we did with cigarettes, might be the best we can do.

Advertising and ways of ‘pushing the product’ generally need to get restricted, so you cannot link it to any given brand or offering. It should be taxed. It should ideally come with some modest social stigma. But we of course must accept that it is something people are going to do and that telling people probabilities is legal. We may also want some restrictions on gambling on phones to avoid people falling into bad patterns.

The phone thing is a big deal. Ryann Hassett notes that America used to think that gambling needed to be physically difficult to reach in order to protect us, and now we all have it on our phones and no one seems to be objecting all that much, while we still retain our restrictions on physical gambling locations.

I believe the distinction between sports betting (and I would add poker) on one hand, and other gambling on the other, is a lot of this. People instinctively understand that easy access to slot machines in particular is deeply dangerous and destructive, as is any kind of luck-based video machine with immediate feedback loops. Whereas things that are tied to events and other people and skill-based decisions are still dangerous, but different, less scary and with more upside.

A few states have legalized virtual slots on phones. I believe they will regret this, and the damage will snowball with time. We can never fully prevent gambling, but we want to not make it easy.

Worst and most shameful of all, of course, is the lottery.

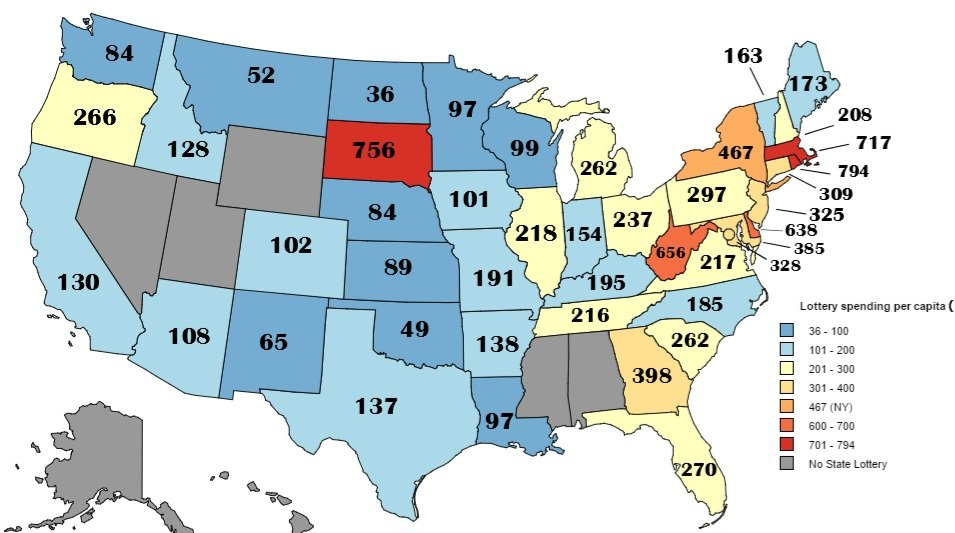

Amazing Maps: Average yearly spending on lottery tickets by state

There is no adjustment for income, so this is even worse for West Virginia and South Dakota than it looks. West Virginia has a median income of $26,187, so that’s over 2% of income. Nevada, of course, has its own issues.

I won’t spoil this, but it is awesome, and wow I cannot believe this was allowed to happen. There is a third trick that I thought was going to be involved to make all this work, but turns out it wasn’t, the other competitors made the errors on their own.

I won’t spoil this either, it is table tennis.

A fun thing: Infinite Craft. Combine two things, get new thing.

People ask me occasionally for my list of tier 1 games, those that one Must Play. Alas, I do not have a complete list assembled. I can, however, say that Persona 3 (I played the FES version) is definitely on that list, although it had some clear issues with repetitiveness in its dungeons.

There is now a remake, Persona 3 Reload, which brings it up to ‘Persona 5 standards.’ You don’t get to tell everyone they Must Play more than one such 75-hour odyssey, so I only get to pick one. This is the one.

Persona 3 Reload has a core story and message people need to really understand. It was important even before concerns about AI, and it works without them, but now the game is very clearly also about AI, our reaction to AI and existential risk from AI, more so than almost any other story that is about AI. That has almost nothing to do with Aigis (the game’s actual AI).

Of course I am a huge Shin Megami Tensei fan, so adjust for that in terms of the gameplay. Of the others I have played, I would then likely put Devil Survivor, Persona 5 and SMT IV, in Tier 2, and I’d have Devil Survivor 2, Persona 4, SMT III and SMT IV: Apocalypse in Tier 3.

Persona 5 has the edge in terms of the gameplay, as it has demon negotiations, custom designed dungeons and monthly opposition that ties into characters, better tension on how to spend your time, better quality of the individual social link stories, and other signs that it learned from the previous two iterations. But the story in Persona 3 wins hands down, and that is more important than all that other stuff.

Persona 4 and Persona 5 are both attempts to get that same message across, retelling the core story using different characters and metaphors. Persona 4 is the lesser work that I am still very happy I played. Persona 5 would be Tier 1 if I didn’t instead choose Persona 3.

I am not going to have this opportunity, but playing 3 over again made me want to make Persona 6. The central plot is obvious, you make everything straight up text.

SMT V was in progress, I had finally gotten around to resuming it, and then suddenly they announced SMT V: Vengeance is coming in a few months as a superior edition, so I switched to Persona 3 Reload for now.

You can play the games in any order, except for SMT IV before SMT IV: Apocalypse. The mainline games are more hardcore and grindy, so take that into account.

To be clear, if you do not enjoy the core gameplay of grinding in these games, you mostly will not like them. But I think Persona 3 is pretty great. Memento Mori.

I finished Octopath Traveler 2, and can put it solidly into Tier 2. If you like what this game is doing it is a great time. The whole is greater than the sum of its parts. I’d have two notes. Mechanically, I ended up with a highly effective strategy that worked on essentially everything, allowing me to do enough damage in one go to bypass the scary final phases of most bosses. It felt like the game made this too easy on several levels, and I wonder to what extent other strategies that I missed are close to as good.

In terms of story, it worked great and the whole thing was fair and many things came together nicely, except that there were some things that felt underexplored or like loose ends. It also illustrated some unique things that games can do with story that wouldn’t work in a passive medium. A television adaptation of this wouldn’t capture it at all. You could use the basic plots and still have something but it would be totally different.