Discord faces backlash over age checks after data breach exposed 70,000 IDs

Discord to block adult content unless users verify ages with selfies or IDs.

Discord is facing backlash after announcing that all users will soon be required to verify ages to access adult content by sharing video selfies or uploading government IDs.

According to Discord, it’s relying on AI technology that verifies age on the user’s device, either by evaluating a user’s facial structure or by comparing a selfie to a government ID. Although government IDs will be checked off-device, the selfie data will never leave the user’s device, Discord emphasized. Both forms of data will be promptly deleted after the user’s age is estimated.

In a blog post, Discord confirmed that “a phased global rollout” would begin in “early March,” at which point all users globally would be defaulted to “teen-appropriate” experiences.

To unblur sensitive media or access age-restricted channels, the majority of users will likely have to undergo Discord’s age estimation process. Most users will only need to verify their ages once, Discord said, but some users “may be asked to use multiple methods, if more information is needed to assign an age group.”

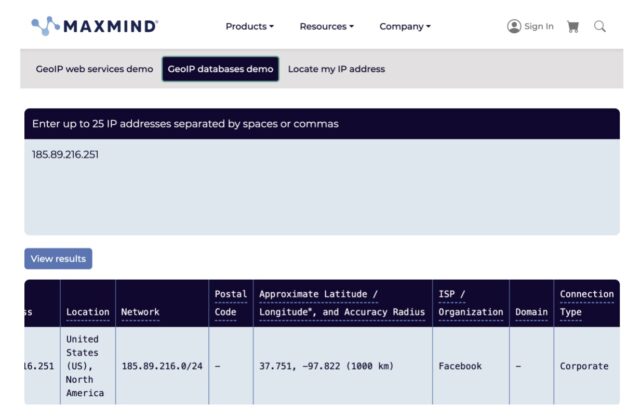

On social media, alarmed Discord users protested the move, doubting whether Discord could be trusted with their most sensitive information after Discord age verification data was recently breached. In October, hackers stole government IDs of 70,000 Discord users from a third-party service that Discord previously trusted to verify ages in the United Kingdom and Australia.

At that time, Discord told users that the hackers were hoping to use the stolen data to “extort a financial ransom from Discord.” In October, Ars Senior Security Editor Dan Goodin joined others warning that “the best advice for people who have submitted IDs to Discord or any other service is to assume they have been or soon will be stolen by hackers and put up for sale or used in extortion scams.”

For bad actors, Discord will likely only become a bigger target as more sensitive information is collected worldwide, users now fear.

It’s no surprise then that hundreds of Discord users on Reddit slammed the decision to expand age verification globally shortly after The Verge broke the news. On a PC gaming subreddit discussing alternative apps for gamers, one user wrote, “Hell, Discord has already had one ID breach, why the fuck would anyone verify on it after that?”

“This is how Discord dies,” another user declared. “Seriously, uploading any kind of government ID to a 3rd party company is just asking for identity theft on a global scale.”

Many users seem just as sketched out about sharing face scans. On the Discord app subreddit, some users vowed to never submit selfies or IDs, fearing that breaches may be inevitable and suspecting Discord of downplaying privacy risks while allowing data harvesting.

Who can access Discord age-check data?

Discord’s system is supposed to make sure that only users have access to their age-check data, which Discord said would never leave their phones.

The company is hoping to convince users that it has tightened security after the breach by partnering with k-ID, an increasingly popular age-check service provider that’s also used by social platforms from Meta and Snap.

However, self-described Discord users on Reddit aren’t so sure, with some going the extra step of picking apart k-ID’s privacy policy to understand exactly how age is verified without data ever leaving the device.

“The wording is pretty unclear and inconsistent even if you dig down to the k-ID privacy policy,” one Redditor speculated. “Seems that ID scans are uploaded to k-ID servers, they delete them, but they also mention using ‘trusted 3rd parties’ for verification, who may or may not delete it.” That user seemingly gave up on finding reassurances in either company’s privacy policies, noting that “everywhere along the chain it reads like ‘we don’t collect your data, we forward it to someone else… .’”

Discord did not immediately respond to Ars’ requests to comment directly on how age checks work without data leaving the device.

To better understand user concerns, Ars reviewed the privacy policies, noting that k-ID said its “facial age estimation” tool is provided by a Swiss company called Privately.

“We don’t actually see any faces that are processed via this solution,” k-ID’s policy said.

That part does seem vague, since Privately isn’t explicitly included in the “we” in that statement. Similarly, further down, the policy more clearly states that “neither k-ID nor its service providers collect any biometric information from users when they interact with the solution. k-ID only receives and stores the outcome of the age check process.” In that section, “service providers” seems to refer to partners like Discord, which integrate k-ID’s age checks, rather than third parties like Privately that actually conduct the age check.

Asked for comment, a k-ID spokesperson told Ars that “the Facial Age Estimation technology runs entirely on the user’s device in real time when they are performing the verification. That means there is no video or image transmitted, and the estimation happens locally. The only data to leave the device is a pass/fail of the age threshold which is what Discord receives (and some performance metrics that contain no personal data).”

K-ID’s spokesperson told Ars that no third parties store personal data shared during age checks.

“k-ID, does not receive personal data from Discord when performing age-assurance,” k-ID’s spokesperson said. “This is an intentional design choice grounded in data protection and data minimisation principles. There is no storage of personal data by k-ID or any third parties, regardless of the age assurance method used.”

Privately’s website offers a little more information on how on-device age estimation works, while likely providing more reassurances that data won’t leave devices.

Privately’s services were designed to minimize data collection and prioritize anonymity to comply with the European Union’s General Data Protection Regulation, Privately noted. “No user biometric or personal data is captured or transmitted,” Privately’s website said, while bragging that “our secret sauce is our ability to run very performant models on the user device or user browser to implement a privacy-centric solution.”

The company’s privacy policy offers slightly more detail, noting that the company avoids relying on the cloud while running AI models on local devices.

“Our technology is built using on-device edge-AI that facilitates data minimization so as to maximise user privacy and data protection,” the privacy policy said. “The machine learning based technology that we use (for age estimation and safeguarding) processes user’s data on their own devices, thereby avoiding the need for us or for our partners to export user’s personal data onto any form of cloud services.”

Additionally, the policy said, “our technology solutions are built to operate mostly on user devices and to avoid sending any of the user’s personal data to any form of cloud service. For this we use specially adapted machine learning models that can be either deployed or downloaded on the user’s device. This avoids the need to transmit and retain user data outside the user device in order to provide the service.”

Finally, Privately explained that it also employs a “double blind” implementation to avoid knowing the origin of age estimation requests. That supposedly ensures that Privately only knows the result of age checks and cannot connect the result to a user on a specific platform.
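The on-device flow that k-ID and Privately describe can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not either company's actual implementation: the names `AgeCheckResult`, `run_age_check`, and the `estimator` callable are hypothetical, and the 18-year threshold is assumed. The point it shows is that the image and the estimated age stay inside the local function, and only a pass/fail outcome is serialized for sending.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable

ADULT_THRESHOLD = 18  # assumed threshold, for illustration only


@dataclass(frozen=True)
class AgeCheckResult:
    """Hypothetical payload: the only data meant to leave the device."""
    passed: bool


def run_age_check(
    frame: bytes,
    estimator: Callable[[bytes], float],
    threshold: int = ADULT_THRESHOLD,
) -> str:
    """Estimate age locally and return only a serialized pass/fail outcome.

    `estimator` stands in for a local machine learning model; the raw frame
    and the numeric age estimate are never included in the returned payload.
    """
    estimated_age = estimator(frame)
    result = AgeCheckResult(passed=estimated_age >= threshold)
    return json.dumps(asdict(result))


if __name__ == "__main__":
    # A stub estimator replaces the real on-device model in this sketch.
    print(run_age_check(b"fake-image-bytes", lambda _frame: 25.0))
```

In this sketch, a server receiving the payload would see a single boolean and nothing about the face or the estimated age. Whether a real deployment matches that description is exactly what the Reddit users quoted above are questioning.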

Discord expects to lose users

Some Discord users may never be asked to verify their ages, even if they try to access age-restricted content. Savannah Badalich, Discord’s global head of product policy, told The Verge that Discord “is also rolling out an age inference model that analyzes metadata, like the types of games a user plays, their activity on Discord, and behavioral signals like signs of working hours or the amount of time they spend on Discord.”

“If we have a high confidence that they are an adult, they will not have to go through the other age verification flows,” Badalich said.

Badalich confirmed that Discord is bracing for some users to leave Discord over the update but suggested that “we’ll find other ways to bring users back.”

On Reddit, Discord users complained that age verification is easy to bypass, forcing adults to share sensitive information without keeping kids away from harmful content. In Australia, where Discord’s policy first rolled out, some kids claimed that Discord never even tried to estimate their ages, while others found it easy to trick k-ID by using AI videos or altering their appearances to look older. A teen girl relied on fake eyelashes to do the trick, while one 13-year-old boy was estimated to be over 30 years old after scrunching his face to seem more wrinkled.

Badalich told The Verge that Discord doesn’t expect the tools to work perfectly but acts quickly to block workarounds, like teens using Death Stranding’s photo mode to skirt age gates. However, questions remain about the accuracy of Discord’s age estimation model in assessing minors’ ages, in particular.

It may be noteworthy that Privately only claims that its technology is “proven to be accurate to within 1.3 years, for 18-20-year-old faces, regardless of a customer’s gender or ethnicity.” But experts told Ars last year that flawed age-verification technology still frequently struggles to distinguish minors from adults, especially when differentiating between a 17- and 18-year-old, for example.

Perhaps notably, Discord’s prior scandal occurred after hackers stole government IDs that users shared as part of the appeal process in order to fix an incorrect age estimation. Appeals could remain the most vulnerable part of this process, The Verge’s report indicated. Badalich confirmed that a third-party vendor would be reviewing appeals, with the only reassurance for users seemingly that IDs shared during appeals “are deleted quickly—in most cases, immediately after age confirmation.”

On Reddit, Discord fans awaiting big changes remain upset. A disgruntled Discord user suggested that “corporations like Facebook and Discord, will implement easily passable, cheapest possible, bare minimum under the law verification, to cover their ass from a lawsuit,” while forcing users to trust that their age-check data is secure.

Another user joked that she’d be more willing to trust that selfies never leave a user’s device if Discord were “willing to pay millions to every user” whose “scan does leave a device.”

This story was updated on February 9 to clarify that government IDs are checked off-device.
