Reddit sues Anthropic over AI scraping that retained users’ deleted posts

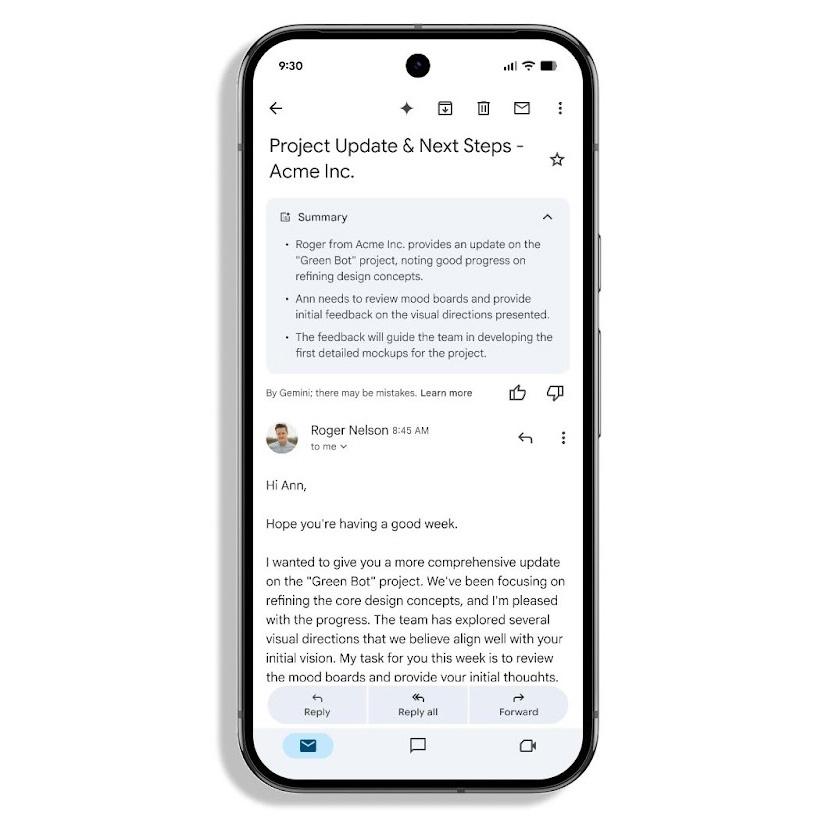

Of particular note, Reddit pointed out that Anthropic’s Claude models will help power Amazon’s revamped Alexa, following about $8 billion in Amazon investments in the AI company since 2023.

“By commercially licensing Claude for use in several of Amazon’s commercial offerings, Anthropic reaps significant profit from a technology borne of Reddit content,” Reddit alleged, and “at the expense of Reddit.” Anthropic’s unauthorized scraping also burdens Reddit’s servers, threatening to degrade the user experience and costing Reddit additional damages, Reddit alleged.

To rectify alleged harms, Reddit is hoping a jury will award not just damages covering Reddit’s alleged losses but also punitive damages due to Anthropic’s alleged conduct that is “willful, malicious, and undertaken with conscious disregard for Reddit’s contractual obligations to its users and the privacy rights of those users.”

Without an injunction, Reddit users have “no way of knowing” if Anthropic scraped their data, Reddit alleged. They also are “left to wonder whether any content they deleted after Claude began training on Reddit data nevertheless remains available to Anthropic and the likely tens of millions (and possibly growing) of Claude users,” Reddit said.

In a statement provided to Ars, Anthropic’s spokesperson confirmed that the AI company plans to fight Reddit’s claims.

“We disagree with Reddit’s claims and will defend ourselves vigorously,” Anthropic’s spokesperson said.

Amazon declined to comment. Reddit did not immediately respond to Ars’ request for comment. But Reddit’s chief legal officer, Ben Lee, told The New York Times that Reddit “will not tolerate profit-seeking entities like Anthropic commercially exploiting Reddit content for billions of dollars without any return for redditors or respect for their privacy.”

“AI companies should not be allowed to scrape information and content from people without clear limitations on how they can use that data,” Lee said. “Licensing agreements enable us to enforce meaningful protections for our users, including the right to delete your content, user privacy protections, and preventing users from being spammed using this content.”
