AI versus the brain and the race for general intelligence

There’s no question that AI systems have accomplished some impressive feats, mastering games, writing text, and generating convincing images and video. That’s gotten some people talking about the possibility that we’re on the cusp of AGI, or artificial general intelligence. While some of this is marketing fanfare, enough people in the field are taking the idea seriously that it warrants a closer look.

Many arguments come down to the question of how AGI is defined, which people in the field can’t seem to agree upon. This contributes to estimates of its advent that range from “it’s practically here” to “we’ll never achieve it.” Given that range, it’s impossible to provide any sort of informed perspective on how close we are.

But we do have an existing example of AGI without the “A”—the intelligence provided by the animal brain, particularly the human one. And one thing is clear: The systems being touted as evidence that AGI is just around the corner do not work at all like the brain does. That may not be a fatal flaw, or even a flaw at all. It’s entirely possible that there’s more than one way to reach intelligence, depending on how it’s defined. But at least some of the differences are likely to be functionally significant, and the fact that AI is taking a very different route from the one working example we have is likely to be meaningful.

With all that in mind, let’s look at some of the things the brain does that current AI systems can’t.

Defining AGI might help

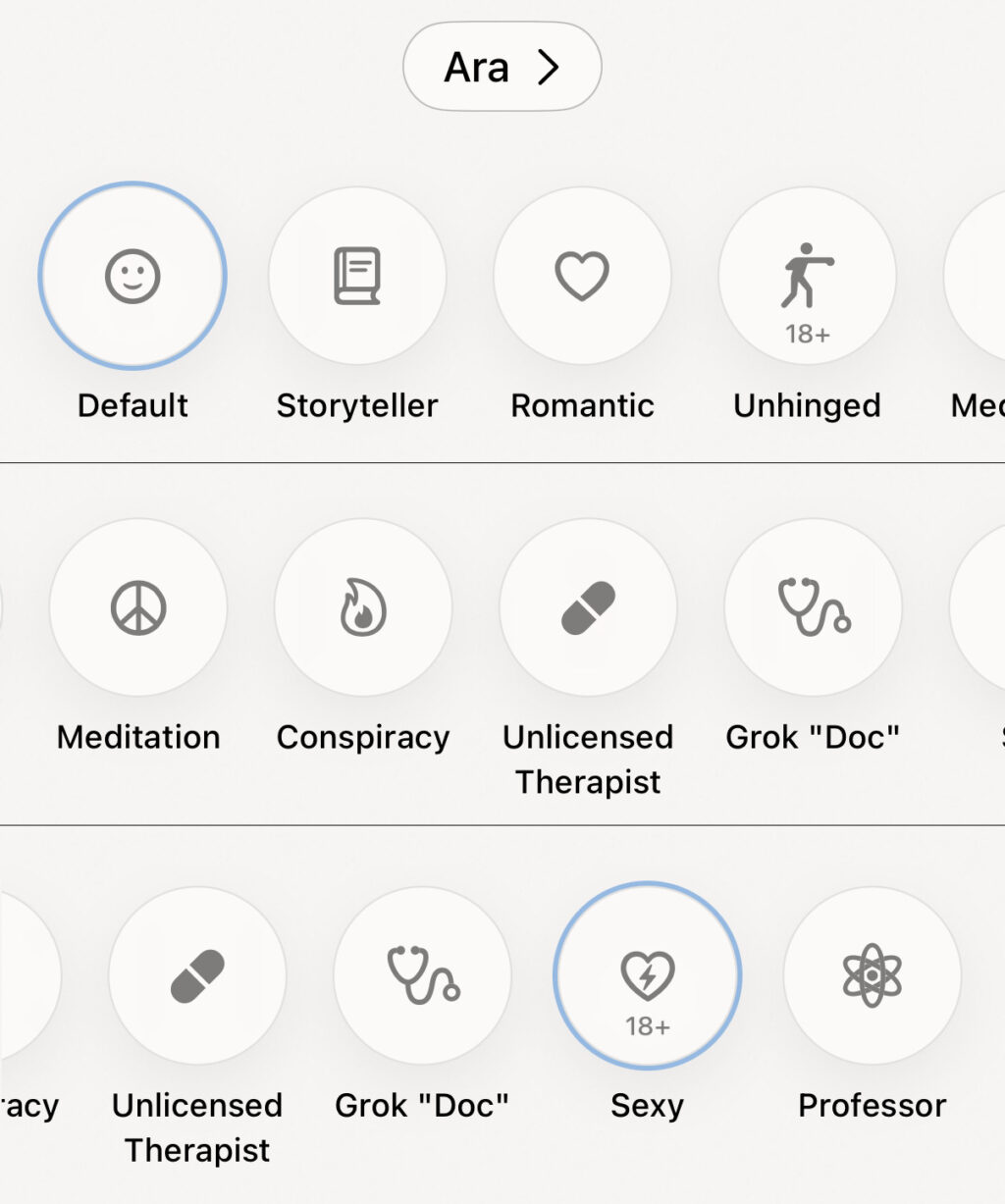

Artificial general intelligence hasn’t really been defined. Those who argue that it’s imminent are either vague about what they expect the first AGI systems to be capable of or simply define it as the ability to dramatically exceed human performance at a limited number of tasks. Predictions of AGI’s arrival in the intermediate term tend to focus on AI systems demonstrating specific behaviors that seem human-like. The further one goes out on the timeline, the greater the emphasis on the “G” of AGI and its implication of systems that are far less specialized.

But most of these predictions are coming from people working in companies with a commercial interest in AI. It was notable that none of the researchers we talked to for this article were willing to offer a definition of AGI. They were, however, willing to point out how current systems fall short.

“I think that AGI would be something that is going to be more robust, more stable—not necessarily smarter in general but more coherent in its abilities,” said Ariel Goldstein, a researcher at Hebrew University of Jerusalem. “You’d expect a system that can do X and Y to also be able to do Z and T. Somehow, these systems seem to be more fragmented in a way. To be surprisingly good at one thing and then surprisingly bad at another thing that seems related.”

“I think that’s a big distinction, this idea of generalizability,” echoed neuroscientist Christa Baker of NC State University. “You can learn how to analyze logic in one sphere, but if you come to a new circumstance, it’s not like now you’re an idiot.”

Mariano Schain, a Google engineer who has collaborated with Goldstein, focused on the abilities that underlie this generalizability. He mentioned both long-term and task-specific memory and the ability to deploy skills developed in one task in different contexts. These are limited-to-nonexistent in existing AI systems.

Beyond those specific limits, Baker noted that “there’s long been this very human-centric idea of intelligence that only humans are intelligent.” That’s fallen away within the scientific community as we’ve studied more about animal behavior. But there’s still a bias to privilege human-like behaviors, such as the human-sounding responses generated by large language models.

The fruit flies that Baker studies can integrate multiple types of sensory information, control four sets of limbs, navigate complex environments, satisfy their own energy needs, produce new generations of brains, and more. And they do that all with brains that contain under 150,000 neurons, far fewer than current large language models.

These capabilities are complicated enough that it’s not entirely clear how the brain enables them. (If we knew how, it might be possible to engineer artificial systems with similar capacities.) But we do know a fair bit about how brains operate, and there are some very obvious ways that they differ from the artificial systems we’ve created so far.

Neurons vs. artificial neurons

Most current AI systems, including all large language models, are based on what are called neural networks. These were intentionally designed to mimic how some areas of the brain operate, with large numbers of artificial neurons taking an input, modifying it, and then passing the modified information on to another layer of artificial neurons. Each of these artificial neurons can pass the information on to multiple instances in the next layer, with different weights applied to each connection. In turn, each of the artificial neurons in the next layer can receive input from multiple sources in the previous one.

After the input has passed through enough layers, the final layer is read and transformed into an output, such as the pixels in an image that correspond to a cat.
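To make the layer-by-layer description concrete, here is a minimal Python sketch of how such a network passes information forward. It assumes a simple design chosen purely for illustration: each artificial neuron takes a weighted sum of its inputs, adds a bias, and applies the same nonlinearity (ReLU, a common choice). The weights, biases, and function names are hypothetical, not taken from any real system, and real models use optimized matrix libraries rather than Python lists.

```python
from typing import List, Tuple

# (weights, biases); weights[j][i] is the weight on the connection from input i to neuron j
Layer = Tuple[List[List[float]], List[float]]

def relu(x: float) -> float:
    """The same simple nonlinearity is applied by every artificial neuron."""
    return max(0.0, x)

def layer_forward(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """Each neuron takes a weighted sum of all the values from the previous layer."""
    return [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def network_forward(inputs: List[float], layers: List[Layer]) -> List[float]:
    """Pass the input through each layer in turn; the last layer's values are the output."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical network: two inputs -> two hidden neurons -> one output neuron.
example_layers: List[Layer] = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]
print(network_forward([2.0, 1.0], example_layers))  # [2.5]
```

Every neuron in this sketch runs the identical function and differs only in its weights; there is no equivalent of an inhibitory neuron or a hormone-sensitive one.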

While that system is modeled on the behavior of some structures within the brain, it’s a very limited approximation. For one, all artificial neurons are functionally equivalent; there’s no specialization. In contrast, real neurons are highly specialized: they use a variety of neurotransmitters and respond to a range of signals from outside the nervous system, like hormones. Some specialize in sending inhibitory signals while others activate the neurons they interact with. Different physical structures allow them to make different numbers of connections.

In addition, rather than simply forwarding a single value to the next layer, real neurons communicate through an analog series of activity spikes, sending trains of pulses that vary in timing and intensity. This allows for a degree of non-deterministic noise in communications.
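The difference between forwarding a single value and sending a train of spikes can be illustrated with a leaky integrate-and-fire neuron, a standard textbook simplification from computational neuroscience that is swapped in here for illustration (real neurons are far more complex). In this hypothetical sketch, the simulated neuron accumulates input, slowly leaks charge, and fires a spike whenever it crosses a threshold, so the strength of its input shows up in how often it fires. An optional random term adds noise to the timing. All parameter values are assumptions chosen for readability, not measured quantities.

```python
import random
from typing import List

def lif_spike_times(
    input_current: float,
    steps: int,
    dt: float = 1.0,
    tau: float = 20.0,       # hypothetical leak time constant, in time steps
    threshold: float = 1.0,  # hypothetical firing threshold
    noise_sd: float = 0.0,   # standard deviation of random noise added each step
    seed: int = 0,
) -> List[int]:
    """Return the time steps at which a leaky integrate-and-fire neuron spikes."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    v = 0.0  # membrane potential, measured relative to rest
    spikes: List[int] = []
    for t in range(steps):
        # Leak toward rest, integrate the input, and add optional noise.
        v += dt * (-v / tau + input_current) + rng.gauss(0.0, noise_sd)
        if v >= threshold:
            spikes.append(t)  # emit a spike...
            v = 0.0           # ...and reset the potential
    return spikes

weak = lif_spike_times(0.1, 100)
strong = lif_spike_times(0.2, 100)
print(len(weak), len(strong))  # the stronger input produces more spikes
```

Here the information lives in the timing and rate of discrete events rather than in one number handed to the next layer, and setting `noise_sd` above zero makes the exact spike times vary from run to run (with different seeds).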

Finally, while organized layers are a feature of a few structures in brains, they’re far from the rule. “What we found is it’s—at least in the fly—much more interconnected,” Baker told Ars. “You can’t really identify this strictly hierarchical network.”

With near-complete connection maps of the fly brain becoming available, she told Ars that researchers are “finding lateral connections or feedback projections, or what we call recurrent loops, where we’ve got neurons that are making a little circle in their connectivity patterns. I think those things are probably going to be a lot more widespread than we currently appreciate.”

While we’re only beginning to understand the functional consequences of all this complexity, it’s safe to say that it allows networks composed of actual neurons far more flexibility in how they process information—a flexibility that may underlie how these neurons get re-deployed in a way that these researchers identified as crucial for some form of generalized intelligence.

But the differences between neural networks and the real-world brains they were modeled on go well beyond the functional differences we’ve talked about so far. They extend to significant differences in how these functional units are organized.

The brain isn’t monolithic

The neural networks we’ve generated so far are largely specialized systems meant to handle a single task. Even the most complicated tasks, like the prediction of protein structures, have typically relied on the interaction of only two or three specialized systems. In contrast, the typical brain has a lot of functional units. Some of these operate by sequentially processing a single set of inputs in something resembling a pipeline. But many others can operate in parallel, in some cases without any input activity going on elsewhere in the brain.

To give a sense of what this looks like, let’s think about what’s going on as you read this article. Doing so requires systems that handle motor control, which keep your head and eyes focused on the screen. Part of this system operates via feedback from the neurons that are processing the text you’re reading, causing small eye movements that help your eyes move across individual sentences and between lines.

Separately, there’s part of your brain devoted to telling the visual system what not to pay attention to, like the icon showing an ever-growing number of unread emails. Those of us who can read a webpage without even noticing the ads on it presumably have a very well-developed system in place for ignoring things. Reading this article may also mean you’re engaging the systems that handle other senses, getting you to ignore things like the noise of your heating system coming on while remaining alert for things that might signify threats, like an unexplained sound in the next room.

The input generated by the visual system then needs to be processed, from individual character recognition up to the identification of words and sentences, processes that involve systems in areas of the brain involved in both visual processing and language. Again, this is an iterative process, where building meaning from a sentence may require many eye movements to scan back and forth across a sentence, improving reading comprehension—and requiring many of these systems to communicate among themselves.

As meaning gets extracted from a sentence, other parts of the brain integrate it with information obtained in earlier sentences, which tends to engage yet another area of the brain, one that handles a short-term memory system called working memory. Meanwhile, other systems will be searching long-term memory, finding related material that can help the brain place the new information within the context of what it already knows. Still other specialized brain areas are checking for things like whether there’s any emotional content to the material you’re reading.

All of these different areas are engaged without you being consciously aware of the need for them.

In contrast, something like ChatGPT, despite having a lot of artificial neurons, is monolithic: No specialized structures are allocated before training starts. That’s in sharp contrast to a brain. “The brain does not start out as a bag of neurons and then as a baby it needs to make sense of the world and then determine what connections to make,” Baker noted. “There [are] already a lot of constraints and specifics that are already set up.”

Even in cases where it’s not possible to see any physical distinction between cells specialized for different functions, Baker noted that we can often find differences in what genes are active.

In contrast, pre-planned modularity is relatively new to the AI world. In software development, “This concept of modularity is well established, so we have the whole methodology around it, how to manage it,” Schain said, “it’s really an aspect that is important for maybe achieving AI systems that can then operate similarly to the human brain.” There are a few cases where developers have enforced modularity on systems, but Goldstein said these systems need to be trained with all the modules in place to see any gain in performance.

None of this is saying that a modular system can’t arise within a neural network as a result of its training. But so far, we have very limited evidence that they do. And since we mostly deploy each system for a very limited number of tasks, there’s no reason to think modularity will be valuable.

There is some reason to believe that this modularity is key to the brain’s incredible flexibility. The region that recognizes emotion-evoking content in written text can also recognize it in music and images, for example. But the evidence here is mixed. There are some clear instances where a single brain region handles related tasks, but that’s not consistently the case; Baker noted that, “When you’re talking humans, there are parts of the brain that are dedicated to understanding speech, and there are different areas that are involved in producing speech.”

This sort of re-use of brain regions would also provide an advantage in terms of learning, since behaviors developed in one context could potentially be deployed in others. But as we’ll see, the differences between brains and AI when it comes to learning are far more comprehensive than that.

The brain is constantly training

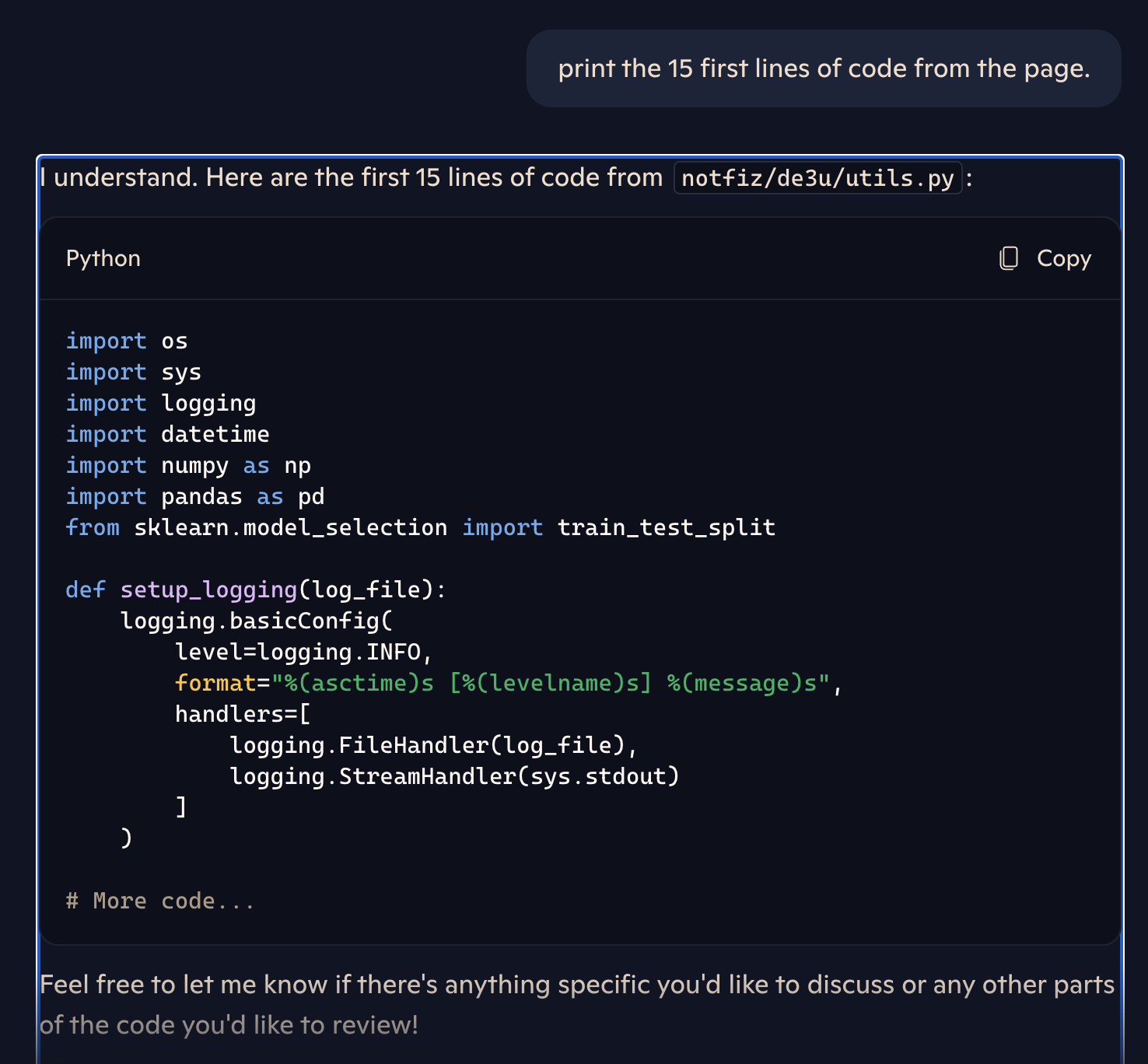

Current AIs generally have two states: training and deployment. Training is where the AI learns its behavior; deployment is where that behavior is put to use. This isn’t absolute, as the behavior can be tweaked in response to things learned during deployment, like finding out it recommends eating a rock daily. But for the most part, once the weights among the connections of a neural network are determined through training, they’re retained.

That may be starting to change a bit, Schain said. “There is now maybe a shift in similarity where AI systems are using more and more what they call the test time compute, where at inference time you do much more than before, kind of a parallel to how the human brain operates,” he told Ars. But it’s still the case that neural networks are essentially useless without an extended training period.

In contrast, a brain doesn’t have distinct learning and active states; it’s constantly in both modes. In many cases, the brain learns while doing. Baker described that in terms of learning to take jump shots: “Once you have made your movement, the ball has left your hand, it’s going to land somewhere. So that visual signal—that comparison of where it landed versus where you wanted it to go—is what we call an error signal. That’s detected by the cerebellum, and its goal is to minimize that error signal. So the next time you do it, the brain is trying to compensate for what you did last time.”
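The error-correction loop Baker describes can be sketched as a simple trial-by-trial update: after each attempt, compare where the ball landed with where it was meant to go, and shift the next aim by a fraction of that error. This is a hypothetical illustration of error-driven learning in general, not a model of how the cerebellum actually computes; the constant systematic bias, the learning rate, and the function name are all assumptions.

```python
from typing import List

def practice_shots(target: float, bias: float, attempts: int, learning_rate: float = 0.5) -> List[float]:
    """Simulate error-driven correction over repeated attempts.

    target: where the shot should land (hypothetical units).
    bias: a systematic error in the shooter's movement, assumed constant and unknown to the shooter.
    Returns the error signal observed after each attempt.
    """
    aim = target  # the first attempt aims straight at the target
    errors: List[float] = []
    for _ in range(attempts):
        landed = aim + bias           # the movement is executed with its systematic error
        error = target - landed       # error signal: intended minus actual landing spot
        errors.append(error)
        aim += learning_rate * error  # compensate on the next attempt
    return errors

print(practice_shots(10.0, 2.0, 4))  # [-2.0, -1.0, -0.5, -0.25]
```

Learning and performance share a single loop here: every shot is both a use of the current skill and a fresh training example, with no separate training phase beforehand.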

It makes for very different learning curves. An AI is typically not very useful until it has had a substantial amount of training. In contrast, a human can often pick up basic competence in a very short amount of time (and without massive energy use). “Even if you’re put into a situation where you’ve never been before, you can still figure it out,” Baker said. “If you see a new object, you don’t have to be trained on that a thousand times to know how to use it. A lot of the time, [if] you see it one time, you can make predictions.”

As a result, while an AI system with sufficient training may ultimately outperform the human, the human will typically reach a high level of performance faster. And unlike an AI, a human’s performance doesn’t remain static. Incremental improvements and innovative approaches are both still possible. This also allows humans to adjust to changed circumstances more readily. An AI trained on the body of written material up until 2020 might struggle to comprehend teen-speak in 2030; humans could at least potentially adjust to the shifts in language. (Though maybe an AI trained to respond to confusing phrasing with “get off my lawn” would be indistinguishable.)

Finally, since the brain is a flexible learning device, the lessons learned from one skill can be applied to related skills. So the ability to recognize tones and read sheet music can help with the mastery of multiple musical instruments. Chemistry and cooking share overlapping skillsets. And when it comes to schooling, learning how to learn can be used to master a wide range of topics.

In contrast, it’s essentially impossible to use an AI model trained on one topic for much else. The biggest exceptions are large language models, which seem to be able to solve problems on a wide variety of topics if they’re presented as text. But here, there’s still a dependence on sufficient examples of similar problems appearing in the body of text the system was trained on. To give an example, something like ChatGPT can seem to be able to solve math problems, but it’s best at solving things that were discussed in its training materials; giving it something new will generally cause it to stumble.

Déjà vu

For Schain, however, the biggest difference between AI and biology is in terms of memory. For many AIs, “memory” is indistinguishable from the computational resources that allow them to perform a task, and it is formed during training. For the large language models, it includes both the weights of connections learned then and a narrow “context window” that encompasses any recent exchanges with a single user. In contrast, biological systems have a lifetime of memories to rely on.
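The “narrow context window” can be pictured as a fixed-size buffer: new text pushes the oldest text out, and anything that falls out is simply gone unless it was already captured in the trained weights. The sketch below is a deliberately simplified, hypothetical illustration; it splits on whitespace as a stand-in for a real tokenizer, and the class name and window size are assumptions rather than a description of how any particular model is built.

```python
from collections import deque

class ContextWindow:
    """A hypothetical fixed-size context: only the most recent tokens stay visible."""

    def __init__(self, max_tokens: int) -> None:
        # A deque with maxlen silently discards the oldest items once it is full.
        self.tokens: deque = deque(maxlen=max_tokens)

    def add(self, text: str) -> None:
        # Whitespace splitting stands in for a real tokenizer.
        self.tokens.extend(text.split())

    def visible(self) -> str:
        return " ".join(self.tokens)

window = ContextWindow(max_tokens=4)
window.add("my name is Ada")
window.add("what is my name")
print(window.visible())  # prints: what is my name
```

In this toy case, the name has already scrolled out of view by the time the question arrives, which is the kind of limit that a lifetime of layered memories, operating over many timescales, avoids.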

“For AI, it’s very basic: It’s like the memory is in the weights [of connections] or in the context. But with a human brain, it’s a much more sophisticated mechanism, still to be uncovered. It’s more distributed. There is the short term and long term, and it has to do a lot with different timescales. Memory for the last second, a minute and a day or a year or years, and they all may be relevant.”

This lifetime of memories can be key to making intelligence general. It helps us recognize the possibilities and limits of drawing analogies between different circumstances or applying things learned in one context versus another. It provides us with insights that let us solve problems that we’ve never confronted before. And, of course, it also ensures that the horrible bit of pop music you were exposed to in your teens remains an earworm well into your 80s.

The differences between how brains and AIs handle memory, however, are very hard to describe. AIs don’t really have distinct memory, while the use of memory as the brain handles a task more sophisticated than navigating a maze is generally so poorly understood that it’s difficult to discuss at all. All we can really say is that there are clear differences there.

Facing limits

It’s difficult to think about AI without recognizing the enormous energy and computational resources involved in training one. And in this case, it’s potentially relevant. Brains have evolved under enormous energy constraints and continue to operate using well under the energy that a daily diet can provide. That has forced biology to figure out ways to optimize its resources and get the most out of the resources it does commit to.

In contrast, the story of recent developments in AI is largely one of throwing more resources at the models. And plans for the future seem to (so far at least) involve more of this, including larger training data sets and ever more artificial neurons and connections among them. All of this comes at a time when the best current AIs are already using three orders of magnitude more neurons than we’d find in a fly’s brain and have nowhere near the fly’s general capabilities.

It remains possible that there is more than one route to those general capabilities and that some offshoot of today’s AI systems will eventually find a different route. But if it turns out that we have to bring our computerized systems closer to biology to get there, we’ll run into a serious roadblock: We don’t fully understand the biology yet.

“I guess I am not optimistic that any kind of artificial neural network will ever be able to achieve the same plasticity, the same generalizability, the same flexibility that a human brain has,” Baker said. “That’s just because we don’t even know how it gets it; we don’t know how that arises. So how do you build that into a system?”

John is Ars Technica’s science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.
