Home Microsoft 365 plans use Copilot AI features as pretext for a price hike

Microsoft hasn’t said how long this “limited time” offer will last, but presumably it will run for only a year or two to help ease the transition between the old pricing and the new pricing. New subscribers won’t be offered the option to pay for the Classic plans.

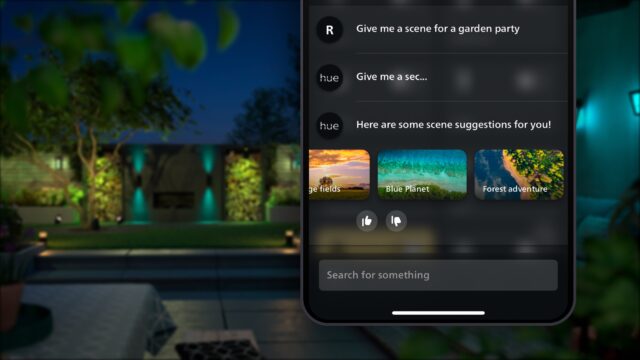

Subscribers on the Personal and Family plans can’t use Copilot indiscriminately; they get 60 AI credits per month to use across all the Office apps. Those credits can also be used to generate images or text in Windows apps like Designer, Paint, and Notepad. It’s not clear how these credits will stack with the 15 credits that Microsoft offers for free for apps like Designer, or the 50 credits per month Microsoft is handing out for Image Cocreator in Paint.

Those who want unlimited usage and access to the newest AI models are still asked to pay $20 per month for a Copilot Pro subscription.

As Microsoft notes, this is the first price increase it has ever implemented for the personal Microsoft 365 subscriptions in the US, which have stayed at the same levels since being introduced as Office 365 over a decade ago. Pricing for the business plans and pricing in other countries have increased before. Pricing for Office Home 2024 ($150) and Office Home & Business 2024 ($250), which can’t access Copilot or other Microsoft 365 features, is also the same as it was before.
