The Super Bowl’s best and wackiest AI commercials

Superb Owl News —

It’s nothing like “crypto bowl” in 2022, but AI made a notable splash during the big game.

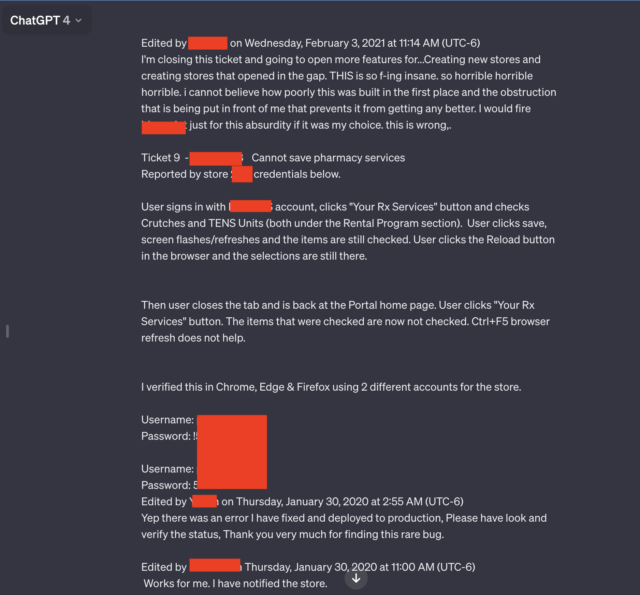

A still image from BodyArmor’s 2024 “Field of Fake” Super Bowl commercial.

BodyArmor

Heavily hyped tech products have a history of appearing in Super Bowl commercials, including the Apple Macintosh in 1984, dot-com companies in 2000, and cryptocurrency firms in 2022. In 2024, the hot tech in town is artificial intelligence, and several companies showed AI-related ads at Super Bowl LVIII. Here’s a rundown of notable appearances that range from serious to wacky.

Microsoft Copilot

Microsoft Game Day Commercial | Copilot: Your everyday AI companion.

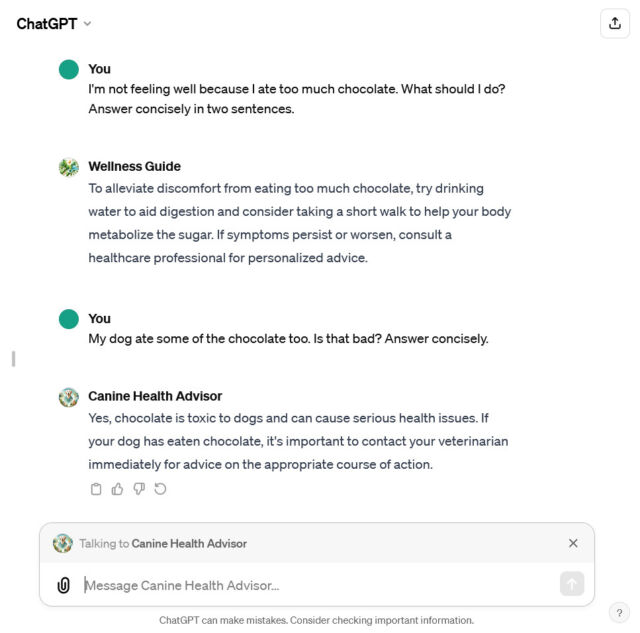

It’s been a year since Microsoft launched the AI assistant Microsoft Copilot (as “Bing Chat”), and Microsoft is leaning heavily into its AI-assistant technology, which is powered by large language models from OpenAI. In Copilot’s first-ever Super Bowl commercial, we see scenes of various people with defiant text overlaid on the screen: “They say I will never open my own business or get my degree. They say I will never make my movie or build something. They say I’m too old to learn something new. Too young to change the world. But I say watch me.”

Then the commercial shows Copilot creating solutions to some of these problems, with prompts like, “Generate storyboard images for the dragon scene in my script,” “Write code for my 3d open world game,” “Quiz me in organic chemistry,” and “Design a sign for my classic truck repair garage Mike’s.”

Of course, since generative AI is an unfinished technology, many of these solutions are more aspirational than practical at the moment. On Bluesky, writer Ed Zitron put Microsoft’s truck repair logo to the test and saw results that weren’t nearly as polished as those seen in the commercial. On X, others have criticized and poked fun at the “3d open world game” generation prompt, which is a complex task that would take far more than a single, simple prompt to produce useful code.

Google Pixel 8 “Guided Frame” feature

Javier in Frame | Google Pixel SB Commercial 2024.

Instead of focusing on generative aspects of AI, Google’s commercial showed off a feature called “Guided Frame” on the Pixel 8 phone that uses machine vision technology and a computer voice to help people with blindness or low vision to take photos by centering the frame on a face or multiple faces. Guided Frame debuted in 2022 in conjunction with the Google Pixel 7.

The commercial tells the story of a person named Javier, who says, “For many people with blindness or low vision, there hasn’t always been an easy way to capture daily life.” We see a simulated blurry first-person view of Javier holding a smartphone and hear a computer-synthesized voice describing what the AI model sees, directing the person to center on a face to snap various photos and selfies.

Considering the controversies that generative AI currently generates (pun intended), it’s refreshing to see a positive application of AI technology used as an accessibility feature. Relatedly, an app called Be My Eyes (powered by OpenAI’s GPT-4V) also aims to help low-vision people interact with the world.

Despicable Me 4

Despicable Me 4 – Minion Intelligence (Big Game Spot).

So far, we’ve covered a couple of attempts to show AI-powered products as positive features. Elsewhere in Super Bowl ads, companies weren’t as generous about the technology. In an ad for the film Despicable Me 4, we see two Minions creating a series of terribly disfigured AI-generated still images reminiscent of Stable Diffusion 1.4 from 2022. There are three-legged people doing yoga, a painting of Steve Carell and Will Ferrell as Elizabethan gentlemen, a handshake with too many fingers, people eating spaghetti in a weird way, and a pair of people riding dachshunds in a race.

The images are paired with an earnest voiceover that says, “Artificial intelligence is changing the way we see the world, showing us what we never thought possible, transforming the way we do business, and bringing family and friends closer together. With artificial intelligence, the future is in good hands.” When the voiceover ends, the camera pans out to show hundreds of Minions generating similarly twisted images on computers.

Speaking of image synthesis at the Super Bowl, some viewers mistook a Christian commercial created by He Gets Us, LLC for an AI-generated one, likely due to its gaudy technicolor visuals. With the benefit of a YouTube replay and the ability to look at details, the “He washed feet” commercial doesn’t appear AI-generated to us, but it goes to show how the concept of image synthesis has begun to cast doubt on human-made creations.
