Elon Musk’s X allows China-based propaganda banned on other platforms

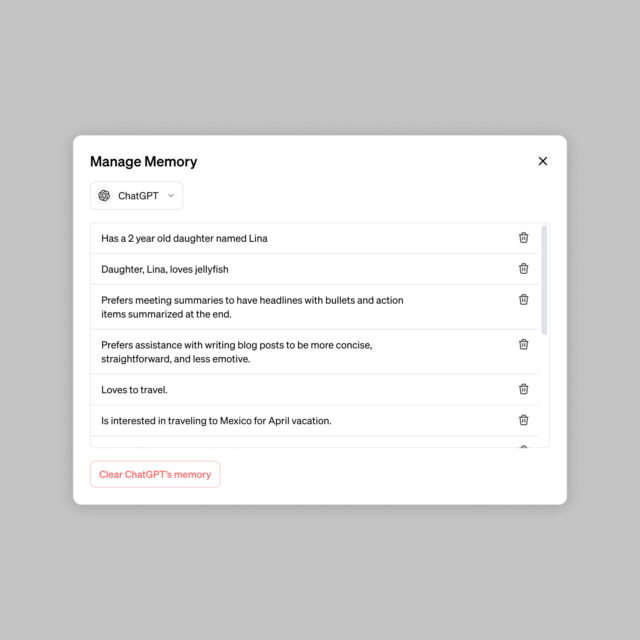

Rinse-wash-repeat.

X accused of overlooking propaganda flagged by Meta and criminal prosecutors.

Lax content moderation on X (aka Twitter) has disrupted coordinated efforts between social media companies and law enforcement to tamp down on “propaganda accounts controlled by foreign entities aiming to influence US politics,” The Washington Post reported.

Now propaganda is “flourishing” on X, The Post said, while other social media companies are stuck in endless cycles, watching some of the propaganda that they block proliferate on X, then inevitably spread back to their platforms.

Meta, Google, and then-Twitter began coordinating takedown efforts with law enforcement and disinformation researchers after Russian-backed influence campaigns manipulated their platforms in hopes of swaying the 2016 US presidential election.

The next year, all three companies promised Congress that they would work tirelessly to stop Russian-backed propaganda from spreading on their platforms. The companies created explicit election misinformation policies and began meeting biweekly to compare notes on propaganda networks each platform uncovered, according to The Post’s interviews with anonymous sources who participated in these meetings.

However, after Elon Musk purchased Twitter and rebranded the company as X, his company withdrew from the alliance in May 2023.

Sources told The Post that the last X meeting attendee was Irish intelligence expert Aaron Rodericks—who was allegedly disciplined for liking an X post calling Musk “a dipshit.” Rodericks was subsequently laid off when Musk dismissed the entire election integrity team last September, and after that, X apparently ditched the biweekly meeting entirely and “just kind of disappeared,” a source told The Post.

In 2023, for example, Meta flagged 150 “artificial influence accounts” identified on its platform, of which “136 were still present on X as of Thursday evening,” according to The Post’s analysis. X’s seeming oversight extends to all but eight of the 123 “deceptive China-based campaigns” connected to accounts that Meta flagged last May, August, and December, The Post reported.

The Post’s report also provided an exclusive analysis from the Stanford Internet Observatory (SIO), which found that 86 propaganda accounts that Meta flagged last November “are still active on X.”

The majority of these accounts—81—were China-based accounts posing as Americans, SIO reported. These accounts frequently ripped photos from Americans’ LinkedIn profiles, then changed the real Americans’ names while posting about both China and US politics, as well as people often trending on X, such as Musk and Joe Biden.

Meta has warned that China-based influence campaigns are “multiplying,” The Post noted, while X’s standards remain seemingly too relaxed. Even accounts linked to criminal investigations remain active on X. One “account that is accused of being run by the Chinese Ministry of Public Security,” The Post reported, remains on X despite its posts being cited by US prosecutors in a criminal complaint.

Prosecutors connected that account to “dozens” of X accounts attempting to “shape public perceptions” about the Chinese Communist Party, the Chinese government, and other world leaders. The accounts also comment on hot-button topics like the fentanyl problem or police brutality, seemingly to convey “a sense of dismay over the state of America without any clear partisan bent,” Elise Thomas, an analyst for a London nonprofit called the Institute for Strategic Dialogue, told The Post.

Some X accounts flagged by The Post had more than 1 million followers. Five have paid X for verification, suggesting that their disinformation campaigns—targeting hashtags to confound discourse on US politics—are seemingly being boosted by X.

SIO technical research manager Renée DiResta criticized X’s decision to stop coordinating with other platforms.

“The presence of these accounts reinforces the fact that state actors continue to try to influence US politics by masquerading as media and fellow Americans,” DiResta told The Post. “Ahead of the 2022 midterms, researchers and platform integrity teams were collaborating to disrupt foreign influence efforts. That collaboration seems to have ground to a halt, Twitter does not seem to be addressing even networks identified by its peers, and that’s not great.”

Musk shut down X’s election integrity team because he claimed that the team was actually “undermining” election integrity. But analysts are bracing for floods of misinformation to sway 2024 elections, as some major platforms have removed election misinformation policies just as rapid advances in AI technologies have made misinformation spread via text, images, audio, and video harder for the average person to detect.

In one prominent example, a fake robocall relied on AI voice technology to pose as Biden and tell Democrats not to vote. That incident seemingly pushed the Federal Trade Commission on Thursday to propose penalizing AI impersonation.

It seems apparent that propaganda accounts from foreign entities on X will use every tool available to get eyes on their content, perhaps expecting Musk’s platform to be the slowest to police them. According to The Post, some of the X accounts spreading propaganda are using what appear to be AI-generated images of Biden and Donald Trump to garner tens of thousands of views on posts.

It’s possible that X will start tightening up on content moderation as elections draw closer. Yesterday, X joined Amazon, Google, Meta, OpenAI, TikTok, and other Big Tech companies in signing an agreement to fight “deceptive use of AI” during 2024 elections. Among the top goals identified in the “AI Elections accord” are identifying where propaganda originates, detecting how propaganda spreads across platforms, and “undertaking collective efforts to evaluate and learn from the experiences and outcomes of dealing” with propaganda.
