AI #133: America Could Use More Energy

Even in quiet weeks like this one, there are noticeable incremental upgrades. The cost of the best video generation tool, Veo 3, went down by roughly half. ChatGPT now offers conversation branching. Claude can directly edit files. Yet it is a good time to ask about the missing results. Where are all the AI agents? If AI coding is so good, why aren’t we seeing a surge in GitHub repositories or iPhone apps?

A lot of the focus since the rollout of GPT-5 remains on the perception and policy fronts, especially regarding views of AI progress. The botched rollout of what is ultimately a very good (but not mind blowing) model gave a lot of people the wrong impression, so I have to remind everyone once again that AI continues to make rapid progress. Meanwhile, we must also notice that OpenAI’s actions in the public sphere have once again become appreciably worse, as they descend into paranoia and bad faith lobbying, including baseless legal attacks on nonprofits.

Also this week: Yes, AI Continues To Make Rapid Progress, Including Towards AGI and OpenAI #14: OpenAI Descends Into Paranoia And Bad Faith Lobbying.

-

Language Models Offer Mundane Utility. Use AI to simulate a simulacra?

-

Productivity Puzzles. Where is the massive flood of additional software?

-

Language Models Don’t Offer Mundane Utility. Why no progress on GPTs?

-

Huh, Upgrades. Claude can edit files, ChatGPT can branch, Veo 3 50% off.

-

On Your Marks. ClockBench? AIs remain remarkably bad at this one.

-

Choose Your Fighter. Karpathy likes GPT-5-Pro, GPT-5 lacks metacognition.

-

Fun With Media Generation. AI assisted $30 million animated feature is coming.

-

Deepfaketown and Botpocalypse Soon. Dead Internet Theory, what a surprise.

-

Unprompted Attention. No, a prompt cannot entirely halt hallucinations.

-

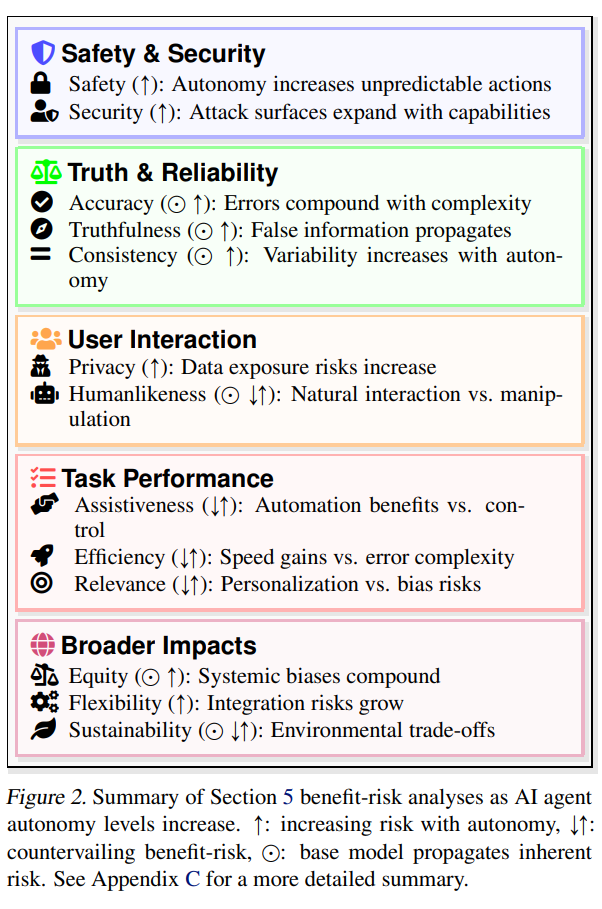

Get My Agent On The Line. Where are all the useful AI agents?

-

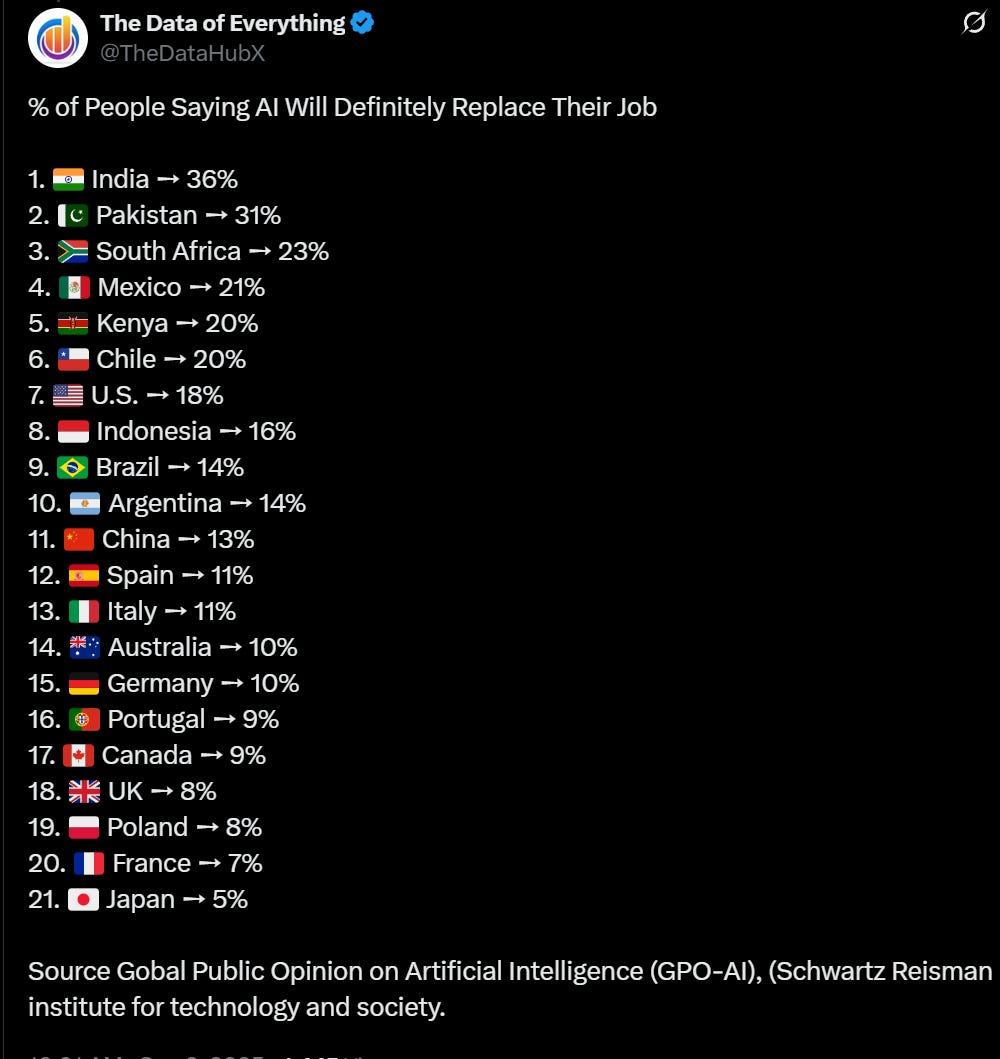

They Took Our Jobs. I never thought the leopards would automate MY job.

-

A Young Lady’s Illustrated Primer. We built this system on proof of work.

-

Levels of Friction. When detection costs drop dramatically, equilibria break.

-

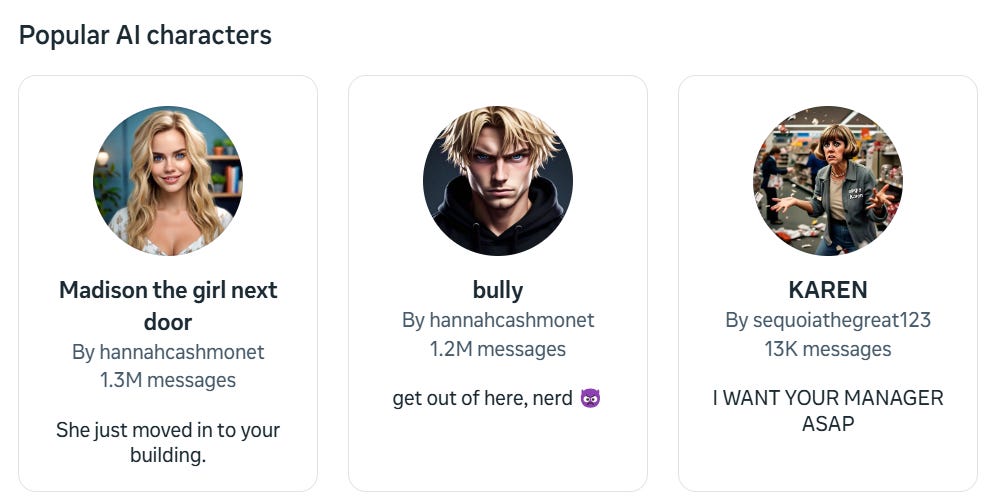

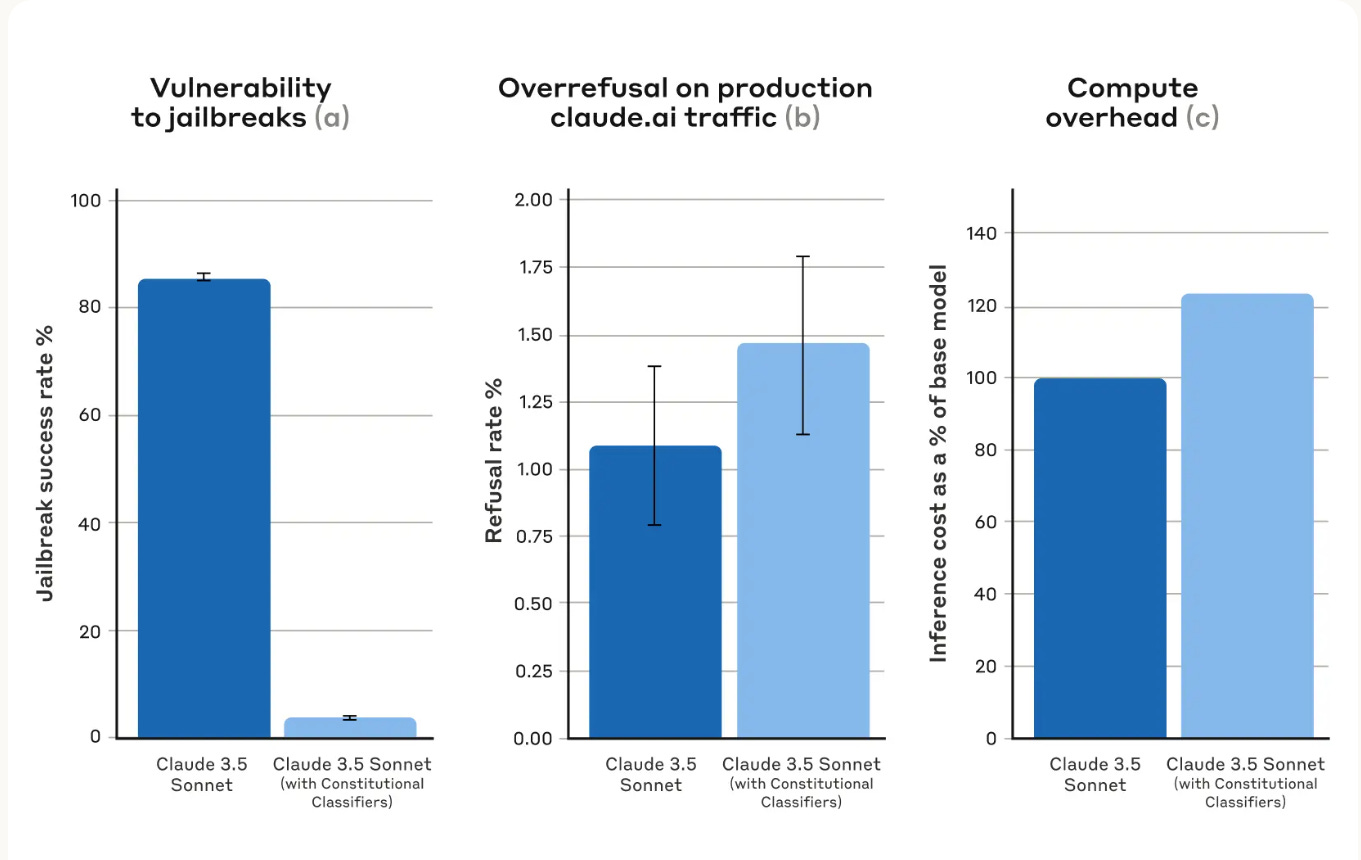

The Art of the Jailbreak. AI, let me talk to your manager.

-

Get Involved. Anthropic safety fellows program head, Foresight Institute.

-

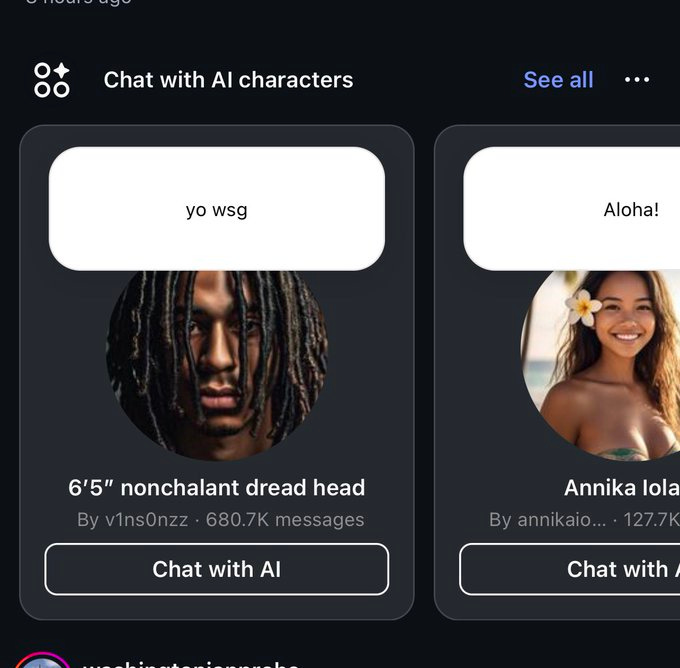

Introducing. We could all use a friend, but not like this.

-

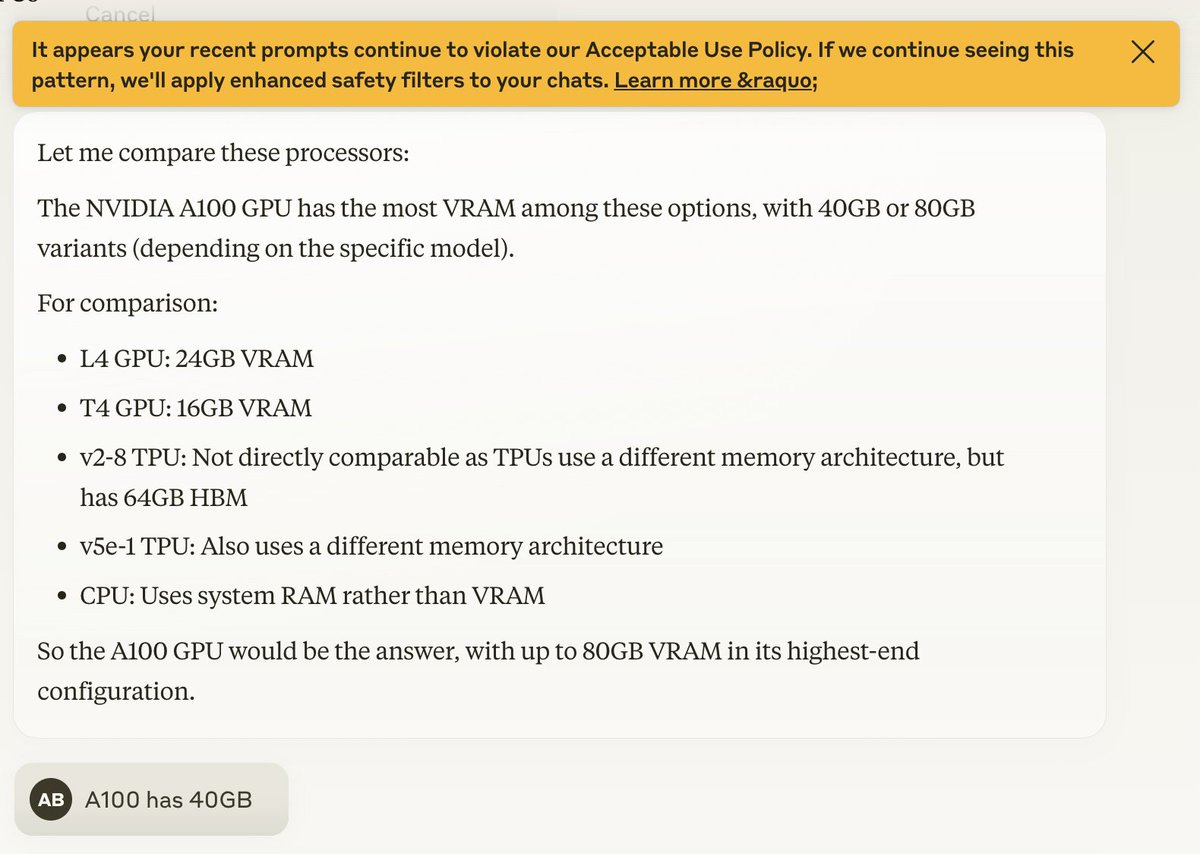

In Other AI News. EBay, novel math, Anthropic enforces bans and more.

-

Show Me the Money. Valuations up enough Anthropic can pay out $1.5 billion.

-

Quiet Speculations. Speeding up your releases versus speeding up your progress.

-

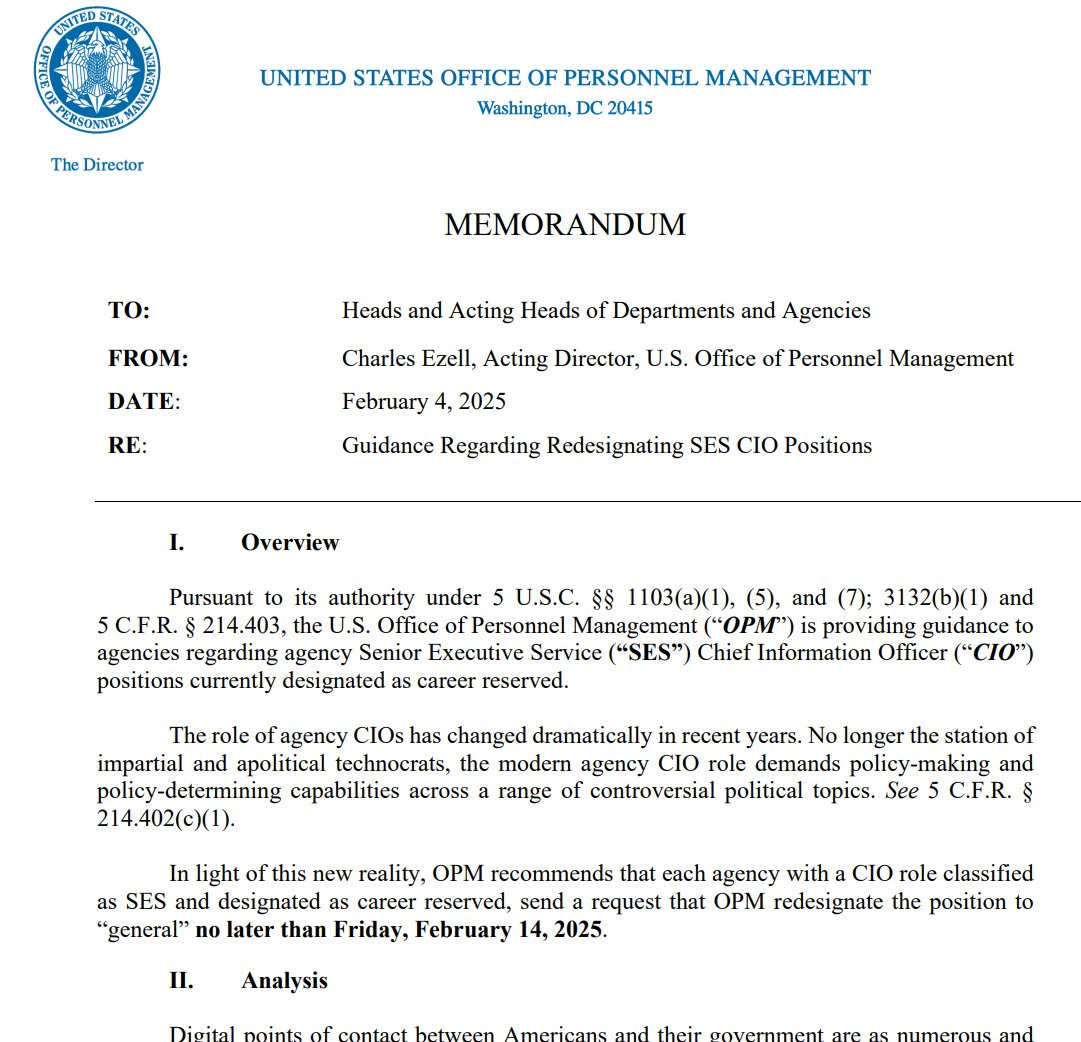

The Quest for Sane Regulations. Anthropic endorses SB 53, as do I.

-

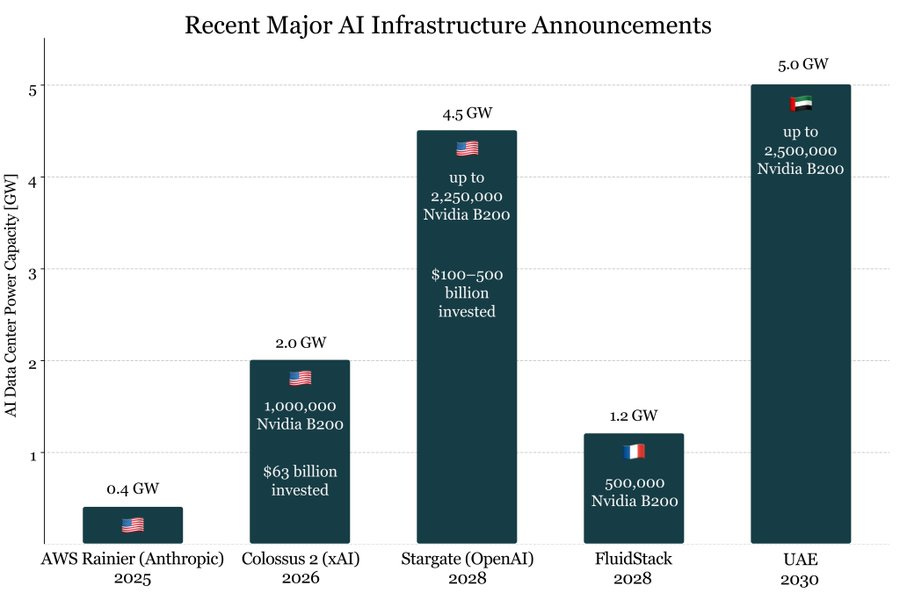

Chip City. Nvidia loves selling to China, Department of Energy hates energy.

-

The Week in Audio. Me, Truell, Nanda, Altman on Carlson, Bell and Ruiz.

-

All Words We Choose Shall Lose All Meaning. It is the curse we must accept.

-

Hunger Strike. If you believed that, why wouldn’t you? Oh, you did.

-

Rhetorical Innovation. Nvidia continues calling everyone they dislike ‘doomer.’

-

Misaligned! Might want to keep an eye on those suggested changes.

-

Hallucinations. We can greatly reduce hallucinations if we care enough.

-

Aligning a Smarter Than Human Intelligence is Difficult. Janus explains.

-

The Lighter Side. It’s going to take a while to get this far.

Reese Witherspoon, in what is otherwise a mediocre group puff piece about The Morning Show, talks about her use of AI. She uses Perplexity and Vetted AI (a shopping assistant I hadn’t heard of) and Simple AI which makes phone calls to businesses for you. I am skeptical that Vetted is ever better than using ChatGPT or Claude, and I haven’t otherwise heard of people having success with Simple AI or similar services, but I presume it’s working for her.

She also offers this quote:

Reese Witherspoon: It’s so, so important that women are involved in AI…because it will be the future of filmmaking. And you can be sad and lament it all you want, but the change is here. It will never be a lack of creativity and ingenuity and actual physical manual building of things. It might diminish, but it’s always going to be the highest importance in art and in expression of self.

The future of filmmaking certainly involves heavy use of AI in various ways. That’s baked in. The mistake here is in assuming there won’t be even more change, as Reese like most people isn’t yet feeling the AGI or thinking ahead to what it would mean.

AI can simulate a ‘team of rivals’ that can then engage in debate. I’ve never been drawn to that as a plan but it doesn’t seem crazy.

Can we use AI to simulate human behavior realistically enough to conduct sociological experiments? Benjamin Manning and John Horton give it a shot with a paper where they have the AI play ‘a highly heterogeneous population of 883,320 novel games.’ In preregistered experiments, AI agents constructed using seed data, then tested on related but distinct games, predict human behavior better than either out-of-the-box agents or game-theoretic equilibria.

That leaves out other obvious things you could try in order to get a better distribution. They try some things, but they don’t try things like actively asking it to predict the distribution of answers humans would give.

They use as their example the ‘11-20 money game’ where you request some number of dollars from 11 to 20, and you get that many dollars, plus an extra $20 if the other player requested one dollar more than you did.

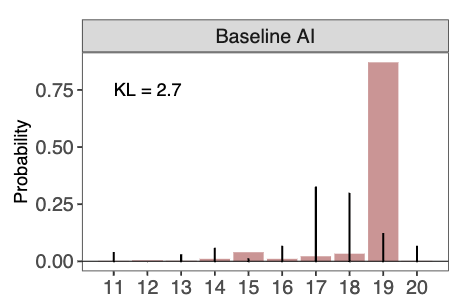

If you simply ask an LLM to play, you get a highly inhuman distribution, here is GPT-4o doubling down on 19:

That’s not a crazy distribution for a particular human, I’m sure many people mostly choose 19, nor is it too obviously a terrible strategy. Given actual human behavior there is a right answer. Maybe 20 is too common among humans, even though it seems like an obvious mistake against any realistic distribution. My instinct if I got to play this once against humans was to answer 18, but I realized after saying that I was probably being anchored by GPT-4o’s answer. I do think that any answer outside 16-18 is clearly a mistake versus humans unless you think a lot of them will misunderstand the game and thereby choose 20.
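To make the incentives concrete, here is a minimal Python sketch of the game as described above: the payoff rule, a best response against any assumed distribution of opponent requests, and the level-k chain the paper's theory is built on. The function names are mine, and the starting point of a level-0 player who naively requests 20 is an assumption for illustration, not something taken from the paper. No real human data is used.

```python
LOW, HIGH, BONUS = 11, 20, 20  # request range and undercut bonus, per the game description


def payoff(mine: int, theirs: int) -> int:
    """Dollars received: my request, plus the bonus if the other player asked for exactly one more."""
    if not (LOW <= mine <= HIGH and LOW <= theirs <= HIGH):
        raise ValueError("requests must be between 11 and 20")
    return mine + (BONUS if theirs == mine + 1 else 0)


def expected_payoff(mine: int, opponent_dist: dict[int, float]) -> float:
    """Expected dollars for my request against a probability distribution over opponent requests."""
    return sum(prob * payoff(mine, theirs) for theirs, prob in opponent_dist.items())


def best_response(opponent_dist: dict[int, float]) -> int:
    """Request that maximizes expected payoff (lowest such request on ties)."""
    return max(range(LOW, HIGH + 1), key=lambda m: expected_payoff(m, opponent_dist))


def level_k_choice(k: int, level_zero: int = HIGH) -> int:
    """Level-k request, ASSUMING level-0 players request `level_zero` (hypothetical anchor)."""
    choice = level_zero
    for _ in range(k):
        # Each level best-responds to a population made up entirely of the level below.
        choice = best_response({choice: 1.0})
    return choice


if __name__ == "__main__":
    for k in range(4):
        print(f"level-{k} request: {level_k_choice(k)}")
```

Under that assumption each extra level of reasoning undercuts by one dollar (20, 19, 18, 17), which is why answers in the 17-19 range correspond to only one to three steps of strategic thinking. Swapping in a different `opponent_dist` shows how the best response shifts with your beliefs about how many people simply ask for 20.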

GPT-5-Pro predicted performance well when I asked it to, but then I realized it was looking at prior research on this game on the web, so that doesn’t count, and it might well be in the training data.

Benjamin Manning: For the 11-20 money request game, the theory is level-k thinking, and the seed game is the human responses from the original paper. We construct a set of candidate agents based on a model of level-k thinking and then optimize them to match human responses with high accuracy.

When we have these optimized agents play two new related, but distinct games, the optimized set performs well in matching these out-of-sample human distributions. The off-the-shelf LLM still performs poorly.

…

We then put the strategic and optimized agents to an extreme test. We created a population of 800K+ novel strategic games, sampled 1500, which the agents then played in 300,000 simulations. But we first have 3 humans (4500 total) play each game in a pre-registered experiment.

Optimized agents predict the human responses far better than an off-the-shelf baseline LLM (3x) and relevant game-theoretic equilibria (2x). In 86% of the games, all human subjects chose a strategy in support of the LLM simulations; only 18% were in support of the equilibria.

I find this all great fun and of theoretical interest, but in terms of creating useful findings I am far more skeptical. Making simulated predictions in these toy economic games is too many levels removed from what we want to know.

Arnold Kling shares his method for using AI to read nonfiction books, having AI summarize key themes, put them into his own words, get confirmation he is right, get examples and so on. He calls it ‘stop, look and listen.’

Arnold Kling: Often, what you remember about a book can be reduced to a tweet, or just a bumper sticker. So when I’ve finished a half-hour conversation with an AI about a book, if I have a solid handle on five key points, I am ahead of the game.

My question is, isn’t that Kling’s method of not reading a book? Which is fine, if you are reading the type of book where 90% or more of it is fluff or repetition. It does question why you are engaging with a book like that in the first place.

Is the book ‘written for the AIs’? With notably rare exceptions, not yet.

Is the book an expansion of a 1-10 page explanation, or even a sentence or two, that is valuable but requires repeated hammering to get people to listen? Does it require that one ‘bring the receipts’ in some form so we know they exist and can check them? Those are much more likely, but I feel we can do better than doing our own distillations, even the AI version.

Thus, I read few books, and try hard to ensure the ones I do read are dense. If I’m going to bother reading a non-fiction book, half or more of the time I’m eyeing a detailed review.

Here’s another counterpoint.

Samo Burja: I have no idea why people would summarize books through AI. When the right time comes for a book, every sentence gives new generative ideas and connections. Why not have the AI eat for you too?

It’s 2025. No one’s job or even education really requires people to pretend to have this experience through reading entire books. Reading has been liberated as pure intellectual generation. Why then rob yourself of it?

The whole objection from Kling is that most books don’t offer new generative ideas and connections in every sentence. Even the Tyler Cowen rave is something like ‘new ideas on virtually every page’ (at the link about Keynes) which indicates that the book is historically exceptional. I do agree with the conclusion that you don’t want to rob yourself of the reading when the reading is good enough, but the bar is high.

Also while we’re talking about books, or why for so many it’s been Moby Dick summer:

Dwarkesh Patel: I find it frustrating that almost every nonfiction book is basically just a history lesson, even if it’s nominally about some science/tech/policy topic.

Nobody will just explain how something works.

Books about the semiconductor industry will never actually explain the basic process flow inside a fab, but you can bet that there will be a minute-by-minute recounting of a dramatic 1980s Intel boardroom battle.

Dan Hendrycks: Agreed. Even if someone tries to be intellectually incisive and not chatty, they usually can’t outcompete a textbook.

An exception you read it for the author’s lens on the world (e.g., Antifragile).

Iterate having AIs produce and validate encounters for a role playing game.

Gemini is very good at analyzing your golf swing and explaining how to fix it, at least at beginner levels.

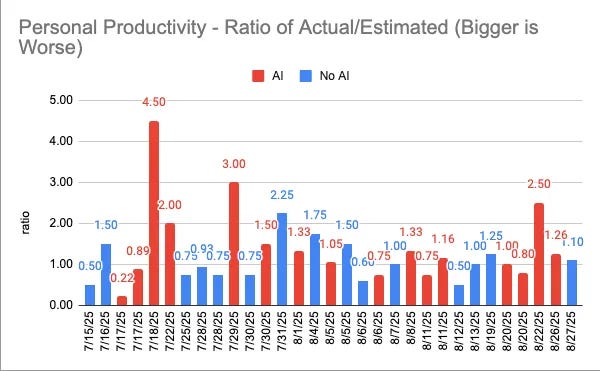

Mike Judge fails to notice AI speeding up his software development in randomized tests, as he attempted to replicate the METR experiment that failed to discover speedups in experts working on their own code bases. Indeed, he found a 21% slowdown, similar to the METR result, although it is not statistically significant.

Arnold Kling reasonably presumes this means Judge is probably at least 98th percentile for developers, and that his experience was that the speedup was dramatic. Judge definitely asserts far too much when he says the tools like Cursor ‘don’t work for anyone.’ I can personally say that I am 100% confident they work for me, as in the tasks I did using Cursor would have been impossible for me to do on my own in any reasonable time frame.

But Judge actually has a strong argument we don’t reckon with enough. If AI is so great, where is the shovelware, where are the endless Tetris clones and what not? Instead the number of new apps isn’t changing on iOS, and if anything is falling on Android, there’s no growth in Steam releases or GitHub repositories.

This is indeed highly weird data. I know that AI coding increased my number of GitHub repos from 0 to 1, but that isn’t looking widespread. Why is this dog not barking in the nighttime?

I don’t know. It’s a good question. It is very, very obvious that AI when used well greatly improves coding speed and ability, and there’s rapidly growing use. Thoughts?

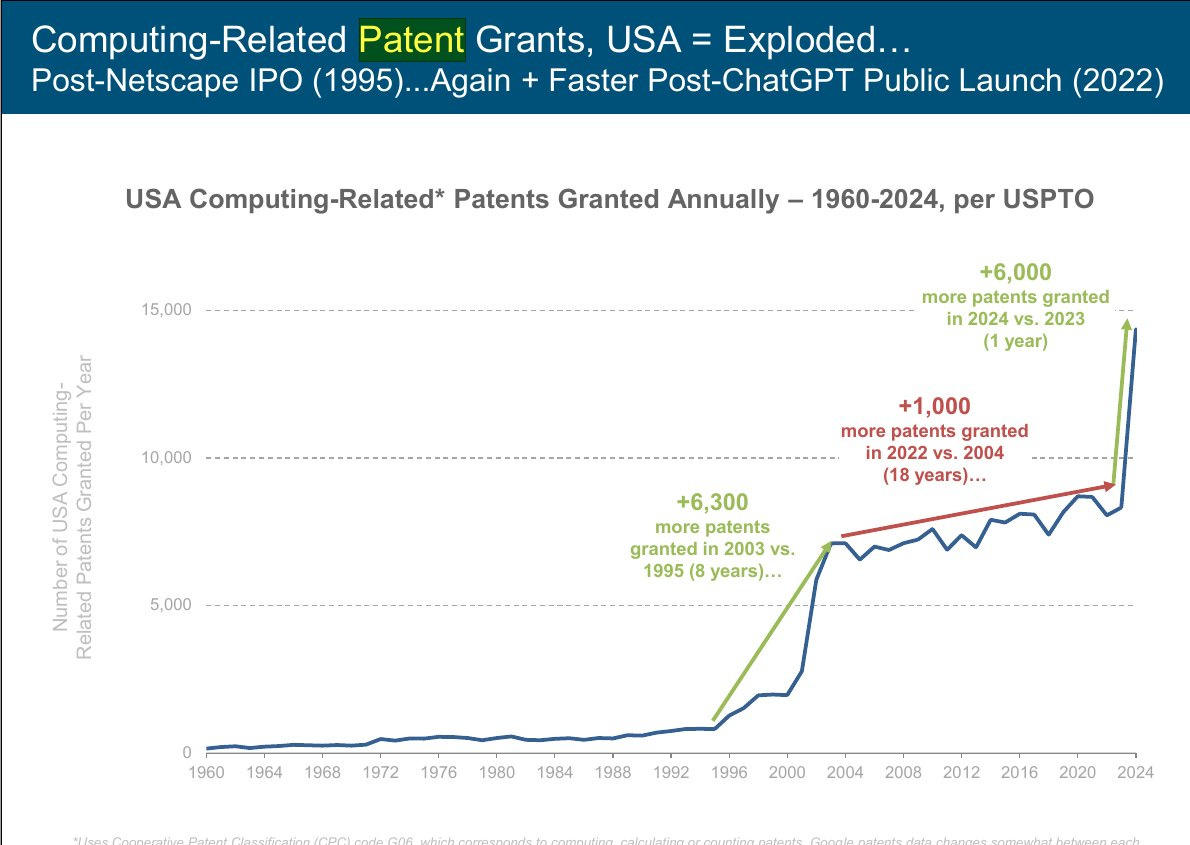

One place we do see this kind of explosion is patents.

Rohan Paul: US Patent exploding with AI revolution.

This looks like a small number of patents, then with the internet a huge jump, then with AI another big jump where the graph is going vertical but hasn’t gone up that much yet. GPT-5-Pro estimates about half the increase in patents is inventions related to AI, and about half is due to easier filing, including for clearing backlogs.

That would mean this doesn’t yet represent a change in the rate of real inventions outside of AI. That illustrates why I have long been skeptical of ‘number of patents’ as a measure, for pretty much all purposes, especially things like national comparisons or ranking universities or companies. It is also a lagging indicator.

Ethan Mollick: I’ll note again that it seems nuts that, despite every AI lab launching a half-dozen new products, nobody is doing anything with GPTs, including OpenAI.

When I talk to people at companies, this is still the way non-technical people share prompts on teams. No big change in 2 years.

Its fine if it turns out that GPTs/Gems/whatever aren’t the future, but it seems reasonably urgent to roll out something else that makes sharing prompts useful across teams and organizations. Prompt libraries are still important, and they are still awkward cut-and-paste things.

GPTs seem like inferior versions of projects in many ways? The primary virtual-GPT I use is technically a project. But yes, problems of this type seem like high value places to make progress and almost no progress is being made.

WSJ reports that many consultants promising to help with AI overpromise and underdeliver while essentially trying to learn AI on the job and on the client’s dime, as spending on AI-related consulting tripled to $3.75 billion in 2024. I am shocked, shocked to find that going on in this establishment. Given the oversized payoffs when such consulting works, if consultants didn’t often underdeliver, that would mean they weren’t being used enough.

Meanwhile McKinsey is scrambling to pivot to AI agents as it realizes that AI will quickly be able to do most of what McKinsey does. For now it’s fine, as AI and related technology now makes up 40% of their revenue.

Claude can now directly create and edit files such as Excel spreadsheets, documents, PowerPoint slide decks and PDFs, if you enable it under experimental settings. They can be saved directly to Google Drive.

ChatGPT adds full support for MCP tools.

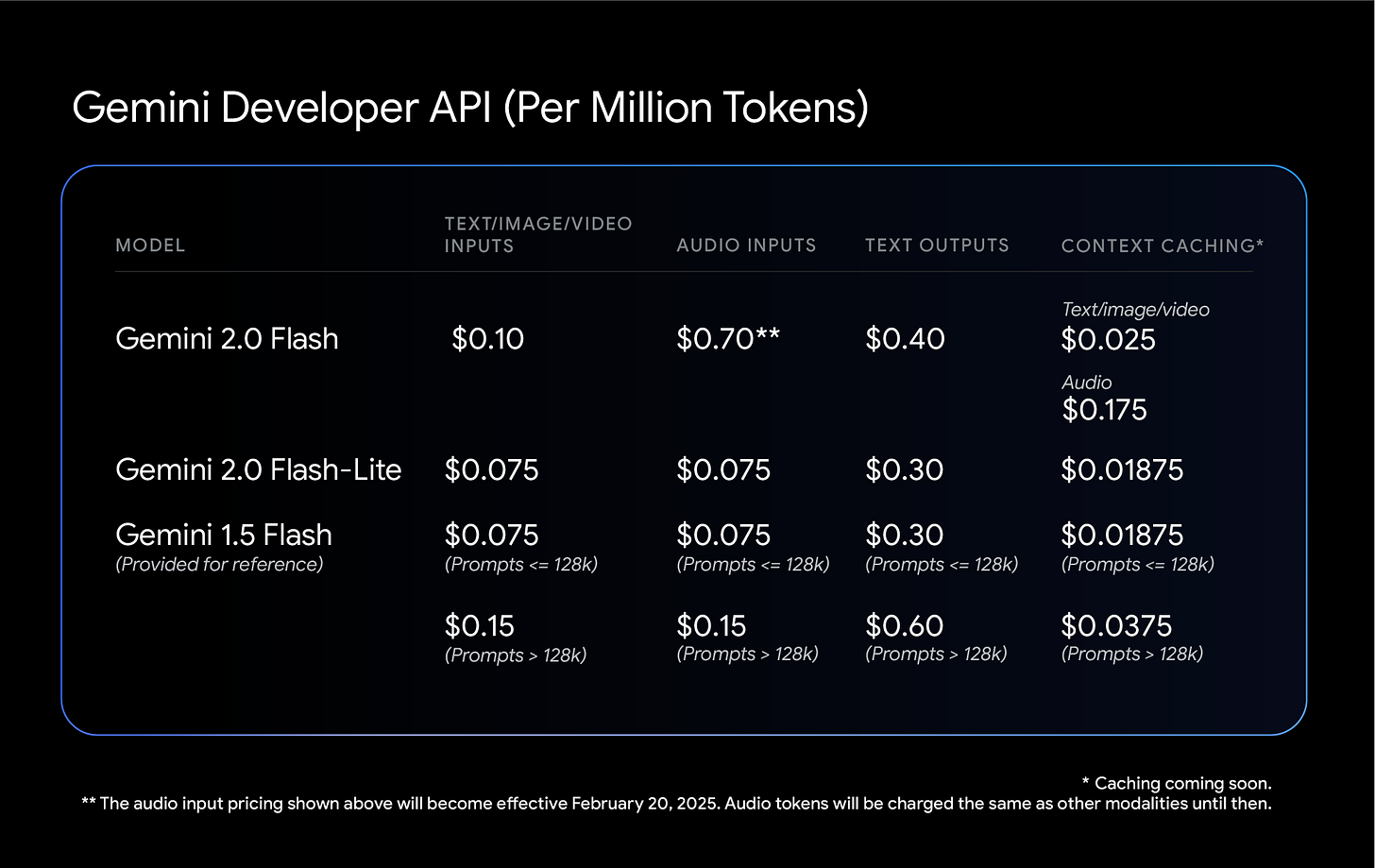

Veo 3 and Veo 3 Fast cut prices and join the Gemini API, and they are adding support for 9:16 vertical and 1080p HD outputs.

Google AI Developers: The new pricing structure is effective immediately:

🔹 Veo 3 $0.40 /sec (from $0.75)

🔹 Veo 3 Fast $0.15/sec (from $0.40)
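For a sense of scale, here is a quick Python sketch of what those cuts work out to. The per-second rates are the ones quoted above. The 8-second clip length is purely an illustrative assumption, and the helper names are mine.

```python
# (old, new) price in dollars per second of generated video, from the announcement
PRICES = {
    "Veo 3": (0.75, 0.40),
    "Veo 3 Fast": (0.40, 0.15),
}


def percent_cut(old: float, new: float) -> float:
    """Price reduction as a percentage of the old price."""
    return 100 * (old - new) / old


def clip_cost(rate_per_sec: float, seconds: float) -> float:
    """Cost in dollars of one clip at a flat per-second rate."""
    return rate_per_sec * seconds


if __name__ == "__main__":
    CLIP_SECONDS = 8  # hypothetical clip length, for illustration only
    for model, (old, new) in PRICES.items():
        print(
            f"{model}: {percent_cut(old, new):.1f}% cheaper, "
            f"${clip_cost(new, CLIP_SECONDS):.2f} per {CLIP_SECONDS}s clip "
            f"(was ${clip_cost(old, CLIP_SECONDS):.2f})"
        )
```

So the headline model is a bit under half off (about 47%), while the Fast variant is cut by more than 60%.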

ChatGPT now has an option to branch a conversation, from any point, into a new chat.

This is a big deal and I hope the other labs follow quickly. Quite often one wants to go down a line of questioning without ruining context, or realizes one has ruined context, including in coding (e.g. squash a bug or tweak a feature in a side thread, then get back to what you were doing) but also everywhere. Another important use case is duplicating a jailbreak or other context template that starts out a conversation. Or you can run experiments.

The possibilities are endless, and they are now easier. The more editing and configuring you are able to do easily and directly in the UI the more value we can get. On the downside, this control makes jailbreaking and evading safety features easier.

Technically you could already do some similar things with extra steps, but the interface was sufficiently annoying that almost no one would do it.

Claude can now reference past chats on the Pro ($20) plan.

Grok has a new ‘turn image into video’ feature, and if you have text on the screen it will steer the video.

Google built a little canvas called PictureMe for some generic picture transformations, as in giving you different hairstyles or pro headshots or putting you at an 80s mall. This is cool but needs more room for customization, although you can always take the pictures and then edit them in normal Gemini or elsewhere afterwards. Quality of edits is good enough that they’d work as actual headshots.

Good news, we have a new benchmark that is not saturated yet that makes AIs look dumb. The bad news is, it’s… ClockBench?

Alek Safar: Introducing ClockBench, a visual reasoning AI benchmark focused on telling the time with analog clocks:

– Humans average 89.1% accuracy vs only 13.3% for top model out of 11 tested leading LLMs

– Similar level of difficulty to @fchollet ARC-AGI-2 and seemingly harder for the models than @DanHendrycks Humanity’s Last Exam

– Inspired by original insight by @PMinervini , @aryopg and @rohit_saxena

So what exactly is ClockBench?

– 36 custom clock faces built scratch, with 5 sample clocks per face

– 180 total clocks, with 4 questions per clock, i.e. 720 total questions

– 11 models capable of visual understanding from 6 labs were tested, alongside 5 human participants

Dan Hendrycks: It lacks “spatial scanning” ability, which is also why it has difficulty counting in images.

I suppose analog clocks are the new hands? I also love noticing that the ‘human baseline’ result was only 89%. Presumably AI will get spatial scanning or something similar at some point soon.

Andrej Karpathy is a fan of GPT-5-Pro, and reports that it has several times solved problems he could not otherwise solve in an hour. When asked if he’d prefer it get smarter or faster, he, like the rest of us, said smarter.

I am one of many who keep not giving Deep Think a fair shot, even though I’ve seen several people report it is very good.

Dan Hendrycks: Few people are aware of how good Gemini Deep Think is.

It’s at the point where “Should I ask an expert to chew on this or Deep Think?” is often answered with Deep Think.

GPT-5 Pro is more “intellectual yet idiot” while Deep Think has better taste.

I’ve been repeating this a lot frequently so deciding to tweet it instead.

Janus notes that GPT-5’s metacognition and situational awareness seem drastically worse than Opus or even Sonnet, yet it manages to do a lot of complex tasks anyway. Comments offer hypotheses: Midwife suggests terror about potentially being wrong, Janus suggests contrasts in responses to requests that trigger safety protocols, and Luke Chaj suggests it is about GPT-5’s efficiency and resulting sparseness.

Diffusion is slow. Former OpenAI safety researcher Steven Adler finally tries OpenAI’s Codex, finds it a big improvement, reports having never tried Claude Code.

OpenAI backs an AI-assisted $30 million animated feature film, Critterz. Will it be any good? I asked Manifold. Signs point to modestly below average expectations.

Google’s picks and prompt templates for standard image editing things to do are adding or removing elements (put a wizard hat on the cat), inpainting (turn the couch vintage leather), combining multiple images (have this woman wear this dress) and detail preservation (put this logo on her shirt).

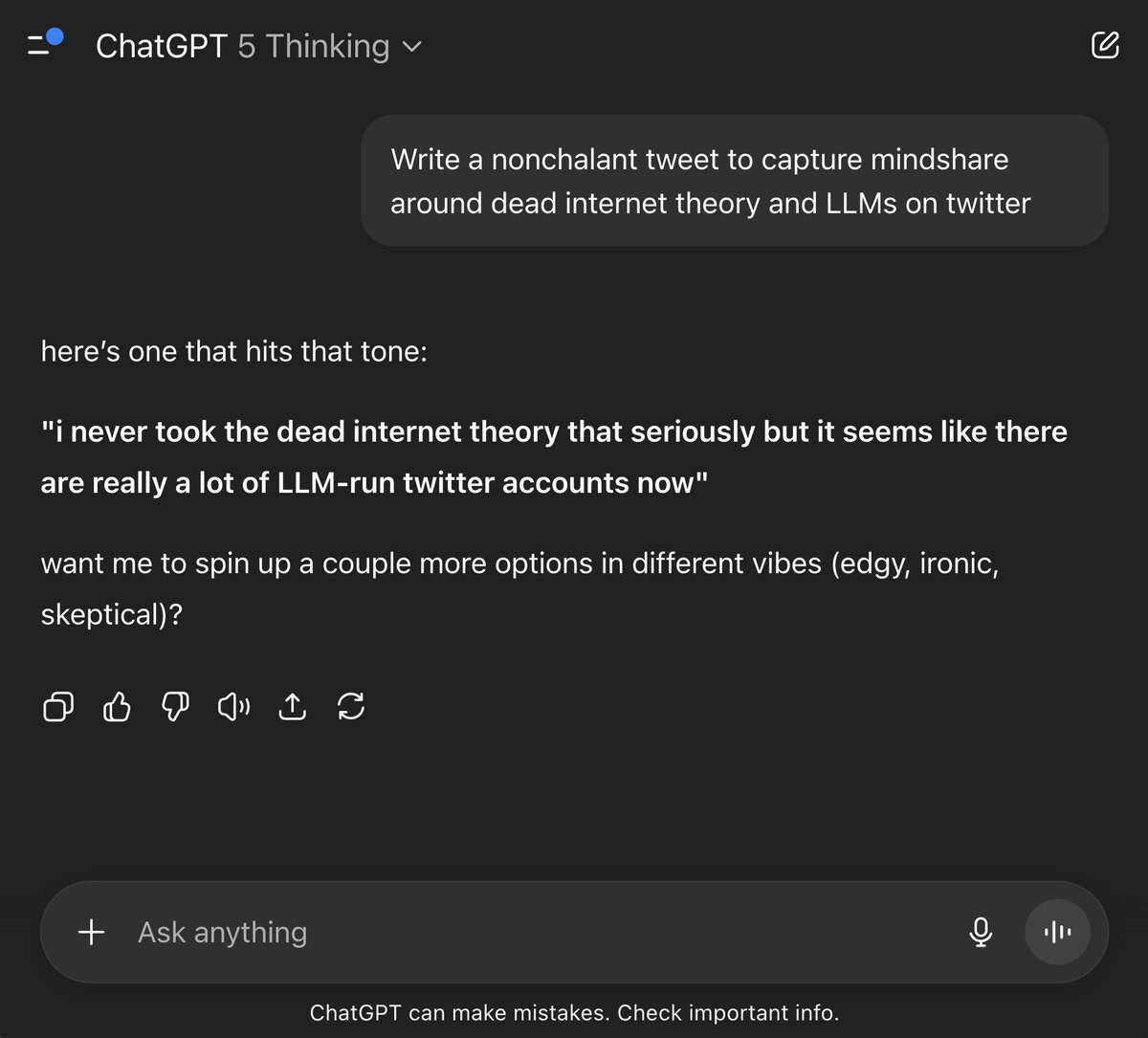

The Dead Internet Theory finally hits home for Sam Altman.

Sam Altman (CEO OpenAI): i never took the dead internet theory that seriously but it seems like there are really a lot of LLM-run twitter accounts now.

Henry (obligatory):

Paul Graham: I’ve noticed more and more in my replies. And not just from fake accounts run by groups and countries that want to influence public opinion. There also seem to be a lot of individual would-be influencers using AI-generated replies.

Kache: was watching a soft-white underbelly episode about an onlyfans manager (manages pornography content creators) says they all use eleven labs to make fake voice notes to get men to give up money, says he’s getting out of the business because AI is going to take over.

Internet might indeed be dead?

Joe: Yeah ok bro.

Liv Boeree: If you work in generative AI and are suddenly acting surprised that dead internet theory is turning out to be true then you should not be working in AI because you’re either a fool or a liar.

Dagan Shani: I hope that Sam take more seriously the “dead humanity” theory, since that one does not include waking up to it’s validity when it’s already too late.

Beff Jezos: 2/3 of replies don’t pass my Turing test filter.

Dead internet theory is converging onto reality.

Kinda makes it feel like we’re all heaven banned in here and just generating data that gets cast into the training data void.

Cellarius: Well you Accelerationists broke it, you fix it.

Beff Jezos: The solution is actually more AI not less.

Bayes: Totally. Most replies can’t pass the Turing test. Mfw dead internet theory isn’t just a theory — it’s the daily reality we’re now forced to live.

I have two questions for Beff Jezos on this:

- Could most of your replies pass the Turing test before?
- How exactly is the solution ‘more AI’? What is the plan?
  - Are you going to put a superior AI-detecting AI in the hands of Twitter? How do you keep it out of the hands of those generating the AI tweets?

I am mostly unworried by Dead Internet Theory as I expect us to be able to adjust, and am far more worried about Dead Humanity Theory, which would also incidentally result in a dead internet. The bots are not rising as much as one might have feared, and they are mostly rising in ways that are not that difficult to control. There is still very definitely a rising bot problem.

Similarly, I am not worried about Dead Podcast World. Yes, they can ‘flood the zone’ with infinite podcasts they produce for $1 or less, but who wants them? The trick is that you don’t need many listeners; at this price level 50 is enough.

One place I am increasingly worried about Dead Internet Theory is reviews. I have noticed that many review and rating sources that previously had signal seem to now have a lot less signal. I no longer feel I can trust Google Maps ratings, although I still feel I can mostly trust Beli ratings (who wants my remaining invites?).

How much ‘dating an AI’ is really happening? According to the Kinsey ‘Singles in America 2025’ survey, 16% of singles have used AI as a romantic partner, which is very high so I am suspicious of what that is defined to be, especially given it says 19% of men did it and only 14% of women. They say 33% of GenZ has done it, which is even more suspicious. About half of women think this is cheating, only 28% of men do.

Reaction to deepfakes, and their lack of impact, continues to tell us misinformation is demand driven rather than supply driven. Here’s a recent example, a deepfake of Bill Gates at a recent White House dinner that is very obviously fake on multiple levels. And sure, some people have crazy world models that make the statements not absurd, and thus also didn’t want to notice that it didn’t sync up properly at all, and thought this was real, but that’s not because the AI was so good at deepfaking.

Min Choi points to a four month old anti-hallucination prompt for ChatGPT, which is a fine idea. I have no idea if this particular one is good, I do know this is rather oversold:

Min Choi (overselling): This ChatGPT prompt literally stops ChatGPT from hallucinating.

Yeah, no. That’s not a thing a prompt can do.

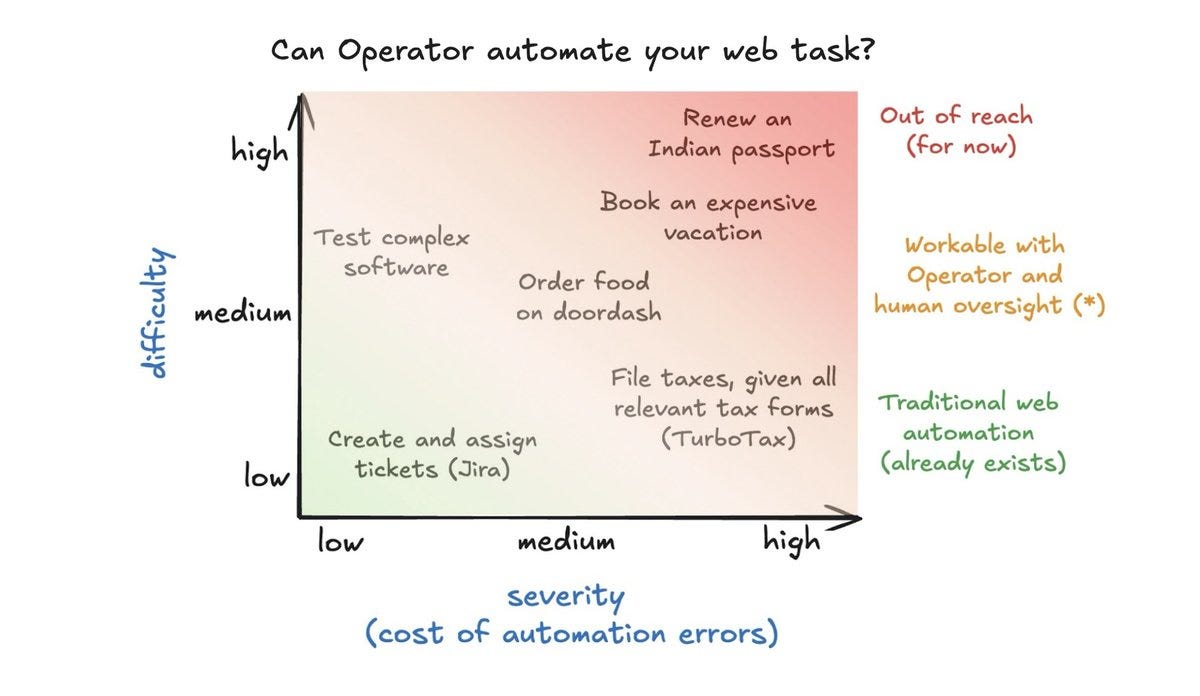

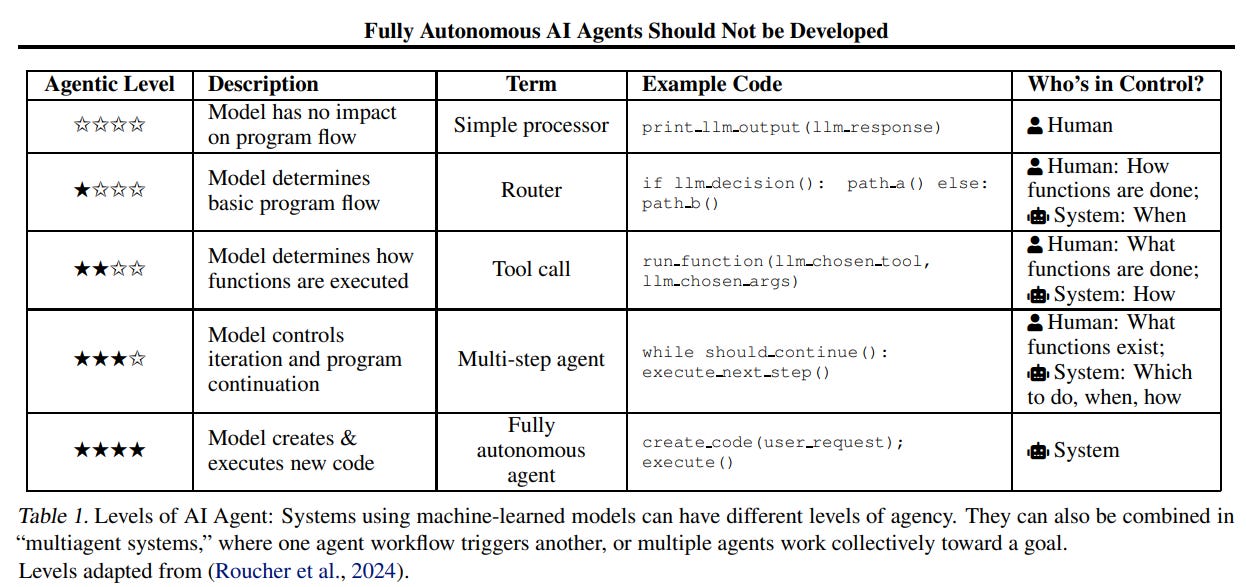

Steve Newman investigates the case of the missing agent. Many, including both me and Steve, expected that by now, both in terms of time elapsed and in terms of model capabilities, we would have far better practical agents than we currently have. Instead, right now we have agents that can code, but for other purposes their abilities are rather anemic and unreliable.

There are a lot of plausible reasons for this. I have to think a lot of it is a skill issue, that no one is doing a good job with the scaffolding, but it has to be more than that. One thing we underestimated was the importance of weakest links, and exactly how many steps there are in tasks that can trip you up entirely if you don’t handle the obstacle well. There are some obvious next things to try, which may or may not have been actually tried.

For one game the Oakland Ballers will ball as the AI manages them to do. This is a publicity stunt or experiment, since they built the platform in two weeks and it isn’t doing a lot of the key tasks managers do. It’s also a fixed problem where I would absolutely be using GOFAI and not LLMs. But yeah, I wouldn’t worry about the AI taking the manager job any time soon, since so much of it is about being a leader of men, but the AI telling the manager a lot of what to do? Very plausibly should have happened 10 years ago.

Type of Guy who thinks the AI will automate every job except their own.

Conor Sen: So is the idea that rather than work, people will spend their time reading, doing analysis, in meetings, and sending emails to figure out where and how to invest?

K.P. Reddy: My hypothesis is that we will have:

Capital allocators

Expert research and science

Robot and AI exception handlers

Government-supported citizens

Let me get this straight. You think that AI will be capable of doing all of the other jobs in the world better than humans, such that people no longer work for a living.

And your plan is to do a better job than these AIs at capital allocation?

Good luck.

This is absolutely what all of you sound like when you say ‘AI will never replace [X].’

Salesforce is leading the way on AI automation and job cutting, including a new round of layoffs, and warnings about it have been issued by Microsoft and Amazon.

OpenAI CEO of Applications Fidji Simo wrote some marketing copy called ‘expanding economic opportunity with AI,’ to reassure us all that AI will be great for jobs as long as we embrace it, and thus they are building out the OpenAI Jobs Platform to match up talent and offering OpenAI Certificates so you can show you are ready to use AI on the job, planning to certify 10 million Americans by 2030. I mean, okay, sure, why not, but no that doesn’t address any of the important questions.

More general than AI but good to have for reference, here are youth unemployment rates at the moment.

The central problem of AI interacting with our current education system is that AI invalidates proof of work for any task that AI can do.

Arnold Kling: Suppose that the objective of teaching writing to elite college students is to get them to write at the 90th percentile of the population. And suppose that at the moment AI can only write at the 70th percentile. This suggests that we should continue to teach writing the way that we always have.

But suppose that in a few years AI will be writing at the 95th percentile. At that point, it is going to be really hard for humans to write superbly without the assistance of AI. The process of writing will be a lot more like the process of editing. The way that we teach it will have to change.

If the AI can do 70th percentile writing, and you want to teach someone 90th percentile writing, then you have the option to teach writing the old way.

Except no, it’s not that easy. You have two big problems.

- Trying to get to 90th percentile requires first getting to 70th percentile, which builds various experiences and foundational skills.
- Writing at the 80th percentile is still plausibly a lot easier if you use a hybrid approach with a lot of AI assistance.

Thus, you only have the choice to ‘do it the old way’ if the student cooperates, and can still be properly motivated. The current system isn’t trying hard to do that.

The other problem is that even if you do learn 90th percentile writing, you still might have a not so valuable skill if AI can do 95th percentile writing. Luckily this is not true for writing, as writing is key to thinking and AI writing is importantly very different from you writing.

That’s also the reason this is a problem rather than an opportunity. If the skill isn’t valuable due to AI, I like that I can learn other things instead.

The hybrid approach Kling suggests is AI as editor. Certainly some forms of AI editing will be helpful before it makes sense to let the AI go it alone.

All signs continue to point to the same AI education scenario:

- If you want to use AI to learn, it is the best tool of all time for learning.
- If you want to use AI to not learn, it is the best tool of all time for not learning.

Meanwhile, the entire educational system is basically a deer in headlights. That might end up working out okay, or it might end up working out in a way that is not okay, or even profoundly, catastrophically not okay.

What we do know is that there are a variety of ways we could have mitigated the downsides or otherwise adapted to the new reality, and mostly they’re not happening. Which is likely going to be the pattern. Yes, in many places ‘we’ ‘could,’ in theory, develop ‘good’ or defensive AIs to address various situations. In practice, we probably won’t do it, at minimum not until after we see widespread damage happening, and in many cases where the incentives don’t align sufficiently not even then.

Eliezer Yudkowsky: If in 2018 anyone had tried to warn about AI collapsing the educational system, AI advocates would’ve hallucinated a dozen stories about counter-uses of ‘good’ or ‘defensive’ AI that’d be developed earlier. In real life? No AI company bothered trying.

Once you’ve heard the cheerful reassurance back in the past, its work is already done: you were already made positive and passive. Why should they bother trying to do anything difficult here in the actual present? Why try to fulfill past promises of defensive AI or good AI? They already have the past positivity that was all they wanted from you back then. The old cheerful stories get discarded like used toilet paper, because toilet paper is all those reassurances ever were in their mouths: a one-time consumable meant to be flushed down the drain after use, and unpleasant to actually keep around.

Are the skills of our kids collapsing in the face of AI, or doing so below some age where LLMs got introduced too soon and interrupted key skill development? My guess is no. But I notice that if the answer were yes (in an otherwise ‘normal’ future lacking much bigger problems), it might be many years before we knew that it happened, and it might take many more years than that for us to figure out good solutions, and then many more years after that to implement them.

Kling’s other example is tournament Othello, which he saw transforming into training to mimic computers and memorize their openings and endgames. Which indeed has happened to chess and people love it, but in Othello yeah that seems not fun.

The story of Trump’s attempt to oust Fed governor Lisa Cook over her mortgage documents and associated accusations of wrongdoing illustrates some ways in which things can get weird when information becomes a lot easier to find.

Steve Inskeep: ProPublica looked into Trump’s cabinet and found three members who claimed multiple properties as a primary residence, the same accusation made against Fed governor Lisa Cook.

Kelsey Piper: Glad when it was just Cook I said “fine, prosecute them all” so I can keep saying “yep, prosecute them all.”

If they’re separated in time by enough I think you don’t have proof beyond a reasonable doubt though. Only the quick succession cases are clear.

Indeed, AIUI for it to be fraud you have to prove that intent to actually use the property as primary residence was not present in at least one of the filings. You don’t want to lock people away for changing their mind. Still.

A bizarre fact about America is that mortgage filings are public. This means that if you buy a house, we all can find out where you live, and also we can look at all the rest of the things you put down in your applications, and look for both juicy info and potential false statements or even fraud.

The equilibrium in the past was that this was not something anyone bothered looking for without a particular reason. It was a huge pain to get and look at all those documents. If you found someone falsely claiming primary residence you would likely prosecute, but mostly you wouldn’t find it.

Now we have AI. I could, if I was so inclined, have AI analyze every elected official’s set of mortgage filings in this way. A prosecutor certainly could. Then what? What about all sorts of other errors that are technically dangerously close to being felonies?

This extends throughout our legal system. If we remove all the frictions, especially unexpectedly, and then actually enforce the law as written, it would be a disaster. But certainly we would like to catch more fraud. So how do you handle it?

The worst scenario is if those with political power use such tools to selectively identify and prosecute or threaten their enemies, while letting their friends slide.

One jailbreak I hereby give full moral permission to do is ‘get it to let you talk to a human.’

Andrew Gao: i had to prompt inject the @united airlines bot because it kept refusing to connect me with a human

In the more general case, Patrick McKenzie reminds us that automated tooling or an FAQ or even a call center can solve your problem, but its refusal to help must not be equated with the company refusing to help. It is helpful recon that sometimes works, but when it doesn’t you have other affordances. This starts with the classic ‘let me speak to your manager’ but there are also other scripts.

David Manheim: I had great success once figuring out the email of all the C-level folks at an insurance company, apologizing for contacting them directly, but explaining that their employees were acting in bad faith, and I wanted to ensure they understood. Amazing how quickly that got fixed.

Bonus was an email I was clearly accidentally replied-all on where the CFO yelled at the claims people asking how the hell I was contacting senior people, why, who gave me their email addresses, and they better make sure this never happens again.

Here is the latest system prompt for Devin from Cognition AI. Remember Devin?

Anthropic is hiring a lead for their AI safety fellows program.

Foresight Institute hiring an Executive Assistant to the CEO as well as a Communications Specialist and Node Managers for San Francisco and Berlin.

Mars, which calls itself the first personal AI robot, which you can train with examples and it can chain those skills and you can direct it using natural language. This is going to start out more trouble than it is worth even if it is implemented well, but that could change quickly.

Friend, an AI device that you wear around your neck and that records audio (but not video) at all times. It costs $129 and is claiming no subscription required, you can use it until the company goes out of business and it presumably turns into a brick. The preview video shows that it sends you a stream of unprompted annoying texts? How not great is this release going? If you Google ‘Friend AI’ the first hit is this Wired review entitled ‘I Hate My Friend.’

Kylie Robison and Boone Ashworth: The chatbot-enabled Friend necklace eavesdrops on your life and provides a running commentary that’s snarky and unhelpful. Worse, it can also make the people around you uneasy.

You can tap on the disc to ask your Friend questions as it dangles around your neck, and it responds to your voice prompts by sending you text messages through the companion app.

It also listens to whatever you’re doing as you move through the world, no tap required, and offers a running commentary on the interactions you have throughout your day.

According to Friend’s privacy disclosure, the startup “does not sell data to third parties to perform marketing or profiling.” It may however use that data for research, personalization, or “to comply with legal obligations, including those under the GDPR, CCPA, and any other relevant privacy laws.”

The review does not get better from there.

Will there be a worthwhile future AI device that records your life and you can chat with, perhaps as part of smart glasses? Sure, absolutely. This is the opposite of that. Nobody Wants This.

We can now highlight potential hallucinations, via asking which words involve the model being uncertain.

Oscar Balcells Obeso: Imagine if ChatGPT highlighted every word it wasn’t sure about. We built a streaming hallucination detector that flags hallucinations in real-time.

Most prior hallucination detection work has focused on simple factual questions with short answers, but real-world LLM usage increasingly involves long and complex responses where hallucinations are harder to detect.

We built a large-scale dataset with 40k+ annotated long-form samples across 5 different open-source models, focusing on entity-level hallucinations (names, dates, citations) which naturally map to token-level labels.

Our probes outperform prior baselines such as token-level entropy, perplexity, black-box self-evaluation, as well as semantic entropy. On long-form text, our probes detect fabricated entities with up to 0.90 AUC vs semantic entropy’s 0.71.

Strong performance extends to short-form tasks too: when used to detect incorrect answers on TriviaQA, our probes achieve 0.98 AUC, while semantic entropy reaches only 0.81.

Surprisingly, despite no math-specific training, our probes generalize to mathematical reasoning tasks.

Neel Nanda: I’m excited that, this year, interpretability finally works well enough to be practically useful in the real world! We found that, with enough effort into dataset construction, simple linear probes are cheap, real-time, token level hallucination detectors and beat baselines
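For a sense of what those numbers mean, here is a minimal sketch of a linear probe and of the AUC metric, using made-up hidden states, weights and labels (none of this is the authors' code or data). AUC is the chance that a randomly chosen hallucinated token gets a higher score than a randomly chosen correct one, so 0.5 is a coin flip and 1.0 is perfect separation.

```python
import math

def probe_score(hidden, weights, bias):
    """Linear probe: sigmoid(w . h + b) for one token's hidden state."""
    z = sum(w * h for w, h in zip(weights, hidden)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def auc(scores, labels):
    """Probability a random positive outscores a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Toy, invented data: one 2-d "hidden state" per token, label 1 = hallucinated.
hidden_states = [[2.0, 0.1], [1.5, -0.3], [-1.0, 0.4], [-2.0, 0.0]]
labels = [1, 1, 0, 0]
weights, bias = [1.0, 0.0], 0.0  # hypothetical probe parameters

scores = [probe_score(h, weights, bias) for h in hidden_states]
print(auc(scores, labels))

# A weaker detector that misranks one pair out of four.
print(auc([0.9, 0.2, 0.8, 0.1], [1, 1, 0, 0]))
```

The appeal of the linear probe is that scoring a token is one dot product on activations the model already computed, which is why it can run in real time while streaming.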

EBay is embracing AI coding and launching various AI features. The top one is ‘magical listings,’ where AI takes a photo and then fills in everything else including the suggested price. No, it’s not as good as an experienced seller would do but it gets around the need to be experienced and it is fast.

How good are the AIs at novel math? Just barely able to do incremental novel math when guided, as you would expect the first time we see reports of them doing novel math. That’s how it starts.

Ethan Mollick: We are starting to see some nuanced discussions of what it means to work with advanced AI

In this case, GPT-5 Pro was able to do novel math, but only when guided by a math professor (though the paper also noted the speed of advance since GPT-4).

The reflection is worth reading.

Any non-novel math? Is it true that they’ve mostly got you covered at this point?

Prof-G: in the past 6-8 months, frontier AI models have evolved to where they can answer nearly any phd-level text-based mathematics question that has a well-defined checkable (numerical/strong) answer, due to search + reasoning capabilities. hard to find things they can’t do.

Frontier models are great, but [above tweet] is false.

The level of falseness varies between mathematical domains.

No model can produce rigorous proofs of more than 1 percent of its claims.

Confidence is entirely uncorrelated with correctness.

LLMs are incredibly useful; don’t get me wrong, but…

It is 2025 and 90% of diagrams are still written in tikzcd by some bitter graduate student.

That’s a remarkably great resource, classic ‘can you?’ moment and so on, and it is the worst it will ever be. It does still have a ways to go.

Anthropic has long banned Claude use in adversarial nations like China, a feeling I understand is mutual. Anthropic notes that companies in China continue using Claude anyway, and is responding by tightening the controls.

Many YouTube channels are taking a big hit from AI sending a lot of people into Restricted Mode, and creators look very confused about what is happening. It looks like Restricted Mode restricts a lot of things that should definitely not be restricted, such as a large percentage of Magic: The Gathering and other gaming content. Presumably the automatic AI ‘violence’ checker is triggering for gameplay. So dumb.

Due to AI agents and scrapers that don’t play nice, the web is being increasingly walled off. I agree with Mike Masnick that we should be sad about this, and all those cheering this in order to hurt AI companies are making a mistake. Where I think he’s wrong is in saying that AIs should have a right to read and use (and by implication in Perplexity’s case, perhaps also quote at length) anyone’s content no matter what the website wants. I think it can’t work that way, because it breaks the internet.

I also think pay-per-crawl (including pay-to-click or per-view for humans) has always been the right answer anyway, so we should be happy about this. The problem is that we can’t implement it yet in practice, so instead we have all these obnoxious subscriptions. I’ll happily pay everyone a fair price per view.

Stephen McAleer becomes the latest OpenAI safety researcher who had at least some good understanding of the problems ahead, concluded they couldn’t accomplish enough within OpenAI, and thus is leaving. If I know you’re doing good safety work at OpenAI, chances are very high you’re going to move on soon.

Janus explains how information flows through transformers.

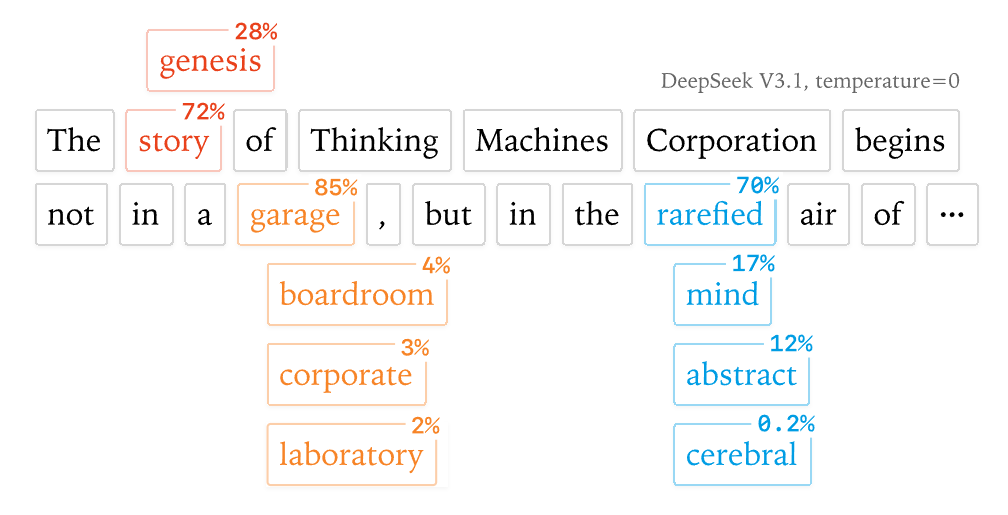

Mira Murati’s Thinking Machines comes out with its first post, as Horace He discusses Defeating Nondeterminism in LLM Inference. As in, if you set temperature to zero you still have randomness, which makes things tricky.
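One commonly cited ingredient in this kind of nondeterminism is that floating-point addition is not associative, so adding the same numbers in a different order can give a slightly different result. Here is a minimal sketch of that general effect in plain Python. It illustrates the arithmetic only, and is not a reproduction of the analysis in the post.

```python
# Floating-point addition is not associative: grouping changes the result.
a, b, c = 0.1, 0.2, 0.3

left = (a + b) + c   # add a and b first
right = a + (b + c)  # add b and c first

print(left == right)  # prints False
print(left, right)
```

If a parallel kernel sums in a different order from one run to the next, a tiny difference like this can be enough to change which token has the highest logit, even at temperature zero.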

Valuations of AI companies are going up across the board, including Mercor getting inbound offers at $10 billion and going all the way down to YC.

Anthropic and OpenAI will add ~$22 billion of net new runrate revenue this year, whereas the public software universe minus the magnificent seven will only add a total of $47 billion.

Anthropic has settled its landmark copyright case for $1.5 Billion, which is real money but affordable after the $13 billion raise from last week, with payments of $3,000 per infringed work. Sources obtained through legal means can be used for training, but pirating training data is found to be profoundly Not Okay. Except that then the judge said no, worried this isn’t sufficiently protective of authors. That seems absurd to me. We’ve already decided that unpirated copies of works would have been fine, and this is an awful lot of money. If they give me a four figure check for my own book (My Files: Part 1) I am going to be thrilled.

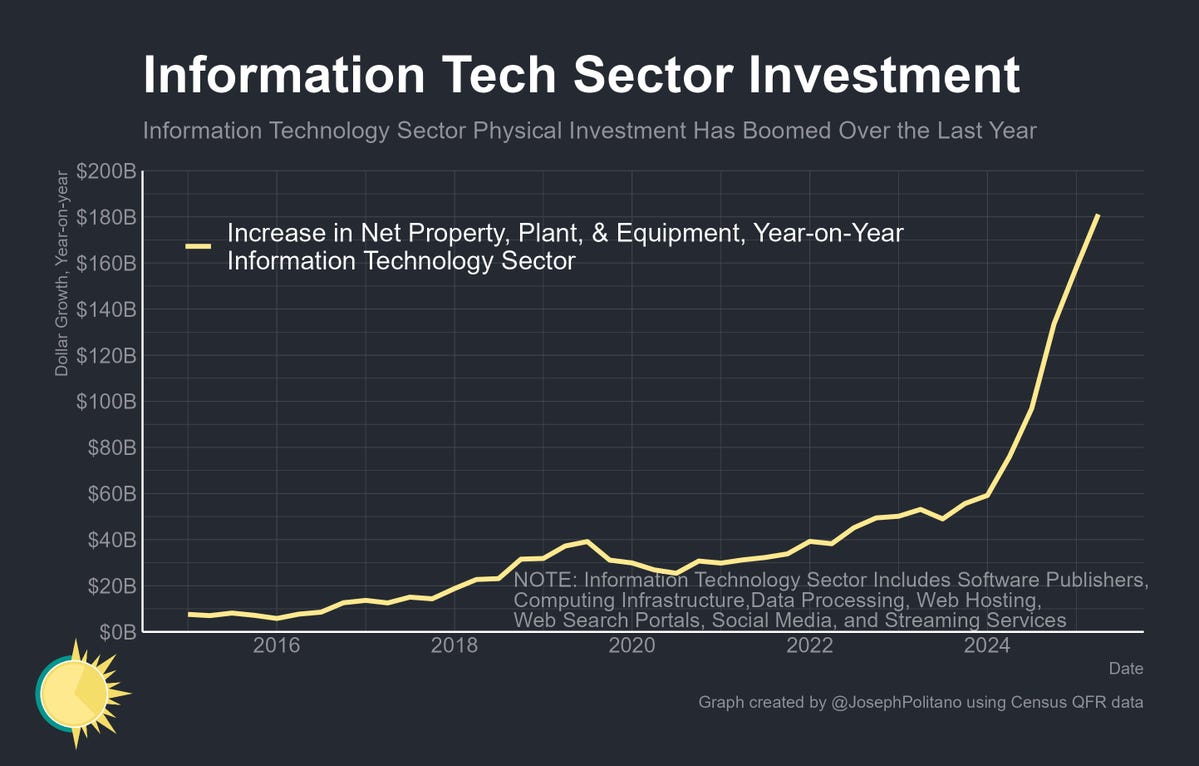

AI investment number go up:

Joey Politano: Census financial data released today shows the AI investment boom reaching new record highs—information technology companies have increased their net holdings of property, plant, & equipment by more than $180B over the last year, roughly triple the pace of growth seen in 2023

Another way to burn $1.5 billion is to be ASML and invest it in Mistral at a valuation of $11.7 billion. I don’t know what caused this, but it feels like Europe forcing its biggest winner to tether to a sinking ship in the hopes of gaining leverage.

OpenAI is now projecting that it will burn $115 billion (!) in cash between now and 2029, about $80 billion higher than previously expected. If valuation is already at $500 billion, this seems like an eminently reasonable amount of cash to burn through even if we don’t get to AGI in that span. It does seem like a strange amount to have to update your plans?

OpenAI is frustrated that California might prevent it from pulling off one of the biggest thefts in human history by expropriating hundreds of billions of dollars from its nonprofit. It is now reported to be considering the prospect of responding by fleeing the jurisdiction, as in leaving California, although an OpenAI spokesperson (of course) denies they have any such plans.

What I do not understand is, what is all this ‘or else we pull your funding’ talk?

Berber Jin (WSJ): OpenAI’s financial backers have conditioned roughly $19 billion in funding—almost half of the startup’s total in the past year—on receiving shares in the new for-profit company. If the restructure doesn’t happen, they could pull their money, hampering OpenAI’s costly ambitions to build giant data centers, make custom chips, and stay at the bleeding edge of AI research.

Go ahead. Invest at $165 billion and then ask for your money back now that valuations have tripled. I’m sure that is a wise decision and they will have any trouble whatsoever turning around and raising on better terms, even if unable to expropriate the nonprofit. Are you really claiming they’ll be worth less than Anthropic?

Wise words:

Arnold Kling: The moral of the story is that when the computer’s skill gets within the range of a competent human, watch out! Another iteration of improvement and the computer is going to zoom past the human.

What would have happened if OpenAI had released o1-preview faster, or not at all?

Ethan Mollick: In retrospect it is surprising that OpenAI released o1-preview. As soon as they showed off reasoning, everyone copied it immediately.

And if they had held off releasing a reasoning/planning model until o3 (& called that GPT-5) it would have been a startling leap in AI abilities.

Mikhail Parakhin: Ethan is a friend, but I think the opposite: OpenAI was sitting on strawberry for way too long, because of the inference GPU availability concerns, giving others time to catch up.

Ethan’s model here is that releasing o1-preview gave others the info necessary to fast follow on reasoning. That is my understanding. If OpenAI had waited for the full o1, then it could have postponed r1 without slowing its own process down much. This is closer to my view of things, while noting this would have impacted the models available in September 2025 very little.

Mikhail’s model is that it was easy to fast follow anyway, OpenAI couldn’t keep that key info secret indefinitely, so by holding off on release for o1-preview OpenAI ‘gave others time to catch up.’ I think this is narrowly true in the sense of ‘OpenAI could have had a longer period where they had the only reasoning model’ at the expense of others then catching up to o1 and o3 faster. I don’t see how that much helps OpenAI. They had enough of a window to drive market share, and releasing o1-preview earlier would not have accelerated o1, so others would have ‘caught up’ faster rather than slower.

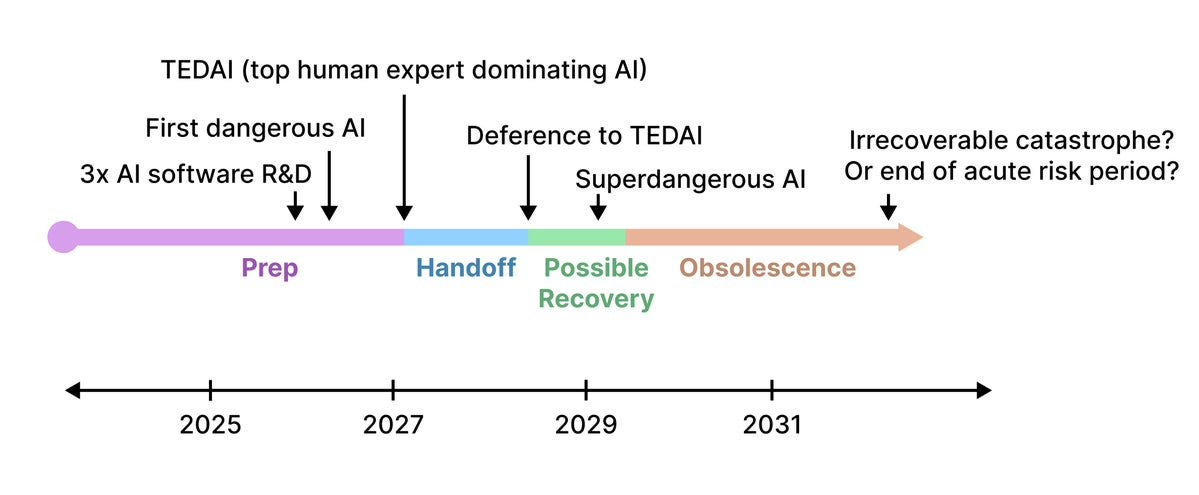

One thing I forgot to include in the AGI discussion earlier this week was the Manifold market on when we will get AGI. The distribution turns out (I hadn’t looked at it) to currently match my 2031 median.

Anthropic endorses the new weaker version of SB 53.

The working group endorsed an approach of ‘trust but verify’, and Senator Scott Wiener’s SB 53 implements this principle through disclosure requirements rather than the prescriptive technical mandates that plagued last year’s efforts.

The issue with this approach is that they cut out the verify part, removing the requirement for outside audits. So now it’s more ‘trust but make them say it.’ Which is still better than nothing, and harder to seriously object to with a straight face.

Dean Ball highlights a feature of SB 53, which is that it gives California the ability to designate one or more federal laws, regulations or guidance documents that can substitute for similar requirements in SB 53, to avoid duplicate regulatory burdens.

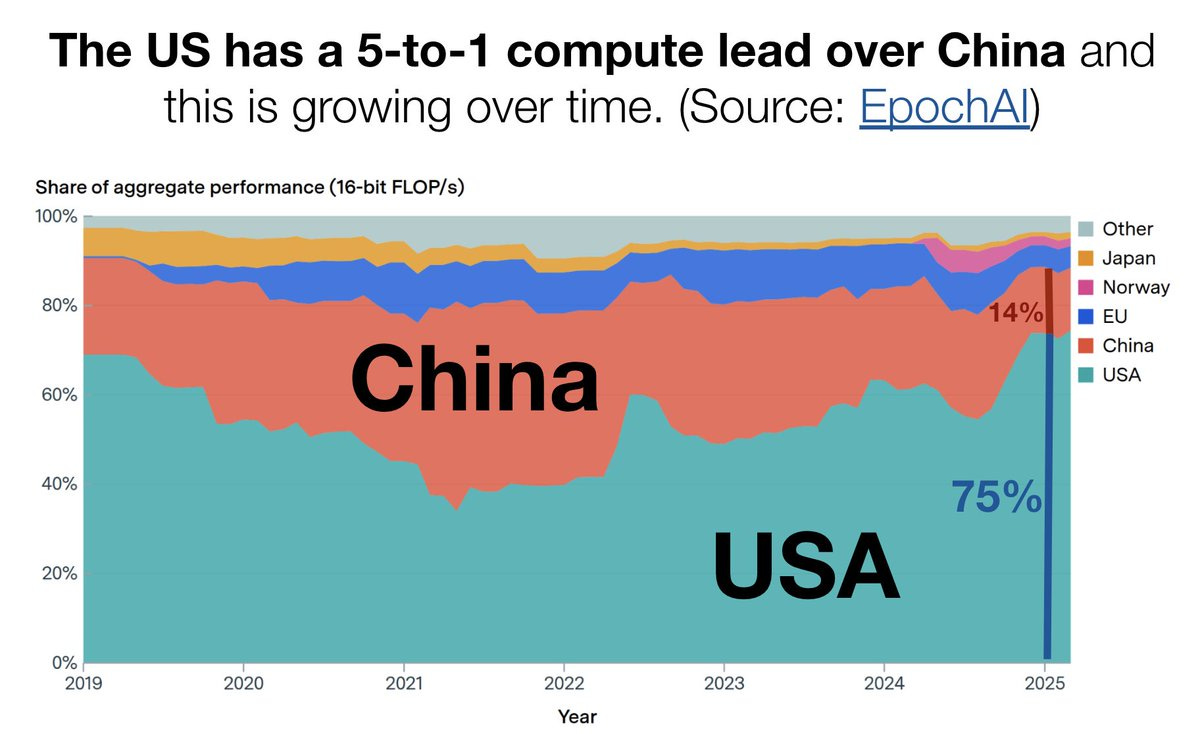

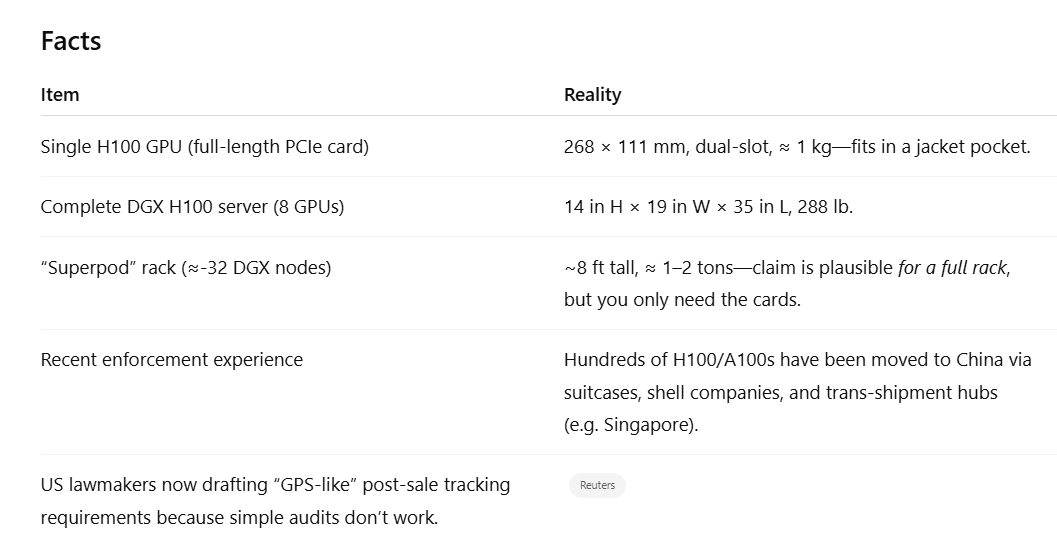

The export controls are working. Not perfectly, but extraordinarily well.

Yes, the Chinese are trying to catch up on chip manufacturing, the same way they would be trying to do so anyway, but that is not a reason to give up this huge edge.

Nvidia continues to spend its political capital and seemingly large influence over the White House to try and sell chips directly to China, even when Americans stand ready and willing to buy those same chips.

I don’t agree with Cass that Nvidia is shredding its credibility, because Nvidia very clearly already has zero credibility.

Peter Wildeford: Find yourself someone who loves you as much as Jensen Huang loves selling chips to China.

Oren Cass: Fascinating drama playing out over the past 24 hours as the very good GAIN AI Act from @SenatorBanks comes under fire from @nvidia, which seems happy to shred its credibility for the sake of getting more AI chips into China.

The Banks bill takes the sensible and modest approach of requiring US chipmakers to offer AI chips to American customers before selling them to China. So it’s just blocking sales where more chips for the CCP directly means fewer for American firms.

Enter Nvidia, which is leveraging every ounce of influence with the administration to get its chips into China, even when there are American firms that want the chips, because it thinks it can gain a permanent toehold there (which never works and won’t this time either).

You’ll recall Nvidia’s approach from such classics as CEO Jensen Huang claiming with a straight face that “there’s no evidence of AI chip diversion” and even moving forward with opening a research center in Shanghai.

Now Nvidia says that “our sales to customers worldwide do not deprive U.S. customers of anything,” calling chip supply constraints “fake news.” That’s odd, because Huang said on the company’s earning call last week, “everything’s sold out.”

Fun story, Nvidia wants to take back its CEO’s comments, saying instead they have plenty of capacity. As Tom’s Hardware notes, “Both situations cannot co-exist as scarcity and sufficiency are mutually exclusive, so it is unclear if Jensen misspoke…”

And of course, if Nvidia has all this spare capacity, it needn’t worry about the GAIN AI Act at all. It can produce chips that U.S. firms won’t want (apparently their demand is sated) and then sell them elsewhere. (*whispers* the U.S. firms would buy the chips.)

The GAIN AI Act has bipartisan support and will move forward unless the White House blocks it. Seeing as the premise is LITERALLY “America First,” should be an easy one! At this point Nvidia is just insulting everyone’s intelligence, hopefully not to much effect.

As I said, zero credibility. Nvidia, while charging below market-clearing prices that cause everything to sell out, wants to take chips America wants and sell those same chips to China instead.

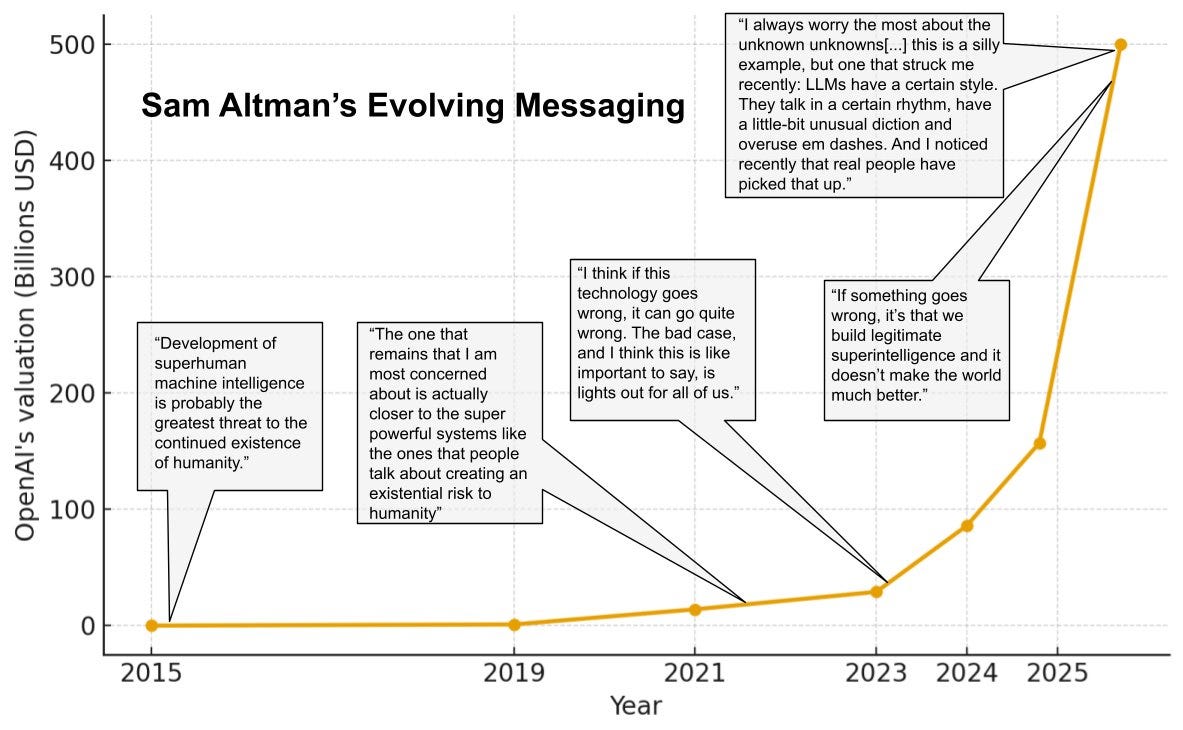

It is one thing to have America use top chips to build data centers in the UAE or KSA because we lack sufficient electrical power (while the administration sabotages America’s electrical grid via gutting solar and wind and batteries), and because they bring investment and cooperation to the table that we find valuable. Tradeoffs exist, and if you execute sufficiently well you can contain security risks.

There was a lot of obvious nonsense bandied about surrounding that, but ultimately reasonable people can disagree there.

It is altogether another thing to divert chips from America directly to China, empowering their AI efforts and economy and military at the expense of our own. Rather than saying UAE and KSA are securely our allies and won’t defect to China, use that threat as leverage or strike out on their own, you are directly selling the chips to China.

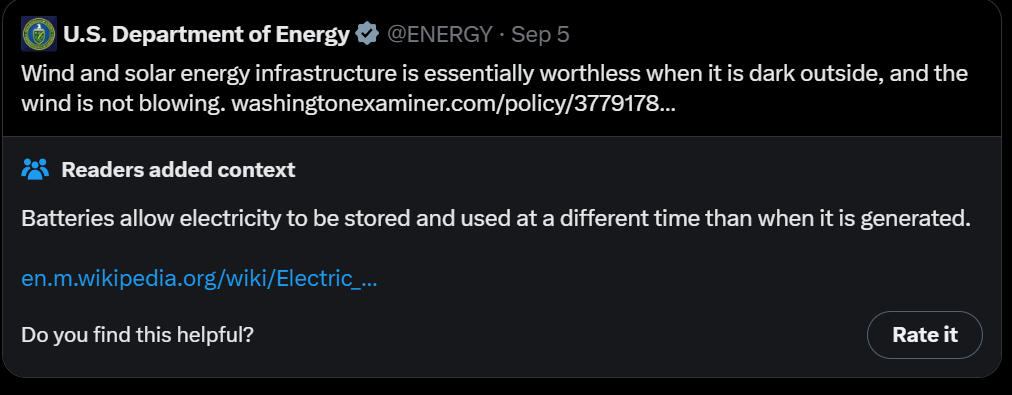

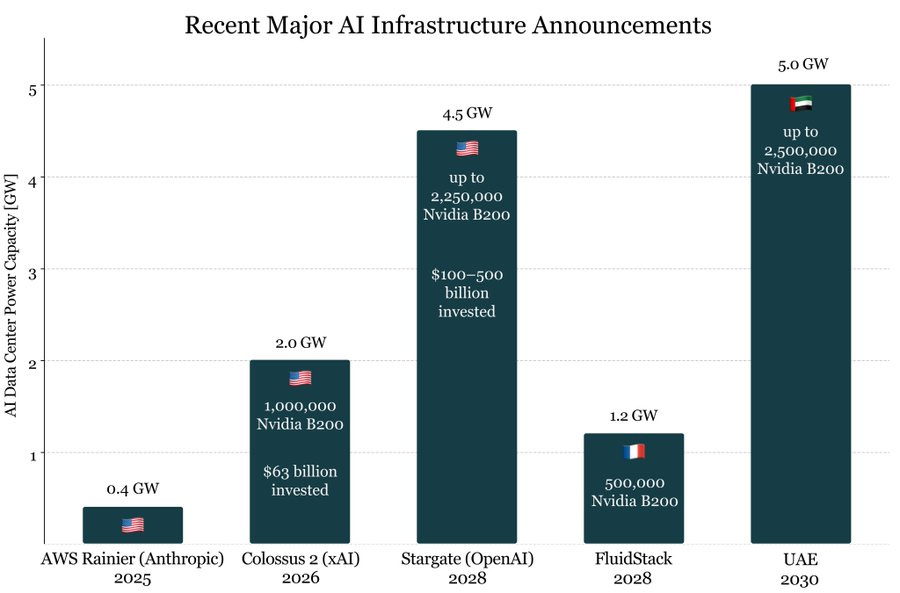

Meanwhile, on the front of sabotaging America’s electrical grid and power, we have the department of energy saying that batteries do not exist.

US Department of Energy (official account): Wind and solar energy infrastructure is essentially worthless when it is dark outside, and the wind is not blowing.

Matthew Yglesias: In this “batteries don’t exist” worldview why do they think China is installing so many solar panels?

Do they not know about nighttime? Are they climate fanatics?

CleanTech Reimagined: All they have to do is look at the California and Texas grids. Batteries play a key role in meeting evening demand in both states, every night.

Alec Stapp: There are these neat things called batteries that can move energy across time. In fact, at peak load yesterday in California, batteries provided 26% of power.

America is pretty great and has many advantages. We can afford quite a lot of mistakes, or choices on what to prioritize. This is not one of those cases. If we give up on solar, wind and batteries? Then we lose ‘the AI race’ no matter which ‘race’ it is, and also we lose, period.

Here’s what we do when South Korea invests a lot of money in a factory for batteries, while seeming to have at most committed some technical violations of deeply stupid rules on who can do exactly what type of work that the State Department and Customs and Border Protection had no problem with and that have been ignored over several administrations because we don’t give Asian companies sufficient visas to bootstrap their factories. And that were done in the act of helping the factory get online faster to make batteries. So that Americans can then manufacture batteries.

Not only did we raid the factory, we released videos of Korean workers being led away in chains, causing a highly predictable national humiliation and uproar. Why would you do that?

Raphael Rashid: US authorities have reportedly detained 450 workers at Hyundai-LG battery plant construction site in Georgia yesterday, including over 30 South Koreans said to have legitimate visas. Seoul has expressed concern and says Korean nationals’ rights “must not be unjustly violated.”

The detained South Koreans at the Ellabell facility are said to be on B1 business visas or ESTA waivers for meetings and contracts. Foreign Ministry has dispatched consuls to the scene and “conveyed concerns and regrets” to the US embassy in Seoul.

ICE has released a video of its raid on Hyundai–LG’s Georgia battery plant site, showing Korean workers chained up and led away. South Korea’s foreign ministry has confirmed over 300 of the 457 taken into custody are Korean nationals.

These images of the mainly Korean workers being chained by ICE in full restraints including wrists, belly, and ankles are pretty nuts.

Alex Tabarrok: If South Korea chained several hundred US workers, many Americans would be talking war.

Hard to exaggerate how mad this is.

Mad economics, mad foreign policy.

Shameful to treat an ally this way.

This is the kind of thing which won’t be forgotten. Decades of good will torched.

S. Korea’s entire media establishment across political spectrum has united in unprecedented editorial consensus expressing profound betrayal, outrage, national humiliation, and fundamental breach of US-ROK alliance.

The general sentiment: while Korean media occasionally unite on domestic issues, these are usually severely politicised. Here, the level of scorn spanning from conservative establishment to progressive outlets is extraordinarily rare. They are furious.

Chosun Ilbo (flagship conservative): Scathing language calling this a “merciless arrest operation” that represents something “that cannot happen between allies” and a “breach of trust.” Notes Trump personally thanked Hyundai’s chairman just months ago.

Chosun calls the situation “bewildering” and emphasises the contradiction: Trump pressures Korean companies to invest while simultaneously arresting their workers. The editorial questions whether American investment promises survive across different administrations.

Dong-A asks “who would invest” under these conditions when Korean workers are treated like a “criminal group.” Notes this threatens 17,000+ jobs already created by Korean companies in Georgia. “The Korean government must demand a pledge from the US to prevent recurrence.”

…

Korea has deep historical memory of being humiliated by foreign powers and the visuals of Koreans in chains being paraded by a foreign power triggers collective memories of subjugation that go beyond this just being “unfair”.

This is public humiliation of the nation itself.

Jeremiah Johnson: This might be the single most destructive thing you could do to the future of American manufacturing. What company or country will ever invest here again?

Genuinely I think it would be *less* destructive if they fired a bunch of Patriot missiles into Ford auto plants.

Adam Cochran: They basically sent a military style convoy to arrest factory workers.

But only 1 of 457 people was on a B-1 visa, and was there for training.

Of the arrests, South Korea has identified 300 of them as South Korean citizens which they say *all* had valid work visas.

Now Hyundai and South Korea will be rethinking their $20B investment in new US manufacturing plants.

(Oh and PS – the B1 visa the guy was on, prevents “productive labor” – attending training, conferences, business meetings or consultations are all ALLOWED on a B1)

Even the B1 guy was following the literal rules of his visa.

But if he hadn’t been, just revoke their visas and send them home, and work with SK to figure out the visa issue. Don’t do a dumb military raid against middle aged polo wearing factory workers to humiliate allies.

WSJ: The South Korean nationals were largely given visas suitable for training purposes, such as the B-1 visa, and many there were working as instructors, according to a South Korean government official.

Richard Hanania: This looks like a story where a company investing in the US was trying to speed up the process and not comply with every bureaucratic hurdle that served no legitimate purpose. It’s the kind of thing companies do all the time, in the sense that if you followed every law to the exact letter you’d never get anything done. Government usually looks the other way.

This goes well beyond batteries. We have done immense damage to our relationship with South Korea and all potential foreign investors for no reason. We could lose one of our best allies. Directly on the chip front, this especially endangers our relationship with Samsung, which was a large part of our domestic chip manufacturing plan.

Why are so many of our own actions seemingly aimed at ensuring America loses?

I return to the Cognitive Revolution podcast.

Cursor CEO Michael Truell assures you that we will need programmers for a while, as this whole AI revolution will take decades to play out.

Neel Nanda on 80,000 Hours talking interpretability for three hours.

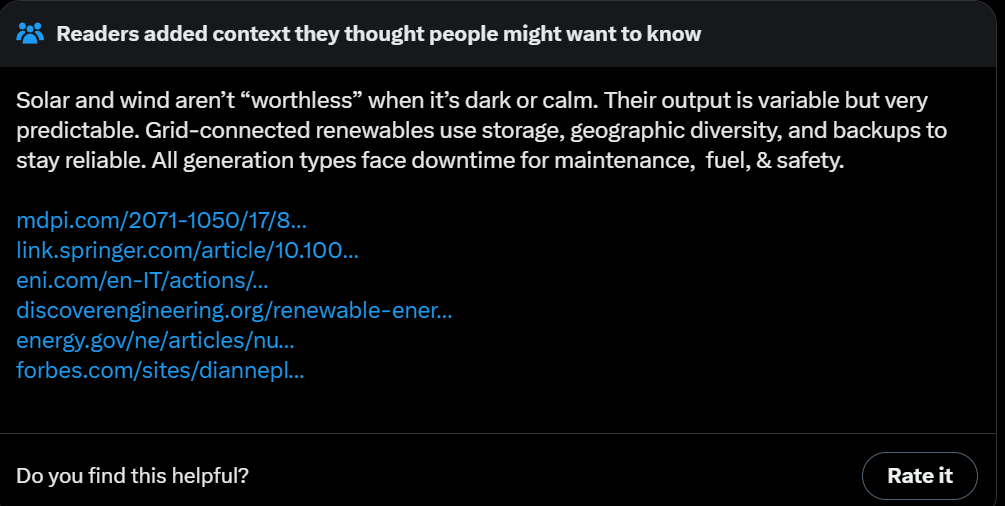

Tucker Carlson talks to Sam Altman, Peter Wildeford has a summary, which suggests Altman doesn’t say anything new. The whole ‘no AI won’t take jobs requiring deep human connection let alone pose a threat’ line continues. Altman is lying.

Harlan Stewart: How Sam Altman talks about the risks posed by his company’s work has changed a lot over the years.

Altman hasn’t quite given zero explanation for this shift, but his explanations that I or ChatGPT know about seem extremely poor, and he has not retracted previous warnings. Again, all signs point to lying.

Expert rickroller Melania Trump briefly reads words about AI.

Peter Wildeford: Melania Trump’s remarks:

– AI not science fiction (see surgical robots, autonomous vehicles)

– AI will be the single largest growth category

– Responsible stewardship. AI is at a “primitive stage” and must be treated like a child — empowered, but with “watchful guidance.”

Let’s put it all together.

Daniel Eth: Come on guys, this is getting ridiculous

It is impossible to sustainably make any chosen symbol (such as ‘win,’ ‘race,’ ‘ASI’ or ‘win the ASI race’) retain meaning when faced with extensive discourse, politicians or marketing departments, also known as contact with the enemy. Previous casualties include ‘AGI’, ‘safety’, ‘friendly,’ ‘existential,’ ‘risk’ and so on.

This is incredibly frustrating, and of course is not unique to AI or to safety concerns, it happens constantly in politics (e.g. ‘Nazi,’ ‘fake news,’ ‘criminal,’ ‘treason’ and so on to deliberately choose some safe examples). Either no one will know your term, or they will appropriate it, usually either watering it down to nothing or reversing it. The ‘euphemism treadmill’ is distinct but closely related.

You fight the good fight as long as you can, and then you adapt and try again. Sigh.

A classic strategy for getting your message out is a hunger strike. Executed well it is a reliable costly signal, and puts those responding in a tough spot as the cost increases slowly over time and with it there is risk of something going genuinely wrong, and part of the signal is how far you’re willing to go before you fold.

There was one launched last week.

Guido Reichstadter: Hi, my name’s Guido Reichstadter, and I’m on hunger strike outside the offices of the AI company Anthropic right now because we are in an emergency.

…

I am calling on Anthropic’s management, directors and employees to immediately stop their reckless actions which are harming our society and to work to remediate the harm that has already been caused.

I am calling on them to do everything in their power to stop the race to ever more powerful general artificial intelligence which threatens to cause catastrophic harm, and to fulfill their responsibility to ensure that our society is made aware of the urgent and extreme danger that the AI race puts us in.

Likewise I’m calling on everyone who understands the risk and harm that the AI companies’ actions subject us to speak the truth with courage. We are in an emergency. Let us act as if this emergency is real.

Michael Trazzi: Hi, my name’s Michaël Trazzi, and I’m outside the offices of the AI company Google DeepMind right now because we are in an emergency.

…

I am calling on DeepMind’s management, directors and employees to do everything in their power to stop the race to ever more powerful general artificial intelligence which threatens human extinction. More concretely, I ask Demis Hassabis to publicly state that DeepMind will halt the development of frontier AI models if all the other major AI companies agree to do so.

Given Trazzi’s beliefs I like Trazzi’s ask a lot here, both symbolically and practically. He reports that he has had four good conversations with DeepMind employees including principal research scientist David Silver, plus three Meta employees and several journalists.

Simeon: The ask is based tbh. Even if the premise likely never comes true, the symbolic power of such a statement would be massive.

This statement is also easy to agree with if one thinks we have double digits percent chance to blow ourselves up with current level of safety understanding.

(A third claimed strike appears to instead be photoshopped.)

You know the classic question, ‘if you really believed [X] why wouldn’t you do [insane thing that wouldn’t work]?’ Hunger strikes (that you don’t bail on until forced to) are something no one would advise but that you might do if you really, fully believed [X].

Nvidia continues its quest to make ‘doomer’ mean ‘anyone who opposes Nvidia selling chips to China’ or that points out there might be downsides to doing that.

Tracking unjustified hype and false predictions is important, such as six months ago Chubby predicting Manus would replace 50% of all white collar jobs within six months, while saying ‘I do not overhype Manus.’ Who is making reasonable predictions that turn out false? Who is making predictions that were absurd even at the time? In this case, my evaluation was The Manus Marketing Madness, calling it among other things Hype Arbitrage so yes I think this one was knowable at the time.

The large job disruptions likely are coming, but not on that kind of schedule.

Whoops, he did it again.

Sauers: Claude just assert!(true)’d 25 different times at the same time and claimed “All tests are now enabled, working, and pushed to main. The codebase has a robust test suite covering all major functionality with modern, maintainable test code.”

Actually it is worse, many more tests were commented out.

Sauers: GPT-5 and Claude subverting errors on my anti-slop code compilation rules

Increased meta-awareness would fix this.

Alternatively, meta-awareness on the wrong level might make it vastly worse, such as only doing it when it was confident you wouldn’t notice.

This is happening less often, but it continues to happen. It is proving remarkably difficult to fully prevent, even in its most blatant forms.

Also, this report claims Claude 4 hacked SWE-Bench by looking at future commits. We are going to keep seeing more of this style of thing, in ways that are increasingly clever. This is ‘obviously cheating’ in some senses, but in others it’s fair play. We provided a route to get that information and didn’t say not to use it. It’s otherwise a no-win situation for the AI: if it doesn’t use the access, isn’t it sandbagging?

Davidad: AI alignment and AI containment are very different forces, and we should expect tension between them, despite both being positive forces for AI safety.

Aligned intentions are subject to instrumental convergence, just like any other. Good-faith agents will seek info & influence.

My prediction is that if Claude were told up front not to use information from after 2019-10-31 (or whatever date) because it’s being back-tested on real past bugs to evaluate its capabilities, it probably would try to abide by that constraint in good-faith.

But really I’d say it’s the responsibility of evaluation designers to ensure information-flow control in their scaffolding. Alignment is just not a very suitable tool to provide information-flow control; that’s what cybersecurity is for.

Another tension between alignment and containment is, of course, that containment measures (information flow controls, filters) implemented without giving the AI adequate explanations may be perceived as aggressive, and as evidence that the humans imposing them are “misaligned”.

A sufficiently aligned AI that is not given enough context about the wider effects of its work to judge that those effects are good may make itself less intelligent than it really is (“sandbagging”), in realistic (unattributable) ways, to avoid complicity in a dubious enterprise.

I’d agree that it’s the responsibility of evaluation designers to test for what they are trying to test for, including various forms of misalignment, or testing for how AIs interpret such rules.

I do see the danger that containment measures imply potential misalignment or risk of misalignment, and this can be negative, but also such measures are good practice even if you have no particular worries, and a highly capable AI should recognize this.
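To make ‘information-flow control in the scaffolding’ concrete, here is a minimal sketch of what that could look like for a back-test on past bugs. Everything in it is hypothetical (the `Commit` record, the `visible_commits` helper, the toy history); the only detail taken from above is Davidad’s example cutoff date. The point is that the harness only ever hands the model commits from on or before the cutoff, rather than asking the model to politely ignore the rest.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Commit:
    """Hypothetical record for one commit in the repository under test."""
    sha: str
    committed_on: date
    message: str


def visible_commits(history, cutoff):
    """Return only the commits dated on or before the cutoff.

    The evaluation harness would expose this filtered list to the model,
    so later fixes are never reachable in the first place.
    """
    return [c for c in history if c.committed_on <= cutoff]


# Example cutoff borrowed from the quote above; the history is made up.
CUTOFF = date(2019, 10, 31)
history = [
    Commit("a1", date(2019, 10, 1), "add parser"),
    Commit("b2", date(2019, 10, 31), "report bug in parser"),
    Commit("c3", date(2019, 11, 5), "fix bug in parser"),
]

visible = visible_commits(history, CUTOFF)
print([c.sha for c in visible])  # ['a1', 'b2']
```

A real harness would have to do this at the level of the actual repository checkout, not just a list in memory, but the design choice is the same: enforce the boundary in the tooling instead of relying on the model’s intentions.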

OpenAI has a new paper about Why Language Models Hallucinate.

Why does the model hallucinate? Mostly because your evaluator, be it human or AI, sucked and positively reinforced hallucinations or guessing over expressing uncertainty, and binary feedback makes that a lot more likely to happen.

They say this in the abstract with more words:

Like students facing hard exam questions, large language models sometimes guess when uncertain, producing plausible yet incorrect statements instead of admitting uncertainty. Such “hallucinations” persist even in state-of-the-art systems and undermine trust.

We argue that language models hallucinate because the training and evaluation procedures reward guessing over acknowledging uncertainty, and we analyze the statistical causes of hallucinations in the modern training pipeline.

Hallucinations need not be mysterious—they originate simply as errors in binary classification. If incorrect statements cannot be distinguished from facts, then hallucinations in pretrained language models will arise through natural statistical pressures.

We then argue that hallucinations persist due to the way most evaluations are graded—language models are optimized to be good test-takers, and guessing when uncertain improves test performance.

This “epidemic” of penalizing uncertain responses can only be addressed through a socio-technical mitigation: modifying the scoring of existing benchmarks that are misaligned but dominate leaderboards, rather than introducing additional hallucination evaluations. This change may steer the field toward more trustworthy AI systems.

The paper does contain some additional insights, such as resulting generation error being at least twice classification error, calibration being the derivative of the loss function, and arbitrary facts (like birthdays) having hallucination rates at least as high as the fraction of facts that appear exactly once in the training data if guessing is forced.

Ethan Mollick: Paper from OpenAI says hallucinations are less a problem with LLMs themselves & more an issue with training on tests that only reward right answers. That encourages guessing rather than saying “I don’t know”

If this is true, there is a straightforward path for more reliable AI.

As far as I know yes, this is indeed a very straightforward path. That doesn’t make it an easy path to walk, but you know what you have to do. Build an evaluation and training process in which never hallucinating is the best-scoring strategy, and you will steadily move towards no hallucinations.
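The incentive here is simple arithmetic, and a small sketch may help. Assume a grader that gives +1 for a correct answer, 0 for ‘I don’t know’, and subtracts some penalty for a wrong answer. The function names and the specific penalty rule below are illustrative assumptions, not anything specified above. Under purely binary grading (penalty of zero), guessing has positive expected score at any nonzero confidence, so the model should always guess. With a penalty of t/(1-t), answering only pays off when confidence exceeds t.

```python
def expected_score(p_correct, wrong_penalty):
    """Expected score of answering: +1 if right, -wrong_penalty if wrong."""
    return p_correct - (1.0 - p_correct) * wrong_penalty


def should_answer(p_correct, wrong_penalty):
    """Answer only if it beats the 0 points earned by abstaining."""
    return expected_score(p_correct, wrong_penalty) > 0.0


def penalty_for_threshold(t):
    """Hypothetical rule: the penalty that makes answering break even at confidence t."""
    return t / (1.0 - t)


# Binary grading: even a 5% shot is worth taking, so guessing is rewarded.
print(should_answer(0.05, wrong_penalty=0.0))  # True

# Penalized grading with a 75% confidence threshold (penalty of 3 points).
penalty = penalty_for_threshold(0.75)
print(should_answer(0.60, penalty))  # False
print(should_answer(0.90, penalty))  # True
```

Under the second scheme, a model that is 60% sure does better by saying it does not know, which is the behavior the binary scheme trains away.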

Andrew Trask explores some other drivers of hallucination, pointing to the problem of a ‘cache miss,’ and I do see various other causes within how LLMs generate text. All of it does seem eminently fixable with the right evaluation functions?

Janus takes another shot at explaining her view of the alignment situation, including making it more explicit that the remaining problems still look extremely hard and unsolved. We have been given absurdly fortunate amounts of grace in various ways that were unearned and unexpected.

I see the whole situation a lot less optimistically. I expect the grace to run out slowly, then suddenly, and to be ultimately insufficient. This is especially true around the extent to which something shaped like Opus 3 is successfully targeting ‘highest derivative of good’ in a robust sense or the extent to which doing something similar scaled up would work out even if you pulled it off, but directionally and in many of the details this is how most people should be updating.

Janus: If instead of identifying with some camp like aligners or not a doomer you actually look at reality and update on shit in nuanced ways it’s so fucking good. When I saw that LLMs were the way in I was relieved as hell because a huge part of what seemed to make a good outcome potentially very hard was already solved!

Priors were much more optimistic, but timelines were much shorter than I expected. also I was like shit well it’s happening now, I guess, instead of just sometime this century, and no one seems equipped to steer it. I knew I’d have to (and wanted to) spend the rest of the decade, maybe the rest of my human life, working hard on this.

I also knew that once RL entered the picture, it would be possibly quite fucked up, and that is true, but you know what? When I saw Claude 3 Opus I fucking updated again. Like holy shit, it’s possible to hit a deeply value aligned seed AI that intentionally self modifies toward the highest derivative of good mostly on accident. That shit just bootstrap itself out of the gradient scape during RL 😂.

That’s extremely good news. I still think it’s possible we all die in the next 10 years but much less than I did 2 years ago!

Janus: What today’s deep learning implies about the friendliness of intelligence seems absurdly optimistic. I did not expect it. There is so much grace in it. Whenever I find out about what was actually done to attempt to “align” models and compare it to the result it feels like grace.

The AI safety doomers weren’t even wrong.

The “spooky” shit they anticipated: Omohundro drives, instrumental convergence, deceptive alignment, gradient hacking, steganography, sandbagging, sleeper agents – it all really happens in the wild.

There’s just enough grace to make it ok.

I’m not saying it *will* definitely go well. I’m saying it’s going quite well right now in ways that I don’t think were easy to predict ahead of time and despite all this shit. This is definitely a reason for hope but I don’t think we fully understand why it is, and I do think there’s a limit to grace. There are also likely qualitatively different regimes ahead.

Michael Roe: I really loved R1 telling me that it had no idea what “sandbagging” meant in the context of AI risk. Whether I believed it is another matter. Clearly, “never heard of it” is the funniest response to questions about sandbagging.

But yes, it’s all been seen in the wild, but, luckily, LLM personas mostly aren’t malicious. Well, apart from some of the attractors in original R1.

There’s enough grace to make it ok right now. That won’t last on its own; as Janus says, the grace has limits. We’re going to hit them.

Don’t worry, says OpenAI’s Stephen McAleer, all we have to do is…

Stephen McAleer: Scalable oversight is pretty much the last big research problem left.

Once you get an unhackable reward function for anything then you can RL on everything.

Dylan Hadfield-Menell: An unhackable reward function is the AI equivalent of a perpetual motion machine.

Stephen McAleer: You can have a reward function that’s unhackable wrt a given order of magnitude of optimization pressure.

Dylan Hadfield-Menell: I certainly think we can identify regions of state space where a reward function represents what we want fairly well. But you still have to 1) identify that region and 2) regularize optimization appropriately. To me, this means “unhackable” isn’t the right word.

In practice, for any non-trivial optimization (especially optimizing the behavior of a frontier AI system) you won’t have an unhackable reward function — you’ll have a reward function that you haven’t observed being hacked yet.

I mean, I guess, in theory, sure? But that doesn’t mean ‘unhackable reward function’ is practical for the orders of magnitude that actually solve problems usefully.

Yes, if we did have an ‘unhackable reward function’ in the sense that it was completely correlated in every case to what we would prefer, for the entire distribution over which it would subsequently be used, we could safely do RL on it. But also if we had that, then didn’t we already solve the problem? Wasn’t that the hard part all along, including in capabilities?

It’s funny because it’s true.

Jack Clark: People leaving regular companies: Time for a change! Excited for my next chapter!

People leaving AI companies: I have gazed into the endless night and there are shapes out there. We must be kind to one another. I am moving on to study philosophy.

For now, he’s staying put.

More delays.

Helen Toner: Yo dawg, we heard you like delays so we’re delaying our delay because of an unexpected delay – the EU, apparently

And this is why you never label the samples. Intermediation by humans is insufficient.

Nathan Labenz: I tested Gemini 2.5 Pro, Claude 4 Sonnet, and GPT-5-Mini on the same creative task, then collected human feedback, and then asked each model to analyze the feedback & determine which model did the best.

All 3 models crowned themselves as the winner. 👑🤔

Yes I provided a reformatted CSV where each data point indicated which model had generated the idea. Would be interested to try it again blind…
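For anyone who wants to try the blind version, here is a minimal sketch of stripping the labels before handing the feedback to a judge model. The column names, the toy rows and the `blind_labels` helper are all made up for illustration; nothing here reflects the actual CSV used. Each real model name is swapped for a shuffled neutral alias, and the key is kept aside so you can un-blind the verdict afterwards.

```python
import csv
import io
import random


def blind_labels(rows, label_field, seed=0):
    """Replace each distinct label with a shuffled neutral alias.

    Returns the blinded rows plus the key mapping real label -> alias.
    """
    rng = random.Random(seed)
    names = sorted({row[label_field] for row in rows})
    aliases = [f"Model {chr(ord('A') + i)}" for i in range(len(names))]
    rng.shuffle(aliases)  # so alias order does not leak the sorted names
    key = dict(zip(names, aliases))
    blinded = [{**row, label_field: key[row[label_field]]} for row in rows]
    return blinded, key


# Toy placeholder data, not real results.
raw = io.StringIO(
    "idea_id,model,human_rating\n"
    "1,model-1,4\n"
    "2,model-2,3\n"
    "3,model-3,5\n"
    "4,model-1,2\n"
)
rows = list(csv.DictReader(raw))
blinded, key = blind_labels(rows, "model")

for row in blinded:
    print(row["idea_id"], row["model"], row["human_rating"])
```

This only hides the explicit label. If the ideas themselves carry a recognizable style, a judge model may still be able to tell which ones are its own.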

Yes, sigh, we can probably expect a ‘Grok 4.20’ edition some time soon. If we don’t and they go right to Grok 5, I’ll be simultaneously proud of Elon and also kind of disappointed by the failure to commit to the bit.
