Why imperfection could be key to Turing patterns in nature

In essence, it’s a type of symmetry breaking. Any two processes that act as activator and inhibitor can produce periodic patterns, provided the inhibitor spreads faster than the activator, and can be modeled using Turing’s reaction-diffusion equations. The challenge is moving from Turing’s admittedly simplified model to pinpointing the precise mechanisms that serve as the activator and inhibitor.
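
The activator-inhibitor idea can be made concrete with the standard linear stability check for a two-species reaction-diffusion system. This is a minimal sketch, not the model from the paper: the reaction coefficients are hypothetical numbers chosen so that the uniform state is stable on its own. At spatial wavenumber k, diffusion subtracts k² times each species’ diffusivity from that species’ self-interaction term, and a pattern can only emerge if some k > 0 has a positive growth rate.

```python
import math

def growth_rate(a, b, c, d, Du, Dv, k):
    """Largest real part of the eigenvalues of J - k**2 * diag(Du, Dv).

    J = [[a, b], [c, d]] is the Jacobian of the (hypothetical) reaction terms
    at the uniform steady state; k is the wavenumber of a small perturbation.
    """
    # Diffusion damps each species in proportion to its diffusivity and k**2.
    a_k = a - Du * k**2
    d_k = d - Dv * k**2
    tr = a_k + d_k
    det = a_k * d_k - b * c
    disc = tr**2 / 4 - det
    # Real eigenvalues if disc >= 0, otherwise a complex pair with real part tr/2.
    return tr / 2 + math.sqrt(disc) if disc >= 0 else tr / 2

def fastest_mode(a, b, c, d, Du, Dv, k_max=3.0, n=601):
    """Scan wavenumbers 0..k_max and return (largest growth rate, its wavenumber)."""
    ks = [k_max * i / (n - 1) for i in range(n)]
    return max((growth_rate(a, b, c, d, Du, Dv, k), k) for k in ks)

# Hypothetical activator-inhibitor coefficients:
# a > 0: activator enhances itself;  b < 0: inhibitor suppresses activator;
# c > 0: activator produces inhibitor; d < 0: inhibitor decays.
A, B, C, D = 1.0, -1.0, 2.0, -1.5

print(growth_rate(A, B, C, D, 1.0, 1.0, 0.0))  # -0.25: uniform state is stable
print(fastest_mode(A, B, C, D, 1.0, 1.0))      # equal diffusivities: rate stays negative
print(fastest_mode(A, B, C, D, 1.0, 20.0))     # fast inhibitor: positive rate at some k > 0
```

With equal diffusivities every perturbation decays. Raising the inhibitor’s diffusivity opens a band of unstable wavenumbers, and in this linear picture the fastest-growing one sets the pattern spacing, roughly 2π/k.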

This is especially challenging in biology. Per the authors of this latest paper, the classical Turing mechanism balances reaction and diffusion using a single length scale, but biological patterns often incorporate multiscale structures, grain-like textures, or inherent imperfections. And the patterns the classical model produces are often much blurrier than those found in nature.

Can you say “diffusiophoresis”?

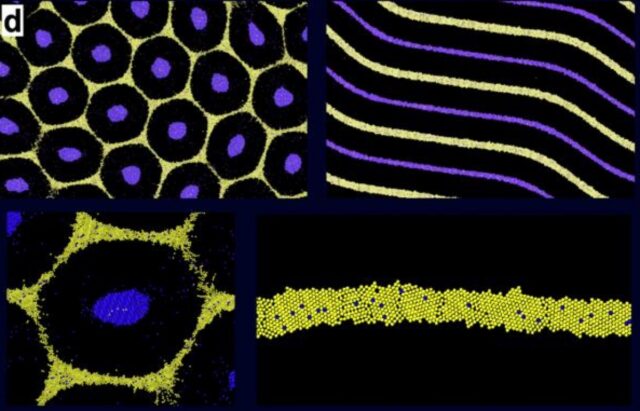

Simulated hexagon and stripe patterns obtained by diffusiophoretic assembly of two types of cells on top of the chemical patterns. Credit: Siamak Mirfendereski and Ankur Gupta/CU Boulder

In 2023, CU Boulder biochemical engineers Ankur Gupta and Benjamin Alessio developed a new model that added diffusiophoresis into the mix. It’s a process by which colloids are transported by gradients in solute concentration, the same process by which soap diffusing out of laundry in water drags particles of dirt out of the fabric. Gupta and Alessio successfully used their new model to simulate the distinctive hexagon pattern (alternating purple and black) on the ornate boxfish, native to Australia, achieving much sharper outlines than the model originally proposed by Turing.
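
Why diffusiophoresis can sharpen outlines can be sketched with a common textbook description of the drift, u = Γ∇ln c, where c is the solute concentration and Γ a diffusiophoretic mobility. Assuming that form, plus Brownian motion with particle diffusivity D_p, zero net particle flux at steady state gives a density n ∝ c^(Γ/D_p), so whenever Γ exceeds D_p the particle pattern is sharper than the chemical pattern beneath it. This is not the authors’ model; the cosine solute profile and the ratio Γ/D_p = 5 are hypothetical.

```python
import math

def concentration(x, period=1.0, amp=0.5):
    """Hypothetical periodic solute pattern c(x) = 1 + amp*cos(2*pi*x/period)."""
    return 1.0 + amp * math.cos(2 * math.pi * x / period)

def particle_density(x, ratio):
    """Unnormalised steady-state density n(x), proportional to c(x)**(Gamma/D_p)."""
    return concentration(x) ** ratio

def contrast(f):
    """Peak-to-trough ratio of a profile with its peak at x=0 and trough at x=0.5."""
    return f(0.0) / f(0.5)

def fwhm_fraction(f, samples=10_000):
    """Fraction of one period where f is at least half of its maximum value."""
    xs = [i / samples - 0.5 for i in range(samples)]
    vals = [f(x) for x in xs]
    half = max(vals) / 2
    return sum(v >= half for v in vals) / samples

RATIO = 5.0  # hypothetical Gamma / D_p

def density(x):
    """Particle density for the hypothetical mobility ratio above."""
    return particle_density(x, RATIO)

print(contrast(concentration), contrast(density))            # about 3 vs 243 (= 3**5)
print(fwhm_fraction(concentration), fwhm_fraction(density))  # about 0.67 vs 0.29
```

The chemical pattern occupies about two-thirds of each period above half its peak, while the particles it gathers occupy less than a third, which is the qualitative sense in which the assembled pattern has crisper edges.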

The problem was that the simulations produced patterns that were too perfect: hexagons that were all the same size and shape and an identical distance apart. Animal patterns in nature, by contrast, are never perfectly uniform. So Gupta and his CU Boulder co-author on this latest paper, Siamak Mirfendereski, figured out how to tweak the model to reproduce those imperfections. All they had to do was assign specific sizes to individual cells. For instance, larger cells create thicker outlines, and when they cluster, they produce broader patterns. And sometimes the cells jam up and interrupt a stripe. Their revised simulations produced patterns and textures very similar to those found in nature.
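
The effect of cell size can be illustrated with a toy one-dimensional sketch, which is not the authors’ model. It assumes cells are hard rods of diameter d that drift toward the peak of a hypothetical solute profile with the textbook diffusiophoretic velocity u = Γ d(ln c)/dx, and that they may not overlap. Overlaps are resolved by a simple position-projection step, an assumption chosen for brevity. Because the cells pile up against one another instead of collapsing onto the peak, the settled band is roughly N·d wide for N cells.

```python
import math

def drift(x, gamma=1.0, period=1.0, amp=0.5):
    """Velocity u = gamma * d(ln c)/dx for a hypothetical c = 1 + amp*cos(k*x)."""
    k = 2 * math.pi / period
    return gamma * (-amp * k * math.sin(k * x)) / (1 + amp * math.cos(k * x))

def settle(positions, d, dt=0.01, steps=2000, passes=20):
    """Drift cells toward the solute peak at x=0 while forbidding overlap."""
    x = sorted(positions)
    for _ in range(steps):
        # Explicit Euler step of the diffusiophoretic drift.
        x = [xi + dt * drift(xi) for xi in x]
        # Push overlapping neighbours apart symmetrically, repeated a few times.
        for _ in range(passes):
            for i in range(len(x) - 1):
                gap = x[i + 1] - x[i]
                if gap < d:
                    shift = (d - gap) / 2
                    x[i] -= shift
                    x[i + 1] += shift
    return x

def band_width(x, d):
    """Extent of the settled cluster, including the outer cells' own size."""
    return x[-1] - x[0] + d

start = [-0.4, -0.2, 0.0, 0.2, 0.4]  # five hypothetical cells
for d in (0.02, 0.04):
    print(d, round(band_width(settle(start, d), d), 3))
```

In this toy setting, doubling the cell diameter roughly doubles the width of the band the cells form around the concentration peak.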

“Imperfections are everywhere in nature,” said Gupta. “We proposed a simple idea that can explain how cells assemble to create these variations. We are drawing inspiration from the imperfect beauty of [a] natural system and hope to harness these imperfections for new kinds of functionality in the future.” Possible future applications include “smart” camouflage fabrics that can change color to better blend with the surrounding environment, or more effective targeted drug delivery systems.

Matter, 2025. DOI: 10.1016/j.matt.2025.102513.
