Terminator’s Cameron joins AI company behind controversial image generator

a net in the sky —

Famed sci-fi director joins board of embattled Stability AI, creator of Stable Diffusion.

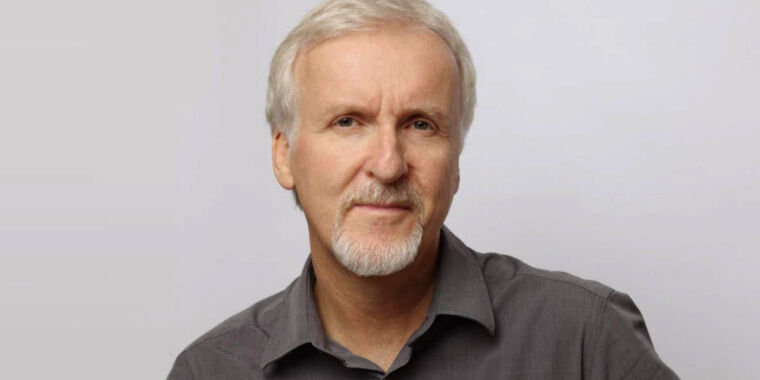

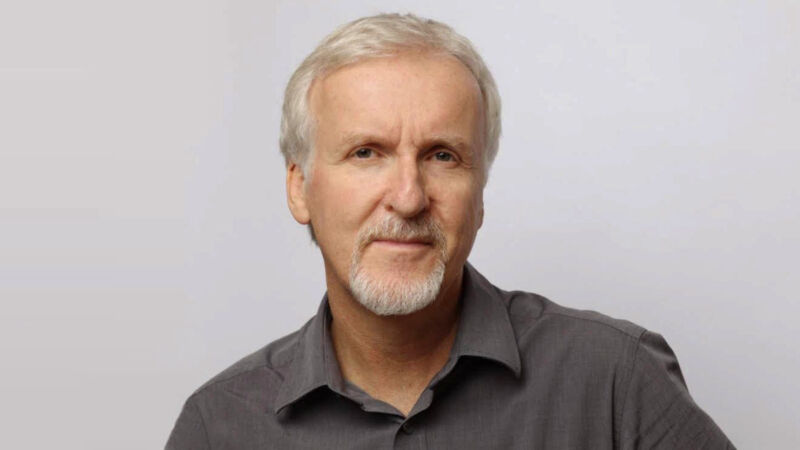

Filmmaker James Cameron.

On Tuesday, Stability AI announced that renowned filmmaker James Cameron—of Terminator and Skynet fame—has joined its board of directors. Stability is best known for its pioneering but highly controversial Stable Diffusion series of AI image-synthesis models, first launched in 2022, which can generate images based on text descriptions.

“I’ve spent my career seeking out emerging technologies that push the very boundaries of what’s possible, all in the service of telling incredible stories,” said Cameron in a statement. “I was at the forefront of CGI over three decades ago, and I’ve stayed on the cutting edge since. Now, the intersection of generative AI and CGI image creation is the next wave.”

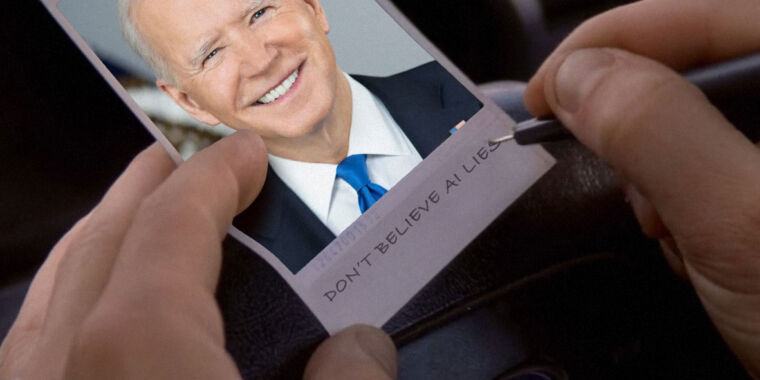

Cameron is perhaps best known as the director behind blockbusters like Avatar, Titanic, and Aliens, but in AI circles, he may be most relevant for the co-creation of the character Skynet, a fictional AI system that triggers nuclear Armageddon and dominates humanity in the Terminator media franchise. Similar fears of AI taking over the world have since jumped into reality and recently sparked attempts to regulate existential risk from AI systems through measures like SB-1047 in California.

In a 2023 interview with CTV News, Cameron referenced The Terminator’s release year when asked about AI’s dangers: “I warned you guys in 1984, and you didn’t listen,” he said. “I think the weaponization of AI is the biggest danger. I think that we will get into the equivalent of a nuclear arms race with AI, and if we don’t build it, the other guys are for sure going to build it, and so then it’ll escalate.”

Hollywood goes AI

Of course, Stability AI isn’t building weapons controlled by AI. Instead, Cameron’s interest in cutting-edge filmmaking techniques apparently drew him to the company.

“James Cameron lives in the future and waits for the rest of us to catch up,” said Stability CEO Prem Akkaraju. “Stability AI’s mission is to transform visual media for the next century by giving creators a full stack AI pipeline to bring their ideas to life. We have an unmatched advantage to achieve this goal with a technological and creative visionary like James at the highest levels of our company. This is not only a monumental statement for Stability AI, but the AI industry overall.”

Cameron joins other recent additions to Stability AI’s board, including Sean Parker, former president of Facebook, who serves as executive chairman. Parker called Cameron’s appointment “the start of a new chapter” for the company.

Despite significant protest from actors’ unions last year, elements of Hollywood are seemingly beginning to embrace generative AI. Last Wednesday, we covered a deal between Lionsgate and AI video-generation company Runway that will see the creation of a custom AI model for film production use. In March, the Financial Times reported that OpenAI was actively showing off its Sora video synthesis model to studio executives.

Unstable times for Stability AI

Cameron’s appointment to the Stability AI board comes during a tumultuous period for the company. Stability AI has faced a series of challenges this past year, including an ongoing class-action copyright lawsuit, a troubled Stable Diffusion 3 model launch, significant leadership and staff changes, and ongoing financial concerns.

In March, founder and CEO Emad Mostaque resigned, followed by a round of layoffs. This came on the heels of the departure of three key engineers (Robin Rombach, Andreas Blattmann, and Dominik Lorenz), who have since founded Black Forest Labs and released a new open-weights image-synthesis model called Flux, which has begun to take over the r/StableDiffusion community on Reddit.

Despite the issues, Stability AI claims its models are widely used, with Stable Diffusion reportedly surpassing 150 million downloads. The company states that thousands of businesses use its models in their creative workflows.

While Stable Diffusion has indeed spawned a large community of open-weights-AI image enthusiasts online, it has also been a lightning rod for controversy among some artists because Stability originally trained its models on hundreds of millions of images scraped from the Internet without seeking licenses or permission to use them.

Apparently that association is not a concern for Cameron, according to his statement: “The convergence of these two totally different engines of creation [CGI and generative AI] will unlock new ways for artists to tell stories in ways we could have never imagined. Stability AI is poised to lead this transformation.”
