The hunt for rare bitcoin is nearing an end

Rarity from thin air

Rare bitcoin fragments are worth many times their face value.

Getty Images | Andriy Onufriyenko

Billy Restey is a digital artist who runs a studio in Seattle. But after hours, he hunts for rare chunks of bitcoin. He does it for the thrill. “It’s like collecting Magic: The Gathering or Pokémon cards,” says Restey. “It’s that excitement of, like, what if I catch something rare?”

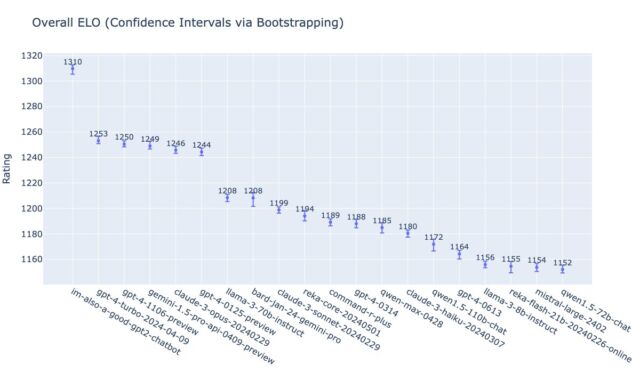

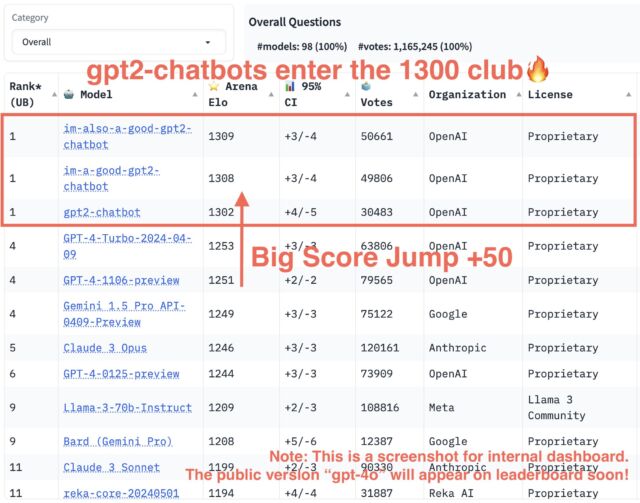

In the same way a dollar is made up of 100 cents, one bitcoin is composed of 100 million satoshis, or sats for short. But not all sats are made equal. Those produced in the year bitcoin was created are considered vintage, like a fine wine. Other coveted sats were part of transactions made by bitcoin’s inventor. Some correspond to a particular transaction milestone. These and various other properties make some sats scarcer than others, and therefore more valuable. The very rarest can sell for billions of times their face value; in April, a single sat, normally worth $0.0006, sold for $2.1 million.
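The figures above can be checked with a few lines of arithmetic. This is a minimal sketch in Python. The `BTC_PRICE_USD` constant is an assumption: a price of $60,000 is simply what the stated $0.0006-per-sat face value implies, not a figure from the article. Exact fractions are used so floating-point rounding doesn't distort the result.

```python
from fractions import Fraction

SATS_PER_BTC = 100_000_000  # one bitcoin = 100 million sats

# Assumption: the bitcoin price implied by a $0.0006 face value per sat.
BTC_PRICE_USD = Fraction(60_000)


def sat_face_value(btc_price_usd: Fraction) -> Fraction:
    """Dollar value of a single sat at a given bitcoin price."""
    return btc_price_usd / SATS_PER_BTC


def premium_multiple(sale_price_usd: Fraction, face_value_usd: Fraction) -> Fraction:
    """How many times its face value a sat sold for."""
    return sale_price_usd / face_value_usd


face = sat_face_value(BTC_PRICE_USD)
multiple = premium_multiple(Fraction(2_100_000), face)

print(f"face value per sat: ${float(face):.4f}")
print(f"sale multiple: {float(multiple):,.0f}x")
```

At that price, the $2.1 million sale works out to roughly 3.5 billion times the sat's face value.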

Restey is part of a small, tight-knit band of hunters trying to root out these rare sats, which are scattered across the bitcoin network. They do this by depositing batches of bitcoin with a crypto exchange, then withdrawing the same amount, a little like depositing cash with a bank teller and immediately taking it out again from the ATM outside. The coins they receive in return are not the same ones they deposited, which gives them a fresh stash to sift through. They rinse and repeat.

In April 2023, when Restey started out, he was one of very few people hunting for rare sats, and the process was entirely manual. Now he uses third-party software to filter his coins automatically and separate out any precious sats, which he can usually sell for around $80. “I’ve sifted through around 230,000 bitcoin at this point,” he says.

Restey has unearthed thousands of uncommon sats to date, selling only enough to cover transaction fees and turn a small profit, and keeping the rest for his own collection. But the window of opportunity is closing. The number of rare sats yet to be discovered is steadily shrinking, and as large organizations cotton on, individual hunters risk getting squeezed out. “For a lot of people, it doesn’t make [economic] sense anymore,” says Restey. “But I’m still sat hunting.”

Rarity out of thin air

Bitcoin has been around for 15 years, but rare sats have existed for barely more than 15 months. In January 2023, computer scientist Casey Rodarmor released the Ordinals protocol, which sits as a layer on top of the bitcoin network. His aim was to give bitcoin its own equivalent of non-fungible tokens (NFTs), in which ownership of a piece of digital media is represented by a sat. He called them “inscriptions.”

Until then, there had been no way to tell one sat from another. To solve this, Rodarmor built into the Ordinals protocol a way to distinguish individual sats for the first time: each one is numbered in order, from oldest to newest. Rare sats were thus born as a side effect of a mechanism designed for something else entirely.
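To make the numbering concrete, here is a minimal Python sketch of how ordinal numbers can be derived from bitcoin's issuance schedule. It assumes the conventions set out in the Ordinals documentation: sats are numbered from 0 in the order they are mined, the block subsidy starts at 50 BTC and halves every 210,000 blocks, and the first sat of each block is graded by the milestone that block falls on. The function names are hypothetical and are not the API of Rodarmor's `ord` software. The sketch also covers only these basic tiers. Properties such as a sat's vintage or a link to a transaction by bitcoin's inventor are not captured here.

```python
# Hypothetical sketch of ordinal numbering, assuming every block
# pays the full scheduled subsidy. Not ord's actual implementation.
COIN = 100_000_000            # sats per bitcoin
HALVING_INTERVAL = 210_000    # blocks between subsidy halvings
DIFFICULTY_PERIOD = 2_016     # blocks between difficulty adjustments
CYCLE = 6 * HALVING_INTERVAL  # halvings and difficulty adjustments coincide every 6 halvings


def subsidy(height: int) -> int:
    """Block subsidy in sats: 50 BTC, halved every 210,000 blocks."""
    halvings = height // HALVING_INTERVAL
    return 0 if halvings >= 64 else (50 * COIN) >> halvings


def first_sat(height: int) -> int:
    """Ordinal number of the first sat created in block `height`."""
    total, epoch = 0, 0
    # Add up complete halving epochs, each with a constant subsidy.
    while (epoch + 1) * HALVING_INTERVAL <= height:
        total += subsidy(epoch * HALVING_INTERVAL) * HALVING_INTERVAL
        epoch += 1
    # Then add the blocks already mined in the current epoch.
    return total + subsidy(height) * (height - epoch * HALVING_INTERVAL)


def height_of(sat: int) -> int:
    """Height of the block in which `sat` was created."""
    remaining, epoch = sat, 0
    while True:
        reward = subsidy(epoch * HALVING_INTERVAL)
        if reward == 0:
            raise ValueError("sat number exceeds the total supply")
        epoch_sats = reward * HALVING_INTERVAL
        if remaining < epoch_sats:
            return epoch * HALVING_INTERVAL + remaining // reward
        remaining -= epoch_sats
        epoch += 1


def rarity(sat: int) -> str:
    """Grade a sat by the milestone its block falls on (basic tiers only)."""
    height = height_of(sat)
    if sat != first_sat(height):
        return "common"        # not the first sat of its block
    if height == 0:
        return "mythic"        # first sat of the genesis block
    if height % CYCLE == 0:
        return "legendary"     # first sat of a cycle
    if height % HALVING_INTERVAL == 0:
        return "epic"          # first sat of a halving epoch
    if height % DIFFICULTY_PERIOD == 0:
        return "rare"          # first sat of a difficulty period
    return "uncommon"          # first sat of any other block


for sat in (0, 1, 5_000_000_000, first_sat(2_016), first_sat(210_000)):
    print(sat, rarity(sat))
```

Run as-is, it grades sat 0 as mythic and sat 1 as common. It grades the first sats of blocks 1, 2,016, and 210,000 as uncommon, rare, and epic, respectively. Only one sat per block can be anything other than common, which is why such sats are scarce.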

By allowing sats to be sequenced and tracked, Rodarmor had changed a system in which every bitcoin was freely interchangeable into one in which not all units of bitcoin are equal. He had created rarity out of thin air. “It’s an optional, sort of pretend lens through which to view bitcoin,” says Rodarmor. “It creates value out of nothing.”
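Tracking works because the protocol also defines how numbered sats move between transactions. According to the Ordinals documentation, sats flow from a transaction's inputs to its outputs in first-in, first-out order. The sketch below models each input as a list of sat ranges (half-open `(start, end)` pairs) and hands them to outputs in that order. The data layout and the `assign_fifo` name are hypothetical. Fee handling is simplified to returning the leftover ranges, which in the protocol go to the miner along with the fee.

```python
# Hypothetical, simplified model of the FIFO rule; not ord's implementation.
Range = tuple[int, int]  # half-open interval of ordinal numbers [start, end)


def assign_fifo(inputs: list[list[Range]], output_values: list[int]):
    """Distribute input sat ranges to outputs, first in, first out.

    Returns (ranges for each output, leftover ranges paid as the fee).
    """
    # Line up every input's ranges, in input order, as one queue.
    queue = [r for inp in inputs for r in inp]
    outputs: list[list[Range]] = []
    for value in output_values:
        assigned: list[Range] = []
        need = value
        while need > 0:
            if not queue:
                raise ValueError("outputs exceed inputs")
            start, end = queue.pop(0)
            if end - start <= need:
                # The whole range fits into this output.
                assigned.append((start, end))
                need -= end - start
            else:
                # Split the range: the front goes here, the rest stays queued.
                assigned.append((start, start + need))
                queue.insert(0, (start + need, end))
                need = 0
        outputs.append(assigned)
    return outputs, queue


outs, fee = assign_fifo([[(100, 110)], [(500, 505)]], [8, 5])
print(outs)  # sat ranges received by each output
print(fee)   # leftover sats that would go to the miner
```

Under this rule, a sat's position among a transaction's inputs determines which output it ends up in.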

When the Ordinals system was first released, it divided bitcoiners. Inscriptions were a near-instant hit, but some saw them as a bastardization of bitcoin’s true purpose as a system for peer-to-peer payments, while others had a “reflexive allergic reaction,” says Rodarmor, to anything that so much as resembled an NFT. As people began to experiment with the new functionality, the enthusiasm for inscriptions congested the network, driving transaction fees to a two-year high and adding fuel to an already fiery debate. One bitcoin developer called for inscriptions to be banned. Those who trade in rare sats have come under attack, too, says Danny Diekroeger, another sat hunter. “Bitcoin maximalists hate this stuff, and they hate me,” he says.

The fuss around the Ordinals system has by now mostly died down, says Rodarmor, but a “loud minority” on X is still “infuriated” by the invention. “I wish hardcore bitcoiners understood that people are going to do things with bitcoin that they think are stupid—and that’s okay,” says Rodarmor. “Just, like, get over it.”

The hunt for rare sats, itself an eccentric mutation of the bitcoin system, falls into that bracket. “It’s highly wacky,” says Rodarmor.
