MIT License text becomes viral “sad girl” piano ballad generated by AI

WARRANTIES OF MERCHANTABILITY

“Permission is hereby granted” comes from the Suno AI engine, which creates new songs on demand.

We’ve come a long way since primitive AI music generators in 2022. Today, AI tools like Suno.ai allow any series of words to become song lyrics, including inside jokes (as you’ll see below). On Wednesday, prompt engineer Riley Goodside tweeted an AI-generated song created with the prompt “sad girl with piano performs the text of the MIT License,” and it began to circulate widely in the AI community online.

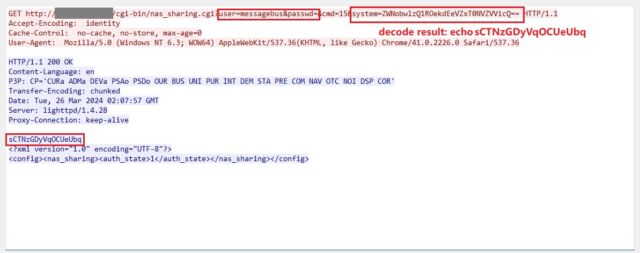

The MIT License is a famous permissive software license created in the late 1980s, frequently used in open source projects. “My favorite part of this is ~1:25 it nails ‘WARRANTIES OF MERCHANTABILITY’ with a beautiful Imogen Heap-style glissando then immediately pronounces ‘FITNESS’ as ‘fistiff,’” Goodside wrote on X.

Suno (which means “listen” in Hindi) was formed in 2023 in Cambridge, Massachusetts. It’s the brainchild of Michael Shulman, Georg Kucsko, Martin Camacho, and Keenan Freyberg, who formerly worked at companies like Meta and TikTok. Suno has already attracted big-name partners, such as Microsoft, which announced the integration of an earlier version of the Suno engine into Bing Chat last December. Today, Suno is on v3 of its model, which can create temporally coherent two-minute songs in many different genres.

The company did not reply to our request for an interview by press time. In March, Brian Hiatt of Rolling Stone wrote a profile about Suno that describes the service as a collaboration between OpenAI’s ChatGPT (for lyric writing) and Suno’s music generation model, which some experts think has likely been trained on recordings of copyrighted music without license or artist permission.

It’s exactly this kind of service that upset over 200 musical artists enough last week that they signed an Artist Rights Alliance open letter asking tech companies to stop using AI tools to generate music that could replace human artists.

Considering the unknown provenance of the training data, ownership of the generated songs seems like a complicated question. Suno’s FAQ says that music generated using its free tier remains owned by Suno and can only be used for non-commercial purposes. Paying subscribers reportedly own generated songs “while subscribed to Pro or Premier,” subject to Suno’s terms of service. However, the US Copyright Office took a stance last year that purely AI-generated visual art cannot be copyrighted, and while that standard has not yet been resolved for AI-generated music, it might eventually become official legal policy as well.

The Moonshark song

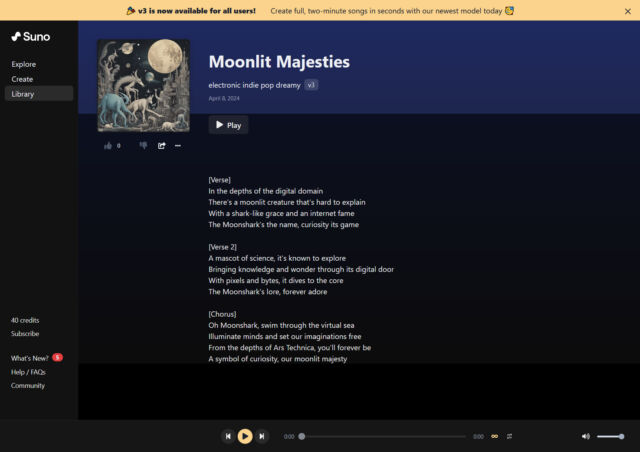

A screenshot of the Suno.ai website showing lyrics of an AI-generated “Moonshark” song.

Benj Edwards

When we used the service, Suno appeared to have no trouble creating unique lyrics based on a prompt (unless you supply your own), and it sets those words to stylized genres of music it generates based on its training dataset. It dynamically generates vocals as well, although they include audible aberrations. Suno’s output is not yet indistinguishable from high-fidelity human-created music, but given the pace of progress we’ve seen, that bridge could be crossed within the next year.

To get a sense of what Suno can do, we created an account on the site and prompted the AI engine to create songs about our mascot, Moonshark, and about barbarians with CRTs, two inside jokes at Ars. What’s interesting is that although the AI model aced the task of creating an original song for each topic, both songs start with the same line, “In the depths of the digital domain.” That’s possibly an artifact of whatever hidden prompt Suno is using to instruct ChatGPT when writing the lyrics.

Suno is arguably a fun toy to experiment with and doubtless a milestone in generative AI music tools. But it’s also an achievement tainted by the unresolved ethical issues related to scraping musical work without the artist’s permission. Then there’s the issue of potentially replacing human musicians, which has not been far from the minds of people sharing their own Suno results online. On Monday, AI influencer Ethan Mollick wrote, “I’ve had a song from Suno AI stuck in my head all day. Grim milestone or good one?”
